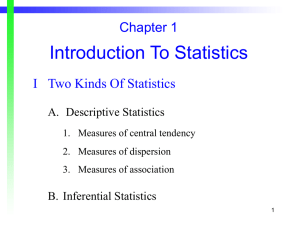

Wald, LM and LR based on GMM and MLE

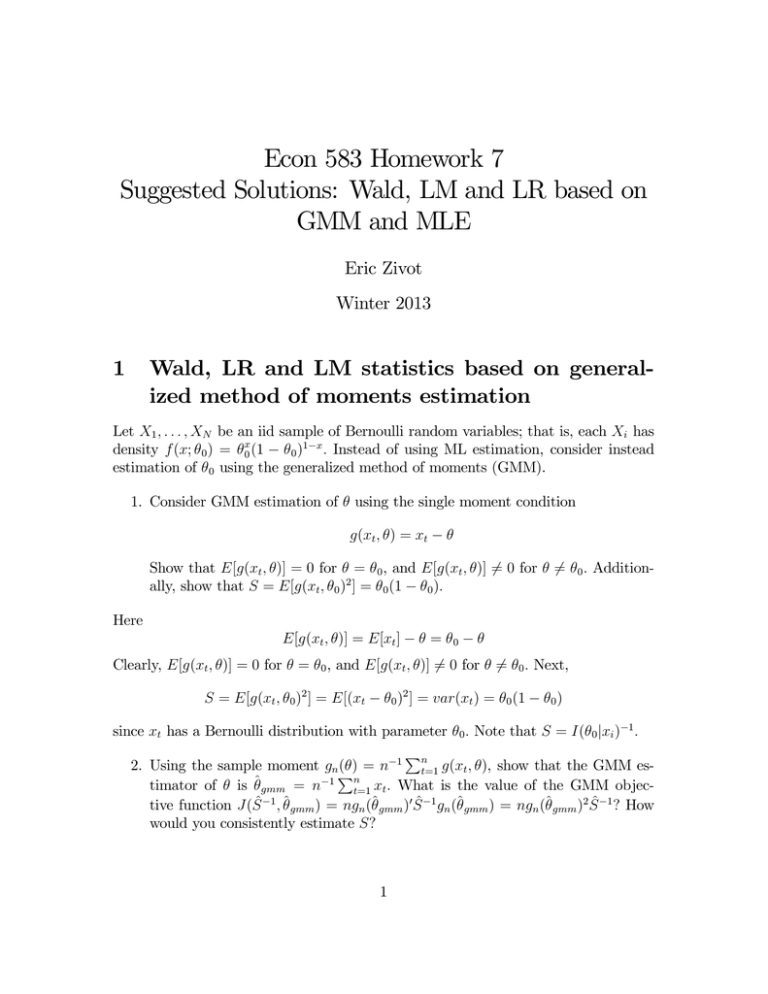

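Econ 583 Homework 7 Suggested Solutions: Wald, LM and LR based on GMM and MLE

Eric Zivot

Winter 2013

1 Wald, LR and LM statistics based on generalized method of moments estimation

Let $X_1,\ldots,X_n$ be an iid sample of Bernoulli random variables; that is, each $X_i$ has density

$$f(x;\theta_0)=\theta_0^{x}(1-\theta_0)^{1-x}.$$

Instead of using ML estimation, consider estimation of $\theta_0$ using the generalized method of moments (GMM).

1. Consider GMM estimation of $\theta$ using the single moment condition $g(X_i,\theta)=X_i-\theta$. Show that $E[g(X_i,\theta)]=0$ for $\theta=\theta_0$, and $E[g(X_i,\theta)]\neq 0$ for $\theta\neq\theta_0$. Additionally, show that $S=E[g(X_i,\theta_0)^2]=\theta_0(1-\theta_0)$.

Here

$$E[g(X_i,\theta)]=E[X_i]-\theta=\theta_0-\theta.$$

Clearly, $E[g(X_i,\theta)]=0$ for $\theta=\theta_0$, and $E[g(X_i,\theta)]\neq 0$ for $\theta\neq\theta_0$. Next,

$$S=E[g(X_i,\theta_0)^2]=E[(X_i-\theta_0)^2]=\mathrm{var}(X_i)=\theta_0(1-\theta_0)$$

since $X_i$ has a Bernoulli distribution with parameter $\theta_0$. Note that $S=I(\theta_0|x_i)^{-1}$, the inverse of the information in a single observation.

2. Using the sample moment $g_n(\theta)=n^{-1}\sum_{i=1}^{n}g(X_i,\theta)$, show that the GMM estimator of $\theta$ is $\hat\theta_{gmm}=n^{-1}\sum_{i=1}^{n}X_i$. What is the value of the GMM objective function $J(\hat\theta_{gmm},\hat S^{-1})=n\,g_n(\hat\theta_{gmm})'\hat S^{-1}g_n(\hat\theta_{gmm})=n\,g_n(\hat\theta_{gmm})^2\hat S^{-1}$? How would you consistently estimate $S$?

Equating the sample moment with the population moment gives the estimating equation

$$g_n(\hat\theta_{gmm})=n^{-1}\sum_{i=1}^{n}g(X_i,\hat\theta_{gmm})=n^{-1}\sum_{i=1}^{n}X_i-\hat\theta_{gmm}=0
\;\Rightarrow\;
\hat\theta_{gmm}=n^{-1}\sum_{i=1}^{n}X_i=\bar X.$$

Hence, the GMM estimator is the same as the ML estimator. The GMM objective function evaluated at $\hat\theta_{gmm}$ is

$$J(\hat\theta_{gmm},\hat S^{-1})=n\,g_n(\hat\theta_{gmm})^2\hat S^{-1}=n\left(n^{-1}\sum_{i=1}^{n}X_i-\hat\theta_{gmm}\right)^{2}\hat S^{-1}=0$$

regardless of the value of $\hat S$, since $\hat\theta_{gmm}=n^{-1}\sum_{i=1}^{n}X_i$. This is to be expected: with one moment condition and one parameter to estimate, the model is exactly identified. Since $S=\theta_0(1-\theta_0)$, a natural estimate is $\hat S=\hat\theta_{gmm}(1-\hat\theta_{gmm})$. Alternatively, one could use the usual non-parametric estimator of $S$:

$$\hat S=n^{-1}\sum_{i=1}^{n}g(X_i,\hat\theta_{gmm})^2=n^{-1}\sum_{i=1}^{n}(X_i-\hat\theta_{gmm})^2.$$

3. Determine the asymptotic normal distribution for $\hat\theta_{gmm}$. Is this distribution the same as the asymptotic normal distribution for $\hat\theta_{mle}$?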
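Using the asymptotic normality result for GMM estimators we have

$$\hat\theta_{gmm}\overset{A}{\sim}N\!\left(\theta_0,\;n^{-1}(G'S^{-1}G)^{-1}\right),\qquad
G=E\!\left[\frac{\partial g(X_i,\theta_0)}{\partial\theta}\right],\qquad
S=E[g(X_i,\theta_0)^2].$$

Now,

$$\frac{\partial g(X_i,\theta_0)}{\partial\theta}=\frac{\partial (X_i-\theta_0)}{\partial\theta}=-1,\qquad S=\theta_0(1-\theta_0),$$

so that

$$(G'S^{-1}G)^{-1}=S=\theta_0(1-\theta_0)$$

and

$$\hat\theta_{gmm}\overset{A}{\sim}N\!\left(\theta_0,\;n^{-1}\theta_0(1-\theta_0)\right),$$

which is equivalent to the asymptotic distribution for the MLE.

Next, consider testing the hypotheses $H_0:\theta=\theta_0$ vs. $H_1:\theta\neq\theta_0$ using a 5% significance level.

4. and 5. Derive the Wald, LR and LM statistics for testing the above hypothesis. Because $\theta$ is a scalar, these statistics have the form

$$\begin{aligned}
\text{Wald}&=n(\hat\theta_{gmm}-\theta_0)^2\left(G_n(\hat\theta_{gmm})^2\hat S^{-1}\right),\\
\text{LM}&=n\left(G_n(\theta_0)\hat S^{-1}g_n(\theta_0)\right)^2\left(G_n(\theta_0)^2\hat S^{-1}\right)^{-1},\\
\text{LR}&=J(\theta_0,\hat S^{-1})-J(\hat\theta_{gmm},\hat S^{-1}),
\end{aligned}$$

where $G_n(\theta)=\partial g_n(\theta)/\partial\theta$. For the Wald and LR statistics it is useful to estimate $S$ using $\hat\theta_{gmm}$. For the LM statistic, it is useful to use an estimate of $S$ imposing $\theta=\theta_0$. Are the GMM Wald, LM and LR statistics numerically equivalent? Compare the GMM Wald, LR and LM statistics with the ML equivalents. Do you see any similarities or differences?

The Wald statistic has the form

$$\text{Wald}=n(\hat\theta_{gmm}-\theta_0)^2\left(G_n(\hat\theta_{gmm})^2\hat S^{-1}\right)=\frac{n(\hat\theta_{gmm}-\theta_0)^2}{\hat\theta_{gmm}(1-\hat\theta_{gmm})},$$

which is equivalent to the ML Wald statistic. For the LM statistic, first note that

$$G_n(\theta_0)=-1,\qquad
g_n(\theta_0)=n^{-1}\sum_{i=1}^{n}X_i-\theta_0=\hat\theta_{gmm}-\theta_0,\qquad
\hat S=\theta_0(1-\theta_0).$$

Then, the LM statistic has the form

$$\text{LM}=n\left(G_n(\theta_0)\hat S^{-1}g_n(\theta_0)\right)^2\left(G_n(\theta_0)^2\hat S^{-1}\right)^{-1}=\frac{n(\hat\theta_{gmm}-\theta_0)^2}{\theta_0(1-\theta_0)},$$

which is identical to the ML LM statistic. For the LR statistic, recall that $J(\hat\theta_{gmm},\hat S^{-1})=0$ since the model is just identified. Therefore, the LR statistic has the form

$$\begin{aligned}
\text{LR}&=J(\theta_0,\hat S^{-1})-J(\hat\theta_{gmm},\hat S^{-1})=J(\theta_0,\hat S^{-1})\\
&=n\,g_n(\theta_0)'\hat S^{-1}g_n(\theta_0)=n\,g_n(\theta_0)^2\hat S^{-1}\\
&=\frac{n(\hat\theta_{gmm}-\theta_0)^2}{\hat\theta_{gmm}(1-\hat\theta_{gmm})},
\end{aligned}$$

which is not equivalent to the ML LR statistic. However, the GMM LR statistic is equivalent to the GMM Wald statistic. If you use $\hat S=\theta_0(1-\theta_0)$, then the GMM LR statistic is equivalent to the GMM LM statistic.

Note: for GMM, Wald = LM = LR if the same $\hat S$ is used for all statistics. If $\hat S=n^{-1}\sum_{i=1}^{n}g(X_i,\hat\theta_{gmm})^2=n^{-1}\sum_{i=1}^{n}(X_i-\hat\theta_{gmm})^2$ is used for all three, then the GMM statistics will not all be equal to the ML statistics in finite samples, but will be as the sample size gets large. (Because $X_i^2=X_i$ for binary data, this estimator equals $\hat\theta_{gmm}(1-\hat\theta_{gmm})$ exactly, so the Wald statistic still matches its ML counterpart; the LM and LR statistics are the ones that differ.)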
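The GMM results above can be checked numerically. The following is a minimal sketch in plain Python, not part of the original solutions: the function name `gmm_bernoulli_tests`, the ten-observation sample, and the null value 0.5 are all illustrative assumptions. It computes the estimator, the objective function at the estimate, the two candidate estimates of $S$ at $\hat\theta_{gmm}$, and the three test statistics with the choices of $\hat S$ suggested in the text.

```python
def gmm_bernoulli_tests(x, theta0):
    """GMM Wald, LM and LR statistics for H0: theta = theta0,
    using the single moment condition g(X, theta) = X - theta.
    (Hypothetical helper written for illustration only.)"""
    n = len(x)
    theta_hat = sum(x) / n                       # GMM estimator: the sample mean
    if not 0.0 < theta_hat < 1.0 or not 0.0 < theta0 < 1.0:
        raise ValueError("theta_hat and theta0 must lie strictly between 0 and 1")

    def g_n(theta):                              # sample moment g_n(theta)
        return theta_hat - theta

    def J(theta, s_hat):                         # objective: n * g_n(theta)^2 / S_hat
        return n * g_n(theta) ** 2 / s_hat

    G_n = -1.0                                   # derivative of g_n with respect to theta
    s_unres = theta_hat * (1.0 - theta_hat)      # S estimated at theta_hat
    s_res = theta0 * (1.0 - theta0)              # S estimated imposing H0
    s_np = sum((xi - theta_hat) ** 2 for xi in x) / n   # non-parametric estimate

    wald = n * (theta_hat - theta0) ** 2 * (G_n ** 2 / s_unres)
    lm = n * (G_n * g_n(theta0) / s_res) ** 2 / (G_n ** 2 / s_res)
    lr = J(theta0, s_unres) - J(theta_hat, s_unres)

    return {
        "theta_hat": theta_hat,
        "J_at_theta_hat": J(theta_hat, s_unres),
        "s_unres": s_unres,
        "s_np": s_np,
        "wald": wald,
        "lm": lm,
        "lr": lr,
    }


# Illustrative data (an assumption, not from the homework): 7 ones out of 10.
sample = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]
for name, value in gmm_bernoulli_tests(sample, theta0=0.5).items():
    print(f"{name}: {value:.4f}")
```

In this sketch the Wald and LR statistics share the unrestricted $\hat S$ and therefore coincide, while the LM statistic uses the restricted $\hat S$ and generally differs from them in a finite sample.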
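2 Wald, LR and LM statistics based on maximum likelihood estimation

Let $X_1,\ldots,X_n$ be an iid sample of Bernoulli random variables; that is, each $X_i$ has density

$$f(x;\theta_0)=\theta_0^{x}(1-\theta_0)^{1-x}.$$

First, consider estimation of $\theta_0$ using maximum likelihood (ML). Let $\mathbf{x}=(x_1,\ldots,x_n)$.

1. Derive the log-likelihood function, $\ln L(\theta|\mathbf{x})$, score function, $S(\theta|\mathbf{x})=\dfrac{\partial\ln L(\theta|\mathbf{x})}{\partial\theta}$, Hessian function, $H(\theta|\mathbf{x})=\dfrac{\partial^2\ln L(\theta|\mathbf{x})}{\partial\theta^2}$, and information matrix, $I(\theta_0|\mathbf{x})=-E[H(\theta_0|\mathbf{x})]=\mathrm{var}(S(\theta_0|\mathbf{x}))$. Verify that $E[S(\theta_0|\mathbf{x})]=0$ and show that the ML estimate for $\theta$ is $\hat\theta_{mle}=n^{-1}\sum_{i=1}^{n}x_i$.

The joint density/likelihood function for the sample is given by

$$f(\mathbf{x};\theta)=L(\theta|\mathbf{x})=\prod_{i=1}^{n}\theta^{x_i}(1-\theta)^{1-x_i}=\theta^{\sum_{i=1}^{n}x_i}(1-\theta)^{n-\sum_{i=1}^{n}x_i}.$$

The log-likelihood function is

$$\ln L(\theta|\mathbf{x})=\ln\!\left(\theta^{\sum_{i=1}^{n}x_i}(1-\theta)^{n-\sum_{i=1}^{n}x_i}\right)
=\sum_{i=1}^{n}x_i\ln(\theta)+\left(n-\sum_{i=1}^{n}x_i\right)\ln(1-\theta).$$

The score function for the Bernoulli log-likelihood is

$$S(\theta|\mathbf{x})=\frac{\partial\ln L(\theta|\mathbf{x})}{\partial\theta}
=\frac{1}{\theta}\sum_{i=1}^{n}x_i-\frac{1}{1-\theta}\left(n-\sum_{i=1}^{n}x_i\right)
=\frac{\sum_{i=1}^{n}(x_i-\theta)}{\theta(1-\theta)}.$$

Using the chain rule it can be shown that

$$H(\theta|\mathbf{x})=\frac{d}{d\theta}S(\theta|\mathbf{x})
=-n\left(\frac{\bar x}{\theta^2}+\frac{1-\bar x}{(1-\theta)^2}\right)
=-\left(\frac{n+S(\theta|\mathbf{x})-2\theta S(\theta|\mathbf{x})}{\theta(1-\theta)}\right),$$

where $\bar x=n^{-1}\sum_{i=1}^{n}x_i$. Therefore, the information matrix is

$$I(\theta_0|\mathbf{x})=-E[H(\theta_0|\mathbf{x})]
=\frac{n+E[S(\theta_0|\mathbf{x})]-2\theta_0E[S(\theta_0|\mathbf{x})]}{\theta_0(1-\theta_0)}
=\frac{n}{\theta_0(1-\theta_0)}$$

since

$$E[S(\theta_0|\mathbf{x})]=\frac{\sum_{i=1}^{n}(E[x_i]-\theta_0)}{\theta_0(1-\theta_0)}=0$$

since $E[x_i]=\theta_0$. The MLE solves

$$S(\hat\theta_{mle}|\mathbf{x})=\frac{\sum_{i=1}^{n}(x_i-\hat\theta_{mle})}{\hat\theta_{mle}(1-\hat\theta_{mle})}=0
\;\Rightarrow\;
\hat\theta_{mle}=\frac{1}{n}\sum_{i=1}^{n}x_i=\bar x.$$

2. Determine the asymptotic normal distribution for $\hat\theta_{mle}$.

Using the asymptotic properties of the MLE for a regular model,

$$\hat\theta_{mle}\overset{A}{\sim}N\!\left(\theta_0,\;I(\theta_0|\mathbf{x})^{-1}\right),\qquad
I(\theta_0|\mathbf{x})^{-1}=\frac{\theta_0(1-\theta_0)}{n}.$$

Since $\theta_0$ is unknown, a practically useful result replaces $I(\theta_0|\mathbf{x})^{-1}$ with $I(\hat\theta_{mle}|\mathbf{x})^{-1}$.

Next, consider testing the hypotheses $H_0:\theta=\theta_0$ vs. $H_1:\theta\neq\theta_0$ using a 5% significance level.

3. Derive the Wald, LR and LM statistics for testing the above hypothesis. These statistics have the form

$$\begin{aligned}
\text{Wald}&=(\hat\theta_{mle}-\theta_0)^2\,I(\hat\theta_{mle}|\mathbf{x}),\\
\text{LM}&=S(\theta_0|\mathbf{x})^2\,I(\theta_0|\mathbf{x})^{-1},\\
\text{LR}&=-2\left[\ln L(\theta_0|\mathbf{x})-\ln L(\hat\theta_{mle}|\mathbf{x})\right].
\end{aligned}$$

Are these statistics numerically equivalent? For a 5% test, what is the decision rule to reject $H_0$ for each statistic?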
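The Wald statistic has the form

$$\text{Wald}=(\hat\theta_{mle}-\theta_0)^2\,I(\hat\theta_{mle}|\mathbf{x})
=(\hat\theta_{mle}-\theta_0)^2\,\frac{n}{\hat\theta_{mle}(1-\hat\theta_{mle})}
=\frac{n(\hat\theta_{mle}-\theta_0)^2}{\hat\theta_{mle}(1-\hat\theta_{mle})}.$$

For the LM statistic, note that

$$S(\theta_0|\mathbf{x})=\frac{\sum_{i=1}^{n}(x_i-\theta_0)}{\theta_0(1-\theta_0)}=\frac{n(\hat\theta_{mle}-\theta_0)}{\theta_0(1-\theta_0)},\qquad
I(\theta_0|\mathbf{x})^{-1}=\frac{\theta_0(1-\theta_0)}{n},$$

so that

$$\text{LM}=S(\theta_0|\mathbf{x})^2\,I(\theta_0|\mathbf{x})^{-1}
=\left(\frac{n(\hat\theta_{mle}-\theta_0)}{\theta_0(1-\theta_0)}\right)^{2}\frac{\theta_0(1-\theta_0)}{n}
=\frac{n(\hat\theta_{mle}-\theta_0)^2}{\theta_0(1-\theta_0)}.$$

Notice that the LM statistic has the same form as the Wald statistic. The difference between the two statistics is based on how $I(\theta|\mathbf{x})$ is estimated. With the Wald statistic, $I(\theta|\mathbf{x})$ is estimated using $I(\hat\theta_{mle}|\mathbf{x})$, and with the LM statistic, $I(\theta|\mathbf{x})$ is estimated using $I(\theta_0|\mathbf{x})$.

For the LR statistic, note that

$$\begin{aligned}
\ln L(\hat\theta_{mle}|\mathbf{x})&=\sum_{i=1}^{n}x_i\ln(\hat\theta_{mle})+\left(n-\sum_{i=1}^{n}x_i\right)\ln(1-\hat\theta_{mle}),\\
\ln L(\theta_0|\mathbf{x})&=\sum_{i=1}^{n}x_i\ln(\theta_0)+\left(n-\sum_{i=1}^{n}x_i\right)\ln(1-\theta_0).
\end{aligned}$$

Therefore

$$\begin{aligned}
\text{LR}&=-2\left[\ln L(\theta_0|\mathbf{x})-\ln L(\hat\theta_{mle}|\mathbf{x})\right]\\
&=-2\left[\sum_{i=1}^{n}x_i\left(\ln\theta_0-\ln\hat\theta_{mle}\right)+\left(n-\sum_{i=1}^{n}x_i\right)\left(\ln(1-\theta_0)-\ln(1-\hat\theta_{mle})\right)\right]\\
&=-2n\left[\hat\theta_{mle}\ln\!\left(\frac{\theta_0}{\hat\theta_{mle}}\right)+(1-\hat\theta_{mle})\ln\!\left(\frac{1-\theta_0}{1-\hat\theta_{mle}}\right)\right].
\end{aligned}$$

4. Prove that the Wald and LM statistics have asymptotic $\chi^2(1)$ distributions.

First, consider the Wald statistic:

$$\text{Wald}=\frac{n(\hat\theta_{mle}-\theta_0)^2}{\hat\theta_{mle}(1-\hat\theta_{mle})}
=\left(\frac{\sqrt{n}(\hat\theta_{mle}-\theta_0)}{\sqrt{\hat\theta_{mle}(1-\hat\theta_{mle})}}\right)^{2}.$$

From the asymptotic normality of the MLE, we have that

$$\sqrt{n}(\hat\theta_{mle}-\theta_0)\overset{d}{\to}N(0,\theta_0(1-\theta_0)),$$

and from the consistency of the MLE we have that $\hat\theta_{mle}\overset{p}{\to}\theta_0$. Slutsky's theorem then gives

$$\sqrt{\hat\theta_{mle}(1-\hat\theta_{mle})}\overset{p}{\to}\sqrt{\theta_0(1-\theta_0)}.$$

Finally, by a combination of Slutsky's theorem and the continuous mapping theorem (CMT) we have

$$\left(\frac{\sqrt{n}(\hat\theta_{mle}-\theta_0)}{\sqrt{\hat\theta_{mle}(1-\hat\theta_{mle})}}\right)^{2}
\overset{d}{\to}\left(\frac{N(0,\theta_0(1-\theta_0))}{\sqrt{\theta_0(1-\theta_0)}}\right)^{2}
=N(0,1)^2=\chi^2(1),$$

where $\chi^2(1)$ denotes a chi-square random variable with 1 degree of freedom. A similar proof works for the LM statistic:

$$\text{LM}=\frac{n(\hat\theta_{mle}-\theta_0)^2}{\theta_0(1-\theta_0)}
=\left(\frac{\sqrt{n}(\hat\theta_{mle}-\theta_0)}{\sqrt{\theta_0(1-\theta_0)}}\right)^{2}
\overset{d}{\to}\left(\frac{N(0,\theta_0(1-\theta_0))}{\sqrt{\theta_0(1-\theta_0)}}\right)^{2}=\chi^2(1).$$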
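The ML statistics and their $\chi^2(1)$ limit can be illustrated with a small simulation. This is a rough sketch under stated assumptions rather than part of the original solutions: the helper names `ml_bernoulli_tests` and `rejection_rates`, the sample size, the number of replications, and the seed are all hypothetical choices, and the critical value 3.8415 is the rounded 0.95 quantile of $\chi^2(1)$ (the square of 1.96), so each test rejects $H_0$ when its statistic exceeds that value.

```python
import math
import random

CHI2_1_CRIT_5PCT = 3.8415   # approximate 0.95 quantile of chi-square(1)


def ml_bernoulli_tests(x, theta0):
    """ML Wald, LM and LR statistics for H0: theta = theta0 (illustrative helper)."""
    n = len(x)
    theta_hat = sum(x) / n                                   # MLE: the sample mean
    if not 0.0 < theta_hat < 1.0:
        raise ValueError("theta_hat on the boundary: Wald and LR are undefined")
    info_hat = n / (theta_hat * (1.0 - theta_hat))           # I(theta_hat | x)
    info_0 = n / (theta0 * (1.0 - theta0))                   # I(theta0 | x)
    score_0 = n * (theta_hat - theta0) / (theta0 * (1.0 - theta0))   # S(theta0 | x)
    wald = (theta_hat - theta0) ** 2 * info_hat
    lm = score_0 ** 2 / info_0
    lr = -2.0 * n * (theta_hat * math.log(theta0 / theta_hat)
                     + (1.0 - theta_hat) * math.log((1.0 - theta0) / (1.0 - theta_hat)))
    return {"wald": wald, "lm": lm, "lr": lr}


def rejection_rates(theta0, n, reps, seed=0):
    """Share of simulated samples, drawn under H0, in which each test rejects at 5%."""
    rng = random.Random(seed)
    counts = {"wald": 0, "lm": 0, "lr": 0}
    used = 0
    for _ in range(reps):
        x = [1 if rng.random() < theta0 else 0 for _ in range(n)]
        try:
            stats = ml_bernoulli_tests(x, theta0)
        except ValueError:
            continue                      # skip all-zero or all-one samples
        used += 1
        for name, value in stats.items():
            if value > CHI2_1_CRIT_5PCT:
                counts[name] += 1
    return {name: c / used for name, c in counts.items()}


# One small illustrative sample (7 ones out of 10), then a Monte Carlo check.
print(ml_bernoulli_tests([1, 0, 1, 1, 0, 1, 0, 1, 1, 1], theta0=0.5))
print(rejection_rates(theta0=0.5, n=200, reps=2000, seed=0))
```

On a single sample the three statistics are generally not numerically equal, since they estimate the information differently. In the simulation, rejection frequencies close to 0.05 are what the asymptotic $\chi^2(1)$ result suggests for a moderately large $n$; because the data are discrete, the simulated rates need not equal 0.05 exactly.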