Ranger - T


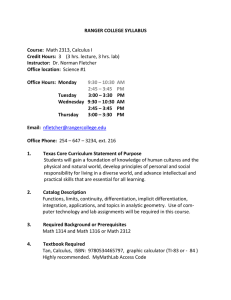

Experiences and Achievements in Deploying Ranger, the First NSF "Path to Petascale" System
Tommy Minyard
Associate Director, Texas Advanced Computing Center
The University of Texas at Austin
HPCN Workshop, May 14, 2009

Ranger

Ranger: What is it?
• Ranger is a unique instrument for computational scientific research housed at UT
• Results from over 2 ½ years of initial planning and deployment efforts beginning Nov. 2005
• Funded by the National Science Foundation as part of a unique program to reinvigorate High Performance Computing in the United States
• Oh yeah, it’s a Texas-sized supercomputer

How Much Did it Cost and Who’s Involved?
• TACC selected for the very first NSF ‘Track2’ HPC system
  – $30M system acquisition
  – Sun Microsystems is the vendor
  – We competed against almost every US open science HPC center
• TACC, Cornell, and Arizona State HPCI are teamed to operate/support the system for 4 years ($29M)

Ranger Project Timeline
Dec05: initial planning and system conceptual design
Feb06: TACC submits proposal, gets a few nights' sleep
Sep06: award, press release, relief
1Q07: equipment begins arriving
2Q07: facilities upgrades complete
2Q-4Q07: construction of system
4Q07: early users
Jan08: acceptance testing
Feb08: initial production and operations
Jun08: processor upgrade complete, system acceptance

Who Built Ranger?
An international collaboration which leverages a number of freely-available software packages (e.g. Linux, InfiniBand, Lustre)

Ranger System Summary
• Compute power - 579.4 Teraflops
  – 3,936 Sun four-socket blades
  – 15,744 AMD “Barcelona” processors
      • Quad-core, four flops/clock cycle
• Memory - 123 Terabytes
  – 2 GB/core, 32 GB/node
  – 123 TB/s aggregate bandwidth
• Disk subsystem - 1.7 Petabytes
  – 72 Sun x4500 “Thumper” I/O servers, 24 TB each
  – 50 GB/sec total aggregate I/O bandwidth
  – 1 PB raw capacity in largest filesystem
• Interconnect - 1 GB/s, 1.6-2.85 μsec latency, 7.8 TB/s backplane
  – Sun Data Center Switches (2), InfiniBand, up to 3,456 4x ports each
  – Full non-blocking 7-stage fabric
  – Mellanox ConnectX InfiniBand HCAs

InfiniBand Fabric Connectivity

Ranger Space, Power and Cooling
• Total Power: 3.4 MW!
• System: 2.4 MW
  – 96 racks: 82 compute, 12 support, plus 2 switches
  – 116 APC In-Row cooling units
  – 2,054 sq.ft. footprint (~4,500 sq.ft. including PDUs)
• Cooling: ~1 MW
  – In-row units fed by three 350-ton chillers (N+1)
  – Enclosed hot-aisles by APC
  – Supplemental 280 tons of cooling from CRAC units
• Observations:
  – Space less an issue than power
  – Cooling > 25 kW per rack a challenge
  – Power distribution a challenge, almost 1,400 circuits

External Power and Cooling Infrastructure
Last racks delivered: Sept 15, 2007
Switches in place
InfiniBand Cabling in Progress
Hot aisles enclosed
InfiniBand Cabling Complete

InfiniBand Cabling for Ranger
• Sun switch design with reduced cable count, manageable, but still a challenge to cable
  – 1,312 InfiniBand 12x to 12x cables
  – 78 InfiniBand 12x to three 4x splitter cables
  – Cable lengths range from 9-16 m
• 15.4 km total InfiniBand cable length
Connects InfiniBand switch to C48 Network Express Module
Connects InfiniBand switch to standard 4x connector HCA

Ranger Cable Envy?
• On a system like Ranger, even designing the cables is a big challenge (1 cable can transfer data ~2,000 times faster than your best ever wireless connection)
• The cables on Ranger are the 1st demonstration of their kind for InfiniBand cabling (1 cable is really 3 cables inside), with a new 12x connector
• Routing them to all the various components is no fun either

Hardware Deployment Challenges
• AMD quad-core processor delays and TLB errata
• Sheer quantity of components, logistics nightmare
• InfiniBand cable quality
• New hardware, many firmware and BIOS updates
• Scale, scale, scale

Software Deployment Challenges
• Lustre with latest OFED InfiniBand, RAID5 crashes, quota problems
• Sun Grid Engine scalability and performance
• InfiniBand Subnet manager and routing algorithms
• MPI collective tuning and large job startup

MPI Scalability and Collective Tuning
[Figure: average time (usec) vs. message size (bytes), log-log, for MVAPICH, MVAPICH-devel and OpenMPI]

Current Ranger Software Stack
• OFED 1.3.1 with Linux 2.6.18.8 kernel
• Lustre 1.6.7.1
• Multiple MPI stacks supported
  – MVAPICH 1.1
  – MVAPICH2 1.2
  – OpenMPI 1.3
• Intel 10.1 and PGI 7.2 compilers
• Modules package to set up environment

MPI Tests: P2P Bandwidth
[Figure: bandwidth (MB/sec) vs. message size (bytes) for Ranger (OFED 1.2, MVAPICH 0.9.9) and Lonestar (OFED 1.1, MVAPICH 0.9.8); annotation: "Effective bandwidth is improved at smaller message size"]

Ranger: Bisection BW Across 2 Magnums
[Figure, two panels: bisection BW (GB/sec), ideal vs. measured, vs. # of Ranger compute racks; full bisection BW efficiency (%) vs. # of Ranger compute racks (1-64)]
• Able to sustain ~73% bisection bandwidth efficiency with all nodes communicating (82 racks)
• Subnet routing is key!
  – Using fat-tree routing from OFED 1.3, which has cached routing to minimize the overhead of remaps

Software Challenges: Large MPI Jobs
Time to run 16K hello world:
• MVAPICH: 50 secs
• OpenMPI: 140 secs
Upgraded performance in Oct. 08

First Year Production Experiences
• Demand for system exceeded expectations
• Applications scaled better than predicted
  – Jobs with 16K MPI tasks routine on system now
  – Several groups scaling applications to full system
• Filesystem performance very good
• HPC expert user support required to solve some system issues
  – Application performance variability
  – System noise and OS jitter

Ranger Usage
• Who uses Ranger?
  – a community of researchers from around the US (along with international collaborators)
  – more than 2,200 allocated users as of Apr 2009
  – 450 individual research projects
• Usage to date?
  – >700,000 jobs have been run through the queues
  – >450 million CPU hours consumed
• How long did it take to fill up the largest Lustre file system?
  – We were able to go ~6 months prior to turning on the file purging mechanism
  – Steady state usage allows us to retain data for about 30 days
  – Generate ~10-20 TB a day currently

Who Uses Ranger?
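The purge figures on the Ranger Usage slide imply a steady-state footprint on the largest Lustre file system: with ~10-20 TB generated per day and ~30 days of retention, roughly 300-600 TB would stay resident, assuming a constant generation rate, which fits within the 1 PB raw capacity quoted for that filesystem. The Python sketch below makes that arithmetic explicit and pairs it with a dry-run scan for purge candidates. The deck does not say how Ranger's purge selected files; this sketch assumes a simple last-modification-time cutoff, and the function names `steady_state_tb` and `purge_candidates` are hypothetical.

```python
import os
import time
from typing import Iterator, Optional

SECONDS_PER_DAY = 86_400


def steady_state_tb(daily_tb: float, retention_days: int) -> float:
    """Data resident on scratch when files are purged after `retention_days`
    and new data arrives at a constant `daily_tb` per day (assumed model)."""
    return daily_tb * retention_days


def purge_candidates(root: str, retention_days: int,
                     now: Optional[float] = None) -> Iterator[str]:
    """Yield files under `root` whose modification time is older than
    `retention_days`. Hypothetical mtime-based policy; dry run, deletes nothing."""
    now = time.time() if now is None else now
    cutoff = now - retention_days * SECONDS_PER_DAY
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.stat(path).st_mtime < cutoff:
                    yield path
            except OSError:
                continue  # file vanished or is unreadable; skip it


if __name__ == "__main__":
    for daily in (10, 20):
        print(f"{daily} TB/day x 30 days = {steady_state_tb(daily, 30):.0f} TB resident")
```

A real purge on a parallel filesystem would avoid a serial `os.walk` over millions of files; the walk here only illustrates the age cutoff.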
Parallel Filesystem Performance
[Figure, two panels: "$SCRATCH File System Throughput" and "$SCRATCH Application Performance", write speed (GB/sec) vs. # of writing clients (1-10,000), Stripecount=1 vs. Stripecount=4]
Some applications measuring 35 GB/s of performance

Application Performance Variability Problem
• User code running and performing consistently per iteration at 8K and 16K tasks
• Intermittently during a run, iterations would slow down for a while, then resume
• Impact was tracked to be system wide
• Monitoring InfiniBand error counters isolated the problem to a single node HCA causing congestion
• Users don’t have access to the IB switch counters, so it is hard to diagnose from within the application

System Noise, OS Jitter Measurement
• Application scales and performs poorly at larger MPI task counts, 2K and above
• Application dominated by Allreduce with a brief amount of computational work between
  – No performance problem when code is run 15-way
  – Significant difference at 8K between 15-way and 16-way
• Noise isolated to system monitoring processes:
  – SGE health monitoring: 30% at 4K
  – IPMI daemon: 12% at 4K
Note: Other applications not measurably impacted by the health monitoring processes

OS Jitter Impact on Performance
[Figure: time (secs) vs. # of cores (0-10,000), 15-way vs. 16-way]

Weather Forecasting
• TACC worked with NOAA to produce accurate simulations of Hurricane Ike, and new storm surge models
Using up to 40,000 processing cores at once, researchers simulating both global and regional weather predictions received on-demand access to Ranger, enabling not only ensemble forecasting, but also real-time, high-resolution predictions.

Volker Bromm is investigating the conditions during the formation of the first galaxies in the universe after the big bang.
This image shows two separate quantities, temperature and hydrogen density, as the first galaxy is forming and evolving.
Volker Bromm, Thomas Greif, Chris Burns, The University of Texas at Austin

Researching the Origins of the Universe

Computing the Earth’s Mantle
• Omar Ghattas is studying convection in the Earth’s interior. He is simulating a model mantle convection problem. Images depict a rising temperature plume within the Earth's mantle, indicating the dynamically evolving mesh required to resolve steep thermal gradients.
• Ranger's speed and memory permit higher resolution simulations of mantle convection, which will lead to a better understanding of the dynamic evolution of the solid Earth
Carsten Burstedde, Omar Ghattas, Georg Stadler, Tiankai Tu, Lucas Wilcox, The University of Texas at Austin

Application Example: Earth Sciences
Mantle Convection, AMR Method
Courtesy: Omar Ghattas, et al.

Application Example: Earth Sciences
Courtesy: Omar Ghattas, et al.

Application Examples: DNS/Turbulence
Courtesy: P.K. Yeung, Diego Donzis, TG 2008

UINTAH Framework

Lessons Learned
• Planning for risks essential, agile deployment schedule a must
• Everything breaks as you increase scale, hard to test until system complete
• Close vendor/customer/3rd party provider collaboration required for successful deployment
• New hardware needs thorough testing in “real-world” environments

Ongoing Challenges
• Supporting multiple groups running at 32K+ cores
• Continued application and library porting and tuning
• Enhanced MPI job startup reliability and speed beyond 32K tasks, continued tuning of MPI
• Optimal CPU and memory affinity settings
• Better job scheduling and scalability of SGE
• Improving application scalability and user productivity
• Ensuring filesystem and IB fabric stability

Spur - Visualization System
• 128 cores, 1.125 TB distributed memory, 32 GPUs
• 1 Sun Fire X4600 server
  – 8 AMD dual-core CPUs
  – 256 GB memory
  – 4 NVIDIA FX5600 GPUs
• 7 Sun Fire X4440 servers
  – 4 AMD quad-core CPUs per node
  – 128 GB memory per node
  – 4 NVIDIA FX5600 GPUs per node
• Shares Ranger InfiniBand and file systems

Summary
• Significant challenges deploying a system at the size of Ranger, especially with new hardware and software
• Application scalability and system usage exceeding expectations
• Collaborative effort with many groups successfully overcoming the challenges posed by a system of this scale
• Open source software, commodity hardware based supercomputing at “petascale” feasible

More About TACC:
Texas Advanced Computing Center
www.tacc.utexas.edu
info@tacc.utexas.edu
(512) 475-9411
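The headline totals quoted for Ranger and Spur follow from their per-component figures, and multiplying them out is a quick consistency check. In the Python sketch below, the clock rate is not stated in the deck and is only back-computed from the 579.4 TFLOPS peak; memory is converted with 1 TB = 1,024 GB, an assumption that happens to reproduce both the 123 TB and 1.125 TB figures. The function names `ranger_totals` and `spur_totals` are hypothetical.

```python
# Per-component figures as quoted on the slides.
RANGER_BLADES = 3_936
SOCKETS_PER_BLADE = 4
CORES_PER_SOCKET = 4          # quad-core "Barcelona"
FLOPS_PER_CYCLE = 4           # per core
PEAK_TFLOPS = 579.4
GB_PER_CORE = 2
THUMPERS, TB_PER_THUMPER = 72, 24
SYSTEM_KW, RACKS = 2_400, 96  # 2.4 MW system power, 96 racks

GB_PER_TB = 1_024             # assumption: binary units for memory


def ranger_totals() -> dict:
    sockets = RANGER_BLADES * SOCKETS_PER_BLADE
    cores = sockets * CORES_PER_SOCKET
    # Clock implied by the quoted peak: peak = cores * flops/cycle * clock.
    clock_ghz = PEAK_TFLOPS * 1e12 / (cores * FLOPS_PER_CYCLE) / 1e9
    return {
        "sockets": sockets,
        "cores": cores,
        "clock_ghz": clock_ghz,
        "memory_tb": cores * GB_PER_CORE / GB_PER_TB,
        "disk_tb": THUMPERS * TB_PER_THUMPER,
        "kw_per_rack": SYSTEM_KW / RACKS,  # average over all 96 racks
    }


def spur_totals() -> dict:
    # 1 Sun Fire X4600 (8 dual-core CPUs, 256 GB, 4 GPUs)
    # + 7 Sun Fire X4440 (4 quad-core CPUs, 128 GB, 4 GPUs each)
    cores = 1 * 8 * 2 + 7 * 4 * 4
    memory_gb = 256 + 7 * 128
    gpus = (1 + 7) * 4
    return {"cores": cores, "memory_tb": memory_gb / GB_PER_TB, "gpus": gpus}


if __name__ == "__main__":
    r = ranger_totals()
    print(f"Ranger: {r['sockets']:,} sockets, {r['cores']:,} cores, "
          f"~{r['clock_ghz']:.2f} GHz implied, {r['memory_tb']:.0f} TB memory, "
          f"{r['disk_tb']:,} TB disk, {r['kw_per_rack']:.0f} kW/rack average")
    print("Spur:", spur_totals())
```

The 25 kW figure is an average over all 96 racks; compute racks alone would draw more, which is consistent with the slide's remark that cooling above 25 kW per rack was a challenge.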