Test Plan and Test Procedures Document


BETA

Operational Benefit Evaluation by Testing an A-SMGCS

Growth Project 1999-RD.10804

Test Plan and Test Procedures Document

Test Procedures for Braunschweig

Operational Benefit Evaluation by Testing an A-SMGCS

H. P. Zenz, J. Jakobi

Project Funded by

European Commission, DG TREN

The Fifth Framework Programme

Competitive and Sustainable Growth

Contract 1999-RD.10804

Project Manager

Michael Roeder

Deutsches Zentrum für Luft- und Raumfahrt

Lilienthalplatz 7, D-38108 Braunschweig, Germany

Phone: +49 (0) 531 295 3026, Fax: +49 (0) 531 295 2550

e-mail: beta@dlr.de

Copyright Notice: © 2002, EC Sponsored Project Beta

This document and the information contained herein is the property of Deutsches Zentrum für Luft- und Raumfahrt, Park Air Systems AS, Deutsche Flugsicherung, Nationaal Lucht- en Ruimtevaartlaboratorium, QinetiQ, Air Navigation Services of the Czech Republic, Česká správa letišť, Hamburg Airport, ERA, Thales ATM and the European Commission (EC). Any reproduction or other use of this material shall acknowledge the BETA-Project, the companies involved and the EC as the information sources.

Visit the BETA Web page: http://www.dlr.de/beta


Document Control Sheet

Project Manager: M. Roeder
Responsible Author(s): H. P. Zenz, J. Jakobi
Additional Author(s): K. Klein
Company: DLR
Subject / Title of Document: Test Procedures for Braunschweig
Related Task(s): WP5000
Deliverable No. and Target Date: D16Ci, 2001-05-01
Save Date of File: 2002-02-18
Document Version: 1.0
Reference / File Name: D16CI-TPP-10.DOC
Number of Pages: 39
Distribution Category (P/R/I)*: Public

*Type: P: Public, R: Restricted, I: Internal

Document Distribution

Member type: Contractors / Sub-Contractors

Organisation | Name | Distributed **
ANS-CR | M. Tykal | 2002-02-18
AUEB | K. Zografos | 2002-02-18
BA | J. Conlon | 2002-02-18
CSL | P. Hlousek | 2002-02-18
QINETIQ | A. Wolfe | 2002-02-18
DFS | K.-R. Täglich | 2002-02-18
DLR | M. Röder | 2002-02-18
ERA | Z. Svoboda | 2002-02-18
FHGG | D. Wolf | 2002-02-18
HITT | A. Vermeer | 2002-02-18
TATM | S. Paul | 2002-02-18
BAE Systems | B. Wortley | 2002-02-18
PAS | A. R. Johansen | 2002-02-18
NLR | F. van Schaik | 2002-02-18
Quality Assurance | B. Helgerud | 2002-02-18
AHA | D. Gleave | 2002-02-18
Airport BS (BWE) | K. Oertel | 2002-02-18

Member type: Customer

Organisation | Name | Distributed **
EC | C. Bernabei | 2002-02-18
ACI | J. Waechtler | 2002-02-18
EUROCONTROL | P. Adamson | 2002-02-18
IATA | T. van der Veldt | 2002-02-18
IFATCA | L. Staudt | 2002-02-18

Member type: Additional

Web page (http://www.dlr.de/beta) | intranet | 2002-02-18
Web page (http://www.dlr.de/beta) | internet | 2002-02-18

** Distributed: insert date of delivery

A date in the Web page line marked intranet corresponds to a delivery to all project members (Contractors).

A date in the Web page line marked internet corresponds to a delivery to all on the list.


BETA

Test Plan and Test Procedures Document

DLR

Change Control Sheet

Date | Issue | Changed Items/Chapters | Comment
2001-03-19 | 0.1 | Document Outline |
2001-03-30 | 0.2 | First Draft |
2001-04-17 | 0.21 | Final Draft | Working paper for the test phase I
2002-02-01 | 1.0 | Formal changes | Approved Document

Contents

Change Control Sheet

Contents

1. Scope of Document

1.1 Objectives

1.2 Document Structure

2. Introduction

2.1 Subsystems

2.2 Test Equipment at Braunschweig

2.3 Data Recording used at Braunschweig

2.4 Evaluation Tools

2.5 Human Actors during the BETA Test at Braunschweig

3. Testing A-SMGCS Performance Parameters

3.1 Testing Surveillance Performance Parameters

3.1.1 Testing Surveillance Accuracy (F1)

3.1.2 Testing Surveillance Identification and Classification Parameters (F2)

3.2 Testing Monitoring/Alerting Performance Parameters (F3)

3.3 Testing Guidance Performance Parameters (F5)

3.4 Testing ATCO-HMI Performance Parameters (F6)

3.5 Test Equipment and Human Actors involved at Braunschweig Functional Tests

4. Testing A-SMGCS Operational Benefit Parameters

5. Test Forms

5.1 Test Forms for Testing Performance Parameter at Braunschweig

5.2 Test Forms for Testing Operational Tests at Braunschweig

6. References

7. List of Figures

8. List of Tables

9. Acronyms and Abbreviations

Issued: 2002-02-18

Doc ID: d16ci-tpp-10.doc

Public

Page 3 of 39

Version 1.0

1. Scope of Document

This document is one of three parts of the "Test Plan and Test Procedures Document" for three airports:

• D16a-TPP: Test Plan and Test Procedures document, test procedures for Prague (PRG).

• D16b-TPP: Test Plan and Test Procedures document, test procedures for Hamburg (HAM).

• D16c-TPP: Test Plan and Test Procedures document, test procedures for Braunschweig (BWE).

1.1 Objectives

This document, D16c-TPP, is the output of BETA WP5100 and describes the specific test procedures for Braunschweig airport.

This document builds upon:

• WP 1200 Operational Concept, D03-OCD [1]

• WP 2100 General Test Concept, D10-GTC [2]

• Test Handbook, D33-THE [3]

• EUROCAE Working Group 41, MASPS on A-SMGCS [4]

The General Test Concept document, D10-GTC [2], was not yet available when this document was written, so differences between the two documents are possible.

1.2 Document Structure

This document is structured into five main chapters, followed by the references and the lists of figures, tables and acronyms:

• Chapter 1 describes the scope of this document

• Chapter 2 lists the involved subsystems, test equipment, recording systems, evaluation tools and human actors

• Chapter 3 describes the functional tests: surveillance, guidance and HMI performance tests

• Chapter 4 describes the operational benefit parameter testing

• Chapter 5 gives test forms for protocols and observer notes

2. Introduction

Figure 2-1 shows the subsystems used at Braunschweig, their interface connections using the ASTERIX and SSIC formats, and the data recording subsystems (data loggers).

2.1 Subsystems

To execute the tests at Braunschweig the following subsystems (responsible partners in brackets) are used:

Surveillance:

Co-operative Sensor Subsystems

− GP&C System, DGPS Subsystem (DLR)

− Mode S, Multilateration Subsystem (ERA)

− ARMI, Aircraft Registration Mark Identification (DLR)


Non-Co-operative Sensor Subsystems

− NRN, Near Range Radar Network (DLR)

Sensor Data Processing

− SDF, Surveillance Data Fusion (DLR)

− SDS, Surveillance Data Server incl. monitoring and alert (PAS)

CHMI

− Tower CWP I (DLR)

− Tower CWP II (PAS)

Guidance/Communication

− Guidance Server, Data Link Interface (PAS, DLR)

− Onboard System for Test Van [GP&C, Interface, Pilot HMI] (DLR)

− Onboard System for Test Aircraft [GP&C, Interface, Pilot HMI] (DLR)

System Management

− System Management (DLR)

− Recording (DLR and PAS)

2.2 Test Equipment at Braunschweig

The following test equipment is available at Braunschweig:

Test Van (DLR), equipped with:

• GP&C transponder

• Mode S transponder

• Driver HMI

• SAPOS (high precision DGPS)

• INS, Inertial Navigation System

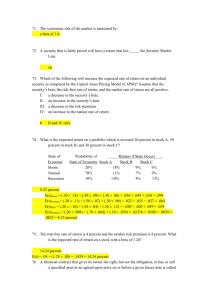

The subsystems of the Test Van are shown in Figure 2-2.

The Test Van can be used as a reference for the position calibration and for measuring the performance of the A-SMGCS surveillance part. SAPOS provides a position accuracy of better than 10 cm at an update rate of once per second. To fill the gap between two SAPOS fixes, the reference position can be calculated by interpolation using the INS velocity, which has an update rate of 10 Hz.
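The interpolation between SAPOS fixes can be sketched as simple dead reckoning. This is an illustration only. It assumes positions in a local metric x/y frame, time-sorted inputs, and an INS sample at or shortly after each SAPOS fix. `interpolate_reference` is a hypothetical name and is not part of the DLR Test Van software.

```python
from bisect import bisect_right


def interpolate_reference(t, sapos_fixes, ins_velocities):
    """Estimate the reference position (x, y) in metres at time t in seconds.

    sapos_fixes: (time, x, y) tuples at about 1 Hz, sorted by time.
    ins_velocities: (time, vx, vy) tuples at about 10 Hz, sorted by time, in m/s.
    The position is dead-reckoned forward from the latest SAPOS fix at or
    before t, holding each INS velocity sample constant until the next sample.
    """
    fix_times = [fix[0] for fix in sapos_fixes]
    i = bisect_right(fix_times, t) - 1
    if i < 0:
        raise ValueError("no SAPOS fix at or before t")
    t0, x, y = sapos_fixes[i]
    # Only INS samples between the fix and t contribute to the displacement.
    samples = [s for s in ins_velocities if t0 <= s[0] < t]
    for k, (ts, vx, vy) in enumerate(samples):
        t_end = samples[k + 1][0] if k + 1 < len(samples) else t
        x += vx * (t_end - ts)
        y += vy * (t_end - ts)
    return x, y


# Usage (hypothetical data): van moving east at 10 m/s, SAPOS fixes at 0 s and 1 s.
fixes = [(0.0, 0.0, 0.0), (1.0, 10.0, 0.0)]
ins = [(k / 10, 10.0, 0.0) for k in range(20)]
print(interpolate_reference(1.25, fixes, ins))
```

A linear blend between two consecutive SAPOS fixes would be a simpler alternative. Dead reckoning with the 10 Hz INS velocity, however, follows the approach described in the text.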

Specifications of the reference system, SAPOS:

• Position accuracy: 0.1 m

• Velocity accuracy: 0.2 m/s

• Update rate: 1 Hz

Specifications of the inertial navigation system, INS:

• Velocity accuracy: 1.0 m/s

• Update rate: 10 Hz

• Heading accuracy: 1 degree

Data are recorded both onboard and, via a telemetry data link, on the ground.

Onboard recording:

The update rate for the onboard recording is 1 Hz for SAPOS position reports and 10 Hz for INS velocity and heading reports.

Ground recording:

SAPOS position reports and INS velocity reports are transmitted via telemetry to the ground station and are recorded at an update rate of 1 Hz.

Test Aircraft (DLR), equipped with:

• GP&C transponder

• Mode S transponder

• Pilot HMI

Test Car (DLR), equipped with:

• GP&C transponder

[Figure 2-1 Subsystems and Data-Logger at Braunschweig. Blocks shown: ARMI System (DLR), GP&C System (DLR), Mode S System (ERA), NRN System (DLR), SDF/DBMS (DLR), Surveillance Data Server/Target Database (PAS), Guidance Server (PAS), ATCO HMI (DLR), C HMI (PAS), GP&C-Datalink; Data Loggers D1, D2, D3 and GP&C (DLR) and Data Logger C (PAS); interfaces SSIC, SSIC to ASTERIX, ASTERIX and CORBA.]

[Figure 2-2 DLR Test Van at Braunschweig. On-board system: GPS L1/L2, VHF and UHF antennas, INS LTN-90 (ARINC 429), GP&C D-GPS, SAPOS D-GPS, GPS clock, signal conditioning processor, Mode S system, driver monitor, PC Driver I, PC Pilot HMI, GP&C server, CISCO router and 2ASx telemetry. On-ground system: 2ASx telemetry, GP&C D-GPS, CISCO router, GP&C monitor, AVES display, TELE-Log and DEFAMM logger.]

2.3 Data Recording used at Braunschweig

SDF-Logger (DLR)

Recording SDF data (Surveillance Data Fusion) for offline evaluation.

Recording SDS data (Surveillance Data Server) for offline evaluation.

Recording of the output data from all sensors:

• SDF-In/Out: recording all data at the SDF input and output (position reports)

• NRN: recording NRN position reports

• Mode-S: recording Mode-S position reports

• ARMI: recording ARMI reports

• GP&C: recording GP&C position reports

SDS-Logger (PAS)

Recording all SDS data (Surveillance Data Server) for offline evaluation.

• SDS-In/Out: recording all data at the SDS (position reports)

HMI-Logger (DLR)

Recording all HMI data for offline replay and offline evaluation.

• ATCO-HMI, air traffic controller human machine interface.

• Pilot HMI, pilot human machine interface.

HMI-Logger (PAS)

Recording all data at the PAS HMI for offline replay and offline evaluation.

• ATCO-HMI, air traffic controller human machine interface.

GP&C-Logger (DLR)

• GP&C Data Link Logger: recording all data on the GP&C data link for the guidance tests, and recording all position reports of GP&C-equipped aircraft and cars.

• GP&C CATS Logger: recording GP&C data for offline demonstration of the movements of GP&C-equipped aircraft and cars.

Video Camera Recorder

• For offline demonstration, the HMI and the situation on the airfield are recorded with a video camera with time marks.

Form Sheets

• ON: Observer Notes

• DEB: Debriefing Notes

• QUE: Questionnaire

• TPR: Test Protocol

2.4 Evaluation Tools

The following evaluation tools are defined for offline evaluation.

Evaluation tools for offline analysis of recorded data:

SWA-P: Analysis software for time-stamped position reports.

Different software tools are needed to derive the parameters from the recorded data during offline analysis.

Form sheets:

QUE: Filled-out questionnaires.

DEB: Debriefing notes.

ON: Observer notes.

TPR: Test protocols.

2.5 Human Actors during the BETA Test at Braunschweig

The human actors during the BETA tests are defined in [3] and listed below, with the responsible organisation in brackets:

OTC: Operational Test Co-ordinator (DLR)

TTC: Technical Test Co-ordinator (DLR)

BC: BETA Controller (Controller at Braunschweig Airport)

BO: BETA Operator and BETA Observer (PAS & DLR) and BETA Interviewer (DLR)

Driver: BETA Test Van and Test Car Driver (DLR)

Pilot: BETA Test Aircraft Pilot (DLR)

ATCO: Air Traffic Controller in the Tower (BWE)

3. Testing A-SMGCS Performance Parameters

3.1 Testing Surveillance Performance Parameters

Two scenarios for testing the Surveillance Performance Parameters are planned:

• Position Accuracy, tested with Case Studies.

• Classification, tested with Regular Traffic Studies.

3.1.1 Testing Surveillance Accuracy (F1)

These tests are performed as case studies.

The goal of this test is to evaluate the position accuracy and time latency of the surveillance system, as described in the chapter "Technical Function Test" of the General Test Concept [2]. All surveillance sensors (Mode-S, NRN, ARMI and GP&C) and the output of the Surveillance Data Fusion will be tested. The surveillance function must automatically gather accurate and timely position information on aircraft, vehicles and other objects within the surveyed area of the aerodrome surface and the surrounding airspace.

Surveillance Position Accuracy Parameters [2]:

• Probability of Detection of co-operative and non co-operative sensors (PD)

• Probability of False Detection of co-operative and non co-operative sensors (PFD)

• Probability of Identification of co-operative targets (PID)

• Probability of False Identification of co-operative targets (PFID)

• Reported Position Accuracy (RPA)

• Reported Velocity Accuracy (RVA)

• Target Report Update Rate (TRUR)

• Target Report Latency (TRL)

• Position Renewal Time Out Period (PRTOP)

• Target Separation

Test Procedure:

The position accuracy parameters are tested by comparing the position reports of each sensor and of the Surveillance Data Fusion (SDF) with a reference position. The reference position is derived from the satellite positioning system (SAPOS) and the inertial navigation system (INS) installed in the Test Van.

Test Scenario:

F1A: Test Van taxiing on RWY

The Test Van taxies on RWY 26/08, on TWYs C, A, B and F and on the apron, following the centreline.

For the static test of the Reported Position Accuracy, the Test Van is stopped at known positions on the TWY and RWY.

The dynamic test will be repeated at different velocities, e.g. 10 m/s and 20 m/s.

F1B: A/C Take Off and Landing

Take-off and landing of the Test A/C on RWY 26/08 and taxiing to the apron.


F1C: A/C and Test Van taxiing on RWY

Take-off and landing of the Test A/C on RWY 26/08 and taxiing to the apron.

The Test Van taxies on RWY 26/08, on TWYs C, A, B and F and on the apron.

The Test Van follows the aircraft at a distance of more than 100 m and then approaches it to a distance of less than 10 m.

F1D: Test Van and a GP&C-equipped Test Car taxiing on taxiways and runway

The two test vehicles taxi on RWY 26/08 and on TWYs A, B and F.

The Test Car moves in the opposite direction and passes the Test Van.

Data Recording:

• NRN-Data-Logger: NRN data recorded at SDF input

• Mode-S-Data-Logger: Mode-S data recorded at SDF input

• GP&C-Data-Logger: GP&C data recorded at SDF input

• SDF-In/Out-Logger: recording all data at SDF input and output

• SDS-In/Out-Logger: recording all data at SDS input and output

• HMI-Logger: recording all data at HMI

• GP&C-Data Link Logger: recording GP&C radio link data

• GP&C-CATS: recording GP&C data for offline display

Test protocol of BETA Observer:

Observing the real airport traffic and the HMI output:

Number and operation time of GP&C equipped vehicles in the vicinity.

Number of GP&C equipped vehicles shown on the HMI.

Number and operation time of unequipped vehicles in the vicinity.

Number of all vehicles shown on the HMI.

BETA Test Equipment and Human Actors involved:

F1A

1

2

3

Test Equipment

BETA Test Van (DLR), GP&C and Mode-S equipped

BETA Test A/C (DLR), GP&C and Mode-S equipped

Test Car, GP&C equipped

1

2

Human Actors

Operational Test Co-ordinator

Technical Test Co-ordinator

3

4

5

6

7

8

9

BETA Test VAN Driver No. 1

BETA Test Aircraft Pilot

BETA Test Vehicle Driver No. 2

BETA Operator (DLR) for NRN, Mode-S, GP&C, SDF, HMI

BETA Operator (PAS) for Data Server and HMI

BETA Observer ( DLR, Observer for Airport and HMI )

BETA Operator for Test Van

F1B

F1C

F1D

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

Table 3-1 Human Actors involved in F1 Tests

Measuring Instruments:

• Analysis software (SWA-P) for time-stamped position reports from each sensor and the SDF output,

• Observer Notes, ON,

• Debriefing reports, DEB,

• Questionnaires, QUE.

Measuring the Probability of Detection (PD)

Analysis of the SDF-Logging data to get the number of reports from the test targets

(= total number of correct reports ).

Analysis of the Observer Notes to get the number of traffic objects recorded

(= expected number of reports ).

Calculate the Probability of Detection using the following formula:

PD = ( Total no. of correct reports ) / ( Expected no. of reports ) * 100%

This calculation will be done for each sensor ( co-operative and non co-operative sensor ) and for the

SDF output.
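The per-source PD calculation can be sketched in a few lines. The function name and the counts in the usage example are hypothetical illustrations, not BETA results and not part of SWA-P. The correct-report counts would come from the SDF logging data and the expected counts from the Observer Notes.

```python
def probability_of_detection(correct_reports, expected_reports):
    """PD in percent = correct reports / expected reports * 100."""
    if expected_reports <= 0:
        raise ValueError("expected_reports must be positive")
    return 100.0 * correct_reports / expected_reports


# Hypothetical counts per source: (correct reports from SDF log, expected reports from ON).
counts = {"NRN": (3420, 3600), "Mode-S": (3564, 3600), "SDF": (3600, 3600)}
for source, (correct, expected) in counts.items():
    print(f"{source}: PD = {probability_of_detection(correct, expected):.2f} %")
```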

Measuring the Probability of False Detection (PFD)

Analysis of the SDF-Logging data to get the number of reports from the test targets

(= total number of target reports ).

Analysis of the Observer Notes to get the number of fixed targets

(= number of fixed targets ).

Analysis of the SDF-Logging data to get the number of updates

(= number of updates ).

Calculate the Probability of False Detection using the following formula:

PFD = [ No. of target reports – ( No. of fixed targets x No. of updates ) ] / ( No. of updates ) * 100%

This calculation will be done for each sensor ( co-operative and non co-operative sensor ) and for the

SDF output.
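A minimal sketch of the PFD calculation follows. It reads the formula as [reports − (fixed targets × updates)] / updates × 100 %, so that every report beyond the expected fixed-target reports counts as false. The function name and the numbers are hypothetical.

```python
def probability_of_false_detection(target_reports, fixed_targets, updates):
    """PFD in percent: [target reports - (fixed targets x updates)] / updates * 100."""
    if updates <= 0:
        raise ValueError("updates must be positive")
    false_reports = target_reports - fixed_targets * updates
    return 100.0 * false_reports / updates


# Hypothetical example: 3 fixed targets over 3600 updates, 10812 reports logged.
print(f"PFD = {probability_of_false_detection(10812, 3, 3600):.3f} %")
```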

Measuring the Probability of Identification of co-operative targets (PID)

Analysis of the SDF-Logging data to get the number of target reports with correct identification.

Analysis of the Observer Notes to get the total number of reports from identifiable targets.

Calculate the Probability of Identification using the following formula:

PID = ( No. of target reports with correct identification )

/ ( Total no. of reports from identifiable targets)

* 100%

This calculation will be done only for co-operative sensors, at Braunschweig for the GP&C system

and the Mode-S system.

Measuring the Probability of False Identification of co-operative targets (PFID)

Analysis of the SDF-Logging data to get the number of target reports with erroneous identification.

Analysis of the Observer Notes to get the total number of reports from identifiable targets.

Calculate the Probability of False Identification using the following formula:

PFID = ( No. of target reports with erroneous identification )

/ ( Total no. of target reports ) * 100%

This calculation will be done only for co-operative sensors, at Braunschweig for the GP&C System

and the Mode-S system.
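PID and PFID are both simple ratios and can be computed side by side for the GP&C and Mode-S systems. This is a sketch with hypothetical function names and example counts. The denominators follow the two formulas as written.

```python
def probability_of_identification(correct_id_reports, identifiable_reports):
    """PID in percent: reports with correct identification / reports from identifiable targets."""
    return 100.0 * correct_id_reports / identifiable_reports


def probability_of_false_identification(erroneous_id_reports, target_reports):
    """PFID in percent: reports with erroneous identification / total target reports."""
    return 100.0 * erroneous_id_reports / target_reports


# Hypothetical counts for one co-operative sensor.
print(probability_of_identification(990, 1000))
print(probability_of_false_identification(5, 1000))
```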


Measuring the Reported Position Accuracy (RPA)

The true position is recorded by the SAPOS reference system. The reported position of each sensor is compared with the true position.

a) Static Reported Position Accuracy:

The true position is given by the SAPOS position report. If the reported A-SMGCS position can be read out online, the difference between the reported position and the true position can be calculated directly while the Test Van or aircraft is stopped.

b) Dynamic Reported Position Accuracy:

The true position is given by SAPOS and the INS. The difference between the reported position and the true position can be calculated offline using the timestamps.

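Calculate the RPA as follows:

For each position report i, the errors in the X position, Δx, and in the Y position, Δy, are calculated with:

$$\Delta x_i = x_{\text{true},i} - x_{\text{reported},i}, \qquad \Delta y_i = y_{\text{true},i} - y_{\text{reported},i}$$

Mean deviation in X and Y:

$$m_x = \frac{1}{n}\sum_{i=1}^{n} \Delta x_i, \qquad m_y = \frac{1}{n}\sum_{i=1}^{n} \Delta y_i$$

Quadratic mean in X and Y:

$$q_x = \frac{1}{n}\sum_{i=1}^{n} (\Delta x_i)^2, \qquad q_y = \frac{1}{n}\sum_{i=1}^{n} (\Delta y_i)^2$$

$$\mathrm{RMS}_x = \sqrt{q_x - m_x^2}, \qquad \mathrm{RMS}_y = \sqrt{q_y - m_y^2}$$

$$R_x = C \cdot \mathrm{RMS}_x + m_x, \qquad R_y = C \cdot \mathrm{RMS}_y + m_y \qquad \text{(in metres)}$$

$$\mathrm{RPA} = \sqrt{R_x^2 + R_y^2}$$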

where the coefficient C is given by the following table:

Confidence Level (%) | C
90 | 1.645
91 | 1.695
92 | 1.751
93 | 1.812
94 | 1.881
95 | 1.960
96 | 2.054
97 | 2.170
98 | 2.326
99 | 2.576

Table 3-2 Confidence Level
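The RPA procedure can be sketched in Python as follows. It computes the per-axis mean, the quadratic mean, RMS = √(q − m²) and R = C·RMS + m, then combines the axes. The mean is added with its sign, as in the formula. The function names and the pairing of true and reported positions by timestamp are assumptions for illustration. This is not the SWA-P implementation.

```python
import math

# Coefficient C per confidence level (%), as listed in Table 3-2.
C_TABLE = {90: 1.645, 91: 1.695, 92: 1.751, 93: 1.812, 94: 1.881,
           95: 1.960, 96: 2.054, 97: 2.170, 98: 2.326, 99: 2.576}


def axis_bound(errors, c):
    """R = C * RMS + m for one axis, with RMS = sqrt(q - m^2)."""
    n = len(errors)
    m = sum(errors) / n                    # mean deviation
    q = sum(e * e for e in errors) / n     # quadratic mean
    rms = math.sqrt(max(q - m * m, 0.0))   # clamp tiny negative rounding error
    return c * rms + m


def reported_position_accuracy(true_xy, reported_xy, confidence=95):
    """RPA in metres from time-paired true (SAPOS) and reported (x, y) positions."""
    c = C_TABLE[confidence]
    dx = [t[0] - r[0] for t, r in zip(true_xy, reported_xy)]
    dy = [t[1] - r[1] for t, r in zip(true_xy, reported_xy)]
    return math.hypot(axis_bound(dx, c), axis_bound(dy, c))


# Hypothetical pairs: reported X alternates 1 m either side of the truth.
true_pos = [(1.0, 0.0), (-1.0, 0.0), (1.0, 0.0), (-1.0, 0.0)]
reported = [(0.0, 0.0)] * 4
print(reported_position_accuracy(true_pos, reported, confidence=95))
```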

Measuring the Reported Velocity Accuracy (RVA)

The true velocity is given by the INS or by SAPOS. The data are recorded onboard during the test. The reported velocity (speed and heading, or speed x and speed y) is compared with the true velocity.

This calculation will be done only for the co-operative GP&C sensor and for the position reports at the SDF output, using the dynamic tests.

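Calculate the RVA as follows [4]:

For each position report i, calculate the error in velocity, Δv, and in heading, Δφ:

$$\Delta v_i = v_{\text{true},i} - v_{\text{reported},i}, \qquad \Delta\varphi_i = \varphi_{\text{true},i} - \varphi_{\text{reported},i}$$

Mean deviation in velocity and heading:

$$m_v = \frac{1}{n}\sum_{i=1}^{n} \Delta v_i, \qquad m_\varphi = \frac{1}{n}\sum_{i=1}^{n} \Delta\varphi_i$$

Quadratic mean in velocity and heading:

$$q_v = \frac{1}{n}\sum_{i=1}^{n} (\Delta v_i)^2, \qquad q_\varphi = \frac{1}{n}\sum_{i=1}^{n} (\Delta\varphi_i)^2$$

$$\mathrm{RMS}_v = \sqrt{q_v - m_v^2}, \qquad \mathrm{RMS}_\varphi = \sqrt{q_\varphi - m_\varphi^2}$$

$$R_v = C \cdot \mathrm{RMS}_v + m_v \ \text{(in m/s)}, \qquad R_\varphi = C \cdot \mathrm{RMS}_\varphi + m_\varphi \ \text{(in degrees)}$$

where the coefficient C is given by Table 3-2.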
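The RVA calculation mirrors the RPA one, with speed and heading in place of X and Y. This sketch adds one assumption that the procedure above does not state: heading errors are wrapped into [−180°, 180°). Without the wrap, a true heading of 359° and a reported heading of 1° would yield a 358° error. The function names are hypothetical.

```python
import math


def wrap_degrees(angle):
    """Map a heading difference into [-180, 180) degrees (assumption, see text)."""
    return (angle + 180.0) % 360.0 - 180.0


def bound(errors, c):
    """R = C * RMS + m, with RMS = sqrt(q - m^2) as in the RVA formulas."""
    n = len(errors)
    m = sum(errors) / n
    q = sum(e * e for e in errors) / n
    return c * math.sqrt(max(q - m * m, 0.0)) + m


def reported_velocity_accuracy(true_speed, rep_speed, true_hdg, rep_hdg, c=1.960):
    """Return (R_v in m/s, R_phi in degrees); c defaults to the 95 % value of Table 3-2."""
    dv = [t - r for t, r in zip(true_speed, rep_speed)]
    dphi = [wrap_degrees(t - r) for t, r in zip(true_hdg, rep_hdg)]
    return bound(dv, c), bound(dphi, c)


# Hypothetical example: speed 1 m/s low, heading reported 2 degrees clockwise of true.
print(reported_velocity_accuracy([10.0, 10.0], [9.0, 9.0], [359.0, 359.0], [1.0, 1.0]))
```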

Measuring the Target Report Update Rate (TRUR)

Analysis of the SDF logging data to get the number of reports from the test targets.

TRUR = ( No. of target reports ) / ( No. of seconds ), in reports per second

This calculation will be done for each sensor and for the SDF output.
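Because TRUR is computed for each sensor and for the SDF output, a small helper can group logged reports by source. This is a sketch under the assumption that the logger output is available as (source, timestamp) pairs and that the observation period is known. The record layout and function name are hypothetical.

```python
from collections import Counter


def update_rates(records, observation_seconds):
    """Target Report Update Rate per source, in reports per second.

    records: iterable of (source, timestamp) pairs from the logger,
    e.g. ("NRN", 12.0); only the source names are counted here.
    """
    if observation_seconds <= 0:
        raise ValueError("observation_seconds must be positive")
    counts = Counter(source for source, _ in records)
    return {source: n / observation_seconds for source, n in counts.items()}


# Hypothetical 10 s extract: NRN at 1 Hz, SDF output at 2 Hz.
records = [("NRN", float(t)) for t in range(10)] + [("SDF", t * 0.5) for t in range(20)]
print(update_rates(records, 10.0))
```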

Measuring the Target Report Latency (TRL)

Each position report contains its timestamp (TPRT), and the time of reception (RT) at the data logger is recorded as well. Assuming that the delivery time to the SDF and to the data logger process is the same, the difference between the two is the Target Report Latency. It will be shown as a time histogram.

TRL i = ( Reception Timestamp ) i – ( Target Position Report Timestamp ) i

This calculation will be done for each sensor and for the output of the Surveillance Data Server.
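The latency histogram can be sketched as follows. Latency is taken as reception time minus report timestamp, so that it is positive. The sketch assumes both timestamps are integers in milliseconds, which keeps the binning exact and avoids floating-point edge effects at bin boundaries. The 100 ms bin width and the function name are hypothetical choices.

```python
from collections import Counter


def latency_histogram(reports, bin_ms=100):
    """Histogram of Target Report Latency.

    reports: iterable of (tprt_ms, rt_ms) pairs, i.e. the timestamp in the
    position report and the reception time at the data logger, in integer ms.
    Returns {bin_start_ms: count}, sorted by bin start.
    """
    latencies = [rt - tprt for tprt, rt in reports]
    if any(lat < 0 for lat in latencies):
        raise ValueError("reception before report time: check clock synchronisation")
    counts = Counter((lat // bin_ms) * bin_ms for lat in latencies)
    return dict(sorted(counts.items()))


# Hypothetical reports with latencies of 120, 150, 90 and 310 ms.
print(latency_histogram([(0, 120), (1000, 1150), (2000, 2090), (3000, 3310)]))
```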

Measuring the Position Renewal Time Out Period (PRTOP)

Analysis of the SDF logging data to get the number of reports from the test targets.

Count the time-out periods over an observation time of 3600 s, i.e. 3600 possible position reports, and express each count as a percentage of the 3600 possible reports:

Time Out Period | Number of occurrences (N) | Percentage (%)
1 s | 3000 | 83.33
2 s | 220 | 6.11
3 s | 30 | 0.83
4 s | 10 | 0.28
15 s | 2 | 0.06

This calculation will be done for each sensor and for the SDF output.
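Counting time-out periods can be sketched as follows. The sketch assumes that a time-out period is the gap between two consecutive position reports of the same target, rounded to whole seconds. Each count is divided by the observation time in seconds, as in the table. The function name and example timestamps are hypothetical.

```python
from collections import Counter


def timeout_distribution(report_times, observation_s=3600):
    """Return {gap_s: (count, percentage)} for gaps between consecutive reports.

    report_times: report timestamps in seconds for one target and one source.
    Percentages are relative to observation_s possible 1 Hz reports.
    """
    times = sorted(report_times)
    gaps = [round(b - a) for a, b in zip(times, times[1:])]
    counts = Counter(gaps)
    return {gap: (n, 100.0 * n / observation_s) for gap, n in sorted(counts.items())}


# Hypothetical 100 s extract with one 2 s and one 15 s time-out.
print(timeout_distribution([0, 1, 2, 4, 5, 20], observation_s=100))
```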

Measuring the Target Separation of non-co-operative targets

Determine the smallest distance at which two targets are still shown as separate targets. Two targets operate on the airfield, and one target passes the other. Evaluate the distance at the point where the two targets are shown as one target on the HMI.

The distance is measured by analysing the SDF logging data at the sensor output and at the SDF output.
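The evaluation of the separation point can be sketched as a scan over time-ordered samples. The sketch assumes that, for each time step, the reference positions of both test targets are known (for example from GP&C or SAPOS). It also assumes the number of targets the surveillance output reports for them has been extracted from the logging data. The sample layout and function name are hypothetical.

```python
import math


def separation_at_merge(samples):
    """Return (time, distance_m) at the first sample where two targets that
    were previously reported separately are reported as a single target.

    samples: time-ordered list of (t, (xa, ya), (xb, yb), n_reported), with
    reference positions in metres and n_reported the number of targets shown.
    Returns None if the targets never merge.
    """
    seen_two = False
    for t, (xa, ya), (xb, yb), n in samples:
        if n >= 2:
            seen_two = True
        elif n == 1 and seen_two:
            return t, math.hypot(xa - xb, ya - yb)
    return None


# Hypothetical pass: the targets merge when 13 m apart.
samples = [(0, (0, 0), (100, 0), 2), (1, (0, 0), (50, 0), 2), (2, (0, 0), (12, 5), 1)]
print(separation_at_merge(samples))
```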

Measuring the Coverage Volume (CV)

Analysis of the SDF-Logging data by plotting all tracks over the airport map.

This evaluation will be done for co-operative and non co-operative sensors.

[Figure 3-1 Scenario for Functional Performance Tests F1. Map of Braunschweig airport; labels include RWY 08/26, dimensions 1560 x 30 m and 900 x 50 m, TWYs A, B, C, D, E, F and G, Apron, LBA Apron, DLR Apron, Aerodata, Refuelling Station, Glider, and the scenarios F1A and F1D.]

3.1.2 Testing Surveillance Identification and Classification Parameters (F2)

The surveillance system has to detect the co-operative targets (aircraft and controlled vehicles) and identify them by call sign or some other unique identifier. The non-co-operative targets and obstacles should be detected and classified by size or type. The goal of this test is to verify these functions through Regular Traffic Studies.

Surveillance identification and classification parameters for non-co-operative targets [2]:

• Probability of False Detection of non-co-operative targets (PFD)

• Probability of False Classification of non-co-operative targets (PFC)

• Coverage Volume of non-co-operative targets (CV)

Test Procedure:

The surveillance parameters (false detection, false classification and coverage volume) are tested by having the BETA Observer watch the airport traffic for a period of more than 1 hour. The observer writes a protocol of the relevant differences between the actual airport traffic and the airport situation shown on the ATCO-HMI display.

For offline replay, the data at the SDF output and at the ATCO-HMI input will be recorded. A plot of the ATCO-HMI input data shows the coverage area.

No specific procedures with the Test Van or Test A/C are necessary.

Test Scenario:

F2A: Test during normal airport traffic situation.

The BETA Observer writes a protocol of the situation and of the differences between the ATCO-HMI display and the real airport situation. The duration shall be more than 1 hour.

F2B: Test with absence of traffic (e.g. overnight).

The BETA Observer writes a protocol of the situation and of the differences between the ATCO-HMI display and the real airport situation during the absence of any known targets (typically overnight). The duration shall be more than 1 hour.

Data Recording:

• NRN-Logger: NRN data recorded at SDF input

• Mode-S-Logger: Mode-S data recorded at SDF input

• GP&C-Logger: GP&C data recorded at SDF input

• SDF In/Out-Logger: recording all data at SDF input and output

• SDS In/Out-Logger: recording all data at SDS input and output

• HMI-Logger: recording all data at HMI

BETA Test Equipment and Human Actors involved:

1

2

3

4

5

F2A

F2B

X

X

X

X

X

X

Test Equipment

Human Actors

Operational Test Co-ordinator

Technical Test Co-ordinator

BETA Operator (DLR) for NRN, Mode-S, GP&C, SDF, HMI

BETA Operator (PAS) for Data Server and HMI

BETA Observer ( Observer for Airport Traffic and HMI )

Table 3-3 Human Actors involved in F2 Tests


Test protocol of BETA Observer:

The following items should be noted in the test protocol. For validation, an ATCO-HMI replay of the HMI input data can be used.

Protocol for approaching aircraft:

Observing real airport traffic:

Call Sign

Registration Mark

Type of Aircraft

Landing Time

Runway and Taxi Routing

Parking Place

Total number of identifiable targets

Total number of targets

Observing ATCO-HMI:

Appearance Time

Landing Time

Identification

Classification

Disappearance Time

Number of target reports with correct identification

Number of target reports with erroneous identification

Number of false classifications

Protocol for departing aircraft:

Observing real airport traffic:

Call Sign

Registration Mark

Type of Aircraft

Parking Place

Taxi Routing and Runway

Take Off Time

Total number of identifiable targets

Total number of targets

Observing ATCO-HMI:

Appearance Time

Identification

Classification

Taxi Routing and Runway

Take Off Time

Disappearance Time

Number of target reports with correct identification

Number of target reports with erroneous identification

Number of false classifications

Measuring Instruments:

Measuring Instruments are Observer Notes, Debriefing and Questionnaire (ON, DEB, QUE).

Measuring the Probability of False Detection (PFD)


For this test, a period of low traffic should be selected, e.g. at night.

Analyse the SDF logging data to obtain the number of reports from the test targets.

Analyse the Observer Notes to obtain the number of fixed targets.

Analyse the SDF logging data to obtain the number of updates.

Calculate the Probability of False Detection using the following formula:

$$\mathrm{PFD} = \frac{(\text{No. of target reports} - \text{No. of known targets}) - (\text{No. of fixed targets} \times \text{No. of updates})}{\text{No. of updates}}$$

This calculation applies only to non co-operative sensors; at Braunschweig, this is the NRN system.
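A minimal Python sketch of the PFD calculation, assuming the four counts have already been extracted from the SDF logging data and the Observer Notes. The function and argument names are hypothetical (they are not part of any BETA tool), and the formula is implemented exactly as stated above.

```python
def probability_of_false_detection(n_target_reports: int,
                                   n_known_targets: int,
                                   n_fixed_targets: int,
                                   n_updates: int) -> float:
    """PFD as defined in the F2 procedure (hypothetical helper).

    n_target_reports: target reports logged at the SDF for the NRN sensor
    n_known_targets:  number of known targets in the evaluation period
    n_fixed_targets:  fixed targets noted by the BETA Observer
    n_updates:        number of updates in the evaluation period
    """
    if n_updates <= 0:
        raise ValueError("n_updates must be positive")
    # (reports - known targets) - (fixed targets x updates), as in the formula
    excess_reports = (n_target_reports - n_known_targets) - n_fixed_targets * n_updates
    return excess_reports / n_updates


# Illustrative input values only, not BETA measurements.
pfd = probability_of_false_detection(n_target_reports=5000, n_known_targets=0,
                                     n_fixed_targets=4, n_updates=1200)
print(f"PFD = {pfd:.3f}")
```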

Measuring the Probability of False Classification (PFC)

Analyse the Observer Notes to obtain the number of target reports with erroneous classification and the total number of target reports.

Calculate the Probability of False Classification using the following formula:

$$\mathrm{PFC} = \frac{\text{No. of false classifications}}{\text{Total no. of target reports}} \times 100\,\%$$

This calculation applies only to non co-operative sensors; at Braunschweig, this is the NRN system.
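The PFC count can be sketched in Python as follows, assuming each observer-noted target report is reduced to a pair of classifications (shown on the ATCO-HMI versus observed on the airport). The `TargetReport` record and the class labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TargetReport:
    """One target report from the Observer Notes (hypothetical record layout)."""
    hmi_class: str   # classification shown on the ATCO-HMI, e.g. "aircraft"
    true_class: str  # classification observed on the airport, e.g. "vehicle"


def probability_of_false_classification(reports: list[TargetReport]) -> float:
    """PFC in percent: false classifications / total target reports * 100."""
    if not reports:
        raise ValueError("no target reports")
    n_false = sum(1 for r in reports if r.hmi_class != r.true_class)
    return n_false / len(reports) * 100.0
```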

Measuring the Coverage Volume (CV)

Analyse the SDF logging data by plotting all tracks over the airport map.

This evaluation will be done for both co-operative and non co-operative sensors.

3.2 Testing Monitoring/Alerting Performance Parameters (F3)

The role of the alerting function is to monitor and assess the aerodrome traffic situation against the planned situation and a set of safety rules, and to provide alerts in the event of situations requiring intervention. Alerting rules will be tailored to suit the aerodrome layout and operational conditions, and to ensure the required level of safety for the operations.

The goal of this test is to verify the Alert Functions with Case Studies (CS).

There are two Alert Functions to be tested:

• Runway Incursion Alert

• Violation of separation minima

Alerting Performance Parameters [2]:

• Probability of Detection of an Alert Situation (PDAS)

• Probability of False Alert (PFA)

• Alert Response Time (ART)

Test Procedure:

a) Runway Incursion Alert:

Just before the Test A/C takes off, the Test Van enters the runway and thereby triggers a Runway Incursion Alert.

b) Violation of Separation Minima:

Two GP&C-equipped Test Cars proceed along a taxiway and reduce the distance between them to a minimum. This should generate a Violation of Separation Alarm.


Test Scenario:

F3A: Runway Incursion Alert

The Test A/C proceeds from the DLR apron to RWY 26 via TWY F and A, lines up on RWY 26 and waits for take-off clearance.

The Test Van follows the A/C as number two via F and B. After the A/C has lined up, the Test Van enters RWY 26 at TWY B. The Test Van then leaves the RWY via D and returns to the apron via C and F.

After the Test Van has cleared the RWY, the Test A/C takes off, flies a traffic circuit, lands again and returns to the apron.

F3B: Violation of Separation Minima

Two GP&C-equipped Test Cars proceed along the taxiway. The second car overtakes the preceding car. A Violation of Separation Alarm should be generated.

Data Recording:

• SDS-Data In/Out-Logger: recording at SDS input and output
• HMI-Logger: recording of all data at the HMI
• GP&C-CATS: recording for GP&C offline replay
• Planning Data Logger: recording of route planning data
• Alert Data Logger: recording of alert data

BETA Test Equipment and Human Actors involved:

1

1

3

Test Equipment

BETA Test Van (DLR) GP&C equipped

BETA Test Car (DLR) GP&C equipped

BETA Test A/C (DLR) GP&C equipped

1

2

3

4

5

6

Human Actors

Operational Test Co-ordinator

Technical Test Co-ordinator

BETA Test Vehicle Driver I

BETA Test Vehicle Driver II

BETA Operator (DLR)

BETA Observer (Airport and HMI)

F3A

F3B

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

Table 3-4 Human Actors involved in F3A and F3B Tests

Test protocol of BETA Observer:

The following items should be noted in the test protocol. For validation, the ATCO-HMI replay can be used.

Protocol for approaching aircraft:

Observing real airport traffic:

Call Sign

Registration Mark

Type of Aircraft

Landing Time


Runway and Taxi Routing

Parking Place

Observing ATCO-HMI:

Appearance Time

Landing Time

Identification

Classification

Alert One Indication Time

Alert Two Indication Time

Alert Disappearance Time

A/C Symbol Disappearance Time

Protocol for departing aircraft:

Observing real airport traffic:

Call Sign

Registration Mark

Type of Aircraft

Parking Place

Taxi Routing and Runway

Take Off Time

Observing ATCO-HMI:

Appearance Time

Identification

Classification

Taxi Routing and Runway

Take Off Time

Alert One Indication Time

Alert Two Indication Time

Alert Disappearance Time

A/C Symbol Disappearance Time

Measuring Instruments:

Measuring Instruments are Observer Notes, Debriefing and Questionnaire (ON, DEB, QUE).

Measuring Probability of Detection of an Alert Situation (PDAS)

Calculate the Probability of Detection of an Alert Situation using the following formula:

$$\mathrm{PDAS} = \frac{\text{No. of correct alert reports}}{\text{Total no. of actual alert situations}} \times 100\,\%$$
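One way to obtain the PDAS counts from the recordings is to match raised alerts against the scripted alert situations. The Python sketch below assumes that each actual situation (e.g. the Test Van occupying RWY 26 in F3A) is known as a time window derived from the position reports, that an alert counts as correct if it has the same type and is raised inside that window plus a tolerance, and that each situation is counted at most once. The record types, the alert kind labels and the 5 s default tolerance are illustrative assumptions, not values from this test plan.

```python
from dataclasses import dataclass


@dataclass
class AlertSituation:
    """An actual alert situation derived from the position reports (hypothetical)."""
    kind: str     # e.g. "runway_incursion" or "separation"
    start: float  # seconds
    end: float    # seconds


@dataclass
class AlertReport:
    """An alert raised by the system, e.g. from the Alert Data Logger (hypothetical)."""
    kind: str
    time: float   # seconds


def pdas_percent(situations: list[AlertSituation],
                 alerts: list[AlertReport],
                 tolerance_s: float = 5.0) -> float:
    """PDAS in percent: detected alert situations / actual alert situations * 100.

    A situation counts as detected if at least one alert of the same kind is
    raised within [start, end + tolerance_s]; tolerance_s is an assumed value.
    """
    if not situations:
        raise ValueError("no actual alert situations")
    detected = sum(
        1 for s in situations
        if any(a.kind == s.kind and s.start <= a.time <= s.end + tolerance_s
               for a in alerts)
    )
    return detected / len(situations) * 100.0
```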

Measuring Probability of False Alert (PFA)

Calculate the Probability of a False Alert using the following formula:

$$\mathrm{PFA} = \frac{\text{No. of false alerts}}{\text{Total no. of aircraft movements}} \times 100\,\%$$
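A companion Python sketch for PFA treats every alert that falls inside no actual alert situation window as false. The window representation and the 5 s tolerance are hypothetical choices; the total number of aircraft movements is simply supplied by the caller.

```python
def pfa_percent(alert_times: list[float],
                situation_windows: list[tuple[float, float]],
                n_movements: int,
                tolerance_s: float = 5.0) -> float:
    """PFA in percent: false alerts / total aircraft movements * 100.

    alert_times:       times (s) at which the system raised an alert
    situation_windows: (start, end) times (s) of the actual alert situations
    n_movements:       total number of aircraft movements in the test period
    tolerance_s:       assumed grace period after a situation ends
    An alert is counted as false if it lies in no situation window.
    """
    if n_movements <= 0:
        raise ValueError("n_movements must be positive")
    n_false = sum(
        1 for t in alert_times
        if not any(start <= t <= end + tolerance_s
                   for start, end in situation_windows)
    )
    return n_false / n_movements * 100.0
```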

Measuring Alert Response Time (ART)

From the position reports, note the time t1 at which the target enters the restricted area, i.e. the event that should trigger the restricted area incursion alert. From the HMI recording, note the time t2 at which the alert is given.

Calculate the Alert Response Time over the n evaluated alerts using the following formula:

$$\mathrm{ART} = \frac{1}{n}\sum_{i=1}^{n}\left(t_{2,i} - t_{1,i}\right)$$
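The Python sketch below shows how t1 could be derived from the recorded position reports and combined with t2 from the HMI recording. It assumes positions in a local metric x/y frame and simplifies the restricted area to an axis-aligned rectangle; a real runway area would normally be a polygon in the airport coordinate system. `Rect`, `entry_time` and `alert_response_time` are hypothetical names.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Rect:
    """Restricted area as an axis-aligned rectangle in local metres (simplification)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def entry_time(track: list[tuple[float, float, float]], area: Rect) -> Optional[float]:
    """t1: time of the first position report inside the area, or None.

    track: time-ordered list of (t, x, y) position reports
    """
    for t, x, y in track:
        if area.contains(x, y):
            return t
    return None


def alert_response_time(events: list[tuple[float, float]]) -> float:
    """ART = (1/n) * sum(t2 - t1) over n (t1, t2) pairs."""
    if not events:
        raise ValueError("no alert events")
    return mean(t2 - t1 for t1, t2 in events)


# Illustrative values only: a vehicle crossing onto a 30 m wide runway strip.
runway = Rect(x_min=0.0, x_max=1560.0, y_min=-15.0, y_max=15.0)
van_track = [(10.0, 500.0, -60.0), (11.0, 500.0, -30.0),
             (12.0, 500.0, -10.0), (13.0, 500.0, 5.0)]
t1 = entry_time(van_track, runway)  # first report inside the strip
t2 = 13.4                           # alert time read from the HMI recording
print(alert_response_time([(t1, t2)]))
```

The first-report rule means t1 is quantised to the position update interval; with faster updates the ART estimate becomes correspondingly finer.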


3.3 Testing Guidance Performance Parameters (F5)

During the tests at Braunschweig, an onboard HMI is installed in both the Test Van (DLR) and the Test Aircraft. GP&C serves as the data link for transmitting guidance information from the ground system to the onboard system. The goal of this test is to verify the functionality of the guidance system by validating:

• the physical data link,
• the transmission of clearances,
• the transmission of read-backs and other information.

This will be tested by Case Studies.

Guidance performance parameters:

• Clearance Delivery Response Time (CDRT)

• Guidance Aid Response Time (GART)

• Guidance Aid Confirmation Time (GACT)

Test Procedure:

The BETA Operator at the Guidance Server generates a taxi plan. The taxi plan guidance information and the taxi plan clearances are transmitted to a Test Van or Test A/C equipped with a Pilot HMI. The BETA Operator in the Test Van or Test A/C operates the Pilot HMI and reports the incoming data to the ground station via radio. The BETA Operator at the Guidance Server writes a protocol of the actions and the response times. All data link information will be recorded by a GP&C Data Link Logger.

Test Scenario:

F5A: The Test Van, equipped with Pilot-HMI and GP&C data link, is located at a parking position on the ramp.

The BETA Operator at the guidance server generates a taxi plan and transmits the taxi clearance. The onboard BETA Operator in the Test Van operates the Pilot-HMI and reports the guidance information via radio to the ground station. The Test Van starts taxiing and follows the guidance information from the Pilot-HMI.

Both the BETA Operator at the guidance server and the BETA Operator at the Pilot-HMI write a protocol.

F5B: The Test A/C, equipped with Pilot-HMI and GP&C data link, is located at a parking position on the ramp.

The BETA Operator at the guidance server generates a taxi plan and transmits the taxi clearance. The Pilot operates the Pilot-HMI and reports the guidance information via radio to the ground station. The Test A/C taxis and follows the guidance information on the Pilot-HMI.

The BETA Operator at the guidance server writes a protocol.

Data Recording:

• GP&C-Data Link Logger

BETA Test Equipment and Human Actors involved:

F5A

1

1

1

Test Equipment

BETA Test Vehicle (DLR),

GP&C equipped and Pilot-HMI equipped

BETA Test A/C (DLR),

GP&C equipped and Pilot-HMI equipped

Human Actors

Operational Test Co-ordinator


X

X

X


F5B

X


2

3

4

5

6


Technical Test Co-ordinator

BETA Test Vehicle Driver (DLR)

BETA Pilot for Test A/C (DLR)

BETA Operator for Guidance Server (DLR)

BETA Operator for Pilot HMI (DLR)

F5A

X

X

X

X


F5B

X

X

X

X

Table 3-5 Human Actors involved in F5 Tests

Test protocol of BETA Operator:

The guidance server operator should note the following items in the test protocol:

Start Time of taxi plan generation

Response Time of taxi plan generation

Taxi plan routing

Measuring Instruments:

Measuring Instruments are Observer Notes, Debriefing and Questionnaires (ON, DEB, QUE), together with analysis of the data recorded by the GP&C Data Link Logger and the Pilot HMI Data Logger.

Measuring the Clearance Delivery Response Time (CDRT)

Read out the clearance delivery time from the GP&C Data Link Logger (t1 = time of transmission of the clearance).

Read out the clearance response time from the GP&C Data Link Logger (t2 = time of receipt of the response to the clearance).

Calculate the Clearance Delivery Response Time over the n evaluated clearances using the following formula:

$$\mathrm{CDRT} = \frac{1}{n}\sum_{i=1}^{n}\left(t_{2,i} - t_{1,i}\right)$$

Measuring the Guidance Aid Response Time (GART)

Read out the guidance aid transmission time from the GP&C Data Link Logger (t1 = time of transmission of the guidance aid information).

Read out the guidance aid response time from the GP&C Data Link Logger (t2 = time of receipt of a response from the guidance aid).

Calculate the Guidance Aid Response Time over the n evaluated transmissions using the following formula:

$$\mathrm{GART} = \frac{1}{n}\sum_{i=1}^{n}\left(t_{2,i} - t_{1,i}\right)$$

Measuring the Guidance Aid Confirmation Time (GACT)

Read out the guidance aid transmission time from the GP&C Data Link Logger (t1 = time of transmission of the guidance aid information).

Read out the guidance aid confirmation time from the GP&C Data Link Logger (t2 = time of receipt of the confirmation of the guidance aid).

Calculate the Guidance Aid Confirmation Time over the n evaluated transmissions using the following formula:

$$\mathrm{GACT} = \frac{1}{n}\sum_{i=1}^{n}\left(t_{2,i} - t_{1,i}\right)$$
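CDRT, GART and GACT all average (t2 - t1) and differ only in which logged events provide t1 and t2. The Python sketch below computes all three from one event list, assuming the GP&C Data Link Logger output has been parsed into (message id, event, time) tuples; the tuple layout, the message ids and the event names "tx", "response" and "confirm" are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_delay(log, start_event: str, end_event: str) -> float:
    """Mean (t2 - t1) over all messages that have both events.

    log:         iterable of (msg_id, event, time_s) tuples (assumed layout)
    start_event: event giving t1, e.g. "tx"
    end_event:   event giving t2, e.g. "response" or "confirm"
    """
    times: dict[str, dict[str, float]] = defaultdict(dict)
    for msg_id, event, t in log:
        # keep the first occurrence of each event per message
        times[msg_id].setdefault(event, t)
    deltas = [ev[end_event] - ev[start_event]
              for ev in times.values()
              if start_event in ev and end_event in ev]
    if not deltas:
        raise ValueError(f"no complete {start_event} -> {end_event} pairs")
    return mean(deltas)


# Illustrative log only: two clearances and one guidance aid message.
log = [
    ("clr-1", "tx", 0.0), ("clr-1", "response", 2.5),
    ("clr-2", "tx", 10.0), ("clr-2", "response", 13.5),
    ("ga-1", "tx", 20.0), ("ga-1", "response", 20.8), ("ga-1", "confirm", 24.0),
]
clearances = [r for r in log if r[0].startswith("clr")]
guidance = [r for r in log if r[0].startswith("ga")]
print("CDRT", mean_delay(clearances, "tx", "response"))
print("GART", mean_delay(guidance, "tx", "response"))
print("GACT", mean_delay(guidance, "tx", "confirm"))
```

Messages lacking either event (e.g. a lost confirmation) are silently excluded from the mean, so their number should be reported separately in the test protocol.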


3.4 Testing ATCO-HMI Performance Parameters (F6)

Associated with each basic functional element of an A-SMGCS will be an HMI through which users can interact with the system. HMI performance parameters are mostly concerned with the ability of the HMI to provide accurate and timely presentation of information, with no degradation of the content or integrity of the information received from the source, and to quickly accept and process user inputs.

The HMI Performance Parameters will be tested in Case Studies (CAS).

HMI Performance Parameters:

• Position Registration Accuracy (PRA)

• Response Time to Operator Input (RTOI)

• Target Display Latency (TDL)

Test Procedure:

To test the position registration accuracy at the ATCO-HMI, a GP&C-equipped car will operate on all taxiways and runways. The car will drive along the centreline while an observer watches the HMI and records in a protocol whether the display is correct and timely. To generate the data for measuring the display latency, the car will drive along the RWY at high speed.

Test Scenario:

F6: The car drives on RWY 26/08, on taxiways F, C, A and B, and on the apron.

On the RWY, the car drives at high speed along the centreline.

Data Recording:

• SDF-In/Out-Logger: all data recorded at SDF input and output
• GP&C CATS: GP&C data recorded for offline replay
• HMI-Logger: all data recorded at the HMI

BETA Test Equipment and Human Actors involved:

Test Equipment                                              F6
1  BETA Test Vehicle (DLR) GP&C equipped                    X

Human Actors                                                F6
1  Operational Test Co-ordinator                            X
2  Technical Test Co-ordinator                              X
3  BETA Operator (DLR) for NRN, Mode-S, GP&C, SDF, HMI      X
4  BETA Operator (PAS) for Data Server and HMI              X
5  BETA Operator (Observer for ATCO-HMI)                    X

Table 3-6 Human Actors involved in F6 Tests

Test protocol of BETA Observer:

The following items should be noted in the test protocol. For validation, an ATCO-HMI replay can be used.

Protocol of ATCO-HMI Operator:

Events reported by the driver while the Test Car drives along the centreline

Response time to HMI input

Display time delay


Measuring Instruments:

Measuring Instruments are Observer Notes and Debriefing (ON, DEB), together with a video camera with a recording device.

Measuring the Position Registration Accuracy (PRA)

A time-stamped video will be produced for offline replay and for testing the Display Latency.

The Position Registration Accuracy is measured by analysing a replay of known moving targets.
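The test plan does not fix a metric for the position registration accuracy. One possible choice, sketched below in Python, is the RMS distance between the positions shown on the HMI replay and the reference positions of the known target (e.g. from GP&C) at the same time stamps. The metric, the local metric frame and the assumption that both lists are already time-matched are all assumptions of this sketch, and `position_rms_error` is a hypothetical name.

```python
import math


def position_rms_error(displayed: list[tuple[float, float]],
                       reference: list[tuple[float, float]]) -> float:
    """RMS distance (m) between HMI-displayed and reference positions.

    displayed, reference: (x, y) positions in local metres, paired by index,
    i.e. already matched to the same time stamps (an assumption of this sketch).
    """
    if len(displayed) != len(reference) or not displayed:
        raise ValueError("need equally long, non-empty position lists")
    squared = [(xd - xr) ** 2 + (yd - yr) ** 2
               for (xd, yd), (xr, yr) in zip(displayed, reference)]
    return math.sqrt(sum(squared) / len(squared))
```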

Measuring the Target Display Latency (TDL)

a) Display Test

The Target Display Latency is measured by comparing the HMI display with the actual movement of a known target.

b) HMI Input Test

The latency is measured by comparing the time stamp of the SDF data output with the registration time at the HMI input.
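Both latency measurements reduce to simple arithmetic, sketched here in Python. For the display test, a target moving at a known constant speed v that is displayed a distance d behind its true position implies a latency of d / v; a higher speed makes the offset larger and easier to read from the video. For the HMI input test, the latency of each report is its HMI registration time minus its SDF output time stamp. The report key (track id and sequence number) and the function names are hypothetical.

```python
from statistics import mean


def display_latency_from_offset(offset_m: float, speed_mps: float) -> float:
    """Display test: latency (s) = along-track display offset (m) / speed (m/s).

    Assumes the target moves at constant speed along the centreline.
    """
    if speed_mps <= 0:
        raise ValueError("speed must be positive")
    return offset_m / speed_mps


def hmi_input_latencies(sdf_out: dict, hmi_in: dict) -> tuple[float, float]:
    """HMI input test: per-report latency = HMI registration time - SDF output time.

    sdf_out, hmi_in: map a report key, e.g. (track_id, seq_no) (assumed), to a
    time in seconds. Reports missing on either side are skipped.
    Returns (mean latency, maximum latency) in seconds.
    """
    common = sdf_out.keys() & hmi_in.keys()
    if not common:
        raise ValueError("no matching reports")
    latencies = [hmi_in[k] - sdf_out[k] for k in common]
    return mean(latencies), max(latencies)


# Illustrative values only: 20 m display offset at 25 m/s (90 km/h).
print(display_latency_from_offset(20.0, 25.0))
```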


OTC

TTC

BC

TCD

Pilot

TCD-II

BO-DLR

BO-PAS

BO-Airport

BO-VAN

NRN

Mode-S

GP&C

ARMI

SDF-DLR

C-HMI-DLR

SDS-PAS

C-HMI-PAS

NRN

Mode-S

GP&C

ARMI

SDF

SDF

GP&C

GP&C

HMI

Human Actors

Operational Test Co-ordinator

Technical Test Co-ordinator

BETA Controller

BETA Test Van I Driver

BETA Test A/C Pilot

BETA Test Van II Driver

BETA Operator for Data Recording

BETA Operator for Data Recording

BETA Observer for Airport + HMI

BETA Operator for Test Van

BETA Subsystems

NRN-System (DLR)

Mode-S System (DLR)

GP&C System (DLR)

ARMI System (DLR)

Surveillance Data Fusion (DLR)

Controller HMI (DLR)

Surveillance Data Server (PAS)

Controller HMI (PAS)

Data Recording

SDF NRN

SDF-Mode-S

SDF-GP&C

SDF-ARMI

SDF-All Input

SDF Out

GP&C Logger

GP&C CATS

HMI-Data

Test Equipment

Test Van equipped

Test A/C equipped

Test Car GP&C equipped

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F1A

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F1E


X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F1

Surveillance Accuracy

F1B

F1C

F1D

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F2

Surveillance Classification

F2A

F2B

F2C

F2D

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F3B

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F3A

F3

Alert

Test Equipment and Human Actors involved at Braunschweig Functional Tests


4

5

6

7

8

1

2

3

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

1

3

5

3.5


X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F5

Guidance

F5A

F5B


X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

F6

HMI

F6A


Form Sheets

Test Protocol of TTC

Debriefing

Observer Notes

Questionnaire

Test Protocol of Driver I

Test Protocol of Pilot

Test Protocol of Driver II

TPR-TTC

DEB

ON

QUE

TPR-DRV

TPR-PIL

TPR-DRVII


Table 3-7 Test Tools for Functional Tests

1

2

3

4

5

6

7


X

X

X

X

F1A

X

X

X

X

X

X

X

X

X

F1E


X

X

X

X

X

F1

Surveillance Accuracy

F1B

F1C

F1D

X

X

X

X

X

X

F2

Surveillance Classification

F2A

F2B

F2C

F2D


X

X

X

X

F3A

F3

Alert

F3B

X

X

X

X

F5

Guidance

F5A

F5B


X

X

X

F6

HMI

F6A


4. Testing A-SMGCS Operational Benefit Parameters

Only the surveillance function of the HMI can be demonstrated at Braunschweig airport. The controller HMI is located in the tower and shows the actual traffic situation. The controllers are able to watch the HMI over several days. The controllers' impressions are gathered by interview. This test will use a statistical approach (STA).

The questionnaire contains the following items:

• Display of the airport map

• Display of aircraft and other targets

• Labelling of the targets

• Detection of all targets

Test Procedure:

The normal airport traffic is shown on the HMI. The NRN system shows all non co-operative targets operating in the NRN coverage volume. Normally no co-operative targets are present at Braunschweig; to demonstrate the display of co-operative targets, the Test Van and the Test A/C operate at the airport.

Test Scenario:

OP1: Co-operative targets operating at Braunschweig.

The Test Van drives via TWY F, A, RWY 26, C and F.

The HMI is located in the tower and the interviewer interviews the controller.

OP2: Co-operative A/C operating at Braunschweig.

The Test A/C taxis via TWY F, A, RWY 26, C and F.

The HMI is located in the tower and the interviewer interviews the controller.

Data Recording:

• SDF-In/Out-Logger: all data recorded at SDF input and output
• SDS-In/Out-Logger: all data recorded at SDS input and output
• GP&C CATS: GP&C data recorded for offline replay
• HMI-Logger: all data recorded at the HMI

BETA Test Equipment and Human Actors involved:

OP1

1

2

Test Equipment

BETA Test Vehicle (DLR) GP&C equipped

BETA Test A/C (DLR) GP&C equipped

1

2

3

4

5

6

7

Human Actors

Operational Test Co-ordinator

Technical Test Co-ordinator

BETA Operator (DLR) for NRN, Mode-S, GP&C, SDF, HMI

BETA Operator (PAS) for Data Server and HMI

BETA Operator (Observer for ATCO-HMI)

BETA Controller (Tower Controller)

BETA Interviewer (DLR, interviewing the Controller)

OP2

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

X

Table 4-1 Human Actors involved in OP Tests


Measuring instruments:

Measuring instruments are Observer Notes, Debriefing and Questionnaire (ON, DEB, QUE).


5. Test Forms

5.1 Test Forms for Testing Performance Parameters at Braunschweig

The following test forms are prepared:

• Protocol of TTC, Technical Test Co-ordinator
• Protocol of BETA Operator / Driver
• Protocol of BETA Observer
• Protocol of BETA Observer for test series F1 and F2 for approaching aircraft
• Protocol of BETA Observer for test series F1 and F2 for departing aircraft


Test Protocol

Functional Test at Braunschweig

DLR

Sheet

Technical Test Co-ordinator

Test Series: F

Test No.:

Date:

Operational Test Co-ordinator (OTC):

Technical Test Co-ordinator (TTC):

Airport Test Co-ordinator (ATO):

Weather Conditions:

Test Procedure:

BETA Subsystem       BETA Operator   Data Recording
NRN System           DLR             DLR
Mode-S               DLR             DLR
GP&C                 DLR             DLR
SDF In/Out           DLR             DLR
SDS In/Out           PAS             PAS
HMI PAS              PAS             PAS
HMI DLR              DLR             DLR
GP&C Data Link       DLR             DLR
GP&C CATS            DLR             DLR
Telemetry            DLR             DLR

BETA Test Equipment:

Test Van

Test Van GP&C

Test Van SAPOS

Test Van TRES

Test Van INS

BETA Operator:

DLR

DLR

DLR

DLR

DLR

Data Recording:

DLR

DLR

DLR

DLR

Remarks


Test Protocol

Functional Test at Braunschweig

BETA

DLR

Sheet

BETA Operator / Driver

Test Series: F

Sub System:

Test No.:

Date:

BO Name:

Weather Conditions:

Test Procedure:

[Figure: Braunschweig airport layout showing RWY 08/26, taxiways A to G, the aprons (including DLR, Aerodata and LBA), the refuelling station and the glider area, with the dimensions 1560 x 30 m and 900 x 50 m marked (drawing BS-DOC-B.dsf, H. P. Zenz)]

Data Recording:

No. Time

Remarks

Procedures:

No. Time

Remarks

File Name

*.TR1

*.NAV

End Time

Check List at TWY F:

Mode-S: ON
SAPOS: Green and Dynamic
GP&C: ON
INS: NAV Mode
TR_SERV-B0.EXE: Running

Start Test Run


Test Protocol

Functional Test at Braunschweig

BETA

BETA Observer

Test No.:

Date:

Remarks

No. of equipped targets on airport / on HMI =

No. of unequipped targets on airport / on HMI =


Sheet

Name:

Test Series: F

No. Time

DLR


/

/


Test Protocol: Functional Test at Braunschweig

BETA Observer, Airport Traffic, Approaching Aircraft

Test Series: F1 / F2

Name:

Date:

Test No.:

Sheet:

Column groups: Airport Traffic, HMI Traffic

Columns: No., Call Sign, Reg. Mark, Type, Ldg Time, Routing, Parking, No. of Targets, Time, Appear., Class./Ident., Disappear.

Test Protocol: Functional Test at Braunschweig

BETA Observer, Airport Traffic, Departing Aircraft

Test Series: F1 / F2

Name:

Date:

Test No.:

Sheet:

Column groups: Airport Traffic, HMI Traffic

Columns: No., Call Sign, Reg. Mark, Type, Parking, Routing, T/O Time, No. of Targets, Time, Appearance, Class./Ident., Disappearance

5.2 Test Forms for Operational Tests at Braunschweig

To be completed!

6. References

[1] Operational Test Concept, BETA-D03-OCD-10.DOC

[2] General Test Concept, BETA-D10-GTC-10.DOC

[3] BETA Test Handbook, BETA-D33-THE-10.DOC

[4] EUROCAE Working Group 41: Minimum Aviation System Performance Standards for A-SMGCS, Final Draft, EUROCAE, Paris, 2001

7. List of Figures

Figure 2-1 Subsystems and Data-Logger at Braunschweig (p. 7)

Figure 2-2 DLR Test Van at Braunschweig (p. 8)

Figure 3-1 Scenario for Functional Performance Tests F1 (p. 17)

8. List of Tables

Table 3-1 Human Actors involved in F1 Tests (p. 12)

Table 3-2 Confidence Level (p. 14)

Table 3-3 Human Actors involved in F2 Tests (p. 18)

Table 3-4 Human Actors involved in F3A and F3B Tests (p. 21)

Table 3-5 Human Actors involved in F5 Tests (p. 24)

Table 3-6 Human Actors involved in F6 Tests (p. 25)

Table 3-7 Test Tools for Functional Tests (p. 28)

Table 4-1 Human Actors involved in OP Tests (p. 29)

Table 9-1 Acronyms and Abbreviations (p. 38)


9. Acronyms and Abbreviations

a/c        Aircraft
ARMI       Aircraft Registration Mark Identification
A-SMGCS    Advanced Surface Movement Guidance and Control System
ATC        Air Traffic Control
ATCO       Air Traffic Control Officer
BC         BETA Controller
BETA       Operational Benefit Evaluation by Testing an A-SMGCS
BO         BETA Operator and BETA Observer
BWE        Research Airport Braunschweig
CATS       ......
CDRT       Clearance Delivery Response Time
CV         Coverage Volume
CWP        Controller Working Position
DEB        Debriefing Notes
DGPS       Differential GPS
DLR        German Aerospace Center (Deutsches Zentrum für Luft- und Raumfahrt)
Fx         Performance Parameter Test Procedure no. x
GACT       Guidance Aid Confirmation Time
GART       Guidance Aid Response Time
GP&C       Global Positioning and Communication System
GPS        Global Positioning System
HMI        Human Machine Interface
INS        Inertial Navigation System
MASPS      Minimum Aviation System Performance Standards
Mode S     Mode S Multilateration System (here: ASCS from ERA)
NRN        Near-Range Radar Network
ON         Observer Notes
OTC        Operational Test Co-ordinator
PAS        Park Air Systems
PD         Probability of Detection
PFC        Probability of False Classification
PFD        Probability of False Detection
PFID       Probability of False Identification
PID        Probability of Identification
PRA        Position Registration Accuracy
PRTOP      Position Renewal Time-out Period
QUE        Questionnaires
RMS        Root-Mean-Square
RPA        Reported Position Accuracy
RTOI       Response Time to Operator Input
RWY        Runway
SAPOS      Satellite Positioning Service (Satellitenpositionierungsdienst der deutschen Landesvermessung, the satellite positioning service of the German state surveying authorities)
SDF        Surveillance Data Fusion
SDS        Surveillance Data Server
SSIC       Structured SMGCS Information exchange ......... (DLR internal message protocol)
SSR        Secondary Surveillance Radar
STDMA      Self-organised Time Division Multiple Access (here: the experimental data link based on GPS, predecessor of VDL Mode 4)
TDL        Target Display Latency
TPR        Test Protocol
TRL        Target Report Latency
TRUR       Target Update Rate
TTC        Technical Test Co-ordinator
TWR        Tower Control (normally: ATC for RWY and inbound traffic)
TWY        Taxiway
VDL        VHF Data Link
WP         Work Package

Table 9-1 Acronyms and Abbreviations
