Sets of Nonlinear Equations


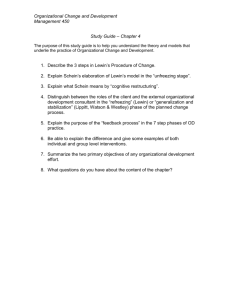

LECTURE FIVE
054374 NUMERICAL METHODS: Process Analysis using Numerical Methods
Solution of Sets of Non-linear Equations
NUMERICAL METHODS - (c) Daniel R. Lewin, Technion

Lecture Five: Nonlinear Equations

Methods for the solution of a nonlinear equation are at the heart of many numerical methods, from the solution of material and energy (M&E) balances to the optimization of chemical processes. Furthermore, the need to solve nonlinear equations numerically also arises in the formulation of other numerical methods.

[Figure: course map linking Solution Ax = b, Finite Difference Approximations, Interpolation, Solution f(x) = 0, Line Integrals, min/max f(x), Linear Regression, Nonlinear Regression, Solution of ODE's, Solution of BVP's and Solution of IVPDE's, arranged as Part One: Basic Building Blocks and Part Two: Applications.]

Examples:
♦ The minimization or maximization of a multivariable objective function can be formulated as the solution of a set of nonlinear equations, generated by differentiating the objective with respect to each of the independent variables. These applications are covered in Day 6.
♦ A set of ordinary differential equations can be integrated numerically either explicitly or implicitly. In implicit methods, the dependent variables are computed iteratively at each integration step by solving a set of nonlinear equations. These applications are covered in Day 10.

Lecture Five: Objectives

This is an extension of last week's lecture to sets of equations. On completion of this material, the reader should be able to:
– Formulate and implement the Newton-Raphson method for a set of nonlinear equations.
– Use a steepest descent method to provide robust initialization of the Newton-Raphson method.
– Formulate and implement the multivariable extension of the method of successive substitution, including acceleration using Wegstein's method.

5.1 Newton-Raphson Method

Solve the set of nonlinear equations:

$$f_1(x_1, x_2) = 4 - (x_1 - 3)^2 + 0.25\,x_1 x_2 - (x_2 - 3)^2 = 0 \tag{5.1}$$

$$f_2(x_1, x_2) = 5 - (x_1 - 2)^2 - (x_2 - 2)^3 = 0 \tag{5.2}$$

Consider the initial guess $x^{(0)} = [2, 4]^T$. Approximating the first equation using a first-order Taylor expansion about $x^{(0)}$:

$$f_1(x_1, x_2) \approx f_1\big(x^{(0)}\big) + \left.\frac{\partial f_1}{\partial x_1}\right|_{x^{(0)}} \big(x_1 - x_1^{(0)}\big) + \left.\frac{\partial f_1}{\partial x_2}\right|_{x^{(0)}} \big(x_2 - x_2^{(0)}\big)$$

where:

$$\frac{\partial f_1}{\partial x_1} = -2(x_1 - 3) + 0.25\,x_2, \qquad \frac{\partial f_1}{\partial x_2} = -2(x_2 - 3) + 0.25\,x_1$$

Since $f_1(x^{(0)}) = 4$ and the two partial derivatives evaluated at $x^{(0)}$ are 3 and −1.5:

$$f_1(x_1, x_2) \approx 4 + 3(x_1 - 2) - 1.5(x_2 - 4) \tag{5.3}$$

which is a linear plane.

[Figure: f1(x1, x2), the linear plane approximating f1, and the intersection of that plane with zero.]

The intersection of the linear plane approximating $f_1$ with zero is the line:

$$4 + 3(x_1 - 2) - 1.5(x_2 - 4) = 0 \tag{5.4}$$

Similarly, the intersection of the linear approximation for $f_2(x_1, x_2)$ with the zero plane gives the line:

$$-3 + 0(x_1 - 2) - 12(x_2 - 4) = 0 \tag{5.5}$$

Eqs. (5.4)-(5.5) are a linear system in 2 unknowns, $d_1 = x_1 - 2$ and $d_2 = x_2 - 4$, which are the changes in $x_1$ and $x_2$ from the previous estimate:

$$\begin{bmatrix} d_1 \\ d_2 \end{bmatrix} = \begin{bmatrix} 3 & -1.5 \\ 0 & -12 \end{bmatrix}^{-1} \begin{bmatrix} -4 \\ 3 \end{bmatrix} = \begin{bmatrix} -1.4583 \\ -0.2500 \end{bmatrix} \tag{5.6}$$

The solution of this linear system is a vector that defines a change in the estimate of the solution, found at the intersection of the lines (5.4) and (5.5). Thus, an updated estimate for the solution is $x^{(1)} = [0.5417, 3.7500]^T$.

[Figure: linear planes approximating f1(x1, x2) and f2(x1, x2); their intersection is a line that defines the correction vector d(0) from the initial guess x(0).]
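The single step above, and the iterations that follow it, can be reproduced with a short script. The sketch below is a minimal Python/NumPy illustration written for this example, not code from the notes: the names `f`, `jacobian`, `jacobian_central` and `newton_raphson`, the tolerance of 1e-6 and the perturbation h = 1e-6 are assumptions. It solves the linear system of Eq. (5.9) with `numpy.linalg.solve` rather than forming the inverse of the Jacobian, and it includes an optional central-difference Jacobian.

```python
import numpy as np


def f(x):
    """Residual vector of Eqs. (5.1)-(5.2)."""
    x1, x2 = x
    return np.array([
        4.0 - (x1 - 3.0) ** 2 + 0.25 * x1 * x2 - (x2 - 3.0) ** 2,
        5.0 - (x1 - 2.0) ** 2 - (x2 - 2.0) ** 3,
    ])


def jacobian(x):
    """Analytical Jacobian, J[i, j] = df_i/dx_j."""
    x1, x2 = x
    return np.array([
        [-2.0 * (x1 - 3.0) + 0.25 * x2, -2.0 * (x2 - 3.0) + 0.25 * x1],
        [-2.0 * (x1 - 2.0), -3.0 * (x2 - 2.0) ** 2],
    ])


def jacobian_central(func, x, h=1e-6):
    """Central-difference Jacobian, built one column (variable) at a time."""
    x = np.asarray(x, dtype=float)
    cols = []
    for j in range(x.size):
        e = np.zeros_like(x)
        e[j] = h
        cols.append((func(x + e) - func(x - e)) / (2.0 * h))
    return np.column_stack(cols)


def newton_raphson(func, jac, x0, tol=1e-6, max_iter=50):
    """Newton-Raphson iteration; returns the root and the list of iterates."""
    x = np.asarray(x0, dtype=float)
    history = [x.copy()]
    for _ in range(max_iter):
        d = np.linalg.solve(jac(x), -func(x))   # J d = -f, no explicit inverse
        x = x + d                               # x(k+1) = x(k) + d(k)
        history.append(x.copy())
        # Convergence test on the 2-norms of the correction and the residual
        if np.linalg.norm(d) < tol and np.linalg.norm(func(x)) < tol:
            return x, history
    raise RuntimeError("Newton-Raphson did not converge")


x_star, hist = newton_raphson(f, jacobian, [2.0, 4.0])
print("x(1) =", hist[1])
print("root =", x_star, "after", len(hist) - 1, "iterations")
```

At $x^{(0)} = [2, 4]^T$ the analytical Jacobian reproduces the coefficients 3, −1.5, 0 and −12 of Eqs. (5.4)-(5.5). Passing `lambda x: jacobian_central(f, x)` in place of `jacobian` should converge to the same root for this well-behaved system.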
The line of intersection of the two planes meets the zero plane at $x^{(1)}$, which is the next estimate of the solution.

This constitutes a single step of the N-R method. The procedure is continued until convergence to 4 significant figures, which takes four iterations:

| $k$ | $x_1$ | $x_2$ | $f_1(x^{(k)})$ | $f_2(x^{(k)})$ | $\lVert d^{(k)} \rVert_2$ | $\lVert f(x^{(k)}) \rVert_2$ |
|---|---|---|---|---|---|---|
| 0 | 2.0000 | 4.0000 | 4.000 | −3.000 | 1.4796 | 5.0000 |
| 1 | 0.5417 | 3.7500 | −2.098 | −2.486 | 0.3611 | 3.2531 |
| 2 | 0.8606 | 3.5806 | −0.144 | −0.247 | 0.0348 | 0.2862 |
| 3 | 0.8836 | 3.5546 | $-1.36\times10^{-3}$ | $-3.72\times10^{-3}$ | $4.92\times10^{-4}$ | $3.95\times10^{-3}$ |
| 4 | 0.8838 | 3.5542 | $-2.64\times10^{-7}$ | $-1.00\times10^{-6}$ | $1.33\times10^{-7}$ | $1.04\times10^{-6}$ |

Near the solution, the Newton-Raphson method exhibits quadratic convergence: in the last iterations, the norms of both the correction and the residual vectors are of the order of the square of those in the previous iteration (for example, $\lVert d^{(3)} \rVert_2 = 4.92\times10^{-4}$, compared with $0.0348^2 \approx 1.2\times10^{-3}$).

The method can be generalized to an n-dimensional problem. Given the set of nonlinear equations:

$$f_i(x) = 0, \quad i = 1, 2, \dots, n \tag{5.7}$$

a single step of the Newton-Raphson method is:

$$x^{(k+1)} = x^{(k)} + d^{(k)} \tag{5.8}$$

where $d^{(k)}$ is the solution of the linear set of equations:

$$
\begin{aligned}
\left.\frac{\partial f_1}{\partial x_1}\right|_{x^{(k)}} d_1^{(k)} + \left.\frac{\partial f_1}{\partial x_2}\right|_{x^{(k)}} d_2^{(k)} + \dots + \left.\frac{\partial f_1}{\partial x_n}\right|_{x^{(k)}} d_n^{(k)} + f_1\big(x^{(k)}\big) &= 0 \\
\left.\frac{\partial f_2}{\partial x_1}\right|_{x^{(k)}} d_1^{(k)} + \left.\frac{\partial f_2}{\partial x_2}\right|_{x^{(k)}} d_2^{(k)} + \dots + \left.\frac{\partial f_2}{\partial x_n}\right|_{x^{(k)}} d_n^{(k)} + f_2\big(x^{(k)}\big) &= 0 \\
&\;\;\vdots \\
\left.\frac{\partial f_n}{\partial x_1}\right|_{x^{(k)}} d_1^{(k)} + \left.\frac{\partial f_n}{\partial x_2}\right|_{x^{(k)}} d_2^{(k)} + \dots + \left.\frac{\partial f_n}{\partial x_n}\right|_{x^{(k)}} d_n^{(k)} + f_n\big(x^{(k)}\big) &= 0
\end{aligned}
\tag{5.9}
$$

Newton-Raphson Algorithm:
Step 1: Initialize the estimate $x^{(0)}$ and set $k = 0$.
Step 2: Compute the Jacobian matrix $J(x^{(k)})$, with elements $J_{i,j} = \left.\partial f_i/\partial x_j\right|_{x^{(k)}}$.
Step 3: Solve for $d^{(k)}$: $d^{(k)} = -\big[J(x^{(k)})\big]^{-1} f(x^{(k)})$.
Step 4: Update: $x^{(k+1)} = x^{(k)} + d^{(k)}$.
Step 5: Test for convergence using the 2-norms of $d^{(k)}$ and $f(x^{(k)})$; consider scaling both. If the convergence criteria are satisfied, END; otherwise set $k = k + 1$ and go to Step 2.

ISSUES 1: Initial estimate of the solution.
• When solving a large set of nonlinear equations, or a set of highly nonlinear equations, the choice of starting point is important.
• If the initial guess is not a good estimate of the desired solution, the N-R method can converge to an extraneous root or may not converge at all.
• A robust method for estimating a good starting point for the N-R method is the method of steepest descent, described next.

ISSUES 2: Computation of the Jacobian matrix.
• The Jacobian matrix can either be determined analytically or approximated numerically.
• For numerical approximations, central difference approximations are recommended rather than forward or backward differences.
• If the system of nonlinear equations is well behaved (i.e., it can be solved relatively easily), either approach works well. For ill-behaved systems, however, analytical determination of the Jacobian is preferred.
• Apart from the computationally intensive calculation of the Jacobian matrix, each N-R iteration involves a matrix inversion (in practice, the solution of a linear system). So-called quasi-Newton methods reduce these computational overheads.

5.2 Method of Steepest Descent
• An accurate estimate of the solution of f(x) = 0 is necessary to fully exploit the quadratic convergence of the N-R method.
• The method of steepest descent achieves this robustly: it seeks a vector x that minimizes the function

$$g(x) = \sum_{i=1}^{n} \big(f_i(x)\big)^2$$

which attains its minimum value of zero when x is a solution of f(x) = 0.

Steepest Descent Algorithm:
Step 1: Evaluate g(x) at $x^{(0)}$.
Step 2: Determine the steepest descent direction.
Step 3: Move an appropriate amount in this direction and update $x^{(1)}$.

For the function g(x), the steepest descent direction is $-\nabla g(x)$, where:

$$
\begin{aligned}
\nabla g(x) &= \left[\frac{\partial}{\partial x_1}\sum_{i=1}^{n}\big(f_i(x)\big)^2,\ \frac{\partial}{\partial x_2}\sum_{i=1}^{n}\big(f_i(x)\big)^2,\ \dots,\ \frac{\partial}{\partial x_n}\sum_{i=1}^{n}\big(f_i(x)\big)^2\right]^T \\
&= \left[2f_1(x)\frac{\partial f_1}{\partial x_1}(x) + 2f_2(x)\frac{\partial f_2}{\partial x_1}(x) + \dots + 2f_n(x)\frac{\partial f_n}{\partial x_1}(x),\ \dots,\ 2f_1(x)\frac{\partial f_1}{\partial x_n}(x) + 2f_2(x)\frac{\partial f_2}{\partial x_n}(x) + \dots + 2f_n(x)\frac{\partial f_n}{\partial x_n}(x)\right]^T \\
&= 2J(x)^T f(x)
\end{aligned}
$$

[Figure: contours of g(x1, x2) = x1² + x2² with steepest descent directions.]

The figure illustrates contours of $g(x_1, x_2) = x_1^2 + x_2^2$. Here $-\nabla g(x) = -(2x_1, 2x_2)^T$; at $x = [2, 2]^T$, $-\nabla g(x) = -(4, 4)^T$, and at $x = [-3, 0]^T$, $-\nabla g(x) = (6, 0)^T$.

Defining the steepest descent step:

$$x^{(1)} = x^{(0)} - \alpha \nabla g\big(x^{(0)}\big), \quad \alpha > 0 \tag{5.15}$$

we need to select the step length α that minimizes:

$$h(\alpha) = g\Big(x^{(0)} - \alpha \nabla g\big(x^{(0)}\big)\Big) \tag{5.16}$$

Instead of differentiating h(α) with respect to α, we construct an interpolating polynomial P(α) and select α to minimize P(α).

[Figure: h(α) and the interpolating polynomial P(α) through the points g1, g2 and g3.]
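The steepest descent steps can be sketched as follows for the example of Eqs. (5.1)-(5.2). This is an illustrative sketch, not the author's code: it uses g(x) = Σ f_i², its gradient 2J^T f, and a three-point quadratic fit in α with α1 = 0, α2 = α3/2, and α3 halved from 1 until g3 < g1. Two details are assumptions: the search direction is normalized to unit length (the α values in the example table that follows are consistent with this), and the step falls back to α3 whenever the quadratic is not convex or its minimizer gives a larger g.

```python
import numpy as np


def f(x):
    """Residual vector of Eqs. (5.1)-(5.2)."""
    x1, x2 = x
    return np.array([4.0 - (x1 - 3.0) ** 2 + 0.25 * x1 * x2 - (x2 - 3.0) ** 2,
                     5.0 - (x1 - 2.0) ** 2 - (x2 - 2.0) ** 3])


def jacobian(x):
    """Analytical Jacobian of f."""
    x1, x2 = x
    return np.array([[-2.0 * (x1 - 3.0) + 0.25 * x2, -2.0 * (x2 - 3.0) + 0.25 * x1],
                     [-2.0 * (x1 - 2.0), -3.0 * (x2 - 2.0) ** 2]])


def g(x):
    """Objective g(x) = sum of squared residuals."""
    r = f(x)
    return float(r @ r)


def grad_g(x):
    """Gradient of g: 2 J(x)^T f(x)."""
    return 2.0 * jacobian(x).T @ f(x)


def steepest_descent(x0, n_steps=6):
    """Steepest descent with a quadratic-interpolation line search in alpha."""
    x = np.asarray(x0, dtype=float)
    xs, gs, alphas = [x.copy()], [g(x)], []
    for _ in range(n_steps):
        grad = grad_g(x)
        norm = np.linalg.norm(grad)
        if norm == 0.0:
            break                                  # stationary point of g
        z = grad / norm                            # unit-length direction (assumption)
        g1 = g(x)                                  # alpha1 = 0
        alpha3 = 1.0
        g3 = g(x - alpha3 * z)
        while g3 >= g1 and alpha3 > 1e-12:         # halve alpha3 until g3 < g1
            alpha3 /= 2.0
            g3 = g(x - alpha3 * z)
        alpha2 = alpha3 / 2.0
        g2 = g(x - alpha2 * z)
        dg1 = (g2 - g1) / alpha2                   # divided differences, alpha1 = 0
        dg2 = (g3 - g2) / (alpha3 - alpha2)
        d2g1 = (dg2 - dg1) / alpha3
        alpha = alpha3
        if d2g1 > 0.0:                             # P(alpha) has a minimum
            alpha_hat = 0.5 * alpha2 - dg1 / (2.0 * d2g1)
            if g(x - alpha_hat * z) < g3:          # keep the better of the two
                alpha = alpha_hat
        x = x - alpha * z
        xs.append(x.copy())
        gs.append(g(x))
        alphas.append(alpha)
    return xs, gs, alphas


xs, gs, alphas = steepest_descent([0.0, 0.0])
for k, (xk, gk) in enumerate(zip(xs, gs)):
    print(k, np.round(xk, 3), round(gk, 3))
```

For the first step from $x^{(0)} = [0, 0]^T$ this gives α ≈ 1.234 and $x^{(1)} \approx [0.299, 1.198]^T$, matching the first row of the example table. By construction, every accepted step reduces g.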
Interpolating polynomial P(α):

$$P(\alpha) = g_1 + \Delta g_1 (\alpha - \alpha_1) + \Delta^2 g_1 (\alpha - \alpha_1)(\alpha - \alpha_2) \tag{5.17}$$

where $g_j = h(\alpha_j)$ and:

$$\Delta g_1 = \frac{g_2 - g_1}{\alpha_2 - \alpha_1}, \qquad \Delta g_2 = \frac{g_3 - g_2}{\alpha_3 - \alpha_2}, \qquad \Delta^2 g_1 = \frac{\Delta g_2 - \Delta g_1}{\alpha_3 - \alpha_1}$$

[Figure: h(α) and P(α) through (α1, g1), (α2, g2) and (α3, g3), with α1 = 0 and the minimizer α̂ of P(α).]

Set $\alpha_1 = 0$, choose $\alpha_3$ so that $g_3 < g_1$, and set $\alpha_2 = \alpha_3/2$. Differentiating (5.17) with respect to α and setting the result to zero gives:

$$\hat\alpha = 0.5\,\alpha_2 - \frac{\Delta g_1}{2\,\Delta^2 g_1}$$

Hence, the update for $x^{(1)}$ is:

$$x^{(1)} = x^{(0)} - \hat\alpha \nabla g\big(x^{(0)}\big)$$

Example: estimating an initial guess for N-R using the method of steepest descent, starting from $x^{(0)} = [0, 0]^T$. In the table, α is the step length that produced $x^{(k)}$; its values equal the distance moved, $\lVert x^{(k)} - x^{(k-1)} \rVert_2$, i.e., the search direction is normalized to unit length.

| $k$ | $\alpha$ | $x_1$ | $x_2$ | $g(x^{(k)})$ |
|---|---|---|---|---|
| 0 | – | 0.000 | 0.000 | 277 |
| 1 | 1.234 | 0.299 | 1.198 | 48.52 |
| 2 | 0.881 | 0.912 | 1.831 | 16.31 |
| 3 | 1.189 | 0.296 | 2.848 | 11.97 |
| 4 | 0.419 | 0.696 | 2.973 | 6.284 |
| 5 | 0.400 | 0.587 | 3.358 | 2.377 |
| 6 | 0.286 | 0.862 | 3.435 | 0.562 |

Notes: (a) Values of α greater than 1 imply extrapolation of P(α) beyond the interval $[\alpha_1, \alpha_3]$. (b) Convergence is much slower than with N-R.

5.3 Wegstein's Method

Recall one-dimensional successive substitution (here g(x) denotes the iteration function, not the objective of Section 5.2):

$$x^{(k+1)} = g\big(x^{(k)}\big) \tag{5.19}$$

The convergence rate of Eq. (5.19) depends on the gradient of g(x); for gradients close to unity, very slow convergence is expected. As shown in the accompanying figure, given two values of g(x), a third value can be predicted by linear extrapolation, using the estimated gradient at $x = x^{(1)}$:

$$\left.\frac{dg(x)}{dx}\right|_{x^{(1)}} \approx \frac{g\big(x^{(1)}\big) - g\big(x^{(0)}\big)}{x^{(1)} - x^{(0)}} \equiv s$$
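The effect of the gradient on successive substitution, and the secant estimate s, can be seen on a hypothetical linear iteration function g(x) = m·x + 1, whose fixed point is 1/(1 − m). The sketch below is illustrative (plain Python; the slopes m, the tolerance and all function names are assumptions): it counts iterations for several slopes, and also solves the linear extrapolation x = g(x1) + s(x − x1) for its fixed point. Because the secant slope of a linear g is exact, that single extrapolation lands on the fixed point.

```python
def successive_substitution(g, x0, tol=1e-8, max_iter=10_000):
    """Iterate x <- g(x) (Eq. 5.19); return the estimate and iteration count."""
    x = x0
    for k in range(1, max_iter + 1):
        x_new = g(x)
        if abs(x_new - x) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("successive substitution did not converge")


def secant_slope(g, x0, x1):
    """Estimate s of dg/dx at x1 from the two points x0 and x1."""
    return (g(x1) - g(x0)) / (x1 - x0)


def extrapolated_fixed_point(g, x0, x1):
    """Solve x = g(x1) + s*(x - x1) for x, with s the secant slope."""
    s = secant_slope(g, x0, x1)
    return (g(x1) - s * x1) / (1.0 - s)


def make_linear_map(m):
    """Hypothetical iteration function g(x) = m*x + 1, fixed point 1/(1 - m)."""
    def g(x):
        return m * x + 1.0
    return g


results = {}
for m in (0.5, 0.9, 0.99):
    g = make_linear_map(m)
    root, iters = successive_substitution(g, 0.0)
    x1 = g(0.0)                                   # first substitution step
    s = secant_slope(g, 0.0, x1)
    x_ext = extrapolated_fixed_point(g, 0.0, x1)
    results[m] = (root, iters, s, x_ext)
    print(f"m = {m}: {iters} iterations to x* = {root:.6f}; "
          f"s = {s:.4f}; one extrapolated step gives {x_ext:.6f}")
```

The iteration count grows sharply as m approaches 1, since the error shrinks only by a factor m per iteration; for a nonlinear g the secant slope is only an estimate, so the extrapolation must be repeated.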
Linear extrapolation gives an estimate of the function value at the next iterate, $x^{(2)}$:

$$g\big(x^{(2)}\big) = g\big(x^{(1)}\big) + s\,\big(x^{(2)} - x^{(1)}\big)$$

However, since $x^{(2)} = g\big(x^{(2)}\big)$ at convergence:

$$x^{(2)} = g\big(x^{(1)}\big) + s\,\big(x^{(2)} - x^{(1)}\big)$$

Solving for $x^{(2)}$ and defining $q = s/(s - 1)$, the Wegstein update is:

$$x^{(2)} = g\big(x^{(1)}\big)\,(1 - q) + x^{(1)}\,q \tag{5.21}$$

Note: for q = 0, Eq. (5.21) reduces to plain successive substitution.

For a set of nonlinear equations, the method of successive substitution is:

$$x_i^{(k+1)} = g_i\big(x_1^{(k)}, x_2^{(k)}, \dots, x_n^{(k)}\big), \quad i = 1, 2, \dots, n \tag{5.22}$$

This method is commonly used to solve the material and energy balances in flowsheet simulators, where sets of equations such as Eq. (5.22) are invoked while solving the models of the unit operations. In cases involving significant material recycle, successive substitution can converge very slowly, because the local gradients of the functions $g_i(x)$ are close to unity. In such cases, Wegstein's acceleration method is often used.

For the multivariable case, Wegstein's method is implemented as follows:
1. Starting from an initial guess $x^{(0)}$, Eq. (5.22) is applied twice, generating $x^{(1)} = g\big(x^{(0)}\big)$ and $g\big(x^{(1)}\big)$, the plain successive-substitution estimate of $x^{(2)}$.
2. From $k = 1$, the local gradients $s_i$ are computed:

$$s_i = \frac{g_i\big(x_1^{(k)}, x_2^{(k)}, \dots, x_n^{(k)}\big) - g_i\big(x_1^{(k-1)}, x_2^{(k-1)}, \dots, x_n^{(k-1)}\big)}{x_i^{(k)} - x_i^{(k-1)}}, \quad i = 1, 2, \dots, n$$

3. The Wegstein update is used to estimate $x^{(2)}$ and subsequent estimates of the solution:

$$x_i^{(k+1)} = g_i\big(x_1^{(k)}, x_2^{(k)}, \dots, x_n^{(k)}\big)\,(1 - q_i) + x_i^{(k)}\,q_i, \qquad q_i = \frac{s_i}{s_i - 1}, \qquad i = 1, 2, \dots, n, \quad k = 1, 2, \dots$$

| Value of $q_i$ | Expected convergence |
|---|---|
| $0 < q_i < 1$ | Damped successive substitution: slow, stable convergence |
| $q_i = 0$ | Regular successive substitution |
| $q_i < 0$ | Accelerated successive substitution: can speed convergence, but may cause instabilities |

When solving sets of nonlinear equations, it is often desirable to ensure that none of the equations converges at a rate outside a pre-specified range. Resetting any value of $q_i$ that falls outside the desired limits (i.e., $q_{min} < q_i < q_{max}$) ensures this.

Example Application:

[Figure: flowsheet with a mixer, reactor, separator and splitter; streams F, R, S1, S2, S3, L and P.]

The figure shows a flowsheet for the production of B from raw material A. The gaseous feed stream, F (99 wt.% A and 1 wt.% C, an inert material), is mixed with the recycle stream, R (unreacted A and inert C), to form the reactor feed, S1. The conversion of A to B in the reactor is ξ, expressed as a weight fraction. The reactor products, S2, are fed to the separator, which produces a liquid product, L, containing only B, and a vapor overhead product, S3, which is free of B. To prevent the accumulation of inerts in the synthesis loop, a portion of stream S3, P, is purged.

The material balances for the flowsheet consist of 15 equations involving 19 variables (4 degrees of freedom).
Since ξ = 0.15, $X_{A,F} = 0.99$ and F = 20 T/hr are known, the effect of P on the performance of the process is investigated by solving the 15 equations iteratively:
1. Initial values are assumed for R and $X_{A,R}$ (usually zero).
2. The three mixer equations are solved.
3. The four reactor equations are solved.
4. The five separator equations are solved.
5. The three splitter equations are solved.
6. This provides updated estimates for R and $X_{A,R}$. If the estimates have changed by more than the convergence tolerance, return to Step 2.

Steps 1 to 5 are equivalent to the two recursive formulae:

$$R^{(k+1)} = g_1\big(R^{(k)}, X_{A,R}^{(k)}, P, \text{constants}\big)$$

$$X_{A,R}^{(k+1)} = g_2\big(R^{(k)}, X_{A,R}^{(k)}, \text{constants}\big)$$

For example, Eq. (5.25) is generated using the process material balances:

$$R = S_3 - P = S_1\big(1 - \xi X_{A,S1}\big) - P = (F + R)\left(1 - \xi\,\frac{F X_{A,F} + R X_{A,R}}{F + R}\right) - P = \big(F(1 - \xi X_{A,F}) - P\big) + \big(1 - \xi X_{A,R}\big) R$$

Hence, the recursion formula for $R^{(k+1)}$ is:

$$R^{(k+1)} = \big(F(1 - \xi X_{A,F}) - P\big) + \big(1 - \xi X_{A,R}^{(k)}\big) R^{(k)} = g_1\big(R^{(k)}, X_{A,R}^{(k)}, P\big)$$

Similarly, the recursion formula for $X_{A,R}$ (the weight fraction of A in S3, and hence in R) is:

$$X_{A,R}^{(k+1)} = \frac{(1 - \xi)\big(F X_{A,F} + R^{(k)} X_{A,R}^{(k)}\big)}{F + R^{(k)} - \xi\big(F X_{A,F} + R^{(k)} X_{A,R}^{(k)}\big)}$$

Substituting the known values of $X_{A,F}$ and F into Eq. (5.27), with $F(1 - \xi X_{A,F}) = 20(1 - 0.15 \times 0.99) = 17.03$:

$$R^{(k+1)} = g_1\big(R^{(k)}, X_{A,R}^{(k)}, P\big) = (17.03 - P) + \big(1 - 0.15\,X_{A,R}^{(k)}\big) R^{(k)}$$

This means that the local gradient of $g_1$ is:

$$\frac{\partial g_1}{\partial R^{(k)}} = 1 - 0.15\,X_{A,R}^{(k)}$$

and since $0 < X_{A,R}^{(k)} < 1$:

$$0.85 < \frac{\partial g_1}{\partial R^{(k)}} < 1$$
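The two recursions can be iterated directly to compare plain successive substitution with the multivariable Wegstein method. The sketch below is illustrative, not code from the notes: the purge rate P = 2 T/hr, the bounds q_min = −5 and q_max = 0, the tolerance, and the guards against division by zero are assumptions chosen for this example. For P = 2, the inert C entering with the feed, F(1 − X_{A,F}) = 0.2 T/hr, can leave only with the purge, so X_{A,R} = 1 − 0.2/P = 0.9, and the R recursion then gives R = 15.03/(0.15 × 0.9) ≈ 111.3 T/hr.

```python
import numpy as np

# Example data; P is a hypothetical purge rate (T/hr) chosen for illustration.
F, X_AF, XI, P = 20.0, 0.99, 0.15, 2.0


def loop_pass(v):
    """One pass round the recycle loop: (R, X_AR) -> (g1, g2)."""
    R, X = v
    a_in = F * X_AF + R * X                        # mass flow of A into the reactor
    g1 = (F * (1.0 - XI * X_AF) - P) + (1.0 - XI * X) * R
    g2 = (1.0 - XI) * a_in / (F + R - XI * a_in)   # A fraction in S3, hence in R
    return np.array([g1, g2])


def successive_substitution(g, x0, tol=1e-8, max_iter=20_000):
    """Plain iteration x <- g(x), Eq. (5.22)."""
    x = np.asarray(x0, dtype=float)
    for k in range(1, max_iter + 1):
        x_new = g(x)
        if np.max(np.abs(x_new - x)) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("successive substitution did not converge")


def wegstein(g, x0, q_min=-5.0, q_max=0.0, tol=1e-8, max_iter=20_000):
    """Multivariable Wegstein iteration with bounded q_i (bounds are assumptions)."""
    x_prev = np.asarray(x0, dtype=float)
    g_prev = g(x_prev)
    x = g_prev                                     # x(1) = g(x(0))
    for k in range(1, max_iter + 1):
        gx = g(x)
        dx, dg = x - x_prev, gx - g_prev
        with np.errstate(divide="ignore", invalid="ignore"):
            # Secant slopes s_i; use s_i = 0 (plain substitution) if x_i did not move.
            s = np.where(np.abs(dx) > 1e-12, dg / dx, 0.0)
            # q_i = s_i/(s_i - 1); s_i == 1 would make q_i infinite, so use q_min.
            q = np.where(np.abs(s - 1.0) > 1e-12, s / (s - 1.0), q_min)
        q = np.clip(q, q_min, q_max)               # keep each q_i in [q_min, q_max]
        x_new = gx * (1.0 - q) + x * q             # Wegstein update
        if np.max(np.abs(x_new - x)) < tol:
            return x_new, k
        x_prev, g_prev, x = x, gx, x_new
    raise RuntimeError("Wegstein iteration did not converge")


x_ss, n_ss = successive_substitution(loop_pass, [0.0, 0.0])
x_wg, n_wg = wegstein(loop_pass, [0.0, 0.0])
print(f"successive substitution: R = {x_ss[0]:.3f}, X_AR = {x_ss[1]:.4f}, {n_ss} iterations")
print(f"Wegstein:                R = {x_wg[0]:.3f}, X_AR = {x_wg[1]:.4f}, {n_wg} iterations")
```

With these assumptions, ∂g1/∂R is about 0.865 at the solution, so successive substitution needs many passes through the loop. Bounding q_i keeps each Wegstein update between plain substitution (q_i = 0) and a step six times the plain substitution step (q_i = −5), which trades some acceleration for stability.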
Because this gradient lies between 0.85 and 1, successive substitution will converge very slowly in such cases.

Summary

Having completed this lesson, you should now be able to:
– Formulate and implement the Newton-Raphson method for a set of nonlinear equations. You should be aware that an accurate initial estimate of the solution may be required to guarantee convergence.
– Use a steepest descent method to provide robust initialization of the N-R method. Since this method gives only linear convergence, the N-R method should be applied once the residuals are sufficiently reduced.
– Formulate and implement the multivariable extension of the method of successive substitution, including acceleration using Wegstein's method. This method is commonly used in commercial flowsheet simulators to converge the material and energy balances of flowsheets involving material recycle.