EECE 577: Information and Coding Theory

advertisement

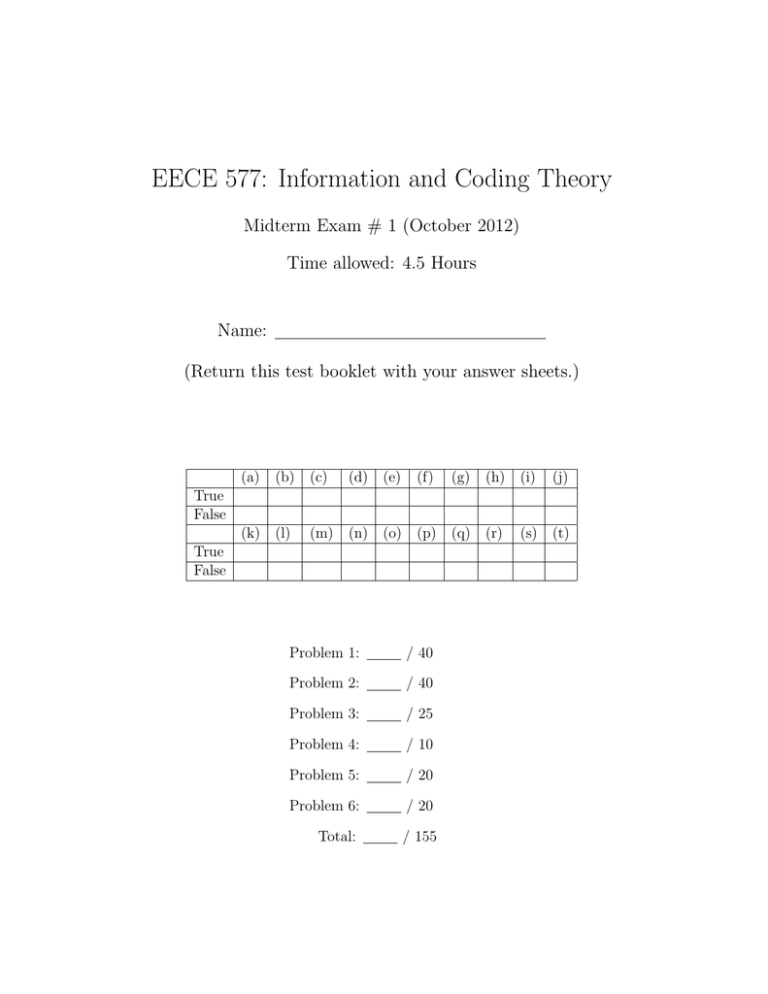

EECE 577: Information and Coding Theory

Midterm Exam # 1 (October 2012)

Time allowed: 4.5 Hours

Name:

(Return this test booklet with your answer sheets.)

(a) (b) (c) (d) (e) (f) (g) (h) (i) (j)

True

False

(k) (l) (m) (n) (o) (p) (q) (r) (s) (t)

True

False

Problem 1: / 40

Problem 2: / 40

Problem 3: / 25

Problem 4: / 10

Problem 5: / 20

Problem 6: / 20

Total: / 155

Problem 1. (40 points: Correct answer = +2, no answer = 0, incorrect answer = −1.)

Say True or False to the following statements. You don’t need to justify your answer.

(a) A uniquely decodable (UD) code is a prefix-free code.

(b) A UD code can be represented by a tree, where codewords cannot be assigned to interior nodes.

(c) In the Huffman procedure for a D-ary (D > 2) zero-error data compression, all the siblings of the node associated with the smallest probability mass are codewords.

(d) Given a DMS, a binary Huffman code has exactly twice the expected length of a quaternary (= 4-ary) Huffman code.

(e) If the $X_i$'s are i.i.d. discrete random variables with pmf $p_X(x)$ and sample space $\mathcal{X}$, then $\frac{1}{n}\sum_{i=1}^{n} \log_D \frac{1}{p_X(X_i)}$ converges to $H_D(X)$ in probability.

(f) Given a discrete source with $|\mathcal{X}|$ outcomes and a D-ary code with $|\mathcal{X}|$ codewords, the minimum expected codeword length is achieved by assigning longer codewords to less probable outcomes.

(g) Fixed-length source coding that relies on the Asymptotic Equipartition Property (AEP) is in general classified as lossless source coding.

(h) If there exists a D-ary UD code with the codeword-length profile $[l_1, l_2, \ldots, l_{|\mathcal{X}|}]$, then there always exists a D-ary prefix-free (PF) code that has exactly the same codeword-length profile as the UD code, even without rearranging the codeword lengths.

(i) Huffman codes always make the equality hold in Kraft's inequality.

(j) Rate-distortion theory is about source coding.


(k) Given a discrete source with $|\mathcal{X}|$ outcomes of non-zero probabilities, a Huffman procedure that considers up to the level K of a D-ary tree can assign at least D + (K − 1)(D − 1) distinct codewords to the outcomes.

(l) If our interest is in finding an optimal solution, we just need to search the subset of the search space that guarantees the existence of an optimal solution.

(m) Almost sure convergence of a sequence of random variables always implies convergence in distribution.

(n) The weak law of large numbers is a special case of the convergence of a sequence of random variables with probability one.

(o) The convergence of a sequence of random variables is essentially the convergence of a sequence of functions.

(p) Huffman codes are not only optimal but also asymptotically achieve the entropy lower bound for any distribution of X.

(q) To achieve the entropy lower bound by using variable-length PF codes constructed through Huffman procedures, we always need to encode an asymptotically large number of data symbols together.

(r) The central limit theorem is a special case of the convergence of a sequence of random variables in probability.

(s) Given a discrete random variable with sample space $\mathcal{X}$, if $x \in A_\varepsilon^{(n)}$, where $x_i \in \mathcal{X}$ and $A_\varepsilon^{(n)}$ is the set of ε-typical sequences of length n, then $|A_\varepsilon^{(n)}| \ge (1-\varepsilon)\, D^{n(H_D(X)-\varepsilon)}$ for any integers D (≥ 2) and n.

(t) According to the converse of the source coding theorem, the probability of message symbol error converges to one if the code rate is greater than the entropy.


Problem 2. (40 points) Answer the following questions:

(a) (5 points) A D-ary source code C for a source random variable X is defined as a mapping from $\mathcal{X}$ to $\mathcal{D}^*$, i.e.,

$$C : \mathcal{X} \to \mathcal{D}^*,$$

where $\mathcal{X}$ is the range space of X and $\mathcal{D}^*$ is the set of all finite-length sequences of symbols taken from a D-ary code alphabet $\mathcal{D}$. What is the cardinality of $\mathcal{D}^*$?

(b) (5 points) Draw a Venn diagram that shows the relationship among the following sets:

(i) the set of variable-length source codes

(ii) the set of prefix-free codes

(iii) the set of uniquely-decodable codes

(iv) the set of nonsingular codes

(c) (5 points) Let X be a discrete random variable with pmf $p_X(x)$ and sample space $\mathcal{X}$. Show that the entropy of X is less than or equal to $\log |\mathcal{X}|$, $\forall p_X(x)$ with $|\mathcal{X}| < \infty$.

(d) (5 points) Sketch the binary entropy function $h_b(p)$, and find the maximum and the minimum points.


(e) (5 points) Mathematical induction is a method of proof typically used to establish that a given statement is true for all natural numbers. The proof consists of three steps. Write down the name of each step and explain each step briefly.

(f) (5 points) What is the definition of a Discrete Memoryless Source (DMS)?

(g) (5 points) Formulate the variable-length source coding and the fixed-length source coding problems, and point out the major differences between them.

(h) (5 points) Given a source random variable X that generates a data sequence $Z = (X_1, X_2, \cdots, X_n)$, we can construct a D-ary Huffman code for Z. Prove that the expected length per source symbol of the Huffman code converges to the entropy $H_D(X)$ of the source distribution as n goes to infinity.
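The block-coding setup in part (h) can be explored numerically. The following is a minimal sketch, not part of the exam, that assumes the binary case (D = 2) and an illustrative two-symbol pmf; the helper names `huffman_expected_length`, `entropy_bits`, and `per_symbol_length` are hypothetical. It builds a Huffman code for blocks of n i.i.d. symbols and reports the expected length per source symbol.

```python
import heapq
import itertools
import math

def huffman_expected_length(probs):
    """Expected codeword length (in bits) of a binary Huffman code for probs."""
    if len(probs) == 1:
        return 1.0  # assumed convention: a lone symbol still costs one bit
    # Heap items are (probability, tie_breaker) so equal probabilities stay comparable.
    heap = [(p, i) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    counter = len(probs)
    total = 0.0
    while len(heap) > 1:
        p1, _ = heapq.heappop(heap)
        p2, _ = heapq.heappop(heap)
        # Each merge adds one bit to every leaf below the merged node,
        # so the expected length equals the sum of all merged probabilities.
        total += p1 + p2
        heapq.heappush(heap, (p1 + p2, counter))
        counter += 1
    return total

def entropy_bits(probs):
    """Entropy in bits of a pmf given as a list of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def per_symbol_length(probs, n):
    """Huffman-code the block Z = (X1, ..., Xn) of i.i.d. symbols; return bits per symbol."""
    block_probs = [math.prod(block) for block in itertools.product(probs, repeat=n)]
    return huffman_expected_length(block_probs) / n

pmf = [0.9, 0.1]            # illustrative pmf, an assumption for this sketch
block_sizes = (1, 2, 4, 8)
H = entropy_bits(pmf)
rates = [per_symbol_length(pmf, n) for n in block_sizes]

for n, r in zip(block_sizes, rates):
    print(f"n={n}: {r:.4f} bits/symbol (entropy {H:.4f})")
```

Running the sketch with other pmfs or larger block sizes shows how the per-symbol length behaves as n grows; it is a numerical illustration only and does not replace the requested proof.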


Problem 3. (25 points: 1 point each) Fill in the blanks below.

• An ( (a) ) of a code X is a mapping from the set of length-N strings of letters in X to the set D′ of finite-length strings.

• The optimization problem $\min_{x \in \Omega} f(x)$ is equivalent to $\min_{i} \min_{x \in \Omega_i} f(x)$ with a sufficient condition ( (b) ).

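• Theorem: the ( (c) ) inequality

Let C be a D-ary source code, and let $l_1, l_2, \cdots, l_{|\mathcal{X}|}$ be the lengths of the codewords. If C is uniquely decodable, then

$$\sum_{k=1}^{|\mathcal{X}|} D^{-l_k} \le 1.$$

(Proof) Consider the identity

$$\left(\sum_{k=1}^{|\mathcal{X}|} D^{-l_k}\right)^{N} = \sum_{k_1=1}^{|\mathcal{X}|} \sum_{k_2=1}^{|\mathcal{X}|} \cdots \sum_{k_N=1}^{|\mathcal{X}|} (\,\text{(d)}\,).$$

By collecting terms on the right side, we write

$$\left(\sum_{k=1}^{|\mathcal{X}|} D^{-l_k}\right)^{N} = \sum_{i=1}^{N \cdot l_{\max}} A_i^{(N)} D^{-i},$$

where $l_{\max} = \max_{1 \le k \le |\mathcal{X}|} l_k$, and $A_i^{(N)}$ is the coefficient of $D^{-i}$ in $\left(\sum_{k=1}^{|\mathcal{X}|} D^{-l_k}\right)^{N}$.

If C is uniquely decodable, then ( (e) ) $\forall N, \forall i$ holds.

By using ( (e) ), we have

$$\left(\sum_{k=1}^{|\mathcal{X}|} D^{-l_k}\right)^{N} = \sum_{i=1}^{N \cdot l_{\max}} A_i^{(N)} D^{-i} \le \sum_{i=1}^{N \cdot l_{\max}} (\,\text{(f)}\,) = (\,\text{(g)}\,),$$

which leads to

$$\sum_{k=1}^{|\mathcal{X}|} D^{-l_k} \le (\,\text{(g)}\,)^{\frac{1}{N}}, \quad \forall N.$$

Now,

$$\lim_{N \to \infty} (\,\text{(g)}\,)^{\frac{1}{N}} = \lim_{N \to \infty} \exp\left(\frac{1}{N} \ln (\,\text{(g)}\,)\right) = (\,\text{(h)}\,).$$

Therefore,

$$\sum_{k=1}^{|\mathcal{X}|} D^{-l_k} \le 1.$$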

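• Theorem: the entropy lower bound on L(C) for a D-ary UD code

Let L(C) be the expected codeword length of C, and let $H_D(X)$ be the entropy of X, defined as $E\left[\log_D \frac{1}{p(X)}\right]$. Then $L(C) \ge H_D(X)$.

(Proof)

$$\begin{aligned}
L(C) - H_D(X) &= (\,\text{(i)}\,) - (\,\text{(j)}\,) \\
&= \sum_{i=1}^{|\mathcal{X}|} p_i \cdot \log_D (\,\text{(k)}\,) \\
&= \sum_{i=1}^{|\mathcal{X}|} \frac{p_i \cdot \ln (\,\text{(k)}\,)}{\ln D} \\
&\ge \sum_{i=1}^{|\mathcal{X}|} \frac{p_i \cdot \ln (\,\text{(l)}\,)}{\ln D} \quad \text{by } (\,\text{(m)}\,) \\
&= \frac{1}{\ln D} \left( \sum_{i=1}^{|\mathcal{X}|} (\,\text{(n)}\,) - \sum_{i=1}^{|\mathcal{X}|} (\,\text{(o)}\,) \right) \\
&\ge (\,\text{(p)}\,) \quad \text{by } (\,\text{(q)}\,).
\end{aligned}$$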

• Theorem [Yeung, p.52]

The expected length of a Huffman code, denoted by $L_{\text{Huff}}$, satisfies

$$L_{\text{Huff}} < (\,\text{(w)}\,) + (\,\text{(x)}\,).$$

(Proof) Consider constructing a code with codeword lengths $\{l_1, l_2, \cdots, l_{|\mathcal{X}|}\}$ where $l_i = (\,\text{(r)}\,)$.

Then, $-\log_D p_i \le l_i < -\log_D p_i + 1$. Therefore,

$$D^{-l_i} \le (\,\text{(s)}\,),$$

which implies

$$\sum_{i=1}^{|\mathcal{X}|} D^{-l_i} \le \sum_{i=1}^{|\mathcal{X}|} (\,\text{(s)}\,) = 1.$$

It satisfies the ( (c) ) inequality, which implies there exists a ( (t) ) having the codeword length profile $\{l_1, l_2, \cdots, l_{|\mathcal{X}|}\}$. Let's compute the expected length of this PF code. Then,

$$L_{\text{PF}} = (\,\text{(u)}\,) < \sum_{i=1}^{|\mathcal{X}|} p_i\, (\,\text{(v)}\,) = (\,\text{(w)}\,) + (\,\text{(x)}\,).$$

Therefore,

$$(\,\text{(y)}\,) \le L_{\text{Huff}} \le L_{\text{PF}} < (\,\text{(w)}\,) + (\,\text{(x)}\,).$$
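The sum $\sum_k D^{-l_k}$ that runs through the theorems of this problem can be evaluated exactly for any codeword-length profile. The following is a minimal sketch, not part of the exam; the helper names `codeword_length_sum` and `passes_inequality` and the length profiles are assumptions chosen for illustration.

```python
from fractions import Fraction

def codeword_length_sum(lengths, D=2):
    """Exact value of sum_k D^(-l_k) for a codeword-length profile."""
    return sum(Fraction(1, D ** l) for l in lengths)

def passes_inequality(lengths, D=2):
    """True when the length profile satisfies sum_k D^(-l_k) <= 1."""
    return codeword_length_sum(lengths, D) <= 1

# Illustrative profiles (assumptions, not taken from the exam).
binary_full = codeword_length_sum([1, 2, 3, 3], D=2)  # lengths of 0, 10, 110, 111
binary_bad = codeword_length_sum([1, 1, 2], D=2)      # too many short binary codewords
ternary_ok = codeword_length_sum([1, 1, 2], D=3)      # same lengths, ternary alphabet

print(binary_full, binary_bad, ternary_ok)
```

Using `Fraction` keeps the comparison with 1 exact, which matters for profiles that meet the bound with equality.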


Problem 4. (10 points)

(a) (5 points) Derive the Markov and the Chebychev inequalities.

(b) (5 points) Let X be any random variable with mean µ and standard deviation σ. Find a nontrivial lower bound, in a decimal number representation, on Pr(|X − µ| < kσ) when k = 1, 2, and 3.
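Tail probabilities of the kind bounded in this problem can be computed exactly for a small discrete distribution and compared with the Chebyshev bound $1/k^2$ on $\Pr(|X - \mu| \ge k\sigma)$. The following is a minimal sketch, not part of the exam; the helper name `tail_probability` and the fair-die pmf are assumptions chosen for illustration.

```python
from fractions import Fraction

def tail_probability(pmf, k):
    """Exact Pr(|X - mu| >= k*sigma) for a finite pmf given as {value: probability}."""
    mu = sum(x * p for x, p in pmf.items())
    var = sum((x - mu) ** 2 * p for x, p in pmf.items())
    # Compare squares to avoid a square root:
    # |x - mu| >= k*sigma  is equivalent to  (x - mu)^2 >= k^2 * var.
    return sum(p for x, p in pmf.items() if (x - mu) ** 2 >= k * k * var)

# Illustrative distribution (an assumption): a fair six-sided die.
die = {x: Fraction(1, 6) for x in range(1, 7)}

for k in (1, 2, 3):
    exact = tail_probability(die, k)
    bound = Fraction(1, k * k)
    print(f"k={k}: exact tail = {exact}, Chebyshev bound = {bound}")
```

For this particular pmf the exact tail is well below the bound, which reflects that Chebyshev's inequality holds for every distribution with finite variance and is therefore loose for most of them.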

Problem 5. (20 points)

(a) (5 points) Illustrate the source coding theorem.

(b) (5 points) Illustrate the converse part of the source coding theorem.

(c) (10 points: 1 point each) Fill in the blanks below.

Theorem: If we have a sequence of block codes with length n and coding rate less than $H(X) - \zeta$, where $\zeta > 0$ does not change with n, then $\Pr(X^{(n)} \ne \hat{X}^{(n)}) \to 1$ as ( (10) ).


(Proof) Consider any block code with block length n and coding data rate less than $H(X) - \zeta$, so that the total number of distinct codewords is at most $2^{n(H(X)-\zeta)}$.

Among these distinct codewords, some are assigned to some of the typical sequences and the others are assigned to some of the atypical sequences.

The rest of the sequences in $\mathcal{X}^n$ have arbitrary codewords assigned and all result in decoding error. Now, the probability of the correct decision can be written as

$$P_c = \Pr(\,\text{(1)}\,).$$

Then, by the total probability theorem, we have

$$\Pr(\,\text{(1)}\,) = \sum_{x^{(n)} \in \mathcal{X}^n} \Pr(\,\text{(1)}\, \mid X^{(n)} = x^{(n)}) \Pr(X^{(n)} = x^{(n)}) = \underbrace{\sum_{x^{(n)} \in A_\epsilon^{(n)}} \cdots}_{(\star)} + \underbrace{\sum_{x^{(n)} \notin A_\epsilon^{(n)}} \cdots}_{(\star\star)}.$$

The first term $(\star)$ can be written as

$$\begin{aligned}
(\star) &\le \sum_{x^{(n)} \in A_\epsilon^{(n)}} 1_{(\,\text{(1)}\,)}(x^{(n)}) \cdot \Pr(X^{(n)} = x^{(n)}) \\
&= \sum_{(\,\text{(2)}\,)} \Pr(X^{(n)} = x^{(n)}) \\
&\le (\,\text{(3)}\,) \cdot (\,\text{(4)}\,) \\
&= 2^{-n(\zeta - \epsilon)},
\end{aligned}$$

because ( (5) ), and ( (6) ).

The second part $(\star\star)$ can be rewritten as

$$(\star\star) \le \sum_{x^{(n)} \notin A_\epsilon^{(n)}} 1 \cdot \Pr(X^{(n)} = x^{(n)}) = \Pr(\,\text{(7)}\,) < \epsilon$$

for sufficiently large n.

Therefore, $P_c < 2^{-n(\zeta - \epsilon)} + \epsilon$ for sufficiently large n, for any block code with rate less than $H(X) - \zeta$, with $\zeta > 0$ and for any $\epsilon > 0$. This probability is equal to $1 - \Pr(X^{(n)} \ne \hat{X}^{(n)})$. Thus,

$$\Pr(X^{(n)} \ne \hat{X}^{(n)}) > (\,\text{(8)}\,)$$

holds for any $\epsilon$; take ( (9) ) (( (9) ) means ( (10) )), then

$$\Pr(X^{(n)} \ne \hat{X}^{(n)}) \to 1 \text{ as } (\,\text{(10)}\,).$$


Problem 6. (20 points) Suppose that X is a discrete random variable with pmf $p_X(x)$ and sample space $\mathcal{X}$, from which a set $\Omega^{(n)} \triangleq \{(x_1, x_2, \cdots, x_n) : x_i \in \mathcal{X}, \forall i \in \mathbb{N}\}$ of data sequences is generated. Answer the following questions.

(a) (5 points) We want to construct a binary fixed-length source code. How many bits are needed per source output to make no decoding error?

(b) (5 points) We want to construct a binary variable-length source code. How many bits on average are needed per source output to make no decoding error?

(c) (5 points) $\Omega^{(n)\prime}$ is the subset of $\Omega^{(n)}$ such that

$$\Omega^{(n)\prime} \triangleq \left\{x \in \Omega^{(n)} : H(X) - \epsilon \le -\frac{1}{n} \log P_{X_1, X_2, \cdots, X_n}(x_1, x_2, \cdots, x_n) \le H(X) + \epsilon\right\}.$$

Find a nontrivial upper bound on the cardinality of $\Omega^{(n)\prime}$.

(d) (5 points) We want to construct a binary fixed-length source code. How many bits are needed per source output to make decoding error less than $\epsilon$ for sufficiently large n?
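The set defined in part (c) can be counted directly for a small example. The following is a minimal sketch, not part of the exam, that assumes a Bernoulli(p) source, base-2 logarithms, and illustrative values of p, n, and ε; the helper name `typical_set_size` is hypothetical. It counts the sequences whose per-symbol log-probability lies within ε of H(X) and compares the count with $2^{n(H(X)+\epsilon)}$ and with the total number of sequences $2^n$.

```python
import math

def typical_set_size(p, n, eps):
    """Count length-n binary sequences with -(1/n) log2 P(x) within eps of H(X)."""
    H = -(p * math.log2(p) + (1 - p) * math.log2(1 - p))
    count = 0
    for k in range(n + 1):
        # All C(n, k) sequences with k ones share the same probability p^k (1-p)^(n-k).
        sample_entropy = -(k * math.log2(p) + (n - k) * math.log2(1 - p)) / n
        if H - eps <= sample_entropy <= H + eps:
            count += math.comb(n, k)
    return count, H

p, n, eps = 0.2, 20, 0.15   # illustrative values, assumptions for this sketch
count, H = typical_set_size(p, n, eps)

print(f"H(X) = {H:.4f} bits")
print(f"sequences in the set: {count}")
print(f"2^(n(H+eps)) = {2 ** (n * (H + eps)):.0f}")
print(f"all sequences: {2 ** n}")
```

Grouping sequences by their number of ones avoids enumerating all $2^n$ sequences, so the same sketch also runs for much larger n.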
