research.

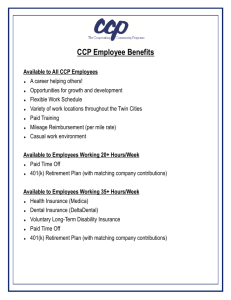

advertisement

EXPERIMENTAL STUDY OF RANDOM

PROJECTIONS BELOW THE JL LIMIT

A Thesis presented to

the Faculty of the Graduate School

at the University of Missouri

In Fulfillment

of the Requirements for the Degree

Master of Science

by

XIUYI YE

Dr. James Keller, Thesis Supervisor

MAY 2015

The undersigned, appointed by the Dean of the Graduate School, have examined the thesis entitled:

EXPERIMENTAL STUDY OF RANDOM

PROJECTIONS BELOW THE JL LIMIT

presented by Xiuyi Ye, a candidate for the degree of Master of Science, and hereby certify that, in their opinion, it is worthy of acceptance.

Dr. James Keller

Dr. Alina Zare

Dr. Mihail Popescu

ACKNOWLEDGMENTS

First of all, I would like to thank Dr. Keller for giving me the precious opportunity to work on this random projection project. Dr. Keller helped me understand the key points of the theory and pointed me in the right direction whenever I ran into difficulties. Later on, I got to meet Dr. Bezdek from the University of West Florida, Dr. Popescu from the Computer Science Department, and Dr. Zare and Dr. Han from the Electrical and Computer Engineering Department. We had a lot of fun learning the theory and discussing possible projects involving random projections.

I would like to thank Dr. Bezdek for helping me design the experiments and for frequently asking questions, which really pushed me to think about the mechanisms behind the experiments. Dr. Bezdek also has a very deep understanding of the theory and an incredibly good ability to predict experimental results, which narrowed down the topics and directions for exploring random projections below the JL limit.

Last but not least, I would like to thank Dr. Popescu and Dr. Zare for sharing valuable insights throughout the study and the experiments, as well as for their professional technical advice.

With all their help, I was able to finish this project.

Thank you!

TABLE OF CONTENTS

ACKNOWLEDGMENTS

LIST OF TABLES

LIST OF FIGURES

ABSTRACT

CHAPTER

1 Introduction

1.1 Johnson Lindenstrauss Lemma

1.1.1 Random Projection

1.2 Our Work[1]

1.2.1 Explore below the JL Limit

1.2.2 Examine the Methods of Defining the Projection Matrices

2 Literature Review

2.1 Construction of the Projection Matrix

2.2 JL bound

2.3 Bounding Probability

3 Methodology

3.1 Construction of Data Sets

3.1.1 Two Single-Cluster Datasets

3.1.2 Two Two-Cluster Datasets

3.2 Selection of Projection Methods

3.2.1 Dasgupta and Gupta's N(0,1) Gaussian Projection Matrix

3.2.2 Achlioptas' {-1, 1} Projection Matrix

3.3 Quantitative Distortion Measurements

3.3.1 Distance Matrix

3.3.2 Pearson Correlation Coefficient

3.3.3 Spearman's Correlation Coefficient

3.3.4 Distortion Ratio Measurement

3.4 Visual Distortion Measurements

3.4.1 Scatter Plots in 2D

3.4.2 iVAT

4 Experiments and Results

4.1 Experiment Design

4.2 Method 1: Dasgupta and Gupta's[2] N(0, 1)

4.2.1 Pearson Correlation Coefficient (CCp) and Spearman's Correlation Coefficient (CCs) Tables and Histograms

4.2.2 Ensemble Distortion over 100 Times

4.2.3 2D Scatter Plots

4.3 Method 2: Achlioptas'[3] {−1, 1}

4.3.1 Pearson Correlation Coefficient (CCp) and Spearman's Correlation Coefficient (CCs) Tables

4.3.2 Ensemble Distortion over 100 Times

4.3.3 2D Scatter Plots

4.4 iVAT images

5 Summary

5.0.1 PCA experiments

5.0.2 Gaussian-based data set

BIBLIOGRAPHY

VITA

LIST OF TABLES

3.1 4 data sets

3.2 VAT algorithm pseudocode

3.3 iVAT algorithm pseudocode

4.1 M1: CCp, CCs under 100 trials on Data Set X11

4.2 M1: CCp, CCs under 100 trials on Data Set X12

4.3 M1: CCp, CCs under 100 trials on Data Set X21

4.4 M1: CCp, CCs under 100 trials on Data Set X22

4.5 M2: CCp, CCs under 100 trials on Data Set X11

4.6 M2: CCp, CCs under 100 trials on Data Set X12

4.7 M2: CCp, CCs under 100 trials on Data Set X21

4.8 M2: CCp, CCs under 100 trials on Data Set X22

5.1 CCp, CCs of PCA on Data Sets X11 and X21

5.2 M1: CCp, CCs under 100 trials on Data Set X31

LIST OF FIGURES

3.1 iVAT image example

4.3 M1: CCp distribution histograms of X11 and X12

4.5 M1: CCp distribution histograms of X21 and X22

4.6 Scale factor demonstration

4.9 M1: X11, X12: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2

4.12 M1: X21, X22: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2

4.14 M1: X11, X12: 2D scatter plots at q = 2

4.16 M1: X21, X22: 2D scatter plots at q = 2

4.19 M2: X11, X12: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2

4.22 M2: X21, X22: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2

4.24 M2: X11, X12: 2D scatter plots at q = 2

4.26 M2: X21, X22: 2D scatter plots at q = 2

4.27 iVAT of data set X11 at p = 1000

4.28 iVAT of data set X21 at p = 1000

4.29 M1: iVAT of data sets X21 and X22 at q = 2

4.30 M2: iVAT of data set X22 at q = 2

4.31 M2: iVAT of data set X22 at q = 5

5.1 PCA scatter plots of X11 and X21 at q = 2

5.2 M1: scatter plots of X31 at Max/Min CCp

5.3 M1: iVAT images of X31 at Max/Min CCp and q = 5 and 2

5.4 M1: scatter plots of X31 at CCp/CCs around 0.5

ABSTRACT

Random projection is a method for reducing the dimensionality of data while preserving pairwise distances with relatively high probability. The mathematical theory behind it is the Johnson-Lindenstrauss (JL) lemma. The basic idea of the JL lemma is that a set of points in a high-dimensional space of dimension $p$ is randomly projected down to a lower-dimensional space of dimension $q$. The target dimension $q$ can be as low as $q_0$ while still ensuring that, with a certain probability, the projected pairwise distances are within $(1 \pm \varepsilon)$ of the pairwise distances before the projection, where $\varepsilon$ is usually a small value. This technique has already been used in a variety of areas such as clustering and image and text data processing. Many researchers have studied the properties and performance of the JL lemma above $q_0$ ($q_0$ is usually called the JL limit or JL bound), i.e. for $q = p - 1, p - 2, \ldots, q_0$, but no research has investigated using the JL lemma below the JL limit ($q = q_0 - 1, q_0 - 2, \ldots, 2$). At much lower dimensions, processing and storing data becomes much easier, and we can visualize the cluster information of data sets in 2D plots. However, we should not forget that the distance preservation is only probabilistic. How well are distances preserved below the JL bound? Does the projection affect, or even completely destroy, the cluster structure? What is a good projection method? In this thesis, we study these questions and answer them as far as we can.

Chapter 1

Introduction

1.1 Johnson Lindenstrauss Lemma

In 1984, Johnson and Lindenstrauss[4] first presented a lemma while pursuing extensions of Lipschitz mappings into a Hilbert space. The lemma states that a set of $n$ points in a high-dimensional space can be mapped down to a much lower-dimensional space with pairwise distances preserved with a well-defined probability. This lemma later became known as the JL lemma, and the mapping is called the JL transformation or JL embedding. The technique of linearly transforming a data set with a randomly constructed projection matrix that satisfies the JL lemma is called random projection. Random projection is now used as a powerful dimensionality reduction tool for high-dimensional data sets in machine learning and data mining. In 2001, Bingham and Mannila[5] compared random projection with other well-known dimensionality reduction tools on high-dimensional image and text data sets, pointing out that random projection is fast, efficient and computationally simple without introducing too much distortion.

1.1.1 Random Projection

Initially, we have a set $X$ of $n$ points in a $p$-dimensional space, which we call the upper space in this thesis. Let $X = \{x_1, x_2, \ldots, x_n\} \subset \mathbb{R}^p$. The mechanism behind the JL lemma is a linear transformation $T$ from $\mathbb{R}^p$ to $\mathbb{R}^q$. Let $Y = T[X] = \{y_1, y_2, \ldots, y_n\}$, and let $R_{q \times p} = [r_{ij}]$ denote the matrix representation of $T$, i.e. the projection matrix, where $r_{ij}$ is the element in row $i$ and column $j$. Then $y_j = T(x_j) = R x_j \in \mathbb{R}^q$ for $j = 1, 2, \ldots, n$. We call $T$ the random projection operator and the process of mapping $X$ to $Y$ the random projection.

Several versions of the lemma have been stated, each with its own proof. The original proof by Johnson and Lindenstrauss[4] used heavy geometric approximation machinery together with a concentration bound for the projection. In 1987, Frankl and Maehara[6] gave a simplified proof that considered a direct projection onto $q$ random orthonormal vectors. Later, in 1998, Indyk and Motwani[7] proposed a projection matrix with $N(0, 1)$ Gaussian entries. In 2003, Achlioptas[3] presented a simplified random projection method in which the elements of the projection matrix are drawn randomly from $\{-1, 0, 1\}$.

Here we restate the original Johnson-Lindenstrauss[4] lemma and the simplified version of Dasgupta and Gupta[2], which uses the Gaussian construction of Indyk and Motwani[7]:

Johnson-Lindenstrauss Lemma.[8] For any $\varepsilon$ such that $0 < \varepsilon < 1/2$, and any set of $n$ points $X \subset \mathbb{R}^p$, upon projection to a uniform random $q$-dimensional subspace, where

$$q \ge \left[\frac{9\ln(n)}{\varepsilon^2 - 2\varepsilon^3/3}\right] + 1,$$

the following property holds: with probability at least 1/2, for every pair $u, v \in X$,

$$(1 - \varepsilon)\|u - v\|^2 \le \|f(u) - f(v)\|^2 \le (1 + \varepsilon)\|u - v\|^2 \tag{1.1}$$

where $f(u)$, $f(v)$ are the projections of $u$, $v$. In the equation above, the norm is the Euclidean norm.

Dasgupta and Gupta: Theorem 2.1[2]. For any $\varepsilon$ such that $0 < \varepsilon < 1$, and any set of $n$ points $X \subset \mathbb{R}^p$, upon projection to a $q$-dimensional space using a matrix with $N(0, 1)$ entries as the projection matrix, where

$$q \ge q_0 = \left[\frac{4\ln(n)}{\varepsilon^2/2 - \varepsilon^3/3}\right],$$

the following holds with probability $(1 - 1/n^2)$ for every pair $u, v \in X$:

$$(1 - \varepsilon)\|u - v\|^2 \le \|f(u) - f(v)\|^2 \le (1 + \varepsilon)\|u - v\|^2 \tag{1.2}$$

where $f(u)$, $f(v)$ are the projections of $u$, $v$. The dimension $q_0$ is called the JL limit, and $q$ is called the target dimension or embedding dimension. From the two theorems above, we can draw some conclusions: 1) the target dimension in Dasgupta and Gupta's[2] theorem with an $N(0, 1)$-based projection matrix is determined by the number of points $n$ and the choice of $\varepsilon$, and does not depend on the original dimension $p$; 2) different theorems may use different projection matrices, each with its corresponding JL bound $q_0$ and guaranteed probability; 3) the choice of $\varepsilon$ determines how well the Euclidean distances are preserved. If $\varepsilon = 0$, we say that the projection is an isometry; otherwise, we say that the projection is a $(1 + \varepsilon)$-isometry. Note that the preservation of the pairwise distances is only guaranteed probabilistically.
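Inequalities (1.1) and (1.2) can be checked directly for any particular projection. The short sketch below is our own illustration in plain Python (the helper names `worst_pair_distortion` and `satisfies_jl_bound` are hypothetical, not taken from the cited papers). It computes the worst relative change of squared pairwise distances and reports whether a given embedding satisfies the $(1 \pm \varepsilon)$ bound for every pair. Because the theorems only guarantee success with some probability, a particular random draw of the projection can fail this check.

```python
import itertools


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def worst_pair_distortion(X, Y):
    """Largest |d_Y / d_X - 1| over all pairs, where d is a squared distance.

    X[i] is an original point and Y[i] = f(X[i]) is its image.
    Pairs of coincident points (d_X == 0) are skipped.
    """
    worst = 0.0
    for i, j in itertools.combinations(range(len(X)), 2):
        before = squared_distance(X[i], X[j])
        if before == 0:
            continue
        after = squared_distance(Y[i], Y[j])
        worst = max(worst, abs(after / before - 1.0))
    return worst


def satisfies_jl_bound(X, Y, eps):
    """True if (1 - eps)||u - v||^2 <= ||f(u) - f(v)||^2 <= (1 + eps)||u - v||^2 for all pairs."""
    return worst_pair_distortion(X, Y) <= eps


X = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
Y = [[2 * v for v in x] for x in X]    # f(x) = 2x multiplies squared distances by 4
print(worst_pair_distortion(X, Y))     # 3.0
print(satisfies_jl_bound(X, X, 0.0))   # True
```

The toy map $f(x) = 2x$ is not a JL embedding for any $\varepsilon < 3$, which is why it makes a convenient negative example; for a random projection one would pass the original points and their projected images instead.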

1.2 Our Work[1]

1.2.1 Explore below the JL Limit

As mentioned earlier, a lot of research has already studied projections above the JL limit. In those cases, distance preservation is guaranteed with the corresponding probability. However, we have not seen any study exploring the properties of random projection below the JL limit. When we use random projection to reduce the dimensionality of data sets to a much lower level, we not only get much less complex data sets and significantly smaller storage requirements, but we also want to see whether we can observe the cluster structure of multi-cluster data sets in 2D. In other words, we want to see whether the cluster information of the data sets can be preserved after the JL transformation.

1.2.2 Examine the Methods of Defining the Projection Matrices

We also compare the performance of two projection methods, whose matrix entries are taken from $N(0, 1)$ and from $\{-1, 1\}$, respectively. We use the Pearson product-moment correlation coefficient, Spearman's rank correlation coefficient and the distance distortion ratio as numerical measures of the distortion. We also use 2D scatter plots and iVAT, a visual assessment tool, to visually demonstrate the cluster information of the data sets before and after the projection.

More details of the theory and experiments can be found in our PAMI paper[1].

Chapter 2

Literature Review

We divide this chapter into three sections, covering the construction of projection matrices, the JL bound and the bounding probability, to briefly introduce the development of random projection.

2.1 Construction of the Projection Matrix

The original JL lemma used a uniformly random orthogonal matrix, whose columns are orthogonal unit vectors, as the projection matrix[4]. Frankl and Maehara[6] then simplified the proof by projecting a fixed unit vector onto a random $q$-dimensional subspace with an orthogonal projection matrix. In 1998, Indyk and Motwani[7] pointed out that orthogonality is not necessary for building a projection matrix; what is actually needed is spherical symmetry and randomness. They built the projection matrix from $q$ independent random $p$-dimensional vectors whose elements are drawn from the Gaussian distribution $N(0, 1)$. Their use of the Gaussian distribution achieves the spherical symmetry of the projection matrix. Dasgupta and Gupta[2] used the same matrix in their 1999 paper. Achlioptas[3] used a sparser projection matrix with elements $r_{ij}$ drawn randomly from $\{-1, 0, 1\}$ with probabilities $\{1/6, 2/3, 1/6\}$, respectively, and another projection matrix with elements $r_{ij}$ drawn from $\{-1, 1\}$ with equal probability. The $\{-1, 0, 1\}$ method also speeds up the JL transformation, since roughly 2/3 of the entries are zero. Bingham and Mannila[5] used Achlioptas' $\{-1, 0, 1\}$ matrix as their projection matrix and compared it with well-known dimensionality reduction tools such as PCA, SVD and LSI, and with the image dimensionality reduction tool DCT. They pointed out that random projection significantly reduces the computational complexity. In 2011, Venkatasubramanian and Wang[9] divided projection matrices into two categories: dense and sparse. They consider a matrix dense if all its entries are nonzero; otherwise, they call it sparse.

Note that, for most recent methods, the vectors in $\mathbb{R}^p$ have to be preconditioned during the random projection. Preconditioning amounts to inserting a scaling factor into the JL transformation. This factor makes the expected squared length of the projected point pairs equal to the squared length of the original point pairs. For example, the scaling factor for $r_{ij} \in \{-1, 0, 1\}$ in Achlioptas[3] is $\sqrt{3/q}$, and the scaling factor for $r_{ij} \in \{-1, 1\}$ is simply $1/\sqrt{q}$. We demonstrate this with a small experiment in Section 4.2.2.
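The effect of the scaling factor can also be checked with a quick Monte Carlo sketch, written here in plain Python as our own illustration (the function names and the toy sizes $p = 20$, $q = 10$ are arbitrary choices, not the settings of Section 4.2.2). For a fixed point $x$, it averages $\|\text{scale} \cdot R x\|^2 / \|x\|^2$ over many independently drawn matrices $R$. With the scaling factors above, the average should be close to 1 for the $N(0,1)$, $\{-1, 0, 1\}$ and $\{-1, 1\}$ entries, while leaving out the factor inflates it to roughly $q$.

```python
import math
import random


def gaussian_entry(rng):
    return rng.gauss(0.0, 1.0)                 # N(0, 1)


def sparse_entry(rng):
    u = rng.random()                           # -1, +1, 0 with probability 1/6, 1/6, 2/3
    return -1.0 if u < 1 / 6 else (1.0 if u < 1 / 3 else 0.0)


def sign_entry(rng):
    return rng.choice((-1.0, 1.0))             # -1 or +1 with probability 1/2 each


def mean_squared_length_ratio(entry, scale, x, q, trials, rng):
    """Average of ||scale * R x||^2 / ||x||^2 over independent q x p matrices R."""
    norm2 = sum(v * v for v in x)
    total = 0.0
    for _ in range(trials):
        # Each of the q rows of R is drawn fresh; only R x is needed, so R is not stored.
        y = [scale * sum(entry(rng) * v for v in x) for _ in range(q)]
        total += sum(v * v for v in y) / norm2
    return total / trials


rng = random.Random(0)
x = [rng.uniform(-1.0, 1.0) for _ in range(20)]   # one point in R^20
q = 10
for name, entry, scale in [("N(0,1)", gaussian_entry, 1 / math.sqrt(q)),
                           ("{-1,0,1}", sparse_entry, math.sqrt(3 / q)),
                           ("{-1,1}", sign_entry, 1 / math.sqrt(q)),
                           ("{-1,1} unscaled", sign_entry, 1.0)]:
    print(name, round(mean_squared_length_ratio(entry, scale, x, q, 1000, rng), 3))
```

The $\sqrt{3}$ in the sparse factor compensates for the fact that each $\{-1, 0, 1\}$ entry has second moment $1/3$ instead of 1.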

Besides the direct construction methods mentioned above, another approach, the Fast Johnson-Lindenstrauss Transform (FJLT), has also been studied. Ailon and Chazelle[10] constructed the FJLT as a product of three matrices, $\phi = PHD$. Here, $P$ is a $q$-by-$p$ matrix whose entries are drawn from the unbiased normal distribution $N(0, 1/s)$ with probability $s$ and are 0 with probability $(1 - s)$; $H$ is a $p$-by-$p$ normalized Hadamard matrix with $h_{ij} = p^{-1/2}(-1)^{\langle i-1, j-1 \rangle}$, where $\langle i-1, j-1 \rangle$ is the dot product of the $m$-bit binary representations of $i - 1$ and $j - 1$ (with $p = 2^m$); and $D$ is a $p$-by-$p$ diagonal matrix whose main diagonal elements are drawn from $\{-1, 1\}$ with equal probability. Their FJLT accelerates the random projection process while keeping the distortion low.
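A minimal plain-Python sketch of an FJLT-style map $x \mapsto \frac{1}{\sqrt{q}} P H D x$ follows. It is our own illustration of the construction described above, not Ailon and Chazelle's implementation: $H$ is applied with the fast Walsh-Hadamard butterfly in $O(p \log p)$ time, the sparsity $s$ is a free parameter (the original paper ties it to $p$, $q$ and $\varepsilon$), and the $1/\sqrt{q}$ factor is our own normalization choice so that the expected squared length equals $\|x\|^2$, as with the other methods in this chapter. One map is drawn once and then shared by all points.

```python
import math
import random


def hadamard_normalized(v):
    """Return H v for the normalized p x p Hadamard matrix, h_ij = p^(-1/2) (-1)^<i-1, j-1>.

    Uses the in-place fast Walsh-Hadamard butterfly; p must be a power of two.
    """
    a = [float(t) for t in v]
    p = len(a)
    assert p > 0 and p & (p - 1) == 0, "length must be a power of two"
    h = 1
    while h < p:
        for start in range(0, p, 2 * h):
            for k in range(start, start + h):
                a[k], a[k + h] = a[k] + a[k + h], a[k] - a[k + h]
        h *= 2
    return [t / math.sqrt(p) for t in a]


def make_fjlt(p, q, s, rng):
    """Draw one map x -> (1/sqrt(q)) P H D x, to be applied to every point."""
    D = [rng.choice((-1.0, 1.0)) for _ in range(p)]     # random signs on the diagonal
    sd = 1.0 / math.sqrt(s)                             # N(0, 1/s) has standard deviation 1/sqrt(s)
    P = [[rng.gauss(0.0, sd) if rng.random() < s else 0.0 for _ in range(p)]
         for _ in range(q)]                             # each entry nonzero with probability s

    def transform(x):
        hdx = hadamard_normalized([d * t for d, t in zip(D, x)])
        return [sum(pij * t for pij, t in zip(row, hdx)) / math.sqrt(q) for row in P]

    return transform


rng = random.Random(3)
f = make_fjlt(p=16, q=8, s=0.5, rng=rng)
print(f([1.0] * 16))
```

Because $H$ is orthonormal and $D$ only flips signs, $HDx$ has the same length as $x$; the randomized Hadamard step spreads the mass of $x$ over all coordinates, which is what allows $P$ to be sparse.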

2.2 JL bound

With different ways of constructing projection matrices, we obtain different JL bounds:

$$\text{[2]}:\quad q \ge q_0 = \left[\frac{4\ln(n)}{\varepsilon^2/2 - \varepsilon^3/3}\right] \tag{2.1}$$

$$\text{[6]}:\quad q \ge q_0 = \left[\frac{9\ln(n)}{\varepsilon^2 - 2\varepsilon^3/3}\right] + 1 \tag{2.2}$$

$$\text{[3]}:\quad q \ge q_0 = \left[\frac{(4 + 2\beta)\ln(n)}{\varepsilon^2/2 - \varepsilon^3/3}\right], \quad \beta > 0 \tag{2.3}$$

$$\text{[9]}:\quad q \ge q_0 = \left[\frac{2\ln(n)}{\varepsilon^2}\right] \tag{2.4}$$

All of the JL bounds above have two things in common: 1) they all have the number of points $n$ (through $\ln(n)$) in their numerator; 2) they all have $\varepsilon$ in their denominator. Therefore, the JL limit (JL certificate) $q_0$ increases with increasing sample number $n$ for fixed $\varepsilon$, and with decreasing $\varepsilon$ (for $\varepsilon < 1$) for fixed $n$. Equivalently, for a fixed $n$, a smaller target dimension can only be certified with a larger $\varepsilon$, which explains why the distortion increases when we decrease the target dimension. Venkatasubramanian and Wang[9] conducted experiments to study the distortion effect of random projection. They pointed out that equation 2.4, $q \ge q_0 = [2\ln(n)/\varepsilon^2]$, is not the lowest bound that can be reached while still satisfying the JL lemma, and rewrote it as $q_0 = C\ln(n)/\varepsilon^2$. Measurements of norms indicate that the constant $C$ is very close to 1, and measurements of pairwise distances indicate that $C$ is a little less than 2. The final JL bound they provided is $k = [\ln(P)/\varepsilon^2]$, where $P$ is the number of norm measurements to be preserved.
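The bounds (2.1)-(2.4) are simple to evaluate. The sketch below (function names are our own) returns each bound before the bracket is applied. We assume that $[\cdot]$ denotes rounding to the nearest integer: this reproduces the $q_0 = 171$ used in Section 3.2 for both methods with $n = 1000$ and $\varepsilon = 0.9$, whereas a ceiling would give 172 for Eq. (2.3) with $\beta = 0.01$.

```python
import math


def q0_dasgupta_gupta(n, eps):
    """Eq. (2.1) before rounding: 4 ln(n) / (eps^2/2 - eps^3/3)."""
    return 4 * math.log(n) / (eps ** 2 / 2 - eps ** 3 / 3)


def q0_frankl_maehara(n, eps):
    """Eq. (2.2) before rounding and the +1: 9 ln(n) / (eps^2 - 2 eps^3/3)."""
    return 9 * math.log(n) / (eps ** 2 - 2 * eps ** 3 / 3)


def q0_achlioptas(n, eps, beta):
    """Eq. (2.3) before rounding: (4 + 2 beta) ln(n) / (eps^2/2 - eps^3/3), beta > 0."""
    return (4 + 2 * beta) * math.log(n) / (eps ** 2 / 2 - eps ** 3 / 3)


def q0_venkatasubramanian_wang(n, eps):
    """Eq. (2.4) before rounding: 2 ln(n) / eps^2."""
    return 2 * math.log(n) / eps ** 2


n, eps = 1000, 0.9
print(round(q0_dasgupta_gupta(n, eps)))            # 171
print(round(q0_frankl_maehara(n, eps)) + 1)        # 193
print(round(q0_achlioptas(n, eps, 0.01)))          # 171
print(round(q0_venkatasubramanian_wang(n, eps)))   # 17
```

Evaluating the same functions for smaller $\varepsilon$ or larger $n$ shows the trend described above: for example, $n = 1000$ and $\varepsilon = 0.5$ already pushes Eq. (2.1) above 330.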

2.3 Bounding Probability

One interesting aspect of the JL lemma is that the preservation of pairwise distances is only guaranteed with a certain probability:

$$\text{[2]}:\quad \Pr \ge 1 - 1/n \tag{2.5}$$

$$\text{[3]}:\quad \Pr \ge 1 - 1/n^{\beta}, \quad \beta > 0 \tag{2.6}$$

$$\text{[9]}:\quad \Pr \ge 1 - 1/n \tag{2.7}$$

From the bounding probabilities listed above, we can tell that all of them depend on the sample number $n$: as $n$ increases, the bounding probability increases.
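A two-line calculation makes the dependence on $n$ and $\beta$ concrete. The sketch below (function names are our own) evaluates Eq. (2.5), which has the same form as Eq. (2.7), and Eq. (2.6). With a small $\beta$, Eq. (2.3) stays close to Dasgupta and Gupta's bound, but the guaranteed probability in Eq. (2.6) becomes small: for $n = 1000$ and $\beta = 0.01$ it is only about 0.067.

```python
def prob_dasgupta_gupta(n):
    """Eq. (2.5); Eq. (2.7) has the same form: 1 - 1/n."""
    return 1.0 - 1.0 / n


def prob_achlioptas(n, beta):
    """Eq. (2.6): 1 - 1/n**beta, with beta > 0."""
    return 1.0 - 1.0 / n ** beta


for n in (10, 100, 1000, 10000):
    print(n, round(prob_dasgupta_gupta(n), 4), round(prob_achlioptas(n, 0.01), 4))
```

With $\beta = 1$, Eq. (2.6) reduces to the same $1 - 1/n$ as Eq. (2.5), at the price of a larger numerator $(4 + 2\beta)$ in Eq. (2.3).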

Notice that there have been many proofs and applications of random projection, but not every one of them discusses the probability. However, Venkatasubramanian and Wang[9] claim in their paper that the probability $(1 - 1/n)$ works well for all random projection methods in preserving pairwise distances. They even gave a definition, which we repeat here:

Definition 2.1[9]. A probability distribution $\mu$ over the space of $q \times p$ matrices is said to be a JL-transform if a matrix $R$ drawn uniformly at random from $\mu$ is distance preserving with probability $(1 - 1/n)$.

It seems that random projection researchers focus more on the experimental distortion than on the corresponding theoretical probability, because it is well known that the distance preservation of the JL lemma is probabilistic. But when random projection is used as a tool, the construction of the projection matrix, the JL bound and the bounding probability should all come from the same source.

Chapter 3

Methodology

3.1 Construction of Data Sets

In the experiments, we synthetically built four data sets in the upper space: two single-cluster data sets and two two-cluster data sets. We did not simply generate Gaussian-based data sets in high dimension, because Dasgupta[11] pointed out that in a high-dimensional space, Gaussians are not as compact as they are in a low-dimensional space, so they cannot simply be considered individual clusters. In a low-dimensional space, the points of a cluster are compact around its center; therefore, if the centers of two clusters are far enough apart, we can determine which point belongs to which cluster. In a high-dimensional space, however, it turns out that a large number of points lie far away from the center.
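The concentration effect mentioned above can be seen with a quick numerical illustration of our own (it is not taken from [11]). The sketch draws points from the standard Gaussian $N(0, I_p)$ and reports the minimum, mean and maximum of $\|x\|/\sqrt{p}$. In 2D some samples fall close to the center, while for $p = 1000$ every sample has a norm close to $\sqrt{p}$, i.e. the points sit in a thin shell far from the center.

```python
import math
import random


def norm_ratio_summary(p, n, seed=0):
    """Draw n points from N(0, I_p) and return (min, mean, max) of ||x|| / sqrt(p)."""
    rng = random.Random(seed)
    ratios = [math.sqrt(sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(p)) / p)
              for _ in range(n)]
    return min(ratios), sum(ratios) / n, max(ratios)


for p in (2, 10, 1000):
    lo, mean, hi = norm_ratio_summary(p, 200)
    print(f"p = {p:4d}: min {lo:.2f}, mean {mean:.2f}, max {hi:.2f}")
```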

3.1.1 Two Single-Cluster Datasets

We call the four data sets we built $X_{11}$, $X_{12}$, $X_{21}$ and $X_{22}$, respectively.

Data Set $X_{11}$: $X_{11}$ was generated by randomly drawing 1000 points from a hypercube in $\mathbb{R}^{1000}$. The data set is centered at $(0, \ldots, 0)$, and each coordinate was drawn randomly from $[-10^{-6}, 10^{-6}]$. Table 3.1 summarizes the basic information about the four data sets we built.

Table 3.1: 4 datasets: $X_{11}$, $X_{12}$, $X_{21}$, $X_{22}$.

| Data Set Name | Num. of Clusters | Data Set Size |
|---|---|---|
| $X_{11}$ | 1 | 1000 |
| $X_{12}$ | 1 | 1000 |
| $X_{21}$ | 2 | 1000 |
| $X_{22}$ | 2 | 1000 |

We call the data set $X_{11}$ the "boxy" data set, because every coordinate of each point is drawn from the same small range, $[-10^{-6}, 10^{-6}]$. In 2D, the points are drawn from a square; in 3D, they are drawn from a cube. In 1000D, the points should lie "evenly" inside this 1000D hypercube. We chose the small interval of width $2 \times 10^{-6}$ to make the data set as compact as possible. At least, the points in the "boxy" data set will not mostly lie on an outer layer away from the center.

Data Set $X_{12}$: First, we define a very small interval about zero, $I_0 = [-10^{-6}, 10^{-6}]$. A 1000-dimensional vector $\hat{x}_1 \in \mathbb{R}^{1000}$ is then built with $\hat{x}_{1j} = \mathrm{rand}(I_0)$, $j = 1, \ldots, 1000$, where $\mathrm{rand}(I_0)$ denotes choosing a random value within the interval $I_0$. Then we replicate $\hat{x}_1$ 999 times, which gives the data set $\hat{X}_{12} = \{\hat{x}_1, \hat{x}_2, \ldots, \hat{x}_{1000}\} \subset \mathbb{R}^{1000}$ of identical points. We choose a small number $\delta = 10^{-6} \in I_0$, and for $k = 2$ to 1000 we increment the $k$-th coordinate of $\hat{x}_k$ by $\delta$, resulting in the new data set $X_{12} = \{\hat{x}_1, x_2, \ldots, x_{1000}\} \subset \mathbb{R}^{1000}$.

The Euclidean distance between $\hat{x}_1$ and $x_k$ for $k = 2$ to 1000 is $\|\hat{x}_1 - x_k\| = \delta = 10^{-6}$, and for any pair $i \ne j$ with $i, j \ge 2$, $\|x_i - x_j\| = \sqrt{2}\,\delta \approx 1.414 \times 10^{-6}$. Compared to $X_{11}$, $X_{12}$ is a more compact data set: each point differs from the first point in only one coordinate. The idea is that $X_{11}$ and $X_{12}$ are potentially single-cluster data sets in the high-dimensional space. With that assumption, we can proceed to our experiments on random projections to discover their effect on the data sets.

To further explore the effect of random projection on the cluster structure of data sets, we need multi-cluster data sets to see whether the clusters are preserved after the projection. Based on the way we built $X_{11}$ and $X_{12}$, we also built $X_{21}$ and $X_{22}$.
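The two constructions can be sketched in plain Python as follows. The function names are hypothetical, and we assume that $\mathrm{rand}(I_0)$ draws uniformly from $I_0$, which the text above does not state explicitly. Note that $X_{12}$ needs $n \le p$, since copy $k$ gets its own coordinate $k$.

```python
import math
import random


def make_x11(n=1000, p=1000, half_width=1e-6, seed=0):
    """'Boxy' data set: n points, each coordinate uniform in [-half_width, half_width] (assumed uniform)."""
    rng = random.Random(seed)
    return [[rng.uniform(-half_width, half_width) for _ in range(p)] for _ in range(n)]


def make_x12(n=1000, p=1000, half_width=1e-6, delta=1e-6, seed=0):
    """Replicate one random point n times, then add delta to coordinate k of copy k (k = 2..n, 1-based)."""
    assert n <= p, "each copy k needs its own coordinate"
    rng = random.Random(seed)
    base = [rng.uniform(-half_width, half_width) for _ in range(p)]
    data = [list(base) for _ in range(n)]
    for k in range(1, n):        # 0-based index k is the (k+1)-th point ...
        data[k][k] += delta      # ... and its (k+1)-th coordinate is bumped
    return data


def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))
```

For example, `make_x12()` builds the full $1000 \times 1000$ data set, in which the first point is at distance $\delta$ from every other point and all other pairs are $\sqrt{2}\,\delta$ apart.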

3.1.2 Two Two-Cluster Datasets

Data Set $X_{21}$: Similar to the way we constructed $X_{11}$, the first 500 points of $X_{21}$ are centered at $(0, 0, \ldots, 0)$ and the next 500 points are centered at $(1, 1, \ldots, 1)$. To do so, we simply add 1 to each coordinate of the next 500 points.

Data Set $X_{22}$: Similar to the way we constructed $X_{12}$, the first 500 points of $X_{22}$ are centered at $(0, 0, \ldots, 0)$ and the next 500 points are centered at $(1, 1, \ldots, 1)$. Again, we simply add 1 to each coordinate of the next 500 points.
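The two-cluster data sets only add a constant shift to the second half of a single-cluster construction. A minimal sketch (function names are our own; the uniform draw in `boxy_points` is an assumption, as above):

```python
import math
import random


def boxy_points(n, p, half_width=1e-6, seed=0):
    """n points with every coordinate uniform in [-half_width, half_width] (X11-style)."""
    rng = random.Random(seed)
    return [[rng.uniform(-half_width, half_width) for _ in range(p)] for _ in range(n)]


def shift_second_half(data, offset=1.0):
    """Copy of data in which every coordinate of the second half of the points is shifted by offset."""
    half = len(data) // 2
    return [list(x) if i < half else [v + offset for v in x] for i, x in enumerate(data)]


def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))
```

Here `shift_second_half(boxy_points(1000, 1000))` corresponds to $X_{21}$, and applying `shift_second_half` to an $X_{12}$-style data set gives $X_{22}$. In $\mathbb{R}^{1000}$ the two cluster centers are $\sqrt{1000} \approx 31.6$ apart, many orders of magnitude more than the within-cluster spread of about $10^{-6}$ per coordinate.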

3.2 Selection of Projection Methods

As discussed in Section 2.1, previous studies construct projection matrices in different ways. We chose two representative projection methods, Dasgupta and Gupta's[2] and Achlioptas'[3], for our experiments.

3.2.1 Dasgupta and Gupta's N(0,1) Gaussian Projection Matrix

Method 1: Dasgupta and Gupta[2] constructed their projection matrix from the $N(0, 1)$ Gaussian distribution. We set the parameters as follows:

$$n = 1000,\ \varepsilon = 0.9 \;\Rightarrow\; q_0 = \left[\frac{4\ln(n)}{\varepsilon^2/2 - \varepsilon^3/3}\right] = 171$$

$$n = 1000 \;\Rightarrow\; \text{Probability} = 1 - 1/n = 0.999$$

$$R_{q \times p} = [r_{ij}], \quad r_{ij} \sim N(0, 1)$$

$$y_i = \frac{1}{\sqrt{q}} R x_i, \quad i = 1, 2, \ldots, n$$
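The Method 1 projection can be sketched in a few lines of plain Python. The function names are our own, and the explicit loops are only meant to make the steps visible; projecting the full $1000 \times 1000$ data sets this way is slow, and in practice a vectorized matrix library (for example NumPy) would be used instead. The key point is that one matrix $R$ is drawn per trial and applied to every point.

```python
import math
import random


def gaussian_projection_matrix(q, p, rng):
    """q x p matrix with i.i.d. N(0, 1) entries (Method 1)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(q)]


def project(R, X):
    """Map every point x in X to y = (1/sqrt(q)) R x, using the same R for all points."""
    scale = 1.0 / math.sqrt(len(R))
    return [[scale * sum(r * v for r, v in zip(row, x)) for row in R] for x in X]


rng = random.Random(42)
X = [[rng.uniform(-1e-6, 1e-6) for _ in range(50)] for _ in range(5)]   # 5 toy points in R^50
R = gaussian_projection_matrix(q=10, p=50, rng=rng)                    # one R per trial
Y = project(R, X)
print(len(Y), len(Y[0]))   # 5 10
```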

3.2.2 Achlioptas' {-1, 1} Projection Matrix

Method 2: Achlioptas[3] constructed the projection matrix by drawing elements randomly from $\{-1, 1\}$ with equal probability. We set the parameters as follows to achieve the same $\varepsilon$ and JL limit $q_0$ as Method 1:

$$n = 1000,\ \varepsilon = 0.9,\ \beta = 0.01 \;\Rightarrow\; q_0 = \left[\frac{(4 + 2\beta)\ln(n)}{\varepsilon^2/2 - \varepsilon^3/3}\right] = 171$$

$$n = 1000 \;\Rightarrow\; \text{Probability} = 1 - 1/n^{\beta} \approx 0.067$$

$$R_{q \times p} = [r_{ij}], \quad r_{ij} = \begin{cases} 1 & \text{with probability } 1/2 \\ -1 & \text{with probability } 1/2 \end{cases}, \qquad y_i = \frac{1}{\sqrt{q}} R x_i, \quad i = 1, 2, \ldots, n$$
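Method 2 differs from Method 1 only in how $R$ is filled. In the sketch below (function names are our own), the entries are $\pm 1$ with equal probability and the same $1/\sqrt{q}$ scaling is applied. Since every entry satisfies $r_{ij}^2 = 1$, a unit coordinate vector is mapped to a vector of squared length exactly 1, which gives a simple sanity check of the scaling.

```python
import math
import random


def sign_projection_matrix(q, p, rng):
    """q x p matrix whose entries are +1 or -1 with probability 1/2 each (Method 2)."""
    return [[rng.choice((-1.0, 1.0)) for _ in range(p)] for _ in range(q)]


def project(R, X):
    """y = (1/sqrt(q)) R x for every x in X, reusing the same R for all points."""
    scale = 1.0 / math.sqrt(len(R))
    return [[scale * sum(r * v for r, v in zip(row, x)) for row in R] for x in X]


rng = random.Random(7)
R = sign_projection_matrix(q=171, p=1000, rng=rng)   # q = q0 for n = 1000, eps = 0.9
e1 = [1.0] + [0.0] * 999
y = project(R, [e1])[0]
print(len(y))                              # 171
print(round(sum(t * t for t in y), 12))    # 1.0
```

Because the entries are only $\pm 1$, the product $Rx$ needs no multiplications at all (only additions and subtractions), which is one of the practical attractions of this construction.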

3.3 Quantitative Distortion Measurements

To evaluate the performance of Dasgupta and Gupta's[2] and Achlioptas'[3] random projection methods, we used the Pearson product-moment correlation coefficient, Spearman's rank correlation coefficient and the distortion ratio to measure the distance distortion caused by the projection.

3.3.1 Distance Matrix

Before computing any distortion measurement, we need to build the distance matrices before and after the projection. Recall that the JL lemma concerns the relationship between pairwise distances before and after the JL transformation, so the distances between all pairs of points are what we compare to determine the distortion caused by the projection. We build the distance matrix as follows: for any two points $x_i$, $x_j$ in the data set, we calculate the squared Euclidean distance $d_{ij} = \|x_i - x_j\|^2$, which gives the distance matrix $D_X = [d_{ij}]$, $i, j = 1, \ldots, 1000$. Since distance matrices are symmetric about their main diagonal, we only use the upper triangle above the main diagonal; using the entire matrix would count every pair twice, include the zero diagonal, and add unnecessary computation. In the experiments, we put all the elements of the upper triangle into one long array $\hat{D}_X$, from left to right and top to bottom. This array holds $1000 \times 999 / 2 = 499{,}500$ pairwise distances. For notational simplicity, in the rest of this thesis we use $D_X$ and $D_Y$ to denote the arrays of all elements in the upper triangles of the distance matrices.
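The upper-triangle array described above can be built as follows; this is a plain-Python sketch with a function name of our own. If SciPy is available, `scipy.spatial.distance.pdist(X, "sqeuclidean")` returns the same condensed ordering (row by row over pairs $i < j$) much faster.

```python
def condensed_squared_distances(points):
    """Upper triangle (i < j) of the squared-distance matrix, left to right, top to bottom."""
    n = len(points)
    out = []
    for i in range(n - 1):
        xi = points[i]
        for j in range(i + 1, n):
            out.append(sum((a - b) ** 2 for a, b in zip(xi, points[j])))
    return out


print(condensed_squared_distances([[0, 0], [3, 4], [0, 1]]))   # [25, 1, 18]
```

For $n$ points the array has $n(n-1)/2$ entries, i.e. 499,500 for the 1000-point data sets, and the same function is applied to both $X$ and its projection $Y$ so that entry $i$ of $D_X$ and entry $i$ of $D_Y$ refer to the same pair.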

3.3.2 Pearson Correlation Coefficient

The Pearson correlation coefficient is usually denoted by the Greek letter $\rho$ or by $r_p$. Let $X = \{x_1, x_2, \ldots, x_n\}$ denote a set of values before the random projection and $Y = \{y_1, y_2, \ldots, y_n\}$ the corresponding values after the projection, where $n$ is the number of elements. Then

$$r_p(X, Y) = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n}(x_i - \bar{x})^2}\,\sqrt{\sum_{i=1}^{n}(y_i - \bar{y})^2}} \tag{3.1}$$

where $\bar{x}$ and $\bar{y}$ are the means of $X$ and $Y$. In equation 3.1, the numerator is (proportional to) the covariance of the two sets and the denominator is (proportional to) the product of their standard deviations. In our case, the squared pairwise Euclidean distances are the actual measurement targets, so the equation becomes

$$r_p(D_X, D_Y) = \frac{\sum_{i=1}^{n}(d_{x_i} - \bar{d}_x)(d_{y_i} - \bar{d}_y)}{\sqrt{\sum_{i=1}^{n}(d_{x_i} - \bar{d}_x)^2}\,\sqrt{\sum_{i=1}^{n}(d_{y_i} - \bar{d}_y)^2}} = \frac{\langle \hat{D}_X, \hat{D}_Y \rangle}{\|\hat{D}_X\| \cdot \|\hat{D}_Y\|} \tag{3.2}$$

where $n = 499{,}500$, and $\hat{D}_X$, $\hat{D}_Y$ are the centered distance arrays. The numerator is the inner product of the centered distance arrays and the denominator is the product of their norms. We use CCp to denote the Pearson correlation coefficient in the rest of this thesis.
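Equation 3.2 translates directly into code. The following plain-Python sketch (the function name is our own) centers both arrays and divides their inner product by the product of their norms; on Python 3.10 or later, `statistics.correlation(a, b)` computes the same Pearson coefficient.

```python
import math


def pearson(a, b):
    """CCp as in Eq. (3.2): inner product of centered arrays over the product of their norms."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    ca = [v - ma for v in a]          # centered D_X
    cb = [v - mb for v in b]          # centered D_Y
    num = sum(x * y for x, y in zip(ca, cb))
    den = math.sqrt(sum(x * x for x in ca)) * math.sqrt(sum(y * y for y in cb))
    return num / den


print(round(pearson([1, 2, 3, 4], [1, 3, 2, 4]), 6))   # 0.8
```

In the experiments the two arguments are the 499,500-element arrays $D_X$ and $D_Y$. Note that multiplying $D_Y$ by any positive constant leaves CCp unchanged.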

3.3.3 Spearman's Correlation Coefficient

Spearman's correlation coefficient, or Spearman's rank correlation coefficient, is often denoted by $\rho$ or $r_s$. It is calculated from the rank information of the two sets, where the rank of an element is its position when the elements are sorted in ascending (or descending) order. Given $X = \{x_1, x_2, \ldots, x_n\}$ and $Y = \{y_1, y_2, \ldots, y_n\}$, we obtain the ranks $\hat{X} = \mathrm{rank}[X]$ and $\hat{Y} = \mathrm{rank}[Y]$, and

$$r_s(X, Y) = 1 - \frac{6\sum_{i=1}^{n}\delta_i^2}{n(n^2 - 1)} \tag{3.3}$$

where $n$ is the number of elements in each set and $\delta_i = \hat{x}_i - \hat{y}_i$, $i = 1, \ldots, n$. In the experiments, we insert the ranks of $D_X$ and $D_Y$ into equation 3.3, so $n = 499{,}500$. We use CCs to denote Spearman's rank correlation coefficient in the rest of this thesis.
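A direct sketch of Eq. (3.3) is shown below (function names are our own). It assigns ordinal ranks and therefore assumes there are no tied values, which is the case in which Eq. (3.3) is exact. If ties occur, a common alternative is to use average ranks and compute the Pearson coefficient of the ranks; Python 3.12 added `method="ranked"` to `statistics.correlation` for this purpose.

```python
def ranks(values):
    """1-based ascending ranks; assumes no tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for position, i in enumerate(order, start=1):
        r[i] = position
    return r


def spearman(a, b):
    """CCs from Eq. (3.3): 1 - 6 * sum(delta_i^2) / (n (n^2 - 1))."""
    n = len(a)
    ra, rb = ranks(a), ranks(b)
    s = sum((x - y) ** 2 for x, y in zip(ra, rb))
    return 1 - 6 * s / (n * (n * n - 1))


print(spearman([1, 2, 3, 4], [1, 8, 27, 64]))   # 1.0
```

The example shows the main difference from CCp: any strictly increasing relationship between $D_X$ and $D_Y$, linear or not, gives CCs = 1.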

3.3.4 Distortion Ratio Measurement

The ratio of the pairwise distances after and before the projection is the most direct measurement suggested by the JL lemma. Assume $\varepsilon \ge 0$. If the pairwise distances are exactly the same before and after the random projection ($\varepsilon = 0$), we say that the projection is Lipschitz isometric. In this case, $CCp(D_X, D_Y) = CCs(D_X, D_Y) = 1$, i.e. the Pearson and Spearman's correlation coefficients are identical and equal to 1. If the projected distances $D_Y$ are $(1 + \varepsilon)$ times the distances before the projection $D_X$, we say that the projection is Lipschitz continuous; if $D_Y$ is $(1 - \varepsilon)$ times $D_X$, we say that the projection is Lipschitz contracting. Because CCp and CCs do not change when $D_Y$ is multiplied by a positive constant, they cannot detect such a uniform expansion or contraction, whereas the distortion ratio captures it directly. In Chapter 4, we show histograms illustrating the distributions of the distortion ratio of pairwise distances.
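The distortion ratio and its histogram can be computed from the two distance arrays as sketched below (function names and the bin settings are our own choices). `fraction_within` reports the share of pairs that satisfy the $(1 \pm \varepsilon)$ bound.

```python
def distortion_ratios(d_before, d_after):
    """Per-pair ratio D_Y / D_X of squared distances (pairs with D_X == 0 are skipped)."""
    return [a / b for b, a in zip(d_before, d_after) if b > 0]


def fraction_within(ratios, eps):
    """Share of pairs whose ratio lies in [1 - eps, 1 + eps]."""
    return sum(1 for r in ratios if 1 - eps <= r <= 1 + eps) / len(ratios)


def histogram(ratios, bins=20, lo=0.0, hi=2.0):
    """Counts of ratios in equal-width bins over [lo, hi); values outside are ignored."""
    counts = [0] * bins
    width = (hi - lo) / bins
    for r in ratios:
        if lo <= r < hi:
            counts[min(int((r - lo) / width), bins - 1)] += 1   # guard against float round-up
    return counts


ratios = distortion_ratios([1.0, 2.0, 4.0], [1.5, 2.0, 2.0])
print(ratios)                          # [1.5, 1.0, 0.5]
print(fraction_within(ratios, 0.6))    # 1.0
```

Pooling the ratios of all 100 trials before calling `histogram` gives an ensemble distribution of the kind described in Chapter 4.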

3.4 Visual Distortion Measurements

3.4.1 Scatter Plots in 2D

When the target dimension has been reduced to $q = 2$, we have the advantage of seeing all randomly projected points in a two-dimensional scatter plot. By using a different color for the points of each cluster, we can see whether the clusters overlap or are clearly separated after random projection.

3.4.2 iVAT

VAT/iVAT is a visual assessment tool that helps humans visually identify cluster tendency, i.e. the potential number of clusters in a data set. One very important advantage is that this information can be obtained for any data set, no matter what dimensional space it is in, because VAT/iVAT only needs the matrix of pairwise dissimilarities.

Table 3.2: VAT Algorithm Pseudocode

VAT Algorithm [12]

In: Dn, n × n matrix of dissimilarities

Step 1: Set K = {1, ..., n}, I = J = ∅. Select (i, j) ∈ argmax{Dst : s ∈ K, t ∈ K}. Set P(1) = i, I = {i}, J = K − {i}.

Step 2: For m = 2, ..., n, do: select (i, j) ∈ argmin{Dst : s ∈ I, t ∈ J}. Set P(m) = j, I = I ∪ {j}, J = J − {j}, d(m − 1) = Dij. Next m.

Step 3: For 1 ≤ i, j ≤ n, do: [D∗n]ij = [Dn]P(i)P(j).

Out: Reordered D∗n, VAT image I(D∗n), arrays P, d

Table 3.3: iVAT Algorithm Pseudocode

iVAT Algorithm [13]

In: D∗n, VAT-reordered dissimilarity matrix

Step 0: Set D′∗ = [0], an n × n matrix of zeros.

Step 1: For k = 2, ..., n, do: select j = argmin{D∗kr : r = 1, ..., k − 1}. Set D′∗kj = D′∗jk = D∗kj. For c = 1, ..., k − 1, c ≠ j, set D′∗kc = D′∗ck = max{D∗kj, D′∗jc}. Next k.

Out: Reordered D′∗n, iVAT image I(D′∗n), S, arrays P, d (from VAT)

A 3-cluster iVAT image looks like the following:

(a) iVAT of a 3-Gaussian data set at q = 10

(b) Distance matrix of a 3-Gaussian data set

Figure 3.1: iVAT image of a c = 3 data set

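Tables 3.2 and 3.3 show the pseudocode for the VAT and iVAT algorithms. Both are built on a minimum spanning tree (MST): VAT orders the objects in the sequence in which Prim’s algorithm adds them to the MST, and iVAT replaces each dissimilarity by the largest edge weight on the MST path between the two objects. More detailed information on VAT and iVAT can be found in Bezdek and Hathaway [12] and Havens and Bezdek [13].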
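A minimal pure-Python sketch of Tables 3.2 and 3.3 follows, written for readability rather than speed (the nested scans in the VAT step make it O(n³)). The function names and the toy data are hypothetical. The iVAT loop writes D′∗kc and D′∗ck together so that the recursion can read any earlier entry D′∗jc regardless of which triangle it lies in.

```python
def vat(D):
    """VAT reordering (Table 3.2). D is a symmetric n x n list of lists.

    Returns (D_star, P, d): the reordered matrix, the visiting order P and the
    MST edge weights d recorded as each object is attached.
    """
    n = len(D)
    idx = range(n)
    # Step 1: start at one end of the largest dissimilarity.
    i, _ = max(((s, t) for s in idx for t in idx), key=lambda st: D[st[0]][st[1]])
    P, d = [i], []
    I, J = {i}, set(idx) - {i}
    # Step 2: repeatedly attach the unvisited object closest to the visited set.
    for _ in range(1, n):
        s, t = min(((s, t) for s in I for t in J), key=lambda st: D[st[0]][st[1]])
        P.append(t)
        d.append(D[s][t])
        I.add(t)
        J.remove(t)
    # Step 3: permute rows and columns by P.
    D_star = [[D[P[a]][P[b]] for b in idx] for a in idx]
    return D_star, P, d


def ivat(D_star):
    """iVAT transform (Table 3.3) of a VAT-reordered matrix, filled symmetrically."""
    n = len(D_star)
    Dp = [[0.0] * n for _ in range(n)]
    for k in range(1, n):
        # Nearest earlier object to object k in the VAT order.
        j = min(range(k), key=lambda r: D_star[k][r])
        Dp[k][j] = Dp[j][k] = D_star[k][j]
        for c in range(k):
            if c != j:
                Dp[k][c] = Dp[c][k] = max(D_star[k][j], Dp[j][c])
    return Dp


if __name__ == "__main__":
    pts = [0, 1, 2, 50, 51]                      # two groups on a line
    D = [[abs(a - b) for b in pts] for a in pts]
    D_star, P, d = vat(D)
    print(P, d)                                  # [0, 1, 2, 3, 4] [1, 1, 48, 1]
    for row in ivat(D_star):
        print(row)
```

In the toy output, the iVAT matrix has a 3 × 3 block of small values and a 2 × 2 block of small values on the diagonal, which is what the dark blocks of an iVAT image encode.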

Figure 3.1(a) is the iVAT image of a 3-cluster data set, a mixture of 3 Gaussians in R^10. The three black blocks in the figure represent 3 potential clusters in the data set. Figure 3.1(b) is the corresponding image of its distance matrix, in which we can also clearly see the 3 Gaussians.

Chapter 4

Experiments and Results

4.1 Experiment Design

To explore random projection below the JL limit (q0 = 171), we chose R^1000 as the upper space and q = 171, 100, 25, 5, 2 as the target dimensions. For each target dimension, we projected each data set directly from R^1000, repeating this 100 times. Over these 100 trials, we saved the maximum and minimum Pearson and Spearman’s correlation coefficients along with their corresponding distortion ratio distribution plots. We also pooled the 100 distortion ratio distributions to see the ensemble distortion distribution over the 100 trials. Moreover, we drew 2D scatter plots and iVAT images to study the cluster structure of the projections to R^2. We first present the experimental results of method 1 and then those of method 2.
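The experimental loop above can be sketched as follows. This is a minimal sketch under stated assumptions, not the code used in the thesis: both methods use the transformation Y = (1/√q)RX (the form given for method 2 in section 4.4, assumed here for method 1 as well), CCp is computed on plain Euclidean pair-wise distances, and the toy data (two tight clusters of 10 points in R^50) and all function names are hypothetical and far smaller than the 1000 points in R^1000 used in the experiments.

```python
import math
import random
from itertools import combinations


def pairwise_dists(points):
    """Euclidean distances for all pairs i < j, in a fixed order."""
    return [math.dist(points[i], points[j]) for i, j in combinations(range(len(points)), 2)]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def random_projection(points, q, method, rng):
    """Project p-dimensional points to q dimensions with scaling 1/sqrt(q)."""
    p = len(points[0])
    if method == "gaussian":          # method 1: N(0, 1) entries
        R = [[rng.gauss(0.0, 1.0) for _ in range(p)] for _ in range(q)]
    elif method == "sign":            # method 2: entries -1 or +1
        R = [[rng.choice((-1.0, 1.0)) for _ in range(p)] for _ in range(q)]
    else:
        raise ValueError(f"unknown method: {method}")
    s = 1.0 / math.sqrt(q)
    return [[s * sum(r * x for r, x in zip(row, pt)) for row in R] for pt in points]


def run_trials(points, q, method, trials=100, seed=0):
    """Project `trials` times; return (max CCp, min CCp, mean CCp)."""
    rng = random.Random(seed)
    dx = pairwise_dists(points)
    ccps = [pearson(dx, pairwise_dists(random_projection(points, q, method, rng)))
            for _ in range(trials)]
    return max(ccps), min(ccps), sum(ccps) / len(ccps)


if __name__ == "__main__":
    rng = random.Random(1)
    p = 50
    # Hypothetical toy data: two tight clusters of 10 points each in R^p.
    data = [[rng.gauss(0.0, 0.01) for _ in range(p)] for _ in range(10)]
    data += [[1.0 + rng.gauss(0.0, 0.01) for _ in range(p)] for _ in range(10)]
    for method in ("gaussian", "sign"):
        for q in (25, 5, 2):
            print(method, q, run_trials(data, q, method, trials=20))
```

Keeping a separate seeded generator per call makes each run reproducible, which is convenient when the projection with the maximum or minimum CCp has to be regenerated for plotting.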


4.2 Method 1: Dasgupta and Gupta’s [2] N(0, 1)

4.2.1 Pearson Correlation Coefficient (CCp) and Spearman’s Correlation Coefficient (CCs) Tables and Histograms

Tables 4.1 and 4.2 show the Pearson and Spearman’s correlation coefficients of the two single-cluster data sets over 100 trials. We expect both coefficients to decrease as the target dimension decreases, because, with the number of points n fixed, a smaller JL bound q0 corresponds to a larger distortion ε, as discussed in section 2.2. In Tables 4.1 and 4.2, the means of both CCp and CCs for X11 and X12 are small, and they generally decrease as the embedding dimension q decreases. Also, in Table 4.1, the maximum CCp at q = 2 is 0.0590, while the minimum CCp at q = 5 is 0.0325. This suggests that, because the projection is random, a projection to a lower dimension may have a larger correlation coefficient than a projection to a higher dimension.

Table 4.1: CCp, CCs under 100 trials on Data Set X11

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 0.3582 | 0.2942 | 0.3265 | 1.4076 × 10^−4 | 0.3433 | 0.2807 | 0.3132 | 1.3408 × 10^−4 |
| 100 | 0.2922 | 0.2282 | 0.2554 | 1.1559 × 10^−4 | 0.2804 | 0.2183 | 0.2442 | 1.1043 × 10^−4 |
| 25 | 0.1698 | 0.0985 | 0.1312 | 1.2918 × 10^−4 | 0.1599 | 0.0938 | 0.1240 | 1.1580 × 10^−4 |
| 5 | 0.0796 | 0.0325 | 0.0544 | 1.4983 × 10^−4 | 0.0796 | 0.0281 | 0.0544 | 1.3346 × 10^−4 |
| 2 | 0.0590 | -0.0039 | 0.0384 | 1.1546 × 10^−4 | 0.0527 | -0.0051 | 0.0324 | 8.9627 × 10^−5 |

Table 4.2: CCp, CCs under 100 trials on Data Set X12

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 0.2080 | 0.1979 | 0.2029 | 4.3969 × 10^−6 | 0.0511 | -0.0290 | 0.0073 | 3.3218 × 10^−4 |
| 100 | 0.1608 | 0.1532 | 0.1563 | 2.5287 × 10^−6 | 0.0565 | -0.0454 | 0.0022 | 4.1022 × 10^−4 |
| 25 | 0.0804 | 0.0763 | 0.0789 | 6.6201 × 10^−7 | 0.0646 | -0.0504 | 0.0070 | 5.2019 × 10^−4 |
| 5 | 0.0363 | 0.0338 | 0.0353 | 2.1286 × 10^−7 | 0.0662 | -0.0451 | 0.0034 | 4.5145 × 10^−4 |
| 2 | 0.0233 | 0.0216 | 0.0224 | 1.4788 × 10^−7 | 0.0559 | -0.0561 | 0.0034 | 3.9857 × 10^−4 |

Since the same experiment was repeated 100 times, we have 100 Pearson and 100 Spearman’s correlation coefficients. We show the distribution histograms of these 100 trials at each embedding dimension.

(a) X11: CCp at q = 171

(b) X12: CCp at q = 171

(c) X11: CCp at q = 100

(d) X12: CCp at q = 100

(e) X11: CCp at q = 25

(f) X12: CCp at q = 25

(g) X11: CCp at q = 5

(h) X12: CCp at q = 5

(i) X11: CCp at q = 2

(j) X12: CCp at q = 2

Figure 4.3: M1: CCp distribution histograms of X11 and X12

In Figure 4.3, most of the CCps lie around the mean. In Figure 4.3(a), the CCps are centered roughly at 0.325, with the minimum CCp around 0.295 and the maximum around 0.359. This is consistent with the maximum, mean and minimum CCp in Table 4.1.

Next, we show the CCp/CCs tables and histograms for X21 and X22 .

Table 4.3: CCp, CCs under 100 trials on Data Set X21

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 1 | 1.0000 | 1.0000 | 9.3316 × 10^−27 | 0.8461 | 0.8265 | 0.8363 | 1.6738 × 10^−5 |
| 100 | 1 | 1.0000 | 1.0000 | 1.4321 × 10^−26 | 0.8277 | 0.8033 | 0.8174 | 1.7626 × 10^−5 |
| 25 | 1 | 1.0000 | 1.0000 | 1.2288 × 10^−26 | 0.7993 | 0.7757 | 0.7849 | 2.1143 × 10^−5 |
| 5 | 1 | 1.0000 | 1.0000 | 4.6498 × 10^−25 | 0.7756 | 0.7533 | 0.7650 | 2.2975 × 10^−5 |
| 2 | 1 | 1.0000 | 1.0000 | 5.5382 × 10^−20 | 0.7706 | 0.7457 | 0.7587 | 2.5624 × 10^−5 |

Table 4.4: CCp, CCs under 100 trials on Data Set X22

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 1 | 1.0000 | 1.0000 | 1.2441 × 10^−25 | 0.7626 | 0.7414 | 0.7521 | 2.0393 × 10^−5 |
| 100 | 1 | 1.0000 | 1.0000 | 1.5100 × 10^−25 | 0.7676 | 0.7392 | 0.7520 | 2.1799 × 10^−5 |
| 25 | 1 | 1.0000 | 1.0000 | 1.1159 × 10^−25 | 0.7624 | 0.7381 | 0.7508 | 2.8317 × 10^−5 |
| 5 | 1 | 1.0000 | 1.0000 | 1.3897 × 10^−25 | 0.7625 | 0.7401 | 0.7509 | 1.8713 × 10^−5 |
| 2 | 1 | 1.0000 | 1.0000 | 1.7473 × 10^−25 | 0.7622 | 0.7406 | 0.7513 | 1.7971 × 10^−5 |

Tables 4.3 and 4.4 show the Pearson and Spearman’s correlation coefficients of the two two-cluster data sets over 100 trials. CCp for both X21 and X22 is nearly equal to 1; “1.0000” in the tables represents a value slightly less than 1, for example 0.999999999998946. The variance of CCp at each target dimension is extremely small, ranging from 10^−27 to 10^−20. Compared to Tables 4.1 and 4.2, both CCp and CCs increase significantly. To see why, recall equation 3.2:

$$ r_p(D_X, D_Y) = \frac{\sum_{i=1}^{n} (d_{x_i} - \bar{d}_x)(d_{y_i} - \bar{d}_y)}{\sqrt{\sum_{i=1}^{n} (d_{x_i} - \bar{d}_x)^2}\,\sqrt{\sum_{i=1}^{n} (d_{y_i} - \bar{d}_y)^2}} $$

Here $\bar{d}_x$ is the mean of the pair-wise distances. Treating the within-cluster distances as 0 and every between-cluster distance as √1000, it is roughly

$$ \bar{d}_x \approx \frac{2\binom{500}{2} \cdot 0 + 500^2 \cdot \sqrt{1000}}{2\binom{500}{2} + 500^2} \approx 15.83. $$

Assuming that the projected points from the same cluster remain as compact as they were before the projection, each $d_{y_i}$ is either a value very close to zero or the distance between the two clusters, and $\bar{d}_y$ is another fixed number. Both distance vectors then take essentially two values in the same pattern, so $d_y$ is approximately a positive affine function of $d_x$, and the numerator and denominator of equation 3.2 are almost the same. This explains the increase of CCp and the “1.0000”s in Tables 4.3 and 4.4.
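A quick numeric check of this argument under the same idealization (within-cluster distances treated as 0 and every between-cluster distance equal to √1000). The helper names and the short distance vectors are hypothetical. Pearson’s coefficient is unchanged by any positive affine map, so two distance vectors that take essentially two values in the same pattern correlate at almost exactly 1.

```python
import math


def mean_two_cluster_distance(n1, n2, gap):
    """Mean pair-wise distance when within-cluster distances are taken as 0
    and every between-cluster distance equals `gap`."""
    within = math.comb(n1, 2) + math.comb(n2, 2)
    between = n1 * n2
    return (within * 0.0 + between * gap) / (within + between)


def pearson(x, y):
    """Pearson correlation coefficient (equation 3.2) of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    print(round(mean_two_cluster_distance(500, 500, math.sqrt(1000)), 2))  # 15.83
    # Hypothetical distance vectors: ~0 within clusters, large between clusters.
    dx = [0.01, 0.02, 31.60, 31.70, 31.65, 0.015]
    dy = [0.02, 0.01, 20.10, 20.00, 20.05, 0.03]
    print(pearson(dx, dy))   # very close to 1
    # An exact positive affine map gives 1 up to floating-point rounding.
    print(pearson(dx, [0.7 * v + 0.3 for v in dx]))
```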

Figure 4.5 shows the corresponding distribution plots.

(a) X21: CCp at q = 171

(b) X22: CCp at q = 171

(c) X21: CCp at q = 100

(d) X22: CCp at q = 100

(e) X21: CCp at q = 25

(f) X22: CCp at q = 25

(g) X21: CCp at q = 5

(h) X22: CCp at q = 5

(i) X21: CCp at q = 2

(j) X22: CCp at q = 2

Figure 4.5: M1: CCp distribution histograms of X21 and X22

Each distribution plot contains a spike. The horizontal-axis position of that spike is exactly 1. All other bars labelled “1” to its left correspond to the “1.0000” entries in Tables 4.3 and 4.4, i.e., values slightly less than 1. The differences between the CCps are so small (around 10^−12) that even MATLAB had difficulty labelling the horizontal axis.

4.2.2 Ensemble Distortion over 100 Times

The distortion ratio is the ratio between the pair-wise distances before and after the projection. We first show the importance of the scaling factor with an example. Figure 4.6 comes from a warm-up experiment we did prior to this project. The data set is a Gaussian with mean 0 and a diagonal covariance matrix whose diagonal elements all equal 0.5. The projection method is Achlioptas’ {−1, 0, 1}, which requires a scaling factor of √3 in the JL transformation equation. Both histograms are at q = 222, the JL limit we set for this experiment. Figure 4.6(a) is the distortion ratio histogram using √3 as the scaling factor, and Figure 4.6(b) is the distortion ratio histogram without the scaling factor. Note that we used squared distance ratios in this experiment. The center of Figure 4.6(a) is around 1 and that of Figure 4.6(b) is around 0.33, roughly 1/3 of the distortion ratio obtained with the scaling factor. This is expected: without the √3 factor, each entry of the {−1, 0, 1} matrix (drawn with probabilities 1/6, 2/3, 1/6) has variance 1/3, so squared distances shrink by a factor of 3 on average.

(a) Distortion ratio with a scaling factor at q = 222

(b) Distortion ratio without a scaling factor at q = 222

Figure 4.6: Scaling factor demonstration
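To illustrate why the √3 factor matters, here is a sketch assuming the standard sparse Achlioptas distribution (+1, 0, −1 with probabilities 1/6, 2/3, 1/6) and the transformation y = (s/√q)Rx. The sizes (p = 200, q = 100, n = 20) and function names are hypothetical and much smaller than the warm-up experiment at q = 222. Because both projections share the same R, the mean squared-distance ratio without the factor is exactly one third of the ratio with it.

```python
import math
import random
from itertools import combinations


def achlioptas_sparse(q, p, rng):
    """Entries +1, 0, -1 with probabilities 1/6, 2/3, 1/6 (before any sqrt(3) factor)."""
    return [[rng.choices((1.0, 0.0, -1.0), weights=(1, 4, 1))[0] for _ in range(p)]
            for _ in range(q)]


def project(points, R, scale):
    """y = (scale / sqrt(q)) * R x for every point x."""
    c = scale / math.sqrt(len(R))
    return [[c * sum(r * x for r, x in zip(row, pt)) for row in R] for pt in points]


def mean_sq_ratio(X, Y):
    """Mean squared-distance ratio ||y_i - y_j||^2 / ||x_i - x_j||^2 over all pairs."""
    ratios = [math.dist(Y[i], Y[j]) ** 2 / math.dist(X[i], X[j]) ** 2
              for i, j in combinations(range(len(X)), 2)]
    return sum(ratios) / len(ratios)


if __name__ == "__main__":
    rng = random.Random(0)
    p, q, n = 200, 100, 20
    # Toy data: independent N(0, 0.5) coordinates (standard deviation sqrt(0.5)).
    X = [[rng.gauss(0.0, math.sqrt(0.5)) for _ in range(p)] for _ in range(n)]
    R = achlioptas_sparse(q, p, rng)
    with_scale = mean_sq_ratio(X, project(X, R, math.sqrt(3)))
    without_scale = mean_sq_ratio(X, project(X, R, 1.0))
    print(round(with_scale, 2), round(without_scale, 2), round(with_scale / without_scale, 6))
```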


Now let us return to the experiments using method 1. After running the same experiment 100 times, we have 100 different arrays storing the ratios between the pair-wise distances; they differ because the projection matrices are generated randomly. We then pool the results of these 100 experiments, divide the range between the maximum and minimum ratios into 101 bins and draw a histogram of the ensemble distortion. Figures 4.9 and 4.12 are the ensemble distortion histograms over 100 trials for the four data sets at q = 171, 100, 25, 5 and 2.

Note that when q = 171 (the embedding dimension equals the JL bound), Figure 4.9(a)(b) show that the distortion ratios fall into [0.3, 1.7], which is consistent with the expected distortion ratio range [0.1, 1.9] set in section 3.2.1. What about lower embedding dimensions? From q = 171 down to 25, the histograms are almost symmetric about 1 and the distortion ratios lie in [0, 2]. At q = 5 and 2, the histograms start to shift to the left.
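The pooling and binning step can be sketched as below; all names are hypothetical. Since the real distance data are not reproduced here, the demo draws ratios from a stand-in distribution: assuming a Gaussian projection with 1/√q scaling, the squared-distance ratio of any single pair is distributed as χ²_q/q, which has mean 1 but mode (q − 2)/q. This is one way to read the leftward shift of the histograms at q = 5 and 2, although the thesis data themselves are not modelled here.

```python
import random


def ensemble_histogram(trial_ratios, bins=101):
    """Pool the ratio arrays of all trials and count them in `bins` equal-width
    bins spanning [min, max] of the pooled ratios. Returns (edges, counts)."""
    pooled = [r for ratios in trial_ratios for r in ratios]
    lo, hi = min(pooled), max(pooled)
    if hi == lo:
        raise ValueError("all ratios are equal; the bin width would be zero")
    width = (hi - lo) / bins
    counts = [0] * bins
    for r in pooled:
        # The maximum falls on the right edge, so clamp it into the last bin.
        counts[min(int((r - lo) / width), bins - 1)] += 1
    edges = [lo + k * width for k in range(bins + 1)]
    return edges, counts


def simulated_trials(q, trials=100, pairs=100, seed=0):
    """Stand-in data: under a Gaussian projection scaled by 1/sqrt(q), one pair's
    squared-distance ratio is a chi-square(q) variable divided by q."""
    rng = random.Random(seed)
    return [[sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(q)) / q for _ in range(pairs)]
            for _ in range(trials)]


def peak_center(edges, counts):
    """Center of the most populated bin."""
    k = max(range(len(counts)), key=counts.__getitem__)
    return 0.5 * (edges[k] + edges[k + 1])


if __name__ == "__main__":
    for q in (25, 5, 2):
        edges, counts = ensemble_histogram(simulated_trials(q))
        print(q, round(peak_center(edges, counts), 2))
```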

(a) X11 at q = 171

(b) X12 at q = 171

(c) X11 at q = 100

(d) X12 at q = 100

(e) X11 at q = 25

(f) X12 at q = 25

(g) X11 at q = 5

(h) X12 at q = 5

(i) X11 at q = 2

(j) X12 at q = 2

Figure 4.9: X11, X12: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2.

Similarly, Figure 4.12 shows the ensemble distortion ratio plots of the two two-cluster data sets X21 and X22. Unlike the smooth Gaussian-like distributions of X11 and X12, the histograms in Figure 4.12 show some sparsity added to the Gaussian shape.

(a) X21 at q = 171

(b) X22 at q = 171

(c) X21 at q = 100

(d) X22 at q = 100

(e) X21 at q = 25

(f) X22 at q = 25

(g) X21 at q = 5

(h) X22 at q = 5

(i) X21 at q = 2

(j) X22 at q = 2

Figure 4.12: X21, X22: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2.

Still, for q = 171 and 100, the histograms lie entirely within [0.4, 1.9]. The histograms at q = 25 are no longer within the JL bound, but they are still symmetric about 1 and lie within [0, 3]. At q = 2, Figure 4.12(i) and (j) no longer form a Gaussian-like distribution: the distortion ratios are highly concentrated around 0 and spread out as far as 5 for X21 and 4 for X22.

4.2.3 2D Scatter Plots

Scatter plots after random projection were something we were eager to see from the moment we decided to study random projections below the JL bound. 2D scatter plots are easy to interpret and give a direct view of the cluster structure of the data sets.

(a) X11: MaxCCp at q = 2

(b) X12: MaxCCp at q = 2

(c) X11: rescaled MaxCCp at q = 2

(d) X12: rescaled MaxCCp at q = 2

(e) X11: MinCCp at q = 2

(f) X12: MinCCp at q = 2

(g) X11: MaxCCs at q = 2

(h) X12: MaxCCs at q = 2

(i) X11: MinCCs at q = 2

(j) X12: MinCCs at q = 2

Figure 4.14: X11, X12: 2D scatter plots at q = 2.

In the 2D scatter plots, we want to see whether the points within the same cluster stay close to each other and whether points from different clusters stay far apart after the projection. Figure 4.14(a)(b) and (e) to (j) are the scatter plots of the projections that had the MaxCCp, MinCCp, MaxCCs and MinCCs for X11 and X12. The points are concentrated around the center of the plots, with few points near the edges. This indicates that the projected points can be considered a single cluster whose points are mostly close to its center. Also, almost all the plots are indistinguishable, and the coordinates on both the x and y axes are on the order of 10^−5.

Figure 4.14(c)(d) are the scatter plots of MaxCCp for X11 and X12 after their axes are rescaled to [0, 1]. We see a single dot at the origin: because all the projected points are very close to each other, Figure 4.14(c)(d) actually show all the points overlapping at the origin. So, although the distortion ratios at q = 2 are not entirely within the range [1 − ε, 1 + ε], the cluster structure is well preserved in the 2D scatter plots. This suggests that random projection with method 1 does a good job of keeping the cluster structure of the original data sets.

Now we show the scatter plots of the two-cluster data sets at q = 2. In the experiment, we colored the points of the two clusters differently. What will the 2D scatter plots look like? Will the two sets of colored points stay away from each other, or will they mix after the projection? Figure 4.16 shows the 2D scatter plots of X21 and X22.

All 8 plots in Figure 4.16 are almost indistinguishable. After random projection, the points in each cluster are so close to each other that we can see only 2 dots on each scatter plot. The blue dot denotes the first 500 points in the data set, which form cluster 1; the red dot denotes the next 500 points, which form cluster 2. On every plot the blue dot lies at the origin, while the red dot has different x and y coordinates and lies away from the blue dot. The blue and red dots can be visually recognized as two different clusters. Notice that the projected points within the same cluster are not identical, only very close to each other.

(a) X21: MaxCCp at q = 2

(b) X22: MaxCCp at q = 2

(c) X21: MinCCp at q = 2

(d) X22: MinCCp at q = 2

(e) X21: MaxCCs at q = 2

(f) X22: MaxCCs at q = 2

(g) X21: MinCCs at q = 2

(h) X22: MinCCs at q = 2

Figure 4.16: X21, X22: 2D scatter plots at q = 2.

4.3 Method 2: Achlioptas’ [3] {−1, 1}

In this section, we present the results of the CCp/CCs measurements, the ensemble distortion ratio histograms and the 2D scatter plots using Achlioptas’ {−1, 1} projection method. Some of the results are similar to those of method 1, while others are quite interesting.

4.3.1 Pearson Correlation Coefficient (CCp) and Spearman’s Correlation Coefficient (CCs) Tables

Tables 4.5 and 4.6 contain the CCp and CCs of X11 and X12 over 100 trials using method 2. As with method 1, the CCp and CCs generally decrease as the target dimension decreases. The tables do not clearly show which method has the higher correlation coefficient. For example, in Tables 4.1 and 4.5, when q = 171, the mean CCp is around 0.33 for both methods and the mean CCs is around 0.31 for both.

Table 4.5: CCp, CCs under 100 trials on Data Set X11

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 0.3460 | 0.3002 | 0.3276 | 1.0816 × 10^−4 | 0.3304 | 0.2870 | 0.3140 | 1.0051 × 10^−4 |
| 100 | 0.2854 | 0.2310 | 0.2566 | 1.3605 × 10^−4 | 0.2804 | 0.2197 | 0.2453 | 1.2294 × 10^−4 |
| 25 | 0.1658 | 0.0998 | 0.1314 | 1.7120 × 10^−4 | 0.1611 | 0.0934 | 0.1244 | 1.5661 × 10^−4 |
| 5 | 0.0909 | 0.0342 | 0.0611 | 1.3543 × 10^−4 | 0.0856 | 0.0311 | 0.0552 | 1.0339 × 10^−4 |
| 2 | 0.0663 | 0.0052 | 0.0354 | 1.7974 × 10^−4 | 0.0569 | 0.0065 | 0.0303 | 1.3002 × 10^−4 |

Table 4.6: CCp, CCs under 100 trials on Data Set X12

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 0.2812 | 0.2801 | 0.2807 | 5.8095 × 10^−8 | 0.0283 | 0.0202 | 0.0237 | 2.5079 × 10^−6 |
| 100 | 0.2188 | 0.2177 | 0.2182 | 5.0656 × 10^−8 | 0.0313 | 0.0216 | 0.0261 | 3.1279 × 10^−6 |
| 25 | 0.1115 | 0.1108 | 0.1111 | 1.6795 × 10^−8 | 0.0373 | 0.0205 | 0.0285 | 9.1225 × 10^−6 |
| 5 | 0.0501 | 0.0498 | 0.0499 | 4.6833 × 10^−9 | 0.0402 | 0.0163 | 0.0275 | 3.6130 × 10^−5 |
| 2 | 0.0317 | 0.0314 | 0.0316 | 3.2996 × 10^−9 | 0.0372 | -0.0017 | 0.0159 | 6.1422 × 10^−5 |

For data set X12 at q = 171, the mean CCp and CCs using method 2 are around 0.28 and 0.024 respectively, while those using method 1 are around 0.20 and 0.007. The differences between the two methods are small. Tables 4.7 and 4.8 show the CCp and CCs of the two two-cluster data sets X21 and X22 using method 2. As with method 1, CCp and CCs increase significantly compared to the results of the single-cluster experiments using the same projection method. The CCps of both X21 and X22 are very close to 1 for all target dimensions. Using method 2, the CCs of X21 is around 0.8 and the CCs of X22 is around 0.75.

Table 4.7: CCp, CCs under 100 trials on Data Set X21

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 1 | 1.0000 | 1.0000 | 1.0310 × 10^−26 | 0.8467 | 0.8232 | 0.8358 | 2.0194 × 10^−5 |
| 100 | 1 | 1.0000 | 1.0000 | 1.0484 × 10^−26 | 0.8266 | 0.8077 | 0.8175 | 1.7292 × 10^−5 |
| 25 | 1 | 1.0000 | 1.0000 | 1.8087 × 10^−26 | 0.7966 | 0.7673 | 0.7844 | 3.0538 × 10^−5 |
| 5 | 1 | 1.0000 | 1.0000 | 8.7129 × 10^−25 | 0.7740 | 0.7535 | 0.7642 | 2.1308 × 10^−5 |
| 2 | 1 | 1.0000 | 1.0000 | 1.2821 × 10^−21 | 0.7731 | 0.7475 | 0.7582 | 2.4217 × 10^−5 |

Table 4.8: CCp, CCs under 100 trials on Data Set X22

| q | CCp Max | CCp Min | CCp Mean | CCp Variance | CCs Max | CCs Min | CCs Mean | CCs Variance |
|---|---|---|---|---|---|---|---|---|
| 171 | 1.0000 | 1.0000 | 1.0000 | 1.8494 × 10^−25 | 0.7628 | 0.7439 | 0.7545 | 1.3555 × 10^−5 |
| 100 | 1.0000 | 1.0000 | 1.0000 | 1.6612 × 10^−25 | 0.7625 | 0.7460 | 0.7551 | 1.4377 × 10^−5 |
| 25 | 1.0000 | 1.0000 | 1.0000 | 1.9167 × 10^−25 | 0.7664 | 0.7442 | 0.7555 | 1.8225 × 10^−5 |
| 5 | 1.0000 | 1.0000 | 1.0000 | 1.8137 × 10^−25 | 0.7720 | 0.7456 | 0.7567 | 1.8225 × 10^−5 |
| 2 | 1.0000 | 1.0000 | 1.0000 | 1.9895 × 10^−25 | 0.7650 | 0.7458 | 0.7546 | 1.4422 × 10^−5 |

4.3.2 Ensemble Distortion over 100 Times

Next, we show the ensemble distortion ratio over 100 trials using method 2. Figure 4.19 contains the 100-trial ensemble distortion histograms for data sets X11 and X12 using method 2. All the distortion histograms of X11 are similar to those of method 1. However, the distortion histograms of X12 with Achlioptas’ {−1, 1} are sparser than those of method 1. At q = 171 and 100, the histograms still form a “Gaussian-like” distribution, but a sparser one. At q = 5, we see only 7 spikes with a “Gaussian-like” envelope, and at q = 2, only 3 high spikes. This is due to the construction of the Achlioptas projection matrix combined with the construction of X12. We explain this, along with the corresponding iVAT images and 2D scatter plots, in section 4.4.

(a) X11 at q = 171

(b) X12 at q = 171

(c) X11 at q = 100

(d) X12 at q = 100

(e) X11 at q = 25

(f) X12 at q = 25

(g) X11 at q = 5

(h) X12 at q = 5

(i) X11 at q = 2

(j) X12 at q = 2

Figure 4.19: X11, X12: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2.

Figure 4.22 shows the ensemble distortion ratio histograms over 100 trials for X21 and X22 using method 2. As with X11, the choice of projection method makes little difference for the “boxy” data sets X11 and X21.

(a) X21 at q = 171

(b) X22 at q = 171

(c) X21 at q = 100

(d) X22 at q = 100

(e) X21 at q = 25

(f) X22 at q = 25

(g) X21 at q = 5

(h) X22 at q = 5

(i) X21 at q = 2

(j) X22 at q = 2

Figure 4.22: X21, X22: ensemble distortion over 100 trials at q = 171, 100, 25, 5, 2.

Figure 4.22(a), (c), (e), (g) and (i) look like Gaussians with spikes on them. At q = 171, the distortion ratios lie within [0.5, 1.7], which is inside the range [0.1, 1.9]. Even at q = 100, the distortion ratios are still good, lying within [0.4, 1.9]. As the target dimension decreases, the distortion ratios extend to 3 at q = 5 and all the way to 5 at q = 2. Figure 4.22(b), (d), (f), (h) and (j) show the “spiky” distribution of X22 using method 2. Similarly, the distortion ratios at q = 171 and q = 100 are well within the set range [0.1, 1.9], and the distortion increases as the target dimension goes down. One interesting thing about X22 using method 2 is that at q = 5 and 2, the spikes are located at exactly the same places. We explain this in section 4.4.

4.3.3 2D Scatter Plots

Next, we demonstrate the 2D scatter plots using method 2.

(a) X11: MaxCCp at q = 2

(b) X12: MaxCCp at q = 2

(c) X11: MinCCp at q = 2

(d) X12: MinCCp at q = 2

(e) X11: MinCCp at q = 2

(f) X12: MinCCp at q = 2

(g) X11: MaxCCs at q = 2

(h) X12: MaxCCs at q = 2

Figure 4.24: X11, X12: 2D scatter plots at q = 2.

Figure 4.24(a)(c)(e)(g) of X11 look similar to those of method 1; if we rescale the plots, we see a single dot at the origin. However, all the scatter plots of X12 show an interesting “5-point” pattern: on each plot there is one point in the center and four other points at the four corners. Notice that each dot on the plot represents points with exactly the same x and y coordinates. A detailed explanation is given in section 4.4.

Next, we show the scatter plots of the two-cluster data sets X21 and X22 using method 2.

(a) X21: MaxCCp at q = 2

(b) X22: MaxCCp at q = 2

(c) X21: MinCCp at q = 2

(d) X22: MinCCp at q = 2

(e) X21: MaxCCs at q = 2

(f) X22: MaxCCs at q = 2

(g) X21: MinCCs at q = 2

(h) X22: MinCCs at q = 2

Figure 4.26: X21, X22: 2D scatter plots at q = 2.

As with method 1, all 8 plots in Figure 4.26 are almost indistinguishable. The blue dot denotes the first 500 points in the data set, which form cluster 1; the red dot denotes the next 500 points, which form cluster 2. The points within each cluster are very close to each other but do not have exactly the same x and y coordinates. On every plot the blue dot lies at the origin, and the blue and red dots can be visually recognized as two different clusters. From Figures 4.19 and 4.22, method 2 seems to be less reliable in terms of preserving the cluster structure of single-cluster data sets, while it works well with the two-cluster data sets in our experiment.

4.4 iVAT Images

In this section, we show the iVAT images of the data sets after random projection. We also discuss the unexpected ensemble distortion plots, iVAT images and 2D scatter plots of X12 using method 2.

Figure 4.27 is the iVAT image of X11 in R^1000.

Figure 4.27: iVAT of data set X11 at p = 1000

We cannot see any particular pattern in it. There is only a dashed line along the main diagonal of the image instead of the dark blocks we saw in section 3.4.2. This indicates that the data set does not contain multiple clusters.

Figure 4.28 demonstrates the iVAT image of two-cluster data set X21 .


Figure 4.28: iVAT of data set X21 at p = 1000

In Figure 4.28, we can see two large black blocks along the main diagonal of the image. This tells us that there are two potential clusters in the data set.

Since the iVAT images of X11 and X12 look exactly the same, as do those of X21 and X22, we do not show all of them here for simplicity. Also, iVAT images of single-cluster data sets carry no useful cluster information, so they are omitted in the following sections.

So, what do the iVAT images look like after a random projection?

Let us look at the results of method 1 first:

(a) iVAT image of X21 : MaxCCp at q = 2

(b) iVAT of X22 : MaxCCp at q = 2

Figure 4.29: iVAT of data set X21 and X22 at q = 2

Figure 4.29(a)(b) are representative of the iVAT images for all the two-cluster cases, for every q from 171 down to 2 and for both methods. The iVAT images of the data sets after random projection are sharp and clear. The two blocks in each image indicate that there are two potential clusters, which is consistent with the corresponding 2D scatter plots. We can say that the cluster information of the two two-cluster data sets is well preserved after random projection using either method 1 or method 2.

Now let us take a look at some interesting results of data set X12 using method 2.

(a) X12: scatter plot of MaxCCp at q = 2

(b) X12: iVAT image of MaxCCp at q = 2

Figure 4.30: iVAT of data set X12 at q = 2

Figure 4.30(b) is the iVAT image of X12 at MaxCCp, q = 2, using method 2. There is a tiny black singleton between the first two blocks (highlighted with a red circle). Figure 4.30(a) is the corresponding 2D scatter plot of the same data set. The iVAT image suggests that there are 5 potential clusters, not the single cluster we expect. Why is this happening?

The JL transformation of method 2 is Y = (1/√q)RX. After the JL transformation at q = 2, Y looks something like this:

$$ Y_{2 \times 1000} = \begin{bmatrix} a & a + r_{12}\frac{\delta}{\sqrt{2}} & a + r_{13}\frac{\delta}{\sqrt{2}} & \cdots & a + r_{1,1000}\frac{\delta}{\sqrt{2}} \\ b & b + r_{22}\frac{\delta}{\sqrt{2}} & b + r_{23}\frac{\delta}{\sqrt{2}} & \cdots & b + r_{2,1000}\frac{\delta}{\sqrt{2}} \end{bmatrix} $$

With a and b fixed, and because each rij is either −1 or 1, only 5 distinct points can exist: (a, b), (a + δ/√2, b + δ/√2), (a + δ/√2, b − δ/√2), (a − δ/√2, b + δ/√2) and (a − δ/√2, b − δ/√2). Note that the center point (a, b) in Figure 4.30(a) is the projection of the first point of data set X12. So, when q = 2, we found 2^2 + 1 = 5 clusters. This also explains why the iVAT image of X12 using method 2 has this unique “4 blocks with a singleton” structure.
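A sketch of this counting argument follows, under the assumption (hypothetical, based on the description of X12 in Chapter 5) that X12 is a base point plus points that each differ from it by δ in a single coordinate; p = 200 instead of 1000 keeps it fast, and the function names are illustrative. Every projected point is the projected base point plus δ/√q times one ±1 column of R, so at most 2^q + 1 distinct points can appear, and with enough points all of them usually do.

```python
import math
import random


def x12_like(p, delta, base_value=0.5):
    """Hypothetical X12-style data: a base point plus p - 1 points, each equal to
    the base point except for coordinate k, which is increased by delta."""
    base = [base_value] * p
    points = [base[:]]
    for k in range(1, p):
        pt = base[:]
        pt[k] += delta
        points.append(pt)
    return points


def sign_projection(points, q, rng):
    """Method 2: y = (1/sqrt(q)) R x with every entry of R drawn from {-1, +1}."""
    p = len(points[0])
    R = [[rng.choice((-1, 1)) for _ in range(p)] for _ in range(q)]
    s = 1.0 / math.sqrt(q)
    return [[s * sum(r * x for r, x in zip(row, pt)) for row in R] for pt in points]


def count_distinct(Y, ndigits=9):
    """Number of distinct projected points, rounding away floating-point noise."""
    return len({tuple(round(c, ndigits) for c in y) for y in Y})


if __name__ == "__main__":
    X = x12_like(p=200, delta=1.0)
    rng = random.Random(0)
    for q in (2, 3, 5):
        print(q, count_distinct(sign_projection(X, q, rng)), 2 ** q + 1)
```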

Recall from section 4.3.2 that the ensemble distortion ratio plots of X12 and X22 at q = 2 both form a structure of 3 “spikes” located at x = 0, 1 and 2. This is caused by the combined effect of the construction of data set X12 and the use of method 2. As mentioned earlier, the center point in Figure 4.30(a) is the first point of X12, whose squared Euclidean distance to any other point in X12 is δ² before the projection. After a random projection using method 2, the squared distance between the first point and any other point is still δ², since it equals δ²/q times the squared norm of a ±1 column of R, which is q. (Note that we used squared Euclidean distances in the experiment.) This results in a distortion ratio of 1. Similarly, the squared distance between any two points other than the first point is 2δ² before the projection, and after a random projection using method 2 it can only be 0, 2δ² or 4δ². This results in distortion ratios of 0, 1 or 2, which explains why the 3 “spikes” are located only at x = 0, 1 and 2. For the two-cluster data set X22, the distortion ratios 0, 1 and 2 still exist, but the distortion ratios of the pair-wise distances between points from different clusters are also added to the histogram.
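The spike positions can be checked with the same hypothetical X12-style model (δ = 1 and p = 60, both chosen only for illustration). For two points other than the first, the projected squared distance is (δ²/q)·4h, where h is the number of rows in which their two ±1 columns of R differ, so the ratio is 2h/q; pairs that include the first point always give exactly 1. For q = 5 this yields the values 0, 2/5, 4/5, 1, 6/5, 8/5 and 2 discussed in the text.

```python
import math
import random
from itertools import combinations


def x12_like(p, delta, base_value=0.5):
    """Hypothetical X12-style data: a base point plus p - 1 points, each differing
    from the base by +delta in exactly one coordinate."""
    base = [base_value] * p
    points = [base[:]]
    for k in range(1, p):
        pt = base[:]
        pt[k] += delta
        points.append(pt)
    return points


def sign_projection(points, q, rng):
    """Method 2: y = (1/sqrt(q)) R x, entries of R drawn from {-1, +1}."""
    p = len(points[0])
    R = [[rng.choice((-1, 1)) for _ in range(p)] for _ in range(q)]
    s = 1.0 / math.sqrt(q)
    return [[s * sum(r * x for r, x in zip(row, pt)) for row in R] for pt in points]


def ratio_values(points, Y, ndigits=6):
    """Set of distinct squared-distance ratios (rounded) over all pairs."""
    values = set()
    for i, j in combinations(range(len(points)), 2):
        before = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
        after = sum((a - b) ** 2 for a, b in zip(Y[i], Y[j]))
        values.add(round(after / before, ndigits))
    return values


if __name__ == "__main__":
    X = x12_like(p=60, delta=1.0)
    rng = random.Random(0)
    for q in (2, 5):
        print(q, sorted(ratio_values(X, sign_projection(X, q, rng))))
```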


So, what happens at q = 5?

Figure 4.31(a) shows the iVAT image of data set X12 at MaxCCp, q = 5, using method 2. We counted 32 blocks in the image. Based on what we found at q = 2, at q = 5 we should find 2^5 + 1 = 33 clusters. We think the singleton may be too small to be seen in the iVAT image, and the result of a 4-connected component labeling algorithm supports this: in Figure 4.31(b), the singleton is located at the bottom-right corner of the iVAT image, on the main diagonal. We therefore suggest that 2^q + 1 is an upper bound on the number of clusters that can be found in the iVAT images of data constructed like X12 when using Achlioptas’ projection method. Similarly to q = 2, at q = 5 the “spikes” are located at x = 0, 2/5, 4/5, 1, 6/5, 8/5 and 2.
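The text does not specify how the 4-connected component labeling was applied. One plausible sketch, assuming the iVAT image is first thresholded so that dark (low-dissimilarity) pixels become foreground, is shown below; the threshold and the function names are hypothetical. 4-connectivity matters here: neighbouring diagonal blocks of an iVAT image touch only at a corner, so 8-connectivity would merge them, while 4-connectivity keeps even a 1 × 1 singleton block as its own component.

```python
from collections import deque


def label_4_connected(mask):
    """Label 4-connected components of True cells in a 2-D boolean grid.

    Returns (labels, count); labels[r][c] is 0 for background, else 1..count.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if mask[r0][c0] and labels[r0][c0] == 0:
                count += 1
                labels[r0][c0] = count
                queue = deque([(r0, c0)])
                while queue:  # breadth-first flood fill over up/down/left/right
                    r, c = queue.popleft()
                    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        nr, nc = r + dr, c + dc
                        if (0 <= nr < rows and 0 <= nc < cols
                                and mask[nr][nc] and labels[nr][nc] == 0):
                            labels[nr][nc] = count
                            queue.append((nr, nc))
    return labels, count


def count_dark_blocks(image, threshold):
    """Count dark (low-dissimilarity) regions of an iVAT image after thresholding."""
    mask = [[v <= threshold for v in row] for row in image]
    return label_4_connected(mask)[1]


if __name__ == "__main__":
    # Toy iVAT-like image: dark blocks of sizes 3, 1 (a singleton) and 2.
    block = [0, 0, 0, 1, 2, 2]
    image = [[0.0 if block[i] == block[j] else 1.0 for j in range(6)] for i in range(6)]
    print(count_dark_blocks(image, threshold=0.5))   # 3
```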

(a) iVAT of data set X12 at q = 5

(b) Reconstructed matrix using 4-connected component labeling

Figure 4.31: iVAT of data set X12 at q = 5

Chapter 5

Summary

To explore the properties and performance of random projections below the JL limit, we built 4 synthetic data sets in R^1000 and used Dasgupta and Gupta’s [2] N(0, 1) Gaussian projection method and Achlioptas’ [3] {−1, 1} projection method. We measured the Pearson and Spearman’s correlation coefficients and the distortion ratios of pair-wise distances. We also drew ensemble distance distortion histograms, iVAT images and 2D scatter plots to study the changes in cluster structure after random projection. We draw the following conclusions from our experimental results.

1. The four synthetic data sets we built worked well for this study. Data sets X12 and X22 helped reveal some problems with Achlioptas’ [3] {−1, 1} projection method. This is due to the unique construction of data set X12 (only one coordinate of each point was modified relative to the coordinates of the first point). They also helped us understand why the unexpected “5-point” structure forms for X12 using method 2 (the distance between the first point and any other point is δ, and the distance between any two points other than the first point is √2δ). Compared to X11 and X21, X12 and X22 are more compact data sets, i.e., they can more easily be recognized as a cluster or clusters in R^1000.

2. Dasgupta and Gupta’s [2] N(0, 1) Gaussian projection method works better than Achlioptas’ [3] {−1, 1} method when the embedding dimension q is below the JL bound. The experimental results at the JL bound (q = 171 in our experiment) are consistent with the JL lemma: all distance distortion ratios are within [1 − ε, 1 + ε]. Both projection methods work well with the two-cluster data sets in terms of preserving cluster structure. The 2D scatter plots of X12 show an unexpected “5-point” pattern using Achlioptas’ method, and the iVAT images show the corresponding “5-cluster” structure. We conclude that the cluster structure of data set X12 is not well preserved when using method 2.

3. The numerical values of the Pearson and Spearman’s correlation coefficients indicate that the distance distortion increases as the target dimension decreases. Both correlation coefficients increase significantly from the single-cluster data sets to the multiple-cluster data sets. However, our experiments are not sufficient to tell whether the Pearson and Spearman’s correlation coefficients can be used to indicate a good or bad random projection; further studies are needed to answer this question.

4. The iVAT images are sharp and clear in our experiments. This is possibly due to the way we built the data sets: we tried to make the points within each cluster as compact as possible and the points from different clusters far from each other.

6. An obvious advantage of random projections over other feature extraction tools is the simplicity of constructing the projection matrices and computing the transformation; it is fast and computationally efficient. Our limited experiments suggest that random projection below the JL bound is feasible and relatively reliable for obtaining cluster information about data sets. However, many more experiments can be done to find out how to obtain better projections. Future work could also combine random projection with existing algorithms to build powerful tools for different applications.

Next, we show the additional experiments we did with PCA and random projections on the Gaussian-based data sets.

5.0.1 PCA Experiments

Table 5.1 shows the CCp and CCs for data sets X11 and X21 using PCA.

Table 5.1: CCp, CCs of PCA on Data Sets X11 and X21

X11
q      171     100     25      5       2
ccp    0.7995  0.6595  0.3893  0.1921  0.1207
ccs    0.7847  0.6401  0.3686  0.1732  0.1024

X21
q      171     100     25      5       2
ccp    1       1       1       1       1
ccs    0.9718  0.9551  0.9179  0.8952  0.8847

Table 5.1 shows that the Pearson and Spearman’s correlation coefficients of data set X21 are similar to those obtained with random projections: the Pearson correlation coefficient reaches 1, and Spearman’s correlation coefficient stays high (above 0.88) for q below the JL limit. However, on the single-cluster data set X11, both coefficients drop from around 0.8 to around 0.1 as q decreases from 171 to 2.
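The coefficients in Table 5.1 compare distances before and after dimensionality reduction. The minimal NumPy sketch below assumes that ccp and ccs are the Pearson and Spearman correlation coefficients between the vectors of all pairwise Euclidean distances in the original space and in the reduced space. The helper names are hypothetical, and tied ranks are not averaged. That is acceptable for continuous distances but is a simplification of the full Spearman definition.

```python
import numpy as np


def pairwise_distances(X):
    """Return the condensed vector of Euclidean distances over all pairs i < j."""
    sq = np.sum(X * X, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (X @ X.T)
    np.maximum(d2, 0.0, out=d2)          # clip tiny negative values caused by round-off
    i, j = np.triu_indices(X.shape[0], k=1)
    return np.sqrt(d2[i, j])


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length vectors."""
    return float(np.corrcoef(a, b)[0, 1])


def spearman(a, b):
    """Spearman coefficient as the Pearson coefficient of the ranks (no tie averaging)."""
    rank_a = np.argsort(np.argsort(a))
    rank_b = np.argsort(np.argsort(b))
    return pearson(rank_a, rank_b)


def cc_scores(X, Y):
    """Hypothetical helper returning (ccp, ccs) between data X and its reduced version Y."""
    d_orig = pairwise_distances(X)
    d_proj = pairwise_distances(Y)
    return pearson(d_orig, d_proj), spearman(d_orig, d_proj)


rng = np.random.default_rng(0)
X = rng.standard_normal((200, 50))
print(cc_scores(X, X[:, :5]))            # keep only 5 of the 50 coordinates
```

The same function applies to any q-dimensional result, whether it comes from PCA or from a random projection. Only the distances are compared, so the orientation of the reduced coordinates does not matter.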

Figures 5.1(a) and (b) show the corresponding 2D scatter plots of data sets X11 and X21 at q = 2.


(a) PCA 2D scatter plot of X11

(b) PCA 2D scatter plot of X21

Figure 5.1: PCA scatter plots of X11 and X21 at q = 2

In Figure 5.1, the 2D scatter plot of X11 obtained with PCA is similar to the ones obtained with method 1 and method 2: after PCA, the 1000 points are close to each other and form a single cloud. Figure 5.1(b), however, shows a quite unusual distribution. The x-coordinates of the points are either around −16 or around 16, while the y-coordinates spread from $-3 \times 10^{-6}$ to $3 \times 10^{-6}$. PCA orders the new coordinates by the eigenvectors of the covariance matrix that correspond to the largest eigenvalues. In the 2D plot, the x-axis is the first principal component, which is the eigenvector pointing from cluster 1 to cluster 2. The spread of the points along this component reflects the Euclidean distance between the first 500 points and the next 500 points, which is roughly $\sqrt{1000} \approx 32$. Indeed, the gap between the two groups along the x-axis is roughly $16 - (-16) = 32$. Similarly, the y-axis is the second principal component, which lies along a direction of variation within the clusters. The maximum difference between the y-coordinates is roughly $3 \times 10^{-6} - (-3 \times 10^{-6}) = 6 \times 10^{-6}$, which is less than the largest possible Euclidean distance between two points within a cluster, $\sqrt{1000 \cdot (2 \times 10^{-6})^2} \approx 6.3 \times 10^{-5}$. Note that the red dots are the first 500 points and the blue dots are the next 500 points.
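The argument about the principal components of X21 can be checked numerically. The sketch below does not reproduce X21 itself. It assumes a hypothetical stand-in that is consistent with the numbers quoted above: 500 points near the origin and 500 points near (1, 1, ..., 1) in R^1000, with every coordinate perturbed by uniform noise in [−10^-6, 10^-6]. The principal components are taken from the SVD of the centered data.

```python
import numpy as np

rng = np.random.default_rng(0)
p, half = 1000, 500
# Hypothetical stand-in for X21: two tight clusters whose centers differ by 1 in every coordinate.
X = rng.uniform(-1e-6, 1e-6, size=(2 * half, p))
X[half:] += 1.0                          # second cluster centered at (1, 1, ..., 1)

# PCA via the SVD of the centered data; the rows of Vt are the principal directions.
Xc = X - X.mean(axis=0)
_, _, Vt = np.linalg.svd(Xc, full_matrices=False)
scores = Xc @ Vt[:2].T                   # coordinates on the first two components (q = 2)

# |PC1 score| is about sqrt(1000) / 2, roughly 15.8, giving a gap of about 32.
print(np.abs(scores[:, 0]).mean())
# Spread along PC2: tiny compared with the gap along PC1.
print(scores[:, 1].max() - scores[:, 1].min())
```

The first component separates the two clusters at about ±15.8. The second component only sees the noise, so its spread stays below the within-cluster bound of about 6.3 × 10^-5.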

5.0.2 Gaussian-based data set

We discussed earlier why we did not use Gaussian-based data sets as our primary data sets for studying random projections below the JL limit: in a high-dimensional space, a large number of the points of a Gaussian-based data set lie far away from its mean. In practice, however, we do not usually build data sets to fit the tools or algorithms; it is usually the other way around. Gaussian-based data sets are more realistic representations of real-world data sets. Here, we test method 1 on a well-separated two-cluster Gaussian-based data set.

We constructed the data set as follows:

There are 500 points from each Gaussian cluster in the data set. The first cluster is centered at (−3, −3, ..., −3), and its covariance matrix is diagonal, with the values 0.1 and 1 alternating along the main diagonal. The second cluster has the same covariance matrix but is centered at (3, 3, ..., 3). Note that these two clusters are well separated in R^1000. We call this data set X31. We want to know whether, after random projection, X31 still gives a well-separated two-cluster 2D scatter plot and a corresponding two-cluster iVAT image.
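The construction of X31 fits in a few lines of NumPy. This is a sketch based on the description above. It assumes that the diagonal entries are variances and that the alternation starts with 0.1. The function name and the random seed are hypothetical.

```python
import numpy as np


def make_x31(n_per_cluster=500, p=1000, seed=0):
    """Hypothetical generator for a data set like X31: two Gaussian clusters in R^p."""
    rng = np.random.default_rng(seed)
    # Diagonal covariance with variances 0.1, 1, 0.1, 1, ... along the main diagonal.
    variances = np.where(np.arange(p) % 2 == 0, 0.1, 1.0)
    std = np.sqrt(variances)             # independent coordinates: scale each by its std
    c1 = rng.standard_normal((n_per_cluster, p)) * std - 3.0   # centered at (-3, ..., -3)
    c2 = rng.standard_normal((n_per_cluster, p)) * std + 3.0   # centered at (3, ..., 3)
    X = np.vstack([c1, c2])
    labels = np.repeat([0, 1], n_per_cluster)   # first 500 rows: cluster 0, next 500: cluster 1
    return X, labels


X31, labels = make_x31()
center_gap = np.linalg.norm(X31[labels == 1].mean(axis=0) - X31[labels == 0].mean(axis=0))
print(center_gap)                        # close to 6 * sqrt(1000), about 189.7
```

The variances add up to 500 · 0.1 + 500 · 1 = 550, so the typical distance between two points of the same cluster is about √(2 · 550) ≈ 33. This is far smaller than the gap of about 190 between the cluster centers.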

Figures 5.2(a) and (b) show the 2D scatter plots of data set X31 after projection with method 1.


(a) 2D scatter plot of X31 at MaxCCp = 0.9828

(b) 2D scatter plot of X31 at MinCCp = 0.0126

Figure 5.2: M1: scatter plots of X31 at the max/min ccp

Figure 5.2(a) shows the 2D scatter plot at ccp = 0.9828, the maximum value of ccp over 100 trials. The red dots denote the first 500 points and the blue dots denote the next 500 points. The points within each cluster are close to each other, and points from different clusters are far away from each other, so we consider this a good projection. However, at ccp = 0.0126, the minimum value of ccp, the first 500 and the next 500 points are highly mixed. We consider this a bad projection.
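The selection of the best and worst of 100 trials can be sketched as follows. The sketch assumes that method 1 is a Gaussian random projection (N(0, 1) entries scaled by 1/√q) and that ccp is the Pearson coefficient between the original and the projected pairwise distances. Function and argument names are hypothetical. The variance is the population variance (ddof = 0), and this convention is also an assumption. Because ccp is unchanged when all projected distances are multiplied by the same positive constant, the 1/√q factor does not affect it.

```python
import numpy as np


def pairwise_distances(X):
    """Condensed vector of Euclidean distances over all pairs i < j."""
    sq = np.sum(X * X, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * (X @ X.T), 0.0)
    i, j = np.triu_indices(X.shape[0], k=1)
    return np.sqrt(d2[i, j])


def ccp_trials(X, q, n_trials=100, seed=0):
    """Hypothetical helper: ccp of n_trials independent Gaussian projections into R^q.

    Returns all ccp values plus (ccp, Y) for the best and the worst projection.
    """
    rng = np.random.default_rng(seed)
    d_orig = pairwise_distances(X)       # computed once, reused for every trial
    values, best, worst = [], None, None
    for _ in range(n_trials):
        R = rng.standard_normal((X.shape[1], q)) / np.sqrt(q)
        Y = X @ R
        ccp = float(np.corrcoef(d_orig, pairwise_distances(Y))[0, 1])
        if best is None or ccp > best[0]:
            best = (ccp, Y)
        if worst is None or ccp < worst[0]:
            worst = (ccp, Y)
        values.append(ccp)
    return np.array(values), best, worst


# Small illustrative two-cluster data set (not X31 itself).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-3.0, 1.0, (100, 200)), rng.normal(3.0, 1.0, (100, 200))])
values, best, worst = ccp_trials(X, q=2, n_trials=20)
print(values.max(), values.min(), values.mean(), values.var())
```

The projections stored in `best` and `worst` are the ones that would be drawn as scatter plots and iVAT images, as in Figures 5.2 and 5.3.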

Figure 5.3 shows the corresponding iVAT images. At q = 5 and ccp = 0.6048, we start to see bad projections, in which the two equal-sized blocks along the diagonal no longer appear. The clear and sharp iVAT images at the maximum ccp for q = 5 and q = 2 indicate that there are two potential clusters.
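The iVAT images in Figure 5.3 can be reproduced in outline with the sketch below. It uses the usual VAT reordering, a Prim-style minimum-spanning-tree order that starts from a point involved in the largest dissimilarity. This is followed by the recursive iVAT transformation described by Havens and Bezdek, in which each entry becomes the largest edge on the minimum-spanning-tree path between the two points. This is an illustrative reimplementation under those assumptions, not the code used to produce the figures, and the function names are hypothetical.

```python
import numpy as np


def vat_order(D):
    """VAT ordering: Prim's MST order, starting from a row of the largest dissimilarity."""
    n = D.shape[0]
    start = int(np.unravel_index(np.argmax(D), D.shape)[0])
    order = [start]
    remaining = np.ones(n, dtype=bool)
    remaining[start] = False
    dist_to_set = D[start].copy()        # distance from each point to the visited set
    for _ in range(n - 1):
        candidates = np.where(remaining)[0]
        nxt = int(candidates[np.argmin(dist_to_set[candidates])])
        order.append(nxt)
        remaining[nxt] = False
        dist_to_set = np.minimum(dist_to_set, D[nxt])
    return np.array(order)


def ivat(D):
    """Return (iVAT matrix, VAT order): minimax path distances in VAT order."""
    order = vat_order(D)
    Ds = D[np.ix_(order, order)]         # VAT-reordered dissimilarity matrix
    n = Ds.shape[0]
    Dp = np.zeros_like(Ds)
    for r in range(1, n):
        j = int(np.argmin(Ds[r, :r]))    # MST parent of point r among the earlier points
        # Path from r to any earlier point c goes through j: take the larger edge.
        Dp[r, :r] = np.maximum(Ds[r, j], Dp[j, :r])
        Dp[:r, r] = Dp[r, :r]            # keep the matrix symmetric
    return Dp, order
```

For a projected data set, D is the n × n matrix of Euclidean distances between the projected points. Displaying the returned matrix as a grayscale image gives the iVAT image, in which dark blocks along the diagonal suggest clusters.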


(a) iVAT image of X31 at MaxCCp = 0.9925 and q = 5

(b) iVAT image of X31 at MinCCp = 0.6048 and q = 5

(c) iVAT image of X31 at MaxCCp = 0.9828 and q = 2

(d) iVAT image of X31 at MinCCp = 0.0126 and q = 2

Figure 5.3: M1: iVAT images of X31 at max/min ccp and q = 5 and 2

Table 5.2 lists the maximum, minimum, mean, and variance of ccp and ccs on X31 over 100 trials of method 1, for q from 171 down to 2.


Table 5.2: CCp, CCs under 100 trials on Data Set X31

CCp
q      Max     Min     Mean    Variance
171    0.9994  0.9989  0.9993  7.4116 × 10^-9
100    0.9991  0.9982  0.9987  3.9451 × 10^-8
25     0.9976  0.9887  0.9947  2.462 × 10^-6
5      0.9925  0.6048  0.9557  0.0029
2      0.9828  0.0126  0.8362  0.0426

CCs
q      Max     Min     Mean    Variance
171    0.8648  0.8442  0.8537  1.7754 × 10^-5
100    0.8503  0.8184  0.8322  2.7588 × 10^-5
25     0.8070  0.7763  0.7928  3.4428 × 10^-5
5      0.7823  0.5711  0.7654  4.7894 × 10^-4

2

0.7753

0.0310