TEST OF ENGLISH AS A FOREIGN LANGUAGE

Research

Reports

REPORT 65

JUNE 2000

Monitoring Sources of Variability

Within the Test of Spoken

English Assessment System

Carol M. Myford

Edward W. Wolfe

Monitoring Sources of Variability Within the Test of Spoken English Assessment System

Carol M. Myford

Edward W. Wolfe

Educational Testing Service

Princeton, New Jersey

RR-00-6

®

®

®

Educational Testing Service is an Equal Opportunity/Affirmative Action Employer.

Copyright © 2000 by Educational Testing Service. All rights reserved.

No part of this report may be reproduced or transmitted in any form or by any means,

electronic or mechanical, including photocopy, recording, or any information storage

and retrieval system, without permission in writing from the publisher. Violators will be

prosecuted in accordance with both U.S. and international copyright laws.

EDUCATIONAL TESTING SERVICE, ETS, the ETS logo, GRE, TOEFL, the TOEFL

logo, TSE, and TWE are registered trademarks of Educational Testing Service. The

modernized ETS logo is a trademark of Educational Testing Service.

FACETS Software is copyrighted by MESA Press, University of Chicago.

Abstract

The purposes of this study were to examine four sources of variability within the

Test of Spoken English (TSE®) assessment system, to quantify ranges of variability for

each source, to determine the extent to which these sources affect examinee performance,

and to highlight aspects of the assessment system that might suggest a need for change.

Data obtained from the February and April 1997 TSE scoring sessions were analyzed

using Facets (Linacre, 1999a).

The analysis showed that, for each of the two TSE administrations, the test

usefully separated examinees into eight statistically distinct proficiency levels. The

examinee proficiency measures were found to be trustworthy in terms of their precision

and stability. It is important to note, though, that the standard error of measurement varies

across the score distribution, particularly in the tails of the distribution.

The items on the TSE appear to work together; ratings on one item correspond

well to ratings on the other items. Yet, none of the items seem to function in a redundant

fashion. Ratings on individual items within the test can be meaningfully combined; there

is little evidence of psychometric multidimensionality in the two data sets. Consequently,

it is appropriate to generate a single summary measure to capture the essence of examinee

performance across the 12 items. However, the items differ little in terms of difficulty,

thus limiting the instrument’s ability to discriminate among levels of proficiency.

The TSE rating scale functions as a five-point scale, and the scale categories are

clearly distinguishable. The scale maintains a similar though not identical category

structure across all 12 items. Raters differ somewhat in the levels of severity they

exercise when they rate examinee performances. The vast majority used the scale in a

consistent fashion, though. If examinees’ scores were adjusted for differences in rater

severity, the scores of two-thirds of the examinees in these administrations would have

differed from their raw score averages by 0.5 to 3.6 raw score points. Such differences

can have important consequences for examinees whose scores lie in critical decisionmaking regions of the score distribution.

Key words:

oral assessment, second language performance assessment, Item Response

Theory (IRT), rater performance, Rasch Measurement, Facets

i

The Test of English as a Foreign Language (TOEFL®) was developed in 1963 by the National Council

on the Testing of English as a Foreign Language. The Council was formed through the cooperative

effort of more than 30 public and private organizations concerned with testing the English proficiency

of nonnative speakers of the language applying for admission to institutions in the United States. In

1965, Educational Testing Service (ETS®) and the College Board assumed joint responsibility for the

program. In 1973, a cooperative arrangement for the operation of the program was entered into by ETS,

the College Board, and the Graduate Record Examinations (GRE®) Board. The membership of the

College Board is composed of schools, colleges, school systems, and educational associations; GRE

Board members are associated with graduate education.

ETS administers the TOEFL program under the general direction of a Policy Council that was

established by, and is affiliated with, the sponsoring organizations. Members of the Policy Council

represent the College Board, the GRE Board, and such institutions and agencies as graduate schools

of business, junior and community colleges, nonprofit educational exchange agencies, and agencies

of the United States government.

✥

✥

✥

A continuing program of research related to the TOEFL test is carried out under the direction of the

TOEFL Committee of Examiners. Its 11 members include representatives of the Policy Council, and

distinguished English as a second language specialists from the academic community. The Committee

meets twice yearly to review and approve proposals for test-related research and to set guidelines for

the entire scope of the TOEFL research program. Members of the Committee of Examiners serve

three-year terms at the invitation of the Policy Council; the chair of the committee serves on the Policy

Council.

Because the studies are specific to the TOEFL test and the testing program, most of the actual research

is conducted by ETS staff rather than by outside researchers. Many projects require the cooperation

of other institutions, however, particularly those with programs in the teaching of English as a foreign

or second language and applied linguistics. Representatives of such programs who are interested in

participating in or conducting TOEFL-related research are invited to contact the TOEFL program

office. All TOEFL research projects must undergo appropriate ETS review to ascertain that data

confidentiality will be protected.

Current (1999-2000) members of the TOEFL Committee of Examiners are:

Diane Belcher

Richard Berwick

Micheline Chalhoub-Deville

JoAnn Crandall (Chair)

Fred Davidson

Glenn Fulcher

Antony J. Kunnan (Ex-Officio)

Ayatollah Labadi

Reynaldo F. Macías

Merrill Swain

Carolyn E. Turner

The Ohio State University

Ritsumeikan Asia Pacific University

University of Iowa

University of Maryland, Baltimore County

University of Illinois at Urbana-Champaign

University of Surrey

California State University, LA

Institut Superieur des Langues de Tunis

University of California, Los Angeles

The University of Toronto

McGill University

To obtain more information about TOEFL programs and services, use one of the following:

E-mail: toefl@ets.org

Web site: http://www.toefl.org

ii

Acknowledgments

This work was supported by the Test of English as a Foreign Language (TOEFL)

Research Program at Educational Testing Service.

We are grateful to Daniel Eignor, Carol Taylor, Gwyneth Boodoo, Evelyne Aguirre

Patterson, Larry Stricker, and the TOEFL Research Committee for helpful comments on

an earlier draft of the paper. We especially thank the readers of the Test of Spoken

English and the program’s administrative personnel—Tony Ostrander, Evelyne Aguirre

Patterson, and Pam Esbrandt—without whose cooperation this project could never have

succeeded.

iii

Table of Contents

Introduction......................................................................................................................1

Rationale for the Study ....................................................................................................2

Review of the Literature ..................................................................................................3

Method .............................................................................................................................5

Examinees ............................................................................................................5

Instrument ............................................................................................................6

Raters and the Rating Process..............................................................................6

Procedure .............................................................................................................7

Results..............................................................................................................................9

Examinees ............................................................................................................11

Items.....................................................................................................................20

TSE Rating Scale .................................................................................................24

Raters ...................................................................................................................34

Conclusions......................................................................................................................43

Examinees ............................................................................................................43

Items.....................................................................................................................44

TSE Rating Scale .................................................................................................45

Raters ...................................................................................................................45

Next Steps ............................................................................................................46

References........................................................................................................................48

Appendix..........................................................................................................................51

iv

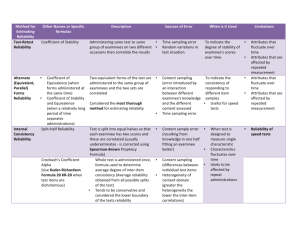

List of Tables

Page

Table 1. Distribution of TSE Examinees Across Geographic Locations........................5

Table 2. TSE Rating Scale ..............................................................................................8

Table 3. Misfitting and Overfitting Examinees from the February and April 1997

TSE Administrations........................................................................................................16

Table 4. Rating Patterns and Fit Indices for Selected Examinees...................................17

Table 5. Examinees from the February 1997 TSE Administration Identified as

Having Suspect Rating Patterns.......................................................................................19

Table 6. Examinees from the April 1997 TSE Administration Identified as

Having Suspect Rating Patterns.......................................................................................20

Table 7. Item Measurement Report for the February 1997 TSE Administration ...........21

Table 8. Item Measurement Report for the April 1997 TSE Administration .................21

Table 9. Rating Scale Category Calibrations for the February 1997 TSE Items ............25

Table 10. Rating Scale Category Calibrations for the April 1997 TSE Items ................25

Table 11. Average Examinee Proficiency Measures and Outfit Mean-Square

Indices for the February 1997 TSE Items ........................................................................28

Table 12. Average Examinee Proficiency Measures and Outfit Mean-Square

Indices for the April 1997 TSE Items ............................................................................. 29

Table 13. Frequency and Percentage of Examinee Ratings in Each Category for

the February 1997 TSE Items ..........................................................................................30

Table 14. Frequency and Percentage of Examinee Ratings in Each Category for

the April 1997 TSE Items ................................................................................................31

Table 15. Rating Patterns and Fit Indices for Selected Examinees.................................33

Table 16. Summary Table for Selected TSE Raters ........................................................35

v

Table 17. Effects of Adjusting for Rater Severity on Examinee Raw Score

Averages, February 1997 TSE Administration ................................................................37

Table 18. Effects of Adjusting for Rater Severity on Examinee Raw Score

Averages, April 1997 TSE Administration......................................................................37

Table 19. Frequencies and Percentages of Rater Mean-Square Fit Indices for the

February 1997 TSE Data..................................................................................................39

Table 20. Frequencies and Percentages of Rater Mean-Square Fit Indices for the

April 1997 TSE Data .......................................................................................................39

Table 21. Frequencies of Inconsistent Ratings for February 1997 TSE Raters ..............40

Table 22. Frequencies of Inconsistent Ratings for April 1997 TSE Raters ...................41

Table 23. Rater Effect Criteria...................................................………………………..42

Table 24. Rater Effects for February 1997 TSE Data......................................................42

Table 25. Rater Effects for April 1997 TSE Data............................................................42

Appendix. TSE Band Descriptor Chart............................................................................51

vi

List of Figures

Page

Figure 1. Map from the Facets Analysis of the Data from the February 1997 TSE

Administration .................................................................................................................12

Figure 2. Map from the Facets Analysis of the Data from the April 1997 TSE

Administration .................................................................................................................13

Figure 3. Category Probability Curves for Items 4 and 8 (February Test)......................26

Figure 4. Category Probability Curves for Items 4 and 7 (April Test)............................26

vii

Introduction

Those in charge of monitoring quality control for complex assessment systems need

information that will help them determine whether all aspects of the system are working as

intended. If there are problems, they must pinpoint those particular aspects of the system that are

out of synch so that they can take meaningful, informed steps to improve the system. They need

answers to critical questions such as: Do some raters rate more severely than other raters? Do

any raters use the rating scales inconsistently? Are there examinees that exhibit unusual profiles

of ratings across items? Are the rating scales functioning appropriately? Answering these kinds

of questions requires going beyond interrater reliability coefficients and analysis of variance

main effects to understand the impact of the assessment system on individual raters, examinees,

assessment items, and rating scales. Data analysis approaches that provide only group-level

statistics are of limited help when one’s goal is to refine a complex assessment system. What is

needed for this purpose is information at the level of the individual rater, examinee, item, and

rating scale. The present study illustrates an approach to the analysis of rating data that provides

this type of information. We analyzed data from two 1997 administrations of the revised Test of

Spoken English (TSE®) using Facets (Linacre, 1999a), a Rasch-based computer software

program, to provide information to TSE program personnel for quality control monitoring.

In the beginning of this report, we provide a rationale for the study and lay out the

questions that focused our investigation. Next, we present a review of literature, discussing

previous studies that have used many-facet Rasch measurement to investigate complex rating

systems for evaluating speaking and writing. In the method section of the paper we describe the

examinees that took part in this study, the Test of Spoken English, the TSE raters, and the rating

procedure they employ. We then discuss the statistical analyses we performed, presenting the

many-facet Rasch model and its capabilities.

The results section is divided into several subsections. First, we examine the map that is

produced as part of Facets output. The map is perhaps the most informative piece of output from

the analysis, because it allows us to view all the facets of our analysis—examinees, TSE items,

raters, and the TSE rating scale—within a single frame of reference. The remainder of the results

section is organized around the specific quality control questions we explored with the Facets

output. We first answer a set of questions about the performance of examinees. We then look at

how the TSE items performed. Next, we turn our attention to questions about the TSE rating

scale. And lastly, we look at a set of questions that relate to raters and how they performed. We

then draw conclusions from our study, suggesting topics for future research.

1

Rationale for the Study

At its February 1996 meeting, the TSE Committee set forth a validation research agenda

to guide a long term program for the collection of evidence to substantiate interpretations made

about scores on the revised TSE (TSE Committee, 1996). The committee laid out as first priority

a set of interrelated studies that focus on the generalizability of test scores. Of particular

importance were studies to determine the extent to which various factors (such as item/task

difficulty and rater severity) affect examinee performance on the TSE. The committee suggested

that Facets analyses and generalizability studies be carried out to monitor these sources of

variability as they operate in the TSE setting.

In response to the committee’s request, we conducted Facets analyses of data obtained

from two administrations of the revised TSE—February and April 1997. The purpose of the

study was to monitor four sources of variability within the TSE assessment system: (1)

examinees, (2) TSE items, (3) the TSE rating scale, and (4) raters. We sought to quantify

expected ranges of variability for each source, to determine the extent to which these sources

affect examinee performance, and to highlight aspects of the TSE assessment system that might

suggest a need for change.

Our study was designed to answer the following questions about the sources of

variability:

Examinees

•

How much variability is there across examinees in their levels of proficiency?

Who differs more—examinees in their levels of proficiency, or raters in their

levels of severity?

•

To what extent has the test succeeded in separating examinees into distinct strata

of proficiency? How many statistically different levels of proficiency are

identified by the test?

•

Are the differences between examinee proficiency measures mainly due to

measurement error or to differences in actual proficiency?

•

How accurately are examinees measured? How much confidence can we have in

the precision and stability of the measures of examinee proficiency?

•

Do some examinees exhibit unusual profiles of ratings across the 12 TSE items?

Does the current procedure for identifying and resolving discrepancies

successfully identify all cases in which rater agreement is "out of statistical

control" (Deming, 1975)?

Items

•

Is it harder for examinees to get high ratings on some TSE items than others? To

what extent do the 12 TSE items differ in difficulty?

2

•

Can we calibrate ratings from all 12 TSE items, or do ratings on certain items

frequently fail to correspond to ratings on other items (that is, are there certain

items that do not "fit" with the others)? Can a single summary measure capture

the essence of examinee performance across the items, or is there evidence of

possible psychometric multidimensionality (Henning, 1992; McNamara, 1991;

McNamara, 1996) in the data and, perhaps, a need to report a profile of scores

rather than a single score summary for each examinee if it appears that systematic

kinds of profile differences are appearing among examinees who have the same

overall summary score?

•

Are all 12 TSE items equally discriminating? Is item discrimination a constant

for all 12 items, or does it vary across items?

TSE Rating Scale

•

Are the five scale categories on the TSE rating scale appropriately ordered? Is the

rating scale functioning properly as a five-point scale? Are the scale categories

clearly distinguishable?

Raters

•

Do TSE raters differ in the severity with which they rate examinees?

•

If raters differ in severity, how do those differences affect examinee scores?

•

How interchangeable are the raters?

•

Do TSE raters use the TSE rating scale consistently?

•

Are there raters who rate examinee performances inconsistently?

•

Are there any overly consistent raters whose ratings tend to cluster around the

midpoint of the rating scale and who are reluctant to use the endpoints of the

scale? Are there raters who tend to give an examinee ratings that differ less than

would be expected across the 12 items? Are there raters who cannot effectively

differentiate between examinees in terms of their levels of proficiency?

Review of the Literature

Over the last several years, a number of performance assessment programs interested in

examining and understanding sources of variability in their assessment systems have been

experimenting with Linacre’s (1999a) Facets computer program as a monitoring tool (see, for

example, Heller, Sheingold, & Myford, 1998; Linacre, Engelhard, Tatum, & Myford, 1994; Lunz

& Stahl, 1990; Myford & Mislevy, 1994; Paulukonis, Myford, & Heller, in press). In this study,

we build on the pioneering efforts of researchers who are employing many-facet Rasch

measurement to answer questions about complex rating systems for evaluating speaking and

3

writing. These researchers have raised some critical issues that they are investigating with

Facets. For example,

•

•

•

•

•

•

Can rater training enable raters to function interchangeably (Weigle, 1994)?

Can rater training eliminate differences between raters in the degree of severity they

exercise (Lumley & McNamara, 1995; Marr, 1994; McNamara & Adams, 1991; Tyndall

& Kenyon, 1995; Wigglesworth, 1993)?

Are rater characteristics stable over time (Lumley & McNamara, 1995; Marr, 1994;

Myford, Marr, & Linacre, 1996)?

What background characteristics influence the ratings raters give (Brown, 1995; Myford,

Marr, & Linacre, 1996)?

Do raters differ systematically in their use of the points on a rating scale (McNamara &

Adams, 1991)?

Do raters and tasks interact to affect examinee scores (Bachman, Lynch, & Mason, 1995;

Lynch & McNamara, 1994)?

Several researchers examined the rating behavior of individual raters of the old Test of

Spoken English and reported differences between raters in the degree of severity they exercised

when rating examinee performances (Bejar, 1985; Marr, 1994), but no studies have as yet

compared raters of the revised TSE. Bejar (1985) compared the mean rating of individual TSE

raters and found that some raters tended to give lower ratings than others; in fact, the raters Bejar

studied did this consistently across all four scales of the old TSE (pronunciation, grammar,

fluency, and comprehension). More recently, Marr (1994) used Facets to analyze data from two

1992 and 1993 administrations of the old TSE and found that there was significant variation in

rater severity within each of the two administrations. She reported that more than two thirds of

the examinee scores in the first administration would have been changed if adjustments had been

made for rater severity, while more than half of the examinee scores would have been altered in

the second administration. In her study, Marr also looked at the stability of the rater severity

measures across administrations for the 33 raters who took part in both scoring sessions. She

found that the correlation between the two sets of rater severity measures was only 0.47. She

noted that the rater severity estimates were based on each rater having rated an average of only

30 examinees, and each rater was paired with fewer than half of the other raters in the sample.

This suggests, Marr hypothesized, that much of what appeared to be systematic variance

associated with differences in rater severity may instead have been random error. She concluded

that the operational use of Facets to adjust for rater effects would require some important

changes in the existing TSE rating procedures: A means would need to be found to create greater

overlap among raters so that all raters could be connected in the rating design (the ratings of

eight raters had to be deleted from her analysis because they were insufficiently connected).1 If

1 Disconnection occurs when a judging plan for data collection is instituted that, because of its deficient structure,

makes it impossible to place all raters, examinees, and items in one frame of reference so that appropriate

comparisons can be drawn (Linacre, 1994). The allocation of raters to items and examinees must result in a network

of links that is complete enough to connect all the raters through common items and common examinees (Lunz,

Wright, & Linacre, 1990). Otherwise, ambiguity in interpretation results. If there are insufficient patterns of nonextreme high ratings and non-extreme low ratings to be able to connect two elements (e.g., two raters, two

examinees, two items), then the two elements will appear in separate subsets of Facets output as “disconnected.”

Only examinees that are in the same subset can be directly compared. Similarly, only raters (or items) that are in the

same subset can be directly compared. Attempts to compare examinees (or raters, or items) that appear in two or

more different subsets can be misleading.

4

this were accomplished, one might then have greater confidence in the stability of the estimates

of rater severity both within and across TSE administrations.

In the present study of the revised TSE, we worked with somewhat larger sample sizes

than Marr used. Marr’s November 1992 sample had 74 raters and 1,158 examinees, and her May

1993 sample had 54 raters and 785 examinees. Our February 1997 sample had 66 raters and

1,469 examinees, and our April 1997 sample had 74 raters and 1,446 examinees. Also, while

both of Marr’s data sets had disconnected subsets of raters and examinees in them, there was no

disconnection in our two data sets.

Method

Examinees

Examinees from the February and April 1997 TSE administrations (N = 1,469 and 1,446,

respectively) were generally between the ages of 20 and 39 years of age (83%). Fewer

examinees were under 20 (about 7%) or over 40 (about 10%). These percentages were consistent

across the two test dates. About half of the examinees for both administration dates were female

(53% in February and 47% in April). Over half of the examinees (55% in February and 66% in

April) took the TSE for professional purposes (for example, for selection and certification in

health professions, such as medicine, nursing, pharmacy, and veterinary medicine) with the

remaining examinees taking the TSE for academic purposes (primarily for selection for

international teaching assistantships). Table 1 shows the percentage of examinees taking the

examination in various locations around the world. This table reveals that, for both examination

dates, a majority of examinees were from eastern Asia, and most of the remaining examinees

were from Europe.

Table 1

Distribution of TSE Examinees Across Geographic Locations

February 1997

April 1997

Location

N

%

N

%

Eastern Asia

806

56%

870

63%

Europe

244

17%

197

14%

Africa

113

8%

52

4%

Middle East

102

7%

128

9%

South America

74

5%

47

3%

North America

57

4%

47

3%

Western Asia

35

2%

37

3%

5

Instrument

The purpose of the revised TSE is the same as that of the original TSE. It is a test of

general speaking ability designed to evaluate the oral language proficiency of nonnative speakers

of English who are at or beyond the postsecondary level of education (TSE Program Office,

1995). The underlying construct for the revised test is communicative language ability, which is

defined to include strategic competence and four language competencies: linguistic competence,

discourse competence, functional competence, and sociolinguistic competence (see Appendix).

The TSE is a semi-direct speaking test that is administered via audio-recording equipment using

recorded prompts and printed test booklets. Each of the 12 items that appears on the test consists

of a single task that is designed to elicit one of 10 language functions in a particular context or

situation. The test lasts about 20 minutes and is individually administered. Examinees are given

a test booklet and asked to listen to and read general instructions. A tape-recorded narrator

describes the test materials and asks the examinee to perform several tasks in response to these

materials. For example, a task may require the examinee to interpret graphical material, tell a

short story, or provide directions to someone. After hearing the description of the task, the

examinee is encouraged to construct as complete a response as possible in the time allotted. The

examinee’s oral responses are recorded, and each examinee’s test score is based on an evaluation

of the resulting speech sample.

Raters and the Rating Process

All TSE raters are experienced teachers and specialists in the field of English or English

as a second language who teach at the high school or college level. Teachers interested in

becoming TSE raters undergo a thorough training program designed to qualify them to score for

the TSE program. The training program involves becoming familiar with the TSE rating scale

(see Table 2), the TSE communication competencies, and the corresponding band descriptors

(see Appendix). The TSE trainer introduces and discusses a set of written general guidelines that

raters are to follow in scoring the test. For example, these include guidelines for arriving at a

holistic score for each item, guidelines describing what materials the raters should refer to while

scoring, and guidelines explaining the process to be used in listening to a tape. Additionally, the

trainees are introduced to a written set of item-level guidelines to be used in scoring. These

describe in some detail how to handle a number of recurring scoring challenges TSE raters face.

For example, they describe how raters should handle tapes that suffer from mechanical problems,

performances that fluctuate between two bands on the rating scale across all competencies,

incomplete responses to a task, and off-topic responses.

After the trainees have been introduced to all of the guidelines for scoring, they then

practice using the rating scale to score audiotaped performances. Prior to the training, those in

charge of training select benchmark tapes drawn from a previous test administration that show

performance at the various band levels. They prepare written rationales that explain why each

tape exemplifies performance at that particular level. Rater trainees listen to the benchmark tapes

and practice scoring them, checking their scores against the benchmark scores and reading the

scoring rationales to gain a better understanding of how the TSE rating scale functions. At the

end of this qualifying session, each trainee independently rates six TSE tapes. They then present

the scores they assign each tape to the TSE program for evaluation. To qualify as a TSE rater, a

trainee can have only one discrepant score—where the discrepancy is a difference of more than

6

one bandwidth (that is, 10 points)— among the six rated tapes. If the scores the trainee assigns

meet this requirement, then the trainee is deemed "certified" to score the TSE. The rater can then

be invited to participate in subsequent operational TSE scoring sessions.

At the beginning of each operational TSE scoring session, the raters who have been

invited to participate undergo an initial recalibration training session to refamiliarize them with

the TSE rating scale and to calibrate to the particular test form they will be scoring. The

recalibration training session serves as a means of establishing on-going quality control for the

program.

During a TSE scoring session, examinee audiotapes are identified by number only and are

randomly assigned to raters. Two raters listen to each tape and independently rate it (neither

rater knows the scores the other rater assigned). The raters evaluate an examinee’s performance

on each item using the TSE holistic five-point rating scale; they use the same scale to rate all 12

items appearing on the test. Each point on the scale is defined by a band descriptor that

corresponds to the four language competencies that the test is designed to measure (functional

competence, sociolinguistic competence, discourse competence, and linguistic competence), and

strategic competence. Raters assign a holistic score from one of the five bands for each of the 12

items. As they score, the raters consider all relevant competencies, but they do not assess each

competency separately. Rather, they evaluate the combined impact of all five competencies

when they assign a holistic score for any given item.

To arrive at a final score for an examinee, the 24 scores that the two raters gave are

compared. If the averages of the two raters differ by 10 points or more overall, then a third rater

(usually a very experienced TSE rater) rates the audiotape, unaware of the previously assigned

scores. The final score is derived by resolving the differences among the three sets of scores.

The three sets of scores are compared, and the closest pair is averaged to calculate the final

reported score. The overall score is reported on a scale that ranges from 20 to 60, in increments

of five (20, 25, 30, 35, 40, 45, 50, 55, 60).

Procedure

For this study, we used rating data obtained from the two operational TSE scoring

sessions described earlier. To analyze the data, we employed Facets (Linacre, 1999a), a Raschbased rating scale analysis computer program.

The Statistical Analyses. Facets is a generalization of the Rasch (1980) family of

measurement models that makes possible the analysis of examinations that have multiple

potential sources of measurement error (such as, items, raters, and rating scales).2 Because our

goal was to gain an understanding of the complex rating procedure employed in the TSE setting,

we needed to consider more measurement facets than the traditional two—items and

examinees—taken into account by most measurement models. By employing Facets, we were

able to establish a statistical framework for analyzing TSE rating data. That framework enabled

us to summarize overall rating patterns in terms of main effects for the rater, examinee, and item

2

See McNamara (1996, pp. 283-287) for a user-friendly description of the various models in this family and the

types of situations in which each model could be used.

7

Table 2

TSE Rating Scale

Score

60

Communication almost always effective: task performed very competently

Functions performed clearly and effectively

Appropriate response to audience/situation

Coherent, with effective use of cohesive devices

Use of linguistic features almost always effective; communication not affected by

minor errors

50

Communication generally effective: task performed competently

Functions generally performed clearly and effectively

Generally appropriate response to audience/situation

Coherent, with some effective use of cohesive devices

Use of linguistic features generally effective; communication generally not affected

by errors

40

Communication somewhat effective: task performed somewhat competently

Functions performed somewhat clearly and effectively

Somewhat appropriate response to audience/situation

Somewhat coherent, with some use of cohesive devices

Use of linguistic features somewhat effective; communication sometimes affected

by errors

30

Communication generally not effective: task generally performed poorly

Functions generally performed unclearly and ineffectively

Generally inappropriate response to audience/situation

Generally incoherent, with little use of cohesive devices

Use of linguistic features generally poor; communication often impeded by major

errors

20

No effective communication: no evidence of ability to perform task

No evidence that functions were performed

No evidence of ability to respond appropriately to audience/situation

Incoherent, with no use of cohesive devices

Use of linguistic features poor; communication ineffective due to major errors

Copyright © 1996 by Educational Testing Service, Princeton, NJ. All rights reserved. No reproduction in whole or

in part is permitted without express written permission of the copyright owner.

8

facets. Additionally, we were able to quantify the weight of evidence associated with each of

these facets and highlight individual rating patterns and rater-item combinations that were

unusual in light of expected patterns.

In the many-facet Rasch model (Linacre, 1989), each element of each facet of the testing

situation (that is, each examinee, rater, item, rating scale category, etc.) is represented by one

parameter that represents proficiency (for examinees), severity (for raters), difficulty (for items),

or challenge (for rating scale categories). The Partial Credit form of the many-facet Rasch model

that we used for this study was:

log (

Pnjik

Pnjik - 1

) = Bn - Cj - Di - Fik

(1)

Pnjik

= the probability of examinee n being awarded a rating of k when rated by rater j on

item i

Pnjik-1 = the probability of examinee n being awarded a rating of k-1 when rated by rater j on

item i

Bn

= the proficiency of examinee n

= the severity of rater j

Cj

= the difficulty of item i

Di

= the difficulty of achieving a score within a particular score category (k) averaged

Fik

across all raters for each item separately

When we conducted our analyses, we separated out the contribution of each facet we

included and examined it independently of other facets so that we could better understand how

the various facets operate in this complex rating procedure. For each element of each facet in

this analysis, the computer program provides a measure (a logit estimate of the calibration), a

standard error (information about the precision of that logit estimate), and fit statistics

(information about how well the data fit the expectations of the measurement model).

Results

We have structured our discussion of research findings around the specific questions we

explored with the Facets output. But before we turn to the individual questions, we provide a

brief introduction to the process of interpreting Facets output. In particular, we focus on the map

that is perhaps the single most important and informative piece of output from the computer

program, because it enables us to view all the facets of our analysis at one time.

The maps shown as Figures 1 and 2 display all facets of the analysis in one figure for

each TSE administration and summarize key information about each facet. The maps highlight

results from more detailed sections of the Facets output for examinees, TSE items, raters, and the

TSE rating scale. (For the remainder of this discussion, we will refer only to Figure 1. Figure 2

tells much the same story. The interested reader can apply the same principles described below

when interpreting Figure 2.)

9

The Facets program calibrates the raters, examinees, TSE items, and rating scales so that

all facets are positioned on the same scale, creating a single frame of reference for interpreting

the results from the analysis. That scale is in log-odds units, or “logits,”which, under the model,

constitute an equal-interval scale with respect to appropriately transformed probabilities of

responding in particular rating scale categories. The first column in the map displays the logit

scale. Having a single frame of reference for all the facets of the rating process facilitates

comparisons within and between the facets.

The second column displays the scale that the TSE program uses to report scores to

examinees. The TSE program averages the 24 ratings that the two raters assign to each

examinee, and a single score of 20 to 60, rounded to the nearest 5 (thus, possible scores include

20, 25, 30, 35, 40, 45, 50, 55, and 60), is reported.

The third column displays estimates of examinee proficiency on the TSE assessment—

single-number summaries on the logit scale of each examinee’s tendency to receive low or high

ratings across raters and items. We refer to these as "examinee proficiency measures." Higher

scoring examinees appear at the top of the column, while lower scoring examinees appear at the

bottom of the column. Each star represents 12 examinees, and a dot represents fewer than 12

examinees. These measures appear as a fairly symmetrical platykurtic distribution, resembling a

bell-shaped normal curve—although this result was in no way preordained by the model or the

estimation procedure. Skewed and multi-modal distributions have appeared in other model

applications.

The fourth column compares the TSE raters in terms of the level of severity or leniency

each exercised when rating oral responses to the 12 TSE items. Because more than one rater

rated each examinee’s responses, raters’ tendencies to rate responses higher or lower on average

could be estimated. We refer to these as “rater severity measures.” In this column, each star

represents 2 raters. More severe raters appear higher in the column, while more lenient raters

appear lower. When we examine Figure 1, we see that the harshest rater had a severity measure

of about 1.5 logits, while the most lenient rater had a severity measure of about -2.0 logits.

The fifth column compares the 12 items that appeared on the February 1997 TSE in

terms of their relative difficulties. Items appearing higher in the column were more difficult for

examinees to receive high ratings on than items appearing lower in the column. Items 7, 10, and

11 were the most difficult for examinees, while items 4 and 12 proved easiest.

Columns 6 through 17 display the five-point TSE rating scale as raters used it to score

examinee responses to each of the 12 items. The horizontal lines across each column indicate the

point at which the likelihood of getting the next higher rating begins to exceed the likelihood of

getting the next lower rating for a given item. For example, when we examine Figure 1, we see

that examinees with proficiency measures from about -5.5 logits up through about -3.5 logits are

more likely to receive a rating of 30 than any other rating on item 1; examinees with proficiency

measures between about -3.5 logits and about 2.0 logits are most likely to receive a rating of 40

on item 1; and so on.

The bottom rows of Figure 1 provide the mean and standard deviation of the distribution

of estimates for examinees, raters, and items. When conducting a Facets analysis involving

these three facets, it is customary to center the rater and item facets, but not the examinee facet.

10

By centering facets, one establishes the origin of the scale. As Linacre (1994) cautions, "in most

analyses, if more than one facet is non-centered in an analysis, then the frame of reference is not

sufficiently constrained, and ambiguity results" (p. 27).

Examinees

How much variability is there across examinees in their levels of proficiency? Who differs

more—examinees in their levels of proficiency or raters in their levels of severity?

Looking at Figures 1 and 2, we see that the distribution of rater severity measures is much

narrower than the distribution of examinee proficiency measures. In Figure 1, examinee

proficiency measures show an 18.34-logit spread, while rater severity measures show only a

3.55-logit spread. The range of examinee proficiency measures is about 5.2 times as wide as the

range of rater severity measures. Similarly, in Figure 2 the rater severity measures range from

-1.89 logits to 1.30 logits, a 3.19-logit spread, while the examinee proficiency measures range

from -5.43 logits to 11.69 logits, a 17.12-logit spread. Here, the range of examinee proficiency

measures is about 5.4 times as wide as the range of rater severity measures. A more typical

finding of studies of rater behavior is that the range of examinee proficiency is about twice as

wide as the range of rater severity (J. M. Linacre, personal communication, March 13, 1995).

The finding that the range of TSE examinee proficiency measures is about five times as

wide as the range of TSE rater severity is an important one, because it suggests that the impact of

individual differences in rater severity on examinee scores is likely to be relatively small. By

contrast, suppose that the range of examinee proficiency measures had been twice as wide as the

range of rater severity. In this instance, the impact of individual differences in rater severity on

examinee scores would be much greater. The particular raters who rated individual examinees

would matter more, and a more compelling case could be made for the need to adjust examinee

scores for individual differences in rater severity in order to minimize these biasing effects.

To what extent has the test succeeded in separating examinees into distinct strata of

proficiency? How many statistically different levels of proficiency are identified by the test?

Facets reports an examinee separation ratio (G) which is a ratio scale index comparing

the "true" spread of examinee proficiency measures to their measurement error (Fisher, 1992).

To be useful, a test must be able to separate examinees by their performance (Stone & Wright,

1988). One can determine the number of statistically distinct proficiency strata into which the

test has succeeded in separating examinees (in other words, how well the test separates the

examinees in a particular sample) by using the formula (4G + 1)/3. When we apply this formula,

we see that the samples of examinees that took the TSE in either February 1997 or April 1997

could each be separated into eight statistically distinct levels of proficiency.

11

Figure 1

Map from the Facets Analysis of the Data from the February 1997 TSE Administration

____________________________________________________________________________________________________________________

Rating Scale for Each Item

Logit

TSE Score

Examinee

Rater

Item

1

2

3

4

5

6

7

8

9 10 11 12

____________________________________________________________________________________________________________________

11

High Scores

Severe

Difficult

*.

10

.

.

9

60

.

60 60 60 60 60 60 60 60 60 60 60 60

*.

8

*.

*.

7

**.

--------------------------- ****.

-------------------------6

****.

----------***.

5

****.

50s

*****.

50 50 50 50 50 50 50 50 50 50 50 50

4

*****.

*******.

3

*******.

*********.

----------------- 2 --------------- *********.

------------------------------*******.

*

1

*******.

**.

*******

*********

7 10 11

0

*****.

*********

1 2 3 5 6 8 9

40s

*****.

*******

4 12

40 40 40 40 40 40 40 40 40 40 40 40

-1

****.

**.

**.

*

-2

**.

*

--------------------- **.

--------------------------------3

*.

-----------*

----4

30s

.

.

30 30 30 30 30 30 30 30 30 30 30 30

-5

.

--------------------- .

----------------------6

-----------20

.

------------7

Low scores

Lenient

Easy

20 20 20 20 20 20 20 20 20 20 20 20

____________________________________________________________________________________________________________________

Mean

2.48

.00

.00

S.D.

2.88

.72

.30

____________________________________________________________________________________________________________________

12

Figure 2

Map from the Facets Analysis of the Data from the April 1997 TSE Administration

____________________________________________________________________________________________________________________

Rating Scale for Each Item

Logit

TSE Score

Examinee

Rater

Item

1

2

3

4

5

6

7

8

9 10 11 12

_____________________________________________________________________________________________________________________

11

High Scores

Severe

Difficult

*.

10

.

.

9

60

.

60 60 60 60 60 60 60 60 60 60 60 60

.

8

*.

**.

---7

*.

------------------------------- ***.

-----------------6

****.

-------****.

5

****.

50s

*****

50 50 50 50 50 50 50 50 50 50 50 50

4

******.

******.

3

********

---*********.

------------- 2 --------------- *********.

--------------------------******.

.

1

******.

***.

******.

*****.

7 11

0

*******

*******

1 2 3 5 6 8 9 10

40s

*****.

****.

4 12

40 40 40 40 40 40 40 40 40 40 40 40

-1

****.

**.

***.

.

-2

****.

.

***.

--------------- -3 --------------- *.

-------------------------*.

-4

30s

.

.

30 30 30 30 30 30 30 30 30 30 30 30

-5

.

--------------------- .

-------------------6

-----20

---------------7

Low scores

Lenient

Easy

20 20 20 20 20 20 20 20 20 20 20 20

____________________________________________________________________________________________________________________

Mean

2.24

.00

.00

S.D.

2.91

.66

30

____________________________________________________________________________________________________________________

13

Are the differences between examinee proficiency measures mainly due to measurement error or

to differences in actual proficiency?

Facets also reports the reliability with which the test separates the sample of examinees

—that is, the proportion of observed sample variance which is attributable to individual

differences between examinees (Wright & Masters, 1982). The examinee separation reliability

coefficient represents the ratio of variance attributable to the construct being measured (true

score variance) to the observed variance (true score variance plus the error variance). Unlike

interrater reliability, which is a measure of how similar rater measures are, the separation

reliability is a measure of how different the examinee proficiency measures are (Linacre, 1994).

For the February and April TSE data, the examinee separation reliability coefficients were both

0.98, indicating that the true variance far exceeded the error variance in the examinee proficiency

measures.3

How accurately are examinees measured? How much confidence can we have in the precision

and stability of the measures of examinee proficiency?

Facets reports an overall measure of the precision and stability of the examinee

proficiency measures that is analogous to the standard error of measurement in classical test

theory. The standard error of measurement depicts the extent to which we might expect an

examinee’s proficiency estimate to change if different raters or items were used to estimate that

examinee’s proficiency. The average standard error of measurement for the examinees that took

the February 1997 TSE was 0.44; the average standard error for examinees that took the April

1997 TSE was 0.45.

Unlike the standard error of measurement in classical test theory, which estimates a single

measure of precision and stability for all examinees, Facets provides a separate, unique estimate

for each examinee. For illustrative purposes, we focused on the precision and stability of the

"average" examinee. That is, we determined 95% confidence intervals for examinees with

proficiency measures near the mean of the examinee proficiency distribution for the February

and April data. For the February data, the mean examinee proficiency measure was 2.47 logits,

and the standard error for that measure was 0.41. Therefore, we would expect the average

examinee’s true proficiency measure to lie between raw scores of 49.06 and 52.83 on the TSE

scale 95% of the time. For the April data, the mean examinee proficiency measure was 2.24

logits, and the standard error of that measure was 0.40. Therefore, we would expect the average

examinee’s true proficiency measure to lie between raw scores of 48.63 and 52.07 on the TSE

scale 95% of the time. To summarize, we would expect an average examinee’s true proficiency

to lie within about two raw score points of his or her reported score most of the time.

It is important to note, however, that the size of the standard error of measurement varies

across the proficiency distribution, particularly at the tails of the distribution. In this study,

examinees at the upper end of the proficiency distribution tended to have larger standard errors

on average than examinees in the center of the distribution. For example, examinees taking the

3

According to Fisher (1992), a separation reliability less than 0.5 would indicate that the differences between

examinee proficiency measures were mainly due to measurement error and not to differences in actual proficiency.

14

TSE in February who had proficiency measures in the range of 5.43 logits to 10.30 logits (that is,

they would have received reported scores in the range of 55 to 60 on the TSE scale) had standard

errors for their measures that ranged from 0.40 to 1.03. By contrast, examinees at the lower end

of the proficiency distribution tended to have smaller standard errors on average than examinees

in the center of the distribution. For example, examinees taking the TSE in February who had

proficiency measures in the range of -3.48 logits to -6.68 logits (that is, they would have received

reported scores in the range of 20 to 30 on the TSE scale) had standard errors for their measures

that ranged from 0.36 to 0.38. Thus, for institutions setting their own cutscores on the TSE, it

would be important to take into consideration the standard errors for individual examinee

proficiency measures, particularly for those examinees whose scores lie in critical decisionmaking regions of the score distribution, and not to assume that the standard error of

measurement is constant across that distribution.

Do some examinees exhibit unusual profiles of ratings across the 12 TSE items? Does the

current procedure for identifying and resolving discrepancies successfully identify all cases in

which rater agreement is "out of statistical control" (Deming, 1975)?

As explained earlier, when the averages of two raters’ scores for a single examinee differ

by more than 10 points, usually a very experienced TSE rater rates the audiotape, unaware of the

scores previously assigned. The three sets of scores are compared, and the closest pair is used to

calculate the final reported score (TSE Program Office, 1995). We used Facets to determine

whether this third-rating adjudication procedure is successful in identifying problematic ratings.

Facets produces two indices of the consistency of agreement across raters for each

examinee. The indices are reported as fit statistics—weighted and unweighted, standardized and

unstandardized. In this report, we make several uses of these indices. First, we discuss the

unstandardized, information-weighted mean-square index, or infit, and explain how one can use

that index to identify examinees who exhibit unusual profiles of ratings across the 12 TSE items.

We then examine some examples of score patterns that exhibit misfit to show how one can

diagnose the nature of misfit. Finally, we compare decisions that would be made about the

validity of examinee scores based on the standardized infit index to the decisions that would be

made about the validity of examinee scores based on the current TSE procedure for identifying

discrepantly rated examinees.

First, however, we briefly describe how the unstandardized infit mean-square index is

interpreted. The expectation for this index is 1; the range is 0 to infinity. The higher the infit

mean-square index, the more variability we can expect in the examinee’s rating pattern, even

when rater severity is taken into account. When raters are fairly similar in the degree of severity

they exercise, an infit mean-square index less than 1 indicates little variation in the examinee’s

pattern of ratings (a "flat-line" profile consisting of very similar or identical ratings across the 12

TSE items from the two raters), while an infit mean-square index greater than 1 indicates more

than typical variation in the ratings (that is, a set of ratings with one or more unexpected or

surprising ratings, aberrant ratings that don’t seem to "fit" with the others). Generally, infit

mean-square indices greater than 1 are more problematic than infit indices less than 1. There are

no hard-and-fast rules for setting upper- and lower-control limits for the examinee infit meansquare index. Some testing programs use an upper-control limit of 2 or 3 and a lower-control

limit of .5; more stringent limits might be instituted if the goal were to strive to reduce

15

significantly variability within the system. The more extreme the infit mean-square index, the

greater potential gains for improving the system—either locally, by rectifying an aberrant rating,

or globally, by gaining insights to improve training, rating, or logistic procedures.

For this study, we adopted an upper-control limit for the examinee infit mean-square

index of 3.0, a liberal control limit to accommodate some variability in each examinee’s rating

pattern, and a lower-control limit of 0.5. We wished to allow for a certain amount of variation in

raters’ perspectives, yet still catch cases in which rater disagreement was problematic. An infit

mean-square index beyond the upper-control limit signals an examinee performance that might

need another listening before the final score report is issued, particularly if the examinee’s score

is near a critical decision-making point in the score distribution. Table 3 summarizes examinee

infit information from the February and April 1997 TSE test administrations.

As Table 3 shows, of the 1,469 examinees tested in February, 165 (about 11%) had infit

mean-square indices less than 0.5. Similarly, of the 1,446 examinees tested in April, 163 (again,

about 11%) had infit mean-square indices less than 0.5. These findings suggest that about 1 in

10 examinees in these TSE administrations may have received very similar or identical ratings

across all 12 TSE items.

Further, in the February administration, 15 examinees (about 1%) had infit mean-square

indices equal to or greater than 3.0. Similarly, in the April administration, 15 (1%) had infit

mean-square indices equal to or greater than 3.0. Why did these particular examinees misfit? In

Table 4 we examine the rating patterns associated with some representative misfitting cases.

Table 3

Misfitting and Overfitting Examinees from the February and April 1997 TSE Administrations

February 1997

April 1997

Infit MeanSquare Index

Number of

Examinees

Percent of

Examinees

Number of

Examinees

Percent of

Examinees

< 0.5

165

11.2%

163

11.3%

3.0 to 4.0

11

0.7%

11

0.7%

4.1 to 5.0

2

0.1%

2

0.1%

5.1 to 6.0

2

0.1%

0

0.0%

6.1 to 7.0

0

0.0%

1

0.1%

7.1 to 8.0

0

0.0%

1

0.1%

16

Table 4

Rating Patterns and Fit Indices for Selected Examinees

Ratings Received by Examinee #110

(Infit Mean-Square Index = 0.1; Proficiency Measure = .13, Standard Error = .53)

Rater #74

(Severity = -.39)

Rater #69

(Severity = .95)

Item Number

6

7

1

2

3

4

5

40

40

40

40

40

40

40

40

40

40

40

40

8

9

10

11

12

40

40

40

40

40

40

40

40

40

40

40

40

Ratings Received by Examinee #865

(Infit Mean-Square Index = 1.0; Proficiency Measure = -.39, Standard Error = .47)

Rater #30

(Severity = -.53)

Rater #59

(Severity = 1.19)

Item Number

6

7

1

2

3

4

5

40

40

40

40

40

40

40

30

30

40

30

40

8

9

10

11

12

40

50

40

40

40

50

40

40

40

30

40

40

Ratings Received by Examinee #803

(Infit Mean-Square Index = 3.1; Proficiency Measure = 2.55, Standard Error = .42)

Rater #31

(Severity = -.79)

Rater #42

(Severity = -.61)

Item Number

6

7

1

2

3

4

5

50

50

50

60

50

60

40

40

50

50

40

50

8

9

10

11

12

30

60

60

50

60

50

30

40

50

40

50

40

Ratings Received by Examinee #1060

(Infit Mean-Square Index = 6.6; Proficiency Measure = 2.73, Standard Error = .41)

Rater #18

(Severity = -.53)

Rater #36

(Severity = .29)

Item Number

6

7

1

2

3

4

5

40

40

30

40

30

40

50

60

60

60

60

60

17

8

9

10

11

12

40

30

30

40

40

30

60

60

60

60

50

50

As Table 4 shows, the ratings of Examinee #110 exhibit a flat-line pattern: straight 40s

from both raters. Facets flags such rating patterns for further examination because they display

so little variation. This deterministic pattern does not fit the expectations of the model; the

model expects that for each examinee there will be at least some variation in the ratings across

items. Examinees who receive very similar or nearly identical ratings across all 12 items will

show fit indices less than 0.5; upon closer inspection, their rating patterns will frequently reveal

this flat-line nature. In some cases, one might question whether the raters who score such an

examinee’s responses rated each item independently or, perhaps, whether a halo effect may have

been operating.

The ratings of Examinee #865 (infit mean-square index = 1.0) shown in Table 4 are

fairly typical. There is some variation in the ratings: mostly 40s with an occasional 30 from

Rater #59, who tends to be one of the more severe raters (rater severity measure = 1.19), and

mostly 40s with an occasional 50 from Rater #30, who tends to be one of the more lenient raters

(rater severity measure = -.53).

Table 4 shows that, for Examinee #803, the two ratings for Item 7 were misfitting. Both

raters gave this examinee unexpectedly low ratings of 30 on this item, while the examinee’s

ratings on all other items are higher, ranging from 40 to 60. When we examine the Facets table

of misfitting ratings, we find that the model expected these raters to give 40s on Item 3, not 30s.

These two raters tended to rate leniently overall (rater severity measures = -.61 and -.79), so their

unexpectedly low ratings of 30 are somewhat surprising in light of the other ratings they gave the

examinee.

The rating pattern for Examinee #1060, shown in Table 4, displays a higher level of

misfit (infit mean-square index = 6.6). In this case, the examinee received 30s and 40s from a

somewhat lenient rater (rater severity measure = -.53) and 50s and 60s from a somewhat harsh

rater (rater severity measure = .29). The five 30 ratings are quite unexpected, especially from a

rater who has a tendency to give higher ratings on average. These ratings are all the more

unexpected in light of the high ratings (50s and 60s) given this examinee by a rater who tends to

rate on average more severely. In isolated cases like this one, TSE program personnel might

want to review the examinee’s performance before issuing a final score report. The scores for

Examinee #1060 were flagged by the TSE discrepancy criteria as being suspect because the

averages of the two raters’ sets of scores were 35.83 and 57.50—a difference of more than 10

points; third ratings were used to resolve the differences between the scores.

We also analyzed examinee fit by comparing the standardized infit indices to the

discrepancy resolution criteria used by the TSE program. The standardized infit index is a

transformation of the unstandardized infit index, scaled as a z score (that is, a mean of 0 and a

standard deviation of 1). Under the assumption of normality, the distribution of standardized

infit indices can be viewed as indicating the probability of observing a specific pattern of

discrepant ratings when all ratings are indeed nondiscrepant. That is, standardized infit indices

can be used to indicate which of the observed rating patterns are most likely to include surprising

or unexpected ratings. For our analyses, we adopted an upper-control limit for the examinee

standardized infit of 3.0 which suggests that infit indices for any rating patterns that exceed this

value are likely to be nonaberrant only 0.13% of the time.

18

Thus, we identified examinees with one or more aberrant ratings using the standardized

infit index, which takes into account the level of severity each rater exercised when rating

examinees. We also identified examinees having discrepant ratings according to TSE resolution

criteria, which only takes into account the magnitude of the differences between the scores

assigned by two raters and does not consider the level of severity exercised by those raters.

Tables 5 and 6 summarize this information for the February and April test administrations by

providing the number and percentage of examinees whose rating patterns were identified as

suspect under both sets of criteria.

Based on the TSE discrepancy criteria, about 4% of the examinees were identified as

having aberrant ratings in each administration. Based on the standardized infit index, about 3%

of the examinees’ ratings were aberrant. What is more interesting, however, is the fact that the

two methods identified only a small number of examinees in common. Only 1% of the

examinees were identified as having suspect rating patterns according to both criteria, as shown

in the lower right cell of each table.

About 3% of the examinees in each data set were identified as having suspect rating

patterns by the TSE discrepancy criteria but were not identified as being suspect by the infit

index (as shown in the shaded upper right cell of each table). These cases are most likely ones in

which an examinee was rated by one severe rater and one lenient rater. Such ratings would not

be unusual in light of each rater’s overall level of severity; each rater would have been using the

rating scale in a manner that was consistent with his or her use of the scale when rating other

Table 5

Examinees from the February 1997 TSE Administration

Identified as Having Suspect Rating Patterns

TSE Criteria

Nondiscrepant

Discrepant

Total

Nondiscrepant

1,380

(94%)

46

(3%)

1,426

(97%)

Discrepant

29

(2%)

14

(1%)

43

(3%)

Total

1,409

(96%)

60

(4%)

1,469

(100%)

Infit Criteria

19

Table 6

Examinees from the April 1997 TSE Administration

Identified as Having Suspect Rating Patterns

TSE Criteria

Nondiscrepant

Discrepant

Total

Nondiscrepant

1,359

(94%)

47

(3%)

1,406

(97%)

Discrepant

26

(2%)

14

(1%)

40

(3%)

Total

1,385

(96%)

61

(4%)

1,446

(100%)

Infit Criteria

examinees of similar proficiency. The apparent discrepancies between raters could have been

resolved by providing a model-based score that takes into account the levels of severity of the

two raters when calculating an examinee’s final score. If Facets were used to calculate examinee

scores, then there would be no need to bring these types of cases to the attention of the more

experienced TSE raters for adjudication. Facets would have automatically adjusted these

scores for differences in rater severity, and thus would not have identified these examinees as

misfitting and in need of a third rater’s time and energy.

The shaded lower left cells in Tables 5 and 6 indicate that about 2% of the examinees

were identified as having suspect rating patterns according to the infit criteria, but were not

identified based on the TSE discrepancy criteria. These cases are most likely situations in which

raters made seemingly random rating errors, or examinees performed differentially across items

in a way that differs from how other examinees performed on these same items. These cases

would seem to be the ones most in need of reexamination and reevaluation by the experienced

TSE raters, rather than the cases identified in the shaded upper right cell of each table.

Items

Is it harder for examinees to get high ratings on some TSE items than others? To what extent do

the 12 TSE items differ in difficulty?

To answer these questions, we can examine the item difficulty measures shown in Table

7 and Table 8. These tables order the 12 TSE items from each test according to their relative

difficulties. More difficult items (that is, those that were harder for examinees to get high ratings

on) appear at the top of each table, while easier items appear at the bottom. For the February

20

Table 7

Item Measurement Report for the February 1997 TSE Administration

Item

Item 7

Item 11

Item 10

Item 3

Item 9

Item 8

Item 2

Item 5

Item 1

Item 6

Item 4

Item 12

Difficulty

Measure

(in logits)

Standard

Error

Infit

Mean-Square

Index

.46

.43

.25

.16

.11

.10

-.01

-.11

-.16

-.23

-.48

-.52

.04

.04

.04

.04

.04

.04

.04

.04

.04

.04

.04

.04

1.0

1.0

1.0

1.0

0.9

0.9

1.1

1.0

1.2

0.9

0.9

0.9

Table 8

Item Measurement Report for the April 1997 TSE Administration

Item

Item 11

Item 7

Item 6

Item 2

Item 3

Item 9

Item 5

Item 10

Item 1

Item 8

Item 12

Item 4

Difficulty

Measure

(in logits)

Standard

Error

Infit

Mean-Square

Index

.45

.42

.25

.16

.06

-.01

-.03

-.09

-.10

-.10

-.38

-.64

.04

.04

.04

.04

.04

.04

.04

.04

.04

.04

.04

.04

0.9

1.0

0.9

1.1

1.0

0.9

1.0

1.0

1.3

0.9

0.8

0.9

21

administration, the item difficulty measures range from -.52 logits for Item 12 to .46 logits for

Item 7, about a 1-logit spread. The range of difficulty measures for the items that appeared on

the April test is nearly the same: items range in difficulty from -.64 logits for Item 4 to .45 logits

for Item 11, about a 1-logit spread.

The spread of the item difficulty measures is very narrow compared to the spread of

examinee proficiency measures. If the items are not sufficiently spread out along a continuum,

then that suggests that those designing the assessment have not succeeded in defining distinct

levels along the variable they are intending to measure (Wright & Masters, 1982). As shown

earlier in Figures 1 and 2, the TSE items tend to cluster in a very narrow band. For both the

February and April administrations, Items 4 and 12 were slightly easier for examinees to get high

ratings on, while Items 7 and 11 were slightly more difficult. However, in general, the items

differ relatively little in difficulty and thus do not convincingly define a line of increasing

intensity. To improve the measurement capabilities of the TSE, test developers may want to

consider introducing into the instrument some items that are easier than those that currently

appear, as well as some items that are substantially more difficult than the present items. If such

items could be designed, then they would help to define a more recognizable and meaningful

variable and would allow for placement of examinees along the variable defined by the test

items.

Can we calibrate ratings from all 12 TSE items, or do ratings on certain items frequently fail to

correspond to ratings on other items (that is, are there certain items that do not "fit" with the