Vol. 2 Issue 3 Mar. – Apr. 2008 - Karpagam Academy of Higher

advertisement

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

Trace Driven Simulation of GDSF# and Existing Caching Algorithms

for Internet Web Servers

1

J B Patil, 2B.V. Pawar

ABSTRACT

policy GDSF# gives close to perfect performance in both

Web caching is used to improve the performance of the

the important metrics: HR and BHR.

Internet Web servers. Document caching is used to

Keywords: Web caching, replacement policy, hit ratio,

reduce the time it takes Web server to respond to client

byte hit ratio, trace-driven simulation

requests by keeping and reusing Web objects that are

1. INTRODUCTION

likely to be used in the near future in the main memory of

the Web server, and by reducing the volume of data

The enormous popularity of the World Wide Web has

transfer between Web server and secondary storage. The

caused a tremendous increase in network traffic due to

heart of a caching system is its page replacement policy,

http requests. This has given rise to problems like user-

which needs to make good replacement decisions when

perceived latency, Web server overload, and backbone

its cache is full and a new document needs to be stored.

link congestion. Web caching is one of the ways to

The latest and most popular replacement policies like

alleviate these problems [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]. Web

GDSF use the file size, access frequency, and age in the

caches can be deployed throughout the Internet, from

decision process.

browser caches, through proxy caches and backbone

caches, through reverse proxy caches, to the Web server

The effectiveness of any replacement policy can be

caches.

evaluated using two metrics: hit ratio (HR) and byte hit

In our work, we use trace-driven simulation for evaluating

ratio (BHR). There is always a trade-off between HR and

the performance of different caching policies for Internet

BHR [1]. In this paper, using three different Web server

Web servers. Our study uses Web server traces from

logs, we use trace driven analysis to evaluate the effects

three different sites on the Internet.

of different replacement policies on the performance of a

Web server. We propose a modification of GDSF policy,

Cao and Irani have surveyed ten different policies and

GDSF#, which allows augmenting or weakening the impact

proposed a new algorithm, Greedy-Dual-Size (GDS) in

of size or frequency or both on HR and BHR. Our

[5]. The GDS algorithm uses document size, cost, and

simulation results show that our proposed replacement

age in the replacement decision, and shows better

performance compared to previous caching algorithms.

In [4] and [12], frequency was incorporated in GDS,

Department of Computer Engineering, R. C. Patel Institute

of Technology, Shirpur. (M.S.), India. E-mail:

jbpatil@hotmail.com

1

resulting in Greedy-Dual-Size-Frequency (GDSF) and

Greedy-Dual-Frequency (GDF). While GDSF is attributed

to having best hit ratio (HR), it having a modest byte hit

Department of Computer Science, North Maharashtra

University, Jalgaon. (M.S.), India. E-mail:

bvpawar@hotmail.com

2

ratio (BHR). Conversely, GDF yields a best HR at the cost

of worst BHR [12].

573

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

size si, then the impact of size is less than that of frequency

In this paper, we propose a new algorithm, called Greedy-

[4].

Dual-Size -Frequency # (GDSF #), which allows

augmenting or weakening the impact of size or frequency

Extending this logic further, we propose an extension to

or both on HR and BHR. We compare GDSF# with GDS

the GDSF, called GDSF#, where the key value of

family algorithms like GDS(1), GDS(P), GDSF(1), GDSF(P).

document is computed as

Our simulation study shows that GDSF# gives close to

perfect performance in both the important metrics: HR

and BHR.

where » and ´ are rational numbers. If we set » or ´ above

1, it augments the role of the corresponding parameter.

The remainder of this paper is organized as follows.

Conversely, if we set » or ´ below 1, it weakens the role of

Section 2 introduces GDSF#, a new algorithm for Web

the corresponding parameter.

cache replacement. Section 3 describes the simulation

model for the experiment. Section 4 describes the

Therefore, we present the GDSF# algorithm as shown

experimental design of our simulation while Section 5

below:

presents the simulation results. We present our

begin

conclusions in Section 6.

Initialize L = 0

2. GDSF# Algorithm (Our Proposed Algorithm)

Process each request document in turn:

In GDSF, the key value of document i is computed as

follows [4] [12]:

let current requested document be i

if i is already in cache

The Inflation Factor L is updated for every evicted

else

document i to the priority of this document i. In this way,

L increases monotonically. However, the rate of increase

while there is not enough room in cache for p

is very slow. If a faster mechanism for increasing L is

begin

designed, it will lead to a replacement algorithm with

let L = min(

features closure to LRU. We can apply similar reasoning

), for all i in cache

evict i such that

to

and

using

,

If we augment the frequency by

,…, etc. instead of

end

load i into cache

then the impact of

frequency is more pronounced than that of size. Similarly,

if we use

,

end

,…, etc. or use log (si) instead of file

574

=L

Trace Driven Simulation of GDSF# and Existing Caching Algorithms for Internet Web Servers

3. SIMULATION MODEL FOR THE EXPERIMENT

4. EXPERIMENTAL DESIGN

In case of Web Servers, a very simple Web server is

This section describes the design of the performance

assumed with a single-level file cache. When a request is

study of cache replacement policies. The discussion

received by the Web server, it looks for the requested file

begins with the factors and levels used for the simulation.

in its file cache. A cache hit occurs if the copy of the

Next, we present the performance metrics used to evaluate

requested document is found in the file cache. If the

the performance of each replacement policy used in the

document is not found in the file cache (a cache miss),

study. Lastly, we discuss other design issues regarding

the document must be retrieved from the local disk or

the simulation study.

from the secondary storage. On getting the file, it stores

4.1 Factors and Levels

the copy in its file cache so that further requests to the

There are two main factors used in the in the trace-driven

same document can be serviced from the cache. If the

simulation experiments: cache size and cache replacement

cache is already full when a file needs to be stored, it

policy. This section describes each of these factors and

triggers a replacement policy.

the associated levels.

Our model also assumes file-level caching. Only complete

Cache Size

documents are cached; when a file is added to the cache,

the whole file is added, and when a file is removed from

The first factor in this study is the size of the cache. For

the cache, the entire file is removed.

the Web server logs, we have used seven levels from 1

MB to 64 MB. The upper bounds of cache size are chosen

For simplicity, our simulation model completely ignores

to represent an infinite cache size for the respective traces.

the issues of cache consistency (i.e., making sure that

An infinite cache is one that is so large that no file in the

the cache has the most up-to-date version of the

given trace, once brought into the cache, need ever be

document, compared to the master copy version at the

evicted. It allows us to determine the maximum achievable

original Web server, which may change at any time).

cache hit ratio and byte hit ratio, and to determine the

Lastly, caching can only work with static files, dynamic

performance of a smaller cache size to be compared to

files that have become more and more popular within the

that of an infinite cache.

past few years, cannot be cached.

Replacement Policy

3.1 Workload Traces

In our research, we examine the following previously

proposed replacement policies: GDS(1), GDS(P), GDSF(1),

In this study, logs from three different Web servers are

and GDSF(P). Our proposed policy GDSF# is also

used: a Web server from an academic institute, Symbiosis

examined and evaluated against these policies.

Institute of Management Studies, Pune; a Web server

from a manufacturing company, Thermax, Pune, and a

Greedy-Dual-Size (GDS): GDS [5] maintains for each

Web server for an E-Shopping site in UK,

object a characteristic value Hi. A request for object i

www.wonderfulbuys.co.uk.

(new request or hit) requires a recalculation of Hi. Hi is

calculated as

575

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

We show the simulation results of GDS(1), GDS(P),

GDSF(1), GDSF(P)., GDSF#(1), and GDSF#(P) for the Web

L is a running aging factor, which is initialized to zero, ci is

server traces for hit rate and byte hit rate. The graph for

the cost to fetch object i from its origin server, and si is

Infinite indicates the performance for the Infinite cache

the size of object i. GDS chooses the object with the

size.

smallest Hi-value. The value of this object is assigned to

L. if cost is set to 1, it becomes GDS(1), and when cost is

FIFO is chosen as a representative of strategies that do

set to p = 2 + size/536, it becomes GDS(P).

not exploit any particular access pattern characteristics,

and hence its performance can be used to gauge the

Greedy-Dual-Size-Frequency (GDSF): GDSF [4] [12]

benefits of exploiting any such characteristics.

calculates Hi as

5.1 Simulation Results for GDSF# Algorithm

In this section, we experiment with the various values of

It takes into account frequency of reference in addition

» and ´ in the equation for computing key value,

to size. Similar to GDS, we have GDSF(1) and GDSF(P).

4.2 Performance Metrics

to augment or weaken the impact of frequency and size in

The performance metrics used to evaluate the various

GDSF#.

replacement policies used in this simulation are Hit Rate

and Byte Hit Rate.

Effect of Augmenting Frequency in GDSF#

Hit Rate (HR) Hit rate (HR) is the ratio of the number of

if we add frequency in the GDS to make it GDSF, it

requests met in the cache to the total number of requests.

improves BHR considerably and HR slightly. To check

whether we can further improve the performance, we set

Byte Hit Rate (BHR) Byte hit rate (BHR) is concerned

ë = 2, 5, 10 with δ = 1 in the equation for

with how many bytes are saved. This is the ratio of the

. Figure 1

shows a comparison of GDSF(1) and GDSF#(1) with ë =

number of bytes satisfied from the cache to the total bytes

2, 5, 10 with ä = 1 for the three Web server traces. The

requested.

results indicate that augmenting frequency in GDSF#

5. SIMULATION RESULTS

improves BHR in all the three traces but the improvement

This section presents the simulation results for

comes at the cost of HR. Again, we find that with ë = 2, we

comparison of different file caching strategies.

get the best results for BHR.

Section 5.1 gives the simulation results for the GDSF#

algorithm. Section 5.2 shows the results for Web servers.

576

Trace Driven Simulation of GDSF# and Existing Caching Algorithms for Internet Web Servers

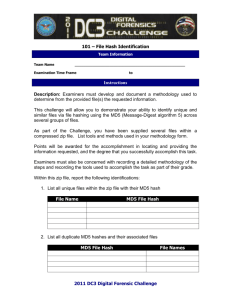

Figure 1: HR and BHR for GDSF# algorithm using Web server traces (ë=2, 5, 10)

577

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

Effect of De-Augmenting Size in GDSF#

We find that we get best results for both HR and BHR for

the combination ë = 2 and ä = 0.9 for all the six Web

We have seen that emphasizing frequency in GDSF#

traces. This combination shows close to perfect

results in improved BHR. Now let us check the effect of

performance for both the important metrics: HR and BHR.

de-augmenting or weakening the size. For this, we set ä =

0.3, 0.6, 0.9 with ë = 1 in the equation for

. Figure 2

This is important result because as noted earlier, there is

shows a comparison of GDSF(1) and GDSF#(1) with ä =

always a trade-off between HR and BHR [2]. Replacement

0.3, 0.6, 0.9 with ä = 1 for the three Web server traces. The

policies that try to improve HR do so at the cost of BHR,

results are on expected lines. The effect of decreased

and vice versa [5]. Often, a high HR is preferable because

impact of file size improves BHR across all the six traces.

it allows a greater number of requests to be serviced out

Again, it is at the cost of HR. Specifically, we get best

of cache and thereby minimizing the average request

BHR at ä = 0.3, and best HR at ä = 0.9.

latency as perceived by the user. However, it is also

desirable to maximize BHR to minimize disk accesses or

Effect of Augmenting Frequency & De-Augmenting Size

outward network traffic.

in GDSF#

In the next sections, we use the best combination of ë= 2

We have seen that emphasizing frequency and de-

and ä= 0.9 in the equation for

emphasizing size in GDSF# results in improved BHR, at

for GDSF# to compare

the performance of GDSF# with GDS(1), GDS(P), GDSF(1),

the cost of slight reduction in HR. Now the question is

and GDSF(P).. So, instead of denoting it as GDSF#(ë=2,

then whether we can achieve still better results by

ä=0.9), we will denote it as simply GDSF#.

combination of both augmenting frequency and deaugmenting size. For this, we try different combinations

of ë and ä.

578

Trace Driven Simulation of GDSF# and Existing Caching Algorithms for Internet Web Servers

Figure 2: HR and BHR for GDSF# algorithm using Web server traces (ä=0.3, 0.6, 0.9)

5.2 Simulation Results for Web Servers

In this section, we present and discuss simulation results

for Thermax, Wonderfulbuys, and Symbiosis Web

servers.

Simulation Results for Thermax

Figures 3a and 3b give the comparison of GDSF# with

other algorithms.

Figure 3b: Byte Hit rate of Thermax trace

The results indicate that the HR achieved with an infinite

sized cache is 98.71% while the BHR is 94.19% for the

Thermax trace. Of the algorithms shown in Figure 3,

GDSF(1) and GDSF#(1) had the highest and almost similar

HRs.

In case of BHRs, GDSF(P), GDSF#(1), and GDSF#(P) had

the highest BHRs. However, GDSF(P) had a lower HR.

GDSF# is thus optimized for both HR and BHR in case of

Figure 3a: Hit rate of Thermax trace

Thermax trace.

579

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

Simulation Results for Wonderfulbuys

Simulation Results for Symbiosis

Figures 4a and 4b show the performance graphically.

Figures 5a and 5b show the performance graphically.

Figure 4a: Hit rate of Wonderfulbuys trace

Figure 5a: Hit rate of Symbiosis trace

Figure 4b: Byte Hit rate of Wonderfulbuys trace

Figure 5b: Byte Hit rate of Symbiosis trace

The results indicate that the HR achieved with an infinite

sized cache is 99.66% while the BHR is 99.27% for the

The results indicate that the HR achieved with an infinite

Wonderfulbuys trace. Of the algorithms shown in Figure

sized cache is 98.16% while the BHR is 95.87% for the

4, GDSF(1) had the highest HR followed by GDSF#(1).

Symbiosis trace. Of the algorithms shown in Figure 5,

GDS(1) and GDS(P) also had a lower HRs.

GDS(1) and GDSF(1) had the highest HR followed by

GDSF#(1). GDS(1) had a lower HR.

In case of BHRs, GDSF#(P) had the highest BHR followed

by GDSF(P), GDSF#(1), and GDSF(1). However, GDSF#

In case of BHRs, GDSF#(1), and GDSF#(P) had the highest

scores over the others in better HR in case of

BHRs followed by GDSF(1) and GDSF(P). However,

Wonderfulbuys trace.

580

Trace Driven Simulation of GDSF# and Existing Caching Algorithms for Internet Web Servers

because of comparatively better HR, GDSF# scores over

explanation is that in size-based policies, large files

most of the other algorithms in case of Symbiosis trace.

are always the potential candidates for the eviction,

and the inflation factor is advanced very slowly, so

6. CONCLUSION

that even if a large file is accessed on a regular basis,

In this paper, we proposed a Web cache algorithm called

it is likely to be evicted repeatedly. GDSF and GDSF#

GDSF#, which tries to maximize both HR and BHR. It

uses frequency as a parameter in its decision-making,

incorporates the most important parameters of the Web

so popular large files have better chance of staying in

traces: size, frequency of access, and age (using inflation

a cache. In addition, the inflation or ageing factor, L is

value, L) in a simple way.

now advanced faster. GDSF and GDSF# shows

substantially improved BHR across all traces.

We have compared GDSF# with some popular cache

replacement policies for Web servers using a trace-driven

F

simulation approach. We conducted several experiments

frequency yield better BHR because they do not

using three Web server traces. The replacement policies

discriminate against the large files. These policies also

examined were GDS(1), GDS(P), GDSF(1), GDSF(P).

retain popular objects (both small and large) longer

GDSF#(1), and GDSF#(P). We used metrics like Hit Ratio

than recency-based policies like LRU. However,

(HR) and Byte Hit Ratio (BHR) to measure and compare

normally these policies show poor HR because these

performance of these algorithms. Our experimental results

policies do not take into account the file size which

show that:

F

results in a higher file miss penalty.

As pointed out by Williams et al. in [11], the observed

F

HRs can range from 20% to as high as 98%, with

frequency or both on HR and BHR. Our results show

rate of 98% comes from a Web server cache, rather

that our proposed replacement policy gives close to

than proxy cache. Our results are consistent with this

perfect performance in both the important metrics: HR

finding.

and BHR.

The results also indicate that it is more difficult to

7. REFERENCES

achieve high BHRs than high HRs. For example, in all

1.

the three traces, the maximum BHR is always less than

Policies”, In ACM SIGMETRICS Performance

The results are consistent across all the three traces.

Evaluation Review, August 1999.

GDSF# and GDSF show the best HR and BHR

F

M. Arlitt, R. Friedrich, and T. Jin, “Workload

Characterization of Web Proxy Cache Replacement

maximum HR.

F

We analyzed the performance of GDSF# policy, which

allows augmenting or weakening the impact of size or

majority ranging around 50%. The workload with a hit

F

Similarly, replacement policies giving importance to

2.

M. Abrams, C. R. Standridge, G. Abdulla, S. Williams,

significantly outperforming the baseline algorithms

and E. A. Fox, “Caching Proxies: Limitations and

like LRU, LFU for these metrics.

Potentials”, In Proceedings of the Fourth

International World Wide Web Conference, Pages

Replacement policies emphasizing the document size

119-133, Boston, MA, December 1995.

yield better HR, but typically show poor BHR. The

581

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

3.

M. Arlitt and C. Williamson, “Trace Driven

Proceedings of ACM SIGCOMM, PP 293-305,

Simulation of Document Caching Strategies for

Stanford, CA, 1996, Revised March 1997.

12. M. F., Arlitt, L. Cherkasova, J. Dilley, R. J. Friedrich,

Internet Web Servers”, Simulation Journal, Vol. 68,

4.

No. 1, PP 23-33, January 1977.

and T. Y Jin, “Evaluating Content Management

L. Cherkasova, “Improving WWW Proxies

Techniques for Web Proxy Caches”, ACM

Performance with Greedy-Dual-Size-Frequency

SIGMETRICS Performance Evaluation Review,

Caching Policy”, In HP Technical Report HPL-98-

Vol.27, No. 4, PP 3-11, March 2000.

69(R.1), November 1998.

5.

Author’s Biography

P. Cao and S. Irani, “Cost-Aware WWW Proxy

Caching Algorithms”, In Proceedings of the

Prof. J. B. Patil received his BE

USENIX Symposium on Internet Technology and

(Electronics) degree from the SGGS College

Systems, PP 193-206, December 1997.

6.

of Engineering & Technology, Nanded in

S. Jin and A. Bestavros, “GreedyDual*: Web

1986; M.Tech.( Computer Science & Data

Caching Algorithms Exploiting the Two Sources of

Processing) from Indian Institute of

Temporal Locality in Web Request Streams”, In

Technology, Kharagpur in 1993; and has submitted his

Proceedings of the 5th International Web Caching

Ph.D. thesis to the North Maharashtra University, Jalgaon

and Content Delivery Workshop, 2000.

7.

in Computer Science in January 2008. He is presently

S. Podlipnig and L. Boszormenyi, “A Survey of Web

working as Principal and Professor in the Department of

Cache Replacement Strategies”, ACM Computing

Computer Engineering, R. C. Patel Institute of Technology,

Surveys, Vol. 35, No.4, PP 374-398, December 2003.

8.

Shirpur since 2001. His current research interest is in the

L. Rizzo, and L. Vicisano, “Replacement Policies

area of Internet Web caching and prefetching. He has

for a Proxy Cache”, IEEE/ACM Transactions on

authored and co-authored over 20 papers in referred

Networking, Vol. 8, No. 2, PP 158-170, April 2000.

9.

academic journals and national/international conference

A. Vakali, “LRU-based Algorithms for Web Cache

proceedings. He is a life member of Computer Society of

Replacement”, In International Conference on

India (CSI), Institute of Engineers (India), and Indian

Electronic Commerce and Web Technologies,

Society for Technical Education (ISTE).

Lecture Notes in Computer Science, Vol.1875, PP 409-

Prof. B. V. Pawar received his B.E. degree

418, Springer-Verlag, Berlin, Germany, 2000.

10. R. P. Wooster and M. Abrams., “Proxy Caching that

from the V.J.T.I., Mumbai, in Production

Estimates Page Load Delays”, In Proceedings of

Engineering in 1986; M.Sc. degree from the

the Sixth International World Wide Web Conference,

Mumbai University, Mumbai, in Computer

PP 325-334, Santa Clara, CA, April 1997.

Science in 1988; Ph.D. degree from the North

11. S. Williams, M. Abrams, C. R. Standridge, G. Abdulla,

Maharashtra University, Jalgaon in Computer Science in

and E. A. Fox, “Removal Policies in Network

2000. He is presently Professor in the Department of

Caches for World-Wide-Web Documents”, In

Computer Science, North Maharashtra University,

582

Trace Driven Simulation of GDSF# and Existing Caching Algorithms for Internet Web Servers

Jalgaon where he has been involved in teaching and

in referred academic journals and national/international

research since 1991. His current research interests are in

conference proceedings. He is a life member of Computer

the areas of natural language processing and information

Society of India (CSI), India and Linguistic Society of

retrieval. He has authored and co-authored over 60 papers

India (LSI).

583

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

An Extended MD5 (ExMD5) Hashing Algorithm For Better Data Integrity

S. Karthikeyan

ABSTRACT

1. INTRODUCTION

Recent developments in scientific and engineering

The Trusted Network Interpretation [4] points out that

applications communication are playing a major role in

integrity ensures computerized data, which are the same

online transactions. All the transactions across the globe

as those in source documents and have not been exposed

are shared by network. The integrity ensures that only

to accidental or malicious alteration or destruction. The

authorized parties are able to modify the transmitted

integrity ensures the data related are precise, accurate,

information. Modification includes writing, changing,

unmodified, meaningful and usable. The operating

changing status and delaying or replaying transmitted

system, database management system and the network

messages. The Message Digest functions MD4 and MD5

enforce the integrity.

provide one-way function integrity in the form of octets,

The integrity of data is more important like confidentiality.

32-bit and 64 bit words respectively. The extended MD5

In some situations passing authentication data, the

(ExMD5) was designed to be somewhat more

integrity is paramount. In other cases, the need for

conservative than MD5 in terms of being more concerned

integrity is less obvious. Some of the message integrity

with security. An extended MD5 hashing algorithm that

threats are falsification of messages and noise. The

follows five passes than MD5, which follows only four

hackers may do the falsification of messages during

passes. In the proposed approach, the message is

transmission using active wiretap. Signals sent over

processed in 512-bit blocks and the message digest is a

communication media are subject to interference from

128-bit quantity. Each stage consists of computing

other traffic on the same media, as well as from natural

function based on the 512-bit message chunk and the

sources such as, lightning, and electric motors. Such

message digest to produce a new intermediate value. The

unintentional interference is called noise. These forms of

value of the message digest is the result of the output of

noise are inevitable, and it can threaten the integrity of

the final block of the message. An extended MD5 hashing

data in a message.

provides the better data integrity and the resulted values

are compared against existing hashing algorithms such

The integrity defines one-way hash function generating

as MD4 and MD5 algorithms.

the checksum of the message [2]. When the receiver gets

the data, it hashes it as well and compares the two sums,

Key Words : Hashing Algorithon, one-way function,

if they match, then the data is unaltered. The integrity is

message digest and integrity.

implemented through the use of Message Authentication

Code (MAC) and hash or Message Digest Functions.

Reader and Head, Department of Computer Science,

Karpagam Arts and Science College (Autonomous),

Coimbatore-21.

The MAC is cryptographically generated fixed length

quantity and associated with a message to reassure the

584

An Extended MD5 (ExMD5) Hashing Algorithm For Better Data Integrity

recipient that the message is genuine. The Message

The main usage of hash function ‘h’ is to maintain

Digest functions MD2, MD4 and MD5 provide one-way

confidentiality and authentication between sender and

function in the form of octets, 32-bit and 64 bit words

receiver. The simplest hash function is the bit-by-bit

respectively. The examples for message digest functions

exclusive-OR (XOR) of every block. This operation can

are MD2, MD4 and MD5. The MD5 was designed to be

be expressed as follows:

somewhat more conservative than MD4 in terms of being

Mi = Ci1

more concerned with security.

Ci2… Cin

Where,

1.1. Hash Function

Mi = ith bit of the hash code, 1≤ i ≤ n

A hash function maps a message of any length into a

n = Number of n-bit blocks in the input

fixed length hash value or message digest. The hash value

depends on input value. It provides an error detection

Cij = ith bit in jth block

capability [3]. A cryptographic function, such as DES or

= XOR operation

AES, is especially appropriate for sealing values, since

the outsider will not know the key and thus will not be

This operation produces a simple parity for each bit

able to modify the stored value. A change in one bit or

position. This process is known as longitudinal

bits in the message results in a change in the hash code.

redundancy check.

A hash function is an easily computable map ƒ → x h

from a very long input ‘x’ to a much shorter output ‘h’. In

2. LITERATURE REVIEW

a hash function it is not computationally feasible to find

The following various hash functions and its usages are

same hash value for two different inputs x and x1 such

discussed by the authors [9].

that ƒ(x) = ƒ(x ). The most widely used cryptographic

1

The hash function S

hash functions are MD4, MD5 and Secure Hash Algorithm

authentication and digital signature [7]. The hash code

(SHA). The authors [5] will describe the following

of the message ‘M’ is encrypted using private key of the

properties that are important for hash function ‘h’.

F

sender PRs. Thus, the E(PRs,H(M)) is an encryption

The function ‘h’ can be applied to a block of data of

function of a variable length message ‘M’ and the private

any size.

key PRs and it produces a fixed size output. The receiver

F

The function ‘h’ produces a fixed-length output.

F

The h(x) is relatively easy to compute for any given

decrypts hash value, which is received from sender using

public key of the sender PUs and compares with the original

message M. If it is equal the integrity is proved.

‘x’.

F

F

R : M||E(PRs,H(M)) provides

The hash function S

R : E(K,(M||H(M||SV))) provides

For any given value ‘h’, it is computationally infeasible

authentication and confidentiality. The technique

to find ‘x’ such that h(x) = h.

assumes that the two communicating parties share a

common secret value (SV) [11]. The sender ‘S’ computes

For any given block ‘x’, it is computationally infeasible

the hash values over the concatenation of ‘M’ and ‘SV’

to find y `” x such that h(y) = h(x).

585

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

and appends the result hashing value to ‘M’. Then entire

The Bellare, Canetti and Krawczyk [6] present new, simple

message with hash code is encrypted using shared key

and practical constructions of message authentication

value ‘K’. The receiver decrypts entire encoded message

schemes based on a cryptographic hash function. This

using shared secret key value ‘K’. The receiver extracts

paper describes basic properties of cryptographic hash

the original message ‘M’ and concatenates with secret

functions and keyed hash functions. It also describes

value. Calculate the hash value for ‘M||SV’ and compare

various attacks in message authentication codes and

with received hash value. In this approach both

provides the solution.

authentication and confidentiality is well proved. The

The Bosselaers [1] presents a performance improvement

various authors discuss the different approaches related

15% for the MD4 family of hash functions. The

to the hashing algorithm.

improvement is obtained by substituting n-cycle

Anderson and Math [9] provide the information on

instructions by n-1 cycle instructions and reducing so

classifications of hash functions. There are many

many instructions. This paper also compares the

applications for which one-way hash functions are

performance improvement on MD4, MD5, and SHA-1.

required. Digital signatures are one example; it is usually

This comparison is done by 90 MHz Pentium processors.

not practical to sign the whole message, as public key

Challal, Bouabdallah and Bettahar [12] describe the hybrid

algorithms are rather slow, so the normal practice is to

hash-chaining scheme in conjunction with an adaptive

hash a message to a digit between 128 ad 160 bits, and

and efficient data source authentication protocol, which

sign this need. The paper describes the real requirements

tolerates packet loss and guarantees non-repudiation of

of hash functions like collision free, complementation free,

media-streaming origin. The hybrid hash chaining has

addition free and multiplication freedom properties. These

the following terminology: If a packet Pj contains the

freedom properties are central to controlling interactions

hash of a packet Pi, the hash link connects Pi to Pj. The

between cryptographic algorithms, and have the potential

target of Pi is Pj. A signature packet is a sequence of

to be useful in algorithm design as well.

packet hashes, which are signed using a conventional

Anderson and Biham [8] present a new hash function,

digital signature scheme. A hash link relates the packet

which is called a Tiger, to be designed for 64 bit processors

with signature packets. This protocol allows saving the

and secure than MD4, SHA-1 and Snefru-8. The next

bandwidth and improves the probability that a packet be

generation of processors has 64-bit words and the older

verifiable even if some packets are lost.

hash functions could not be implemented efficiently. The

Cao, Lin and Xue [10] provide the information on secure

Tiger hash function is stronger and faster than SHA-1 in

randomized RSA-based blind signature scheme. The blind

32-bit processors and about three times faster on 64-bit

signature scheme can yield a signature and message pair

processors. It outputs 192-bit hash value, which can be

whose information does not leak to the signer. When

truncated to 128-bit or 168-bits for existing applications

blind signatures are used to design e-cash schemes there

compatibility. However this Tiger hash function has more

are two problems: double spending and accuracy of signer

passes than existing hash functions.

information. The proposed scheme in this paper satisfies

blindness and unforgeability properties. The computation

586

An Extended MD5 (ExMD5) Hashing Algorithm For Better Data Integrity

cost of this scheme is six modular exponentiations, six

F

modular multiplications, three hashing operations and

message word on each pass.

twice of random number generation performed by the

3.1. Algorithm Description

user to obtain and verify a signature. This paper uses

one-way public hash function like MD4,

The extended MD5 uses a different constant for each

To find the message digest for ‘b’-bit message input: The

SHA-1.

‘b’ is an arbitrary non-negative integer; ‘b’ may be zero; it

3. EXTENDED MD5 HASHING ALGORITHM

need not be a multiple of eight, and it may be arbitrarily

The Extended MD5 algorithm (ExMD5) takes as input a

large. The bits of the message are written down as follows:

message of random length and produces as output a 128m_0 m_1 ... m_{b-1}

bit message digest of the input. This algorithm ensures

that it is computationally infeasible to produce two

Append Padding Bits

messages having the same message digests, or to produce

any message having a given pre-specified target message

The message is to be fed into the message digest

digest. The extended MD5 algorithm is an extension of

computation which must be multiples of 512-bits. The

the MD5 message digest algorithm. The proposed

following steps are performed for message padding:

algorithm is more secure and conservative in design than

Step 1: The original message is padded by adding a 1-

MD5. It is intended for digital signature applications,

bit, followed by enough

where it identifies the correct sender.

message 64-bit less than a multiple of 512-bits.

0- bits to leave the

The extended MD5 algorithm is designed to be quite

Step 2: Then a 64-bit quantity representing the number

secure comparing to existing hash algorithms like MD2,

of bits in the unpadded message is appended to

MD4, and MD5. In addition, it does not require any large

the message.

substitution tables; the algorithm can be coded quite

The following figure1 represents the padding process.

compactly. The extended MD5 algorithm is similar to

1-512 bits

MD4 and MD5. The major differences are:

Original Message

F

1000…000

MD4 makes three passes over each 16-octet chunk of

the message and MD5 has four passes for every 16-

F

F

64 bits

Original

length in

bits

octet chunk of the message. The extended MD5 makes

Figure 1: Extended MD5 Figure1: Extended MD5

five passes over each 16-octet chunk.

message padding

The functions are slightly different in the number of

The bit order within the octet is a most significant bit to

bits in the shifts.

the least significant bit and the octet order is a least

significant bit to the most significant bit.

MD4 has one constant, which is used for each

message word in pass 2, and different constant used

Overview of extended MD5 Message Digest Computation

for the entire 16 message words in pass 3. No constant

In extended MD5, the message is processed in 512-bit

is used in pass 1.

blocks (sixteen 32-bit words). The following figure2 will

587

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

illustrate this process. The message digest is a 128-bit

Where S1 (i) = 7+5i, so the ‘S’ cycle over the values

quantity (four 32-bit words). Each stage consists of

7,12,17,22. This is different ‘S1’ from that in MD4. The

computing a function based on the 512-bit message

first few steps of the pass are as follows:

chunk and the message digest to produce a new

d0 = d1 + (d0 + F(d1, d2, d3) + m0 + T1) = 7

intermediate value for the message digest. The value of

d3 = d0 + (d3 + F(d0, d1, d2) + m1 + T2) = 12

the message digest is the result of the output of the final

block of the message.

d2 = d3 + (d2 + F(d3, d0, d1) + m2 + T3) = 17

Each stage in extended MD5 takes five passes over the

d1 = d2 + (d1 + F(d2, d3, d0) + m3 + T4) = 22

message block (as opposed to four for MD5). As with

d0 = d1 + (d0 + F(d1, d2, d3) + m4 + T5) = 7

MD4, at the end of the stage, each word of the modified

message digest is added to the corresponding pre-stage

Step 2: Extended MD5 Message Digest Pass 2

message digest value. In MD4, before the first stage, the

message digest is initialized to d0 = 6745230116, d1 =

∧z) v (y∧

∧~z). Whereas,

A function G(x, y, z) is defined as (x∧

efcdab8916, d2 = 98badcfe16, and d3 = 1032547616. As with

the function ‘G’ is different in extended MD5 to the ‘G’

extended MD5, each pass modifies d0, d1, d2, d3 using m0,

function in MD4. A separate step is done for each of the

m1, m2,…m15. The following five steps are performed to

16 words of the message. For each integer ‘i’ from 0

compute the message digest of the message.

through 15,

d(-i) ∧3=d(1-i)∧3+(d(-i)∧3+H(d(1-i)∧3,d(2-i)∧3,d(3-i)∧3) + m(3i + 5)∧15

+Ti+33)

Constant

Padded Message

Where S2 (i) = i(i+7)/2 + 5; so, the ‘S’ cycle over the values

5, 9, 14, 20. This is a different S2 from that in MD4. The

Dig

first est

few steps of the pass are as follows:

…

512 bits

d0 = d1 + (d0 + G(d1, d2, d3) + m1 + T17) = 5

Dig

d3 est

= d0 + (d3 + G(d0, d1, d2) + m6 + T18) = 9

d2 = d3 + (d2 + G(d3, d0, d1) + m11 + T19) = 17

512 bits

Dig

d1est

= d2 + (d1 + G(d2, d3, d0) + m0 + T20) = 22

Figure2 : Entire processes for extended MD5

d0 = d1 + (d0 + G(d1, d2, d3) + m5 +T21) = 5

Message

Step 1: Extended MD5 Message Digest Pass 1

Step 3: Extended MD5 Message Digest Pass 3

For each integer ‘i’ from 0 through 15,

⊕y⊕

⊕z. A separate step

A function H(x, y, z) is defined as x⊕

d (-i)∧3 = d (i-i)∧3 + (d (-i)∧3 + F(d (1-i)∧3 ,d (2-i)∧3 ,d (3-i)∧3 ) +

is done for each of the 16 words of the message. For each

mi + Ti+1)

integer ‘i’ from 0 through 15,

588

An Extended MD5 (ExMD5) Hashing Algorithm For Better Data Integrity

d(-i)∧3= d(1-i)∧3+ (d(-i)∧3+ H(d(1-i)∧3,d(2-i)∧3,d(3-i)∧3) + m(3i + 5)∧15 +

S5= ((bit(∑S4&63))+1)

Ti+33)

The

S4 is calculated by

bit(∑S4)

di, where i = 0 to 15. For

Where S3(0)=4, S3(1)=11, S3(2)=16, S3(0)=23; so, the ‘S’

example, the

cycle over the values 4, 11, 16, 23. This is a different S3

is 110100002 and binary value of 63 is 1111112.

from that of the MD4. The first few steps of the pass are

Step 1: Extract 6-LSB bits from 110100002.

as follows:

Step 2: Bit-wise AND operation between 1111112

d0=d1+(d0+H(d1,d2,d3)+m5+T33) = 4

and 0100002.

d3=d0+(d3+H(d0,d1,d2)+m8+T34)= 11

Step 3: The result is 0100002 is added with

d2=d3+(d2+H(d3,d0,d1)+m11+T35)= 16

0000012 get 0100012.

d1=d2+(d1+H(d2,d3,d0)+m14+T36)= 23

Step 4: The value 0100012 is XOR with 0100002.

The output will be 0000012.

d0=d1+(d0+H(d1,d2,d3)+m1+T37) = 4

Step 5: The output value 0000012 is converted

Step 4: Extended MD5 Message Digest Pass 4

into decimal value 110 and this value is used as an array

A function I(x, y, z) is defined as y⊕(x v ~z). A separate

index in Ti and the final value got is ‘d76aa478’.

step is done for each of the 16 words of the message. For

The extended hash function uses five passes and

each integer ‘i’ from 0 through 15,

d(-i) ∧3= d(1-i)∧3+ (d(l-i)∧3+ (d(-i)∧3+I(d(l-i)∧3,d(2-i)∧3, d(3-i)∧3) +

m(7i)∧15 + Ti+49)

S4 = 208 means, the binary value for 20810

provides the better hash values for input messages.

∑ 4. RESULT AND DISCUSSION

The extended MD5 for unique hash value has been

Where S4(i)=(i+3)(i+4)/2; so, the ↵s cycle over the 6, 10,

implemented and the performance has been compared

15, 21. The first few steps of the pass are as follows:

with MD5 and MD4 message digest hashing algorithms.

d0= d1+( d0+I(d1, d2, d3)+m0+T49) = 6

The results were significant in terms of speed and

accuracy. The extended MD5 algorithm has been

d3= d0+( d3+I(d0, d1, d2)+m7+T50) = 10

designed and implemented on JAVA under Windows XP

d2= d3+( d2+I(d3, d0, d1)+m14+T51) = 15

operating system using synthetic data. The extended

MD5 quality is measured in terms of speed, which differs

d1= d2+( d1+I(d2, d3, d0)+m5+T52) = 21

from algorithm to algorithm.

d0= d1+( d0+I(d1, d2, d3)+m12+T53) = 6

Step 5: Extended MD5 Message Digest Pass 5

A separate step is done for this phase. The stage ‘S5’ is

calculated by

Figure3: Screen view of extended MD5 Algorithm

589

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

The above figure3 illustrates the screen view of proposed

It also noted that there is a difference of values for certain

extended MD5 algorithm. The user enters the two source

runs between extended MD5 and MD5. The time

values or selects the file, which is used as an input for

difference between Extended MD5 and MD4 is very high.

extended MD5 maximum 128-bit with the help of browse

The following table 2 shows the mean and standard

button. The hash value calculation button calculating

deviation of time for Extended MD5 and the MD5.

the unique hash value.

Table 2: Summary statistics of extended MD5 in terms

of time

The following table1 shows the overall performance

results obtained for various runs using the following

Statistics Function

hardware configuration:

ExMD5 (in

Seconds)

MD5

(in Seconds)

Processor : Intel Pentium IV 3.06 GHz

Mean

0.0045

0.0059

RAM

Standard Deviation

0.002799

0.003178

: 512 MB RAM

Hard Disk : 80 GB HDD

Table 1: Performance comparison of

extended MD5

It is observed from the above table2 that the mean of

in terms of time

extended MD5 is low and standard deviation of extended

MD5 is less than the MD5 and therefore, the extended

Extended

MD5

(in Seconds)

MD5

(in Seconds)

MD4

(in Seconds)

1

0.001

0.001

0.010

2

0.001

0.003

0.011

3

0.005

0.004

0.018

4

0.004

0.006

0.025

5

0.004

0.009

0.032

6

0.007

0.009

0.037

7

0.008

0.010

0.044

8

0.009

0.003

0.057

9

0.004

0.005

0.062

MD5 is more consistent.

The following figure4 shows the graph of the time for

extended MD5, MD5 and MD4 message digest algorithms

in hash value calculation.

Performance Graph of Extended MD5 in terms of Time

0.08

0.07

Time in Seconds

Run

Extended MD5

0.06

0.05

MD5

0.04

MD4

0.03

0.02

0.01

0

1

2

3

4

5

6

7

8

9

10

Run

10

0.002

0.009

0.073

Figure4: Performance Graph of extended MD5 in

The performance with respect to the hash value

terms of time

calculation time is noted for up to 10 runs for same inputs.

This table is used to analyze results about the speed and

The pictorial representation of the above figure4 clearly

performance of the proposed extended MD5 algorithm.

shows that the speed of extended MD5 is greater than

the other two hashing algorithms. In the accuracy point

It is observed from the above table that the time taken for

of view, the proposed extended MD5 has one more pass

extended MD5 and MD5 are nearly same for various runs.

590

An Extended MD5 (ExMD5) Hashing Algorithm For Better Data Integrity

than MD5 and provides high security than MD5 and

International Journal of Information Security,

MD4.

Springer-Verlag, Vol. 6, No. 3, PP 153-181, 2007.

[5] Lars R. Knudsen, Xuejia Lai, and Bart Preneel,

5. CONCLUSION

“Attacks on fast double block length hash

In network communication, the data transmission

functions”, Journal of Cryptology, Springer-Verlag,

experiences a great threat by attackers. Even though the

Vol.11, No.1, PP 59-72, 1998.

attackers cannot read the content of a message, they are

[6] Mihir Bellare, Ran Canetti and Hugo Krawczyk,

capable of changing the content. The economic liability

“Keying

of cyber crimes is also expected to increase two-to-three

hash

functions

for

message

authentication”, Proceedings of the 16th Annual

fold by every year. Most of the cyber crimes are

International Cryptology Conference on Advances

undetected. The security still remains a risky one. The

in Cryptology, Lecture Notes in Computer Science,

focus of this research paper is mainly on Integrity using

Vol. 1109, PP 1-15, 1996.

extended MD5 hashing algorithm. The extended MD5

[7] Phong Q. Nguyen, and Igor E. Shparlinski, “The

hashing algorithm executes five passes and produces

insecurity of digital signature algorithms with

unique hash value for each message. This algorithm has

partially known nonces”, Journal of Cryptology,

been implemented on JAVA using windows XP operating

Springer-Verlag, Vol. 15, No. 3, PP 151-176, 2002.

system. The proposed extended MD5 algorithm has been

[8] Rose Anderson and Eli Biham, “Tiger: A fast new

applied in various online transactions for ensure the better

hash

integrity than MD5.

function”,

citeseer.ist.psu.edu/

anderson96tiger.html, PP 1-13, 1996.

[9] Rose Anderson and Math .C, “The classifications

6. REFERENCES

of hash functions”, citeseer.ist.psu.edu/33025.html,

[1] Antoon Bosselaers, “Even faster hashing on

PP 1-11, 1993.

Pentium”, Proceedings of Eurocrypt’97, Lecture

[10] Tinjie Cao, Dongdai Lin, and Rui Xue, “A randomized

Notes in Computer Science, Springer-Verlag, Vol.

RSA-based blind signature scheme for electronic

1233, PP 16-17, 1997.

cash”, Computers and Security, Elsevier, Vol. 24,

[2] Jie Liang, and Xue-jie lai, “Improved collision attack

No. 1, PP 44-49, 2005.

on hash function MD5”, Journal of Science and

[11] Wen-Ai Jackson, Keith M. Martin, and Christine M.

Technology, Springer-Verlag, Vol. 22, No. 1, PP 79-

O’Keefe, “Mutually trusted authority-free secret

87, 2007.

[3] Jose L Munoz, Jordi Forne, Oscar Esparza, and

sharing schemes”, Journal of Design Codes and

Miguel Soriano, “Certificate revocation system

Cryptography, Springer-Verlag, Vol. 10, No. 4, PP 261-

implementation based on the Mrekle hash tree”,

289, 1997.

[12] Yacine Challal, Abdelmadjid Bouabdallah, and

International Journal of Information Security,

Hatem Bettahar, “Hybrid hash chaining scheme for

Springer-Verlag, Vol. 2, No. 2, PP 110-124, 2004.

[4] Karl Krukow, and Mogens Nielsen, “Trust structures

adaptive multicast source authentication of media-

– denotational and operational semantics”,

streaming”, Computers and Security, Elsevier,

Vol. 24, No. 1, PP 57-68, 2005.

591

Karpagam JCS Vol. 2 Issue 3 Mar. - Apr. 2008

[13] Theodosios Tsiakis, and George Sthephanides, “The

[17] Steven M Bellovin, and Micheal Merritt, “Encrypted

concept of security and trust in electronic payment

key exchange: password-based protocols secure

systems”, Computers and Security, Elsevier, Vol. 24,

against dictionary attacks”, Proceedings of the

No. 1, PP 10-15, 2005.

IEEE Symposium on Research in Security and

[14] Thomas Wu, “The secure remote password

Privacy, Oakland, PP 48-56, 1992.

protocol”, IEEE Journal on Selected Areas in

Communication, IEEE Press, Vol. 11, No. 5, PP 648-

Author’s Biography

656, 1993.

Dr. S. Karthikeyan received the Doctorate

[15] Tian-Fu Lee, Chai-Chaw Chang, and Tzonelih

Degree in Computer Science and

Hwang, “Private authentication techniques for

Engineering from the Alagappa University,

global mobility networks”, Journal of Wireless

Karaikudi in 2008. He is currently working

Personal Communications, Springer-Verlag, Vol. 35,

as a Reader and Head in Department of Computer Science,

No. 4, PP 329-336, 2005.

Karpagam Arts and Science College (Autonomous),

[16] Stephanie Alt, “Authenticated hybrid encryption

Coimbatore. His research interests include Network

for multiple recipients”, IEEE Journal on Selected

Security using Cryptography.

Areas in Communications, IEEE-Press, Vol.11, No.5,

PP 156-182, 2006.

592