
Convolutive Bounded Component Analysis Algorithms for Independent and Dependent Source Separation

Huseyin A. Inan, Student Member, IEEE, Alper T. Erdogan, Senior Member, IEEE

Abstract— Bounded Component Analysis (BCA) is a framework that can be considered as a more general framework than

Independent Component Analysis (ICA) under the boundedness

constraint on sources. Using this framework, it is possible to

separate dependent as well as independent components from

their mixtures. In this article, as an extension of a recently

introduced instantaneous BCA approach, we introduce a family

of convolutive BCA criteria and corresponding algorithms. We

prove that the global optima of the proposed criteria, under

generic BCA assumptions, are equivalent to a set of perfect separators. The algorithms introduced in this article are capable of

separating not only the independent sources but also the sources

that are dependent/correlated in both component (space) and

sample (time) dimensions. Therefore, under the condition that the

sources are bounded, they can be considered as “Extended Convolutive ICA” algorithms with additional dependent/correlated

source separation capability. Furthermore, they have potential

to provide improvement in separation performance especially

for short data records. The article illustrates the space-time correlated source separation capability through a Copula distribution based example. In addition, a frequency-selective MIMO equalization example demonstrates the clear

performance advantage of the proposed BCA approach over

the state of the art ICA based approaches in setups involving

convolutive mixtures of digital communication sources.

Index Terms— Bounded Component Analysis, Independent

Component Analysis, Convolutive Blind Source Separation, Dependent Source Separation, Finite Support, Frequency-Selective

MIMO Equalization.

I. INTRODUCTION

The unsupervised separation of components (or sources)

from a set of their mixture samples has been a major research

topic in both machine learning and adaptive signal processing

[1]. The set of applications where the Blind Source Separation

(BSS) problem arises as the central issue is strikingly diverse

and has a very large span. The blindness property is the key to

the flexibility of this approach which leads to its widespread

use. However, the blindness feature also makes BSS a challenging problem to solve. The hardship caused by the lack of

training data and relational statistical information is generally

overcome by exploiting some side information/assumptions

about the model.

This work was supported in part by TUBITAK 112E057 Project.

Huseyin A. Inan is with Electrical-Electronics Engineering Department of

Koc University, Sariyer, Istanbul, 34450, Turkey. Contact Information: Email:

hinan@ku.edu.tr

Alper T. Erdogan is with Electrical-Electronics Engineering Department of

Koc University, Sariyer, Istanbul, 34450, Turkey. Contact Information: Email:

alper@stanfordalumni.org, Phone:+90 (212) 338 1490, Fax: +90 (212) 338

1548

The most common assumption is the mutual statistical independence of sources. The approach based on this assumption is

referred to as the Independent Component Analysis (ICA) and

it is the most popular and successful BSS approach [1]–[3].

There have been several other assumptions mostly fortifying

the independence assumption such as time structure (e.g., [4],

[5]), sparsity (e.g., [6]) and special constant modulus or finite

alphabet structure of communications signals (e.g. [7]–[9]).

In typical practical BSS applications source values take their

values from a compact set. This property has been exploited

especially in some recent ICA algorithms. The potential for

utilizing the boundedness property as an additional assumption

in the ICA framework was first put forward in [10]. In this

work, Pham reformulated the mutual information cost function

in terms of order statistics. In the bounded case, this formulation leads to the effective minimization of the separator output

ranges. In a similar direction, Cruces and Duran showed that an optimization framework based on Renyi’s Entropy leads to support length minimization to extract sources from their mixtures [11]. Vrins et al. utilized a range minimization approach to obtain alternative ICA algorithms [12]–[14].

Parallel to these contributions, Erdogan extended the infinity

norm minimization based blind equalization approach in [15],

[16] to obtain source separation algorithms, again within ICA

framework, based on infinity norm minimization [17], [18].

These algorithms assumed peak symmetry for the bounded sources, an assumption that was later abandoned in [19].

Following these contributions related to exploitation of

boundedness of signals within the ICA framework, recently,

Cruces showed that boundedness can be utilized to replace

mutual statistical independence assumption with a weaker

assumption [20]. This fact led to a new framework, named

Bounded Component Analysis (BCA), which enables separation of independent and dependent (even correlated) sources.

For bounded sources, BCA provides a more general framework than ICA, since the joint density factorability requirement of the mutual independence assumption is replaced

by the weaker domain separability assumption. The domain separability assumption refers to the condition that the convexified effective support of the joint pdf can be written as the Cartesian product of the convexified effective supports of the marginal pdfs. Therefore,

it is a necessary condition for the independence. However,

for the independence assumption to hold there is a more

stringent requirement about the factorability of the joint pdf in

terms of the product of the marginals. The BCA framework removes this requirement and therefore provides a more flexible framework for bounded sources, including ICA as a special case.

Within the newly introduced BCA framework, Cruces introduced a source extraction algorithm in [20]. A deflationary

approach for BCA was recently proposed in [21]. In [22], total

range minimization is posed as a BCA approach for uncorrelated sources and the characterization of the stationary points of the corresponding symmetric orthogonalization algorithm is provided. More recently, Erdogan proposed a new BCA

approach which enables separation of both independent and

dependent, including correlated, bounded sources from their

instantaneous mixtures [23]. In this approach, two geometric

objects, namely principal hyperellipsoid and bounding hyperrectangle, concerning separator outputs are introduced. The

separation problem is posed as maximization of the relative

sizes of these objects, in which the size of hyperellipsoid is

chosen as its volume. When the size of bounding hyperrectangle is chosen as its volume, a generalized form of Pham’s

objective in [10], which was derived by manipulating the

mutual information objective in ICA framework, is obtained.

When the size of the bounding hyperrectangle is chosen as

a norm of its main diagonal, this leads to a set of BCA

algorithms whose global optima correspond to fixed relative scalings of the sources at the separator outputs.
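The geometric idea described above can be sketched numerically. The snippet below is a minimal illustration, assuming a log-domain form of the volume-ratio objective (half the log-determinant of the sample covariance minus the log of the product of the sample ranges); this form, the function name `instantaneous_bca_objective`, the mixing matrix `A`, and the uniform test sources are assumptions made here for illustration and are not taken from [23].

```python
import numpy as np

def instantaneous_bca_objective(z):
    """Log volume of the principal hyperellipsoid minus log volume of the
    bounding hyperrectangle, both estimated from samples z of shape (N, p)."""
    cov = np.cov(z, rowvar=False)              # p x p sample covariance
    _, logdet = np.linalg.slogdet(cov)         # log det of the covariance
    ranges = z.max(axis=0) - z.min(axis=0)     # edge lengths of the bounding box
    return 0.5 * logdet - np.sum(np.log(ranges))

rng = np.random.default_rng(0)
s = rng.uniform(-0.5, 0.5, size=(5000, 2))     # hypothetical bounded sources
A = np.array([[1.0, 0.8], [0.6, 1.0]])         # hypothetical instantaneous mixing
x = s @ A.T                                    # mixtures x(k) = A s(k)

j_sources = instantaneous_bca_objective(s)
j_mixture = instantaneous_bca_objective(x)
print(j_sources, j_mixture)
```

In this toy setting the unmixed sources score higher than their mixtures, which is the behavior a separator maximizing such a ratio would exploit.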

This article extends the instantaneous or memoryless BCA

approach introduced in [23] for the convolutive BCA problem.

We extend the objective functions proposed in [23] to cover

the more general case where the observations are space-time

mixtures of the original sources. In particular, we show that

the algorithms corresponding to these extensions are capable

of separating not only independent sources but also sources

which are potentially dependent and even correlated in both

space and time dimensions. This is a remarkable feature of

the proposed approach which is due to a proper exploitation

of the boundedness property of sources. Part of this work was presented at the IEEE ICASSP 2013 conference [24]. The novel contributions of the article are as follows:

1) The article provides a convolutive BCA approach which

can be used to generate algorithms that are capable of

separating not only independent sources but also dependent (even correlated) sources from their convolutive

mixtures. Therefore, for bounded sources, the article

proposes a more general framework than ICA for the

convolutive source separation problem that replaces the

strong mutual independence assumption with weaker

and more generic domain separability assumption leading to additional capability to separate sources which are

potentially dependent/correlated in both space and time

directions.

2) The article offers a set of convolutive BCA objectives

whose global optima are proven to correspond to perfect

separators.

3) Through a digital communications example, the article

illustrates the potential for the significant performance

improvement offered by the proposed BCA approach

over the state of the art ICA based approaches, especially

for short data records.

4) The article prescribes an approach based on the update of time-domain filter parameters, which is free of the permutation alignment problem suffered by the algorithms using frequency domain updates. Furthermore, unlike many time-domain convolutive ICA approaches, the proposed approach does not require a pre-whitening operation, which is problematic in convolutive settings.

The organization of the article is as follows: Section II

describes the convolutive BCA setup assumed throughout

the article. The geometric optimization settings to solve the

convolutive BCA problem are introduced in Section III. The

perfect separation property of the global optimizers of these settings is also shown in the same section. The algorithms

corresponding to the proposed geometric framework are provided in Section IV. Section V covers the extension of the

proposed approach for complex valued signals. The numerical

examples illustrating the performance of the BCA algorithms

are provided in Section VI. Finally, Section VII is the conclusion.

Notation: Let A ∈ Cp×q and a ∈ Cp×1 be arbitrary. The

notation used in the article is summarized in Table I.

| Notation | Meaning |
|---|---|
| $A_{m,:}$ ($A_{:,m}$) | $m$th row (column) of $A$ |
| $\mathcal{R}\{A\}$ ($\mathcal{I}\{A\}$) | The real (imaginary) part of $A$ |
| $\|a\|_r$ | Usual $r$-norm given by $\left(\sum_{m=1}^{p} |a_m|^r\right)^{1/r}$ |
| $\mathrm{diag}(a)$ | Diagonal matrix whose diagonal entries starting in the upper left corner are $a_1, \ldots, a_p$ |
| $\prod(a)$ | $a_1 a_2 \cdots a_p$, i.e., the product of the elements of $a$ |
| $S_a$ | The convex support for random vector $a$ |
| $e_m$ | Standard basis vector pointing in the $m$ direction |
| $I$ | Identity matrix |
| $\otimes$ | Kronecker product |

TABLE I. NOTATION USED IN THE ARTICLE.

Indexing: m is used for (source, output) vector components,

k is the sample index and i is the algorithm iteration index.

II. CONVOLUTIVE BOUNDED COMPONENT ANALYSIS SETUP

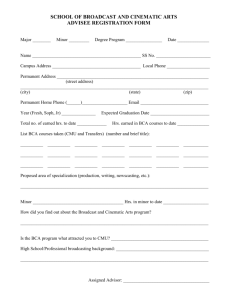

The convolutive BSS setup assumed throughout the article

is summarized in Figure 1.

The components of the convolutive BCA setup that we

consider throughout the article are as follows:

• The setup consists of $p$ real sources. The sources are represented by a zero mean (without loss of generality) wide sense stationary vector process $\{s(k) \in \mathbb{R}^p; k \in \mathbb{Z}\}$ with a Power Spectral Density (PSD) $\{P_s(f) \in \mathbb{C}^{p \times p}; f \in [-\frac{1}{2}, \frac{1}{2})\}$. We further assume that the ranges of the sources are bounded, i.e., $s_m(k) \in [\alpha_m, \beta_m]$ where $\alpha_m, \beta_m \in \mathbb{R}$, $\beta_m > \alpha_m$ for $m = 1, \ldots, p$ and $k \in \mathbb{Z}$. We define $\gamma_m = R(s_m) = \beta_m - \alpha_m$ as the range of $s_m$, where $R(\cdot)$ is the range operator providing the support length for the pdf of its argument. We decompose the sources as

$$s(k) \triangleq \Upsilon \bar{s}(k), \quad k \in \mathbb{Z},$$

where $\Upsilon = \mathrm{diag}(\gamma_1, \gamma_2, \ldots, \gamma_p)$ is the range matrix of $s$ and $\{\bar{s}(k) \in \mathbb{R}^p; k \in \mathbb{Z}\}$ is the normalized source process whose components have unit ranges.

• The source signals are mixed by a MIMO system with a $q \times p$ transfer matrix

$$H(f) = \sum_{l=0}^{L-1} H(l) e^{-j 2\pi f l},$$

where $\{H(l); l \in \{0, \ldots, L-1\}\}$ are the impulse response coefficients and $\{H(f); f \in [-\frac{1}{2}, \frac{1}{2})\}$ represents the Discrete Time Fourier Transform (DTFT) of the impulse response. We assume that $H(f)$ is an equalizable transfer function of order $L-1$ [25]. The output of the mixing MIMO system is denoted by $\{y(k) \in \mathbb{R}^q; k \in \mathbb{Z}\}$. We have

$$Y(f) = H(f) S(f),$$

where $Y(f)$ is the DTFT of $\{y(k) \in \mathbb{R}^q; k \in \mathbb{Z}\}$ and $S(f)$ is the DTFT of $\{s(k) \in \mathbb{R}^p; k \in \mathbb{Z}\}$. Equivalently, in the time domain

$$y(k) = \sum_{l=0}^{L-1} H(l) s(k-l), \quad k \in \mathbb{Z}.$$

We define $\tilde{H} = [\, H(0) \;\; H(1) \;\; \ldots \;\; H(L-1) \,]$ as the mixing coefficient matrix.

• The separator of the system

$$W(f) = \sum_{l=0}^{M-1} W(l) e^{-j 2\pi f l}$$

is a $p \times q$ FIR transfer matrix of order $M-1$, where $\{W(l); l \in \{0, \ldots, M-1\}\}$ are the impulse response coefficients and $\{W(f); f \in [-\frac{1}{2}, \frac{1}{2})\}$ represents the DTFT of the coefficients. The separator output sequence is denoted by $\{z(k) \in \mathbb{R}^p; k \in \mathbb{Z}\}$, whose DTFT can be written as

$$Z(f) = W(f) Y(f).$$

Equivalently, in the time domain

$$z(k) = \sum_{l=0}^{M-1} W(l) y(k-l), \quad k \in \mathbb{Z}.$$

We also define $\tilde{W} = [\, W(0) \;\; W(1) \;\; \ldots \;\; W(M-1) \,]$ as the separator coefficient matrix.

• The overall system function is defined as

$$G(f) = W(f) H(f) = \sum_{l=0}^{P-1} G(l) e^{-j 2\pi f l},$$

where $\{G(l); l \in \{0, \ldots, P-1\}\}$ are the impulse response coefficients, $\{G(f); f \in [-\frac{1}{2}, \frac{1}{2})\}$ represents the DTFT of the coefficients and $P-1$ is the order of the overall system. Therefore, in the time domain, the sources $\{s(k) \in \mathbb{R}^p; k \in \mathbb{Z}\}$ and the separator outputs $\{z(k) \in \mathbb{R}^p; k \in \mathbb{Z}\}$ are related by

$$z(k) = \sum_{l=0}^{P-1} G(l) s(k-l), \quad k \in \mathbb{Z}.$$

Defining $\tilde{G} = [\, G(0) \;\; G(1) \;\; \ldots \;\; G(P-1) \,]$ and $\tilde{s}(k) = [\, s(k)^T \;\; s(k-1)^T \;\; \ldots \;\; s(k-P+1)^T \,]^T$, we have $z(k) = \tilde{G}\tilde{s}(k)$, for $k \in \mathbb{Z}$. We obtain the range matrix of $\tilde{s}$ as $\tilde{\Upsilon} = I \otimes \Upsilon$.

Fig. 1. Convolutive Blind Source Separation Setup.
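The time-domain relations of this setup can be checked with a short simulation. The following is a minimal sketch, assuming randomly drawn filter taps, zero initial conditions, and hypothetical helper names (`mimo_fir`, `cascade`); it verifies that filtering by the mixing system and then the separator matches filtering by the overall system, and that $z(k) = \tilde{G}\tilde{s}(k)$.

```python
import numpy as np

def mimo_fir(taps, x):
    """Apply a MIMO FIR filter: out(k) = sum_l taps[l] @ x(k - l).
    taps: (L, n_out, n_in), x: (T, n_in); samples before k = 0 are taken as zero."""
    T = x.shape[0]
    out = np.zeros((T, taps.shape[1]))
    for l, tap in enumerate(taps):
        out[l:] += x[:T - l] @ tap.T
    return out

def cascade(w_taps, h_taps):
    """Impulse response of G(f) = W(f) H(f): G(l) = sum_i W(i) H(l - i)."""
    M, L = w_taps.shape[0], h_taps.shape[0]
    g = np.zeros((M + L - 1, w_taps.shape[1], h_taps.shape[2]))
    for i in range(M):
        for j in range(L):
            g[i + j] += w_taps[i] @ h_taps[j]
    return g

rng = np.random.default_rng(1)
p, q, L, M, T = 2, 3, 3, 4, 200                 # illustrative sizes only
s = rng.uniform(-0.5, 0.5, size=(T, p))         # bounded sources
H = rng.standard_normal((L, q, p))              # hypothetical mixing taps H(l)
W = rng.standard_normal((M, p, q))              # hypothetical separator taps W(l)

y = mimo_fir(H, s)                              # mixtures
z = mimo_fir(W, y)                              # separator outputs
G = cascade(W, H)                               # overall taps, P = L + M - 1 here
P = G.shape[0]
G_tilde = np.hstack(list(G))                    # p x (P p) coefficient matrix

k = 50                                          # any index with k >= P - 1
s_tilde = np.concatenate([s[k - l] for l in range(P)])
print(np.allclose(z[k], G_tilde @ s_tilde))
```

The stacked vector `s_tilde` collects the $P$ most recent source vectors, which is the window over which the domain separability assumption is stated.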

Unlike ICA, the sources are not assumed to be independent,

or uncorrelated, therefore implying that Ps (f ) is allowed

to be non-diagonal. We assume sources satisfy “the domain

separability” assumption (A1) which is stated as follows:

• (A1) The (convex hulls of the) domain of the extended

source vector (Ss̃ ) can be written as the cartesian product

of (the convex hulls of the) the individual components of

the extended source vector (Ss̃m for m = 1, 2, . . . , P p),

i.e., Ss̃ = Ss̃1 × Ss̃2 × . . . × Ss̃P p .

Note that s̃ corresponds to the collection of all random

variables from all p sources in a time window of length P .

Therefore, the domain separability assumption (A1) in effect

states that the convex support of the joint pdf corresponding

to all of these source random variables in a given P-length

time window, is separable, i.e., it can be written as the

cartesian product of convex supports of the marginals of these

random variables. This assumption essentially states that the

range of values for each of these random variables is not

determined by the values of the other random variables, whereas

the probability distribution defined over this range may be

dependent. Therefore, the assumption (A1) is a quite flexible

constraint, allowing arbitrary joint densities (corresponding

to dependent or independent random variables) over this

separable domain. The samples can, in fact, be correlated,

i.e., Ps (f ) can change with frequency. We point out that

(A1) is a much weaker assumption than the assumption of

independence of sources in both time and space dimensions. In

fact, the domain separability, which is a requirement about the

support set of the joint distribution, is a necessary condition for

the mutual independence. However, the mutual independence

assumption further dictates that the joint distribution is equal to

the product of the marginals, which is rather a strong additional

requirement on top of the domain separability.

We can compare the assumption (A1) with the assumptions used in the convolutive ICA approaches. The independence assumption in the ICA approaches refers to the mutual independence among sources. Some of the convolutive ICA

approaches further assume temporal independence, i.e., the

independence among the time samples of each source. In

such a case s̃ vector, containing all spatio-temporal source

samples in P-length time window, would be assumed to be an

independent vector which is a very strong assumption compared to (A1). Therefore, for bounded sources, the convolutive

BCA framework based on (A1) and enabling spatio-temporal

dependence among source samples is a more general approach

and covers the convolutive ICA framework assuming temporal

independence as a special case. The same conclusion can

be extended for the convolutive ICA approaches that do not

assume temporal independence if and only if the temporal

dependence structure complies with the assumption (A1).
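To make the distinction between dependence and domain separability concrete, the sketch below draws two kinds of bounded pairs. The first uses a Gaussian copula (an assumption chosen here for simplicity, with a hypothetical correlation parameter `rho`): the components are strongly correlated, yet the joint density is positive over the whole unit square, so the convex support is the product of the marginal intervals. The second sorts each pair so that the samples live on a triangle, where the admissible range of one component depends on the other and (A1) does not hold.

```python
import math
import numpy as np

rng = np.random.default_rng(2)
N, rho = 20000, 0.7                       # illustrative sample size and correlation

# Gaussian copula: correlated normals pushed through the standard normal CDF.
cov = np.array([[1.0, rho], [rho, 1.0]])
g = rng.multivariate_normal(np.zeros(2), cov, size=N)
norm_cdf = np.vectorize(lambda v: 0.5 * (1.0 + math.erf(v / math.sqrt(2.0))))
u = norm_cdf(g)                           # each column is Uniform(0, 1)

corr = np.corrcoef(u, rowvar=False)[0, 1] # components are clearly correlated
ranges = u.max(axis=0) - u.min(axis=0)    # yet each one spans (almost) all of [0, 1]

# Counter-example: sorted pairs satisfy t1 <= t2, so the support is a triangle.
t = np.sort(rng.uniform(size=(N, 2)), axis=1)
print(corr, ranges, np.all(t[:, 1] >= t[:, 0]))
```

Only the first pair satisfies the domain separability assumption, even though both pairs are dependent.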

III. A FAMILY OF CONVOLUTIVE BCA ALGORITHMS

A. A Convolutive BCA Optimization Framework

In this subsection, we extend the instantaneous BCA approach introduced in [23] to the convolutive BSS separation

problem. The BCA approach in [23] is based upon the

optimization of the relative sizes of two geometric objects,

namely the principal hyperellipsoid and bounding hyperrectangle, defined over the separator outputs. The main idea of

this approach is summarized in the Appendix. We modify the first (volume ratio) objective function of [23] as

$$J_1(\tilde{W}) = \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log(\det(P_z(f)))\, df - \log\left( \prod_{m=1}^{p} R(z_m) \right), \tag{1}$$

where Pz (f ) is the PSD of the separator output sequence.

In the proposed objective function, we only modify the log

volume of the principal hyperellipsoid, i.e., the first term. The definition of the volume of the principal hyperellipsoid

is extended from sample based correlation information to

process based correlation information, capturing inter-sample

correlations. We note that the objective in (1) is the asymptotic extension of the J1 objective in [23]. This extension

is obtained by concatenating source vectors in the source

process and invoking the wide sense stationarity property of

the sources along with the linear-time-invariance property of

mixing and separator systems such that the determinant of the

covariance in [23] converges to the integral term in (1). We

also note that this integral term or its modified forms (due to

the diagonality of the PSD for independent sources) appear in

some convolutive ICA approaches such as [26], [27].
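The objective in (1) can be evaluated numerically for a known overall system. The sketch below is an illustration under simplifying assumptions that are not part of the article: the sources are white, mutually independent, and uniform on $[-\frac{1}{2}, \frac{1}{2}]$, so that $P_s(f) = \frac{1}{12} I$ and $\Upsilon = I$; the integral is replaced by an average over a uniform frequency grid; and the output ranges are taken as the 1-norms of the rows of $\tilde{G}$, which is the expression the proof in Section III-B uses under (A1). The function name and the example taps are hypothetical.

```python
import numpy as np

def j1_for_overall_system(g_taps, n_freq=256):
    """Evaluate objective (1) for overall FIR taps g_taps of shape (P, p, p),
    assuming P_s(f) = I / 12 and unit source ranges."""
    P = g_taps.shape[0]
    freqs = np.arange(n_freq) / n_freq - 0.5
    # G(f) = sum_l G(l) exp(-j 2 pi f l) on the frequency grid
    phases = np.exp(-2j * np.pi * np.outer(freqs, np.arange(P)))
    g_f = np.einsum('fl,lij->fij', phases, g_taps)
    # P_z(f) = G(f) P_s(f) G(f)^H with P_s(f) = I / 12
    p_z = g_f @ np.conj(np.transpose(g_f, (0, 2, 1))) / 12.0
    _, logdet = np.linalg.slogdet(p_z)
    psd_term = 0.5 * np.mean(logdet)            # grid average replaces the integral
    g_tilde = np.hstack(list(g_taps))
    ranges = np.abs(g_tilde).sum(axis=1)        # 1-norm of each row of G-tilde
    return psd_term - np.sum(np.log(ranges))

# A scaled, delayed, permuted identity: G(f) = diag(2 e^{-j2pi f}, -0.5 e^{-j4pi f}) times a swap.
g_perfect = np.zeros((3, 2, 2))
g_perfect[1, 0, 1] = 2.0
g_perfect[2, 1, 0] = -0.5

rng = np.random.default_rng(4)
g_mixed = rng.standard_normal((3, 2, 2))        # a generic non-separating system

j_perfect = j1_for_overall_system(g_perfect)
j_mixed = j1_for_overall_system(g_mixed)
print(j_perfect, j_mixed)
```

Under these assumptions the scaled, delayed, and permuted system attains $\frac{p}{2}\log\frac{1}{12}$, while the generic system scores lower.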

B. The Global Optimality of the Perfect Separators

In this section, we show that the global optima of the objective function (1) correspond to perfect separators. The

following theorem shows that the proposed objective is useful

for achieving separation of convolutive mixtures whose setup

is outlined in Section II.

Theorem: Assuming the setup in Section II, $H(f)$ is equalizable by an FIR separator matrix of order $M-1$ and $P_s(f) \succ 0$ for all $f \in [-\frac{1}{2}, \frac{1}{2})$, the set of global maxima for $J_1$ in (1) is equal to a set of perfect separator matrices.

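Proof: Using the fact that

$$P_z(f) = G(f) \Upsilon P_s(f) \Upsilon^T G(f)^H,$$

we obtain

$$\int_{-\frac{1}{2}}^{\frac{1}{2}} \log(\det(P_z(f)))\, df = \int_{-\frac{1}{2}}^{\frac{1}{2}} \log\left( |\det(G(f)\Upsilon)|^2 \det(P_s(f)) \right) df = \int_{-\frac{1}{2}}^{\frac{1}{2}} \log |\det(G(f)\Upsilon)|^2\, df + \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{2}$$

Using the Hadamard inequality [28] yields

$$\int_{-\frac{1}{2}}^{\frac{1}{2}} \log |\det(G(f)\Upsilon)|^2\, df \le \int_{-\frac{1}{2}}^{\frac{1}{2}} \log\left( \prod_{m=1}^{p} \|(G(f)\Upsilon)_{m,:}\|_2^2 \right) df = \int_{-\frac{1}{2}}^{\frac{1}{2}} \sum_{m=1}^{p} \log \|(G(f)\Upsilon)_{m,:}\|_2^2\, df = \sum_{m=1}^{p} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \|(G(f)\Upsilon)_{m,:}\|_2^2\, df, \tag{3}$$

where $(G(f)\Upsilon)_{m,:}$ is the $m$th row of $G(f)\Upsilon$. From Jensen’s inequality [29], for $m = 1, \ldots, p$, we have

$$\int_{-\frac{1}{2}}^{\frac{1}{2}} \log \|(G(f)\Upsilon)_{m,:}\|_2^2\, df \le \log\left( \int_{-\frac{1}{2}}^{\frac{1}{2}} \|(G(f)\Upsilon)_{m,:}\|_2^2\, df \right). \tag{4}$$

The use of Parseval’s theorem yields

$$\int_{-\frac{1}{2}}^{\frac{1}{2}} \|(G(f)\Upsilon)_{m,:}\|_2^2\, df = \|(\tilde{G}\tilde{\Upsilon})_{m,:}\|_2^2. \tag{5}$$

Thus, from (3)-(5), we obtain

$$\int_{-\frac{1}{2}}^{\frac{1}{2}} \log |\det(G(f)\Upsilon)|^2\, df \le \sum_{m=1}^{p} \log \|(\tilde{G}\tilde{\Upsilon})_{m,:}\|_2^2, \tag{6}$$

which further implies,

$$\int_{-\frac{1}{2}}^{\frac{1}{2}} \log(\det(P_z(f)))\, df \le \sum_{m=1}^{p} \log \|(\tilde{G}\tilde{\Upsilon})_{m,:}\|_2^2 + \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{7}$$

As a result,

$$J_1(\tilde{W}) \le \frac{1}{2} \sum_{m=1}^{p} \log \|(\tilde{G}\tilde{\Upsilon})_{m,:}\|_2^2 - \log\left( \prod_{m=1}^{p} R(z_m) \right) + \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{8}$$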

Under the BCA’s domain separability assumption (A1) stated in Section II, we can write the range of the $m$th component of $z$ as $R(z_m) = \|\tilde{G}_{m,:}\tilde{\Upsilon}\|_1$. Defining $Q \triangleq \tilde{G}\tilde{\Upsilon}$, the range vector for the separator outputs can be rewritten as

$$R(z) = \left[\, \|Q_{1,:}\|_1 \;\; \|Q_{2,:}\|_1 \;\; \ldots \;\; \|Q_{p,:}\|_1 \,\right]^T. \tag{9}$$

If we rewrite the inequality (8) in terms of $Q$ we obtain

$$J_1(\tilde{W}) \le \sum_{m=1}^{p} \log \|Q_{m,:}\|_2 - \log\left( \prod_{m=1}^{p} \|Q_{m,:}\|_1 \right) + \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df = \sum_{m=1}^{p} \log \|Q_{m,:}\|_2 - \sum_{m=1}^{p} \log \|Q_{m,:}\|_1 + \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{10}$$

Note that,

$$\sum_{m=1}^{p} \log \|Q_{m,:}\|_2 \le \sum_{m=1}^{p} \log \|Q_{m,:}\|_1, \tag{11}$$

due to the ordering $\|q\|_1 \ge \|q\|_2$ for any $q$. Therefore,

$$J_1(\tilde{W}) \le \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{12}$$

We note that the equalities in (3) and (11) must be achieved in order to achieve the equality in (12). The equality in (11) is achieved if and only if each row of $Q$ has only one non-zero element, which implies that each row of $\tilde{G}$ has only one non-zero element, and the equality in (3) is achieved if and only if the rows of $G(f)$ are perpendicular to each other. Since $G(f) = \sum_{l=0}^{P-1} G(l) e^{-j 2\pi f l}$ and $\tilde{G} = [\, G(0) \;\; G(1) \;\; \ldots \;\; G(P-1) \,]$, the combination of these two requirements yields that the single non-zero elements in the rows of $\tilde{G}$ must not be positioned at the same indexes with respect to mod $p$; otherwise, the rows of $G(f)$ would not be perpendicular to each other.

As a result, the equality in (12) is achieved if and only if $\tilde{G}$ corresponds to a perfect separator transfer matrix in the form

$$G(f) = \mathrm{diag}(\alpha_1 e^{-j 2\pi f d_1}, \alpha_2 e^{-j 2\pi f d_2}, \ldots, \alpha_p e^{-j 2\pi f d_p})\, \mathbf{P}, \tag{13}$$

where the $\alpha_k$'s are non-zero real scalings, the $d_k$'s are non-negative integer delays, and $\mathbf{P}$ is a permutation matrix. The FIR equalizability of the mixing system implies the existence of such parameters.

Here, we point out that the virtual delayed source problem does not exist in the proposed framework: if one of the separator outputs is a delayed version of another output, $P_z(f)$ becomes rank deficient, and therefore its determinant becomes zero. Therefore, the maximizing solution for the proposed objective will avoid such cases, as they will actually minimize the PSD dependent term in the objective (1). This fact is also reflected by the proof of the Theorem above.

C. Extension of Convolutive BCA Optimization Framework

The framework introduced in Section III-A is extended by proposing different alternatives for the second term of the objective function (1) (the measure of the bounding hyperrectangle for the output vectors). Examples of such alternatives can be obtained by choosing the length of the main diagonal of the bounding hyperrectangle. As a result, we obtain a family of alternative objective functions in the form

$$J_{2,r}(\tilde{W}) = \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log(\det(P_z(f)))\, df - \log\left( \|R(z)\|_r^p \right), \tag{14}$$

where $r \ge 1$. By modifying (10), we can obtain the corresponding objective expression in terms of the scaled overall mapping $Q$ as

$$J_{2,r}(\tilde{W}) \le \sum_{m=1}^{p} \log \|Q_{m,:}\|_2 - \log\left( \left\| \left[\, \|Q_{1,:}\|_1 \;\; \|Q_{2,:}\|_1 \;\; \ldots \;\; \|Q_{p,:}\|_1 \,\right]^T \right\|_r^p \right) + \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{15}$$

The results of analyzing this objective function for some special $r$ values are as follows:

• $r = 1$ Case: In this case, we can write

$$\left\| \left[\, \|Q_{1,:}\|_1 \;\; \|Q_{2,:}\|_1 \;\; \ldots \;\; \|Q_{p,:}\|_1 \,\right]^T \right\|_1^p \ge p^p \prod_{m=1}^{p} \|Q_{m,:}\|_1,$$

where the inequality comes from the Arithmetic-Geometric-Mean-Inequality, and the equality is achieved if and only if all the rows of $Q$ have the same 1-norm. In consequence, we can write

$$J_{2,1}(\tilde{W}) \le \sum_{m=1}^{p} \log \|Q_{m,:}\|_2 - \sum_{m=1}^{p} \log \|Q_{m,:}\|_1 - \log(p^p) + \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{16}$$

Similarly, from (11), we have

$$J_{2,1}(\tilde{W}) \le \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df - \log(p^p). \tag{17}$$

As a result, $Q$ is a global maximum of $J_{2,1}(\tilde{W})$ if and only if it is a perfect separator matrix of the form

$$Q = k \mathbf{P}\, \mathrm{diag}(\rho),$$

where $k$ is a non-zero value, $\rho \in \{-1, 1\}^p$ and $\mathbf{P}$ is a permutation matrix. This implies $\tilde{G}$ is a global maximum of $J_{2,1}(\tilde{W})$ if and only if it can be written in the form

$$\tilde{G} = k \mathbf{P} \tilde{\Upsilon}^{-1} \mathrm{diag}(\rho).$$

• $r = 2$ Case: In this case, using the basic norm inequality, for any $a \in \mathbb{R}^p$, we have

$$\|a\|_2 \ge \frac{1}{\sqrt{p}} \|a\|_1,$$

where the equality is achieved if and only if all the components of $a$ are equal in magnitude. As a result, we can write

$$J_{2,2}(\tilde{W}) \le \sum_{m=1}^{p} \log \|Q_{m,:}\|_2 - \sum_{m=1}^{p} \log \|Q_{m,:}\|_1 - \log(p^{p/2}) + \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{18}$$

Similarly, $J_{2,2}$ has the same set of global maxima as $J_{2,1}$.

• $r = \infty$ Case: Using the basic norm inequality, for any $a \in \mathbb{R}^p$,

$$\|a\|_\infty \ge \frac{1}{p} \|a\|_1,$$

and the Arithmetic-Geometric-Mean-Inequality yields

$$\|R(z)\|_\infty^p \ge \frac{1}{p^p} \|R(z)\|_1^p \ge \prod_{m=1}^{p} R(z_m),$$

where the equality is achieved if and only if all the components of $R(z)$ are equal in magnitude. Based on this inequality, we obtain

$$J_{2,\infty}(\tilde{W}) \le \sum_{m=1}^{p} \log \|Q_{m,:}\|_2 - \sum_{m=1}^{p} \log \|Q_{m,:}\|_1 + \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log \det(P_s(f))\, df. \tag{19}$$

Therefore, $J_{2,\infty}$ also has the same set of global optima as $J_{2,1}$ and $J_{2,2}$.

Hence, to attain the global maximum of $J_{2,1}$, $J_{2,2}$ and $J_{2,\infty}$, there is also a condition that all the rows of $Q$ have the same 1-norm. This implies $\tilde{G}$ is a global maximum of $J_{2,1}$, $J_{2,2}$ and $J_{2,\infty}$ if and only if it can be written in the form

$$\tilde{G} = k \mathbf{P} \tilde{\Upsilon}^{-1} \mathrm{diag}(\rho),$$

where $k$ is a non-zero value, $\rho \in \{-1, 1\}^p$ and $\mathbf{P}$ is a permutation matrix, which corresponds to a subset of perfect separators defined by (13).

IV. ITERATIVE BCA ALGORITHMS

In this section, we provide the adaptive algorithm corresponding to the optimization settings presented in the previous section.

• Objective Function $J_1(\tilde{W})$:

In the adaptive implementation, we assume a set of finite observations of mixtures $\{y(0), y(1), \ldots, y(N-1)\}$ and modify the objective as

$$\bar{J}_1(\tilde{W}) = \frac{1}{2\eta} \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_z(l))) - \log\left( \prod_{m=1}^{p} \hat{R}(z_m) \right), \tag{20}$$

where $\nu = N + M - 1$, $\eta = 2\nu + 1$ is the DFT size and we use the PSD estimate for the separator outputs given by

$$\hat{P}_z(l) = \sum_{k=-\nu}^{\nu} \hat{R}_z(k) e^{-j 2\pi l k / \eta},$$

for $l \in \{-\nu, \ldots, \nu\}$, where $N$ is the number of samples and $\hat{R}_z$ is the output sample autocovariance function, defined as

$$\hat{R}_z(k) = \frac{1}{\nu + 1 - |k|} \sum_{q=\max(0,-k)}^{\min(\nu, \nu-k)} z(q) z^T(q+k),$$

for $k = -\nu, \ldots, \nu$. We point out that we use $\hat{R}(z)$ for the range vector of the sample outputs, for which we have

$$\hat{R}(z_m) = \max_{k \in \{1,2,\ldots,N\}} z_m(k) - \min_{k \in \{1,2,\ldots,N\}} z_m(k),$$

for $m = 1, 2, \ldots, p$.

Note that the derivative of the first part of $\bar{J}_1(\tilde{W})$ with respect to $W(n)$ is

$$\frac{1}{2\eta} \frac{\partial \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_z(l)))}{\partial W(n)} = \frac{1}{\eta} \sum_{l=-\nu}^{\nu} \mathcal{R}\left\{ \hat{P}_z(l)^{-1} \hat{W}(l) \hat{P}_y(l) e^{j 2\pi n l / \eta} \right\}, \tag{21}$$

where

$$\hat{W}(l) = \sum_{k=-\nu}^{\nu} W(k) e^{-j 2\pi l k / \eta},$$

and

$$\hat{P}_y(l) = \sum_{k=-\nu}^{\nu} \hat{R}_y(k) e^{-j 2\pi l k / \eta}.$$

Following the similar steps as in [23] for the derivative of $\log\left( \prod_{m=1}^{p} \hat{R}(o_m) \right)$, the subgradient based iterative algorithm for maximizing objective function (20) is provided as

$$W^{(i+1)}(n) = W^{(i)}(n) + \mu^{(i)} \left( \frac{1}{\eta} \sum_{l=-\nu}^{\nu} \mathcal{R}\left\{ \hat{P}_z(l)^{-1} \hat{W}^{(i)}(l) \hat{P}_y(l) e^{j 2\pi n l / \eta} \right\} - \sum_{m=1}^{p} \frac{1}{e_m^T \hat{R}(z_{W^{(i)}})} e_m \left( y(l_m^{\max(i)}) - y(l_m^{\min(i)}) \right)^T \right), \tag{22}$$

where $\mu^{(i)}$ is the step-size at the $i$th iteration and $l_m^{\max(i)}$ ($l_m^{\min(i)}$) is the sample index for which the maximum (minimum) value for the $m$th separator output is achieved at the $i$th iteration.

• Objective Function $J_{2,r}(\tilde{W})$:

In the adaptive implementation, we modify the family of alternative objective functions as

$$\bar{J}_{2,r}(\tilde{W}) = \frac{1}{2\eta} \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_z(l))) - \log\left( \|\hat{R}(z)\|_r^p \right). \tag{23}$$

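For $r = 1, 2$, we can write the update equation as

$$W^{(i+1)}(n) = W^{(i)}(n) + \mu^{(i)} \left( \frac{1}{\eta} \sum_{l=-\nu}^{\nu} \mathcal{R}\left\{ \hat{P}_z(l)^{-1} \hat{W}^{(i)}(l) \hat{P}_y(l) e^{j 2\pi n l / \eta} \right\} - \sum_{m=1}^{p} \frac{p \hat{R}_m(z_{W^{(i)}})^{r-1}}{\|\hat{R}(z_{W^{(i)}})\|_r^r} e_m \left( y(l_m^{\max(i)}) - y(l_m^{\min(i)}) \right)^T \right). \tag{24}$$

For $r = \infty$, the update equation has the form

$$W^{(i+1)}(n) = W^{(i)}(n) + \mu^{(i)} \left( \frac{1}{\eta} \sum_{l=-\nu}^{\nu} \mathcal{R}\left\{ \hat{P}_z(l)^{-1} \hat{W}^{(i)}(l) \hat{P}_y(l) e^{j 2\pi n l / \eta} \right\} - \sum_{m \in \mathcal{M}(z_{W^{(i)}})} \frac{p \beta_m^{(i)}}{\|\hat{R}(z_{W^{(i)}})\|_\infty} e_m \left( y(l_m^{\max(i)}) - y(l_m^{\min(i)}) \right)^T \right), \tag{25}$$

where $\mathcal{M}(z_{W^{(i)}})$ is the set of indexes for which the peak range value is achieved, i.e.,

$$\mathcal{M}(z_{W^{(i)}}) = \{ m : \hat{R}_m(z_{W^{(i)}}) = \|\hat{R}(z_{W^{(i)}})\|_\infty \}, \tag{26}$$

and the $\beta_m^{(i)}$'s are the convex combination coefficients.

V. EXTENSION TO COMPLEX SIGNALS

In the complex domain, we consider $p$ complex sources with finite support, i.e., the real and imaginary parts of the sources have finite support. We define the operator $\Phi : \mathbb{C}^p \to \mathbb{R}^{2p}$,

$$\Phi(a) = \left[\, \mathcal{R}\{a^T\} \;\; \mathcal{I}\{a^T\} \,\right]^T \tag{27}$$

as an isomorphism between the $p$ dimensional complex domain and the $2p$ dimensional real domain. For any complex vector $a$, we introduce the corresponding isomorphic real vector as $\grave{a}$, i.e., $\grave{a} = \Phi\{a\}$. We also define the operator $\Gamma : \mathbb{C}^{p \times q} \to \mathbb{R}^{2p \times 2q}$ as

$$\Gamma(A) = \begin{bmatrix} \mathcal{R}\{A\} & -\mathcal{I}\{A\} \\ \mathcal{I}\{A\} & \mathcal{R}\{A\} \end{bmatrix}. \tag{28}$$

In the complex domain, both mixing and separator coefficient matrices are complex matrices, i.e., $\tilde{H} \in \mathbb{C}^{q \times pL}$ and $\tilde{W} \in \mathbb{C}^{p \times qM}$. The set of source vectors $S$ and the set of separator outputs $Z$ are subsets of $\mathbb{C}^p$ and the set of mixtures $Y$ is a subset of $\mathbb{C}^q$. We also note that since

$$z(k) = \tilde{G}\tilde{s}(k),$$

we have

$$\grave{z}(k) = \Gamma(\tilde{G})\grave{\tilde{s}}(k),$$

where $\Gamma(\tilde{G}) = [\, \Gamma(G(0)) \;\; \Gamma(G(1)) \;\; \ldots \;\; \Gamma(G(P-1)) \,]$ and $\grave{\tilde{s}}(k) = [\, \grave{s}(k)^T \;\; \grave{s}(k-1)^T \;\; \ldots \;\; \grave{s}(k-P+1)^T \,]^T$.

A. Complex Extension of the Convolutive BCA Optimization Framework

We can extend the framework introduced in Section III to complex signals by following similar steps with the real vector $\grave{z}$. We define the subset of $\mathbb{R}^{2p}$ vectors which are isomorphic to the elements of $Z$ as

$$\grave{Z} = \{ \grave{z} : z \in Z \}.$$

Similarly, we define

$$\grave{S} = \{ \grave{s} : s \in S \}, \qquad \grave{Y} = \{ \grave{y} : y \in Y \}.$$

By these definitions, we modify the objective function $J_1$ in (1) for the complex case as

$$J_{c1}(\tilde{W}) = \frac{1}{2} \int_{-\frac{1}{2}}^{\frac{1}{2}} \log(\det(P_{\grave{z}}(f)))\, df - \log\left( \prod_{m=1}^{2p} R(\grave{z}_m) \right). \tag{29}$$

Note that the mapping between $\Phi(s)$ and $\Phi(z)$ is given by $\Gamma(Q)$; thus the theorem proved in Section III-B implies that the set of global maxima for the objective function (29) has the property that the corresponding $\Gamma(\tilde{G})$ satisfies (13) and the structure imposed by (28). Therefore, the set of global optima for (29) (in terms of $\tilde{G}$) is given by

$$GO_c = \{ \tilde{G} = \mathbf{P} D : \mathbf{P} \in \mathbb{R}^{p \times pP} \text{ is a permutation matrix, } D \in \mathbb{C}^{pP \times pP} \text{ is a full rank diagonal matrix with } D_{ii} = \alpha_i e^{\frac{j \pi k_i}{2}},\ \alpha_i \in \mathbb{R},\ k_i \in \mathbb{Z},\ i = 1, \ldots, pM \},$$

which corresponds to a subset of complex perfect separators with discrete phase ambiguity.

In the adaptive implementation, we modify the objective as

$$\bar{J}_{c1}(\tilde{W}) = \frac{1}{2\eta} \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_{\grave{z}}(l))) - \log\left( \prod_{m=1}^{2p} \hat{R}(\grave{z}_m) \right). \tag{30}$$

Note that the derivative of the first part of $\bar{J}_{c1}(\tilde{W})$ with respect to $W(n)$ is

$$\frac{1}{2\eta} \frac{\partial \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_{\grave{z}}(l)))}{\partial W(n)} = \Lambda_{11} + \Lambda_{22} + j(\Lambda_{21} - \Lambda_{12}), \tag{31}$$

where we define

$$\frac{1}{\eta} \sum_{l=-\nu}^{\nu} \mathcal{R}\left\{ \hat{P}_{\grave{z}}(l)^{-1} \Gamma(\hat{W})(l) \hat{P}_{\grave{y}}(l) e^{j 2\pi n l / \eta} \right\} = \begin{bmatrix} \Lambda_{11} & \Lambda_{12} \\ \Lambda_{21} & \Lambda_{22} \end{bmatrix}, \qquad \Lambda_{11}, \Lambda_{12}, \Lambda_{21}, \Lambda_{22} \in \mathbb{R}^{p \times q},$$

where

$$\Gamma(\hat{W})(l) = \sum_{k=-\nu}^{\nu} \Gamma(W(k)) e^{-j 2\pi l k / \eta}.$$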

8

The corresponding iterative update equation for $W(n)$ can be written as

$$ W^{(i+1)}(n) = W^{(i)}(n) + \mu^{(i)} \left( \Lambda_{11}^{(i)} + \Lambda_{22}^{(i)} + j(\Lambda_{21}^{(i)} - \Lambda_{12}^{(i)}) - \sum_{m=1}^{2p} \frac{1}{e_m^T \hat{R}(\grave{z}_{W^{(i)}})}\, v_m \left( y(l_m^{\max(i)}) - y(l_m^{\min(i)}) \right)^H \right), \tag{32} $$

where we define

$$ v_m = \begin{cases} e_m, & m \le p, \\ j e_{m-p}, & m > p. \end{cases} \tag{33} $$

A variation on the approach considered for the complex case can be obtained by observing that in the $J_{c1}$ objective function

$$ \log(\det(P_{\grave{z}}(f))) = \log\left( |\det(\Gamma(G)(f)\Gamma(\Upsilon))|^2 \det(P_{\grave{s}}(f)) \right), \tag{34} $$

where $\Gamma(G)(f) = \sum_{l=0}^{P-1} \Gamma(G(l)) e^{-j2\pi f l}$. We also note that

$$ |\det(\Gamma(G)(f)\Gamma(\Upsilon))| = |\det(G(f)\Upsilon)|^2. \tag{35} $$

Therefore, if we define an alternative objective function

$$ J_{c1a}(\tilde{W}) = \int_{-1/2}^{1/2} \log(\det(P_z(f)))\,df - \log\left( \prod_{m=1}^{2p} R(\grave{z}_m) \right), \tag{36} $$

it would have the same set of global optima. In the adaptive implementation, the modified objective becomes

$$ \bar{J}_{c1a}(\tilde{W}) = \frac{1}{\eta} \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_z(l))) - \log\left( \prod_{m=1}^{2p} \hat{R}(\grave{z}_m) \right). \tag{37} $$

The convenience of $J_{c1a}$ lies in the simplified counterpart of (31): the derivative of the first part of $\bar{J}_{c1a}(\tilde{W})$ with respect to $W(n)$ is

$$ \frac{1}{\eta} \frac{\partial \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_z(l)))}{\partial W(n)} = \frac{1}{\eta} \sum_{l=-\nu}^{\nu} \hat{P}_z(l)^{-1}\, \hat{W}(l)\, \hat{P}_y(l)\, e^{j2\pi nl/\eta}. \tag{38} $$

Therefore, the corresponding iterative update equation for $W(n)$ in (32) is updated accordingly.

B. Complex Extension of the Alternative Objective Functions

Similar to the complex extension of $J_1$ provided in the previous subsection, we can extend the $J_2$ family by defining

$$ J_{c2,r}(\tilde{W}) = \frac{1}{2} \int_{-1/2}^{1/2} \log(\det(P_{\grave{z}}(f)))\,df - \log \|R(\grave{z})\|_r^{2p}, \tag{39} $$

or alternatively,

$$ J_{ca2,r}(\tilde{W}) = \int_{-1/2}^{1/2} \log(\det(P_z(f)))\,df - \log \|R(\grave{z})\|_r^{2p}, \tag{40} $$

and in the adaptive implementation by modifying these objective functions as

$$ \bar{J}_{c2,r}(\tilde{W}) = \frac{1}{2\eta} \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_{\grave{z}}(l))) - \log \|R(\grave{z})\|_r^{2p}, \tag{41} $$

or alternatively,

$$ \bar{J}_{ca2,r}(\tilde{W}) = \frac{1}{\eta} \sum_{l=-\nu}^{\nu} \log(\det(\hat{P}_z(l))) - \log \|R(\grave{z})\|_r^{2p}. \tag{42} $$

The update equation is similar to (32), where the derivative of the first part of (41) or (42) is given by either (31) or (38), depending on the choice, and the derivative of the second part depends on the selection of $r$, e.g.,

• $r = 1, 2$ Case: In this case

$$ \frac{\partial \log \|R(\grave{z})\|_r^{2p}}{\partial W(n)} = \sum_{m=1}^{2p} \frac{p\, \hat{R}_m(\grave{z}_{W^{(i)}})^{r-1}}{\|\hat{R}(\grave{z}_{W^{(i)}})\|_r^r}\, v_m \left( y(l_m^{\max(i)}) - y(l_m^{\min(i)}) \right)^H, \tag{43} $$

where $v_m$ is as defined in (33).

• $r = \infty$ Case: In this case

$$ \frac{\partial \log \|R(\grave{z})\|_r^{2p}}{\partial W(n)} = \sum_{m \in M(\grave{z}_{W^{(i)}})} \frac{p\, \beta_m^{(i)}}{\|\hat{R}(\grave{z}_{W^{(i)}})\|_\infty}\, v_m \left( y(l_m^{\max(i)}) - y(l_m^{\min(i)}) \right)^H, \tag{44} $$

where $v_m$ is as defined in (33),

$$ M(\grave{z}_{W^{(i)}}) = \{ m : \hat{R}_m(\grave{z}_{W^{(i)}}) = \|\hat{R}(\grave{z}_{W^{(i)}})\|_\infty \}, \tag{45} $$

and the $\beta_m^{(i)}$ are the convex combination coefficients.
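The scalar weights that multiply each term $v_m(\cdot)^H$ in (43) and (44) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names are hypothetical, the real-valued output vector is assumed to stack real parts over imaginary parts, and uniform $\beta_m$ over the maximising set is only one admissible choice of convex combination coefficients.

```python
import numpy as np

def ranges_and_extremes(z):
    """Ranges of the 2p real components of the stacked output, plus the sample
    indices where each component attains its maximum and minimum.

    z: complex separator outputs with shape (N, p).
    """
    z_grave = np.hstack([z.real, z.imag])           # shape (N, 2p), assumed stacking
    l_max = z_grave.argmax(axis=0)
    l_min = z_grave.argmin(axis=0)
    cols = np.arange(z_grave.shape[1])
    R = z_grave[l_max, cols] - z_grave[l_min, cols]
    return R, l_max, l_min

def range_norm_weights(R, r, p):
    """Per-component scalar weights appearing in (43) for r = 1, 2 and in (44) for r = inf."""
    R = np.asarray(R, dtype=float)
    if r in (1, 2):
        return p * R ** (r - 1) / np.sum(R ** r)
    if r == np.inf:
        active = np.isclose(R, R.max())             # the index set M in (45)
        beta = active / active.sum()                # uniform convex combination (a choice)
        return p * beta / R.max()
    raise ValueError("r must be 1, 2 or np.inf")

rng = np.random.default_rng(2)
p = 2
z = rng.uniform(-1, 1, size=(500, p)) + 1j * rng.uniform(-1, 1, size=(500, p))
R_hat, l_max, l_min = ranges_and_extremes(z)

for r in (1, 2, np.inf):
    w = range_norm_weights(R_hat, r, p)
    # Sanity check: the weights satisfy sum_m w_m * R_m = p for every r.
    print(r, w, np.dot(w, R_hat))
```

Only the components attaining the largest range receive non-zero weight for $r = \infty$, which is what makes (44) a subgradient-type expression.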

VI. NUMERICAL EXAMPLES

In this section, we illustrate the separation capability of

the proposed algorithms for the convolutive mixtures of both

independent and dependent sources.

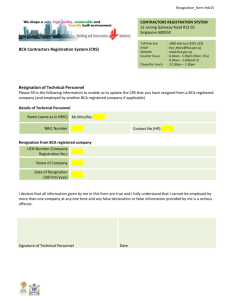

A. Separation of Space-Time Correlated Sources

We first consider the following scenario to illustrate the performance of the proposed algorithms regarding the separability of convolutive mixtures of space-time correlated sources. In order to generate space-time correlated sources, we first generate samples of a $\tau p$ size vector, $d$, with the Copula-t distribution in [30], a perfect tool for generating vectors with controlled correlation, with 4 degrees of freedom, whose correlation matrix parameter is given by $R = R_t \otimes R_s$, where $R_t$ ($R_s$) is a Toeplitz matrix whose first row is $[\, 1 \;\; \rho_t \;\; \ldots \;\; \rho_t^{\tau-1} \,]$ ($[\, 1 \;\; \rho_s \;\; \ldots \;\; \rho_s^{p-1} \,]$). (Note that the Copula-t distribution is obtained from the corresponding t-distribution through the mapping of each component using the corresponding marginal cumulative distribution functions, which leads to uniform marginals [30].) Each sample of $d$ is partitioned to produce source vectors, $d(k) = [\, s(k\tau) \;\; s(k\tau+1) \;\; \ldots \;\; s((k+1)\tau-1) \,]$. Therefore, we obtain the source vectors as samples of a wide-sense cyclostationary¹ process whose correlation structure in the time and space directions is governed by the parameters $\rho_t$ and $\rho_s$, respectively.

Fig. 3. Dependent convolutive mixtures separation performance results for SNR = 20dB. [Plot: Signal to Interference-plus-Noise Ratio (dB) versus correlation coefficient ρs.]
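The source generation procedure described in this subsection can be sketched as below. This is an illustrative reading rather than the authors' code: the Toeplitz correlation matrices are assumed symmetric, the multivariate t samples are drawn as a Gaussian vector divided by a scaled chi-square variable, and the closed-form CDF of the t-distribution with 4 degrees of freedom is used so that only NumPy is needed. All function names are hypothetical.

```python
import numpy as np

def t4_cdf(x):
    """Closed-form CDF of Student's t-distribution with 4 degrees of freedom."""
    x = np.asarray(x, dtype=float)
    u = x / np.sqrt(1.0 + x ** 2 / 4.0)
    return 0.5 + 0.375 * u * (1.0 - u ** 2 / 12.0)

def power_toeplitz(rho, n):
    """Symmetric Toeplitz matrix with first row [1, rho, ..., rho^(n-1)] (assumed symmetric)."""
    idx = np.arange(n)
    return rho ** np.abs(idx[:, None] - idx[None, :])

def copula_t_sources(num_blocks, p, tau, rho_s, rho_t, rng):
    """Return an array of shape (num_blocks * tau, p); row k*tau + i holds s(k*tau + i)."""
    dof = 4
    R = np.kron(power_toeplitz(rho_t, tau), power_toeplitz(rho_s, p))  # R = R_t (x) R_s
    L = np.linalg.cholesky(R)
    g = rng.standard_normal((num_blocks, tau * p)) @ L.T               # Gaussian with correlation R
    w = rng.chisquare(dof, size=(num_blocks, 1))
    x = g / np.sqrt(w / dof)                                           # multivariate t samples
    d = t4_cdf(x)                                                      # uniform marginals on [0, 1]
    return d.reshape(num_blocks * tau, p)                              # partition each d into tau source vectors

rng = np.random.default_rng(3)
sources = copula_t_sources(num_blocks=2000, p=3, tau=5, rho_s=0.5, rho_t=0.5, rng=rng)
space_corr = np.corrcoef(sources[:, 0], sources[:, 1])[0, 1]
print(sources.shape, sources.min(), sources.max(), space_corr)
```

The resulting sources are bounded (here in $[0, 1]$) while remaining correlated across components and across the $\tau$ samples within each block.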

In the simulations, we considered a scenario with 3 sources

and 5 mixtures, an i.i.d. Gaussian convolutive mixing system

with order 3 and a separator of order 10. At each run, we

generate 50000 source vectors where τ is set as 5. The results

are computed and averaged over 500 realizations.
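For concreteness, a convolutive mixture of this kind can be generated as in the following sketch. It assumes that "order 3" denotes three matrix taps $H(0), H(1), H(2)$ and a zero initial state; both are interpretations, and the function name `convolutive_mix` is hypothetical. Additive noise is left out.

```python
import numpy as np

def convolutive_mix(s, H):
    """Compute y(k) = sum_l H(l) s(k - l) with zero initial state.

    s: sources with shape (N, p); H: mixing taps with shape (L, q, p).
    Returns mixtures with shape (N, q).
    """
    N = s.shape[0]
    L, q, _ = H.shape
    y = np.zeros((N, q), dtype=np.result_type(s, H))
    for l in range(L):
        # Samples from index l onwards receive the contribution of tap l.
        y[l:] += s[:N - l] @ H[l].T
    return y

rng = np.random.default_rng(4)
p, q, L, N = 3, 5, 3, 1000            # 3 sources, 5 mixtures, 3 taps (assumed reading of "order 3")
H = rng.standard_normal((L, q, p))    # i.i.d. Gaussian mixing taps
s = rng.uniform(0.0, 1.0, size=(N, p))
y = convolutive_mix(s, H)
print(y.shape)
```

Feeding a unit impulse on one source returns the corresponding column of each tap, which is a convenient way to check the indexing.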

Figure 2 shows the output total Signal energy to total

Interference+Noise energy (over all outputs) Ratio (SINR)

obtained for the proposed BCA algorithms (J¯1 , J¯2,1 , J¯2,∞ )

for various space and time correlation parameters under 45dB

SNR. SINR performance of Minimum Mean Square Error

(MMSE) filter of the same order, which uses full information

about mixing system and source/noise statistics, is also shown

to evaluate the relative success of the proposed approach. We also compare against gradient maximization of the criterion (kurtosis) of [31] (KurtosisMax.) and Alg.2 of [32], for which we take $k_{max} = 50$ and $l_{max} = 20$. Implementations of these methods were obtained from [1], [33].

Fig. 2. Dependent convolutive mixtures separation performance results for SNR = 45dB. [Plot: Signal to Interference-plus-Noise Ratio (dB) versus correlation coefficient ρs.]

Fig. 4. Dependent convolutive mixtures separation performance results for SNR = 5dB. [Plot: Signal to Interference-plus-Noise Ratio (dB) versus correlation coefficient ρs.]

For the same setup, Figure 3 shows the SINR obtained for the proposed BCA algorithms ($\bar{J}_1$, $\bar{J}_{2,1}$, $\bar{J}_{2,\infty}$), gradient maximization of the criterion (kurtosis) of [31] (KurtosisMax.), Alg.2 of [32], and MMSE for various space correlation parameters under 20dB SNR.

In Figure 4, we provide the SINR obtained for the proposed BCA algorithms ($\bar{J}_1$, $\bar{J}_{2,1}$, $\bar{J}_{2,2}$, $\bar{J}_{2,\infty}$), gradient maximization of the criterion (kurtosis) of [31] (KurtosisMax.), Alg.2 of [32], and MMSE for various space correlation parameters under 5dB SNR.

These results demonstrate that the performance of the proposed algorithms closely follows that of the MMSE filter for a wide range of correlation values. Therefore, we obtain a convolutive extension of the BCA approach introduced in [23], which is capable of separating convolutive mixtures of space-time correlated sources.

We also point out that the proposed BCA approaches maintain high separation performance for various space and time correlation parameters. On the other hand, the performance of gradient maximization of the criterion (kurtosis) of [31] (KurtosisMax.) and Alg.2 of [32] degrades substantially with increasing correlation, since in the correlated case the independence assumption simply fails.

B. MIMO Blind Equalization

¹ This actually violates the stationarity assumption on sources when ρt ≠ 0. However, we still use this as a convenient method to generate space-time correlated sources.

We next consider the following scenario to illustrate the performance of the proposed method for the convolutive mixtures of digital communication sources. We consider 3 complex QAM sources such that 2 sources are 16-QAM signals and 1 source is a 4-QAM signal. We take 5 mixtures, an i.i.d. Gaussian convolutive mixing system with order 3 and a separator of order 10. The results are computed and averaged over 500 realizations. We use the objective functions $\bar{J}_{c1}$, $\bar{J}_{c2,1}$ and $\bar{J}_{c2,2}$ as the BCA algorithms introduced in Section V for this simulation. The resulting Signal to Interference Ratio is plotted with respect to the sample lengths in Figure 5. We have also compared our algorithms with a gradient maximization of the criterion (kurtosis) of [31] (KurtosisMax.) and Alg.2 of [32].
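The QAM sources in this scenario are bounded in both their real and imaginary parts, which is the property the BCA criteria rely on. A minimal sketch of generating such sources follows; the normalisation to $[-1, 1]$, the sample length and the function name `qam_symbols` are illustrative assumptions, not details taken from the article.

```python
import numpy as np

def qam_symbols(order, n, rng):
    """Draw n i.i.d. symbols from a square QAM constellation of the given order."""
    m = int(round(np.sqrt(order)))
    if m * m != order:
        raise ValueError("order must be a perfect square, e.g. 4 or 16")
    levels = np.arange(-(m - 1), m, 2)           # e.g. [-3, -1, 1, 3] for 16-QAM
    re = rng.choice(levels, size=n)
    im = rng.choice(levels, size=n)
    return (re + 1j * im) / (m - 1)              # scale so each part lies in [-1, 1] (a choice)

rng = np.random.default_rng(5)
n = 5000
# Two 16-QAM sources and one 4-QAM source, stacked as columns of an (n, 3) array.
S = np.column_stack([qam_symbols(16, n, rng), qam_symbols(16, n, rng), qam_symbols(4, n, rng)])
print(S.shape, np.unique(S[:, 0]).size, np.unique(S[:, 2]).size)
```

Each column can then be passed through a complex convolutive mixing system in the same way as in the real-valued example.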

According to Figure 5, the proposed BCA approaches achieve better performance than the ICA based approaches. As mentioned earlier, the proposed method does not assume or exploit statistical independence. The only impact of short data length is on the accurate representation of the source box boundaries. The simulation results suggest that shorter data records may not be sufficient to reflect the stochastic independence of the sources, and therefore the compared algorithms require more data samples to achieve the same SIR level as the proposed approach.

Fig. 5. Signal to Interference Ratio as a function of Sample Length. [Plot: Signal to Interference Ratio (dB) versus sample length; curves: BCA ($\bar{J}_{c1}$), BCA ($\bar{J}_{c2,1}$), BCA ($\bar{J}_{c2,2}$), KurtosisMax. of [31], Alg.2 of [32].]

VII. CONCLUSION

In this article, we introduce an algorithmic framework for the convolutive Bounded Component Analysis problem. The utility of the proposed algorithms is mainly twofold:

• The proposed algorithms are capable of separating not only independent sources but also dependent, even correlated sources. The dependence/correlation is allowed to be in both source (or space) and in sample (or time) directions. The proposed framework’s capability in terms of separating space-time correlated sources (as well as independent sources) from their convolutive mixtures favors it as a widely applicable approach under the practical constraint on the boundedness of sources. In fact, the proposed approach can be considered as a more general convolutive approach than ICA with additional dependent source separation capability, under the condition that the sources are bounded and satisfy the domain separability assumption.

• Even though the source samples may be drawn from a stochastic setting where they are mutually independent, especially for short data records, the estimation of sources based on domain separability is expected to be more robust than the estimation based on the independence feature. As illustrated in the previous section, this feature results in superior separation performance, relative to some state of the art Convolutive ICA methods, in the convolutive MIMO equalization problem, and the advantage is more pronounced for shorter packet sizes.

We note that the dimension of the extended vector of sources ($\tilde{s}$) increases with the order of the overall system and with the number of sources. This implies that the proposed convolutive BCA approaches’ performances will depend on the sample length as the order of the convolutive system and/or the number of sources increase. We finally note that for the applications where the sources have tailed distributions, the performances of the proposed convolutive BCA algorithms are likely to suffer, which can be considered as one drawback of the algorithms.

APPENDIX

In this appendix, we provide an essential summary of the

geometric BCA approach introduced in [23]. We first start with

the definitions of two geometric objects used by this approach:

• Principal Hyperellipsoid is the hyperellipsoid whose principal semi-axis directions are determined by the eigenvectors of the covariance matrix and whose principal semi-axis lengths are equal to principal standard deviations, i.e., the square roots of the eigenvalues of the covariance matrix.

• Bounding Hyperrectangle corresponds to the box defined by the Cartesian product of the support sets of the

individual components. This can be also defined as the

minimum volume box containing all samples and aligning

with the coordinate axes.

An example for the case of 3 sources, which permits a 3D picture, is provided in Figure 6. In this figure, a realization of separator output samples and the corresponding bounding hyperrectangle and principal hyperellipsoid are shown.

The separation problem is posed as maximization of the relative sizes of these objects. An example of the optimization setting of this approach is

$$ \text{maximize} \quad \frac{\sqrt{\det(R_z)}}{\prod_{m=1}^{p} R(z_m)}. \tag{46} $$

Note that the numerator of (46) is the (scaled) volume of the principal hyperellipsoid, whereas the denominator is the volume of the bounding hyperrectangle for the output vectors.
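The objective in (46) is straightforward to evaluate from samples. The sketch below uses the sample covariance for $R_z$ and the sample range (maximum minus minimum) for $R(z_m)$, and compares the objective for independent uniform sources, for a scaled permutation of them, and for a generic instantaneous mixture. The matrices and the function name `bca_objective` are illustrative choices, not taken from [23].

```python
import numpy as np

def bca_objective(z):
    """sqrt(det(sample covariance)) divided by the product of per-component sample ranges.

    z: real samples with shape (N, p).
    """
    Rz = np.cov(z, rowvar=False)
    ranges = z.max(axis=0) - z.min(axis=0)
    return np.sqrt(np.linalg.det(Rz)) / np.prod(ranges)

rng = np.random.default_rng(6)
s = rng.uniform(-1.0, 1.0, size=(20000, 3))      # bounded sources filling their box

D = np.diag([2.0, -0.5, 3.0])                    # non-zero diagonal scaling
P = np.eye(3)[[2, 0, 1]]                         # permutation matrix
G_perfect = D @ P
G_mixed = np.array([[1.0, 0.5, 0.2],
                    [0.3, 1.0, 0.4],
                    [0.2, 0.6, 1.0]])            # generic mixing, not of the form DP

J_sources = bca_objective(s)
J_perfect = bca_objective(s @ G_perfect.T)       # outputs z = G s, sample by sample
J_mixed = bca_objective(s @ G_mixed.T)
print(J_sources, J_perfect, J_mixed)
```

In this example the objective is unchanged by the scaled permutation and drops for the generic mixture, in line with the geometric interpretation of (46).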

Fig. 6. Bounding Hyper-rectangle and Principal Hyper-ellipsoid.

The principal hyperellipsoid in the output domain is the image (with respect to the instantaneous overall mapping defined as G) of the principal hyperellipsoid in the source domain. We note that the instantaneous overall mapping causes |det(G)| scaling in the volume of the principal hyperellipsoid. However, the image of the bounding hyperrectangle in the source domain is a hyperparallelepiped which is a subset of the bounding hyperrectangle in the output domain. Hence, the volume scaling between the source and separator output bounding boxes is greater than or equal to |det(G)|. As an important observation, for the latter, the scaling would be |det(G)| if and only if G is a perfect separator matrix. We observe this by simple geometrical reasoning: the bounding box in the source domain is mapped to another hyperrectangle (aligning with coordinate axes) if and only if G can be written as G = DP, where D is a diagonal matrix with non-zero diagonal entries, and P is a permutation matrix. The set of such G matrices is referred to as Perfect Separators. Therefore, the optimization setting given as an example provides Perfect Separators.
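The volume scaling argument can be illustrated numerically. For a source box with unit side lengths (an assumption made here for simplicity), the axis-aligned bounding box of its image under G has side lengths equal to the absolute row sums of G, so its volume is the product of those row sums. The sketch below compares this with |det(G)|; the function names are hypothetical.

```python
import numpy as np

def box_volume_scaling(G):
    """Volume of the axis-aligned bounding box of the image of the unit cube under G."""
    return np.prod(np.abs(G).sum(axis=1))

def is_perfect_separator(G, tol=1e-12):
    """True if G has exactly one non-zero entry in every row and every column (G = DP)."""
    nonzero = np.abs(G) > tol
    return bool(np.all(nonzero.sum(axis=0) == 1) and np.all(nonzero.sum(axis=1) == 1))

D = np.diag([2.0, -0.5, 3.0])
P = np.eye(3)[[2, 0, 1]]
G_perfect = D @ P
G_mixed = np.array([[1.0, 0.5, 0.2],
                    [0.3, 1.0, 0.4],
                    [0.2, 0.6, 1.0]])

for G in (G_perfect, G_mixed):
    # Bounding-box scaling is never smaller than |det(G)|; equality holds for G = DP.
    print(is_perfect_separator(G), box_volume_scaling(G), abs(np.linalg.det(G)))
```

For the scaled permutation the two quantities coincide, while for the generic matrix the bounding box grows by more than |det(G)|, which is what lowers the ratio in (46).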

REFERENCES

[1] P. Comon and C. Jutten, Handbook of Blind Source Separation: Independent Component Analysis and Applications. Academic Press, 2010.

[2] A. Hyvärinen, J. Karhunen, and E. Oja, Independent Component Analysis. John Wiley and Sons Inc., 2001.

[3] P. Comon, “Independent component analysis, A new concept?,” Signal

Processing, vol. 36, pp. 287–314, Apr. 1994.

[4] A. Belouchrani, K. Abed-Meraim, J.-F. Cardoso, and E. Moulines, “A

blind source separation technique using second-order statistics,” IEEE

Trans. on Signal Processing, vol. 45, no. 2, pp. 434–444, Feb. 1997.

[5] M. Matsuoka, M. Ohaya, and M. Kawamoto, “A neural net for blind

separation of nonstationary signals,” Neural Networks, vol. 8, no. 3, pp.

411–419, Mar. 1995.

[6] P. Georgiev, F. Theis, and A. Cichocki, “Sparse component analysis and

blind source separation of underdetermined mixtures,” IEEE Trans. on

Neural Networks, vol. 16, no. 4, pp. 992–996, Jul. 2005.

[7] A. J. van der Veen and A. Paulraj, “An analytical constant modulus

algorithm,” IEEE Trans. on Signal Processing, vol. 44, no. 5, pp. 1136–

1155, May 1996.

[8] P. Jallon, A. Chevreuil, and P. Loubaton, “Separation of digital communication mixtures with the CMA: Case of unknown symbol rates,”

Signal Processing, vol. 90, no. 9, pp. 2633–2647, Sep. 2010.

[9] S. Talwar, M. Viberg, and A. Paulraj, “Blind separation of synchronous

co-channel digital signals using an antenna array. I. algorithms,” IEEE

Trans. on Signal Processing, vol. 44, no. 5, pp. 1184–1197, May 1996.

[10] D.-T. Pham, “Blind separation of instantaneous mixtures of sources based on order statistics,” IEEE Trans. on Signal Processing, vol. 48, pp. 363–375, Feb. 2000.

[11] S. Cruces and I. Duran, “The minimum support criterion for blind source extraction: a limiting case of the strengthened Young’s inequality,” Lecture Notes in Computer Science, pp. 57–64, Sep. 2004.

[12] F. Vrins, M. Verleysen, and C. Jutten, “SWM: A class of convex

contrasts for source separation,” in Proceedings of IEEE ICASSP 2005,

Philadelphia, PA, Mar. 2005, pp. 161–164.

[13] F. Vrins, J. A. Lee, and M. Verleysen, “A minimum-range approach to

blind extraction of bounded sources,” IEEE Trans. on Neural Networks,

vol. 18, no. 3, pp. 809–822, May 2007.

[14] F. Vrins and D. Pham, “Minimum range approach to blind partial

simultaneous separation of bounded sources: Contrast and discriminacy

properties,” Neurocomputing, vol. 70, pp. 1207–1214, Mar. 2007.

[15] S. Vembu, S. Verdu, R. Kennedy, and W. Sethares, “Convex cost

functions in blind equalization,” IEEE Trans. on Signal Processing,

vol. 42, pp. 1952–1960, Aug. 1994.

[16] A. T. Erdogan and C. Kizilkale, “Fast and low complexity blind equalization via subgradient projections,” IEEE Trans. on Signal Processing,

vol. 53, pp. 2513–2524, Jul. 2005.

[17] A. T. Erdogan, “A simple geometric blind source separation method for

bounded magnitude sources,” IEEE Trans. on Signal Processing, vol. 54,

pp. 438–449, Feb. 2006.

[18] A. T. Erdogan, “Globally convergent deflationary instantaneous blind

source separation algorithm for digital communication signals,” IEEE

Trans. on Signal Processing, vol. 55, no. 5, pp. 2182–2192, May 2007.

[19] A. T. Erdogan, “Adaptive algorithm for the blind separation of sources

with finite support,” in Proceedings of EUSIPCO 2008, Lausanne, Aug.

2008.

[20] S. Cruces, “Bounded component analysis of linear mixtures: A criterion

for minimum convex perimeter,” IEEE Trans. on Signal Processing,

vol. 58, no. 4, pp. 2141–2154, Apr. 2010.

[21] P. Aguilera, S. Cruces, I. Duran, A. Sarmiento, and D. Mandic, “Blind

separation of dependent sources with a bounded component analysis

deflationary algorithm,” IEEE Signal Processing Letters, vol. 20, no. 7,

pp. 709–712, Jul. 2013.

[22] A. T. Erdogan, “On the convergence of symmetrically orthogonalized

bounded component analysis algorithms for uncorrelated source separation,” IEEE Trans. on Signal Processing, vol. 60, no. 11, pp. 6058–6063,

Nov. 2012.

[23] A. T. Erdogan, “A family of bounded component analysis algorithms,”

in IEEE International Conference on Acoustics, Speech and Signal

Processing, Kyoto, Mar. 2012, pp. 1881–1884.

[24] H. A. Inan and A. T. Erdogan, “A bounded component analysis approach

for the separation of convolutive mixtures of dependent and independent

sources,” in IEEE International Conference on Acoustics, Speech and

Signal Processing, Vancouver, BC, May 2013, pp. 3223–3227.

[25] Y. Inouye and R.-W. Liu, “A system-theoretic foundation for blind

equalization of an FIR MIMO channel system,” IEEE Trans. on CAS-I:

Fund. Th. and Apps., vol. 49, no. 4, pp. 425–436, Apr. 2002.

[26] T. Mei, J. Xi, F. Yin, A. Mertins, and J. F. Chicharo, “Blind source

separation based on time-domain optimization of a frequency-domain

independence criterion,” IEEE Trans. on Audio, Speech, and Language

Processing, vol. 14, no. 6, pp. 2075–2085, Nov. 2006.

[27] T. Mei, A. Mertins, F. Yin, J. Xi, and J. F. Chicharo, “Blind source

separation for convolutive mixtures based on the joint diagonalization

of power spectral density matrices,” Signal Processing , vol. 88, no. 8,

pp. 1990–2007, Aug. 2008.

[28] D. Garling, Inequalities: A Journey into Linear Analysis. Cambridge

University Press, 2007.

[29] W. Rudin, Real and Complex Analysis. McGraw-Hill Book Co., 1987.

[30] S. Demarta and A. J. McNeil, “The t copula and related copulas,”

International Statistical Review, vol. 73, no. 1, pp. 111–129, Jan. 2005.

[31] C. Simon, P. Loubaton, and C. Jutten, “Separation of a class of

convolutive mixtures: a contrast function approach,” Signal Processing,

vol. 81, no. 4, pp. 883 – 887, 2001.

[32] M. Castella and E. Moreau, “New kurtosis optimization schemes for

MISO equalization,” IEEE Trans. on Signal Processing, vol. 60, no. 3,

pp. 1319–1330, 2012.

[33] P. Jallon, M. Castella, and R. Dubroca, “Matlab toolbox for separation of convolutive mixtures,” Available: http://www-public.int-evry.fr/~castella/toolbox/