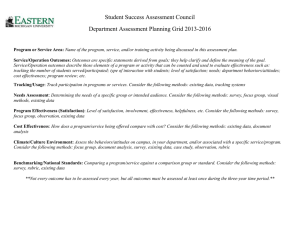

Institutional Effectiveness and Assessment Plan
