Managing the Information Systems Infrastructure

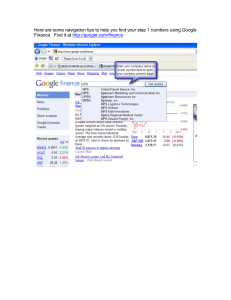
