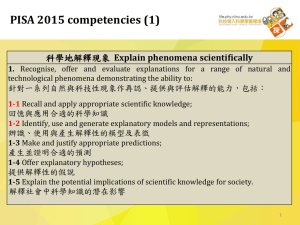

PISA 2015 Draft Science Framework
