Creating a Program to Deepen Family Inquiry at Interactive Science Exhibits

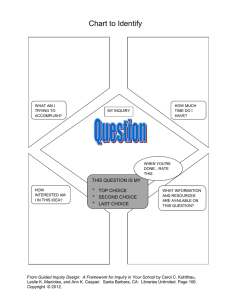

Research Report

Creating a Program to Deepen Family Inquiry at Interactive Science Exhibits

Sue Allen and Joshua P. Gutwill

A common goal of science museums is to support the public in science inquiry by engaging groups of visitors with interactive exhibits. This article summarizes the efforts of a team of researchers and practitioners to extend and deepen such inquiry by explicitly coaching families in the skills of scientific inquiry at interactive exhibits. The first phase of the project, reported here, involved designing a “best case” program that worked for small groups of casual visitors under ideal circumstances, facilitated by an experienced educator in a quiet laboratory near the public floor. The final program, called Inquiry Games, taught visitors to sandwich their spontaneous physical experimentation between two additional phases: asking a question to drive their investigation at the beginning; and interpreting the results of their investigation at the end. Provisional evaluation data suggest that the Inquiry Games improved visitors’ inquiry behavior in several ways and were rated as very enjoyable by them. Encouraged by these indicators, we suggest ways in which this program might be implemented on the open museum floor.

What exactly does it mean for visitors to engage in inquiry?
The term “scientific inquiry” has no single definition, but most researchers, practitioners, and learning theorists include such activities as questioning, observing, predicting, experimenting, explaining, synthesizing or applying ideas, arguing with evidence, and communicating ideas to others.1 Inquiry experiences may help people learn and practice the skills of science, exercise their decision-making capacities, and feel empowered to make sense of the natural world.2 Indeed, inquiry skills are broadly recognized as a key component of scientific literacy.3

Sue Allen (suea@exploratorium.edu) is on leave from the Exploratorium; this work was completed while she served as a Program Officer in the Division of Learning in Formal and Informal Settings at the National Science Foundation. Josh Gutwill (joshuag@exploratorium.edu) is Acting Director of Visitor Research and Evaluation at the Exploratorium in San Francisco.

Science museums are ideal environments for learning and practicing such skills. While playing with exhibits, visitors naturally ask questions, try various experiments, make observations, and discuss their experiences. For decades, science museums have created environments that deliberately support and extend visitors’ inquiry.4 Even in art and history museums, designers and researchers are increasingly attempting to foster an “investigative stance” by helping visitors ask and answer their own questions about the objects and artifacts.5 Some science museums have designed open-ended exhibits specifically to encourage visitor-driven experimentation rather than to teach scientific content. For example, the Science Museum of Minnesota created Experiment Benches: exhibits with a range of experimental options for visitors to test (Sauber 1994).
The goal of the Experiment Benches was to simulate a miniature laboratory in which visitors could explore sound, optics, electricity, and other physical phenomena. More recently, the Exploratorium in San Francisco developed multi-option exhibits to promote Active Prolonged Engagement (APE) behavior in visitors (Humphrey and Gutwill 2005). Studies found that APE exhibits increased visitors’ dwell time and altered their behavior. Visitors asked more questions, seeking an explanation — and they answered more of their own questions without referring to the label — than visitors at more traditional exhibits (Gutwill 2005). Borun et al. (1998) found that making exhibits more open-ended (among other qualities) increased families’ tendency to engage in key inquiry behaviors at exhibits that were associated with learning (asking and answering questions, reading labels, and commenting). Although open-ended exhibit designs such as these support inquiry behaviors, visitors do not always have the expertise and confidence needed to conduct coherent, in-depth investigations to answer their questions. Some skills are challenging even for people with science backgrounds (Allen 1997; Loomis 1996; Randol 2005). One reason for this may be that inquiry is rarely explicit in museum materials. When confronted with an interesting exhibit or object, visitors may have difficulty thinking about the skills they should apply to the process of understanding it. According to Schauble, scientific skills and practices tend to be hidden because: . . . the qualities that objects have — perceptible, static, enduring, and valuable — tend to make them more visible and salient than the practices involved in making science, art or history. Forms of argumentation, inquiry, and expression are difficult to see and think about (2002, 235). 
Consequently, if a museum’s goal is to help visitors engage more deeply in the practices of scientists, it may be particularly important to help them understand, practice, and talk about the intellectual tools that scientists use. On the floor of a science museum, this means helping visitors explicitly learn the skills of inquiry and ways to apply them at science exhibits to investigate natural phenomena more deeply.

To address this need, a team of researchers and practitioners at the Exploratorium created a “crash course” in exhibit-based inquiry for families and other visiting groups. The goal of the course was not to teach any particular scientific content, nor to guide a deep investigation at one particular exhibit (both laudable goals often addressed in science centers), but to help families ask and answer their own questions at novel exhibits they may encounter in the future. Building on the Exploratorium’s APE project, and in collaboration with staff from its Institute for Inquiry, the program was developed by adding a programmatic overlay to existing open-ended APE exhibits. The project was funded by the National Science Foundation and named GIVE (Group Inquiry by Visitors at Exhibits). In this article we describe the program’s design process and structure in detail, and summarize the preliminary findings from a study that assessed its effectiveness.

Curator 52/3 • July 2009

The Design Process for Inquiry Games

The GIVE development team produced and tested Inquiry Games, in which groups of visitors learned simple inquiry skills they could use as they interacted with exhibits.6 The design process, which relied on both a review of the inquiry research literature and a series of iterative formative evaluation studies, was an example of a close interplay between theory-driven research and pragmatic design constraints.
We describe it here in some detail, in the hope that it will illuminate some of the design terrain for museum professionals wishing to create similar programs.

Characteristics of a feasible program

It is well known that most museum-goers visit in multi-generational social groups and follow paths of their own choosing through the range of available exhibits (Diamond 1986; Falk and Dierking 1992; 2000; Falk 2006). For the Inquiry Games to be feasible and genuinely useful in this kind of “free-choice” leisure setting, the activities would need to be:

• appropriate for groups with a broad range of ages, interests, and backgrounds;
• accessible enough to be non-intimidating to visitors without strong science backgrounds;
• simple enough to be remembered without much effort;
• intrinsically enjoyable so they would be used spontaneously, beyond the practice period with a staff educator;
• quickly learnable over a 20-30 minute experience, to fit easily within the timeframe of a typical museum visit; and
• applicable across a very broad range of exhibit types and topics, so that visitors would find them useful during the rest of their visit, no matter which exhibits they chose to use.

This set of properties proved challenging, and required almost six months of pilot testing and revision to achieve simultaneously.

Initial choice of skills

One major design issue was choosing which inquiry skills to include in the Inquiry Games. As a first attempt, the team created a set of six inquiry skills derived from the school-based research literature on inquiry. These six were augmented and adjusted to be compatible with the informal science learning values of curiosity, personalization, diversity of perspectives, and social learning. The six skills originally targeted were: 1.
Exploration (phrased as “playing, messing around”) — This initial phase would be a combination of the traditional scientific inquiry skills of observation and experimentation, but would be unstructured and informal, giving visitors the freedom and confidence to observe and experiment with exhibits in fruitful ways. 2. Question-generation (“What makes it do that?”) — Visitors would then search for a question of interest to them that would be fruitful in the exhibit context. The team particularly wanted to evoke questions that were causal in nature (so they would generate alternative explanations by different participants), yet still be amenable to experimentation, such as “What makes it do that?” 3. Generation of multiple alternative models (“Maybe what’s going on is . . . ”) — Visitors would then be guided to generate possible answers to their previous question, “What makes it do that?” They would be particularly encouraged to give causal explanations — important because these are a central form of scientific understanding (Sandoval and Reiser 2004; Schauble et al. 1991; White and Frederiksen 2000). However, they might also offer analogies, associations, or other kinds of models or principles, in keeping with the broader definitions of explanatory interpretation that are often used in informal learning environments (see Borun, Chambers, and Cleghorn 1996; Callanan and Jipson 2001; Leinhardt and Knutson 2004). 4. Choice of explanatory model, with empirical or theoretical justification (“What we think is going on is . . . ”) — The group would then be encouraged to come to agreement about possible explanations, through discussing and comparing alternative ideas, and referring back to what they discovered while “messing around” with the exhibit. This would be a translation into informal environments of the central skill of argumentation, whereby alternative claims are brought into competition and evidence is used to decide among them (Kuhn 1990; Kuhn and Udell 2003). 
5. Significance (“How this exhibit speaks to me”): Visitors would be encouraged to make connections based on their personal knowledge, experience, or interests. They might be asked questions such as “What do you notice or think about that others might miss?” This skill, which has no direct counterpart in the formal inquiry literature, would encourage visitors to bring their diverse personal backgrounds to the shared experience. This would have the potential to validate the kinds of meanings visitors naturally make in museums, while folding them into a scientific meaning-making process.

6. Metacognitive self-assessment (“But what we still don’t know is . . . ”): Finally, the team planned to coach visitors to think about the limits of what they now know and what they do not. This would add a metacognitive (thinking about thinking) component to the set of inquiry skills, in accordance with a large body of research that has shown metacognition to be a gateway skill for other types of learning (Brown and Campione 1994; Chi et al. 1989; Palincsar and Brown 1984).

The original plan was to introduce these skills sequentially, but show how they can form a loose cycle, with self-assessment leading back to a new round of exploration and question-generation.

A rude awakening: Findings from formative evaluation

When the team conducted a series of small formative evaluation studies,7 we quickly learned that this set of skills was unrealistic in the museum context, and needed to be reworked in a number of ways. First, the set of six skills was far too ambitious in scope. This became clear when visitors were asked to play the game with a new exhibit after the practice session, and were then observed at a distance. The observations showed that when visitors were overwhelmed by the memory task, or found the new skills unpalatable, they revealed this by simply not using them.
Thus we could easily tell when our set of skills was too ambitious. In the early months of pilot-testing, this happened frequently, leading the team to gradually reduce the number of skills in the program from six to two, at which point the majority of visitors were able to go reliably through the steps to the end without assistance. In order to further reduce the memory load, the educator repeatedly named the skills throughout the practice session, and gave every visitor a colorful two-sided card with the skills printed on either side. The cards could be used throughout the session and taken home at the end.

An additional lesson learned from the formative evaluation was that the six skills originally targeted were not equally useful to visitors in the exhibit setting. The exploration skill (1) was so compelling and natural for visitors that it required no emphasis at all; the GIVE team educator merely invited groups to begin with this as a form of orientation to the exhibit. In fact, the team needed to design respectful ways to stop visitors exploring when it was time to engage in other parts of the inquiry process. Skill (2), asking a question, worked well, especially when the educator suggested that groups try to ask a “juicy” question,8 which was defined explicitly for the visitors as a question that nobody in their group knew the answer to, yet was realistically answerable at the exhibit.

By contrast, skills (3) and (4), involving conceptual modeling and argumentation, which many researchers see as the heart of scientific inquiry, seemed particularly challenging for visitors. We suspected there were two main reasons for this: First, in a multi-faceted, open-ended exhibit, it was difficult to encourage multiple visitors to focus on explaining the same feature of the exhibit. They tended to see different things as interesting.
Second, children were reluctant to challenge the views of adults or more experienced group members, especially in the social context of a “fun” museum visit. Our observations seem compatible with previous studies showing that visitors explain phenomena to each other quite frequently (Allen 1997; 2002; Crowley and Callanan 1998), but that such explanations tend to be brief and partial (Crowley et al. 2001) and only rarely lead to well-developed scientific argumentation (Randol 2005).

This difficulty led us to combine skills (3) and (4) to create a less competitive skill that was still related to explanation, but closer to what visitors naturally do: interpreting an observed result. The next skill on the original list (5), looking for personal significance in the exhibit, was eventually dropped because visitors reported that it did not fit easily into the rest of the inquiry sequence. Lastly, metacognitive self-assessment (6) was folded into the structure of the activity itself, rather than being a separate step (this is described more in the next section).

The team briefly explored a different kind of inquiry cycle from the literature: one that focused on “learning by design,” which has proven successful in schools (Kolodner et al. 2003). This approach teaches science by having students try to meet design challenges. However, we decided not to pursue this prototype when we failed to find a way to make it generalize to a broad range of exhibit types. Its effectiveness seemed limited to construction-style exhibits such as building solar cars or designing boats from a range of smaller elements, and the GIVE project’s goal was to create a version of inquiry with broader applicability.
Final choice of skills — Based on several months of iterative formative evaluation, we focused the final Inquiry Games on only two skills: proposing a (preferably “juicy”) question at the beginning to initiate an investigation; and interpreting a result at the end. While this set of skills may not be unique or even optimal as an orientation to inquiry, these two skills did emerge as the strongest candidates among those tried by the team. They served as bookends to visitors’ natural exploration activity; seemed intellectually accessible to diverse groups; and were simple enough to be understood quickly and remembered without undue effort. At the same time, previous research has shown that these skills are not routinely practiced by visitors. According to a detailed study of inquiry activity by families at multi-outcome interactive exhibits at several museums (Randol 2005), the skills of asking a question, describing a result, and drawing a conclusion were all rarer by a factor of 5-10 than the most common actions of manipulating something that can be varied at the exhibit, and observing the result. Furthermore, though many visitors may wonder or draw conclusions privately as they use exhibits, articulating such thoughts publicly is a notable inquiry skill in itself, and serves to move the group’s investigation forward (Quintana et al. 2004). In short, the chosen skills seemed to be accessible, under-utilized, and worthy of emphasis in our program. Choice of pedagogical structure — The other major decision undertaken during the iterative design phase involved choosing appropriate pedagogical structures: ways to teach the two inquiry skills by embedding them in some kind of activity. In general, the team embraced the well-studied approach of “cognitive scaffolding” (Wood 2001) in which a teacher (in our case, an experienced museum educator) uses questions, prompts, and other structured interactions as cognitive supports for learners during an extended investigation. 
In this case, the GIVE team created a “game”9 with rules and a printed card with colorful icons (shown in photo 1), to help the family remember the skills (Davis 1993). After two rounds of practice, the educator’s support “faded,” requiring the group to play the game alone, usually with an adult facilitating as needed.

Photo 1. To make the skills easy to remember, the team created colorful cards that visitors could keep with them and take home afterwards. Shown here are the front and back of the cards for Hands Off (left) and Juicy Question (right). Photo courtesy of the Exploratorium.

Even with the overall scaffolding metaphor, however, there were many choices to be made about the design of the inquiry-based game. For example, within a family, who should keep track of the skills, ensuring that the group would remember to practice them? How could all members participate, without the activity feeling forced or too prescriptive? During the pilot phase, the GIVE team explored various ways to structure the game, including turn-taking, role-playing with props, and spontaneous participation with minimal structure. Role-playing, which has been used successfully in school contexts for learning inquiry (White and Frederiksen 2005), was a particularly appealing solution because it reduces the cognitive demands on any individual in the family. Each person would have to remember only his or her own role (for instance: “My job is to remind the group to brainstorm questions when the time comes”), and the roles could be rotated for even greater learning. During the pilot phase, the team created colorful necklaces with the roles on them, to help visitors remember and recognize each other’s roles.
However, in the end we dropped this strategy for two reasons: the roles made the game too complex for families to learn within the short time available, and it was difficult to design a set of roles flexible enough to suit groups of different sizes. More successful and easier to implement were activities where participants did not have specialized roles, but participated on equal terms, either taking turns under the guidance of a single facilitator, or calling out their ideas as individuals whenever they had something to share.

Based on the pilot-testing, the team settled on two alternative pedagogical structures for the Inquiry Games, one tightly collaborative and the other supportive of individual spontaneity:

A. Juicy Question Game: In this game, the family works together to identify and jointly investigate a single question that is “juicy,” defined for the purposes of the game as one to which nobody knows the answer at the beginning of the investigation, but which can be answered by using the exhibit. The game begins with some initial exploration of the exhibit, after which family members take turns sharing a question they have about the exhibit. They then choose one of the questions to investigate as a group. At the end of the investigation, the family stops to reflect on what they have discovered and share their ideas with each other until they feel finished.

The two components of the term “juicy” were chosen deliberately. First, adults who did not know the answers to the groups’ questions tended to co-investigate with their children, rather than falling into more didactic modes of teaching (as was suggested by Randol 2005).
Secondly, asking an answerable question is a significant skill in any domain of science, because so many questions require resources or explanatory models beyond those available in the phenomena at hand. For example, the question “Why does the ball float in the airstream?” does not meet the two criteria because it cannot be readily answered using the exhibit, unless someone in the group already knows the canonical scientific explanation (in which case it is not juicy for that reason). A juicier question, assuming nobody in the group knows the answer, is: “Do some balls float higher than others in the airstream?” This can be answered to the satisfaction of all members of a group, just by using the exhibit. Other inquiry authors (Elstgeest 2001) have noted that questions taking the form of “what happens if” or “does it ever happen that” can be particularly valuable places to begin productive inquiry, rather than the “why” questions that may spring to mind but often require explanation of the invisible. Interestingly, formative evaluation showed that families who started out investigating less-juicy “why” questions often spontaneously turned them into juicy questions over time, so the educator was able to intervene less as the family moved in an ultimately productive direction. Because the Juicy Question game is tightly collaborative, it required a facilitator. At first, the educator took this role, but because the goal was to get the family to play the Inquiry Game independently, the educator asked one of the adults in the family to gradually take on the facilitator role. The facilitating adult was also given a special card with steps for running the game, to serve as a reminder and support. Each family played the game twice (at two different exhibits) before being asked to do it alone. B. Hands-Off Game — The team was concerned that the tightly structured nature of Juicy Question might make it seem less like a game and more like formal schooling. 
For this reason, we developed an alternative pedagogical structure, which was more spontaneous and less scripted, in keeping with the informal and choice-based environment of the museum floor. The inspiration for this game came from two sources. One was Housen and Yenawine’s Visual Thinking Strategies, in which students practice inquiry skills by examining artworks and sharing evidence for their interpretations in a spontaneous group process that builds over time (Housen 2001; Housen and Yenawine 2001). The other source was the Exploratorium’s Explainer program, which first developed the phrase “hands off” as a way to get the attention of visiting families. Building on these, we created a Hands-Off game in which anyone, at any time, could call out “hands off!” and the rest of the family would need to stop using the exhibit and listen to the caller. At that point, the person could share either a proposal for something they wished to investigate, or a discovery about the exhibit, before calling “hands-on” again. In this way, the game taught visitors to use the same two inquiry skills as Juicy Question, but in a more individual and spontaneous way. No facilitator was needed, and the game was particularly easy to remember. It proved to be especially popular with younger children, since it gave them a way to be heard over their potentially dominating siblings. Photo 2 shows a family playing the game at one of the exhibits.

Photo 2. A family plays Hands Off at one of the exhibits. Photo courtesy of the Exploratorium.

Evaluating the Program’s Success

In this section we highlight some of the preliminary indicators of the program’s effectiveness. Subsequent publications will offer more detailed analyses. After months of iterating our Inquiry Games, we reached a point where we felt they were strong enough to stabilize. In terms of our original goals, we found that: 1.
The games worked for visiting families10 with a broad range of ages, interests, and backgrounds. We set a lower age limit of eight for family members, but that was partly because the project’s study requirements included an interview phase, and younger children struggled to answer the interview questions. It seemed possible to play the games with children as young as six.

2. The games were simple enough to be remembered without much effort, especially with the aid of summary cards that families took home with them.

3. The games could be learned within a realistic timeframe. In 20-30 minutes, most families were able to learn the games and investigate two exhibits satisfactorily.

4. Provisional findings from a study of 200 eligible families recruited from the public floor11 suggest that families who were asked to play the games at a new exhibit showed enhanced inquiry behaviors compared to families in a control group who had not learned the games. For example, they appeared to spend longer; engaged in one or both inquiry skills (proposing a question and interpreting a result) more often; and conducted more investigations that built on each other in a coherent way. The detailed results of these comparisons are still being carefully confirmed and will be reported subsequently, but early patterns suggest that both games enhanced visitors’ inquiry, and that the greatest improvements were exhibited by families who learned Juicy Question in particular.

5. When asked what aspects of the games they had most enjoyed, visitors typically mentioned the support for collaboration among their group members, and support for focusing and thinking more deeply about an exhibit. For example, a parent talking about Juicy Question said, “It made us stop and think together, so we actually had to think about what we were doing and make sure we did learn something from it.
So it was fun and educational as well.” Similarly, a typical comment about the Hands Off game was: “Everybody had a chance to take a turn with their ideas.” Least enjoyable aspects included the tendency for the games to feel rigid, and the difficulty of getting everyone to maintain the game structure while physically interacting with an exhibit. The most commonly reported downside to Juicy Question was the difficulty of deciding on a question to investigate, while players of Hands Off most commonly reported frustration waiting for a turn when someone else had called “hands off.”

6. Overall, both games were intrinsically enjoyable. On a scale from 1 to 5, where 1 = never and 5 = always, adults and children rated both games midway between 4 and 5 when rating “I had fun” and “I thought it was interesting.” In follow-up phone interviews12 conducted with family members 3–4 weeks after their visit, approximately one quarter said they had spontaneously used the games at other exhibits after leaving the research lab, even though they had not been asked to do so at the time. Both adults and children named specific exhibits where they had used the games, in topic areas as diverse as electric circuits, reflexes, bubbles, lenses, sound localization, musical scales, and probability.

Conclusions and Next Steps

Summary of design phase

The design phase of the GIVE project showed that it was possible to create an enjoyable “crash course” in scientific inquiry that could be learned within the realistic timeframe of a casual museum visit and applied to open-ended exhibits on any topic. Early evaluation data suggest that visitors enjoyed playing the specific Inquiry Games developed in this project, and that they engaged in more of the targeted inquiry behaviors when playing the games on their own with a new exhibit.

Photo 3.
The GIVE research laboratory, a quiet area adjacent to the museum floor, where visitors’ conversations and behaviors could be videotaped with high fidelity. Photo courtesy of the Exploratorium.

Trade-offs in design

One insight that emerged over the course of the design phase was that teaching the processes of inquiry was a significantly more ambitious goal than providing an inquiry experience. The focus of this project was on the teaching aspect: to give visitors a set of skills they could use confidently and successfully in a variety of future settings, without the presence of a staff member. If the goal had been to facilitate a single inquiry-based learning experience, there would have been less need to help families practice and remember the skills; the available time could have been used to teach more inquiry skills beyond the two chosen in this project. The focus on building skills for flexible future use also led the team to avoid teaching any scientific principles embodied in the exhibits. Such a focus would not have been transferable to new exhibits in the absence of an experienced staff educator. Thus, the GIVE team’s commitment to teaching generalizable inquiry skills was one particular choice within a design space of trade-offs.

Plans to use the program more broadly

Because GIVE was primarily an early-stage research project to identify a set of skills and practices for deepening group inquiry in a “best case” scenario, we controlled many aspects of the museum environment. For example, we had an extremely skilled educator work with the visitors, and we isolated them from the noise and distractions of the museum floor by conducting the program in a laboratory space, as shown in photo 3.
With the Inquiry Games fully developed and showing early indicators of success in that limited context, it seems worthwhile to briefly consider how they could be taken to the next step of programmatic implementation on the museum floor. Implementation on the museum floor — To bring Inquiry Games to museum visitors as a regular program, we anticipate that the following issues might particularly need to be addressed. First: The program was designed for groups sized 3–5, so for larger groups it might be awkward for everyone to gather around an exhibit and participate fully. Second: Small groups are economically costly to work with, if the facilitators are paid staff. Third: The levels of enjoyment by families may depend on the skill level of the educator. We have not yet explored the effects of having the program offered by a less experienced staff facilitator. Fourth: The program may be harder to implement in the chaotic environment of a public museum floor. Currently we are working with the leaders of the Exploratorium’s Explainer Program to explore solutions to these challenges. For example, we hope to pilot-test the games with Explainers working as trained facilitators who can spontaneously offer to show the games to small groups of visitors during their visit. In their standard demonstrations with visitors, Explainers already choose exhibits that can be readily controlled (for instance: with key moving parts that can be held). This approach might be adapted so that a visiting group could play the game while the Explainer interrupts the action at appropriate times to narrate to onlookers the rules of the game and to amplify key aspects of the conversation on the noisy public floor. School field trips — An obvious next step for the Inquiry Games would be to try them with school field trips. 
The structured learning culture of schools is often perceived to be at odds with the informal learning context of museums, making it difficult to design educational field trips based on free-choice learning practices (Cox-Petersen et al. 2003; Griffin 2004; Griffin and Symington 1997; Mortensen and Smart 2007; Parsons and Breise 2000; Price and Hein 1991). However, the Inquiry Games have the potential to ameliorate this problem by teaching students important scientific thinking skills while supporting their self-driven exploration of natural phenomena. The GIVE project team is currently studying field trip groups using the Inquiry Games in the lab setting, with chaperones acting as facilitators.

Living collections — In theory, the Inquiry Games are sufficiently general to be used in any informal science learning setting. However, because the games were designed for use in an interactive science museum, they are well suited to exhibits that support a high degree of physical manipulation. How could families propose, implement, and reflect on experiments with living systems, where opportunities for direct manipulation are few? We hypothesize that in such environments it may be more appropriate to adjust the specific skills targeted by the games, to emphasize observation rather than experimentation. The Visual Thinking Strategies framework (Housen 2001) may be more suitable in such settings, since it invites participants to share their interpretations of what they are observing, and to identify evidence for such interpretations. We found this technique did not work well in the interactive science museum precisely because the dynamic and interactive nature of the exhibits made it difficult for groups to all observe the same thing at the same time. Living systems with slower timeframes of change may be more appropriate venues for this framework.
Opportunities beyond the museum — During follow-up interviews, a small fraction of families (about 15 percent) indicated that they had spontaneously used some aspect of the Inquiry Games in their lives beyond their visit. This figure could presumably be increased with the addition of a Web site where visitors could post their investigations — including the framing questions and interpretations of results — for others to see. Such a site might also serve as an entry point into online citizen science communities where members of the public participate in scientific research activities. The impact could be further extended by creating an online version of the Inquiry Games, along with video showing sample training sessions and investigations, for use by museum educators, teachers, chaperones, home-schoolers, and other interested groups.

Acknowledgments

The authors gratefully acknowledge the creative and analytical contributions of all members of the GIVE team and its advisors, and the comments of four anonymous reviewers. This material is based upon work supported by the National Science Foundation under Grant number 0411826, and later work supported by the National Science Foundation while the first author was working at the Foundation. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Notes

1. See Abd-El-Khalick et al. 2004; Allen 1997; Ash 1999; Bybee 2000; Chinn and Malhotra 2002; Hatano 1986; Minstrell 1989; Minstrell and van Zee 2000; White and Frederiksen 2000.
2. See Dyasi 1999; Edelson, Gordin, and Pea 1999; Gutwill 2008; Suchman 1962.
3. See Bransford, Brown, and Cocking 2003; Bruer 1994; Gitomer and Duschl 1995; National Research Council 1996; 2000; Olson and Loucks-Horsley 2000; Rankin, Ash, and Kluger-Bell 1999; White 1993.
4. See Ansbacher 1999; Hein 1998; Oppenheimer 1968; Oppenheimer 1972; Sauber 1994.
5.
See Bain and Ellenbogen 2002; Hapgood and Palincsar 2002; Housen 2001; Davis 1993.
6. For more details about the project, the program and the study results, including videos of visitors learning and using the Inquiry Games, see the GIVE Web site: www.exploratorium.edu/partner/visitor_research/give/.
7. The findings from these studies were used to inform many aspects of the development of the Inquiry Games, but our design insights are probable rather than statistically verified. The final version was, however, tested against a control condition with an experimental design whose preliminary results are reported here.
8. This use of the word “juicy” was not a technical meaning derived from the research literature. Rather, it was a term familiar from everyday life, intended to imply that this kind of question was likely to lead to an investigation that was satisfying, enjoyable, and full of possibilities. The term was coined by team member Suzy Loper.
9. The inquiry-based activities were game-like in only some respects (collaborative, engaging, rule-based, involving turn-taking), and were not exciting activities in which one person emerges as a winner. However, referring to the activities as “games” seemed particularly effective in helping children understand that the exit interview questions were not focused on the exhibit but on the strategies they had learned.
10. While we refer to them as “families,” there was no strict requirement for their relationships to each other. We simply recruited groups of visitors who were not members, with at least one adult, at least one child aged 8–13, and no children younger than 8.
11. Of the families who were eligible to participate, 46 percent agreed. We compared the behavior of families playing the game to that of control groups who did not learn the games. Families were randomly assigned to be in different study conditions.
From a research perspective this allowed us to assess the effects of the games, albeit for only half of the families eligible to participate. From a programmatic perspective we would not expect this participation rate to predict families’ willingness to engage in such a program more generally, because this was an extended research study that took approximately one hour to complete and included incentives such as small gifts and exclusive access to several exhibits.
12. Members of the GIVE team attempted to conduct follow-up phone interviews with one adult and one child in each of the 200 families who participated in the study. They were successful in reaching adults from 76 percent of the families and contacting children from 50 percent of the families; this sample was not significantly different from those not reached, in terms of age, gender, prior knowledge, or time spent with the exhibits.

References

Abd-El-Khalick, F., S. BouJaoude, R. Duschl, N. G. Lederman, R. Mamlok-Naaman, A. Hofstein, M. Niaz, D. Treagust, and Hsiao-lin Tuan. 2004. Inquiry in science education: International perspectives. Science Education 88 (3): 397–419.
Allen, S. 1997. Using scientific inquiry activities in exhibit explanations. (Informal Science Education Special Issue.) Science Education 81 (6): 715–734.
______. 2002. Looking for learning in visitor talk: A methodological exploration. In Learning Conversations in Museums, G. Leinhardt, K. Crowley and K. Knutson, eds. Mahwah, NJ: Lawrence Erlbaum Associates.
Ansbacher, T. 1999. Experience, inquiry and making meaning. Exhibitionist 18 (2): 22–26.
Ash, D. 1999. The process skills of inquiry. In Inquiry: Thoughts, Views and Strategies for the K-5 Classroom. Washington, DC: National Science Foundation.
Bain, R., and K. Ellenbogen. 2002. Placing objects within disciplinary perspectives: Examples from history and science. In Perspectives on Object-centered Learning in Museums, S. G. Paris, ed.
Mahwah, NJ: Lawrence Erlbaum Associates.
Borun, M., M. Chambers, and A. Cleghorn. 1996. Families are learning in science museums. Curator: The Museum Journal 39 (June): 123–138.
Borun, M., J. I. Dritsas, N. E. Johnson, K. F. Peter, K. Fadigan, A. Jangaard, E. Stroup, and A. Wenger. 1998. Family Learning in Museums: The PISEC Perspective. Association of Science-Technology Centers.
Bransford, J., A. Brown, and R. R. Cocking, eds. 2003. How People Learn: Brain, Mind, Experience and School. Washington, DC: National Academy Press.
Brown, A., and J. Campione. 1994. Guided discovery in a community of learners. In Classroom Lessons: Integrating Cognitive Theory and Classroom Practice, K. McGilly, ed. Cambridge, MA: M.I.T. Press.
Bruer, J. T. 1994. Schools for Thought: A Science of Learning in the Classroom. Cambridge, MA: M.I.T. Press.
Bybee, R. W. 2000. Teaching science as inquiry. In Inquiring into Inquiry Learning and Teaching in Science, J. Minstrell and E. H. van Zee, eds. Washington, DC: American Association for the Advancement of Science.
Callanan, M. A., and J. L. Jipson. 2001. Explanatory conversations and young children’s developing scientific literacy. In Designing for Science: Implications from Everyday, Classroom, and Professional Science, K. Crowley, C. D. Schunn and T. Okada, eds. Mahwah, NJ: Lawrence Erlbaum Associates.
Chi, M., M. Bassok, M. Lewis, P. Reimann, and R. Glaser. 1989. Self-explanations: How students study and use examples in learning to solve problems. Cognitive Science 13: 145–182.
Chinn, C. A., and B. A. Malhotra. 2002. Epistemologically authentic inquiry in schools: A theoretical framework for evaluating inquiry tasks. Science Education 86 (2): 175–218.
Crowley, K., and M. Callanan. 1998. Describing and supporting collaborative scientific thinking in parent-child interactions. Journal of Museum Education 23 (1): 12–17.
Crowley, K., M. Callanan, J. Jipson, J. Galco, K. Topping, and J. Shrager. 2001.
Shared scientific thinking in everyday parent-child activity. Science Education 85 (6): 712–732.
Davis, J. 1993. Museum games. Teaching Thinking and Problem Solving 15 (2): 1–6.
Diamond, J. 1986. The behavior of family groups in science museums. Curator: The Museum Journal 29 (2): 139–154.
Dyasi, H. 1999. What children gain by learning through inquiry. In Inquiry: Thoughts, Views and Strategies for the K-5 Classroom, L. Rankin, ed. Arlington: National Science Foundation.
Edelson, D. C., D. N. Gordin, and R. D. Pea. 1999. Addressing the challenges of inquiry-based learning through technology and curriculum design. Journal of the Learning Sciences 8 (3): 391–450.
Elstgeest, J. 2001. The right question at the right time. In Primary Science: Taking the Plunge, W. Harlen, ed. Portsmouth, NH: Heinemann.
Falk, J. H. 2006. An identity-centered approach to understanding museum learning. Curator: The Museum Journal 49 (2): 151–166.
Falk, J., and L. Dierking. 1992. The Museum Experience. Washington, DC: Whalesback Books.
______. 2000. Learning from Museums: Visitor Experiences and the Making of Meaning. New York: AltaMira Press.
Gitomer, D. H., and R. Duschl. 1995. Moving towards a portfolio culture in science education. In Learning Science in the Schools, S. M. Glynn and R. Duit, eds. Mahwah, NJ: Lawrence Erlbaum Associates.
Gutwill, J. P. 2005. Observing APE. In Fostering Active Prolonged Engagement: The Art of Creating APE Exhibits, T. Humphrey and J. Gutwill, eds. San Francisco: Left Coast Press.
______. 2008. Challenging a common assumption of hands-on exhibits: How counterintuitive phenomena can undermine open-endedness. Journal of Museum Education 33 (2): 187–198.
Hapgood, S., and A. S. Palincsar. 2002. Fostering an investigative stance: Using text to mediate inquiry with museum objects. In Perspectives on Object-centered Learning in Museums, S. G. Paris, ed. Mahwah, NJ: Lawrence Erlbaum Associates.
Hatano, G. 1986. Enhancing motivation for comprehension in science and mathematics through peer interaction. Paper read at Annual Meeting of the American Educational Researchers’ Association, San Francisco.
Hein, G. E. 1998. Learning in the Museum, Museum Meanings. New York: Routledge.
Housen, A. C. 2001. Aesthetic thought, critical thinking and transfer. Arts and Learning Research Journal 18 (1): 99–131.
Housen, A. C., and P. Yenawine. 2001. Basic VTS at a Glance. New York: Visual Understanding in Education.
Humphrey, T., and J. P. Gutwill, eds. 2005. Fostering Active Prolonged Engagement: The Art of Creating APE Exhibits. Walnut Creek: Left Coast Press.
Kolodner, J. L., P. J. Camp, D. Crismond, B. Fasse, J. Gray, J. Holbrook, S. Puntambekar, and M. Ryan. 2003. Problem-based learning meets case-based reasoning in the middle-school science classroom: Putting learning by design into practice. Journal of the Learning Sciences 12 (4): 495–547.
Kuhn, D. 1990. What constitutes scientific thinking? Contemporary Psychology 35 (1).
Kuhn, D., and W. Udell. 2003. The development of argument skills. Child Development 75 (5): 1245–1260.
Leinhardt, G., and K. Knutson. 2004. Listening in on Museum Conversations. Lanham, MD: AltaMira Press.
Loomis, R. J. 1996. Learning in museums: Motivation, control and meaningfulness. In Study Series, Committee for Education and Cultural Action (CECA), N. Gesché, ed. Paris: ICOM.
Minstrell, J. 1989. Teaching science for understanding. In Toward the Thinking Curriculum: Current Cognitive Research (1989 Yearbook of the Association for Supervision and Curriculum Development), L. Resnick and L. Klopfer, eds. Alexandria, VA: ASCD.
Minstrell, J., and E. van Zee, eds. 2000. Inquiring into Inquiry Learning and Teaching in Science. Washington, DC: American Association for the Advancement of Science.
National Research Council. 1996. National Science Education Standards. Washington, DC: National Academy Press.
______.
2000. Inquiry and the National Science Education Standards: A Guide for Teaching and Learning, S. Olson and S. Loucks-Horsley, eds. Washington, DC: National Academy Press.
Olson, S., and S. Loucks-Horsley, eds. 2000. Inquiry and the National Science Education Standards: A Guide for Teaching and Learning. Washington, DC: National Academy Press.
Oppenheimer, F. 1968. A rationale for a science museum. Curator: The Museum Journal (Nov.).
______. 1972. The Exploratorium: A playful museum combines perception and art in science education. American Journal of Physics 40 (July): 978–984.
Palincsar, A., and A. Brown. 1984. Reciprocal teaching of comprehension-fostering and comprehension-monitoring activities. Cognition and Instruction 1: 117–175.
Quintana, C., B. J. Reiser, E. A. Davis, J. Krajcik, E. Fretz, R. Golan Duncan, E. Kyza, D. Edelson, and E. Soloway. 2004. A scaffolding design framework for software to support science inquiry. Journal of the Learning Sciences 13 (3): 337–386.
Randol, S. 2005. The nature of inquiry in science centers: Describing and assessing inquiry at exhibits. Doctoral dissertation, University of California, Berkeley.
Rankin, L., D. Ash, and B. Kluger-Bell, eds. 1999. Inquiry: Thoughts, Views and Strategies for the K-5 Classroom. Vol. 2: Foundations: A Monograph for Professionals in Science, Mathematics, and Technology Education. Arlington: National Science Foundation.
Sandoval, W. A., and B. J. Reiser. 2004. Explanation-driven inquiry: Integrating conceptual and epistemic scaffolds for scientific inquiry. Science Education 88 (3): 345–372.
Sauber, C. M., ed. 1994. Experiment Bench: A Workbook for Building Experimental Physics Exhibits. St. Paul: Science Museum of Minnesota.
Schauble, L. 2002. Cloaking objects in epistemological practices. In Perspectives on Object-centered Learning in Museums, S. G. Paris, ed. Mahwah, NJ: Lawrence Erlbaum Associates.
Schauble, L., R. Glaser, K. Raghavan, and M. Reiner. 1991.
Causal models and experimentation strategies in scientific reasoning. The Journal of the Learning Sciences 1 (2): 201–238.
Suchman, J. R. 1962. The Elementary School Training Program in Scientific Inquiry. Urbana: University of Illinois.
White, B. 1993. Intermediate causal models: A missing link for successful science education? In Advances in Instructional Psychology, R. Glaser, ed. Hillsdale, NJ: Lawrence Erlbaum Associates.
White, B., and J. Frederiksen. 2000. Metacognitive facilitation: An approach to making scientific inquiry accessible to all. In Inquiring into Inquiry Learning and Teaching in Science, J. Minstrell and E. H. van Zee, eds. Washington, DC: American Association for the Advancement of Science.
______. 2005. A theoretical framework and approach for fostering metacognitive development. Educational Psychologist 40 (4): 211–223.
Wood, D. 2001. Scaffolding, contingent tutoring and computer-supported learning. International Journal of Artificial Intelligence in Education 12: 280–292.