

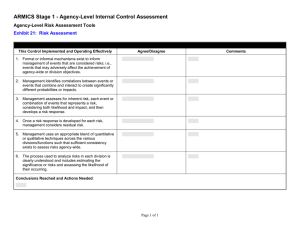

TERMS OF REFERENCE

White Paper on Measuring International NGO Agency-Level Results

April 2015

I. Introduction & Background

International NGOs have been increasingly interested in creating organizational level metrics and results that measure and evaluate more than the sum of their individual projects. Organizations have approached this in a variety of different ways: some are limiting their efforts to capture reliable, accurate information on outputs and numbers of people reached, while others are striving to collect outcome-level data. Some have developed sets of standard indicators while others have opted to create indicator “buckets” to give field programs more flexibility in determining appropriate measures of success. Various agencies are creating public reports for communications purposes, internal reports as management tools, and dashboards, websites and databases. Other agencies have taken an entirely different approach, opting to learn about organizational impact through tools such as meta-evaluations and other types of program and operational reviews, or even choosing against agency-level measurement and focusing on project-level impact.

Since 2012, InterAction’s Evaluation and Program Effectiveness Working Group (EPEWG) has been hosting sessions on the subject of agency-level measurement with the aim of sharing the advantages and disadvantages of collecting data and evaluating organizational impact in this way. A smaller group of organizations has been meeting regularly to exchange experiences and to explore how to share these lessons more proactively within the wider development and humanitarian community. To date, this has primarily taken the form of presentations to the EPEWG, or in venues such as InterAction’s Forum or the American Evaluation Association conference.

This smaller group consists mainly of U.S.-based international NGOs working in both relief and development. Each agency is typically represented by its lead Monitoring and Evaluation staff person, or by another relevant point person who may be managing or exploring the construction of some type of system on behalf of the agency. The majority of participants have first-hand experience both with navigating the decision-making process within the agency, at the executive and field levels, as to what type of system to establish, and with the hands-on management of attempting to build and use one. Most members of the group regularly struggle with the pros and cons of the various forms of these systems and have been seeking out peer learning for the last several years, both for internal advocacy purposes and for operational, programmatic and management tools and experiences. Over the last year, it has become clear that there continues to be an acute need for further learning within this group, and within the wider landscape of humanitarian and development practitioners.

InterAction’s website has inventoried the various forums where international NGO experiences with agency-level results measurement systems have been presented, and in 2014 the group produced a “Key Framing Points” document that briefly summarizes common reflection points to consider when establishing an agency-level results system. The group also began a brief inventory, in table form, of various agencies’ experiences.

Due to the continued and increasingly high demand for more information, collaboration, and learning from wider InterAction members, this small group would now like to capture what has been learned in a more systematic, comprehensive way. It has decided to commission a white paper to further explore this issue, objectively document a wide range of agency experiences, and explore pressing questions about whether investments in these types of systems are worthwhile, and if so, for what and under which circumstances.

The group strives to produce a white paper that can collectively represent a wide range of InterAction members, and speak to the growing inquiry and work among international NGOs to create agency-level results measurement systems. To that end, the funding for this consultancy is based on a committed pool of contributions from 10 prominent international NGOs, and will be managed by a representative advisory group with co-chairs, in conjunction with InterAction.

II. Objective(s) of the study

The goal of the study is to examine and learn from experiences in building agency-level results measurement systems in order to inform senior leaders, executives, and the donor community about the practicality, implications, constraints, and potential of such initiatives. The study should build on and complement previous work done by international NGO measurement professionals (including the “Key Framing Points” document distributed at the InterAction Forum 2014 workshop).

Key objectives of the study include:

1. Capture, describe and inventory a wide range of approaches to measuring agency-level results, complemented by some in-depth case examples.
2. Analyze international NGO experiences for key lessons learned: patterns of successes and challenges in selecting, building, maintaining, and using these systems.
3. Based on the analysis of a variety of international NGO experiences, develop findings regarding whether, and/or under which circumstances, investments in agency-level measurement systems are worthwhile.
4. Where appropriate, allow space to objectively document advocacy statements from study participants about the value and return on investment of these systems.

Although the primary subject area of this study is agency-level systems measuring program-level impact, it may also be within the scope of this study to note agencies that have widened the definition of agency-level results to include other operational areas, such as management, human resources, finance and administration, revenue, etc., and to capture how they have structured these issues.

The InterAction EPEWG advisory group for this white paper is interested in a critical look at both the premise and practice of agency-level results measurement. The Consultant(s) should design the research in a manner that allows them to critically examine the premise that agency-level results measurement systems are desirable and can work as desired, as well as the follow-on questions: if so, in what circumstances and how; if not, why not.

The Consultant(s) should consider organizing focus group discussions or similar group discussion exercises for heads of monitoring and evaluation at organizations participating in the study to debate and jointly reflect on the questions asked in this research. Experiences and opinions on agency-level measurement systems frequently differ. The debates among practitioners grappling with the premise and practice of agency-level measurement may be best understood when heads of evaluation debate and react to one another’s experiences and conclusions.

Key questions to be addressed in this study include:

- Motivations: What are the drivers prompting organizations to establish such systems, and what are organizations’ expectations for what these systems can accomplish?
  - How do and should agencies decide whether or not to create an agency-level measurement system? What criteria do they, or should they, consider?
  - To what extent do expectations about what agency-level measurement systems can accomplish align or differ within organizations?
  - In what ways can the people charged with creating agency-level measurement systems manage expectations of various stakeholders, from senior executives to field staff?
- Assumptions: What assumptions are organizations making when they establish agency-level measurement systems? (See the “Key Framing Points” note produced for the InterAction Forum 2014 workshop.)
  - What are the minimum M&E capacities that need to be in place before an NGO should consider agency-level measurement?
  - What capacities are necessary (technical and financial) to develop adequate IT systems, and to encourage their adoption/use?
- Systems landscape: What is the current state of affairs of the U.S. international NGO community, related to measuring agency-level results?
  - Description of various systems that have been designed/implemented by international NGOs in order to illustrate what such a system can accomplish and what is involved in building such a system: e.g. common indicators; agency-level measurement systems with a centralized database; beneficiary-based counting systems; meta-evaluation-based systems that aggregate/analyze results across a sample of programs, etc.
  - Of the spectrum of systems that measure agency-level results, what is the nature of those which also include non-program, operational issues such as HR and finance, and how do those systems differ from those only focusing on program results?
  - What are some alternatives to, or implications for, not creating an agency-level measurement system?
- Use: How, if at all, is the data generated by agency-level measurement systems being used?
  - Is there a way to devise a measurement system that produces data that is meaningful to country staff and program managers as well as to senior management and boards?
  - Whose data needs should drive the system design choices?
  - Who owns the system? Who has primary responsibility for using the data and the results?
  - How does the aggregation of data (at the national, regional or global level) typically play into systems measuring agency-level results? Under what conditions is aggregation of data feasible and meaningful?
  - What is the level of satisfaction among the end users of the data, and why?
- Challenges/Lessons learned
  - What risks should stakeholders be aware of when establishing agency-level measurement systems?
- Costs and benefits (financial, labor time, other)
  - Which systems have proven to be worthwhile and reasonable in view of the actual as well as opportunity costs involved, as deemed by the agencies themselves and/or by the external consultant?

The study should review a wide range of organizations, and should take into account a range of factors that make a difference in whether and how agency-wide measurement can work. These include:

- Clarity of organizational purpose
- Single sector or multi-sector programming
- Funding base of organization (small individual donor base vs. high wealth individual donors vs. institutional funding base)
- Degree of centralization/de-centralization of the organization
- Mode of operating – direct service delivery or working primarily through local partners
- Leadership style, organizational culture and structure
- Behaviors and incentives for the end utility of the system
- Scale of operations

III. Proposed Methodology

This group has put some thought into the methodology needed to conduct the research for this white paper.
However, we request that all parties interested in this Terms of Reference consider the key questions and propose the methodology that best fits the needs of this study. Methodologies proposed must include strong evidence of mixed-methods and the ability to triangulate findings, an indication of a proposed sampling approach, plus an adherence to international evaluation standards and ethics. The advisory group for this white paper needs to ensure that the study is conducted with clear attentiveness to the needs of the executive-level audience, and thus employs appropriate methods to do so.

Methods may include:

- Interviews (individual and focus groups) with staff from a sample of organizations that have chosen to adopt agency-level measurement systems, as well as from those organizations that have chosen alternatives. A variety of staff from within organizations should be interviewed, potentially including: heads of evaluation, senior managers and CEO/board members, country level staff, and program managers. Also interview evaluation consultants/information management systems experts who have supported international NGOs to research, design and implement these systems. Interviews may also include donor agencies and international NGO fundraising teams, in order to better understand the perceived need to build these systems.
- Document review: Review of InterAction’s EPEWG outputs (see InterAction’s website); review of international NGO documents related to current systems in place; broader literature on organization level evaluation, etc.
- Survey international NGOs as broadly as possible with a questionnaire, to be able to better inventory which agencies do or do not have these types of systems, and to describe somewhat the type of system.

For the broad-based survey, as well as for the interviews, the advisory group will assist in identifying key stakeholders.

In addition to these potential methodologies, it could be helpful for the approach and deliverables to employ data visualization tools to convey key take-aways. Examples might include understanding the organizational evolution/development that drives or develops vis-a-vis agency-level measurement or using visual tools to represent different models for agency-level results.

IV. Deliverables

1. Presentation of initial findings to the EPEWG white paper advisory group.
2. Presentation of findings at an open workshop of InterAction members, responding to questions and facilitating discussion, once the papers are complete.
3. Presentation to InterAction member CEOs at InterAction’s CEO Retreat or annual conference, once the papers are complete.
4. Short summary publication, 2-3½ pages, which focuses on the main points of the long paper and is geared towards senior leadership and Boards of Directors.
5. Longer, full white paper, approximately 15-20 pages. This should be more in-depth and technical for the measurement community with evidence of recommendations included.
6. Both the short and long versions of the paper should include a feedback and revision period, to validate results and strengthen the study.

V. Timeline

To date, the advisory group for this white paper has indicated a high degree of flexibility with the timeline for this assignment. All deliverables must be completed and approved by the end of 2015, though there is a pressing need to produce this report for external audiences earlier than that. The preferred deadline for all deliverables would be October 1, 2015, though the date and detailed workplan items may be proposed by the consultant.

VI. Evaluator Qualifications and Application Procedures

The InterAction EPEWG advisory group for this white paper is looking for experienced consultants or consulting teams with extensive experience conducting similar types of studies and white papers, preferably within the evaluation and program effectiveness sectors, and among humanitarian and international non-government agencies. Consultants must be able to demonstrate experience with evaluation methodologies, primary data collection and analysis, both qualitative and quantitative, as well as facilitation skills with executive-level US-based staff. All work must be conducted in English, and no travel is expected to be required for this consultancy, beyond presentations in the Washington DC area.

The InterAction EPEWG is seeking evaluators who propose innovative, mixed methods in their evaluation design. Likewise, we are seeking evaluators with particular experience in writing advocacy pieces for executive-level attention.

A full proposal, including a detailed, proposed methodology and comments on this TOR, plus CVs of the consultant/consultant team, and a cost proposal, should be submitted by May 15th, 2015 to Laia Griñó, Senior Manager of Transparency, Accountability and Results at lgrino@interaction.org. Questions on the TOR may be directed to Laia Griñó, via email, until Friday, May 1st, 2015. Note that questions will only be responded to on an individual basis; questions will not be published publicly.