Optimal Control Systems - Min

Optimal Control Systems

Min-Max Optimal Control

Harry G. Kwatny

Department of Mechanical Engineering & Mechanics

Drexel University

Outline

Min-Max Control
- Problem Definition
- HJB Equation
- Example

Game Theory
- Differential Games
- Isaacs' Equations

Examples
- Heating Tank

Problem Definition: Problem Setup

Consider the control system

$$\dot{x} = f(x, u, w)$$

where

- $x \in \mathbb{R}^n$ is the state
- $u \in U \subset \mathbb{R}^m$ is the control
- $w \in W \subset \mathbb{R}^q$ is an external disturbance

We seek a control $u$ that minimizes the cost $J$ for the worst possible disturbance $w$, where

$$J = \ell(x(T)) + \int_0^T L(x(t), u(t), w(t))\,dt$$

HJB Equation

We seek both $u^*(t)$ and $w^*(t)$ such that

$$\{u^*, w^*\} = \arg \min_{u \in U} \max_{w \in W} J(u, w)$$

Define the cost to go

$$V(x, t) = \min_{u \in U} \max_{w \in W} \left[ \ell(x(T)) + \int_t^T L(x(\tau), u(\tau), w(\tau))\,d\tau \right]$$

The principle of optimality leads to

$$\frac{\partial V(x, t)}{\partial t} + H^*\!\left(x, \frac{\partial V(x, t)}{\partial x}\right) = 0$$

where

$$H^*(x, \lambda) = \min_{u \in U} \max_{w \in W} \left\{ L(x, u, w) + \lambda^T f(x, u, w) \right\}$$

Example

Consider the linear dynamics

$$\dot{x} = Ax + Bu + Ew, \qquad y = Cx$$

and cost

$$J(u, w) = \lim_{T \to \infty} \frac{1}{2T} \int_0^T \left[ y^T(t)y(t) + \rho\,u^T(t)u(t) - \gamma^2 w^T(t)w(t) \right] dt$$

Suppose

- $(A, B)$ and $(A, E)$ are stabilizable
- $(A, C)$ is detectable

Then

$$u(t) = -\frac{1}{\rho} B^T S x(t), \qquad w(t) = \frac{1}{\gamma^2} E^T S x(t)$$

$$A^T S + S A - S \left( \frac{1}{\rho} B B^T - \frac{1}{\gamma^2} E E^T \right) S = -C^T C$$
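The Riccati equation above can be solved numerically in several ways. The following is a minimal sketch of one common approach, assuming a stabilizing solution exists for the chosen $\gamma$: it builds the Hamiltonian matrix associated with the problem and reads $S$ off its stable invariant subspace. The function name `solve_game_riccati` and the double-integrator data are illustrative assumptions, not part of the original slides.

```python
import numpy as np

def solve_game_riccati(A, B, E, C, rho, gamma):
    """Stabilizing S of A'S + SA - S(BB'/rho - EE'/gamma^2)S = -C'C (sketch)."""
    n = A.shape[0]
    R = E @ E.T / gamma**2 - B @ B.T / rho      # upper-right Hamiltonian block
    H = np.block([[A, R], [-C.T @ C, -A.T]])
    eigvals, eigvecs = np.linalg.eig(H)
    stable = eigvecs[:, eigvals.real < 0]       # basis of the stable subspace
    if stable.shape[1] != n:
        raise ValueError("no n-dimensional stable subspace; gamma may be too small")
    X, Lam = stable[:n, :], stable[n:, :]
    S = np.real(Lam @ np.linalg.inv(X))         # lambda = S x on that subspace
    return 0.5 * (S + S.T)                      # symmetrize against round-off

# Hypothetical example data: a double integrator with position output.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
E = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])
rho, gamma = 1.0, 2.0

S = solve_game_riccati(A, B, E, C, rho, gamma)
K_u = B.T @ S / rho            # u = -K_u x
K_w = E.T @ S / gamma**2       # w = +K_w x (worst-case disturbance)
residual = A.T @ S + S @ A - S @ (B @ B.T / rho - E @ E.T / gamma**2) @ S + C.T @ C
print("max |Riccati residual|:", np.max(np.abs(residual)))
print("closed-loop eigenvalues:", np.linalg.eigvals(A - B @ K_u))
```

The residual check is a direct substitution of the computed $S$ into the Riccati equation, so it does not depend on how $S$ was obtained.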

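Example Cont'd: Some Calculations

$$H^* = \min_u \max_w \left\{ \frac{1}{2} \left[ x^T C^T C x + \rho\,u^T u - \gamma^2 w^T w \right] + \lambda^T (Ax + Bu + Ew) \right\}$$

$$u^* = -\frac{1}{\rho} B^T \lambda, \qquad w^* = \frac{1}{\gamma^2} E^T \lambda$$

$$H^* = \frac{1}{2} x^T C^T C x + \lambda^T A x - \frac{1}{2\rho} \lambda^T B B^T \lambda + \frac{1}{2\gamma^2} \lambda^T E E^T \lambda$$

$$\dot{x} = \frac{\partial H^*}{\partial \lambda} = Ax + \frac{1}{\gamma^2} E E^T \lambda - \frac{1}{\rho} B B^T \lambda$$

$$\dot{\lambda} = -\frac{\partial H^*}{\partial x} = -C^T C x - A^T \lambda$$

$$\begin{bmatrix} \dot{x} \\ \dot{\lambda} \end{bmatrix} = \begin{bmatrix} A & \frac{1}{\gamma^2} E E^T - \frac{1}{\rho} B B^T \\ -C^T C & -A^T \end{bmatrix} \begin{bmatrix} x \\ \lambda \end{bmatrix}$$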
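As a sanity check on the expressions for $u^*$, $w^*$, and $H^*$, the sketch below evaluates the Hamiltonian at randomly generated data and confirms that perturbing $u$ raises it, perturbing $w$ lowers it, and the closed-form $H^*$ matches. All numerical values and the helper names `hamiltonian` and `saddle_controls` are illustrative assumptions.

```python
import numpy as np

def hamiltonian(x, lam, u, w, A, B, E, C, rho, gamma):
    """0.5*(y'y + rho u'u - gamma^2 w'w) + lam'(Ax + Bu + Ew), with y = Cx."""
    y = C @ x
    running = 0.5 * (y @ y + rho * (u @ u) - gamma**2 * (w @ w))
    return running + lam @ (A @ x + B @ u + E @ w)

def saddle_controls(lam, B, E, rho, gamma):
    """Stationary point of the Hamiltonian: minimizer in u, maximizer in w."""
    return -(B.T @ lam) / rho, (E.T @ lam) / gamma**2

# Hypothetical random test data (dimensions chosen arbitrarily).
rng = np.random.default_rng(0)
n, m, q, p = 3, 2, 2, 1
A = rng.standard_normal((n, n))
B = rng.standard_normal((n, m))
E = rng.standard_normal((n, q))
C = rng.standard_normal((p, n))
rho, gamma = 0.5, 2.0
x, lam = rng.standard_normal(n), rng.standard_normal(n)

u_star, w_star = saddle_controls(lam, B, E, rho, gamma)
H_star = hamiltonian(x, lam, u_star, w_star, A, B, E, C, rho, gamma)

# Closed-form H* after substituting u* and w*.
H_closed = (0.5 * x @ C.T @ C @ x + lam @ A @ x
            - lam @ B @ B.T @ lam / (2 * rho)
            + lam @ E @ E.T @ lam / (2 * gamma**2))

du, dw = rng.standard_normal(m), rng.standard_normal(q)
H_u = hamiltonian(x, lam, u_star + du, w_star, A, B, E, C, rho, gamma)
H_w = hamiltonian(x, lam, u_star, w_star + dw, A, B, E, C, rho, gamma)
print("saddle inequality holds:", H_w <= H_star <= H_u)
print("closed form matches:", np.isclose(H_star, H_closed))
```

Because the Hamiltonian is strictly convex in $u$ (for $\rho > 0$) and strictly concave in $w$, the stationary point is the min-max point and the order of min and max does not matter here.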

Example Cont'd

Consider the Hamiltonian matrix

$$H = \begin{bmatrix} A & \frac{1}{\gamma^2} E E^T - \frac{1}{\rho} B B^T \\ -C^T C & -A^T \end{bmatrix}$$

Now, under the above assumptions:

- There exists a $\gamma_{\min}$ such that $H$ has no eigenvalues on the imaginary axis provided $\gamma > \gamma_{\min}$.
- As $\gamma \to \infty$ we approach the standard LQR solution.
- For $\gamma = \gamma_{\min}$, the controller is the full state feedback $H_\infty$ controller.
- All other values $\gamma > \gamma_{\min}$ produce valid min-max controllers.
- The condition $\operatorname{Re} \lambda(H) \neq 0$ is equivalent to stability of the matrix
  $$A + \left( \frac{1}{\gamma^2} E E^T - \frac{1}{\rho} B B^T \right) S$$
- The closed loop system matrix is
  $$A - \frac{1}{\rho} B B^T S$$

The term $E E^T S / \gamma^2$ is destabilizing, so the closed loop system is guaranteed some margin of stability.
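The threshold $\gamma_{\min}$ can be estimated numerically by testing the Hamiltonian matrix for imaginary-axis eigenvalues. The sketch below uses bisection on $\gamma$, assuming the eigenvalues are off the axis at the upper bracket and on it at the lower bracket. The scalar data are a hypothetical example chosen because the answer can be checked by hand: for scalars $a, b, e, c$ the eigenvalues satisfy $s^2 = a^2 + b^2c^2/\rho - c^2e^2/\gamma^2$, so $\gamma_{\min} = |ce| / \sqrt{a^2 + b^2c^2/\rho}$.

```python
import numpy as np

def hamiltonian_matrix(A, B, E, C, rho, gamma):
    R = E @ E.T / gamma**2 - B @ B.T / rho
    return np.block([[A, R], [-C.T @ C, -A.T]])

def has_imaginary_axis_eigs(H, tol=1e-8):
    """True if any eigenvalue has (numerically) zero real part."""
    return bool(np.any(np.abs(np.linalg.eigvals(H).real) < tol))

def gamma_min(A, B, E, C, rho, lo=1e-3, hi=1e3, iters=60):
    """Geometric bisection for the smallest gamma with no imaginary-axis eigenvalues.

    Assumes the bracket [lo, hi] straddles the threshold.
    """
    for _ in range(iters):
        mid = np.sqrt(lo * hi)
        if has_imaginary_axis_eigs(hamiltonian_matrix(A, B, E, C, rho, mid)):
            lo = mid      # still on the axis: threshold is above mid
        else:
            hi = mid      # off the axis: threshold is at or below mid
    return hi

# Hypothetical scalar example: a = -1, b = e = c = 1, rho = 1.
A = np.array([[-1.0]])
B = np.array([[1.0]])
E = np.array([[1.0]])
C = np.array([[1.0]])
rho = 1.0

gamma_est = gamma_min(A, B, E, C, rho)
gamma_exact = 1.0 / np.sqrt(2.0)   # |c e| / sqrt(a^2 + b^2 c^2 / rho)
print(gamma_est, gamma_exact)
```

The eigenvalue test becomes ill-conditioned right at the threshold, so the estimate should be treated as approximate; in practice a controller would be designed with $\gamma$ somewhat above it.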

Differential Games

Two-Person Differential Game

Once again consider the system

$$\dot{x} = f(x, u, w)$$

However, now consider both $u$ and $w$ to be controls associated, respectively, with two players A and B.

Player A wants to minimize the cost

$$J = \ell(x(T)) + \int_0^T L(x(t), u(t), w(t))\,dt$$

and player B wants to maximize it. This is a two-person, zero-sum differential game.

Differential Games: Definitions

Instead of the 'cost to go', define two value functions:

Definition (Value Functions)

The lower value function is

$$V_A(x, t) = \min_{u \in U} \max_{w \in W} \left[ \ell(x(T)) + \int_t^T L(x(\tau), u(\tau), w(\tau))\,d\tau \right]$$

and the upper value function is

$$V_B(x, t) = \max_{w \in W} \min_{u \in U} \left[ \ell(x(T)) + \int_t^T L(x(\tau), u(\tau), w(\tau))\,d\tau \right]$$

- If $V_A = V_B$, a unique solution exists. This solution is called a pure strategy.
- If $V_A \neq V_B$, a unique strategy does not exist. In general, the player who 'plays second' has an advantage.
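The effect of the order of play is easiest to see in a static, finite game, which is only an analogue of the differential game above. In the sketch below, the cost matrix `J[i, j]` is a hypothetical payoff for the minimizer choosing row `i` and the maximizer choosing column `j`; the two matrices are illustrative.

```python
import numpy as np

def min_max(J):
    """Minimizer commits first: min over rows of the worst-case (max) column."""
    return J.max(axis=1).min()

def max_min(J):
    """Maximizer commits first: max over columns of the best-case (min) row."""
    return J.min(axis=0).max()

# Matching pennies: no pure-strategy saddle point, so the two orders differ.
pennies = np.array([[1, -1],
                    [-1, 1]])

# A matrix with a saddle point at row 0, column 1 (entry 3).
saddle = np.array([[2, 3],
                   [1, 4]])

print(min_max(pennies), max_min(pennies))   # 1 -1
print(min_max(saddle), max_min(saddle))     # 3 3
```

In matching pennies, whoever commits first is exploited by the other player, which is the static counterpart of the remark that the player who 'plays second' has an advantage. When the two orders agree, as in the second matrix, a pure-strategy solution exists.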

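Isaacs' Equations

Define

$$H_A(x, \lambda) = \min_{u \in U} \max_{w \in W} \left\{ L(x, u, w) + \lambda^T f(x, u, w) \right\}$$

$$H_B(x, \lambda) = \max_{w \in W} \min_{u \in U} \left\{ L(x, u, w) + \lambda^T f(x, u, w) \right\}$$

Then we get Isaacs' equations

$$\frac{\partial V_A(x, t)}{\partial t} + H_A\!\left(x, \frac{\partial V_A(x, t)}{\partial x}\right) = 0$$

$$\frac{\partial V_B(x, t)}{\partial t} + H_B\!\left(x, \frac{\partial V_B(x, t)}{\partial x}\right) = 0$$

If the two Hamiltonians coincide,

$$H_A\!\left(x, \frac{\partial V_A(x, t)}{\partial x}\right) \equiv H_B\!\left(x, \frac{\partial V_B(x, t)}{\partial x}\right) \;\Rightarrow\; V_A(x, t) \equiv V_B(x, t)$$

and the game is said to satisfy the Isaacs condition. Notice that if $u^*, w^*$ are optimal, then the pair $(u^*, w^*)$ is a saddle point in the sense that

$$J(u^*, w) \le J(u^*, w^*) \le J(u, w^*)$$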

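Heating Tank

Well-Stirred Heating Tank Startup

$$\dot{x} = -x + w + u, \qquad x(0) = x_0, \qquad 0 \le u \le 2, \qquad |w| \le 1$$

- $x$, dimensionless tank temperature
- $u$, dimensionless heat input
- $w$, dimensionless input water flow

$$J = \int_0^T (x - \bar{x})^2\,dt, \qquad T \text{ fixed}$$

$$H = (x - \bar{x})^2 + \lambda (-x + w + u)$$

$$\{u^*, w^*\} = \arg \min_u \max_w H(x, u, w, \lambda) \;\Rightarrow\; u^* = 1 - \operatorname{sgn}(\lambda), \quad w^* = \operatorname{sgn}(\lambda)$$

$$\dot{\lambda} = -\frac{\partial H}{\partial x} \;\Rightarrow\; \dot{\lambda} = \lambda - 2(x - \bar{x}), \qquad \lambda(T) = 0$$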
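The switching law follows because $H$ is linear in $u$ and $w$, so each extremum sits on a bound determined by the sign of $\lambda$. The sketch below encodes that law and checks it by brute force over a grid of admissible controls. The helper names and the test values of $x$ and $\lambda$ are illustrative assumptions; at $\lambda = 0$ the Hamiltonian does not depend on $u$ or $w$, and the function simply returns the midpoints.

```python
import numpy as np

def tank_controls(lam):
    """Min-max controls for 0 <= u <= 2 and |w| <= 1, given the costate lam."""
    s = np.sign(lam)
    return 1.0 - s, s          # u* = 1 - sgn(lam), w* = sgn(lam)

def tank_hamiltonian(x, u, w, lam, x_bar):
    return (x - x_bar) ** 2 + lam * (-x + w + u)

# Brute-force check on a grid of admissible controls (hypothetical x, lam values).
x, x_bar = 0.3, 0.8
u_grid = np.linspace(0.0, 2.0, 21)
w_grid = np.linspace(-1.0, 1.0, 21)

checks = []
for lam in (-0.7, 0.7):
    u_star, w_star = tank_controls(lam)
    H_star = tank_hamiltonian(x, u_star, w_star, lam, x_bar)
    u_is_min = np.all(tank_hamiltonian(x, u_grid, w_star, lam, x_bar) >= H_star - 1e-12)
    w_is_max = np.all(tank_hamiltonian(x, u_star, w_grid, lam, x_bar) <= H_star + 1e-12)
    checks.append((lam, u_star, w_star, bool(u_is_min), bool(w_is_max)))

for row in checks:
    print(row)
```

Note that $u^* + w^* = 1$ whatever the sign of $\lambda$, so the net input to the tank under the min-max pair is constant.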

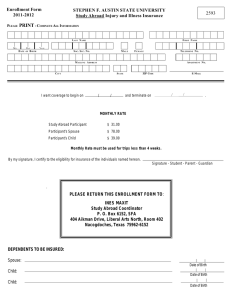

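Heating Tank Example, Cont'd

Since $u^* + w^* = 1$ for either sign of $\lambda$, the state and costate equations become

$$\dot{x} = -x + 1$$

$$\dot{\lambda} = \lambda - 2(x - \bar{x})$$

$$x(T) = \bar{x}, \qquad \lambda(T) = 0$$

with $\bar{x} = 0.8$.

[Figure: $x$ and $\lambda$ plotted against $t$ for $0 \le t \le 2$]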
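The trajectories can be reproduced by integrating the two equations backward from the terminal conditions. The sketch below uses a hand-written fourth-order Runge-Kutta step in the reversed time $s = T - t$ and compares the result with the closed-form solution $x(t) = 1 + (\bar{x} - 1)e^{T - t}$, $\lambda(t) = 2(1 - \bar{x})\,(1 - \cosh(T - t))$, which follows from solving the linear equations directly. The horizon $T = 2$ is an assumption read off the time axis of the plot; the step count is arbitrary.

```python
import numpy as np

def integrate_backward(x_bar=0.8, T=2.0, steps=2000):
    """RK4 in s = T - t, starting from x(T) = x_bar, lambda(T) = 0."""
    def rhs(z):
        x, lam = z
        # d/ds = -d/dt, with dx/dt = -x + 1 and dlam/dt = lam - 2 (x - x_bar)
        return np.array([x - 1.0, -lam + 2.0 * (x - x_bar)])

    h = T / steps
    z = np.array([x_bar, 0.0])
    traj = [z]
    for _ in range(steps):
        k1 = rhs(z)
        k2 = rhs(z + 0.5 * h * k1)
        k3 = rhs(z + 0.5 * h * k2)
        k4 = rhs(z + h * k3)
        z = z + (h / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        traj.append(z)
    t = T - h * np.arange(steps + 1)    # t runs from T down to 0
    return t, np.array(traj)

x_bar, T = 0.8, 2.0                     # T = 2 is assumed from the plot axis
t, traj = integrate_backward(x_bar, T)
x_num, lam_num = traj[:, 0], traj[:, 1]

x_exact = 1.0 + (x_bar - 1.0) * np.exp(T - t)
lam_exact = 2.0 * (1.0 - x_bar) * (1.0 - np.cosh(T - t))
print("max error in x:", np.max(np.abs(x_num - x_exact)))
print("max error in lambda:", np.max(np.abs(lam_num - lam_exact)))
print("x(0), lambda(0):", x_num[-1], lam_num[-1])
```

Under these equations $\lambda(t) < 0$ for all $t < T$, so the min-max pair is $u^* = 2$, $w^* = -1$ over the whole interval, and the initial temperature consistent with $x(T) = \bar{x}$ is $x(0) = 1 + (\bar{x} - 1)e^{T}$.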
