Optimal Uniform and Lp Rates for Random Fourier Features

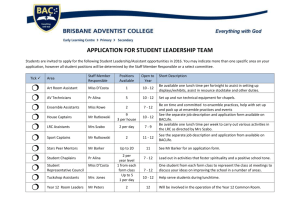

advertisement

Optimal Uniform and Lp Rates for Random Fourier

Features

Zoltán Szabó∗

Gatsby Unit, UCL

Joint work with Bharath K. Sriperumbudur∗

Department of Statistics, PSU

(∗ equal contribution)

Pennsylvania State University

December 4, 2015

Zoltán Szabó

Optimal Rates for RFFs

Outline

Kernels and kernel derivatives.

Random Fourier features (RFFs).

Guarantees on RFF approximation: uniform, $L^r$.


Kernel, RKHS

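$k : X \times X \to \mathbb{R}$ is a kernel on $X$ if

there exists a feature map $\varphi : X \to H$ into a Hilbert space $H$ such that

$k(a, b) = \langle \varphi(a), \varphi(b) \rangle_H$ for all $a, b \in X$.

Kernel examples on $X = \mathbb{R}^d$ ($p > 0$, $\theta > 0$):

$k(a, b) = (\langle a, b \rangle + \theta)^p$: polynomial,

$k(a, b) = e^{-\|a - b\|_2^2 / (2\theta^2)}$: Gaussian,

$k(a, b) = e^{-\theta \|a - b\|_2}$: Laplacian.

In the RKHS $H = H(k)$ (which exists and is unique): $\varphi(u) = k(\cdot, u)$.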
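As a minimal illustration, the three example kernels can be evaluated numerically as follows. This is a NumPy sketch; the function names and the default parameter values are illustrative assumptions and do not come from the slides.

```python
import numpy as np

def polynomial_kernel(a, b, p=2, theta=1.0):
    """k(a, b) = (<a, b> + theta)^p."""
    return (np.dot(a, b) + theta) ** p

def gaussian_kernel(a, b, theta=1.0):
    """k(a, b) = exp(-||a - b||_2^2 / (2 theta^2))."""
    return np.exp(-np.sum((a - b) ** 2) / (2.0 * theta ** 2))

def laplacian_kernel(a, b, theta=1.0):
    """k(a, b) = exp(-theta ||a - b||_2), in the form written on the slide."""
    return np.exp(-theta * np.linalg.norm(a - b))

a = np.array([1.0, 0.0])
b = np.array([0.0, 1.0])
print(polynomial_kernel(a, b), gaussian_kernel(a, b), laplacian_kernel(a, b))
```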


RKHS: evaluation point of view

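Let $H \subset \mathbb{R}^X$ be a Hilbert space.

For a fixed $x \in X$, consider the evaluation map $\delta_x : f \in H \mapsto f(x) \in \mathbb{R}$.

The evaluation functional is linear:

$\delta_x(\alpha f + \beta g) = \alpha \delta_x(f) + \beta \delta_x(g)$.

Def.: $H$ is called an RKHS if $\delta_x$ is continuous for all $x \in X$.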


RKHS: reproducing point of view

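Let $H \subset \mathbb{R}^X$ be a Hilbert space.

$k : X \times X \to \mathbb{R}$ is called a reproducing kernel of $H$ if for all $x \in X$, $f \in H$:

1. $k(\cdot, x) \in H$,

2. $\langle f, k(\cdot, x) \rangle_H = f(x)$ (reproducing property).

Specifically, for all $x, y \in X$:

$k(x, y) = \langle k(\cdot, x), k(\cdot, y) \rangle_H$.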


RKHS: positive-definite point of view

Let $k : X \times X \to \mathbb{R}$ be a symmetric function.

$k$ is called positive definite if for all $n \ge 1$, $(a_1, \ldots, a_n) \in \mathbb{R}^n$ and $(x_1, \ldots, x_n) \in X^n$:

$$\sum_{i,j=1}^{n} a_i a_j k(x_i, x_j) = a^T G a \ge 0,$$

where $G = [k(x_i, x_j)]_{i,j=1}^{n}$.
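The positive-definiteness condition can be checked numerically on a finite sample. The sketch below assumes a Gaussian kernel and randomly drawn points (both are illustrative choices); it evaluates the quadratic form $a^T G a$ and the smallest eigenvalue of the Gram matrix, which should be nonnegative up to floating-point error.

```python
import numpy as np

def gaussian_gram(X, theta=1.0):
    """Gram matrix G = [k(x_i, x_j)] of the Gaussian kernel for the rows of X."""
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dists / (2.0 * theta ** 2))

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))            # n = 20 points in R^3
G = gaussian_gram(X)

a = rng.normal(size=20)                 # an arbitrary coefficient vector
quad_form = a @ G @ a                   # a^T G a, expected to be >= 0
min_eig = np.linalg.eigvalsh(G).min()   # smallest eigenvalue of the symmetric G
print(quad_form, min_eig)
```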


Kernel: example domains (X)

Euclidean space: $X = \mathbb{R}^d$.

Graphs, texts, time series, dynamical systems, distributions.


Kernel: application example – ridge regression

Given: $\{(x_i, y_i)\}_{i=1}^{\ell}$, $H = H(k)$.

Task: find $f \in H$ such that $f(x_i) \approx y_i$:

$$J(f) = \frac{1}{\ell} \sum_{i=1}^{\ell} [f(x_i) - y_i]^2 + \lambda \|f\|_H^2 \to \min_{f \in H} \quad (\lambda > 0).$$

Analytical solution, $O(\ell^3)$, expensive:

$$f(x) = [k(x_1, x), \ldots, k(x_\ell, x)] (G + \lambda \ell I)^{-1} [y_1; \ldots; y_\ell], \quad G = [k(x_i, x_j)]_{i,j=1}^{\ell}.$$

Idea: $\hat{G}$, matrix-inversion lemma, fast primal solvers → RFF.
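The closed-form ridge solution can be sketched in a few lines. This is an illustrative NumPy version, assuming a Gaussian kernel and synthetic noise-free data; `krr_fit` and `krr_predict` are hypothetical helper names. The linear solve on the $\ell \times \ell$ system is the $O(\ell^3)$ step that motivates RFFs.

```python
import numpy as np

def gaussian_gram(A, B, theta=1.0):
    """Cross Gram matrix [k(a_i, b_j)] for the Gaussian kernel."""
    sq = np.sum((A[:, None, :] - B[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq / (2.0 * theta ** 2))

def krr_fit(X, y, lam, theta=1.0):
    """Solve (G + lam * ell * I) c = y; this is the O(ell^3) step."""
    ell = X.shape[0]
    G = gaussian_gram(X, X, theta)
    return np.linalg.solve(G + lam * ell * np.eye(ell), y)

def krr_predict(X_train, coef, X_new, theta=1.0):
    """f(x) = [k(x_1, x), ..., k(x_ell, x)] c."""
    return gaussian_gram(X_new, X_train, theta) @ coef

rng = np.random.default_rng(0)
X = rng.uniform(-3.0, 3.0, size=(50, 1))
y = np.sin(X[:, 0])                       # synthetic targets (assumption)
coef = krr_fit(X, y, lam=1e-4)
train_rmse = np.sqrt(np.mean((krr_predict(X, coef, X) - y) ** 2))
print(train_rmse)
```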


Kernels: more generally

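Requirement: inner product on the inputs ($k : X \times X \to \mathbb{R}$).

Loss function ($\lambda > 0$):

$$J(f) = \sum_{i=1}^{\ell} V(y_i, f(x_i)) + \lambda \|f\|_{H(k)}^2 \to \min_{f \in H(k)}.$$

By the representer theorem [$f(\cdot) = \sum_{i=1}^{\ell} \alpha_i k(\cdot, x_i)$]:

$$J(\alpha) = \sum_{i=1}^{\ell} V(y_i, (G\alpha)_i) + \lambda \alpha^T G \alpha \to \min_{\alpha \in \mathbb{R}^{\ell}}.$$

⇒ $k(x_i, x_j)$ matters.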
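The step from $J(f)$ to $J(\alpha)$ can be spelled out with the reproducing property. A short worked derivation, using only the symbols already defined: substituting $f = \sum_{i=1}^{\ell} \alpha_i k(\cdot, x_i)$ gives

$$f(x_j) = \sum_{i=1}^{\ell} \alpha_i k(x_j, x_i) = (G\alpha)_j,$$

$$\|f\|_{H(k)}^2 = \Big\langle \sum_{i=1}^{\ell} \alpha_i k(\cdot, x_i), \sum_{j=1}^{\ell} \alpha_j k(\cdot, x_j) \Big\rangle_{H(k)} = \sum_{i,j=1}^{\ell} \alpha_i \alpha_j k(x_i, x_j) = \alpha^T G \alpha.$$

Both terms of the objective therefore depend on the data only through the Gram matrix $G$.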


Kernel derivatives: application example

Motivation:

fitting ∞-D exp. family distributions [Sriperumbudur et al., 2014],

k ↔ sufficient statistics,

rich family,

fitting = linear equation:

coefficient matrix: $(d\ell) \times (d\ell)$, $d = \dim(x)$,

entries: kernel values and derivatives.


Kernel derivatives: more generally

Objective:

$$J(f) = \frac{1}{\ell} \sum_{i=1}^{\ell} V\big(y_i, \{\partial^p f(x_i)\}_{p \in J_i}\big) + \lambda \|f\|_{H(k)}^2 \to \min_{f \in H(k)}.$$

[Zhou, 2008, Shi et al., 2010, Rosasco et al., 2010, Rosasco et al., 2013,

Ying et al., 2012]:

semi-supervised learning with gradient information,

nonlinear variable selection.

Kernel HMC [Strathmann et al., 2015].


Focus

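$X = \mathbb{R}^d$. $k$: continuous, shift-invariant [$k(x, y) = \tilde{k}(x - y)$].

By Bochner's theorem:

$$k(x, y) = \int_{\mathbb{R}^d} e^{i \omega^T (x - y)} \, d\Lambda(\omega) = \int_{\mathbb{R}^d} \cos\big(\omega^T (x - y)\big) \, d\Lambda(\omega).$$

RFF trick [Rahimi and Recht, 2007] (MC): $\omega_{1:m} := (\omega_j)_{j=1}^{m} \overset{\text{i.i.d.}}{\sim} \Lambda$,

$$\hat{k}(x, y) = \frac{1}{m} \sum_{j=1}^{m} \cos\big(\omega_j^T (x - y)\big) = \int_{\mathbb{R}^d} \cos\big(\omega^T (x - y)\big) \, d\Lambda_m(\omega).$$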
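The Monte Carlo estimator can be sketched for the Gaussian kernel, whose spectral measure $\Lambda$ is the normal distribution $N(0, \theta^{-2} I)$. The code below is an illustrative NumPy version; the function names, the bandwidth and the sample size are assumptions. It also builds the explicit cos/sin feature map whose inner product reproduces $\hat{k}$, which is what fast primal solvers would consume.

```python
import numpy as np

def sample_frequencies(m, d, theta, rng):
    """Draw omega_1..omega_m i.i.d. from Lambda = N(0, theta^{-2} I),
    the spectral measure of the Gaussian kernel with bandwidth theta."""
    return rng.normal(scale=1.0 / theta, size=(m, d))

def rff_kernel(x, y, omegas):
    """k_hat(x, y) = (1/m) sum_j cos(omega_j^T (x - y))."""
    return np.mean(np.cos(omegas @ (x - y)))

def rff_features(X, omegas):
    """Feature map z with z(x)^T z(y) = k_hat(x, y), via cos(a - b) = cos a cos b + sin a sin b."""
    proj = X @ omegas.T
    m = omegas.shape[0]
    return np.hstack([np.cos(proj), np.sin(proj)]) / np.sqrt(m)

rng = np.random.default_rng(0)
d, theta, m = 3, 1.5, 20000
omegas = sample_frequencies(m, d, theta, rng)
x, y = rng.normal(size=d), rng.normal(size=d)

k_exact = np.exp(-np.sum((x - y) ** 2) / (2.0 * theta ** 2))
k_hat = rff_kernel(x, y, omegas)
Z = rff_features(np.vstack([x, y]), omegas)
print(k_exact, k_hat, Z[0] @ Z[1])
```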


RFF – existing guarantee, basically

Hoeffding inequality + union bound:

$$\|k - \hat{k}\|_{L^\infty(S)} = O_p\left( \underbrace{|S|}_{\text{linear}} \sqrt{\frac{\log m}{m}} \right).$$

Characteristic function point of view [Csörgő and Totik, 1983] (asymptotic!):

1. $|S_m| = e^{o(m)}$ is the optimal rate for a.s. convergence,

2. For faster growing $|S_m|$: even convergence in probability fails.


Today: one-page summary

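1. Finite-sample $L^\infty$-guarantee $\xrightarrow{\text{specifically}}$

$$\|k - \hat{k}\|_{L^\infty(S)} = O_{a.s.}\left( \frac{\sqrt{\log |S|}}{\sqrt{m}} \right)$$

⇒ $S$ can grow exponentially [$|S_m| = e^{o(m)}$] – optimal!

2. Finite-sample $L^r$ guarantees, $r \in [1, \infty)$.

3. Derivatives: $\partial^{p,q} k$.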


. . . , where

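Uniform ($r = \infty$) and $L^r$ ($1 \le r < \infty$) norms:

$$\|k - \hat{k}\|_{L^\infty(S)} := \sup_{x, y \in S} |k(x, y) - \hat{k}(x, y)|,$$

$$\|k - \hat{k}\|_{L^r(S)} := \left( \int_S \int_S |k(x, y) - \hat{k}(x, y)|^r \, dx \, dy \right)^{1/r}.$$

Kernel derivatives:

$$\partial^{p,q} k(x, y) = \frac{\partial^{|p| + |q|} k(x, y)}{\partial x_1^{p_1} \cdots \partial x_d^{p_d} \, \partial y_1^{q_1} \cdots \partial y_d^{q_d}}, \quad |p| = \sum_{j=1}^{d} |p_j|.$$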
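Both error norms can be approximated on a grid. The following sketch makes several illustrative assumptions: a one-dimensional domain $S = [-a, a]$, a Gaussian kernel, and a midpoint Riemann sum for the double integral. The grid maximum only lower-bounds the true supremum, so these are approximations of the norms, not exact values.

```python
import numpy as np

rng = np.random.default_rng(1)
theta, m, n = 1.0, 500, 60
a = 1.0                                    # S = [-a, a], so vol(S) = 2a
delta = 2 * a / n
grid = -a + delta * (np.arange(n) + 0.5)   # midpoints of n cells of width delta

omegas = rng.normal(scale=1.0 / theta, size=m)   # Gaussian-kernel frequencies
diff = grid[:, None] - grid[None, :]             # x - y on the grid
K = np.exp(-diff ** 2 / (2 * theta ** 2))        # exact kernel values
K_hat = np.mean(np.cos(omegas[:, None, None] * diff[None]), axis=0)  # RFF estimate

err = np.abs(K - K_hat)
sup_err = err.max()                        # grid approximation of the L^infinity error

def lr_err(r):
    """Midpoint-rule approximation of the L^r(S) error."""
    return (np.sum(err ** r) * delta ** 2) ** (1.0 / r)

print(sup_err, lr_err(1), lr_err(2))
```

On this grid the discrete $L^r$ error is at most the grid maximum times $\mathrm{vol}(S)^{2/r}$, mirroring the continuous inequality between the two norms.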


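$\|k - \hat{k}\|_{L^\infty(S)}$: proof idea

1. Empirical process form [$Pg := \int g \, dP$]:

$$\sup_{x, y \in S} |k(x, y) - \hat{k}(x, y)| = \sup_{g \in G} |\Lambda g - \Lambda_m g| = \|\Lambda - \Lambda_m\|_G.$$

2. $f(\omega_{1:m}) = \|\Lambda - \Lambda_m\|_G$ concentrates (bounded difference):

$$\|\Lambda - \Lambda_m\|_G \lesssim E_{\omega_{1:m}} \|\Lambda - \Lambda_m\|_G + \frac{1}{\sqrt{m}}.$$

3. $G$ is 'nice' (uniformly bounded, separable Carathéodory) ⇒

$$E_{\omega_{1:m}} \|\Lambda - \Lambda_m\|_G \lesssim E_{\omega_{1:m}} \underbrace{R(G, \omega_{1:m})}_{E_{\epsilon} \sup_{g \in G} \left| \frac{1}{m} \sum_{j=1}^{m} \epsilon_j g(\omega_j) \right|}.$$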


Proof idea

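4. Using Dudley's entropy bound:

$$R(G, \omega_{1:m}) \lesssim \frac{1}{\sqrt{m}} \int_0^{|G|_{L^2(\Lambda_m)}} \sqrt{\log N(G, L^2(\Lambda_m), u)} \, du.$$

5. $G$ is smoothly parameterized by a compact set ⇒

$$N(G, L^2(\Lambda_m), u) \le \left( \frac{4 |S| A}{u} + 1 \right)^d, \quad A(\omega_{1:m}) = \sqrt{\frac{1}{m} \sum_{j=1}^{m} \|\omega_j\|_2^2}.$$

6. Putting together [$|G|_{L^2(\Lambda_m)} \le 2$, Jensen inequality] we get . . .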


L∞ result for k

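Let $k$ be continuous, $\sigma^2 := \int \|\omega\|^2 \, d\Lambda(\omega) < \infty$. Then for all $\tau > 0$ and compact set $S \subset \mathbb{R}^d$:

$$\Lambda^m\left( \|\hat{k} - k\|_{L^\infty(S)} \ge \frac{h(d, |S|, \sigma) + \sqrt{2\tau}}{\sqrt{m}} \right) \le e^{-\tau},$$

$$h(d, |S|, \sigma) := 32 \sqrt{2d \log(2|S| + 1)} + 16 \sqrt{\frac{2d}{\log(2|S| + 1)}} + 32 \sqrt{2d \log(\sigma + 1)}.$$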
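To get a feel for the finite-sample statement, the deviation level $(h(d, |S|, \sigma) + \sqrt{2\tau})/\sqrt{m}$ can be evaluated directly. The sketch below is plain Python; the function names and the example values of $d$, $|S|$, $\sigma$ and $\tau$ are illustrative assumptions, and `diam` stands for the diameter $|S|$ (taken to be positive).

```python
import math

def h(d, diam, sigma):
    """h(d, |S|, sigma) from the uniform bound; diam = |S| > 0."""
    log_term = math.log(2.0 * diam + 1.0)
    return (32.0 * math.sqrt(2.0 * d * log_term)
            + 16.0 * math.sqrt(2.0 * d / log_term)
            + 32.0 * math.sqrt(2.0 * d * math.log(sigma + 1.0)))

def uniform_bound(m, d, diam, sigma, tau):
    """Deviation level exceeded with probability at most exp(-tau)."""
    return (h(d, diam, sigma) + math.sqrt(2.0 * tau)) / math.sqrt(m)

# tau = log(20) corresponds to a failure probability of at most 5%.
for m in (10**4, 10**6, 10**8):
    print(m, uniform_bound(m, d=2, diam=1.0, sigma=1.0, tau=math.log(20)))
```

For fixed $d$, $|S|$, $\sigma$ and $\tau$ the level decays as $1/\sqrt{m}$, so increasing $m$ by a factor of 100 shrinks it by a factor of 10.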


Consequence-1 (Borel-Cantelli lemma)

A.s. convergence on compact sets: $\hat{k} \xrightarrow{m \to \infty} k$ at rate $\sqrt{\frac{\log |S|}{m}}$.

Growing diameter: $\frac{\log |S_m|}{m} \xrightarrow{m \to \infty} 0$ is enough, i.e. $|S_m| = e^{o(m)}$.

Specifically: asymptotic optimality [Csörgő and Totik, 1983, Theorem 2] (if $k(z)$ vanishes at $\infty$).


Consequence-2: Lr result for k (1 ≤ r )

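Idea:

Note that

$$\|\hat{k} - k\|_{L^r(S)} = \left( \int_S \int_S |\hat{k}(x, y) - k(x, y)|^r \, dx \, dy \right)^{1/r} \le \|\hat{k} - k\|_{L^\infty(S)} \operatorname{vol}^{2/r}(S).$$

$\operatorname{vol}(S) \le \operatorname{vol}(B)$, where $B := \left\{ x \in \mathbb{R}^d : \|x\|_2 \le \frac{|S|}{2} \right\}$,

$$\operatorname{vol}(B) = \frac{\pi^{d/2} |S|^d}{2^d \, \Gamma\left(\frac{d}{2} + 1\right)}, \quad \Gamma(t) = \int_0^\infty u^{t-1} e^{-u} \, du. \quad \Rightarrow$$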
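The volume factor is easy to evaluate. A small sketch in plain Python follows; `ball_volume` and `lr_factor` are hypothetical helper names, and `diam` stands for the diameter $|S|$.

```python
import math

def ball_volume(diam, d):
    """Volume of the Euclidean ball of radius diam / 2 in R^d:
    pi^(d/2) * diam^d / (2^d * Gamma(d/2 + 1))."""
    return math.pi ** (d / 2) * diam ** d / (2 ** d * math.gamma(d / 2 + 1))

def lr_factor(diam, d, r):
    """vol(B)^(2/r): the factor that turns a uniform bound into an L^r bound."""
    return ball_volume(diam, d) ** (2.0 / r)

print(ball_volume(2.0, 2), ball_volume(2.0, 3), lr_factor(2.0, 2, 2))
```

With `diam = 2` the ball has unit radius, so the first two values are the area of the unit disc and the volume of the unit ball in three dimensions.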


Lr result for k

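Under the previous assumptions, and $1 \le r < \infty$:

$$\Lambda^m\left( \|\hat{k} - k\|_{L^r(S)} \ge \left( \frac{\pi^{d/2} |S|^d}{2^d \, \Gamma(\frac{d}{2} + 1)} \right)^{2/r} \frac{h(d, |S|, \sigma) + \sqrt{2\tau}}{\sqrt{m}} \right) \le e^{-\tau}.$$

Hence,

$$\|\hat{k} - k\|_{L^r(S)} = O_{a.s.}\Bigg( \underbrace{\frac{|S|^{2d/r} \sqrt{\log |S|}}{\sqrt{m}}}_{L^r(S)\text{-consistency if } \xrightarrow{m \to \infty} 0} \Bigg).$$

Uniform guarantee: $|S_m| = e^{m^{\delta}}$ with $\delta < 1$; now: $\frac{|S_m|^{2d/r}}{\sqrt{m}} \to 0 \Rightarrow |S_m| = \tilde{o}\big(m^{\frac{r}{4d}}\big)$.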
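The exponent $\frac{r}{4d}$ follows from a one-line rearrangement. Ignoring the logarithmic factor (which is what the tilde in $\tilde{o}$ absorbs), consistency requires

$$\frac{|S_m|^{2d/r}}{\sqrt{m}} \to 0 \iff |S_m|^{2d/r} = o\big(m^{1/2}\big) \iff |S_m| = o\big(m^{\frac{1}{2} \cdot \frac{r}{2d}}\big) = o\big(m^{\frac{r}{4d}}\big).$$

As an illustrative instance, $d = 2$ and $r = 4$ give $|S_m| = \tilde{o}(\sqrt{m})$: the diameter may grow only polynomially in $m$, in contrast to the exponential growth allowed by the uniform guarantee.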


Direct Lr result for k (proof idea after discussion)

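Under the previous assumptions, and $1 < r < \infty$:

$$\Lambda^m\left( \|\hat{k} - k\|_{L^r(S)} \ge \left( \frac{\pi^{d/2} |S|^d}{2^d \, \Gamma(\frac{d}{2} + 1)} \right)^{2/r} \left( \frac{C'_r}{m^{1 - \max\{\frac{1}{2}, \frac{1}{r}\}}} + \frac{\sqrt{2\tau}}{\sqrt{m}} \right) \right) \le e^{-\tau},$$

$C'_r = O(\sqrt{r})$: universal constant; depends only on $r$ (not on $|S|$ or $m$).

Note: if $2 \le r$, then

1. $m^{1 - \max\{\frac{1}{2}, \frac{1}{r}\}} = \sqrt{m}$,

2. $\|\hat{k} - k\|_{L^r(S_m)} \xrightarrow{a.s.} 0$ if $|S_m| = o\big(m^{\frac{r}{4d}}\big)$ as $m \to \infty$.

3. In short, we got rid of $\sqrt{\log(|S|)}$: $\tilde{o} \to o$.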


Direct Lr result for k: proof idea

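1. $f(\omega_1, \ldots, \omega_m) = \|k - \hat{k}\|_{L^r(S)}$ concentrates (bounded difference):

$$\|k - \hat{k}\|_{L^r(S)} \le E_{\omega_{1:m}} \|k - \hat{k}\|_{L^r(S)} + \operatorname{vol}^{2/r}(S) \sqrt{\frac{2\tau}{m}}.$$

2. By $L^{r'}(S) \cong (L^r(S))^*$ ($\frac{1}{r} + \frac{1}{r'} = 1$), the separability of $L^{r'}(S)$ ($r > 1$) and symmetrization:

$$E_{\omega_{1:m}} \|k - \hat{k}\|_{L^r(S)} \le \frac{2}{m} E_{\omega_{1:m}} \underbrace{E_{\varepsilon} \left\| \sum_{i=1}^{m} \varepsilon_i \cos(\langle \omega_i, \cdot - \cdot \rangle) \right\|_{L^r(S)}}_{=: (*)}.$$

3. Since $L^r(S)$ is of type $\min(2, r)$ [’-rule’] there exists $C'_r$ such that

$$(*) \le C'_r \left( \sum_{i=1}^{m} \|\cos(\langle \omega_i, \cdot - \cdot \rangle)\|_{L^r(S)}^{\min(2, r)} \right)^{\frac{1}{\min(2, r)}}.$$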


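Kernel derivatives: $\mathbb{N}^{2d} \ni [p; q] \ne 0$

Goal: $\widehat{\partial^{p,q} k}$. If

1. $\operatorname{supp}(\Lambda)$ is bounded:

$C_{k,p,q} := E_{\omega \sim \Lambda}\left[ |\omega^{p+q}| \, \|\omega\|_2^2 \right] < \infty$: $L^\infty$, $L^r$ ✓, but

Gaussian, Laplacian, inverse multiquadratic, Matérn: :(

$c_0$ universality ⇔ $\operatorname{supp}(\Lambda) = \mathbb{R}^d$, if $k(z) \in C_0(\mathbb{R}^d)$.

2. $\operatorname{supp}(\Lambda)$ is unbounded:

$G$ becomes unbounded.

[Rahimi and Recht, 2007]: 'Hoeffding → Bernstein', but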


Kernel derivatives: unbounded supp(Λ)

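Assumptions [$h_a = \cos^{(a)}$, $S_\Delta = S - S$]:

1. $z \mapsto \nabla_z [\partial^{p,q} k(z)]$: continuous; $S \subset \mathbb{R}^d$: compact, $E_{p,q} := E_{\omega \sim \Lambda}\left[ |\omega^{p+q}| \, \|\omega\|_2 \right] < \infty$.

2. $\exists L > 0$, $\sigma > 0$:

$$E_{\omega \sim \Lambda} |f(z; \omega)|^M \le \frac{M! \, \sigma^2 L^{M-2}}{2} \quad (\forall M \ge 2, \ \forall z \in S_\Delta),$$

$$f(z; \omega) = \partial^{p,q} k(z) - \omega^p (-\omega)^q h_{|p+q|}(\omega^T z).$$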


Kernel derivatives: unbounded supp(Λ)

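Then with $F_d := d^{-\frac{d}{d+1}} + d^{\frac{1}{d+1}}$, for $\epsilon > 0$:

$$\Lambda^m\left( \|\partial^{p,q} k - \widehat{\partial^{p,q} k}\|_{L^\infty(S)} \ge \epsilon \right) \le 2^{d-1} e^{-\frac{m \epsilon^2}{8\sigma^2 \left(1 + \frac{\epsilon L}{2\sigma^2}\right)}} + F_d \, 2^{\frac{4d-1}{d+1}} \left[ \frac{|S| (D_{p,q,S} + E_{p,q})}{\epsilon} \right]^{\frac{d}{d+1}} e^{-\frac{m \epsilon^2}{8(d+1)\sigma^2 \left(1 + \frac{\epsilon L}{2\sigma^2}\right)}},$$

where $D_{p,q,S} := \sup_{z \in \operatorname{conv}(S_\Delta)} \|\nabla_z [\partial^{p,q} k(z)]\|_2$.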
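The quantity being controlled here is the RFF estimate of a kernel derivative, i.e. the empirical average of $\omega^p (-\omega)^q h_{|p+q|}(\omega^T z)$ with $z = x - y$. A minimal NumPy sketch follows, assuming a Gaussian kernel (so that $\Lambda = N(0, \theta^{-2} I)$) and using the identity $\cos^{(a)}(t) = \cos(t + a\pi/2)$; the function names and test points are illustrative assumptions.

```python
import numpy as np

def cos_derivative(a, t):
    """h_a(t) = cos^{(a)}(t), computed as cos(t + a * pi / 2)."""
    return np.cos(t + a * np.pi / 2.0)

def rff_kernel_derivative(x, y, omegas, p, q):
    """(1/m) sum_j omega_j^p (-omega_j)^q h_{|p+q|}(omega_j^T (x - y)),
    where p and q are multi-indices (nonnegative integer arrays of length d)."""
    p, q = np.asarray(p), np.asarray(q)
    order = int(p.sum() + q.sum())
    monomial = np.prod(omegas ** p, axis=1) * np.prod((-omegas) ** q, axis=1)
    return np.mean(monomial * cos_derivative(order, omegas @ (x - y)))

rng = np.random.default_rng(0)
d, theta, m = 2, 1.0, 200000
omegas = rng.normal(scale=1.0 / theta, size=(m, d))  # Gaussian-kernel frequencies
x, y = np.array([0.3, -0.2]), np.array([-0.1, 0.4])
z = x - y

k_exact = np.exp(-z @ z / (2 * theta ** 2))
# Closed form of d/dx_1 of the Gaussian kernel: -(z_1 / theta^2) * k(x, y).
exact = -z[0] / theta ** 2 * k_exact
approx = rff_kernel_derivative(x, y, omegas, p=[1, 0], q=[0, 0])
print(exact, approx)
```

The Gaussian spectral measure has unbounded support, so this example sits in the harder regime covered by the bound, not in the bounded-support case.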


Summary

Finite sample

$L^\infty(S)$ guarantees $\xrightarrow{\text{spec.}}$ $|S_m| = e^{o(m)}$ – asymp. optimal!

$L^r(S)$ results (⇐ uniform, type of $L^r$).

derivative approximation guarantees:

bounded spectral support: ✓

unbounded spectral support: trickier – to be continued ;)


Thank you for your attention!

Acknowledgments: This work was supported by the Gatsby Charitable

Foundation.


Csörgő, S. and Totik, V. (1983).

On how long interval is the empirical characteristic function

uniformly consistent?

Acta Scientiarum Mathematicarum, 45:141–149.

Rahimi, A. and Recht, B. (2007).

Random features for large-scale kernel machines.

In Neural Information Processing Systems (NIPS), pages

1177–1184.

Rosasco, L., Santoro, M., Mosci, S., Verri, A., and Villa, S.

(2010).

A regularization approach to nonlinear variable selection.

JMLR W&CP – International Conference on Artificial

Intelligence and Statistics (AISTATS), 9:653–660.

Rosasco, L., Villa, S., Mosci, S., Santoro, M., and Verri, A.

(2013).

Nonparametric sparsity and regularization.

Journal of Machine Learning Research, 14:1665–1714.


Shi, L., Guo, X., and Zhou, D.-X. (2010).

Hermite learning with gradient data.

Journal of Computational and Applied Mathematics,

233:3046–3059.

Sriperumbudur, B. K., Fukumizu, K., Gretton, A., Hyvärinen,

A., and Kumar, R. (2014).

Density estimation in infinite dimensional exponential families.

Technical report.

http://arxiv.org/pdf/1312.3516.pdf.

Strathmann, H., Sejdinovic, D., Livingstone, S., Szabó, Z.,

and Gretton, A. (2015).

Gradient-free Hamiltonian Monte Carlo with efficient kernel

exponential families.

In Neural Information Processing Systems (NIPS).

Ying, Y., Wu, Q., and Campbell, C. (2012).

Learning the coordinate gradients.

Advances in Computational Mathematics, 37:355–378.


Zhou, D.-X. (2008).

Derivative reproducing properties for kernel methods in

learning theory.

Journal of Computational and Applied Mathematics,

220:456–463.
