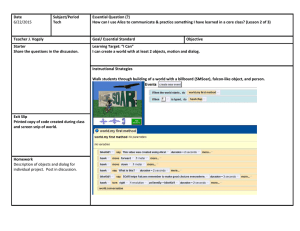

Here - School of Electronic Engineering and Computer Science
