Slides

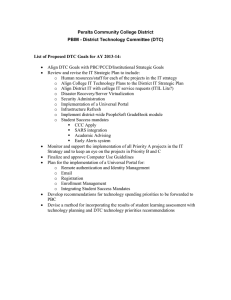

Gaussian Processes and Fast Matrix-Vector Multiplies

Iain Murray
Dept. Computer Science, University of Toronto

Work with Joaquin Quiñonero Candela, Carl Edward Rasmussen, Edward Snelson and Chris Williams

GP regression model

[Figure: two panels of functions plotted against inputs from 0 to 1.]

$f \sim \mathcal{GP}$

$\mathbf{f} \sim \mathcal{N}(0, \Sigma)$, $\Sigma_{ij} = k(x_i, x_j)$

$y \mid x \sim \mathcal{N}(f(x), \sigma_n^2)$

GP posterior

[Figure: two panels. Left: draws $\sim p(f \mid \text{data})$. Right: mean and error bars.]

Standard matrix operations

Infer function at a point $x_*$: $p(f(x_*) \mid \text{data}) = \mathcal{N}(m, s^2)$

Need covariances: $K_{ij} = k(x_i, x_j)$, $(\mathbf{k}_*)_i = k(x_*, x_i)$

Posterior available in closed form:

$$M = K + \sigma_n^2 I$$

$$m = \mathbf{k}_*^\top M^{-1} \mathbf{y}$$

$$s^2 = k(x_*, x_*) - \mathbf{k}_*^\top M^{-1} \mathbf{k}_*$$

Learning (hyper-)parameters

$$k(x_i, x_j) = \exp\left(-0.5\,|x_i - x_j|^2 / \ell^2\right)$$

[Figure: three panels of fits on inputs from 0 to 1, with $\ell = 0.1, \sigma_n = 0.01$; $\ell = 0.5, \sigma_n = 0.05$; $\ell = 1.5, \sigma_n = 0.15$.]

(Marginal) likelihood:

$$\log p(\mathbf{y} \mid X, \ell, \sigma_n) = -\tfrac{1}{2}\,\mathbf{y}^\top M^{-1} \mathbf{y} - \tfrac{1}{2} \log |M| - \tfrac{n}{2} \log 2\pi$$

Exploding costs

GPs scale poorly with large datasets

$O(n^3)$ computation usually takes the blame: $M^{-1}$ or $M^{-1}\mathbf{y}$, $M^{-1}\mathbf{k}_*$ and $\det(M)$

Not the only story: $K_{ij} = k(x_i, x_j)$
- $O(dn^2)$ computation
- $O(n^2)$ memory

Large literature on GP approximations

20,000 points, GBs of RAM, $\sim 10^{12}$ f.p. operations

The “SoD Approximation” [1]

Trivial, obvious solution: randomly throw away most of the data

[Figure: two panels on inputs from 0 to 1, e.g. keeping 1/20 points.]

[1] Rasmussen and Williams (2006)

Local Regression

[Figure: kernel value and output $y$ against input $x$, with the test point $x_*$ marked.]

kd-trees for > 1 dimensions

Moore et al.
(2007) put the data in a kd-tree

Set $k(x_*, x_i)$ equal for many $x_i$ in a common node

So far:
- GP review and scaling problems
- Simple alternatives

Next:
- Numerical methods for full GP

Iterative methods

Alternatives to straightforward $O(n^3)$ operations:
- Conjugate Gradients (CG), e.g., Mark Gibbs thesis (1997)
- “Block Gauss–Seidel”, Li et al. (ICML 2007)
- Randomized approaches, e.g., Liberty et al. (PNAS 2007)
- ...

Matrix-vector multiplies dominate cost, $O(n^2)$ each

Example: CG finds $\alpha = M^{-1}\mathbf{y}$ by iterative optimization:

$$\alpha = \operatorname*{argmax}_{z} F(z), \qquad F(z) = \mathbf{y}^\top z - \tfrac{1}{2}\, z^\top M z$$

Comparing iterative methods

Focus has been on mean prediction:

[Figure taken from “Large-scale RLSC learning without agony”, Li et al. 2007.]

Training on 16,384 datapoints from SARCOS

[Figure: relative residual against iteration number, log-log axes, for CG, CG init, GS cluster and GS.]

Test error progression

[Figure: two panels of SMSE against iteration number.]

So far:
- GP review and scaling problems
- Simple alternatives
- Numerical methods for full GP

Next:
- Fast MVMs intended to speed up GPs

Accelerating MVMs

- CG originally for sparse systems, MVMs in $< O(n^2)$
- GP kernel matrix is often not sparse
- Zeros in the covariance ⇒ marginal independence
- Short length scales usually don’t match my beliefs
- Empirically, I often learn lengthscales ≈ 1

[Figure: three panels plotted against input $x$; two mark the test point $x_*$.]

Fast, approximate MVMs

- MVMs and similar ops needed in many algorithms
- Fast MVMs involving kernels are being actively researched
- Alternatives to CG also need MVMs

Simplest idea: give nearby points equal kernel values:

$$(K\alpha)_j = \sum_i k(x_j, x_i)\,\alpha_i \approx \sum_C k(x_j, \langle x_i \rangle_C) \sum_{i \in C} \alpha_i$$

[Figure: kernel value around the test point $x_*$.]

Simple kd-trees and GPs

Merging GP kernels in kd-trees doesn’t work.
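As a rough illustration of the merging approximation from the “Fast, approximate MVMs” slide, the sketch below compares the exact product with a merged one in 1-D. It is not the kd-tree code behind the talk's experiments: fixed-width bins stand in for tree nodes, and the function names, the `width` parameter and the random data are all assumptions made for this example.

```python
import numpy as np

def gauss_kernel(a, b, ell):
    """k(a_j, b_i) = exp(-0.5 |a_j - b_i|^2 / ell^2) for 1-D inputs."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / ell**2)

def exact_mvm(x_out, x, alpha, ell):
    """Exact (K alpha)_j = sum_i k(x_j, x_i) alpha_i; O(mn) kernel evaluations."""
    return gauss_kernel(x_out, x, ell) @ alpha

def merged_mvm(x_out, x, alpha, ell, width):
    """Approximate MVM: all points in a cluster C share the kernel value
    evaluated at the cluster mean <x_i>_C, weighted by sum_{i in C} alpha_i."""
    # Hypothetical clustering: fixed-width bins instead of kd-tree nodes.
    labels = np.floor(x / width).astype(int)
    _, inv = np.unique(labels, return_inverse=True)
    counts = np.bincount(inv)
    centres = np.bincount(inv, weights=x) / counts    # <x_i>_C
    alpha_sums = np.bincount(inv, weights=alpha)      # sum_{i in C} alpha_i
    return gauss_kernel(x_out, centres, ell) @ alpha_sums

# Illustrative data only; alpha would normally be M^{-1} y.
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, 200)
alpha = rng.standard_normal(200)
x_test = np.linspace(0.0, 1.0, 50)

exact = exact_mvm(x_test, x, alpha, ell=0.5)
approx = merged_mvm(x_test, x, alpha, ell=0.5, width=0.05)
print("max abs error of merged MVM:", np.max(np.abs(exact - approx)))
```

Shrinking `width` until every point sits in its own bin recovers the exact product, so the bin width trades accuracy against the number of kernel evaluations.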
Example: single test-time MVM for 2-D synthetic data:

[Figure: two log-log panels, time/s and mean abs error, against lengthscale $\ell$, for Full GP and KD-tree.]

Might better code, another recursion, or another type of tree work?

The merging idea is flawed

[Figure: output $y$ against input $x$ from 0 to 1, showing Data, Full GP mean, Merge method and Subset of Data.]

$$\alpha = M^{-1}\mathbf{y}, \qquad m_* = \sum_i k(x_*, x_i)\,\alpha_i$$

Improve test time by grouping sum into pairs:

$$k(x_*, x_i)\,\alpha_i + k(x_*, x_{i+1})\,\alpha_{i+1} \approx (\alpha_i + \alpha_{i+1})\, k\!\left(x_*, \frac{x_i + x_{i+1}}{2}\right)$$

(I)FGT expansions

- Gaussian kernel only
- Series expand MVM into terms involving single points
- Aim: $m \times n$ MVM, $O(mn) \to O(m+n)$
- Only works in low dimensions (unless lengthscales are huge)

[Figure: two log-log panels, time/s and mean abs error, against lengthscale $\ell$, for Full GP, KD-tree, FGT and IFGT.]

Do we believe the GP anyway?

[Figure: two panels; no axis labels recoverable.]

- Real data is nasty: thresholding, jumps, clumps, kinks
- Some sparse GP “approximations” introduce flexibility
- Local GPs are sometimes surprisingly good
- Mixtures of GPs

Summary

• Fast MVMs are required to leverage iterative methods
• Tree-based merging methods fail on simple problems. This isn’t acknowledged in the literature
• The IFGT is fast and accurate in low dimensions only
• Is it worth using so much data? Is the model flexible enough to justify it?

Extra Slides

Folk Theorem

“When you have computational problems, often there’s a problem with your model.”

e.g.
Andrew Gelman: http://www.stat.columbia.edu/~cook/movabletype/archives/2008/05/the_folk_theore.html

Inducing point methods

Approximate the GP prior:

$$p(\mathbf{f}, \mathbf{f}_*) = \int p(\mathbf{f}, \mathbf{f}_* \mid \mathbf{u})\, p(\mathbf{u})\, d\mathbf{u} \simeq q(\mathbf{f}, \mathbf{f}_*) = \int q(\mathbf{f} \mid \mathbf{u})\, q(\mathbf{f}_* \mid \mathbf{u})\, p(\mathbf{u})\, d\mathbf{u}$$

Several methods result from choosing different $q$’s: SoR/DIC, PLV/PP/DTC, FI(T)C/SPGP and BCM

Quiñonero-Candela and Rasmussen (2005)

SoR/DIC, a finite linear model

$q(\mathbf{f} \mid \mathbf{u})$ deterministic: $\mathbf{u} \sim \mathcal{N}(0, K_{uu})$, set $f_* = \mathbf{k}_*^\top K_{uu}^{-1} \mathbf{u}$

Draws from the prior:

[Figure: prior draws on inputs from 0 to 1.]

Costs ($m$ inducing $\mathbf{u}$’s):
- $O(m^2 n)$ training
- $O(mn)$ covariances
- $O(m)$ mean prediction
- $O(m^2)$ error bars

Limited fitting power

Those SoR prior draws again:

[Figure: the same prior draws on inputs from 0 to 3.]

FIC / SPGP

$$q(\mathbf{f} \mid \mathbf{u}) = \prod_i p_{\mathrm{GP}}(f_i \mid \mathbf{u})$$

[Figure: prior draws on inputs from 0 to 3.]

$O(\cdot)$ costs are the same as SoR

[If time or at end: discuss low noise behaviours]

Training and Test times

Training time: before looking at the test set

| Method | Covariance | Inversion |
|---|---|---|
| Full GP | $O(n^2)$ | $O(n^3)$ |
| SoD | $O(m^2)$ | $O(m^3)$ |
| Sparse | $O(mn)$ | $O(m^2 n)$ |

Test time: spent making predictions

| Method | Mean | Error bar |
|---|---|---|
| Full GP | $O(n)$ | $O(n^2)$ |
| SoD | $O(m)$ | $O(m^2)$ |
| Sparse | $O(m)$ | $O(m^2)$ |

Test NLP vs. Training time

[Figure: mean negative log probability against train time /s, log time axis. Panels: 4D synthetic data; 21D real robot arm data.]

Test NLP vs.
Test time

[Figure: mean negative log probability against test time per 10,000 test cases /s, log time axis. Legend: sod.thrs, dtc.thrs, dtc.thgs, dtc.lhgs, fitc.thrs, fitc.thgs, fitc.lhgs. Panels: 4D synthetic data; 21D real robot arm data.]

Summary

• When training time dominates: SoD can be best(!); FIC wins when good hyper-parameters need to be learned
• When test time dominates: FIC is best in practice and in theory (but see Ed’s thesis for updates)

Conjugate Gradients

Another way of saying $\alpha = M^{-1}\mathbf{y}$:

$$\alpha = \operatorname*{argmax}_{z} F(z), \qquad F(z) = \mathbf{y}^\top z - \tfrac{1}{2}\, z^\top M z$$

Each iteration:
- picks a new direction and optimizes along a line
- matrix-vector multiply dominates cost, $O(n^2)$

Provable error tolerances tricky, but researched

Numerical instability and accumulation of errors hard to analyse

Conjugate Gradients results

(Trying hard to get a speedup)

Synthetic data: $D = 4$, $\sigma_n = 0.1$

[Figure: SMSE against time / s. Legend: \ Chol., CG. Panels: $\ell = 1$; $\ell = 0.1$.]

Real robot arm data: $D = 21$

[Figure: SMSE against time / s. Legend: \ Chol., CG. Panels: plain; preconditioned with FIC; low-memory.]

Some timings

Example training times for fixed hyper-parameters on SARCOS. All times are in seconds on a 2.8 GHz AMD Opteron with Matlab 7.4.

| Subset size | Setup time | Chol. solving time | Total time |
|---|---|---|---|
| 4096 | 3.1 | 6.5 | 9.6 |
| 8192 | 9.0 | 55.2 | 64.2 |
| 16384 | 28.9 | 375.8 | 404.7 |
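The conjugate gradients iteration described on the “Conjugate Gradients” slide can be sketched as follows. This is a textbook CG loop, not the code that produced the timings above; it assumes $M$ is symmetric positive definite and is reached only through a function computing $Mz$. The name `conjugate_gradients`, the tolerance and the toy data are illustrative choices.

```python
import numpy as np

def conjugate_gradients(mvm, y, tol=1e-8, max_iter=None):
    """Approximately solve M alpha = y, i.e. maximise F(z) = y'z - 0.5 z'Mz,
    using only matrix-vector products z -> M z (M assumed symmetric PD)."""
    y = np.asarray(y, dtype=float)
    n = y.shape[0]
    max_iter = n if max_iter is None else max_iter
    alpha = np.zeros(n)
    r = y.copy()             # residual y - M alpha, which is the gradient of F
    d = r.copy()             # first search direction: steepest ascent
    rs = r @ r
    y_norm = np.linalg.norm(y)
    for _ in range(max_iter):
        if np.sqrt(rs) <= tol * y_norm:
            break            # relative residual is small enough
        Md = mvm(d)          # the O(n^2) step for a dense M
        step = rs / (d @ Md) # exact maximisation of F along the line alpha + step * d
        alpha += step * d
        r -= step * Md
        rs_new = r @ r
        d = r + (rs_new / rs) * d   # new direction, conjugate to the previous ones
        rs = rs_new
    return alpha

# Toy GP system M = K + sigma_n^2 I with a Gaussian kernel (illustrative values).
rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, 200)
y = np.sin(6.0 * x) + 0.1 * rng.standard_normal(200)
K = np.exp(-0.5 * (x[:, None] - x[None, :]) ** 2 / 0.2**2)
M = K + 0.1**2 * np.eye(200)

alpha_cg = conjugate_gradients(lambda z: M @ z, y)
alpha_direct = np.linalg.solve(M, y)
print("max abs difference from direct solve:", np.max(np.abs(alpha_cg - alpha_direct)))
```

Passing `mvm` as a function rather than a matrix is deliberate: any fast approximate MVM could be substituted for `lambda z: M @ z` without touching the loop.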