2 Math–Related Areas of SLD - Colorado Department of Education


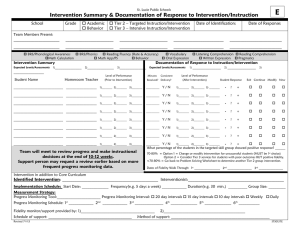

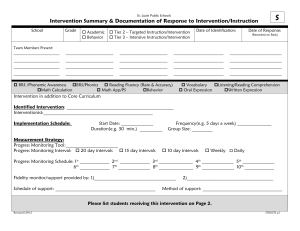

2 Math-Related Areas of SLD
Math Calculation
Math Problem Solving
Determining Progress
Measuring Fidelity
Case Studies and Examples
Jason Harlacher, PhD, NCSP
Colorado Department of Education

I. Big Ideas in Math
II. Assessments for math
◦ Academic Skill Deficit (low level)
◦ Insufficient Progress (low growth)
III. Determining Progress
IV. Measuring Fidelity
V. Case Studies and Examples

32 million adults (14%) are identified as illiterate; 50 million can’t read above a 4th grade level (education-portal.com)
32% of fourth-graders and 33% of eighth-graders scored at Proficient level (NAEP, 2007)
No change in 4th grade scores from 2007 to 2009
(Jayanthi et al., 2008)
39% of fourth-graders and 34% of eighth-graders scored at Proficient level (NAEP, 2009)
No change in 4th grade scores from 2007 to 2009 (NAEP, 2009)
Ratio of studies on reading disabilities to math disabilities was 5:1 from 1996-2005 (compared to 16:1 in the prior decade)

Think of at least 2 ways you can add, subtract, multiply, or divide to get to the number 20.

Number Sense: “a child’s fluidity and flexibility with numbers, the sense of what numbers mean, and an ability to perform mental mathematics...and make comparisons” (Gersten & Chard, 1999; p. 20)
Not just knowing math facts, but also flexibility
◦ 8 + 5 = 13, and so does 8 + 2 + 3

Tell me the sounds in “CAT”
Just as phonemic awareness is a critical early skill for reading, number sense is viewed as a critical early skill for mathematics.

Developmental Progression
For early mathematics:
Counting: Nonverbal and verbal enumeration of smaller to larger sets.
Comparing and ordering: Able to compare quantities and sets. Prerequisite for the idea of movement and change. Need to envision a mental number line.
Equal partitioning: Ability to equally partition sets (6 into 3 and 3).
Composing and decomposing: Finding subsets within numbers, such as 7 has 3 and 4. Able to deal with larger numbers more abstractly.
Grouping and place value (unitizing): Progression in abstractly grouping objects into sets, particularly in 10s (24 has two 10s and 4).
Adding to/taking away: Ability to notice and create increases and decreases in sets of items.
(Clements et al., 2004; Gersten & Chard, 1999; Methe & Riley-Tillman, 2008)

National Council of Teachers of Mathematics (nctm.org)

| Content Strands (the what) | Math Processes (the doing) |
| --- | --- |
| Number and Operations | Problem Solving |
| Algebra | Reasoning and Proof |
| Geometry | Connections |
| Measurement | Communication |
| Data Analysis | Representation |

http://standards.nctm.org/document/appendix/numb.htm

I. Big Ideas in Math
II. Assessments for math
◦ Academic Skill Deficit (low level)
◦ Insufficient Progress (low growth)
III. Determining Progress
IV. Measuring Fidelity
V. Case Studies and Examples

Computation (Mathematical Calculation)
◦ Completing math problems where students must know concepts, strategies, and facts (includes decimals, fractions, percents)
Applications (Mathematical Problem-Solving)
◦ Use and understanding of math concepts to solve problems (e.g., word problems, measurement, temperature, volume)
(Thurber et al., 2002)

What is a deficit?
Common guidelines are:
◦ 10th percentile and below
◦ 6 consecutive data points on CBM monitoring graph below 12th percentile
◦ Less than 50% on criterion-referenced tests
◦ Gap ratio greater than 2.0
Overall convergence of evidence should indicate a deficit.

Gap Analysis is a method in which the gap between a student’s expected level of performance and current level of performance is quantified in a ratio.

$$\text{Gap Ratio} = \frac{\text{Expected Level}}{\text{Current Level}}$$

Students who are more than 2.0 times below the expected level of performance are considered to have a significant gap.
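The gap ratio defined above is simple division, so it can be sketched in a few lines of Python. This is only an illustration: the function names and the `threshold` parameter are hypothetical, and the 2.0 cut-off is taken from the guideline stated above.

```python
def gap_ratio(expected: float, current: float) -> float:
    """Expected level divided by current level (both in the same unit)."""
    if current <= 0:
        # Assumption: a zero or negative score cannot be expressed as a ratio.
        raise ValueError("current level must be greater than zero")
    return expected / current


def has_significant_gap(expected: float, current: float, threshold: float = 2.0) -> bool:
    """True when the student is more than `threshold` times below the expected level."""
    return gap_ratio(expected, current) > threshold


if __name__ == "__main__":
    # Same student (32 WPM) compared against two different expected levels.
    for label, expected in (("national norm", 78), ("local norm", 60)):
        ratio = gap_ratio(expected, 32)
        print(f"{label}: ratio {ratio:.2f}, significant gap: {has_significant_gap(expected, 32)}")
```

Because the ratio depends entirely on which expected level is used, the same current score can look significant against one norm and not against another.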
| Expected Level | Current Level | Expression | Gap Ratio | Sign. Gap? |
| --- | --- | --- | --- | --- |
| 78 Words per Minute | 60 Words per Minute | 78/60 | 1.30 | No |
| 35 Sounds | 12 Sounds | 35/12 | 2.92 | Yes |
| 35 Correct Digits | 40 Correct Digits | 35/40 | 0.88 | No |
| 85% on District Benchmark | 63% on District Benchmark | 85/63 | 1.35 | No |
| 3.4 Words per Week (growth) | 1.6 Words per Week (growth) | 3.4/1.6 | 2.13 | Yes |

Anything less than 2.0 is okay, whether they score above or below the expected level.

Where the Expected Level of Performance comes from and the context of the student’s school are critical.

| Expected Level | Current Level | Expression | Gap Ratio | Sign. Gap? |
| --- | --- | --- | --- | --- |
| 78 WPM (National Norm) | 32 WPM | 78/32 | 2.44 | Yes |
| 60 WPM (Local Norm) | 32 WPM | 60/32 | 1.88 | No |

Compared to national norms, the student has a significant gap. Compared to local norms, the student does NOT have a significant gap.
Where would you intervene? With the student or elsewhere? Remember the theory behind the problem-solving model and RTI.

Let’s make a list. As a table/group, what are 2 assessments you generally use to show a student’s academic level in:
◦ math calculation?
◦ applications?

Computation / Applications
How would you quantify each source of information to determine if it’s a “skill deficit”? (Percentile? Gap ratio? Level?)

◦ Achievement tests (WIAT, WJ, KTEA, KeyMath, TEMA,…)
◦ State testing scores (CSAP, other state scores)
◦ Diagnostic assessments (G-MADE, MAP, KeyMath, STAR)
◦ Observations (illustrate comparison between peers and student)
◦ Curriculum-based assessment (CBA, CBM, CBE)
◦ Other data indicating relation to peers/grade level (district assessments, gradebook, student work, quizzes, end-of-unit tests, inventories)

What is insufficient progress?
◦ Let’s talk about this, as it’s not as straightforward as “academic skill deficit”

Requires a progress monitoring tool that is:
◦ Reliable (consistency)
◦ Valid (measures what’s intended & key indicator)
◦ Sensitive to growth (picks up changes in skills)
◦ Efficient to administer (quick and easy)
◦ Standardized (rules out bias or administrator effect)
◦ Available in alternate forms (avoids practice effects)

CBM fits all of the characteristics of an effective progress monitoring tool.
CBM is a standardized method of assessment that measures basic skills
◦ Brief, reliable, valid indicators
◦ A thermometer for academic health
Tells you the child’s level of risk (screener) and if instruction is working (progress monitor)
◦ Can monitor anywhere from twice/week to 3 times/year
Sidenote: CBM is a type of assessment. AIMSweb, DIBELS, EdCheckUp, and easyCBM are companies that have created passages/probes to use with CBM.

Curriculum-Based Measurement (AIMSweb):
◦ Computation: Math Curriculum-based Measurement (M-CBM), Math Computation (M-COMP), Fact Probes
◦ Early Numeracy: Test of Early Numeracy (TEN)
◦ Applications: Math Concepts and Applied Problems (M-CAP)

Older version of math computation (AIMSweb)
2 or 4 minute, mixed-computation probe
Score number of correct digits
◦ Can score answer process with grades 5 & 6
3 Benchmark, 40 Monitoring probes, grades 1 to 6
◦ Will be discontinued in Fall 2011
[Sample probes: Grade 1, Grade 6]

Similar to M-CBM, for grades 7 and 8
◦ 4 minutes, group or individual administration
Score number of correct digits or answer process
3 Benchmark, 40 Monitoring probes
◦ Includes decimals, percents, and fractions

8 minute, mixed computation probes for grades 1 to 8
3 Benchmark and 30 Monitoring probes (AIMSweb)
Group or individual administration
Ease of scoring (right or not)
[Sample probes: Grade 1, Benchmark 2; Grade 1, Benchmark 1; Grade 7]

Take 1 minute and complete the M-COMP in your handouts.
To score: Circle the point value if correct; circle zero if incorrect.
◦ Alternate answers are correct if they show conceptual understanding (e.g., ½ = .50).
For each correct answer, write the number of points the problem is worth on your answered problems.

Single-skill or multi-skill probes focusing on basic math facts
2 minutes, group or individual administration; 40 probes
Basic fact = 0 to 12

Other sources: Monitoring Basic Skills Progress (MBSP), InterventionCentral.org, Mathfactcafe.com, STAR Math, easyCBM, EdCheckUp

Measure early numeracy skills (number sense) for grades K-1
3 benchmark, 30 monitoring probes
4 assessments

What is the purpose of a screener?
◦ Identify students’ level of performance (Are they at-risk?)
A diagnostic tool?
◦ To identify specific skill deficits and strengths (Why are they at-risk?)
A progress monitoring tool?
◦ To monitor a change in students’ level of performance (Is the instruction effective?)

What varying types of assessment do you have for building a Body of Evidence related to MATH COMPUTATION in order to demonstrate academic skill deficit and insufficient progress?

Applied math probes for grades 1 to 8
◦ Eight minutes for grades 1 to 6, ten minutes for grades 7 and 8
3 Benchmark and 30 Monitoring probes (AIMSweb)
Group or individual administration
Ease of scoring (right or not)
Skills covered: counting, number concepts, number naming, money, grid, measurement, time, charts and graphs, fractions, decimals, applied computation, word problems
[Sample probes: Grade 2, Grade 7]

Take 1 minute and complete the M-CAP.
◦ Score in a similar manner as M-COMP.

Other sources: Monitoring Basic Skills Progress (MBSP), InterventionCentral.org, easyCBM

What varying types of assessment do you have for building a Body of Evidence related to MATH APPLICATIONS in order to demonstrate academic skill deficit and insufficient progress?

To measure academic skill deficits/level, use the same old basics. Think of a way to quantify it (percentile, gap?)
To measure progress, need CBM scores:
◦ M-COMP, M-CBM, Fact Probes for math computation
◦ M-CAP for applied math
◦ TEN for early numeracy
◦ Other CBM probes available from easyCBM, EdCheckUp, DIBELS, Monitoring Basic Skills (Fuchs)

I. Big Ideas in Math
II. Assessments for math
◦ Academic Skill Deficit (low level)
◦ Insufficient Progress (low growth)
III. Determining Progress
◦ Graphing Basics
◦ Determining Response
IV. Measuring Fidelity
V. Case Studies and Examples

Learning is an interaction between the learner, curriculum, and environment (instruction). (Howell & Nolet, 2000)
Have the plan with you. If you only have the data, you may only focus on the learner.
The question you are asking is “Is the instructional plan working?”
[Graph: Positive Outcomes; 2nd grader, Words Read Correct Per Minute score]

Use a graph with a scale that matches the average range of performance. Otherwise, progress (or lack thereof) can be misrepresented.
[Graph: 2nd grader, Words Correct Per Minute score, y-axis 0 to 140, average range (Spring) marked]
[Graph: 2nd grader, Correct Digits/2 Minutes score, y-axis 0 to 140, average range (Spring) marked]

Make sure the x-axis is chronological and that data points represent the day they were administered.
[Graph: same data plotted by session number (1 to 10) and by date (1/1 to 6/1)]

Graphing Basics: Every graph needs:
- A goal
- An aimline (goal line)
- A trendline

1. Establish “Pattern of Performance”
First and foremost, you need a pattern of performance established.
[Graph: 10 data points, y-axis 0 to 90]
Can you predict with reasonable confidence the next data point?
[Graph: 10 data points, y-axis 0 to 90]
As a general guide, it takes 8-10 data points to establish a reliable pattern of growth.
Can you predict with reasonable confidence the next data point?

[Graphs (four examples): Words Correct/Minute, 12/24 to 6/9, goal of 110]
Pattern of performance? Can you predict the next data point with reasonable confidence?

1. Establish “Pattern of Performance”
◦ If no pattern, don’t judge; collect more data.
◦ When in doubt, collect more data.
◦ 8-10 data points within one intervention are needed for a technically adequate and reliable growth pattern. That doesn’t mean you can’t make changes before then, but you need 8-10 for a reliable slope.

2. What does the trendline look like compared to the aimline?
Actual Growth to Expected Growth
After 8-10 data points, compare trendline to aimline.
[Graph: trendline and aimline, 12/24 to 5/22]

How does the trendline look compared to the aimline?
Positive response:
◦ Trendline is steeper than the aimline. Gap is closing/closed.
[Graph: trendline steeper than aimline, 12/24 to 5/22]
Continue “as is”, but consider ending intervention if goal is met or above aimline.
Poor response:
◦ Trendline is flatter than the aimline. Gap is widening.
[Graph: trendline flatter than aimline, 12/24 to 5/22]
Check fidelity. If poor, improve it and continue. If good, make an instructional change!

Questionable response:
◦ Trendline is parallel with aimline. Gap is staying consistent.
◦ Can treat as a “Poor Response”, but the intervention may just need a fidelity check or to be intensified.
[Graph: trendline parallel with aimline, 12/24 to 5/22]
Check fidelity. If poor, improve it and continue. If good, intensify intervention or make an instructional change!

[Diagram: Response to Intervention; performance over time across Baseline and Intervention phases, with aimline and trendlines for Positive, Questionable, and Poor response]

[Graphs (three examples): 12/24 to 6/9] How does the trendline look compared to the aimline?
Would you trust this trendline?! Don’t forget pattern of performance before you judge a trendline! Most data entry programs will calculate a trendline regardless of how the data looks.

1. Is there a pattern of performance?
◦ If no, gather more data.
2. Positive or Poor Response?
◦ If Positive, continue or consider ending intervention if goal is met.
◦ If Poor or Questionable, then…
3. Check fidelity.
◦ If fidelity is poor, fix it and continue with plan.
◦ If good,…
4. Make changes to alter rate of growth (major changes if poor response; perhaps minor changes if questionable response).

[Graphs (two examples): 12/24 to 6/9] 1) Pattern of performance? 2) Trendline compared to aimline?

Instructional changes: “Smallest, yet most powerful factor”
Doesn’t always have to mean “change the program”
Improve fidelity; opportunities to respond; accuracy of responses; pacing; person facilitating group; error correction format; level of explicitness; amount of review; time to practice skills; size of group; skill focus of intervention; pre-correction strategies; format of student response; incentives during group; time; setting; regroup students (not exhaustive)

For students who are low-performing, are suspected of having a disability, or have a disability:
◦ Make instruction more explicit
◦ More opportunities to respond (OTRs)
  • For small groups, 8-12 OTRs/minute
  • For larger groups it varies based on task, but about 6-8/minute
◦ More immediate and clearer corrective feedback
  • Should be accurate with at least 95% of responses and provided correction for 100% of errors
◦ More time to practice skills
(Jayanthi et al., 2008; Best Practices in School Psychology V)

[Graph: 12/24 to 6/9, with intervention change marked] 1) Pattern of performance? 2) Trendline compared to aimline?
[Graph: 12/24 to 6/9, Tier 2 and Tier 3 phases] 1) Pattern of performance? 2) Trendline compared to aimline? Insufficient Progress: Possible Referral?
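The trendline-to-aimline comparison described above can be sketched in Python. This is a rough illustration rather than a prescribed procedure: the trendline here is an ordinary least-squares slope (the slides do not say how the trendline is fitted), the function names are hypothetical, and the 10% band used to decide that two slopes are "parallel" is an assumption chosen only for the example. The point-count check is a stand-in for judging pattern of performance, which still requires looking at the graph.

```python
def ols_slope(weeks, scores):
    """Least-squares slope of scores over weeks (one common way to fit a trendline)."""
    n = len(weeks)
    if n != len(scores) or n < 2:
        raise ValueError("need at least two paired data points")
    mean_x = sum(weeks) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in weeks)
    if sxx == 0:
        raise ValueError("all data points share the same week")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, scores))
    return sxy / sxx


def aimline_slope(baseline, goal, weeks_to_goal):
    """Expected growth per week needed to move from baseline to goal."""
    return (goal - baseline) / weeks_to_goal


def classify_response(weeks, scores, aim_slope, min_points=8, tolerance=0.10):
    """Label the response; assumes a positive aimline slope.

    min_points follows the 8-10 data point guideline; tolerance is a
    hypothetical band for treating the two slopes as parallel.
    """
    if len(scores) < min_points:
        return "collect more data"
    trend = ols_slope(weeks, scores)
    if trend > aim_slope * (1 + tolerance):
        return "positive"       # trendline steeper than aimline
    if trend < aim_slope * (1 - tolerance):
        return "poor"           # trendline flatter than aimline
    return "questionable"       # roughly parallel


if __name__ == "__main__":
    weeks = list(range(8))
    scores = [20, 22, 21, 24, 25, 27, 26, 29]  # made-up illustrative scores
    aim = aimline_slope(baseline=20, goal=40, weeks_to_goal=10)
    print(classify_response(weeks, scores, aim))
```

A label from a sketch like this should prompt the fidelity check and problem-solving steps listed above; it does not replace them.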
Your district/school will ultimately define “insufficient progress”, but generally speaking, it is:
8-10 data points within one intervention phase that indicate a poor or questionable response

But… there are two things to consider when you see a poor response:
◦ Growth Rate and Realistic Progress
◦ Intensity of Instruction (time, size of group)

• What is the growth rate? Also called ROI (Rate of Improvement).

$$\text{Growth Rate (GR)} = \frac{\text{Current Score} - \text{Beginning Score}}{\text{number of weeks}}$$

• How long will it take the student to reach the goal?

Example: Goal is 110 words in 10 weeks. Beginning score is 65 words; current score after 8 weeks is 95 words.
(95 − 65) / 8 = 3.75 words/week

Will the student meet the goal in time?
◦ Multiply the growth rate by the weeks left in the intervention. Add to the current level.
3.75 (growth rate) × 2 weeks = 7.5 (growth left in intervention); 7.5 + 95 (current level) = 102.5 at end

When will the student reach the goal?
(Goal (110) − Beginning level (65)) / Growth rate (3.75) = 45 (gap) / 3.75 (growth rate) = 12 weeks to reach goal (from start of intervention)

What growth rate is needed for the student to reach her goal in 10 weeks?
Example: goal of 25 points, current score of 17 points, 10 weeks.
25 − 17 = 8 (gap)
8 ÷ 10 weeks = 0.80 (growth rate needed)

| Grade | Realistic (Fuchs et al., 1993) | Ambitious (Fuchs et al., 1993) | Realistic (Deno et al., 2001) |
| --- | --- | --- | --- |
| 1 | 2 | 3 | 1.80 |
| 2 | 1.5 | 2 | 1.66 |
| 3 | 1 | 1.5 | 1.18 |
| 4 | 0.85 | 1.1 | 1.01 |
| 5 | 0.5 | 0.8 | 0.58 |
| 6 | 0.3 | 0.65 | 0.66 |

Typical practices / Effective practices

| Grade | Realistic | Ambitious |
| --- | --- | --- |
| 1 | 0.30 | 0.50 |
| 2 | 0.30 | 0.50 |
| 3 | 0.30 | 0.50 |
| 4 | 0.70 | 1.5 |
| 5 | 0.75 | 1.2 |
| 6 | 0.45 | 1.0 |

| Grade | M-CAP, AIMSweb |
| --- | --- |
| 2 | 0.4 |
| 3 | 0.2 |
| 4 | 0.1 |
| 5 | 0.1 |
| 6 | 0.1 |
| 7 | 0.2 |
| 8 | 0.1 |

Caution: Realistic growth rates are based on normative samples and students performing on grade level. Students who are below grade level and provided targeted instruction could have more growth relative to the rates listed here.

How much growth can you expect relative to the intensity of instruction?
◦ Two factors are time and size of the group
◦ More time and a smaller group make it more intense.
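The growth-rate arithmetic above (65 to 95 words over 8 weeks with a goal of 110, and 17 to 25 points in 10 weeks) can be reproduced with a short Python sketch. The function names are hypothetical and chosen only for this illustration; the numbers are the ones used in the worked examples.

```python
def growth_rate(beginning: float, current: float, weeks: float) -> float:
    """Rate of improvement: (current score - beginning score) / number of weeks."""
    return (current - beginning) / weeks


def projected_score(current: float, rate: float, weeks_left: float) -> float:
    """Current level plus the growth expected in the weeks left."""
    return current + rate * weeks_left


def weeks_to_goal(beginning: float, goal: float, rate: float) -> float:
    """Weeks from the start of intervention until the goal is reached at this rate."""
    if rate <= 0:
        # Assumption: with flat or negative growth the goal is never reached.
        raise ValueError("growth rate must be positive")
    return (goal - beginning) / rate


def needed_growth_rate(current: float, goal: float, weeks: float) -> float:
    """Growth per week required to close the gap in the time available."""
    return (goal - current) / weeks


if __name__ == "__main__":
    rate = growth_rate(beginning=65, current=95, weeks=8)
    print(rate)                                         # words per week so far
    print(projected_score(95, rate, weeks_left=2))      # level at end of the 10 weeks
    print(weeks_to_goal(65, 110, rate))                 # weeks needed to reach 110
    print(needed_growth_rate(current=17, goal=25, weeks=10))
```

Comparing the projected score with the goal shows whether the student will meet the goal in time; comparing the needed rate with the realistic and ambitious rates in the tables above shows whether the goal itself is reasonable.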
When making educational decisions (instructional change or eligibility), intensity of instruction is important to consider.

◦ Daily half hour lessons for 12-20 weeks (D’Agostino & Murphy, 2004)
◦ Daily half hour lessons for 10-20 weeks (Vaughn et al., in press)
◦ 30-50 minute sessions three times each week (O’Connor et al., 2005)
◦ 30 minute sessions four times each week (Burns et al., 2005)
◦ 20 minute sessions four times each week for 2.5 years (Torgesen et al., 1999)
◦ 50 minute sessions 4 times each week, Oct-May (Torgesen et al., 2003)
(RTI Innovations 2010)

◦ 10-minute daily sessions for 13 weeks (VanDerHeyden et al., 2007)
◦ 20-minute sessions three times/week, average of 10 weeks (Fuchs et al., 2008)
◦ 15-minute sessions three times/week for 18 weeks (Bryant et al., 2008)
◦ 20-40-minute sessions, four to five times/week (Gersten et al., 2009)
◦ 40-minute sessions (Fuchs et al., 2005)

Tier 2 appears to be:
• 20-30 minutes/day outside of core
• 3-5 times/week
Tier 3 appears to be:
• 45-60 minutes/day outside of core
• 3-5 times/week

Size of group
◦ Tier Two = 3 to 5:1
◦ Tier Three = 1 to 3:1

[Graph: 12/24 to 6/9, phases Tier 2, Tier 2: Phase 2, and Tier 3] 1) Pattern of performance? 2) Trendline compared to aimline?
The intensity of instruction and phase will play into decisions to evaluate.
Regardless of going into Tier 2, 3, or eval, problem-solving never stops.

Ensure basics with the graph (x- & y-axis, goal, aimline, trendline)
◦ Always have the instructional plan with you when you examine a graph
Examine Pattern of Performance first
Then consider Trendline compared to Aimline
Consider growth rates and intensity of instruction when making decisions.
Never stop problem-solving, whether you evaluate or not.

I. Big Ideas in Math
II. Assessments for math
◦ Academic Skill Deficit (low level)
◦ Insufficient Progress (low growth)
III. Determining Progress
IV. Measuring Fidelity
V. Case Studies and Examples

Fidelity: The extent to which the intervention/instruction was delivered as intended.
- Did it occur? (Integrity and Sufficiency)
- Did it match the student’s academic skill deficit?
http://www.cde.state.co.us/cdesped/download/pdf/SLDColoradoRulesFedRegs.pdf

| Exercise | Food | Time Lapse | Weight Loss |
| --- | --- | --- | --- |
| 3 times/week | No dessert! | 2 weeks | Goal: 4 pounds |
| 2 times/week | Had dessert 1 day/week | 2 weeks | Loss: 1 pound |
| 3 times/week | No dessert | 2 weeks | Loss: 2 pounds |

Goal Not Met (“not due to lack of instruction...”)
◦ Fidelity was good: Diet didn’t work
◦ Fidelity was unclear, not good, or don’t know: Not sure if diet would have worked. Improve fidelity and redo diet.
Ensuring fidelity avoids misattributing poor response to the diet when poor implementation is the culprit.

Direct Assessment
◦ Components of an intervention are clearly outlined in operational terms and directly observed.
◦ Often calculate a total percentage of the number of components implemented
Indirect Assessment
◦ Assess degree of implementation without directly observing instruction/intervention. Typically based on self-reports, interviews, and review of permanent products.
National Research Center on LD: http://www.ldaofky.org/RTI/RTI%20Manual%20Section%204%20-%20Fidelity%20of%20Implementation.pdf

[Example fidelity tools: from Heartland Agency in Iowa; from St. Croix River Education District; from Pitt County Schools, NC]

Indirect Assessments: Look at…
◦ self-reports (rating scales, checklists) on implementation
◦ lesson plans to ensure instruction was planned for and provided
◦ attendance to ensure the student was present to receive instruction
◦ student products to see that students participated in the instruction

[Example intervention log, Math: 10/12: 35, 45; 10/13: 45, 45; note: “Fire drill ended early, no fluency practice”. From Washoe County Schools; Reno, NV]

What are some intervention programs you use that you could create (or already have) direct assessment fidelity tools for?
How could (or does) your school indirectly measure fidelity?

Appropriate instruction implies more than just “Did it occur?”; it also asks if the instruction targeted the student’s deficits.
If the student has a deficit in time, would an intervention targeting math facts be appropriate?
Is a fluency intervention appropriate for a student with decoding deficits?

“STAY ON TARGET!”
We’re all well intentioned, but ensure it’s on target by doing good problem analysis and using research-based instruction.
1) Examine skills being targeted during instruction.
2) Compare to skills identified as deficits in the student.
Ensure that what you’re doing is going to be effective. It starts with identifying the problem well.

Fidelity and the term “appropriate instruction” ask:
- Did instruction/intervention occur?
- Did instruction/intervention match the student’s academic skill deficit?
There are a variety of direct and indirect ways to measure fidelity.
Creating a culture in which fidelity is expected and part of the routine makes it easier to collect data on it.

Old Model (Discrepancy)
- Cognitive/Aptitude
- Academic Level (Achievement)
- Social/emotional
- Observation
- etc?
New Model (Problem-Solving)
- Academic Level
- Academic Progress
- Previous interventions
- Fidelity information
- Social/emotional
- Observation
- etc?

One person cannot and should not do problem-solving and RTI on his/her own. The system is not built that way. It truly takes a village.
School-Level Teams, Grade-Level Teams, Classroom Teacher

I. Big Ideas in Math
II. Assessments for math
◦ Academic Skill Deficit (low level)
◦ Insufficient Progress (low growth)
III. Determining Progress
IV. Measuring Fidelity
V. Case Studies and Examples

6th grade male up for re-evaluation in spring. Currently classified under LD in reading. Considering math.
I. Academic Skill Deficit?
| Assessment | Result |
| --- | --- |
| Grade 3, 4, 5; State Math Assessments | Approaching Standards (next level is Proficient) |
| 4th grade; Nevada NRT | 21st percentile (grade 4) |
| 6th grade (Winter) Benchmark | 65% (on-track is 75%) |
| M-CBM (Winter 6th grade) | 15 Correct Digits (26 is criterion) |
| M-CAP (Winter 6th grade) | 6 Correct Problems (11 is criterion) |
| KTEA-II (Winter 6th grade) | 83 (13th percentile) in Computation; 80 (9th percentile) in Concepts and Application |

II. Insufficient Progress?
Tier I (Aug to present): Core block of 60 minutes, 5 days/week using Everyday Math.
• 20 minutes of whole-group instruction
• 20 minutes of small group
• 15 minutes of independent practice
• 5 minutes for transition, cleanup
Tier II (Sept - Jan): 30 additional minutes of instruction, 2 days/week in a group of 6 students. Targeting fact fluency using math games from curriculum. Also focusing on money, time, and word problems with supplemental core materials.
Tier III (Jan to present): 30 minutes of small group instruction with 3 students, 2 days/week. Targeting math facts, word problems, and analyzing graphs to solve problems. Using direct instruction math program.

II. Insufficient Progress
[Graph: number of correct digits on M-CBM, 9/1 to 6/1, Tier 2 and Tier 3 phases, goal of 26 CD. New goal line created after intervention change because a new expected rate of growth is needed to reach goal.]
[Graph: M-CAP scores, 9/1 to 6/1, Tier 2 and Tier 3 phases, goal of 11 CP]

III. Other factors
Fidelity
◦ Student present 96% of school days. Missed 1 day of math intervention all year.
◦ Teacher reported 95% of intervention components implemented (self-report sheet)
◦ Two observations for each tier: 100% of components implemented for Tier 2 and Tier 3
Observation
◦ Student on-task with rest of peers. Completing activities with accuracy.

Academic Skill Deficit? Skill deficit on math CBM and KTEA-II
Insufficient Progress?
Insufficient progress in computation, but responding in applied math
Fidelity? (Occurred? Appropriate?) Scores above 95% on fidelity measures. Targeting computation and applied math deficits
Response to instruction data? Data available. Received Tier 2 and Tier 3.
Observation? On-task and successful during intervention time. Differentiated instruction during core.
Decision: Qualified under LD in Math Computation, but not for Applied Math. Will re-examine data for applied math in 3 weeks.
As a group/table, would you consider qualifying? Why or why not?

3rd grade male evaluated for reading difficulties in spring.

| Assessment | Result |
| --- | --- |
| Informal Reading Inventory, Sept 3rd grade | Level HI (late 1st grade) |
| District Reading Benchmarks | 40%: Off Track (75% is On Track) |
| R-CBM, Winter 3rd grade | Read 60 Words Correct per Minute (criterion is 78); read with 92% accuracy (target accuracy is 95%) |
| Survey-level assessment, Winter 3rd grade | Met criterion at late 1st grade level (57 words with 95% accuracy) |
| STAR Reading Assessment, Fall 3rd grade | Grade equivalent score of 1.4 |
| WIAT (Achievement Test) | Basic Reading Skills: 86 (18th percentile); Reading Comprehension: 89 (23rd percentile) |
| Curriculum Based Evaluation | Revealed decoding as a deficit. Poor & inconsistent accuracy rate across reading passages from grades 1 to 3. |

Insufficient Progress?
SECOND GRADE
Tier I: Oct – Nov. Core block: 90 minutes of whole and small group instruction.
Tier II: Nov – Feb. 30 minutes, 2 times/week of small group reading. Group of 6-8 kids. Targeting fluency and comprehension.
Tier III: Feb – June. Reduced group size from 5 students to 3 students. Targeting fluency and comprehension.
THIRD GRADE
Tier I: Sep to present. Core block: 90 minutes of whole and small group instruction.
Tier III: Feb to present. 30 minutes, twice/week in a group of 4. Targeting fluency and decoding.
[Graph: SECOND GRADE, R-CBM (words correct/minute), Tier 2 and Tier 3 phases, new goal line after intervention change]
[Graph: Progress in Reading, Grade 3, Words Correct/Minute, 10/6 to 5/6; Tier 3 (continued from grade 2)]

III. Other factors
Fidelity
◦ Student present 92% of school days in grades 2 and 3.
◦ Student was in different schools and states for grades K and 1.
◦ No fidelity data collected on intervention.
◦ Principal observation noted lack of implementation of high quality instruction in core (few opportunities to respond, limited differentiated instruction).
Observation
◦ Observed during whole-class core instruction for 45 minutes.
◦ Was receiving vocabulary instruction and answered correctly 2/2 times.
◦ Attention wavered. Limited interaction with teacher.
◦ Conclusions: Few opportunities to respond, long time on vocabulary for student (no differentiation), no additional strategies to facilitate learning for student

Skill Deficit? About 1.5-2 grades below current grade placement. Scoring low, but skill deficit?
Insufficient Progress? Insufficient progress in Tier 2. Seems to be responding to Tier 3
Fidelity? (Occurred? Appropriate?) Intensity of instruction was limited (only 60 mins/week of additional instruction)
Response to instruction data? Available data.
Observation? Limited effective practices used and perhaps mismatch between student need and instruction.

Did not qualify for services because:
1. Could not say there was high fidelity intervention (missing data, second grade not matched to need)
2. Received additional instruction, but not matched to his need (see 2nd grade intervention focus). Lack of clear focus of instruction in grade 3 core.
3. Slight response to Tier 3 after targeting decoding skills more.

As a group/table, would you consider qualifying? Why or why not?

Look over the case study. Take 10-15 minutes to discuss and make a conclusion.
1) Would you lean more toward qualifying or not qualifying this student?
2) What is one factor that affected your answer to #1?
3) What would you do next for problem-solving?

Given your role and your school’s current situation, what is one piece of information from today that you will share with other staff in your building?

1) The body of evidence for SLD identification under a problem-solving model is different than one under a discrepancy model.
2) Consideration of focus and actual delivery of the instruction is much more pronounced.
3) Fidelity is not an evaluative tool for teachers. It’s about ensuring the “diet” was followed and whether it was appropriate for the need of the student.

Thank you so much for your attention and participation. Please contact me with any questions you may have.
Jason Harlacher, PhD, NCSP
◦ Principal Consultant, Colorado Dept of Educ
◦ Harlacher_j@cde.state.co.us

Effective teaching practices for students with SLD in math or low performance in math.
A few syntheses of research and meta-analyses have identified key methods for teaching students who are low in math and students with LD.
◦ Jayanthi et al., 2008
◦ Baker, Gersten, & Lee, 2002
◦ Gersten, Baker, & Chard, 2006
◦ National Math Advisory Panel’s Report

1. Use explicit instruction on a regular basis.
◦ Clear modeling with examples
◦ Practice time
◦ Frequent opportunities to respond
◦ Immediate corrective feedback
I do, We do, You do it together, You do it alone

2. Use multiple instructional examples.
◦ Careful selection and sequencing of examples
◦ Show variations, but highlight common but critical features
◦ Concrete to abstract, easy to hard, simple to complex

3. Have students verbalize decisions and solutions to a math problem.
◦ Verbalize or think aloud their decisions and solutions
    ◦ Specific steps (I divide my two to get half)
    ◦ Generic heuristics (Now I need to check my answer)
    ◦ Solution format (First add the numbers in the unit column)
    ◦ Self-questioning format (What should I do first?)

4. Teach students to visually represent the information in the math problem.
◦ More powerful with explicit instruction
◦ More powerful when students use a visual given by teacher vs. a self-generated one

5. Solve problems using multiple/heuristic strategies.
◦ Discuss/show different ways to solve problems.
◦ What is 9 × 5? Can be nine fives or five nines. Also ten fives minus one five.

6. Provide ongoing formative assessment data and feedback to teachers.
◦ Greater outcomes if teachers are given tips and suggestions to help decide:
    ◦ what to teach
    ◦ when to introduce the next skill
    ◦ how to group/pair students

7. Provide peer-assisted instruction to students.
◦ Good outcomes if low- and high-performing students are paired because:
    ◦ Low-performing student sees better strategies and methods used by high-performing student
    ◦ High-performing student solidifies knowledge by verbalizing/explaining it to other student
◦ Cross-age tutoring more powerful for students with LD (perhaps because of the gap in skill deficit?)
◦ PALS, Classwide Peer Tutoring, Team Assisted Individualization

Baker, Gersten, & Lee (2002). A synthesis of empirical research on teaching mathematics to low-achieving students. The Elementary School Journal, 103(1), 51-73.
Clements, W. et al. (2004). Engaging young children in mathematics: Standards for early childhood mathematics in education. Mahwah, NJ: Erlbaum.
Education-portal.com
Gersten, R., & Chard, D. (1999). Number sense: Rethinking arithmetic instruction for students with mathematical disabilities. Journal of Special Education, 3, 18–29.
Howell, K., & Nolet, V. (2000). Curriculum-based evaluation.
Jayanthi, M., Gersten, R., & Baker, S. (2008). Mathematics instruction for students with learning disabilities or difficulty learning mathematics. http://www.centeroninstruction.org/files/Teaching%20Math%20to%20SLD%20LD%20Guide.pdf
Methe & Riley-Tillman (2008). An informed approach to selecting and designing early mathematics interventions. School Psychology Forum, 2, 29-41.
Mathematics Learning Study & National Research Council (2001). Adding it up: Helping children learn mathematics. Washington, DC: National Academy Press.
National Council of Teachers of Mathematics. http://standards.nctm.org/document/appendix/numb.htm
Nation’s Report Card. http://nces.ed.gov/nationsreportcard/
Thurber, Shinn, & Smolkowski (2002). What is measured in mathematics tests? Construct validity of curriculum-based mathematics measures. School Psychology Review, 31(4), 498-513.

Best Practices in School Psychology (2009). Fifth Edition. NASP.
Burns, M., Appleton, J., & Stehouwer, J. (2005). Meta-analytic review of responsiveness-to-intervention research: Examining field-based and research-implemented models. Journal of Psychoeducational Assessment, 23, 381-394.
D'Agostino, J. V., & Murphy, J. A. (2004). A meta-analysis of Reading Recovery in United States schools. Educational Evaluation and Policy Analysis, 26(1), 23-38.
Deno, S. L., Fuchs, L. S., Marston, D. B., & Shin, J. (2001). Using curriculum-based measurement to establish growth standards for students with learning disabilities. School Psychology Review, 30(4), 507-524.
Fuchs, L. S., Fuchs, D., Hamlett, C. L., Walz, L., & Germann, G. (1993). Formative evaluation of academic progress: How much growth can we expect? School Psychology Review, 22, 27-48.
Gersten, R. et al. (2009). Assisting Students Struggling with Mathematics: Response to Intervention (RtI) for Elementary and Middle Schools. US Dept of Education. National Center for Education Evaluation and Regional Assistance.
Newman-Gonchar, R., Clarke, B., & Gersten, R. (2009). Multi-tier intervention and response to interventions for students struggling in mathematics. Center on Instruction.
O’Connor, R. E., Harty, K., & Fulmer, D. (2005). Tiers of Intervention in Kindergarten Through Third Grade. Journal of Learning Disabilities, 38, 532-538.
O’Connor, R. E., Fulmer, D., Harty, K., & Bell, K. (2005). Layers of Reading Intervention in Kindergarten through Third Grade: Changes in Teaching and Child Outcomes. Journal of Learning Disabilities, 38, 440-455.