Learning Progressions Project - University of Colorado Boulder

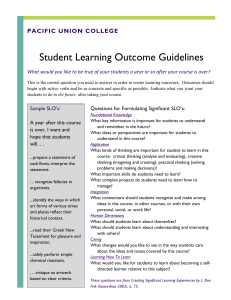
