Newton-Type Iterative Solver for Multiple View $ L2 $ Triangulation

A Note on Newton-Like Iterative Solver for Multiple View L2 Triangulation

∗

Abstract

In this paper, we show that the L2 optimal solutions to most real multiple view L2 triangulation problems can be obtained efficiently by two-stage Newton-like iterative methods, while the difficulty of such problems lies mainly in verifying the L2 optimality. The two-stage bundle adjustment approach has three features: first, the algorithm is initialized by symmedian point triangulation, a multiple-view generalization of the mid-point method; second, a symbolic-numeric method is employed to compute derivatives accurately; third, a globalizing strategy such as line search or trust region is smoothly applied to the underlying iteration, which assures algorithm robustness in general cases.

Numerical comparison with the tfml method shows that the local minimizers obtained by the two-stage iterative bundle adjustment approach proposed here are also the L2 optimal solutions for all the calibrated data sets made available online by the Oxford visual geometry group. Extensive numerical experiments indicate that the bundle adjustment approach solves more than 99% of the real triangulation problems optimally. An IEEE 754 double precision C++ implementation takes only about 0.205 second to compute all the 4983 points in the Oxford dinosaur data set via Gauss-Newton iteration combined with a line search strategy on a computer with a 3.4GHz Intel® i7 CPU.

Keywords: Triangulation; L2 optimality; iterative methods; line search; trust region.

1 Introduction

Triangulation is a critical topic in computer vision with applications in 3D object reconstruction, map estimation, robotic path-planning, surveillance and virtual reality. Efficient two-view triangulation methods and especially multiple-view L2 optimal ones have drawn intensive research interest; the latter give rise to favorable maximum likelihood estimates under the assumption of independent Gaussian noise but still remain not well resolved.

Triangulation algorithms which guarantee L2 optimality for up to three-view cases are mainly based on polynomial solving, symbolic-numeric Gröbner basis methods for solving polynomial systems, and branch-and-bound optimization techniques. Recent research indicates that an algorithm that finds a closed-form n-view L2 optimal solution does not exist.

∗ correspondence author


N EWTON -L IKE I TERATIVE S OLVER FOR M ULTIPLE V IEW L2 T RIANGULATION et al

A novel non-iterative method based on the fundamental matrix and linear matrix inequalities, tfml, by Chesi, is efficient and able to handle L2 triangulation with more than three views. The major limitations of tfml might be the low solution accuracy in the conservative cases and the fast decline in efficiency as the converted eigenvalue problem (EVP) grows in scale with the number of cameras. Despite these, tfml is probably by far the most successful n-view L2 triangulation method, and it comes naturally with a necessary and sufficient criterion for L2 optimality verification; it will be used as the benchmark here.

Traditional iterative methods such as bundle adjustment optimization via Levenberg-Marquardt are mainly criticized for the lack of an ideal initialization and for possible convergence to local minima. As far as we know, none of the state-of-the-art triangulation approaches which assert L2 optimality for multiple view triangulation are iterative methods. Recent publications indicate that bundle adjustment optimization performs worse than even some of the suboptimal methods.

We find that most of the real n-view L2 triangulation problems do not have the difficulty of multiple local minima, i.e., in most cases the global L2 optimal solutions can be approached by solving only a convex problem via simple Newton-like methods. As a matter of fact, many of the most cited real data sets can be globally solved by iterative methods with excellent accuracy and high efficiency. These data sets include but are not limited to dinosaur, model house, corridor, Merton colleges I, II and III, University library and Wadham College, which are made available online by the visual geometry group of Oxford university (VGG, http://www.robots.ox.ac.uk/~vgg/data/data-mview.html) and are widely used to evaluate new triangulation algorithms.

In our numerical experiments, Newton-Raphson, Gauss-Newton and Levenberg-Marquardt methods all work successfully on Oxford VGG data when being implemented by:

(1) initializing via symmedian-point triangulation to obtain a good start point;

(2) computing all derivatives, gradients and Hessians of the cost function included, via a symbolic-numeric approach (or multiple precision computation) to assure high accuracy;

(3) using Newton-like underlying iterative methods such as Newton-Raphson, Gauss-Newton and other variants, smoothly combined with globalizing strategies in order to handle hard cases when the symmedian point is not a good start point.

In this work we will show these implementation details and briefly introduce some criteria useful in verifying the L2 optimality. We intend to show that bundle adjustment optimization with appropriate implementation details performs well in practice in solving multiple-view L2 triangulation problems.

2 Implementation details of the iterative solver

The cost function of a typical unconstrained least square problem has the following form:

$$ \frac{1}{2} f(X) = \frac{1}{2} r(X)^T r(X) = \frac{1}{2} \sum_{i=1}^{m} \phi_i^2(X) \qquad (2.1) $$

The $n$-view L2 triangulation is the least square problem as in (2.2): given $n$ pinhole cameras $P_i \in \mathbb{R}^{3 \times 4}$ and $n$ 2D image points in homogeneous coordinates $x_i = (u_i, v_i, 1)^T$, find the global least square minimizer $X^*$:

$$ X^* = \arg\min_{X \in \mathbb{R}^3} f(X) = \arg\min_{X \in \mathbb{R}^3} \sum_{i=1}^{n} \| x_i - \hat{x}_i \|_2^2, \quad \text{where: } \hat{x}_i = (\hat{u}_i, \hat{v}_i, 1)^T = \frac{P_i \tilde{X}}{\lambda_i}, \; i = 1 \cdots n. \qquad (2.2) $$

$X = (x, y, z)^T$ represents a 3D scene point, $\tilde{X} = (x, y, z, 1)^T$ is $X$ in homogeneous coordinates and $\lambda_i$ is the projective depth corresponding to $P_i$.


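The projection of $n$-camera cases can be represented as in equation (2.3):

$$ \begin{pmatrix} \lambda_1 u_1 \\ \lambda_1 v_1 \\ \lambda_1 \\ \lambda_2 u_2 \\ \lambda_2 v_2 \\ \lambda_2 \\ \vdots \\ \lambda_n u_n \\ \lambda_n v_n \\ \lambda_n \end{pmatrix} = \begin{pmatrix} p^1_{11} & p^1_{12} & p^1_{13} & p^1_{14} \\ p^1_{21} & p^1_{22} & p^1_{23} & p^1_{24} \\ p^1_{31} & p^1_{32} & p^1_{33} & p^1_{34} \\ p^2_{11} & p^2_{12} & p^2_{13} & p^2_{14} \\ p^2_{21} & p^2_{22} & p^2_{23} & p^2_{24} \\ p^2_{31} & p^2_{32} & p^2_{33} & p^2_{34} \\ \vdots & \vdots & \vdots & \vdots \\ p^n_{11} & p^n_{12} & p^n_{13} & p^n_{14} \\ p^n_{21} & p^n_{22} & p^n_{23} & p^n_{24} \\ p^n_{31} & p^n_{32} & p^n_{33} & p^n_{34} \end{pmatrix} \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} \qquad (2.3) $$

and the $2n \times 1$ residue vector $r(X)$:

$$ r(X) = \begin{pmatrix} p^{*1}_{11} & p^{*1}_{12} & p^{*1}_{13} \\ p^{*1}_{21} & p^{*1}_{22} & p^{*1}_{23} \\ p^{*2}_{11} & p^{*2}_{12} & p^{*2}_{13} \\ p^{*2}_{21} & p^{*2}_{22} & p^{*2}_{23} \\ \vdots & \vdots & \vdots \\ p^{*n}_{11} & p^{*n}_{12} & p^{*n}_{13} \\ p^{*n}_{21} & p^{*n}_{22} & p^{*n}_{23} \end{pmatrix} \begin{pmatrix} x \\ y \\ z \end{pmatrix} - \begin{pmatrix} u_1 - p^{*1}_{14} \\ v_1 - p^{*1}_{24} \\ u_2 - p^{*2}_{14} \\ v_2 - p^{*2}_{24} \\ \vdots \\ u_n - p^{*n}_{14} \\ v_n - p^{*n}_{24} \end{pmatrix} = AX - B = \begin{pmatrix} \phi_1(X) \\ \phi_2(X) \\ \phi_3(X) \\ \phi_4(X) \\ \vdots \\ \phi_{2n-1}(X) \\ \phi_{2n}(X) \end{pmatrix} \qquad (2.4) $$

where

$$ p^{*i}_{l,m} = \frac{p^i_{l,m}}{\lambda_i}, \quad \lambda_i = p^i_{3,1} x + p^i_{3,2} y + p^i_{3,3} z + p^i_{3,4}, \quad i = 1, 2, \cdots, n, \; l = 1, \cdots, 3, \; m = 1, \cdots, 4. $$

Then the cost function $f(X)$ (2.2) has the equivalent least square problem form as in equation (2.1) with $m = 2n$.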
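As a minimal sketch of how (2.2) and (2.4) fit together, the following Python snippet evaluates the residue vector and the cost for a list of cameras. The function names (`project`, `residual`, `cost`) are hypothetical and not taken from the paper's C++ implementation; the two cameras are the first two synthetic cameras as read from (2.24), and the test point is the rounded gGN result for "SA2" from Table 1 written as exact fractions.

```python
# Illustrative helpers only; cameras are 3x4 nested lists, X is (x, y, z).

def project(P, X):
    """Return (u_hat, v_hat, lam): the reprojection and the projective depth."""
    Xh = (X[0], X[1], X[2], 1.0)
    a, b, lam = (sum(row[k] * Xh[k] for k in range(4)) for row in P)
    return a / lam, b / lam, lam

def residual(cameras, images, X):
    """Stack phi_{2i-1} = u_hat_i - u_i and phi_{2i} = v_hat_i - v_i into r(X)."""
    r = []
    for P, (u, v) in zip(cameras, images):
        u_hat, v_hat, _ = project(P, X)
        r.extend([u_hat - u, v_hat - v])
    return r

def cost(cameras, images, X):
    """f(X) = r(X)^T r(X), the sum of squared reprojection errors."""
    return sum(phi * phi for phi in residual(cameras, images, X))

# "SA2" example: first two synthetic cameras, both image points at (0, 0).
P1 = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1]]
P2 = [[-1, -1, -1, 0], [1, 0, -1, 1], [0, 0, 1, 1]]
X_sa2 = (-3 / 11, -2 / 11, 7 / 11)
f_sa2 = cost([P1, P2], [(0.0, 0.0), (0.0, 0.0)], X_sa2)
print(f_sa2)
```

Under these assumptions the value agrees with the SA2 reprojection error 0.0555... (that is, 1/18) reported in Table 1.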

2.1 Symmedian point method for initialization

The fast two-view mid-point triangulation method has been extended to n-view cases by generalizing the concept of the mid-point to the symmedian point, which has the least sum of squared distances to all the projection rays. This idea was initially proposed by Sturm et al in 2006, and a simple and detailed implementation can also be found in the literature. However, it seems the advantage of this method in initializing an L2 triangulation has not yet been sufficiently realized.

In 3D Euclidean space, a line $l_i$ can be defined by a fixed point $S_i$ and a direction $W_i$ as:

$$ l_i \triangleq \langle S_i, W_i \rangle \qquad (2.5) $$

where $W_i$ is a $3 \times 1$ unit direction vector with 2-norm equal to 1. Define the $3 \times 3$ projection $P_i$ as:

$$ P_i \triangleq I_{3 \times 3} - \frac{W_i W_i^T}{W_i^T W_i} = I_{3 \times 3} - W_i W_i^T \qquad (2.6) $$

because the distance $d_i$ between $X$ and line $l_i = \langle S_i, W_i \rangle$ satisfies the quadratic form:

$$ d_i^2 = \| P_i (X - S_i) \|_2^2 = (X - S_i)^T P_i^T P_i (X - S_i) \qquad (2.7) $$

then the symmedian point $\hat{X}$, which minimizes the sum of the squared distances $d_i^2$, is obtained by solving the $3 \times 3$ linear system of equations (2.8):

$$ \left( \sum_{i=1}^{n} P_i \right) \hat{X} = \sum_{i=1}^{n} (P_i S_i) \qquad (2.8) $$

This triangulation method requires pinhole camera factorization such that every projection ray can be represented by a fixed 3D point S_i and its direction W_i, both in Euclidean coordinates. Such a multiple-view triangulation approach via the symmedian point is linear, suboptimal and efficient. The symmedian points thus obtained in closed form are usually excellent initial values for further improvement in two-stage triangulation methods.
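The linear system (2.8) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rays are supplied directly as (S, W) pairs, so the camera factorization step is not shown, and the names `solve3` and `symmedian_point` as well as the use of Cramer's rule are choices made here, not the paper's implementation.

```python
import math

def solve3(A, b):
    """Solve the 3x3 system A x = b by Cramer's rule (adequate for a sketch)."""
    def det(M):
        return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
                - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
                + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))
    d = det(A)
    if abs(d) < 1e-14:
        raise ValueError("rays are (nearly) parallel; the system is singular")
    x = []
    for col in range(3):
        M = [[b[r] if c == col else A[r][c] for c in range(3)] for r in range(3)]
        x.append(det(M) / d)
    return x

def symmedian_point(rays):
    """rays: iterable of (S, W), a point on each ray and its direction."""
    A = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for S, W in rays:
        norm = math.sqrt(sum(w * w for w in W))
        W = [w / norm for w in W]           # enforce the unit-norm assumption
        for r in range(3):
            for c in range(3):
                p = (1.0 if r == c else 0.0) - W[r] * W[c]   # entry of I - W W^T
                A[r][c] += p                # accumulates sum_i P_i
                b[r] += p * S[c]            # accumulates sum_i P_i S_i
    return solve3(A, b)

# Two rays that intersect at (1, 0, 0): the symmedian point is the intersection.
rays = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)), ((1.0, 1.0, 0.0), (0.0, 2.0, 0.0))]
X0 = symmedian_point(rays)
print(X0)
```

When the rays do not intersect, the same call returns the point with the least sum of squared distances to them, which is what the two-stage methods use as the start point.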

Note that the two-stage iterative methods find the L2 optimal solutions only when the initial triangulation lies in the attraction basin of the global L2 optimum. However, it is difficult to establish which initialization algorithm is better than the others in general. Our extensive numerical experiments show that symmedian point triangulation outperforms other linear triangulation methods; comparison details are omitted here. The iterative methods discussed in this work are all initialized by symmedian points, while the tfml method by Chesi et al also works as an initializer but is much too expensive.

2.2 Symbolic-numeric computation of derivatives

A symbolic-numeric approach is employed to compute accurate derivatives of (2.2). Changes in the implementation of Newton-like iterative methods may cause significant differences in the numerical solutions to the general multiple-view triangulation, which is why we present these implementation details in a separate subsection. In fact, this is probably one of the reasons why bundle adjustment optimization has long been considered at most suboptimal even for the Oxford VGG data sets, besides the lack of a good initialization and the absence of an optimality verification criterion.

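Denote the $2n \times 3$ dimensional Jacobian matrix of $r(X)$ as:

$$ J(X) = \begin{pmatrix} \frac{\partial \phi_1}{\partial x} & \frac{\partial \phi_1}{\partial y} & \frac{\partial \phi_1}{\partial z} \\ \frac{\partial \phi_2}{\partial x} & \frac{\partial \phi_2}{\partial y} & \frac{\partial \phi_2}{\partial z} \\ \vdots & \vdots & \vdots \\ \frac{\partial \phi_{2n}}{\partial x} & \frac{\partial \phi_{2n}}{\partial y} & \frac{\partial \phi_{2n}}{\partial z} \end{pmatrix}_{2n \times 3} \qquad (2.9) $$

Then the gradient $g$ and Hessian $H$ of the cost function $f(X)$ are:

$$ g(X) \triangleq \nabla f(X) = J(X)^T r(X) = J(X)^T (AX - B) \qquad (2.10) $$

$$ H(X) \triangleq \nabla^2 f(X) = J(X)^T J(X) + \sum_{i=1}^{2n} \phi_i \nabla^2 \phi_i \qquad (2.11) $$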

It is critical to assure appropriately high accuracy of $g(X)$ (2.10) since the least square problem is converted into solving a nonlinear system (2.12) using iterative methods. Any perturbation on $g(X)$, numerical round-off errors included, means using solutions of a perturbed system $\hat{g}(X) = 0$ to approximate those of (2.12). Inappropriate approximation to derivatives may be one of the reasons why conventional implementations of iterative methods work unsatisfactorily even for L2 triangulation problems close to the noise-free trivial cases.

$$ g(X) = J(X)^T r(X) = J(X)^T (AX - B) = 0 \qquad (2.12) $$

The derivatives of the reprojection error cost function (2.2) are estimated via a symbolic-numeric approach.

Considering the $i$-th partition of $r(X)$, $\phi_{2i-1}$ and $\phi_{2i}$, corresponding to the $i$-th camera:

$$ r_i(X) = \begin{pmatrix} \phi_{2i-1} \\ \phi_{2i} \end{pmatrix} = \begin{pmatrix} \dfrac{p^i_{11} x + p^i_{12} y + p^i_{13} z + p^i_{14}}{p^i_{31} x + p^i_{32} y + p^i_{33} z + p^i_{34}} - u_i \\ \dfrac{p^i_{21} x + p^i_{22} y + p^i_{23} z + p^i_{24}}{p^i_{31} x + p^i_{32} y + p^i_{33} z + p^i_{34}} - v_i \end{pmatrix} \qquad (2.13) $$

Since both $\phi_{2i-1}$ and $\phi_{2i}$ are in rational forms and all such partitions are independent from each other, their first and second order partial derivatives, and therefore $J$ (2.9), $g$ (2.10) and $H$ (2.11), can be computed accurately in a symbolic-numeric manner.

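For example, the $i$-th partition of $J(X)$ is:

$$ J_i(X) = \begin{pmatrix} J_{2i-1} \\ J_{2i} \end{pmatrix} = \begin{pmatrix} \frac{\partial \phi_{2i-1}}{\partial x} & \frac{\partial \phi_{2i-1}}{\partial y} & \frac{\partial \phi_{2i-1}}{\partial z} \\ \frac{\partial \phi_{2i}}{\partial x} & \frac{\partial \phi_{2i}}{\partial y} & \frac{\partial \phi_{2i}}{\partial z} \end{pmatrix} \qquad (2.14) $$

Denote $\lambda_i = p^i_{3,1} x + p^i_{3,2} y + p^i_{3,3} z + p^i_{3,4}$ and the following 18 determinants as: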

$$ \begin{aligned} \Delta^{i,l}_{12} &= p^i_{l1} p^i_{32} - p^i_{l2} p^i_{31}, & \Delta^{i,l}_{13} &= p^i_{l1} p^i_{33} - p^i_{l3} p^i_{31}, & \Delta^{i,l}_{14} &= p^i_{l1} p^i_{34} - p^i_{l4} p^i_{31}, \\ \Delta^{i,l}_{21} &= p^i_{l2} p^i_{31} - p^i_{l1} p^i_{32}, & \Delta^{i,l}_{23} &= p^i_{l2} p^i_{33} - p^i_{l3} p^i_{32}, & \Delta^{i,l}_{24} &= p^i_{l2} p^i_{34} - p^i_{l4} p^i_{32}, \\ \Delta^{i,l}_{31} &= p^i_{l3} p^i_{31} - p^i_{l1} p^i_{33}, & \Delta^{i,l}_{32} &= p^i_{l3} p^i_{32} - p^i_{l2} p^i_{33}, & \Delta^{i,l}_{34} &= p^i_{l3} p^i_{34} - p^i_{l4} p^i_{33}, \end{aligned} \qquad (2.15) $$

where $l = 1$ gives determinants 1) to 9) and $l = 2$ gives determinants 10) to 18). Let the Kronecker product of the $3 \times 3$ identity matrix and $\tilde{X} = (x, y, z, 1)^T$ be:

$$ \mathrm{Kron}_X = \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{pmatrix} \otimes \begin{pmatrix} x \\ y \\ z \\ 1 \end{pmatrix} = \begin{pmatrix} \tilde{X} & 0 & 0 \\ 0 & \tilde{X} & 0 \\ 0 & 0 & \tilde{X} \end{pmatrix}_{12 \times 3} \qquad (2.16) $$

and the numerical part $J^i_{num}$ of the Jacobian's $i$-th partition, independent of any variable $x$, $y$ or $z$, be:

$$ J^i_{num} = \begin{pmatrix} 0 & \Delta^{i,1}_{12} & \Delta^{i,1}_{13} & \Delta^{i,1}_{14} & \Delta^{i,1}_{21} & 0 & \Delta^{i,1}_{23} & \Delta^{i,1}_{24} & \Delta^{i,1}_{31} & \Delta^{i,1}_{32} & 0 & \Delta^{i,1}_{34} \\ 0 & \Delta^{i,2}_{12} & \Delta^{i,2}_{13} & \Delta^{i,2}_{14} & \Delta^{i,2}_{21} & 0 & \Delta^{i,2}_{23} & \Delta^{i,2}_{24} & \Delta^{i,2}_{31} & \Delta^{i,2}_{32} & 0 & \Delta^{i,2}_{34} \end{pmatrix} \qquad (2.17) $$

then $J(X)$'s $i$-th partition $J_i(X)$ is:

$$ J_i(X) = \begin{pmatrix} J_{2i-1} \\ J_{2i} \end{pmatrix} = J^i_{num} \, \mathrm{Kron}_X * \lambda_i^{-2} \qquad (2.18) $$
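The factorization (2.18) can be checked numerically. The sketch below builds the 2×3 block of one camera from the determinants of (2.15) and compares it with central finite differences; the function names and the finite-difference check are illustrative additions, and the camera is the second synthetic camera as read from (2.24).

```python
def delta(P, l, m, n):
    """Delta^{i,l}_{mn} = p_lm * p_3n - p_ln * p_3m, with 1-based l, m, n."""
    return P[l - 1][m - 1] * P[2][n - 1] - P[l - 1][n - 1] * P[2][m - 1]

def jacobian_block(P, X):
    """2x3 block J_i(X) of one camera, using only determinants and lambda."""
    Xh = (X[0], X[1], X[2], 1.0)
    lam = sum(P[2][k] * Xh[k] for k in range(4))
    # Entry (l, m) is sum_n Delta_{mn} * Xh[n] / lam^2; Delta_{mm} is zero.
    return [[sum(delta(P, l, m, n) * Xh[n - 1] for n in (1, 2, 3, 4)) / lam ** 2
             for m in (1, 2, 3)] for l in (1, 2)]

def phi(P, l, X):
    """Rational part of the residue; the constant u_i or v_i has zero derivative."""
    Xh = (X[0], X[1], X[2], 1.0)
    num = sum(P[l - 1][k] * Xh[k] for k in range(4))
    lam = sum(P[2][k] * Xh[k] for k in range(4))
    return num / lam

def jacobian_fd(P, X, h=1e-6):
    """Central finite differences, used here only as an independent check."""
    J = []
    for l in (1, 2):
        row = []
        for m in range(3):
            Xp, Xm = list(X), list(X)
            Xp[m] += h
            Xm[m] -= h
            row.append((phi(P, l, Xp) - phi(P, l, Xm)) / (2 * h))
        J.append(row)
    return J

P2 = [[-1, -1, -1, 0], [1, 0, -1, 1], [0, 0, 1, 1]]
X = (-3 / 11, -2 / 11, 7 / 11)
J_exact = jacobian_block(P2, X)
J_approx = jacobian_fd(P2, X)
max_diff = max(abs(a - b) for ra, rb in zip(J_exact, J_approx) for a, b in zip(ra, rb))
print(J_exact, max_diff)
```

Since the determinants depend only on the camera, they could be cached per camera and reused at every iteration; the sketch recomputes them for brevity.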

Note that no numerical approximation such as finite differences is needed when calculating $J$ (2.9) and then $g$ (2.10) this way. The numerical parts $J^i_{num}$ of $J_i(X)$ can be computed in advance since they only depend on the cameras. The accurate $J$ (2.9) and $g$ (2.10) are already sufficient for the Gauss-Newton and Levenberg-Marquardt methods, where second order derivatives are unnecessary.

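An accurate Hessian $H$ (2.11) can also be obtained in a similar way, based on the accurate $J$ and the second order derivatives of (2.13); a first order finite-difference approximation to $H$ can likewise be formed from the accurate $J$ and $g$. Per (2.11), we only need to further compute $\nabla^2 \phi_{2i-1}$ and $\nabla^2 \phi_{2i}$. Since both $\nabla^2 \phi_{2i-1}$ and $\nabla^2 \phi_{2i}$ are $3 \times 3$ symmetric matrices, each of them has only 6 independent entries. Number the 12 entries as in (2.19), then every 6 of them can be rewritten into a $6 \times 1$ vector:

$$ \text{indices of } \nabla^2 \phi_{2i-1}: \begin{pmatrix} 1 & 2 & 3 \\ 2 & 4 & 5 \\ 3 & 5 & 6 \end{pmatrix} \mapsto \begin{pmatrix} 1 \\ 2 \\ 3 \\ 4 \\ 5 \\ 6 \end{pmatrix}; \quad \text{indices of } \nabla^2 \phi_{2i}: \begin{pmatrix} 7 & 8 & 9 \\ 8 & 10 & 11 \\ 9 & 11 & 12 \end{pmatrix} \mapsto \begin{pmatrix} 7 \\ 8 \\ 9 \\ 10 \\ 11 \\ 12 \end{pmatrix} \qquad (2.19) $$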

All the 12 independent entries of $\nabla^2 \phi_{2i-1}$ and $\nabla^2 \phi_{2i}$ for the $i$-th camera can be concatenated together as one $12 \times 1$ dimensional column vector $h_i$, then be represented by the product of the following $12 \times 4$ matrix $H^i_{num}$ and the homogeneous vector $(x, y, z, 1)^T * \lambda_i^{-3}$, where $\lambda_i = p^i_{31} x + p^i_{32} y + p^i_{33} z + p^i_{34}$:

$$ H^i_{num} = \begin{pmatrix} H^{i,1} \\ H^{i,2} \end{pmatrix}_{12 \times 4}, \quad H^{i,l} = \begin{pmatrix} 0 & 2 p^i_{31} \Delta^{i,l}_{21} & 2 p^i_{31} \Delta^{i,l}_{31} & 2 p^i_{31} \Delta^{i,l}_{41} \\ p^i_{31} \Delta^{i,l}_{12} & p^i_{32} \Delta^{i,l}_{21} & p^i_{31} \Delta^{i,l}_{32} + p^i_{32} \Delta^{i,l}_{31} & p^i_{31} \Delta^{i,l}_{42} + p^i_{32} \Delta^{i,l}_{41} \\ p^i_{31} \Delta^{i,l}_{13} & p^i_{31} \Delta^{i,l}_{23} + p^i_{33} \Delta^{i,l}_{21} & p^i_{33} \Delta^{i,l}_{31} & p^i_{31} \Delta^{i,l}_{43} + p^i_{33} \Delta^{i,l}_{41} \\ 2 p^i_{32} \Delta^{i,l}_{12} & 0 & 2 p^i_{32} \Delta^{i,l}_{32} & 2 p^i_{32} \Delta^{i,l}_{42} \\ p^i_{33} \Delta^{i,l}_{12} + p^i_{32} \Delta^{i,l}_{13} & p^i_{32} \Delta^{i,l}_{23} & p^i_{33} \Delta^{i,l}_{32} & p^i_{32} \Delta^{i,l}_{43} + p^i_{33} \Delta^{i,l}_{42} \\ 2 p^i_{33} \Delta^{i,l}_{13} & 2 p^i_{33} \Delta^{i,l}_{23} & 0 & 2 p^i_{33} \Delta^{i,l}_{43} \end{pmatrix}, \; l = 1, 2 \qquad (2.20) $$

All the $\Delta^{i,l}_{mn}$'s are as those defined in equation (2.15), with the same pattern $\Delta^{i,l}_{4m} = p^i_{l4} p^i_{3m} - p^i_{lm} p^i_{34} = -\Delta^{i,l}_{m4}$ for the entries indexed 41, 42 and 43. Then we can obtain the accurate Hessian of $f(X)$ by (2.11). Note that $\Delta^{i,l}_{12} = -\Delta^{i,l}_{21}$, $\Delta^{i,l}_{13} = -\Delta^{i,l}_{31}$, $\Delta^{i,l}_{23} = -\Delta^{i,l}_{32}$, $\forall l = 1, 2$.
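The second order derivatives can be assembled from the same determinants. The following sketch is an assumption-laden illustration: the six rows were derived here by differentiating the rational form (2.13) twice and follow the ordering of (2.19); the camera `P_test` and point `X_test` are arbitrary values chosen for the check, and the finite-difference routine is only an independent sanity test.

```python
def hessian_rows(P, l):
    """6x4 numeric block for the residue with numerator row l (1 or 2)."""
    c1, c2, c3 = P[2][0], P[2][1], P[2][2]

    def d(m, n):  # determinant Delta_{mn} for numerator row l, 1-based
        return P[l - 1][m - 1] * P[2][n - 1] - P[l - 1][n - 1] * P[2][m - 1]

    return [
        [0.0, 2 * c1 * d(2, 1), 2 * c1 * d(3, 1), 2 * c1 * d(4, 1)],            # xx
        [c1 * d(1, 2), c2 * d(2, 1), c1 * d(3, 2) + c2 * d(3, 1),
         c1 * d(4, 2) + c2 * d(4, 1)],                                          # xy
        [c1 * d(1, 3), c1 * d(2, 3) + c3 * d(2, 1), c3 * d(3, 1),
         c1 * d(4, 3) + c3 * d(4, 1)],                                          # xz
        [2 * c2 * d(1, 2), 0.0, 2 * c2 * d(3, 2), 2 * c2 * d(4, 2)],            # yy
        [c3 * d(1, 2) + c2 * d(1, 3), c2 * d(2, 3), c3 * d(3, 2),
         c2 * d(4, 3) + c3 * d(4, 2)],                                          # yz
        [2 * c3 * d(1, 3), 2 * c3 * d(2, 3), 0.0, 2 * c3 * d(4, 3)],            # zz
    ]

def hessian_phi(P, l, X):
    """Symmetric 3x3 Hessian of one residue at X = (x, y, z)."""
    Xh = (X[0], X[1], X[2], 1.0)
    lam = sum(P[2][k] * Xh[k] for k in range(4))
    h = [sum(row[k] * Xh[k] for k in range(4)) / lam ** 3 for row in hessian_rows(P, l)]
    return [[h[0], h[1], h[2]], [h[1], h[3], h[4]], [h[2], h[4], h[5]]]

def phi(P, l, X):
    Xh = (X[0], X[1], X[2], 1.0)
    return (sum(P[l - 1][k] * Xh[k] for k in range(4))
            / sum(P[2][k] * Xh[k] for k in range(4)))

def hessian_fd(P, l, X, h=1e-4):
    """Central second differences, used only to check the closed form."""
    def shifted(i, si, j, sj):
        Y = list(X)
        Y[i] += si * h
        Y[j] += sj * h
        return phi(P, l, Y)
    return [[(shifted(i, 1, j, 1) - shifted(i, 1, j, -1)
              - shifted(i, -1, j, 1) + shifted(i, -1, j, -1)) / (4 * h * h)
             for j in range(3)] for i in range(3)]

# Arbitrary illustrative camera and point (not from the paper).
P_test = [[1.0, 0.2, -0.3, 0.5], [0.1, 0.9, 0.4, -0.2], [0.3, -0.2, 1.0, 2.0]]
X_test = (0.4, -0.7, 1.1)
max_err = max(abs(a - b)
              for l in (1, 2)
              for ra, rb in zip(hessian_phi(P_test, l, X_test), hessian_fd(P_test, l, X_test))
              for a, b in zip(ra, rb))
print(max_err)
```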


2.3 Newton-like iterative methods and the globalizing strategies

Many state-of-the-art nonlinear optimizers can be used to minimize the unconstrained $f(X)$. The classical Newton-like iterative methods, Newton-Raphson, Gauss-Newton and Levenberg-Marquardt, have locally superlinear or quadratic convergence rates when initialized properly and are therefore our first choice.

The second order Taylor expansion of $f(X)$ around $X_k$ gives rise to the quadratic model function $m^{NR}_k(d)$ and the Newton-Raphson step $d_{k+1}$, where the Newton step $d_{k+1}$ is the minimizer of $m^{NR}_k(d)$ when $H(X_k)$ is positive definite:

$$ f(X_k + d) \approx m^{NR}_k(d) = f(X_k) + d^T g(X_k) + \frac{1}{2!} d^T H(X_k) d $$

$$ d_{k+1} = \arg\min_{d \in \mathbb{R}^3} m^{NR}_k(d) = -H(X_k)^{-1} g(X_k) \qquad (2.21) $$

Similarly, the first order Taylor expansion of $r(X)$ around $X_k$ gives rise to the Gauss-Newton step $s_{k+1}$, the minimizer to another quadratic model function $m^{GN}_k(s)$ of $f(X)$ around $X_k$:

$$ r(X_k + s) \approx \hat{r}(s) = r(X_k) + J(X_k) s $$

$$ m^{GN}_k(s) = \hat{r}(s)^T \hat{r}(s) = f(X_k) + 2 s^T g(X_k) + s^T J(X_k)^T J(X_k) s $$

$$ s_{k+1} = \arg\min_{s \in \mathbb{R}^3} m^{GN}_k(s) = -\left( J(X_k)^T J(X_k) \right)^{-1} g(X_k) = -J(X_k)^{\dagger} r(X_k) \qquad (2.22) $$

The Levenberg-Marquardt algorithm is considered as a modification on $J^T J$ in the Gauss-Newton iteration, or as a Gauss-Newton algorithm with a trust region strategy on each step:

$$ p_{k+1} = -\left( J(X_k)^T J(X_k) + \mu_k I \right)^{-1} g(X_k) \qquad (2.23) $$

The Levenberg-Marquardt algorithms we use are those from the literature, with $\mu_k = \| r(X_k) \|_2^{\delta}$ ($\delta \in (1, 2)$) for the $\mu$ (2.23) updating in every iteration, which is relatively expensive.
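The Gauss-Newton step (2.22) and the damped step (2.23) differ only by the term added to $J^T J$, which the following sketch makes explicit. The helper names (`solve3`, `damped_step`, `lm_damping`) and the default exponent 1.5 are illustrative assumptions; the toy residue is linear, so a single undamped step lands on the least square solution.

```python
import math

def solve3(A, b):
    """Solve the 3x3 system A x = b by Cramer's rule (adequate for a sketch)."""
    def det(M):
        return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
                - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
                + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))
    d = det(A)
    return [det([[b[r] if c == col else A[r][c] for c in range(3)]
                 for r in range(3)]) / d for col in range(3)]

def damped_step(J, r, mu=0.0):
    """Return -(J^T J + mu I)^{-1} J^T r; mu = 0 gives the Gauss-Newton step."""
    JTJ = [[sum(row[p] * row[q] for row in J) + (mu if p == q else 0.0)
            for q in range(3)] for p in range(3)]
    g = [sum(row[p] * ri for row, ri in zip(J, r)) for p in range(3)]
    return [-s for s in solve3(JTJ, g)]

def lm_damping(r, delta=1.5):
    """mu_k = ||r(X_k)||_2^delta with delta in (1, 2)."""
    return math.sqrt(sum(ri * ri for ri in r)) ** delta

# Toy linear residue r(X) = J X - b with exact solution (1, 2, 3), started at 0.
J = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
r0 = [-1.0, -2.0, -3.0, -6.0]
gn = damped_step(J, r0)                       # Gauss-Newton step (2.22)
lm = damped_step(J, r0, lm_damping(r0))       # Levenberg-Marquardt step (2.23)
print(gn, lm)
```

The damped step is always shorter than the undamped one, which is the trust region flavour mentioned above.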

Algorithm 1 Soft line search with Armijo backtracking

    procedure SOFTLINESEARCH(k, X_k, d_k, @f(X))            ⊲ modified Armijo backtracking
        γ ← 0.01, δ ← 0.25                                  ⊲ set the line search parameters: γ ∈ (0, 0.5), δ ∈ (0, 1)
        i ← 0                                               ⊲ so as to be compatible with the underlying iteration
        repeat
            α ← δ^i
            α_k ← α
            if f(X_k + α ∗ d_k) ≤ f(X_k) − γ ∗ α^3 ∗ ‖d_k‖_2^3 then   ⊲ Armijo backtracking criterion
                return α_k                                  ⊲ return step length if meeting criterion
            end if
            increment i by 1
        until i ≥ 20
        return α_k                                          ⊲ return after a max loop number
    end procedure
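Algorithm 1 translates almost line by line into Python. The sketch below assumes the cubic sufficient-decrease term as reconstructed above and uses a hypothetical function name; the cost function `f` is passed in as a callable, mirroring the `@f(X)` argument of the pseudocode.

```python
import math

def soft_line_search(f, X, d, gamma=0.01, delta=0.25, max_iter=20):
    """Backtrack alpha = delta^i until the modified Armijo test passes."""
    f_X = f(X)
    d_norm3 = math.sqrt(sum(di * di for di in d)) ** 3
    alpha = 1.0
    for i in range(max_iter):
        alpha = delta ** i                      # first trial is the full step
        trial = [x + alpha * di for x, di in zip(X, d)]
        if f(trial) <= f_X - gamma * alpha ** 3 * d_norm3:
            return alpha                        # criterion met
    return alpha                                # give up after max_iter trials

def quadratic(X):
    return sum(x * x for x in X)

# A full Newton step on a quadratic is accepted immediately.
alpha_full = soft_line_search(quadratic, [1.0, 1.0, 1.0], [-1.0, -1.0, -1.0])
# An overshooting direction is cut back twice (1 -> 0.25 -> 0.0625).
alpha_cut = soft_line_search(quadratic, [1.0, 0.0, 0.0], [-8.0, 0.0, 0.0])
print(alpha_full, alpha_cut)
```

Because the first trial is always α = 1, the search costs one extra function evaluation when the underlying Gauss-Newton step is already good, which matches the intent of keeping the hybrid "smooth".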

The two major iterative approaches we suggest are Gauss-Newton combined with the globalizing strategies of algorithms 1 and 2, denoted as gGN hereafter. The soft line search strategy with Armijo backtracking rule is as in algorithm 1, and the simple trust region by Steihaug's method is as in algorithm 2; their theoretical local convergence (to critical points of $f(X)$) has been described and proven in the literature. Unless otherwise specified, gGN represents Gauss-Newton with the line search of algorithm 1 in the numerical experiments.

Since, without any globalizing strategy, the underlying Newton-Raphson and Gauss-Newton iterations are both accurate and efficient for more than 99% of the real cases, the globalizing strategies 1 and 2 should be combined with the underlying Gauss-Newton iteration in a smooth manner. For example, a major difference between the trust region version of gGN and Levenberg-Marquardt is that the trust region step update is only performed when the new $f_{k+1} = f(X_{k+1})$ is greater than $f_0 = f(X_0)$ in gGN, which makes gGN more efficient without losing robustness. According to our numerical experiments, too frequent trust region step updates in Levenberg-Marquardt also ruin the accuracy. The same kind of hybridisation is also recommended for the line search version of gGN.

Algorithm 2 Trust region algorithm: update s_k by conjugate gradient method

    procedure TRUSTREGION(g_k, B_k, X_k, @f(X))             ⊲ simple trust region algorithm
        i ← 0, x_i ← X_k, ǫ ← 1.0e−8, ∆_i ← 1.0, η_s ← 0.1, η_v ← 0.9, γ_inc ← 4, γ_red ← 0.25
        repeat
            model function: m_i(s) ← −s^T g_k − (1/2) s^T B_k s   ⊲ 2nd order Taylor exp: f(x_i) − f(x_i + s)
            s_i ← arg max_{‖s‖_2 ≤ ∆_i} m_i(s)              ⊲ solve subproblem by Steihaug method (largest predicted reduction)
            ρ_i ← (f(x_i) − f(x_i + s_i)) / m_i(s_i)        ⊲ the ratio of actual to predicted reduction
            if ρ_i ≥ η_v then                               ⊲ m_k(s) approximates f reduction very successfully
                x_{i+1} ← x_i + s_i
                ∆_{i+1} ← ∆_i ∗ γ_inc                       ⊲ increase trust region radius
            else if ρ_i ≥ η_s then                          ⊲ m_k(s) approximates f reduction successfully
                x_{i+1} ← x_i + s_i
                ∆_{i+1} ← ∆_i
            else                                            ⊲ m_k(s) does not approximate f reduction when ρ_i < η_s
                x_{i+1} ← x_i
                ∆_{i+1} ← ∆_i ∗ γ_red                       ⊲ reduce trust region radius ∆_i
            end if
            increment i by 1
        until ‖g_i‖ ≤ ǫ or i ≥ 100
        return s_k
    end procedure
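The acceptance and radius logic of algorithm 2 is independent of how the subproblem is solved, so it can be sketched on its own. The snippet below is a minimal illustration with hypothetical names; it does not implement Steihaug's conjugate gradient solver and simply takes a candidate step as given.

```python
def predicted_reduction(s, g, B):
    """m(s) = -s^T g - 0.5 * s^T B s, the model's estimate of f(x) - f(x + s)."""
    Bs = [sum(B[r][c] * s[c] for c in range(3)) for r in range(3)]
    return -sum(si * gi for si, gi in zip(s, g)) - 0.5 * sum(si * b for si, b in zip(s, Bs))

def trust_region_update(f_old, f_new, predicted, radius,
                        eta_s=0.1, eta_v=0.9, gamma_inc=4.0, gamma_red=0.25):
    """Return (accept_step, new_radius) from the actual/predicted reduction ratio."""
    rho = (f_old - f_new) / predicted
    if rho >= eta_v:                 # very successful: accept and enlarge the region
        return True, radius * gamma_inc
    if rho >= eta_s:                 # successful: accept and keep the radius
        return True, radius
    return False, radius * gamma_red  # unsuccessful: reject and shrink the region

# Quadratic example f(x) = 0.5 * x^T x at x = (1, 0, 0): g = x and B = I.
g = [1.0, 0.0, 0.0]
B = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
s = [-1.0, 0.0, 0.0]                 # full Newton step, assumed inside the region
pred = predicted_reduction(s, g, B)  # exact for a quadratic
accept, radius = trust_region_update(0.5, 0.0, pred, 1.0)
print(pred, accept, radius)
```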

Numerical experiments indicate that if the accurate derivative computation of section 2.2 is used, the solutions of all the Newton-like iterative methods initialized by symmedian points are L2 optimal for most real cases. Here we use four synthetic data examples from Chesi et al to illustrate the L2 optimality of the iterative methods; the conservative case of tfml does not occur for any of the iterative methods at all.

Synthetic examples. The synthetic data examples are based on the four cameras defined as in (2.24). The “SA2”, “SA3” and “SA4” examples are the cases with the first 2, 3 and 4 cameras as in (2.24) respectively, and all their images are $(0, 0, 1)^T$. The conservative case “Con”, for which the tfml method fails to find the optimal solution, has the first three cameras and its 2D images are $x_1 = (0.9, -0.9, 1)^T$, $x_2 = (0.6, 2, 1)^T$, $x_3 = (2, 1.3, 1)^T$ respectively.

$$ P_1 = \begin{pmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1 \end{pmatrix}, \; P_2 = \begin{pmatrix} -1 & -1 & -1 & 0 \\ 1 & 0 & -1 & 1 \\ 0 & 0 & 1 & 1 \end{pmatrix}, \; P_3 = \begin{pmatrix} 0 & -1 & 0 & 0 \\ 0 & 0 & -1 & 1 \\ -1 & -1 & 0 & 1 \end{pmatrix}, \; P_4 = \begin{pmatrix} 0 & -1 & -1 & 0 \\ 0 & 1 & -1 & 1 \\ 1 & 0 & 1 & 1 \end{pmatrix} \qquad (2.24) $$

Comparison results are listed in table 1. Since the Newton-Raphson, Gauss-Newton and Levenberg-Marquardt methods all perform similarly, we only list the global Gauss-Newton (“gGN” with the line search of algorithm 1) for comparison. Table 1 indicates that the iterative methods are more accurate and also globally solve the conservative case for tfml. The L2 optimality can be easily verified by solving the corresponding equations (2.12) or per the criterion mentioned in section 2.4.

Table 1: Triangulation results comparison between tfml and iterative methods

| Exmp. | Method | Triangulation result | Reprojection error |
|---|---|---|---|
| SA2 | tfml | (-0.272727272727398, -0.181818181817941, 0.636363636363190) | 0.055555555555556 |
| SA2 | gGN | (-0.272727272727273, -0.181818181818182, 0.636363636363636) | 0.055555555555556 |
| SA3 | tfml | (-0.302506037933953, -0.160909286697078, 0.799090747348768) | 0.105211035962143 |
| SA3 | gGN | (-0.302506061882800, -0.160909312731383, 0.799090767385097) | 0.105211035962142 |
| SA4 | tfml | (-0.232284343064664, -0.334519175175504, 0.696806878848929) | 0.209906166263281 |
| SA4 | gGN | (-0.232284268136407, -0.334519054968205, 0.696806894375664) | 0.209906166263248 |
| Con | tfml | (1.314094728910344, -1.106491029764633, 0.043599248387159) | 1.265349079248799 |
| Con | gGN | (1.424098078272550, -1.238341159147880, 0.115482211291935) | 1.223123745015136 |

2.4 Numeric criteria in evaluating triangulation solutions

Because of their theoretical significance, global L2 optimality criteria have been developed by constructing an upper bound for the cost function $f(X)$, or a lower bound for its Hessian, on a convex domain based on sufficient conditions of convexity. A necessary and sufficient criterion naturally generated from the tfml and tpml algorithms using the equality of µ1 and µ2 is therefore of special interest, though it is limited to the verification of the proposed algorithms. For those cases where the number of cameras is small and efficiency is not critical, it is also possible to compute all the real solutions to (2.12) and compare the corresponding reprojection errors.

Definition 2.1 (Numerical L2 optimality). A point is numerically L2 optimal if and only if it is a good enough approximate solution to the nonlinear normal equation (2.12) and its reprojection error is less than or equal to that of a nice suboptimal estimation that is easy to obtain.

The so-called nice suboptimal reprojection error can be the upper local convexity level as defined in the literature, or simply that of the symmedian point, which works acceptably for most cases. The upper local convexity level is a sufficient criterion of L2 optimality but is rather difficult to compute accurately:

$$ f_{LC} \triangleq \min_{X \in \mathbb{R}^3} \min_{y \in \mathbb{R}^3} \frac{y^T J(X)^T J(X) y}{\sum_{i=1}^{2n} \left( y^T \nabla^2 \phi_i(X) y \right)^2} \qquad (2.25) $$

A more favorable and efficient L2 optimality verification approach with a high success ratio is the sufficient criterion obtained by investigating the lower bounds of the Hessian of $f(X)$ on the convex intersection set of n cone domains.


For the iterative methods proposed, we also use the following criteria to pre-determine whether the triangulation problem is a hard case or not, and the accuracy of a final solution.

Numerical experiments indicate that the square of an intrinsic curvature $\rho$ of $r(X)$ around a specific point $X$ works very well in picking out those hard cases. It is the reciprocal of the maximum eigenvalue $\lambda_{max}$ of the symmetric matrix $K$, built from $J^{\dagger}$, the Moore-Penrose pseudo-inverse of $J$ (2.9), and the second order derivatives of $r(X)$:

$$ K_{2n \times 2n}(X) \triangleq -\left( J^{\dagger}(X) \right)^T_{2n \times 3} \left( \sum_{i=1}^{2n} \phi_i(X) \nabla^2 \phi_i(X) \right)_{3 \times 3} J^{\dagger}(X)_{3 \times 2n} \qquad (2.26) $$

The intrinsic curvature rule to determine the solvability of $r(X)$ via Gauss-Newton iteration at $X^*$ is as:

$$ \rho^2(X^*) \triangleq \frac{1}{\lambda^2_{max}\left( K(X^*) \right)} > \gamma^2(X^*) \triangleq \| r(X^*) \|_2^2 = f(X^*) \qquad (2.27) $$

The maximum absolute value of the eigenvalues of $K$ indicates the local convergence rate of the Gauss-Newton iteration, and the maximum eigenvalue $\lambda_{max}$ of $K$ (2.26) is useful to determine whether a least square problem is easily solvable via Newton-like iteration from a specific initialization. A rule of thumb for determining the solvability of an L2 triangulation problem by the proposed Newton-like iterative methods is whether $\rho^2$ is significantly larger than $\gamma^2$ at the symmedian point $X_0$. The rule of thumb also works for most of the global minimum determination when $K_{LC}$ is smaller than ǫ and the reprojection error at a point $X$ is smaller than that of $X_0$. Inequality (2.27) indicates that the larger the residue at the initializer, the more difficult a triangulation problem is for iterative methods to solve because of its nonlinearity and multiple local minima, which is verified in our numerical experiments on extensive data sets.

A quantitative criterion for accuracy estimation, inspired by the Kantorovich theorem, is the 2-norm of the iterative step at the current $\hat{X}$:

Definition 2.2 (LC distances). An estimation to the local convergence accuracy of the solution $\hat{X}$, the Kantorovich distance $K_{LC}$, is defined as:

$$ K_{LC} = \left\| H^{-1} g \right\|_2 \qquad (2.28) $$

$K_{LC} \le \sqrt{\epsilon} \approx \sqrt{2.22 \times 10^{-16}} \approx 1.49 \times 10^{-8}$ for double precision computation usually means the current $\hat{X}$ is a numerically good enough critical point of $f(X)$. The negative logarithm of $K_{LC}$ also approximately indicates the accuracy of convergence in significant decimal digits.
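Definition 2.2 amounts to one linear solve and a norm. The sketch below assumes that $H$ and $g$ have already been evaluated at the current estimate; the function names and the toy Hessian and gradient are illustrative values, not data from the paper.

```python
import math
import sys

def solve3(A, b):
    """Solve the 3x3 system A x = b by Cramer's rule (adequate for a sketch)."""
    def det(M):
        return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
                - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
                + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))
    d = det(A)
    return [det([[b[r] if c == col else A[r][c] for c in range(3)]
                 for r in range(3)]) / d for col in range(3)]

def kantorovich_distance(H, g):
    """K_LC = ||H^{-1} g||_2, the length of the next Newton step."""
    step = solve3(H, g)
    return math.sqrt(sum(s * s for s in step))

SQRT_EPS = math.sqrt(sys.float_info.epsilon)   # about 1.49e-8 in double precision

# Toy values: a well-conditioned Hessian and a tiny remaining gradient.
H = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]
g = [2e-9, 0.0, 0.0]
k_lc = kantorovich_distance(H, g)
converged = k_lc <= SQRT_EPS
digits = -math.log10(k_lc)                     # rough count of converged decimal digits
print(k_lc, converged, digits)
```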

3 Numerical Results for real data sets

Further numerical experiments are mainly conducted on the real data sets made available online by the Oxford visual geometry group (http://www.robots.ox.ac.uk/~vgg/data/data-mview.html). Numerical results indicate that the L2 triangulation of all those data sets, dinosaur, model house, corridor, Merton colleges I, II and III, University library and Wadham College, can be globally solved by iteration with high efficiency, with or without the globalizing strategies of algorithms 1 and 2, and that accuracy is lost only in very rare cases. The IEEE 754 double precision C++ implementations of these iterative methods are run on a Windows® computer with a 3.4GHz Intel® i7 CPU.

Though the global Gauss-Newton method (gGN), i.e., iteration (2.22) with the Armijo backtracking line search strategy of algorithm 1, is relatively slower than Newton-Raphson (2.21), it is more robust and therefore more favourable for general cases.


We present here comparison results between gGN and tfml on their ACT (average computing time) and R.E. (reprojection error) for the following data sets only: 1) dinosaur in table 2, which has the largest number of cameras per point (up to 21); 2) corridor in table 3, which has the tfml conservative case (point No. 514); 3) model house in table 4, which has the maximum percentage of cases with more than 4 cameras. All of them indicate that the iterative methods significantly outperform tfml in both efficiency and accuracy.

Table 2: dinosaur data set results comparison between tfml and global Gauss-Newton method

| n | # points | ACT(s, tfml) | ACT(s, gGN) | R.E.(tfml) | R.E.(gGN) |
|---|---|---|---|---|---|
| 2 | 2300 | 0.010 | 0.0000362740 | 233.8453557 | 233.8453557 |
| 3 | 1167 | 0.048 | 0.0000408420 | 8073.5262739 | 8073.5262739 |
| 4 | 584 | 0.060 | 0.0000443918 | 14972.8533254 | 14972.8533254 |
| 5 | 375 | 0.071 | 0.0000477932 | 4450.9466754 | 4450.9466754 |
| 6 | 221 | 0.080 | 0.0000505274 | 10995.1067740 | 10995.1067739 |
| 7 | 141 | 0.097 | 0.0000521147 | 2955.3186392 | 2955.3186391 |
| 8 | 88 | 0.115 | 0.0000548824 | 5396.7423647 | 5396.7423646 |
| 9 | 44 | 0.148 | 0.0000574861 | 391.3195278 | 391.3195277 |
| 10 | 26 | 0.175 | 0.0000615819 | 222.4767185 | 222.4767185 |
| 11 | 15 | 0.215 | 0.0000737776 | 2930.4360400 | 2930.4360398 |
| 12 | 14 | 0.270 | 0.0000680938 | 62.3827050 | 62.3827049 |
| 13 | 5 | 0.303 | 0.0000799962 | 250.0569720 | 250.0569719 |
| 14 | 2 | 0.390 | 0.0000736568 | 9.5944806 | 9.5944806 |
| 21 | 1 | 1.094 | 0.0001041459 | 28.4078252 | 28.4078252 |
| Total | 4983 | 203.614 | 0.2051264558 | 50973.0136774 | 50973.0136765 |

Table 3: corridor data set results comparison between tfml and global Gauss-Newton method

| n | # points | ACT(s, tfml) | ACT(s, gGN) | R.E.(tfml) | R.E.(gGN) |
|---|---|---|---|---|---|
| 3 | 341 | 0.045 | 0.0000616368 | 94.4950818 | 94.4847897 |
| 5 | 146 | 0.078 | 0.0000655332 | 109.9579865 | 109.9579785 |
| 7 | 88 | 0.133 | 0.0000808400 | 135.1818406 | 135.1818014 |
| 9 | 58 | 0.220 | 0.0000968958 | 119.6534304 | 119.6533555 |
| 11 | 104 | 0.307 | 0.0001014581 | 204.1129585 | 204.1128521 |
| Total | 737 | 83.125 | 0.0538715274 | 663.4012979 | 663.3907772 |

First, from the tables

~

4 , the conservative case for tfml never occurred for gGN. Note that the “conser-

vative case” for tfml occurs only in corridor data set (point No.514), all other results by tfml are L2 optimal per the criterion by Chesi et al

. By comparing the reprojection errors, it is easy to conclude that gGN results which are generally more accurate with smaller reprojection errors are also L2 optimal. The optimality of the gGN for the 3-view conservative case in corridor can be easily verified since which has been globally solven.

Table 4: model house data set results comparison between tfml and global Gauss-Newton method

| n | # points | ACT(s,tfml) | ACT(s,gGN) | R.E.(tfml) | R.E.(gGN) |
|---|---|---|---|---|---|
| 3 | 382 | 0.056 | 0.0000368079 | 146.9857377 | 146.9856478 |
| 4 | 19 | 0.083 | 0.0000921823 | 23.2842602 | 23.2842599 |
| 5 | 158 | 0.073 | 0.0000514310 | 538.8329042 | 538.8328594 |
| 6 | 3 | 0.089 | 0.0001322201 | 15.3877083 | 15.3877045 |
| 7 | 90 | 0.109 | 0.0000673846 | 304.2393664 | 304.2392904 |
| 8 | 1 | 0.172 | 0.0001234658 | 7.5343642 | 7.5343641 |
| 9 | 12 | 0.185 | 0.0001421567 | 63.4833436 | 63.4833353 |
| 10 | 7 | 0.230 | 0.0001360150 | 11.2098018 | 11.2097303 |
| Total | 672 | 48.582 | 0.03318089788 | 1110.9574864 | 1110.9571917 |

Regarding efficiency, the C++ implementation of the gGN iteration is significantly faster in the three-view case than the C++ implementations of the three-view-only L2 optimal methods; both the efficiency and the reprojection error of gGN are also better than those of the C++ implementation of the suboptimal methods by Recker et al.

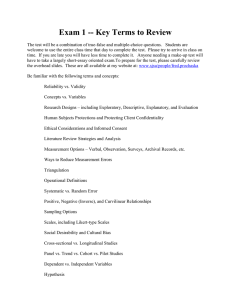

There is a trend in the ACT ratio $\eta(n) = \mathrm{ACT}_{\mathrm{tfml}} / \mathrm{ACT}_{\mathrm{gGN}}$ between tfml and gGN: tfml slows down faster than gGN because the scale of its EVP grows with the number of cameras, as illustrated in Figure 1. For the 21-view case, gGN (C++) is more than 10000 times faster than tfml (per the ACT values in Table 2).

Figure 1 legend: time consumption ratio of tfml to gGN, with quadratic fit $\eta = 17.05 n^2 + 127.55 n$; horizontal axis: the number of cameras, $n$.

Figure 1: The trend of tfml to gGN time consumption ratio versus camera number (Oxford dinosaur data set)
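The ratio and its quadratic fit can be checked directly against the tabulated timings. The sketch below recomputes the ratio from the ACT columns of Table 2 and evaluates the fit quoted in the Figure 1 legend; the function and variable names are illustrative assumptions, not part of the authors' implementation.

```python
# (n views, ACT tfml [s], ACT gGN [s]) transcribed from Table 2 (dinosaur data set).
DINOSAUR_ACT = [
    (2, 0.010, 0.0000362740),
    (3, 0.048, 0.0000408420),
    (4, 0.060, 0.0000443918),
    (5, 0.071, 0.0000477932),
    (6, 0.080, 0.0000505274),
    (7, 0.097, 0.0000521147),
    (8, 0.115, 0.0000548824),
    (9, 0.148, 0.0000574861),
    (10, 0.175, 0.0000615819),
    (11, 0.215, 0.0000737776),
    (12, 0.270, 0.0000680938),
    (13, 0.303, 0.0000799962),
    (14, 0.390, 0.0000736568),
    (21, 1.094, 0.0001041459),
]


def eta_measured(act_tfml, act_ggn):
    """Measured time consumption ratio of tfml to gGN for one camera count."""
    return act_tfml / act_ggn


def eta_fit(n):
    """Quadratic fit quoted in the Figure 1 legend."""
    return 17.05 * n ** 2 + 127.55 * n


ratios = {n: eta_measured(t, g) for n, t, g in DINOSAUR_ACT}

if __name__ == "__main__":
    for n, ratio in ratios.items():
        print(f"n={n:2d}  measured={ratio:9.1f}  fit={eta_fit(n):9.1f}")
```

For n = 21 the measured ratio is about 1.05 × 10^4, while the fit gives about 1.02 × 10^4, which is consistent with the "more than 10000 times" statement.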

Extensive numerical experiments were carried out on the data sets of Agarwal et al., where the radial distortions of the calibrated cameras are neglected for the purpose of algorithm verification. The iterative method gGN achieved L2 optimality for only 99.7% of the points, since large-residue cases or outliers exist. However, the globalizing strategies (line search and trust region) assure local convergence to critical points and a significant reprojection error improvement over the symmedian point initializers in all those hard cases. In such hard cases, neither the iterative methods nor tfml has an absolute advantage over its peers, while gGN is the most favourable method, offering overall robustness, high efficiency, a higher success ratio of convergence to critical points and the highest ratio of achieving the lowest reprojection error in these extensive numerical experiments.

4 Discussion and Conclusion

With symmedian point initialization and accurate computation of derivatives, Newton-type iterative methods can solve most multiple view L2 triangulation problems both efficiently and accurately, which means that the difficulty posed by the multiple local minima of the nonconvex reprojection error cost function f ( X ) can be easily overcome in such real cases.

This indicates that symmedian points can efficiently locate the attraction basin of the optimal solution of f ( X ) in most real cases, which simplifies the multiple view L2 triangulation problem into a convex one, and that accurate computation of derivatives is critical for Newton-like methods to succeed in solving the multiple view L2 triangulation problem.

To handle the hard cases, where the nonlinearity of f ( X ) is high and the reprojection error at the initializers is large, globalizing strategies (line search and trust region) are proposed and smoothly hybridized with the underlying Gauss-Newton iteration. They outperform Levenberg-Marquardt and other methods in robustness and efficiency, achieving a high success ratio of convergence to critical points and a significant reprojection error improvement over the symmedian point initializers.
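To make the idea of a Gauss-Newton iteration hybridized with a line search concrete, here is a minimal sketch in plain Python. It is not the authors' C++ implementation: the starting point `X0` is an arbitrary stand-in for the symmedian point initializer, the hand-derived Jacobian stands in for the symbolic-numeric derivatives, Armijo backtracking is an assumed choice of line search rule, and all function names and the synthetic cameras are hypothetical. No handling of points behind a camera is included.

```python
def homog(P, X):
    """Homogeneous image coordinates (a, b, c) of 3D point X under a 3x4 camera P."""
    return tuple(sum(P[i][k] * X[k] for k in range(3)) + P[i][3] for i in range(3))


def residuals_and_jacobian(cams, obs, X):
    """Stack reprojection residuals r and their Jacobian J = dr/dX (two rows per view)."""
    r, J = [], []
    for P, (u, v) in zip(cams, obs):
        a, b, c = homog(P, X)
        r += [a / c - u, b / c - v]
        J.append([(c * P[0][k] - a * P[2][k]) / (c * c) for k in range(3)])
        J.append([(c * P[1][k] - b * P[2][k]) / (c * c) for k in range(3)])
    return r, J


def cost(cams, obs, X):
    """Sum of squared reprojection errors f(X)."""
    r, _ = residuals_and_jacobian(cams, obs, X)
    return sum(e * e for e in r)


def det3(M):
    return (M[0][0] * (M[1][1] * M[2][2] - M[1][2] * M[2][1])
            - M[0][1] * (M[1][0] * M[2][2] - M[1][2] * M[2][0])
            + M[0][2] * (M[1][0] * M[2][1] - M[1][1] * M[2][0]))


def solve3(A, b):
    """Solve the 3x3 system A x = b by Cramer's rule."""
    d = det3(A)
    return [det3([[b[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]) / d
            for k in range(3)]


def gauss_newton_line_search(cams, obs, X0, max_iter=50, c1=1e-4, grad_tol=1e-24):
    X = list(X0)
    for _ in range(max_iter):
        r, J = residuals_and_jacobian(cams, obs, X)
        m = len(r)
        g = [sum(J[q][k] * r[q] for q in range(m)) for k in range(3)]  # J^T r
        if sum(x * x for x in g) < grad_tol:
            break  # (near-)critical point reached
        A = [[sum(J[q][i] * J[q][j] for q in range(m)) for j in range(3)]
             for i in range(3)]  # J^T J
        d = solve3(A, [-x for x in g])  # Gauss-Newton direction
        f0 = sum(e * e for e in r)
        slope = 2.0 * sum(g[k] * d[k] for k in range(3))  # directional derivative of f
        t, accepted = 1.0, False
        while t > 1e-12:  # Armijo backtracking on the step length
            X_new = [X[k] + t * d[k] for k in range(3)]
            if cost(cams, obs, X_new) <= f0 + c1 * t * slope:
                accepted = True
                break
            t *= 0.5
        if not accepted:
            break  # no acceptable step length found
        X = X_new
    return X


def camera(center):
    """Hypothetical calibrated camera with identity rotation: P = [I | -center]."""
    cx, cy, cz = center
    return [[1.0, 0.0, 0.0, -cx], [0.0, 1.0, 0.0, -cy], [0.0, 0.0, 1.0, -cz]]


# Synthetic, noise-free three-view example (illustrative data only).
cams = [camera((0.0, 0.0, 0.0)), camera((1.0, 0.0, 0.0)), camera((0.0, 1.0, 0.0))]
X_true = [0.2, 0.3, 5.0]
obs = []
for P in cams:
    a, b, c = homog(P, X_true)
    obs.append((a / c, b / c))
X_hat = gauss_newton_line_search(cams, obs, [0.0, 0.0, 4.0])

if __name__ == "__main__":
    print("estimate:", X_hat, "cost:", cost(cams, obs, X_hat))
```

The line search only shortens the Gauss-Newton step when the full step fails the sufficient decrease test, so in easy cases such as this noise-free example the iteration behaves like plain Gauss-Newton.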

This means bundle adjustment with appropriate implementations can significantly outperform its peers in solving optimal triangulation problems.

As has been proposed in earlier work, in the rare cases where symmedian point triangulation fails to locate the attraction basin of the optimal solution, it is usually because the point has large noise; in a large-scale reconstruction problem, the best option is then probably to remove the point from consideration.

Future work on optimal triangulation may focus on improving the initialization technique so that it is assured to locate the attraction basin of the global minimum, while the problem of triangulation with guaranteed L2 optimality for multiple view cases continues to be NP-hard, with no simple solution in general. It would also be useful to develop efficient and reliable strategies, similar to the intrinsic normal curvature (2.27), for determining in advance whether or not a problem can be solved by the iterations.

Acknowledgement

References

[1] S. Agarwal, N. Snavely, S. M. Seitz, and R. Szeliski. Bundle adjustment in the large. In Proceedings of the 11th European Conference on Computer Vision: Part II, ECCV’10, pages 29–42, Berlin, Heidelberg, 2010. Springer-Verlag. Data sets are available from: http://grail.cs.washington.edu/projects/bal/ (Accessed: Jan 15, 2014).

[2] I. K. Argyros. Convergence and applications of Newton-type iterations. Springer, New York, London, 2008. OHX.

[3] M. Byröd, K. Josephson, and K. Åström. Fast optimal three view triangulation. In Asian Conference on Computer Vision, 2007.

[4] G. Chesi and Y. S. Hung. Fast multiple-view L2 triangulation with occlusion handling. Computer Vision and Image Understanding, 115(2):211–223, Feb. 2011.

[5] E. Demidenko. Is this the least squares estimate? Biometrika, 87(2):437–452, 2000.

[6] E. Demidenko. Criteria for global minimum of sum of squares in nonlinear regression. Computational Statistics & Data Analysis, 51(3):1739–1753, Dec. 2006.

[7] J. E. Dennis, Jr. and R. B. Schnabel. Numerical Methods for Unconstrained Optimization and Nonlinear Equations. Classics in Applied Mathematics, 16. Society for Industrial & Applied Mathematics, 1996.

[8] P. Deuflhard. Newton methods for nonlinear problems: affine invariance and adaptive algorithms. Springer series in computational mathematics. Springer, Berlin, Heidelberg, New York, 2011. Another printing: 2006.

[9] J. Fan. The modified Levenberg-Marquardt method for nonlinear equations with cubic convergence. Mathematics of Computation, 81(277), 2012.

[10] J.-y. Fan and Y.-x. Yuan. On the quadratic convergence of the Levenberg-Marquardt method without nonsingularity assumption. Computing, 74(1):23–39, Feb. 2005.

[11] R. Hartley, F. Kahl, C. Olsson, and Y. Seo. Verifying global minima for L2 minimization problems in multiple view geometry. International Journal of Computer Vision, 101(2):288–304, Jan. 2013.

[12] R. I. Hartley and P. Sturm. Triangulation. Computer Vision and Image Understanding, 68(2):146–157, Nov. 1997.

[13] R. I. Hartley and A. Zisserman. Multiple view geometry in computer vision. Cambridge University Press, Cambridge, UK, 2nd edition, 2003.

[14] F. Lampariello and M. Sciandrone. Global convergence technique for the Newton method with periodic Hessian evaluation. Journal of Optimization Theory and Applications, 111:341–358, 2001.

[15] P. Lindstrom. Triangulation made easy. In CVPR, pages 1554–1561, 2010.

[16] F. Lu and R. Hartley. A fast optimal algorithm for L2 triangulation. In Proceedings of the 8th Asian conference on Computer vision - Volume Part II, ACCV’07, pages 279–288, Berlin, Heidelberg, 2007. Springer-Verlag.

[17] H. B. Nielsen and K. Madsen. Introduction to Optimization and Data Fitting. Informatics and Mathematical Modelling, Technical University of Denmark, DTU, Richard Petersens Plads, Building 321, DK-2800 Kgs. Lyngby, Aug. 2010. http://www2.imm.dtu.dk/pubdb/p.php?5938 (Accessed: Jan 1, 2014).

[18] J. Nocedal and S. Wright. Numerical optimization. Springer series in operations research and financial engineering. Springer, New York, NY, 2nd edition, 2006.

[19] J. M. Ortega and W. C. Rheinboldt. Iterative solution of nonlinear equations in several variables. Computer science and applied mathematics. Academic Press, New York, 1970.

[20] B. T. Poliak. Introduction to optimization. Translations series in mathematics and engineering. Optimization Software, Publications Division, 1987.

[21] W. H. Press. Numerical recipes 3rd edition: The art of scientific computing. Cambridge University Press, 2007.

[22] 17:72–90, 1977.

[23] S. Recker, M. Hess-Flores, and K. I. Joy. Fury of the swarm: Efficient and very accurate triangulation for multiview reconstruction. In S. Recker and M. Hess-Flores, editors, International Conference on Computer Vision Big Data 3D Computer Vision Workshop, Dec. 2013.

[24] S. Recker, M. Hess-Flores, and K. I. Joy. Statistical angular error-based triangulation for efficient and accurate multi-view scene reconstruction. In S. Recker and M. Hess-Flores, editors, Workshop on the Applications of Computer Vision (WACV), Jan. 2013. http://www.thereckingball.com/triangulation.php (Accessed: Jan 1, 2014).

[25] H. Stewénius, F. Schaffalitzky, and D. Nistér. How hard is 3-view triangulation really? In IEEE International Conference on Computer Vision, 2005.

[26] P. Sturm, S. Ramalingam, and S. K. Lodha. On Calibration, Structure from Motion and Multi-View Geometry for Generic Camera Models. In K. Daniilidis and R. Klette, editors, Imaging Beyond the Pinhole Camera, volume 33 of Computational Imaging and Vision, pages 87–105. Springer, 2006.

[27] R. Szeliski. Computer Vision: Algorithms and Applications (Texts in Computer Science). Springer, 2011 edition, Oct. 2010.

[28] B. Triggs, P. F. McLauchlan, R. I. Hartley, and A. W. Fitzgibbon. Bundle adjustment - a modern synthesis. In Proceedings of the International Workshop on Vision Algorithms: Theory and Practice, ICCV ’99, pages 298–372, London, UK, 2000. Springer-Verlag.

[29] F. C. Wu, Q. Zhang, and Z. Y. Hu. Efficient suboptimal solutions to the optimal triangulation. International Journal of Computer Vision, 91(1):77–106, 2011.

[30] N. Yamashita and M. Fukushima. On the rate of convergence of the Levenberg-Marquardt method. Computing, (Suppl. 15):237–249, 2001.

Supplementary Materials

A. Newton-like triangulator source codes in visual C++ for Windows platforms.

B. Oxford visual geometry group data sets selected.
