Evolutionary Approach for Land Cover Classification Using GA-Based

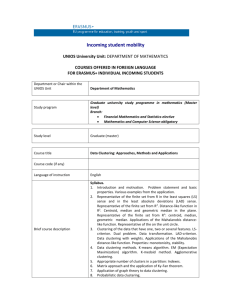
