
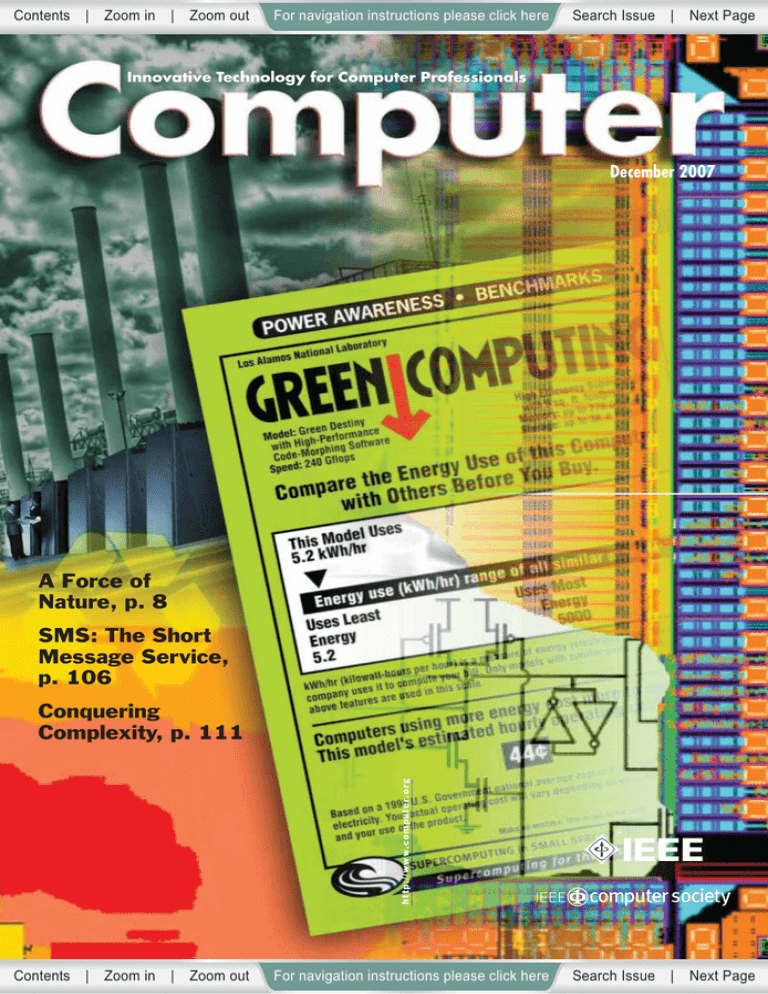

Innovative Technology for Computer Professionals

December 2007

A Force of Nature, p. 8

SMS: The Short Message Service, p. 106

http://www.computer.org

Conquering Complexity, p. 111


Computer


Innovative Technology for Computer Professionals

Editor in Chief

Computing Practices

Special Issues

Carl K. Chang

Rohit Kapur

Bill N. Schilit

Iowa State University

rohit.kapur@synopsys.com


schilit@computer.org


chang@cs.iastate.edu


Bill N. Schilit

Kathleen Swigger

Michael R. Williams

president@computer.org


Web Editor

Perspectives

Associate Editors

in Chief

2007 IEEE Computer Society

President

Bob Colwell

Ron Vetter

bob.colwell@comcast.net


vetterr@uncw.edu


Research Features

Kathleen Swigger

University of North Texas

kathy@cs.unt.edu


Area Editors

Column Editors

Computer Architectures

Steven K. Reinhardt

Security

Jack Cole

Mike Lutz

US Army Research Laboratory

Edward A. Parrish

Databases/Software

Michael R. Blaha

Broadening Participation

in Computing

Juan E. Gilbert

Embedded Computing

Wayne Wolf

Software Technologies

Mike Hinchey

Worcester Polytechnic Institute

Modelsoft Consulting Corporation

Georgia Institute of Technology

Loyola College Maryland

Graphics and Multimedia

Oliver Bimber

Entertainment Computing

Michael R. Macedonia

Michael C. van Lent

How Things Work

Alf Weaver

Standards

John Harauz

Reservoir Labs Inc.

Bauhaus University Weimar

Information and

Data Management

Naren Ramakrishnan

Virginia Tech

Multimedia

Savitha Srinivasan

IBM Almaden Research Center

Networking

Jonathan Liu

University of Florida

Software

Dan Cooke

Texas Tech University

Robert B. France

Colorado State University

Rochester Institute of Technology

Ron Vetter

University of North Carolina at Wilmington

Alf Weaver

University of Virginia

Jonic Systems Engineering Inc.

CS Publications Board

Web Technologies

Simon S.Y. Shim

Jon Rokne (chair), Mike Blaha,

Doris Carver, Mark Christensen, David

Ebert, Frank Ferrante, Phil Laplante,

Dick Price, Don Shafer, Linda Shafer,

Steve Tanimoto, Wenping Wang

University of Virginia

SAP Labs

In Our Time

David A. Grier

Advisory Panel

George Washington University

University of Virginia

IT Systems Perspectives

Richard G. Mathieu

Thomas Cain

James H. Aylor

CS Magazine

Operations Committee

University of Pittsburgh

James Madison University

Doris L. Carver

Invisible Computing

Bill N. Schilit

The Profession

Neville Holmes

Louisiana State University

Ralph Cavin

Semiconductor Research Corp.

Ron Hoelzeman

University of Pittsburgh

Robert E. Filman (chair), David Albonesi,

Jean Bacon, Arnold (Jay) Bragg,

Carl Chang, Kwang-Ting (Tim) Cheng,

Norman Chonacky, Fred Douglis,

Hakan Erdogmus, David A. Grier,

James Hendler, Carl Landwehr,

Sethuraman (Panch) Panchanathan,

Maureen Stone, Roy Want

University of Tasmania

H. Dieter Rombach

AG Software Engineering

Administrative Staff

Editorial Staff

Scott Hamilton

Lee Garber

Senior Acquisitions Editor

shamilton@computer.org


Senior News Editor

Judith Prow

Associate Editor

Managing Editor

jprow@computer.org


Bob Ward

Chris Nelson

Bryan Sallis

Senior Editor

Design and Production

Larry Bauer

Cover art

Dirk Hagner

Margo McCall

Associate Staff Editor

Associate Publisher

Dick Price

Membership & Circulation

Marketing Manager

Georgann Carter

Business Development

Manager

Sandy Brown

Senior Advertising

Coordinator

Marian Anderson

Publication Coordinator

James Sanders

Senior Editor

Circulation: Computer (ISSN 0018-9162) is published monthly by the IEEE Computer Society. IEEE Headquarters, Three Park Avenue, 17th Floor, New York, NY 10016-5997; IEEE Computer Society Publications Office, 10662 Los Vaqueros Circle, PO Box 3014, Los Alamitos, CA 90720-1314; voice +1 714 821 8380; fax +1 714 821 4010;

IEEE Computer Society Headquarters, 1730 Massachusetts Ave. NW, Washington, DC 20036-1903. IEEE Computer Society membership includes $19 for a subscription to

Computer magazine. Nonmember subscription rate available upon request. Single-copy prices: members $20.00; nonmembers $99.00.

Postmaster: Send undelivered copies and address changes to Computer, IEEE Membership Processing Dept., 445 Hoes Lane, Piscataway, NJ 08855. Periodicals Postage Paid

at New York, New York, and at additional mailing offices. Canadian GST #125634188. Canada Post Corporation (Canadian distribution) publications mail agreement

number 40013885. Return undeliverable Canadian addresses to PO Box 122, Niagara Falls, ON L2E 6S8 Canada. Printed in USA.

Editorial: Unless otherwise stated, bylined articles, as well as product and service descriptions, reflect the author’s or firm’s opinion. Inclusion in Computer does not

necessarily constitute endorsement by the IEEE or the Computer Society. All submissions are subject to editing for style, clarity, and space.

Published by the IEEE Computer Society


December 2007, Volume 40, Number 12

Innovative Technology for Computer Professionals

IEEE Computer Society: http://computer.org

Computer: http://computer.org/computer

computer@computer.org


IEEE Computer Society Publications Office: +1 714 821 8380

COMPUTING PRACTICES

24 Examining the Challenges of Scientific Workflows

Yolanda Gil, Ewa Deelman, Mark Ellisman, Thomas Fahringer,

Geoffrey Fox, Dennis Gannon, Carole Goble, Miron Livny,

Luc Moreau, and Jim Myers

Workflows have emerged as a paradigm for representing and

managing complex distributed computations and are used to

accelerate the pace of scientific progress. A recent National Science

Foundation workshop brought together domain, computer, and

social scientists to discuss requirements of scientific applications

and the challenges they present to workflow technologies.

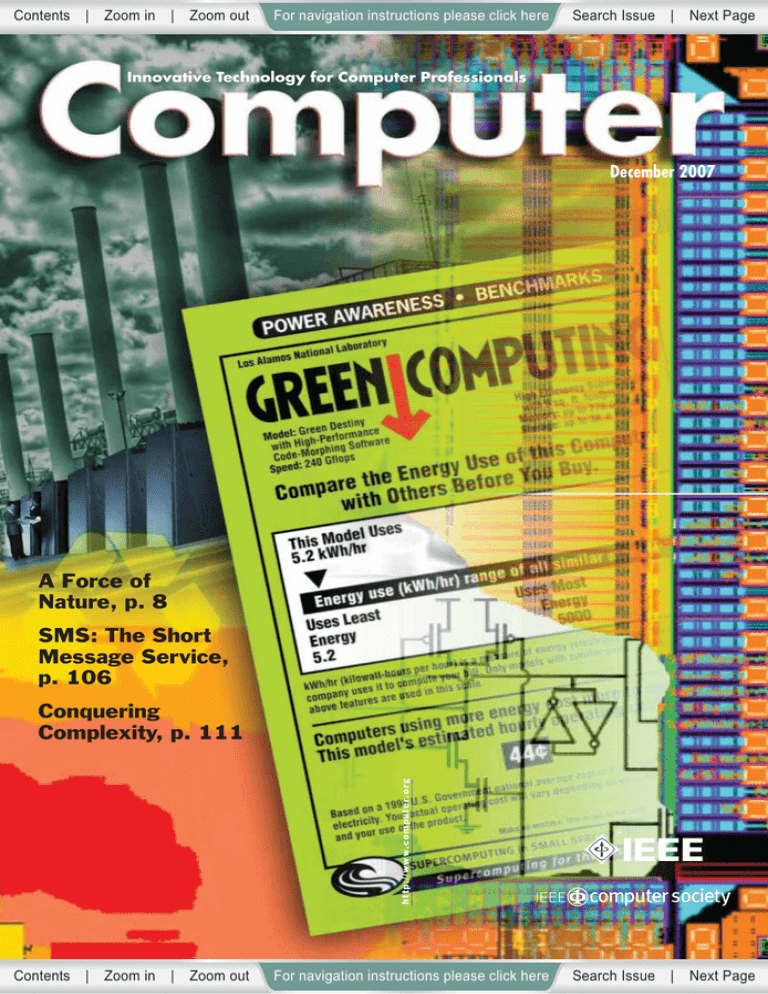

COVER FEATURES

33 The Case for Energy-Proportional Computing

Luiz André Barroso and Urs Hölzle

Energy-proportional designs would enable large energy savings in

servers, potentially doubling their efficiency in real-life use.

Achieving energy proportionality will require significant

improvements in the energy usage profile of every system

component, particularly the memory and disk subsystems.

39 Models and Metrics to Enable Energy-Efficiency Optimizations

Suzanne Rivoire, Mehul A. Shah, Parthasarathy Ranganathan,

Christos Kozyrakis, and Justin Meza

Power consumption and energy efficiency are important factors in

the initial design and day-to-day management of computer systems.

Researchers and system designers need benchmarks that

characterize energy efficiency to evaluate systems and identify

promising new technologies. To predict the effects of new designs

and configurations, they also need accurate methods of modeling

power consumption.

Cover design and artwork by Dirk Hagner

ABOUT THIS ISSUE

Despite advances in power efficiency fueled largely by the mobile computing industry, computer-energy consumption continues to challenge both industry and the global economy. In the US, enterprise energy consumption doubled over the past five years and will continue to do so. And this does not include the energy cost of manufacturing components: it is estimated that Japan’s semiconductor industry will consume 1.7 percent of that country’s energy budget by 2015.

The articles in this issue propose strategies for mitigating these costs by designing systems that consume energy in proportion to the amount of work performed, establishing new benchmarks and accurate ways of modeling power consumption, and even recycling older processors over several computing generations.

50 The Green500 List: Encouraging Sustainable Supercomputing

Wu-chun Feng and Kirk W. Cameron

The performance-at-any-cost design mentality ignores

supercomputers’ excessive power consumption and need for heat

dissipation and will ultimately limit their performance. Without

fundamental change in the design of supercomputing systems, the

performance advances common over the past two decades won’t

continue.

56 Life Cycle Aware Computing: Reusing Silicon Technology

John Y. Oliver, Rajeevan Amirtharajah, Venkatesh Akella,

Roland Geyer, and Frederic T. Chong

Despite the high costs associated with processor manufacturing, the

typical chip is used for only a fraction of its expected lifetime.

Reusing processors would create a “food chain” of electronic

devices that amortizes the energy required to build chips over

several computing generations.


Flagship Publication of the IEEE Computer Society

CELEBRATING THE PAST

8 In Our Time

A Force of Nature

David Alan Grier

11 32 & 16 Years Ago

Computer, December 1975 and 1991

Neville Holmes

NEWS

13 Industry Trends

The Changing World of Outsourcing

Neal Leavitt

17 Technology News

A New Virtual Private Network for Today’s Mobile World

Karen Heyman

20 News Briefs

Linda Dailey Paulson

MEMBERSHIP NEWS

6 President’s Message

62 Report to Members: Election Results

64 IEEE Computer Society Connection

66 Call and Calendar

COLUMNS

103 Security

Natural-Language Processing for Intrusion Detection

Allen Stone

106 How Things Work

SMS: The Short Message Service

Jeff Brown, Bill Shipman, and Ron Vetter

NEXT MONTH: Outlook Issue

111 Software Technologies

Conquering Complexity

Gerard J. Holzmann

114 Entertainment Computing

Enhancing the User Experience in Mobile Phones

S.R. Subramanya and Byung K. Yi

118 Invisible Computing

Taking Online Maps Down to Street Level

Luc Vincent

124 The Profession

Making Computers Do More with Less

Simone Santini

DEPARTMENTS

4 Article Summaries

23 Computer Society Information

68 IEEE Computer Society Membership Application

72 Annual Index

85 Advertiser/Product Index

86 Career Opportunities

102 Bookshelf

COPYRIGHT © 2007 BY THE INSTITUTE OF ELECTRICAL AND

ELECTRONICS ENGINEERS INC. ALL RIGHTS RESERVED.

ABSTRACTING IS PERMITTED WITH CREDIT TO THE SOURCE.

LIBRARIES ARE PERMITTED TO PHOTOCOPY BEYOND THE LIMITS OF US COPYRIGHT LAW

FOR PRIVATE USE OF PATRONS: (1) THOSE POST-1977 ARTICLES THAT CARRY A CODE

AT THE BOTTOM OF THE FIRST PAGE, PROVIDED THE PER-COPY FEE INDICATED IN THE

CODE IS PAID THROUGH THE COPYRIGHT CLEARANCE CENTER, 222 ROSEWOOD DR.,

DANVERS, MA 01923; (2) PRE-1978 ARTICLES WITHOUT FEE. FOR OTHER COPYING,

REPRINT, OR REPUBLICATION PERMISSION, WRITE TO COPYRIGHTS AND PERMISSIONS

DEPARTMENT, IEEE PUBLICATIONS ADMINISTRATION, 445 HOES LANE, P.O. BOX

1331, PISCATAWAY, NJ 08855-1331.


ARTICLE SUMMARIES

Examining the Challenges of Scientific Workflows

pp. 24-32

Yolanda Gil, Ewa Deelman,

Mark Ellisman, Thomas Fahringer,

Geoffrey Fox, Dennis Gannon, Carole

Goble, Miron Livny, Luc Moreau, and

Jim Myers

Workflows have recently emerged as a paradigm for representing and managing complex distributed scientific computations, accelerating the pace of scientific progress. Scientific workflows orchestrate the data flow across the individual data transformations and analysis steps, as well as the mechanisms to execute them in a distributed environment. Workflows should thus become first-class entities in the cyberinfrastructure architecture.

Each step in a workflow specifies a process or computation to be executed, such as a software program or Web service. The workflow links the steps according to the data flow and dependencies among them. The representation of these computational workflows contains many details required to carry out each analysis step, including the use of specific execution and storage resources in distributed environments.
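Because a workflow links its steps by data dependencies, a system that runs it needs an order in which every step starts only after the steps whose outputs it consumes. A minimal sketch of that ordering, using Kahn's topological-sort algorithm; the step names and the `depends_on` mapping are hypothetical, and the sketch omits the resource selection and distributed execution that real workflow systems also handle.

```python
from collections import deque


def execution_order(steps, depends_on):
    """Return an order in which workflow steps can run so that each step
    follows every step it consumes data from (Kahn's algorithm).

    steps: list of step names (hypothetical identifiers)
    depends_on: dict mapping a step to the list of steps it reads from
    """
    # Count each step's unmet inputs and record who consumes each output.
    pending = {s: 0 for s in steps}
    consumers = {s: [] for s in steps}
    for step, inputs in depends_on.items():
        for src in inputs:
            pending[step] += 1
            consumers[src].append(step)

    # Steps with no upstream data can start immediately.
    ready = deque(s for s in steps if pending[s] == 0)
    order = []
    while ready:
        step = ready.popleft()
        order.append(step)
        # Finishing this step may release steps waiting on its output.
        for nxt in consumers[step]:
            pending[nxt] -= 1
            if pending[nxt] == 0:
                ready.append(nxt)

    # Any step left unscheduled sits on a dependency cycle.
    if len(order) != len(steps):
        raise ValueError("workflow has a cyclic dependency")
    return order


if __name__ == "__main__":
    steps = ["fetch", "clean", "align", "analyze", "plot"]
    depends_on = {
        "clean": ["fetch"],
        "align": ["fetch"],
        "analyze": ["clean", "align"],
        "plot": ["analyze"],
    }
    print(execution_order(steps, depends_on))
```

Steps that become ready at the same time (here, `clean` and `align`) have no dependency on each other, so a distributed engine could dispatch them in parallel rather than in the sequence shown.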

The Case for Energy-Proportional Computing

pp. 33-37

Luiz André Barroso and Urs Hölzle

Energy management has now become a key issue for servers and data center operations, focusing on the reduction of all energy-related costs, including capital, operating expenses, and environmental impacts. Many energy-saving techniques developed for mobile devices became natural candidates for tackling this new problem space. Although servers clearly provide many parallels to the mobile space, they require additional energy-efficiency innovations. Energy-proportional computers, which consume energy in proportion to the amount of work performed, would enable large energy savings, potentially doubling the efficiency of a typical server.


In current servers, the lowest energy-efficiency region corresponds to their most common operating mode. Addressing this mismatch will require significant rethinking of components and systems. To that end, energy proportionality should become a primary design goal. Although researchers’ experience in the server space motivates these observations, energy-proportional computing also will significantly benefit other types of computing devices.
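To see why running at low utilization hurts efficiency, here is a minimal sketch assuming a simple linear power model: a server draws a fixed fraction of its peak power when idle, and power rises linearly to peak at full load. The model and the two idle fractions (50 and 10 percent of peak) are illustrative assumptions, not measurements from the article.

```python
def power_fraction(utilization, idle_fraction):
    """Power draw as a fraction of peak, assuming power rises linearly
    from idle_fraction at 0% load to 1.0 at 100% load (an assumption)."""
    return idle_fraction + (1.0 - idle_fraction) * utilization


def relative_efficiency(utilization, idle_fraction):
    """Work per unit of energy, normalized so a fully loaded server scores 1.0."""
    if utilization == 0:
        return 0.0  # no useful work is done at zero load
    return utilization / power_fraction(utilization, idle_fraction)


if __name__ == "__main__":
    # Hypothetical idle levels: a conventional server vs. a more proportional one.
    for idle in (0.5, 0.1):
        row = ", ".join(
            f"{u:.0%} load -> {relative_efficiency(u, idle):.2f}"
            for u in (0.1, 0.3, 0.5, 1.0)
        )
        print(f"idle = {idle:.0%} of peak: {row}")
```

Under these assumptions, at 30 percent load the server that idles at half its peak power delivers about 0.46 of its full-load efficiency, while the one that idles at 10 percent delivers about 0.81; lowering idle power is what moves a design toward energy proportionality.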

The Energy-Efficiency Challenge: Optimization Metrics and Models

pp. 39-48

Suzanne Rivoire, Mehul A. Shah,

Parthasarathy Ranganathan,

Christos Kozyrakis, and Justin Meza

In recent years, server and data center power consumption has become a major concern. It directly affects a data center’s electricity costs and requires the purchase and operation of cooling equipment, which can consume from one-half to one watt for every watt of server power consumption. All these power-related costs can potentially exceed the cost of purchasing hardware. Moreover, the environmental impact of data center power consumption is receiving increasing attention, as is the effect of escalating power densities on the ability to pack machines into a data center.
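The cooling overhead of one-half to one watt per server watt compounds quickly. A minimal worked sketch, assuming a hypothetical 1 MW of server load running around the clock at constant draw and ignoring every other facility loss:

```python
HOURS_PER_YEAR = 24 * 365  # assumes the facility runs continuously


def facility_power_watts(server_watts, cooling_watts_per_server_watt):
    """Server draw plus the cooling power needed to remove its heat."""
    return server_watts * (1.0 + cooling_watts_per_server_watt)


def annual_energy_kwh(watts, hours=HOURS_PER_YEAR):
    """Energy consumed over a year at a constant draw, in kilowatt-hours."""
    return watts * hours / 1000.0


if __name__ == "__main__":
    servers = 1_000_000  # hypothetical 1 MW of server load
    for ratio in (0.5, 1.0):  # low and high ends of the cooling range
        total = facility_power_watts(servers, ratio)
        print(f"cooling ratio {ratio}: {total / 1e6:.1f} MW total, "
              f"{annual_energy_kwh(total) / 1e6:.2f} GWh per year")
```

Under these assumptions, cooling alone raises the total from 1 MW to between 1.5 and 2 MW, or roughly 13 to 18 GWh per year.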

The two major, complementary approaches to this problem are building energy efficiency into the initial design of components and systems and adaptively managing the power consumption of systems or groups of systems in response to changing conditions in the workload or environment.

The Green500 List: Encouraging Sustainable Supercomputing

pp. 50-55

Wu-chun Feng and Kirk W. Cameron

Despite a 10,000-fold increase since 1992 in supercomputers’ performance when running parallel scientific applications, performance per watt has improved only 300-fold and performance per square foot only 65-fold, forcing researchers to design and construct new machine rooms and, in some cases, entirely new buildings. Compute nodes’ exponentially increasing power requirements are a primary driver behind this less efficient use of power and space.

Today, several of the most powerful supercomputers on the TOP500 List each require up to 10 megawatts of peak power, enough to sustain a city of 40,000. To inspire more efficient conservation efforts, the HPC community needs a Green500 List to rank supercomputers on speed and power requirements and to supplement the TOP500 List.

Life Cycle Aware Computing: Reusing Silicon Technology

pp. 56-61

John Y. Oliver, Rajeevan Amirtharajah,

Venkatesh Akella, Roland Geyer, and

Frederic T. Chong

Many consumer electronic devices, from computers to set-top boxes to cell phones, require sophisticated semiconductors such as CPUs and memory chips. The economic and environmental costs of producing these processors for new and continually upgraded devices are enormous. Because the semiconductor manufacturing process uses highly purified silicon, the energy required is quite high: about 41 megajoules (MJ) for a 1.2 cm² dynamic random access memory (DRAM) die. In terms of environmental impact, 72 grams of toxic chemicals are used to create such a die.

Processor reuse can help deal with these increasingly severe economic and environmental costs, but it will require innovative techniques in reconfigurable computing and hardware-software codesign as well as governmental policies that encourage silicon reuse.


“The technical details and clarity of the articles is

beyond anything I’ve seen available elsewhere.”

– Sun Microsystems Engineer and IEEE Subscriber

From Imagination to Market

IEEE Computer Society

Digital Library

Premier Collection of Computing Periodicals & Conferences

Covers the complete spectrum of computing and delivers the

highest quality, peer-reviewed content available to users.

Over 180,000 top quality computing articles and papers

23 peer-reviewed periodicals

Over 170 conference proceedings, with a backfile to 1995

OPAC links for easy cataloging

Monthly ‘what’s new’ email notification of new content

and services

Free Trial!

Experience IEEE – request a trial for your company.

www.ieee.org/computerlibrary

IEEE Information Driving Innovation


PRESIDENT’S MESSAGE

An Interesting Year

Michael R. Williams

IEEE Computer Society 2007 President

The Society’s 2007 president

reflects on a year of challenges

and accomplishments.

There is an old phrase that says, “May you live in interesting times.” This saying is often interpreted as a curse because “interesting” can imply a wide variety of situations. I can confidently say that 2007 has been “interesting” in almost every sense of the word.

A NEW EXECUTIVE DIRECTOR

One major event this year was the search for a new executive director of the Computer Society. The search was open, in the sense that there was no obvious candidate. Many extremely well-qualified individuals applied, and reducing the list to manageable proportions was a difficult task for the search committee. The candidates represented diverse areas, including academic, industrial, and nonprofit institutions.

Although I have been involved in searching for senior leadership and administrative people in the past, I have seldom seen a more impressive list of individuals than those we chose to interview. After several interviews, we finally met over a holiday weekend to spend both formal and informal time with the top three candidates. They each brought a different set of strengths to the position, and each would have made a fine executive director.

After much debate, we chose Angela Burgess for the job, and she assumed her duties shortly thereafter. Those of you who have met Angela will, I am sure, think that the search committee did a great job in arriving at this decision. I am confident that this will be a major step forward in the Society’s history, and Angela’s abilities will be welcomed for many years to come.


A BUILDING CRISIS

With every good “interesting”

development, there is often a counterpart. One of the worst this year was

the result of trying to be good citizens

within the IEEE.

Our staff reorganization (about

which, more later) left us with some

excess space in our Washington, DC,

headquarters building. The building

is well over 100 years old and is a

heritage-listed structure in the middle of Embassy Row in DC. Another

IEEE organization was headquartered in Washington, and it seemed

to make eminent sense to agree to a

proposal that we share office space

in our building.

Since this would require some renovations, we called in engineers and architects to advise us; there was no sense trying to remove a support wall or something equally devastating. When the reports came back, we were surprised to learn that the building infrastructure (electricity, plumbing, heating, and so on) not only could not accommodate the proposed renovations but was outdated enough to pose potential safety risks. We immediately moved our staff out until we could determine the best course of action to remedy the problems.

As I write this message, a second

group of engineers is studying the situation, so I can’t give you any definite

word on the final outcome. While we

would all like instant answers in such

situations, doing the job properly takes

time. I hope that we can have definitive

plans and cost estimates in hand by the

time you actually read this.

I would like to thank our sister IEEE

organization, IEEE-USA, for providing us emergency office accommodations until we sort out this mess.

They’ve been extremely helpful and,

despite this disruption to their own

office functions, have been more than

welcoming to our employees.

REORGANIZING THE SOCIETY

My presidential message at the start

of 2007 indicated that this would be a

year of decision regarding our organization. I am pleased to say that much

of the staff and volunteer reorganization has begun, and I am sure this will

result in a more effective organization

in the future. Like any major change,

the complete plan for reorganizing our

Society will take time and will likely

be an ongoing effort for several years.

Angela Burgess, our new executive

director, has been instrumental in

implementing the myriad details of

such things as rewriting position

descriptions and recruiting staff to fill

the new vacancies.

It is not only the staff that is being

reorganized, but also the volunteer

side of the organization. While it

might be simple to say that combining the Technical Activities Board and

the Conferences and Tutorials Board

will result in greater synergy in both

areas, it is something else to actually

plan for a smooth transition, rewrite

the governing bylaws, establish new

modes of working, and try to foresee

potential pitfalls. I would like to


express my personal thanks to all the

many volunteers who have helped in

this and similar endeavors. The list is

long, and I will not try to name them

all. Of course, such dedicated effort

also will be required in 2008 to

accomplish the next steps in the plan.

All this reorganization effort is necessary for both budget and efficiency

reasons. However, it is disruptive and

we must not lose sight of the reasons

this Society exists in the first place. We

have so far managed to keep a good

perspective on the situation and not

only have kept up such things as our

benefits to members but have actually

increased them in some areas. For

example, in 2008, student members

will have a particularly attractive benefit of being able to access free software from Microsoft.

I have always tried to remember that making changes is but a step toward providing better services to our constituents. However, one piece of common wisdom rings true: when you’re up to your waist in alligators, it isn’t easy to remember that the objective was to drain the swamp.

AN END AND A BEGINNING

As my term as president comes to

an end, I look back on this as truly

being an “interesting” year. The three

items I have touched on in this message are but a fraction of the events

and situations that have kept me busy.

In my January message in Computer,

I said, “I hope that, at the end of this

year, we can look back and not only

conclude that I did my best but that it

was to the benefit of the Society and

IEEE as a whole.” I can say that I have

done my best, but I will leave it to others to make the rest of that judgment.

On 1 January 2008, Ranga Kasturi

will take over from me as president,

and Susan (Kathy) Land will be the

president-elect. Kasturi (as he is usually known) is one of the most

thoughtful and capable individuals I

have ever met. He will certainly be a

president who will take the Society

forward to even greater accomplishments. Kathy is also an accomplished

and dedicated volunteer, and the two

of them will make a good team. I have

every confidence that I leave the

Society in the best possible hands for

2008 and beyond.


With a Society as large as ours, the personal experiences of our members span the complete spectrum. I have heard from some of you who have gone on to great success in 2007, from others who suffered the ravages of earthquakes and hurricanes, and from others who have had less extreme experiences. Whatever your experiences in 2007, I want to wish you the very best possible 2008.

It has been my honor to serve as

your president in this “interesting”

year, and I thank you for the opportunity. I would also be remiss if I did

not thank all the dedicated volunteers

and staff that made it possible to

actually accomplish all that we did in

2007. ■

Michael R. Williams, a professor emeritus of computer science at the University of Calgary, is a historian specializing

in the history of computing technology.

Contact him at m.williams@computer.org.


IN OUR TIME

A Force of Nature

David Alan Grier

George Washington University

Our educational system does

little to prepare computer

science students for making the

transition to the working world.

When I first met them, Jeff, Jeff, and Will were inseparable. One for all, all for one. As far as I could tell, they spent every waking moment in each other’s presence. I could commonly find them at the local coffee shop or huddled together in some corner of a college building. The younger Jeff would be telling an elaborate story to whatever audience was ready to listen. The elder Jeff would be typing on a computer keyboard. Will might be doodling in a notebook or flirting with a passerby or playing garbage can basketball with pages torn from the day’s newspaper.

Among colleagues, I referred to

them as the Three Musketeers, as they

seemed to embody the confidence of

the great Dumas heroes. They were

masters of technology and believed

that their mastery exempted them

from the rules of ordinary society. The

younger Jeff, for example, believed

that he was not governed by the law

of time. When given a task, he would

ignore it until the deadline was bearing down on him. Then in an explosion of programming energy, he would

pound perfect code into his machine.

The elder Jeff was convinced that

specifications were written for other


people, individuals with weaker

morals or limited visions. He wrote

code that was far grander than the

project required. It would meet the

demands of the moment, but it also

would spiral outward to handle other

tasks as well. You might find a game

embedded in his programs or a trick

algorithm that had nothing to do with

the project or a generalization that

would handle the problem for all time

to come.

Will was comfortable with deadlines and would read specifications, but he lived in a non-Euclidean world. He shunned conventional algorithms and obvious solutions. His code appeared inverted, dissecting the final answer in search of the original causes. It was nearly impossible to read, but it worked well and generally ran faster than more straightforward solutions.

DISRUPTION

The unity of the Three Musketeers

was nearly destroyed when Alana

came into their circle. She was a force

of nature and every bit the intellectual

equal of the three boys. She took possession of their group as if it were her

private domain. Within a few weeks,

she had them following her schedule,

meeting at her favorite places, and

doing the things that she most liked

to do. She even got them to dress

more stylishly, or at least put on

cleaner clothes.

Alana could see the solution of a

problem faster than her compatriots,

and she knew how to divide the work

with others. For a time, I regularly saw

the four of them in the lounge, laughing and boasting as they worked on

some class project. One of their number, usually a Jeff, would be typing

into a computer while the others discussed what task should be done next.

Paper wads would be scattered

around a wastebasket. A clutch of

pencils would be neatly balanced into

a pyramid.

It was not inevitable that Alana

should destabilize the group, but that

is what eventually happened. Nothing

had prepared the boys for a woman

who had mastered both the technical

details of multiprocessor coding and

the advanced techniques of eye makeup. For reasons good or ill, Alana was

able to paint her face in a way that

made the souls of ordinary men melt

into simmering puddles of sweat.

Steadily, the group began to dissolve. The end was marked with little, gentle acts of kindness that were

twisted into angry, malicious incidents by the green-eyed monster of

jealousy. Soon Jeff was not speaking

to Jeff, Will was incensed with the

Elder, the Younger had temporarily

decamped for places unknown, and

Alana was looking for a more congenial group of colleagues.

Eventually, the four were able to

recover the remnants of their friendship and rebuild a working relationship, but they never completely

recovered their old camaraderie.

Shortly afterward, they moved to new jobs and new worlds, where they faced not only the pull of the opposite sex but also the equally potent and seductive powers of finance and money.

MOVING ON

The younger Jeff was the first to

leave. He packed his birthright and

followed the western winds, determined to conquer the world. With a


few friends, he built an Internet radio

station, one of the first of the genre.

They rented space in a warehouse,

bought a large server, and connected

it to the Internet. They found some

software to pull songs off their collection of CDs and wrote a system that

would stream music across the network while displaying ads on a computer screen. They christened their

creation “intergalactic-radio-usa.net.”


For a year or two, the station occupied a quirky corner of the Net. It was

one of the few places in those days

before MP3 downloads where you

could listen to music over the Internet.

Few individuals had a computer that

reproduced the sounds faithfully, but

enough people listened to make the

business profitable.

One day, when he arrived at work,

Jeff was met at the door by a man with

a dark overcoat, a stern demeanor,

and a letter from one of the music

publishing organizations, BMI or

ASCAP. The letter noted that intergalactic-radio-usa had not been paying royalties on the music it broadcast,

and it demanded satisfaction. A date

was set. Seconds were selected. Discussions were held. Before the final

confrontation, the station agreed to a

payment schedule and returned to the

business of broadcasting music.

Under the new regime, the station

had to double or triple its income in a

short space of time. This pushed Jeff

away from the technical work of the

station. His partners had grown anxious over his dramatic programming

techniques. They wanted steady progress toward their goals, not weeks of

inaction followed by days of intense

coding. They told him to get a suit,

build a list of clients, and start selling

advertising.

Jeff was not a truly successful salesman, but he also was not a failure. His

work allowed him to talk with new

people, an activity he loved, but it did

not give him the feeling of mastery

that he had enjoyed as a programmer.

“It’s all governed by the budget,” he

told me when he started to look for a

new job. “Everything is controlled by

the budget.”

AN EVOLVING BUSINESS

The elder Jeff left shortly after his

namesake. He and some friends

moved into an old house in a reviving

part of the city, a living arrangement

that can best be described as an

Internet commune. They shared

expenses and housekeeping duties and

looked for ways to make money with

their computing skills. Slowly, they

evolved into a Web design and hosting company. They created a Web

page for one business and then one for

another and finally admitted that they

had a nice, steady line of work.


As the outlines of their company

became clearer, Jeff decided that they

were really a research and development laboratory that supported itself

with small jobs. He took a bedroom

on an upper floor and began working

on a program that he called “the ultimate Web system.” Like many of the

programs the elder Jeff produced, the

ultimate Web system was a clever idea.

It is best considered an early content

management system, a way to allow

ordinary users to post information

without working with a programmer.

As good as it was, the ultimate

Web system never became a profitable product. Jeff had to abandon

it as he and his partners began to

realize that their company needed

stronger leadership than the collective anarchy of a commune. They

needed to coordinate the work of

designers, programmers, salespeople,

and accountants.

As the strongest personality of the

group, Jeff slowly moved into the role

of president. As he did, the company

became a more conventional organization. Couples married and moved

into their own homes. The large house uptown ceased to be a residence and

became only an office.

Long after Jeff had begun purchasing Web software, he continued to

claim that he was a software developer.

“I’ll get back to it some day,” he would

say. “It will be a great product.”

MAKING CHOICES

Will was the last to leave. He started

a company that installed computers

for law firms. Our city hosts a substantial number of lawyers, so his

long-term prospects were good. I once

saw Will on the street, pushing a cart

of monitors and network cables. He

looked active and happy. Things were

going well, he said. He had plenty of

work but still had enough time to do

a little programming on the side.

We shook hands, promised to keep

in touch, and agreed to meet for dinner on some distant day. That dinner

went unclaimed for five years. It might never have been held had I not learned, through one of the Jeffs, that

Will had prospered as a programmer

and now owned a large specialty software firm.

I scanned his Web page and was

pleased with what I saw. “Software in

the service of good,” it read. “Our

motto is people before profit. Do unto

others as you would have them do

unto you.”

I called his office, was connected to

“President Will,” and got a quick

summary of his career. He had started

creating programs for disabled users

and had found a tremendous market

for his work. After a brief discussion,

we agreed to a nice dinner downtown, with spouses and well-trained

waiters and the gentle ambience of

success. We spent most of the evening

talking about personal things—families, children, and houses. Only at the

end of the evening did we turn to

work. “How is the business going?”

I asked.

“Well,” he said, but the corners of

his lips pursed.

I looked at him a moment.

“Everything okay?” I queried.

He exchanged a glance with his wife

and turned back to me. “Extremely well. We have more business than we

can handle.”

Again, I paused. “Starting to draw

competition?”

He smiled and relaxed for a brief

moment. “No,” he said.

I sensed that something was happening, so I took a guess. “A suitor

sniffing around?”

He looked chagrined and shook his

head. “Yeah.”

“A company with three letters in its

name?”

“Yup,” he said.

“It would be a lot of money,” I

noted.

“But then it wouldn’t be my firm,”

he said. After a pause, he added, “And

if I don’t sell, the purchaser might try

to put me out of business.”

We moved to another subject, as Will

was clearly not ready to talk any more

about the potential sale of his company. It was months later that I learned

that he had sold the company and had

decided to leave the technology industry. The news came in a letter that

asked me to write a recommendation

for a young man who wanted to

become “Reverend Will.”

Almost every technical field feels the

constant pull of business demands.

“Engineering is a scientific profession,” wrote the historian Edwin

Layton, “yet the test of the engineer’s

work lies not in the laboratory but in

the marketplace.” By training, most

engineers want to judge their work by

technical standards, but few have that

opportunity. “Engineering is intimately related to fundamental choices

of policy made by organizations

employing engineers,” notes Layton.

LESSONS LEARNED

The experiences of Jeff, Jeff, and

Will have been repeated by three

decades of computer science students.

They spend four years studying languages, data structures, and algorithms and pondering that grand

question, “What can be automated?”

Then they leave that world and move

to one in which profit is king, deadlines are queens, and finance is a knave

that keeps order.

Our educational system does little

to prepare them for this transition.

An early report reduced the issue to

a pair of sentences. “A large portion

of the job market involves work in

business-oriented computer fields,”

the report noted before making the

obvious recommendation. “As a

result, in those cases where there is a

business school or related department, it would be most appropriate

to take courses in which one could

learn the technology and techniques

appropriate to this field.”

Of course, one or two courses can’t

really prepare an individual for the

pressures and demands of the commercial world. Overcoming those pressures requires qualities that are learned over a lifetime. Individuals need poise, character, grace, a sense of right and wrong, and an ability to find a way through a confused landscape.

Often professional organizations,

including many beyond the field of

computer science, have reduced such

qualities to the concept of skills that

can be taught in training sessions:

communications, teamwork, self-confidence. In fact, these skills are better

imparted by the experiences of life, by learning that your roommate is writing and releasing virus code, that you

have missed a deadline and will not be

paid for your work, that a member of

your study group is passing off your work as his own.

“It is doubly important,” wrote

Charles Babbage, “that the man of science should mix with the world.” In

fact, most computer scientists have little choice but to mix with the world,

as the world provides the discipline

with problems, ideas, and capital. It is

therefore doubly important to know

how the ideas of computer science

interact with the world of money.

One of the Three Musketeers,

safely out of earshot of his wife,

once asked me what had become

of Alana. I was not in close contact

with her. However, I knew that her life

had been shaped by the same forces

that had influenced the careers of her

three comrades, although, of course,

her story had been complicated by the

issues that women must face. She had

moved to Florida and built a business

during the great Internet bubble. She

had taken some time to start a family,

perhaps when that bubble had burst in

2001, and was now president of her

own firm. I didn’t believe that she had

done technical work for years.

“You’ve not kept in touch,” I said

more as a statement than a question.

“No,” he said.

I saw a story in his face, but that

story might reflect more of my observations than of his experience. It told

of a lost love, perhaps; a lesson

learned; a recognition that he and his

college friends were all moving into

that vast land of the mid-career, knowing a little something about how to deal with the forces of nature. ■

David Alan Grier is the editor in chief,

IEEE Annals of the History of Computing, and the author of When Computers

Were Human (Princeton University

Press, 2005). Grier is associate dean of

International Affairs at George Washington University. Contact him at grier@gwu.edu.


32 & 16 YEARS AGO

DECEMBER 1975

COMPUTER EDUCATION (p. 27). “From the educator’s

point of view, perhaps no problem is so apparent as that

of overcoming the dichotomy between computer science

and computer engineering. The task of developing curricula that harmoniously integrate those two components

of computing has been reminiscent of the battles of great

prehistoric beasts in the tar pits: the fiercer and more passionate the struggle, the sooner the combatants are

ensnared in the tar. So far at any rate, the student has had

to choose between computer science or computer engineering—and of course both he and his employer have

had to pay the price in terms of increased training requirements and delayed effectiveness.”

COMPUTING CURRICULA (p. 29). “It is the conclusion of

[the IEEE Computer Society Model Curricula Subcommittee] that these four areas will provide a first entry to

industry-level instruction in computer science and engineering:

“Digital/Processor Logic Instruction towards an

understanding of digital logic devices and their interconnection to provide processing functions. …

“Computer Organization Instruction towards an

understanding of the interaction between computer

hardware and software, and the interconnection of system components.

“Operating Systems and Software Engineering

“Theory of Computing Instruction towards an understanding of the formal aspects of computing concepts, to include discrete structure, automata and formal languages, and analysis of algorithms.”

US SURVEY (p. 40). “The growth of computer science

and computer engineering, at least in EE departments,

has slowed but still continues. Throughout the survey,

growth since 1972 has been slower than growth before

1972. EE departments offer only 2% more CS or CE

options than they did two years ago. They have slightly

more faculty in the computer area. They do offer more

computer courses, particularly minicomputer and microcomputer courses. The growth of the latter courses has

been very rapid with 37% of EE departments reporting

one or more courses on microprocessors in the 1974–1975 school year.”

LARGE-SCALE COMPUTERS (p. 82). “Amdahl Corporation has delivered three of its $3.7 to $6.0 million 470V/6

large-scale computers and expects to deliver three more

before the end of the year. In all installations to date (Texas

A&M University, Columbia University, and the University

of Michigan), the computer replaced one or more IBM

systems.

“The company states that the 470V/6 can be substituted for an IBM 370 or 360 being used to run any set

of programs and using any peripheral mix. No more

changes are required than would be to change from one

model of the 370 to another.”

COMPUTER MEDIA SERVICING (p. 84). “A new

approach in the servicing of computer centers will be

pursued jointly in the Los Angeles area by Memorex and

Datavan Corporations.

“Specially-outfitted and staffed Datavan mobile units

will travel to customer computer installations where they

will re-ink ribbons, clean and recertify tapes, and clean

disk packs. They will be stocked with Memorex’s computer media products consisting of computer tape, disk

packs and cartridges, data modules, and flexible disks.”

IMPACT PRINTER (p. 86). “Documation, Inc. has

announced the availability of the new DOC 2250 high-speed, impact line printer, capable of printing 2250 single-spaced lines per minute using a 48 graphic character

set.”

“The printer is a free-standing unit containing its own

power supply and control logic. The integrated controller is a Documation-developed microprocessor. The

controller communicates through its interface with the

host system, decodes all commands, controls the printer

hardware, and reports various errors and status.”

PANAMA CANAL (p. 88). “About 40 ships per day pass

through the Panama Canal and that number is expected

to increase significantly in coming years. Because of the

projected upswing, the Panama Canal Company performed studies to develop requirements and specifications for a new Marine Traffic Control System (MTCS).

“The MTCS, implementing 25 General Electric

TermiNet 300 send-receive printers, is a computer-based

system designed to assist in collecting, assimilating, displaying, and disseminating schedules and related data

used to coordinate and control transit operations.”

POST OFFICE LABELS (p. 88). “The United States Postal

Service will introduce a labeling system next year which

will increase the efficiency of transporting mail between

the post office’s 40,000 stations around the country.

“The system, being developed by the Electronics and

Space Division of Emerson Electric Company, is essentially a computerized printing system which has a capability of producing a yearly total of eight-billion labels

and slips used to attach to mail bags and bundles of letters with a common destination.”

“With the new system, the post office’s computerized

printing plant for labels and slips will produce a supply

for every post office based on its own special needs. The

necessary data will be stored on magnetic tape and used

to produce two-week supplies for each order. Changes

to the order will be easily assimilated on the tape, reflecting each office’s latest routing needs.”


DECEMBER 1991

PRESIDENT’S REPORT (p. 4). “… At its November 1 meeting, the Board of Governors approved an agreement with

ACM to form the Federation on Computing in the United

States (FOCUS), the successor to the American Federation

of Information Processing Societies (AFIPS). That agreement establishes an organization of technical societies to

represent US computing interests in the International

Federation for Information Processing (IFIP).”

“We must develop ways to disseminate our transactions, magazines, conferences, and other material electronically. … I have become convinced that the effort

required is beyond what we can expect from a few volunteers. For that reason, we asked and obtained board

approval for a staff position dedicated to this effort.”

DATABASE INTEGRATION (p. 9). “Many believe that

standards development will resolve problems inherent in

integrating heterogeneous databases. The idea is to

develop systems that use the same standard model, language and techniques to facilitate concurrent access to

databases, recovery from failures, and data administration functions. This is easier said than done. Agreement on

standards has proven to be one of the most difficult problems in the industry. Most vendors and end users have

already invested in separate solutions for their problems.

Getting them to agree on a common way of handling their

data is challenging.”

ADDRESSING HETEROGENEITY (p. 17). “Our approach

to addressing schematic heterogeneity is to define views

on the schemas of more than one component database

and to formulate queries against the views. The view definition can specify how to homogenize the schematic heterogeneity in CDBS [Component DataBase System] views.

Our approach to data heterogeneity is twofold: First, we

allow the MDBS [MultiDataBase System] query processor to issue warnings when it detects wrong-data conflicts

in query results. Second, we allow the MDBS users and/or

database administrator to prepare and register lookup

tables in the database so that the MDBS query processor

can match different representations of the same data.”

MULTIDATABASE TRANSACTIONS (p. 28). “A transaction is a program unit that accesses and updates the data

in a database. An everyday example of a transaction is

the transfer of money between bank accounts. The debiting of one account and the crediting of another are each

separate actions, yet the combination of these actions is

viewed by the ‘user’ as one. The notion of combining

several actions into a single logical unit is central to many

of the properties associated with transactions.”

“Transaction processing in a distributed environment

is complex because the actions that compose a transaction can occur at several sites. Either all these actions should succeed, or none should. Thus, an important

aspect of transaction processing for a distributed system is reaching agreement among sites. The most widely

used solution to this agreement problem is the two-phase commit (2PC) protocol.”
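The excerpt describes two-phase commit only in outline. The Python sketch below is a minimal, illustrative version under stated assumptions; it is not taken from the 1991 article. It assumes an in-memory coordinator and in-memory participants, and the names `Participant`, `can_commit`, and `two_phase_commit` are hypothetical. A production 2PC implementation also needs durable logs, timeouts, and crash recovery, all of which are omitted here.

```python
from dataclasses import dataclass
from enum import Enum


class Vote(Enum):
    COMMIT = "commit"
    ABORT = "abort"


@dataclass
class Participant:
    """Hypothetical site holding one action of a distributed transaction."""
    name: str
    can_commit: bool = True  # assumption: simulates whether the local action can succeed
    state: str = "idle"

    def prepare(self) -> Vote:
        # Phase 1: tentatively perform the local action, then vote.
        if self.can_commit:
            self.state = "prepared"
            return Vote.COMMIT
        self.state = "aborted"
        return Vote.ABORT

    def commit(self) -> None:
        self.state = "committed"

    def abort(self) -> None:
        self.state = "aborted"


def two_phase_commit(participants: list[Participant]) -> bool:
    """Coordinator: commit everywhere only if every site votes COMMIT."""
    # Phase 1 (voting): ask every site to prepare and collect the votes.
    votes = [p.prepare() for p in participants]
    decision = all(v is Vote.COMMIT for v in votes)
    # Phase 2 (completion): send the same global decision to every site,
    # so either all actions take effect or none do.
    for p in participants:
        if decision:
            p.commit()
        else:
            p.abort()
    return decision


if __name__ == "__main__":
    # The bank-transfer example: the debit and the credit live at different sites.
    debit = Participant("bank_a_debit")
    credit = Participant("bank_b_credit", can_commit=False)  # this site cannot complete
    print(two_phase_commit([debit, credit]))  # False
    print(debit.state, credit.state)          # aborted aborted
```

The key design choice is that no site acts on its own vote. A site only commits or aborts after the coordinator has collected every vote and broadcast a single decision. This is what enforces the "all or none" property the excerpt describes.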

INTERDATABASE CONSISTENCY (p. 46). “In most applications, the mutual consistency requirements among

multiple databases are either ignored or the consistency

of data is maintained by the application programs that

perform related updates to all relevant databases.

However, this approach has several disadvantages. First,

it relies on the application programmers to maintain

mutual consistency of data, which is not acceptable if

the programmers have incomplete knowledge of the

integrity constraints to be enforced. … Since integrity

requirements are specified within an application, they

are not written in a declarative way. If we need to identify these requirements, we must extract them from the

code, which is a tedious and error-prone task.”

AUTONOMOUS TRANSACTIONS (pp. 71-72). “The evolution of classic TP [Transaction Processing] to

autonomous TP has just begun. The issues are not yet

clearly drawn, but they undoubtedly affect the way TP

systems are designed and administrated. Transaction

execution is affected as well if users require independent

TP operations during network partitions or communication failures. We believe that asynchronous TP provides a suitable mechanism to support execution

autonomy, because temporary inconsistency can be tolerated and database consistency restored after the failure

is repaired. Divergence control methods must be devised

that give systems the flexibility needed to evolve from

classic TP to autonomous TP.”

GENETIC ALGORITHMS (p. 93). “NovaCast of Sweden

has launched the C Darwin II general-purpose tool for

solving optimization problems. The shell can reproduce

with stepwise, leaping changes and an adaptive selection

process that accumulates small, successive, and favorable variations from one generation to another.

“The program solves product design and planning,

machine scheduling, composition, and functional optimization problems. Users define how they want the solution to be presented and describe the environment in

terms of its conditions, restrictions, and cost factors.

The program evaluates a generation of conceivable solutions in parallel.”

PDFs of the articles and departments of the December

1991 issue of Computer are available through the

Society’s Web site, www.computer.org/computer.

Editor: Neville Holmes; neville.holmes@utas.edu.au


INDUSTRY TRENDS

The Changing World of Outsourcing

Neal Leavitt

Outsourcing, a practice once considered controversial, has become widespread, not only with technology companies but also with the IT departments of firms in other industries.

The volume of tech offshore outsourcing—in which companies in

economically advanced countries

send work to businesses in developing nations—has increased since the

approach became popular during

the economic boom of the mid-1990s. During that time, the nature

of the practice stayed largely the

same.

Now, though, this appears to be

changing.

For example, companies that handle outsourcing are beginning to

consolidate, creating larger providers offering a broader range of

services. At the same time, niche

providers are emerging.

In addition, countries such as

China are beginning to compete

with India, the longtime outsourcing-services leader.

Once, large companies did most

tech outsourcing. Now, smaller and

mid-size companies are beginning to

outsource work. Also, companies once primarily outsourced large projects, such as basic application development or call-center operations. Now, as outsourcing becomes more widespread, businesses are starting to contract out smaller projects, including complex scientific and R&D projects, to more and more providers.

Various technical and marketplace

developments have driven and

enabled these changes. And industry

observers expect more changes in

the long run.

INSIDE OUTSOURCING

Technology-related outsourcing

began in the early 1980s and grew

rapidly in the mid-1990s. The driving forces included the expanding

tech economy, increased pressure on

IT departments to do more with

their resources, the increasing complexity of managing IT and keeping

up with rapidly advancing technologies, and the difficulty in finding IT workers in all skill areas,

noted David Parker, vice president

of IBM Global Technology Services’

Strategic Outsourcing operations.

Since then, companies have outsourced more than just IT functions.

For example, they have used the

approach to make their production,

customer service, and other processes more efficient and less expensive by farming them out to businesses that have the necessary expertise and can perform them at lower cost, Parker noted.

This work includes help desk, data center, and network management operations; database administration; and server management.

Published by the IEEE Computer Society

There are outsourcing providers

in both economically advanced and

developing countries. The latter are

able to offer lower costs because

workers there receive lower wages.

Offshore outsourcing has become

controversial, especially in the US,

where critics say it is a way for

domestic companies to save money

by taking jobs from local workers

and moving them overseas.

Technology has helped change the

face of outsourcing. For example,

improvements in telecommunications

and Internet-based technologies such

as videoconferencing, instant messaging, and Internet telephony make

communication faster and more

widely available for outsourcing

providers and their clients, noted Alex

Golod, vice president of business

development for Intetics, a global

software development company.

Legal issues are also important.

Some businesses in developed

nations are outsourcing work to

overseas branches of domestic companies to have more legal recourse

if problems occur, said Ashish

Gupta, CEO of Evalueserve Business

Research, a market-analysis firm.

Consolidation

Because more companies are

farming out a greater variety of projects, there has been a proliferation

of outsourcing suppliers in recent

years to meet the demand.

Meanwhile, as outsourcing companies have grown, they have

looked for ways to reinvest profits,

expand, and acquire new capabilities, noted Gupta.

For these reasons, outsourcing is

experiencing a number of mergers

and acquisitions.


For instance, the US-based EPAM

Systems became the largest software-engineering-services provider

in Central and Eastern Europe by

acquiring Vested Development, a

Russian software-development-services firm.

India’s Wipro acquired Infocrossing, a US infrastructure-management services provider, for

$600 million. Wipro has since

opened several software-development and IT-services offices in the US.

Among other recent outsourcing

deals, IBM purchased Daksh,

Computer Sciences Corp. bought

Covansys, and Electronic Data

Systems acquired MphasiS.

According to Intetics’ Golod,

consolidation is a way for small

and midsize vendors to survive

and grow while gaining efficiencies in marketing and operations,

and for larger companies to

acquire competitors and firms

with niche skills.

Beyond India

India has been the leader in outsourcing services since the mid-1990s, mostly because it has a large,

educated, English-speaking tech

workforce; low salaries; and a technology sector that has pursued the

work for many years.

Market-research firm Gartner

warns that a shortage of tech workers and rising wages could erode as

much as 45 percent of India’s market share by next year. In addition,

Indian outsourcers can’t handle the

high volume of available projects.

According to Intetics’ Golod, competition is coming largely from places

such as China, the Philippines,

Eastern Europe, Latin America, and

even developed countries like Ireland

and Israel, as discussed in the sidebar “Developing Countries Join the

Outsourcing Marketplace.”

Market-research firm IDC predicts that China will overtake India

by 2011.

Emerging low-wage countries that

might also pull business from India

over the next few years include

Egypt, Malaysia, Pakistan, and

Thailand.

Small-scale and

niche outsourcing

Traditionally, outsourcing has

entailed big companies and large,

long-term projects such as major

application development or the

operations of entire departments.

Bigger companies have been more

willing to pay for outsourcing than

smaller ones, have had more tasks

they could offload, and could provide larger contracts than smaller

businesses.

Now, though, more companies are

competing with large outsourcing

providers. This includes outsourcing

providers targeting smaller businesses and smaller, shorter-term

jobs—including minor testing and

business-analysis projects, either to capture a market niche or to expand their customer base. This work can entail either parts of larger jobs or small, individual projects.

Developing Countries Join the Outsourcing Marketplace

India has been the leading offshore technology-outsourcing supplier for a decade. The country has leveraged several advantages, including low salaries and a big, English-speaking workforce with college degrees in technology fields.

However, there have been growing opportunities for other developing countries to enter the outsourcing-services market. For example, there is more outsourcing work than India can handle. In addition, wages in India are rising, and companies in other countries are actively pursuing and promoting their services.

China

This country attracts outsourcing projects largely in areas such as low-end, PC-based application development, quality-assurance testing, system integration, data processing, and product development. India is even outsourcing work to China.

According to market research firm IDC’s Global Delivery Index, which ranks locations on criteria such as available skills, political risk, and labor costs, Chinese cities have made significant investments in infrastructure, English-language instruction, and Internet-connection availability.

The country has undergone a massive telecommunications expansion as a result of national economic policy. Also, China is producing 400,000 college graduates in technology fields annually, said Kenneth Wong, managing partner at SmithWong Associates, a China-focused US consulting firm.

“Language issues are no longer the major handicap to China-based outsourcing,” noted Wong. “Many IT personnel in China today are US-educated. The Chinese government knows it has a way to go in reaching English-proficiency parity with India, but the signs are encouraging.”

Paul Schmidt, a partner with outsourcing consultancy TPI, added that demand for the country’s outsourcing services is driven largely by multinational corporations looking for access to China’s domestic market and a presence in the rest of the Asia-Pacific region.

Russia

Russia’s principal outsourcing competencies include Internet programming, Web design, and Web-server and Web-database application development.

The country has an educated, experienced labor force, with the world’s third-largest pool of engineers and scientists per capita. English competency is good for mid- to higher-level managers and acceptable for

To remain competitive, the larger

providers are also looking at the

smaller end of the outsourcing market, in part by standardizing their

offerings to make them less expensive, noted IBM’s Parker.

Outsourcing consultancy TPI says

the number of commercial outsourcing contracts of more than $50

million, especially in the US, has

declined.

According to TPI, many Indian

providers are seeking smaller jobs than

in the past and thus are expected to

increase revenue from North American companies by 37 percent during

the next two years, even though the

region is outsourcing less work.

Niche outsourcing providers are

well placed for the smaller contracts—often for more focused projects—that are becoming more

popular, said Peter Allen, TPI partner and managing director of marketing development.

The growth of niche outsourcing

is contributing to multisourcing, in

which companies farm out different

parts of a project to multiple specialty service providers, said Brian

C. Daly, public relations director for

Unisys’ outsourcing business.

“This makes it possible to choose

best-of-breed outsourcing firms to

handle different tasks and to optimize costs,” noted Intetics’ Golod.

Outsourcing new

and more complex tasks

As outsourcers have gained more

skills, companies are beginning to

farm out more difficult technology

tasks. Banks and other businesses,

for instance, are moving well beyond

outsourcing low-level applicationmaintenance work and are increasingly relying on offshore providers

for help with full-system projects.

F

Cost not the only factor

Companies are farming out work

not only to save money but also to

gain long-term access to outsourcing firms’ talent, as well as their

innovative, creative, and advanced

approaches, said Unisys’ Daly.

Many companies find recruiting

to be a tedious and expensive

advantage of time-zone proximity to the US. This

makes Latin America ideal for time-sensitive projects

and work where the outsourcer must communicate

quickly and regularly with the outsourcing company.

Eastern Europe

The Philippines

Countries such as Belarus, Bulgaria, the Czech

Republic, Hungary, Poland, Romania, and Ukraine specialize largely in application development, particularly

for complex scientific projects or commercial products.

The region has a solid educational base, producing

qualified scientists and engineers, explained Golod.

Also, he said, the workers in these companies prefer

complex, challenging projects to simple coding.

In addition, larger clients are interested in sending

work to Eastern Europe because they want to diversify

their outsourcing across geographic regions.

Eastern Europe gets a lot of projects from Western

Europe and the UK, which are in nearby time zones, as

well as the US, he said.

This nation has a large English-speaking population

and is carving out a niche for call-center operations.

Evalueserve Business Research, a market-analysis firm,

reports that favorable factors include a 94 percent literacy rate, a high-quality telecommunications infrastructure, familiarity with Western corporate culture, and

government initiatives such as exemptions from license

fees and export taxes that have stimulated outsourcing

growth. Also, wages are low.

Countries in this region—particularly Argentina,

Brazil, and Mexico—specialize in outsourcing projects

such as application development. They have the

BEMaGS

These include providing online

banking capabilities, customizing

enterprise-resource-planning systems, or conducting statistical and

actuarial projects for insurance

firms.

Outsourcing the modernization of

legacy applications is another area

with significant potential. This

would help, for example, companies

that no longer have the in-house

expertise to work with older applications written in languages such as

Cobol and that don’t want to spend

the time and money necessary to

rewrite them in other languages.

developers who communicate directly with clients,

noted Alex Golod, vice president of business development for Intetics, a global software-development

company.

Latin America

A

Developed countries

Ireland has a favorable IT services infrastructure and

is strong in software development and testing. Israeli

outsourcing providers specialize in commercial software development, particularly security and antivirus

products. Although both nations have higher labor

costs than developing countries, they continue to

attract business because of their well-educated workforces and stable governments.

15

December 2007

Computer

Previous Page | Contents | Zoom in | Zoom out | Front Cover | Search Issue | Next Page

A

BEMaGS

F

Computer

Previous Page | Contents | Zoom in | Zoom out | Front Cover | Search Issue | Next Page

A

BEMaGS

F

INDUSTRY TRENDS

process, with choices often limited

by local manpower resources,

added Rob Enderle, president and

principal analyst with the Enderle

Group, a market-research firm.

Global outsourcing firms have

access to extensive pools of talent

and well-established recruiting procedures.

Industry observers expect offshore outsourcing to continue growing. For example, Forrester Research, a market-analysis firm, estimates that software outsourcing will account for 28 percent of IT budgets in the US and Europe by 2009, up from 20 percent in 2006.

Forrester also predicts that the number of overseas software developers working on projects for firms in developed countries will rise from 360,000 this year to about 1 million by 2009.

Companies will increasingly outsource telecommunications- and Internet-based development because the technologies in these areas are based on international standards and are thus easy for offshore vendors to work on.

Security is a big concern for many companies, given the growing use of mobile technology and the increasing complexity of threats. More companies will thus outsource short-term and ongoing security efforts, as keeping up with the work themselves would require too much time and money.

However, growth may have limits, Golod said. For example, he noted, international political and economic problems could reduce the amount of outsourced work.

According to Enderle, outsourcers might face problems coordinating their work with customers as they

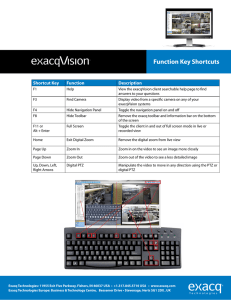

Windows Kernel Source and Curriculum Materials for Academic Teaching and Research.

The Windows® Academic Program from Microsoft® provides the materials you need to integrate Windows kernel technology into the teaching and research of operating systems.

The program includes:

• Windows Research Kernel (WRK): Sources to build and experiment with a fully functional version of the Windows kernel for x86 and x64 platforms, as well as the original design documents for Windows NT.
• Curriculum Resource Kit (CRK): PowerPoint® slides presenting the details of the design and implementation of the Windows kernel, following the ACM/IEEE-CS OS Body of Knowledge, and including labs, exercises, quiz questions, and links to the relevant sources.