Copyright 1996 IEEE. Published in the Proceedings of EUROMICRO '96, September 1996 at Praha, Czech Republic. Personal use of this material is

permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or

redistribution to servers or lists, or to reuse any copyrighted component of this work in other works, must be obtained from the IEEE. Contact: Manager,

Copyrights and Permissions / IEEE Service Center / 445 Hoes Lane / P.O. Box 1331 / Piscataway, NJ 08855-1331, USA. Telephone: + Intl. 732-562-3966.

Experience of Adaptive Replication

in Distributed File Systems

Giacomo Cabri, Antonio Corradi
Dipartimento di Elettronica Informatica e Sistemistica - Università di Bologna
2, Viale Risorgimento - 40136 Bologna - ITALY

Franco Zambonelli
Dipartimento di Scienze dell’Ingegneria - Università di Modena
213/b, Via Campi - 41100 Modena - ITALY

E-mail: {gcabri, acorradi, fzambonelli}@deis.unibo.it

Abstract

Replication is a key strategy for improving locality,

fault tolerance and availability in distributed systems.

The paper focuses on distributed file systems and

presents a system to transparently manage file

replication through a network of workstations sharing

the same distributed file system. The system integrates an

adaptive file replication policy that is capable of

reacting to changes in the patterns of access to the file

system by dynamically creating or deleting replicas. The

paper evaluates the efficiency of the system in several

situations and shows its effectiveness.

1. Introduction

Distributed operating systems are becoming increasingly widespread because of their capacity to provide a uniform view of a set of computing resources, even heterogeneous ones [Dis93]. This allows a user to neglect distribution-related issues, such as the allocation of and the access to remote resources, which are transparently managed by the system itself. In addition, the diffusion of distributed operating systems also stems from other benefits:

• high performance: applications can be distributed across the system, which can be considered as a parallel computer [HooM95];

• fault tolerance: one local failure need not compromise the whole system [Bir85].

Among the resources for which a distributed system can provide a uniform view, the file system is an important one: dispersed users and applications can have access to a unique global file system, transparently distributed over the physical computing resources of the system.

In distributed file systems, a variety of mechanisms can be considered to improve performance and fault tolerance. File migration grants locality of accesses by migrating files to the site from which they are accessed [Hac89, GavL90]. Replication can provide multiple copies of a file so as to grant both locality and better availability [Bal92, Lom93]. However, giving the user transparent access to the file system, without involvement in low-level issues related to allocation, requires automated policies that evaluate whether to apply the above mechanisms.

The paper focuses on these issues and presents a system for dynamic file replication in distributed file systems. The system is based on a distributed implementation that limits the degree of coordination needed among its components. This makes it possible to grant scalability and to minimise the overhead of the policy on applications. The replication policy integrated in the system is based on an adaptive scheme: the replication degree of a file is not statically decided but can be tuned to the current system status, i.e., to the current pattern of access to the file system from the application level.

The presented system has been implemented for a network of heterogeneous workstations on top of standard commercial software, i.e., UNIX™ and NFS™ [Sun90]. This allowed us to explore the possibility of enhancing the performance of already available systems without any specialised hardware or operating system support.

The behaviour of the system has been tested under a variety of traffic situations, from static and quasi-static ones, i.e., with patterns of access to the file system that do not substantially change in time, to very dynamic ones. In both cases the policy is able to increase the throughput of the file system: in the former by granting a very low overhead; in the latter by adapting the replication degree of files so as to minimise the costs associated with replica management.

The paper is organised as follows. Section 2 introduces the general issues connected to the definition and the implementation of a replication policy for distributed file systems. Section 3 describes the implemented system and section 4 evaluates its performance.

2. The Replication Problem

This section presents the replication concept and its

role in the area of distributed operating systems and, in

particular, of distributed file systems. In addition, it

sketches the problems related to the implementation of a

policy for the management of replicated files.

2.1 General concepts

Replication is a mechanism that associates a single logical entity with several physical copies within a system. The replication degree of an entity is the number of replicas of the entity that are currently available in the system.

Replication may achieve many goals:

• high performance: by replicating an entity at the site where it is mostly referenced, one can grant better performance and minimise access time. In addition, replication can improve overall performance by decreasing the communication load imposed on the network [Hac89, Bal92, HooM95].

• greater availability: if different physical copies of an

entity are distributed in the system, a replicated

resource is more available than a single-copy one

[Lad92].

• fault tolerance: a failure that occurs to one physical

replica of an entity does not compromise the access to

the logical entity since other replicas exist in the

system [Bir85, HuaT93].

With regard to the relationship between a logical entity and its physical replicas, two main schemes are possible: active and passive. In an active (or flat) replication scheme all replicas are considered at the same level (and accessed without any preference), thus losing any distinction between the original entity and its copies. A passive (or hierarchical) replication scheme identifies a primary copy and a set (possibly organised in a multilevel hierarchy) of backup replicas, to be used when the primary is not easily available.

While an active replication scheme is more oriented to achieving high performance, by allowing locality of references, a passive replication scheme is more oriented to fault tolerance.

Despite the above described advantages, the presence

of replicated entities within a distributed system

introduces several additional overheads:

• every physical replica of a logical entity consumes system resources. Thus, the higher the replication degree, the more resources need to be used, proportionally;

• coordination is needed among the replicas to

maintain their consistency: if the status of a physical

replica changes, all other replicas of the same logical

entity (or, at least, a quorum of them) must be made

aware of this change in order to maintain the

coherence of the involved entity. This obviously

implies overhead and traffic over the net.

As a consequence of the above introduced costs, it

may even happen that the performance improvements

granted by having replicated entities are outweighed by

the introduced overhead.

As an additional issue, transparency of replication must be achieved: a user should not be involved in low-level details about allocation and replication. A system-defined replication policy must grant an effective exploitation of the replication mechanism without user involvement, i.e., it must automatically decide when and where an entity has to be replicated in the system.

2.2 Replication Policies

A replication policy is the decision strategy module in

charge of managing the replication of logical entities

within a system. In particular, a replication policy has to

decide [Lom93]:

• the replication degree of a given entity, i.e., the

number of physical replicas to be provided at a given

time for a given logical entity of the system;

• the allocation of the replicas within the system, i.e., the nodes of the system on which the replicas must be physically stored.

In addition, a replication policy is in charge of issuing the protocols that grant consistency among the replicas, whenever a change in the state of one of them makes it necessary [Bal92].

Depending on the moment at which decisions are

taken, we can distinguish between static and dynamic

replication policies. In particular:

• a static replication policy decides the replication of an entity at the moment it enters the system, i.e., at its creation. In this case, both the replication degree and the allocation are decided at entity creation time;

• a dynamic replication policy, instead, takes its decisions after the entity has been created, during its life in the system. In this case, both the replication degree and the allocation of the replicas are likely to change over time.

We emphasise that the distinction between static and dynamic replication policies is not always so sharp:

several parameters may influence the decision of a given

policy; some of them may dynamically change while

others may be fixed. For example, it is possible for a

policy to behave statically with regard to the replication

degree and dynamically with regard to the allocation of

the replicas. In this case, a given replica is only allowed

to migrate from one node of the system to another during

its life and not to generate any additional replica or to

delete any existing one.

As a general rule, a static replication policy takes its

decision independently of the current state of the system:

once one decision has been taken it is maintained

permanently, even if the conditions that could have

caused it have substantially changed. A dynamic policy,

instead, bases its decisions on the current state of the

system, by adapting the replication degree and the

allocation of the replicas to the configuration that best

fits the current situation. In the rest of the paper we mainly refer to dynamic replication policies.

With regard to the implementation of a replication policy within a distributed system, two main solutions arise:

• a centralised replication policy, based on a single module, concentrated on one node of the system and in charge of all decisions about replicas for the whole system;

• a distributed replication policy, where multiple decisional modules are distributed in the system, typically one module for each node of the system.

A centralised solution is the simplest to implement: it can come to its decisions on the basis of a global view of the state of the system, thus making them near-optimal. However, a centralised policy is likely to become a bottleneck for large distributed systems and it is less resilient.

A distributed solution, instead, is better suited to large systems since it does not require any centralisation point. However, some form of coordination may be required among the different decisional modules in order to agree on replication decisions. At one extreme, a decision can influence the whole system and require global coordination among all decisional modules. At the other extreme, a decision can require only very limited, local coordination or be taken autonomously by a single decisional module. In general, a solution that requires very limited coordination among the decisional components is to be preferred, since it is likely to produce less overhead on the system than a global one.

2.3 Replication Policies for Distributed File

Systems

When dealing with distributed file systems, file replication can be used to store multiple copies of the same file (or of parts of the same file [HarO95]) on different physical storage media [Hac89].

Depending on the goals to be achieved by file replication, one can choose between passive and active file replication techniques [Dis93]: the former is mainly fault tolerance oriented, the latter can also provide better availability and performance.

A passive file replication technique, widely known as primary backup, identifies a primary copy of the file, to be generally accessed from anywhere in the system. Replicas of the same file are created for fault recovery and are accessed only when the primary copy is not available. Replicas are only periodically updated, which makes this technique simple and minimally intrusive. However, the technique does not achieve any advantage from the performance point of view.

An alternative replication technique, known as caching, is based on an active scheme: all replicas are at the same level and any of them can be accessed. The decision on which replica to access can be based on a locality principle: for example, a local replica of a remote file can be created to grant local availability of the file.

Though more powerful and capable of granting both locality and fault tolerance, caching techniques tend to be more expensive to implement: since the system must handle different copies of the file on different sites, which can be independently modified, the replication policy must grant the consistency of all replicas. As a general rule, read operations on files do not cause any problem and can be performed concurrently. Write operations on a replica, instead, introduce inconsistencies that make it necessary first to grant exclusive access to the file and then to update all other replicas. Different algorithms can be devised and implemented to grant different degrees of consistency at a higher or lower cost: though the analysis of these algorithms is beyond the scope of the paper, we emphasise that the higher the degree of consistency the algorithm grants, the higher its implementation cost.

3. The System for Adaptive File Replication

This section describes the file replication system implemented on a network of UNIX™-based workstations, with the main goals of throughput and fault tolerance. The target environment of the system is a transparent distributed file system [LevS90]: from every site, users see the same directory and file structure, independently of the physical location of every single part of the file system, which could be on different disks.

The presented system is based on an active replication scheme: all replicas of a file are considered at the same level. Caching is exploited to improve file availability: a local replica can be provided on those nodes that intensively access the file.

In addition, replication is handled dynamically, depending on resource usage and respecting the locality principle. The file replication policy is adaptive, i.e., it is not based on a fixed replication scheme: replicas can be created and deleted depending on the pattern of access. At one extreme, the policy can decide to fully replicate the file; at the other, it can decide not to replicate the file at all (section 3.2 presents the criteria on which the policy bases the creation and deletion of a replica). However, to grant fault tolerance, the system provides, similarly to backup techniques, the capacity to store multiple copies of a file independently of whether any client needs to access them locally. Thus, even if only a single process accesses a file, it is still possible to recover from faults on it.

From the user's point of view, replication is transparently managed by the system. A library of primitives, semantically equivalent to the standard ones used in UNIX™, deals with files without exposing any notion of replication. Only one special file creation primitive has been added, which makes it possible to define the replication degree of a file and the initial allocation of its replicas.

3.1 The System Architecture

The implementation of the system is based on the multiple active agents model [Weg95] and aims at distributed management: one agent, called the file server, runs on every node of the system.

All requests to access the file system coming from the application processes are redirected to the local file server. The local server provides access to the local physical replicas, if present; otherwise it redirects the access request to the other file servers. Coordination is needed among file servers in order to provide remote access to files and to issue the consistency protocols.

3.1.1 Structure of the Client

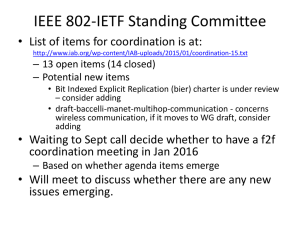

In a more detailed view of the system (see figure 1), the client works as follows: when the user program calls a primitive to handle a replicated file, a “stub” translates the standard UNIX call into a special call to the local server. When the server sends an answer, the same stub translates it into a “program friendly” answer. To limit the duties of the servers, each server is stateless, i.e., it does not keep track of the files in use by clients [LevS90]. Thus, the stub of each client has to keep track of opened files. The state consists of the following items: the name of the file, the kind of access (read-only, write-only, read-and-write), and the current position of the file pointer.
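The per-file state kept by the stub can be pictured as a small record. The following is a minimal sketch in Python, not the library described in the paper: the names `OpenFile`, `Stub` and `send_to_local_server` are hypothetical, and the local server is modelled as a plain callable.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class OpenFile:
    """State the stub keeps for one opened file."""
    name: str          # name of the file
    mode: str          # kind of access: "r", "w" or "rw"
    position: int = 0  # current position of the file pointer


class Stub:
    """Hypothetical client stub: it holds all open-file state,
    so that the server can remain stateless."""

    def __init__(self, send_to_local_server: Callable[[str, str, int, int], bytes]):
        # send_to_local_server(op, name, offset, size) is an assumed transport hook.
        self._send = send_to_local_server
        self._open: Dict[int, OpenFile] = {}
        self._next_fd = 0

    def open(self, name: str, mode: str) -> int:
        fd = self._next_fd
        self._next_fd += 1
        self._open[fd] = OpenFile(name, mode)
        return fd

    def read(self, fd: int, size: int) -> bytes:
        f = self._open[fd]
        if "r" not in f.mode:
            raise PermissionError("file not opened for reading")
        # Each request carries the file name and the offset,
        # so the server does not need to remember anything.
        data = self._send("read", f.name, f.position, size)
        f.position += len(data)
        return data

    def close(self, fd: int) -> None:
        del self._open[fd]
```

Because the name and the offset travel with every request, a server restart between two reads does not affect the client in this sketch.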

The choice of integrating a stub in each client aims at improving efficiency and concurrency, by allowing each process to access the server directly without any kernel involvement. However, this solution incurs a larger memory occupation for each process: information such as the server location, the types of primitives, and the protocol interface between the clients and the servers must be duplicated within each client.

Figure 1. Structure of the client. (Diagram blocks: User Program, System Calls Interface, Stub, Local Server, Replica.)

3.1.2 Structure of the Server

As already stated, the implemented server is stateless and does not keep track of any file opened by clients. Every operation is self-contained, i.e., when the server receives a request, it opens the specified file, performs the operation and then closes it. The major advantage of this solution is that it diminishes the computational load of the server, by distributing the control of files among clients; moreover, this choice avoids situations in which the server cannot reach the client and does not know whether and how to maintain the state of files. The well-known drawback of this policy is the impossibility of implementing locking policies at a level higher than that of single write instructions.
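A self-contained operation of this kind can be sketched as follows. This is an illustrative Python sketch under assumed names (`serve_read`, `serve_write`); it only shows the open, operate, close pattern on a local replica and says nothing about the request format actually used by the implemented server.

```python
def serve_read(path: str, offset: int, size: int) -> bytes:
    """Self-contained read: open the file, seek, read, close."""
    with open(path, "rb") as f:
        f.seek(offset)
        return f.read(size)


def serve_write(path: str, offset: int, data: bytes) -> int:
    """Self-contained write on an existing file: open, seek, write, close."""
    with open(path, "r+b") as f:
        f.seek(offset)
        return f.write(data)
```

No handle survives between two calls, which is why the offset must be supplied by the client on every request.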

With regard to the implementation, the server is

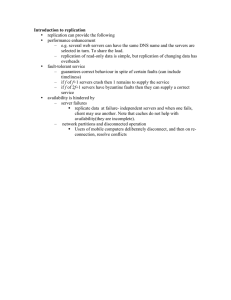

modular and structured in three layers (see figure 2):

• the upper layer (interface layer) defines the interface

to the clients and is in charge of receiving requests

from clients and answering them;

• the intermediate layer (coordination layer) serves the

clients' requests by coordinating with other servers

and by commanding the mechanisms for accessing

local replicas;

• the lower layer (replication policy layer) implements the replication policies by exploiting information on the local replicas.

Whenever a client requests an operation on a file, the coordination layer checks whether it refers to a locally replicated file or not. If a replica is locally present, it can be directly accessed via the local mechanisms, i.e., read and write operations. If no replica is locally present, the request is broadcast (via a broadcast RPC call [BirN84]) to all other servers and an answer is awaited from them. In addition, when a write operation is invoked on a local replica, the coordination layer is in charge of running the integrated consistency protocol before accessing the replica. Since a server does not keep track of which servers maintain replicas of a given file, broadcast messages are used by servers to coordinate with each other and to ensure that every interested server can take part in the consistency protocol. In any case, every server knows how many replicas of the file are present in the system (as explained in section 3.2), which makes it capable of detecting whether all interested servers have correctly participated in the protocol.
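The local-or-broadcast choice made by the coordination layer for a read can be sketched as below. This is a simplified Python illustration: `route_read`, the in-memory `local_replicas` mapping and the `broadcast` callable are hypothetical stand-ins for the local access mechanisms and the broadcast RPC, and taking the first available answer is an assumption of the sketch.

```python
from typing import Callable, Dict, List, Optional


def route_read(name: str,
               local_replicas: Dict[str, bytes],
               broadcast: Callable[[str], List[Optional[bytes]]]) -> bytes:
    """Serve a read locally when a replica exists, otherwise ask the other servers."""
    if name in local_replicas:
        return local_replicas[name]  # local access, no network traffic
    # No local replica: broadcast the request and use the first answer
    # coming from a server that holds a replica (None means "no replica here").
    for answer in broadcast(name):
        if answer is not None:
            return answer
    raise FileNotFoundError(name)
```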

In the current implementation, the integrated consistency protocol is write-through based: when a process needs to write to a file, it should contact all other servers that hold a replica of the same file. When at least half plus one of the contacted servers notify that no other process is currently writing to the same file, the client is allowed to write the file and the change is immediately propagated to all other replicas. In any case, the modular layout of the server and, in particular, of the coordination layer makes it possible to change the integrated consistency protocol easily, without any side effect on other parts of the server.
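The "half plus one" test can be written in a few lines. The following Python sketch is only an illustration of that majority check: the function name `write_allowed` is hypothetical, and the treatment of the case in which no other server holds a replica is an assumption, since the text does not discuss it.

```python
from typing import Iterable


def write_allowed(answers: Iterable[bool]) -> bool:
    """Each answer is True when a contacted server reports no concurrent writer."""
    answers = list(answers)
    if not answers:
        # Assumption: with no other replica holder to ask, the write proceeds.
        return True
    needed = len(answers) // 2 + 1  # "half plus one" of the contacted servers
    return sum(answers) >= needed
```

With three contacted servers two positive answers suffice, while with four contacted servers three are required.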

Figure 2. Structure of the server. (Diagram blocks: Client, Interface Layer, Coordination Layer, Consistency Protocols, Access Mechanism (read, write, ...), Replica, Other Servers, Replication Policies Layer.)

The third layer (replication policy layer) implements

the replication policy for replica management. In

particular, this layer is devoted to deciding whether to

create a new replica or delete an already replicated one.

This decision can be based on several pieces of information the replication policy has access to:

• the number of replicas of a given file present in the

system;

• the number and the type of accesses (i.e., the number

of read and write operations) that have been made by

local clients to a given file, either locally replicated or

not.

Whenever a decision is taken, the replication mechanism is started: it is realised via standard access mechanisms, such as create and unlink, and by transferring data with the help of the coordination layer.

3.2 The Adaptive Replication Policy

The implemented replication policy has been

designed with the main goal of locality. In particular:

• a replication policy module is present on each node of the system and is made responsible for local replicas only, without the capability of influencing the state of the replicas on other nodes;

• at every node, decisions are based on local

information only (i.e., the number of locally requested

read and write operations).

A great advantage of the adopted local approach is that it avoids the need for expensive coordination protocols among the policy modules of different nodes. In addition, locality grants more robustness to the system: a failure at a single site compromises only the data of that site and does not interfere with the activities of other policy modules.

A drawback of the local replication policy is that a globally optimal configuration may be unreachable, because of the lack of a global view. However, the advantages that a local policy provides in terms of robustness and low overhead outweigh the advantage that would have come from a globally coordinated implementation, which is likely to be fault-prone and expensive.

The implemented replication policy is adaptive, i.e.,

it dynamically adapts the replication degree of files and

the allocation of file replicas to the current state of the

accesses. At each local site, the policy periodically

evaluates the local statistical data and decides whether

the current condition makes it convenient to remove the

local replica, or to create one local replica of a remotely

accessed file. When a server decides to change the current situation, it must notify all other servers of the change so that they can update the current number of replicas of the file concerned. The decisions about the new state of replicas are based on the following criteria.

Let us consider a system with N hosts, where the cost of a broadcast RPC call is b and the cost of a single answer is a.

Let F be a file with a current replication degree of n, and let r and w be the numbers of read and write operations, respectively, recorded in the local statistical information for the file.

If a replica is local to a node, the total cost of accessing the file through the local replica is:

$$T_1 = 0 \cdot r + (b + (n-1)a)\,w$$

In fact, read operations do not cause any traffic on the network, while each write operation implies a broadcast call and n-1 answers from the other hosts that own a replica of the file.

If the replica were removed from the host, the cost of accessing the file would become:

$$T_2 = (b + (n-1)a)\,r + (b + (n-1)a)\,w$$

In fact, both read and write operations issued from the host imply a broadcast request and the reception of n-1 answers from the hosts in the system that hold a local replica.

Then, the decision to remove is convenient if:

$$T_2 < T_1$$

By substituting:

$$(b + (n-1)a)\,r + (b + (n-1)a)\,w < (b + (n-1)a)\,w$$

and so:

$$\frac{r}{w} < \frac{a}{b + (n-1)a}$$

In the case of a broadcast network, we can assume b = a and obtain the following simplified formula:

$$\frac{r}{w} < \frac{1}{n} \qquad \text{(i)}$$

The expression (i) represents the condition under which a local replica can be deleted (except for the case in which n is the minimum number of replicas to be granted for the given file).
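As a purely illustrative check of condition (i), with numbers that are assumptions and not measurements from the paper, take a file with n = 4 replicas on a broadcast network (b = a), so that the threshold is 1/n = 0.25, and suppose that n is above the minimum replication degree:

$$\frac{r}{w} = \frac{20}{100} = 0.20 < 0.25 \quad\Rightarrow\quad \text{the local replica can be deleted}$$

$$\frac{r}{w} = \frac{30}{100} = 0.30 > 0.25 \quad\Rightarrow\quad \text{the local replica is kept}$$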

Let us consider the case in which a host does not hold a replica of a file with a replication degree of n-1. The situation is complementary to the previous one. Since there is no local replica of the file, the cost of accessing it is:

$$T_1 = (b + (n-1)a)\,r + (b + (n-1)a)\,w$$

while the cost of accessing the file if a local replica were present would be:

$$T_2 = 0 \cdot r + (b + na)\,w$$

In this case the server can take advantage of creating a local replica of the file if:

$$T_2 < T_1$$

By substituting:

$$(b + na)\,w < (b + (n-1)a)\,r + (b + (n-1)a)\,w$$

and then:

$$\frac{r}{w} > \frac{a}{b + (n-1)a}$$

Again, in the case of a broadcast network, we can use the reduced formula:

$$\frac{r}{w} > \frac{1}{n} \qquad \text{(ii)}$$

The expression (ii) represents the condition under which a local replica can be created for a file with a replication degree of n-1 (except for the case in which n-1 is the maximum number of replicas allowed for the given file).

The above policy can show unstable behaviour: whenever the r/w ratio is close to 1/n, any dynamic variation of its value can cause a replica to be continuously created and deleted, leading to system thrashing. A corrective coefficient K, varying from 0 to 1, can be introduced to tune the inertia of the system and to smooth temporary instability. K represents the weight to be given to previously collected statistical information in the decisions of the policy. In particular, the statistical data the policy takes into account are:

Statistics(r,w) = NewlyCollected + K(Statistics)

If K is zero, only the statistics collected during the last period are considered in the policy, without any smoothing factor. The more the coefficient grows, the more important old statistics are in the decisions and the less influential temporary variations are. Old data become less and less important over time because K is lower than 1.
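The effect of K can be seen with a short numerical sketch. The Python function below applies the update rule given above to a single counter; the name `smooth` and the sample values are hypothetical and serve only to illustrate the decay of old data.

```python
def smooth(old: float, newly_collected: float, k: float) -> float:
    """Statistics = NewlyCollected + K * Statistics, with 0 <= K < 1."""
    if not 0.0 <= k < 1.0:
        raise ValueError("K must satisfy 0 <= K < 1")
    return newly_collected + k * old


# Illustrative run with K = 0.5: a burst of 10 reads in the first period,
# followed by two idle periods.
reads = 0.0
history = []
for collected in (10, 0, 0):
    reads = smooth(reads, collected, 0.5)
    history.append(reads)
```

In this run the burst still contributes half of its value after one idle period and a quarter after two, which is the inertia that the coefficient is meant to provide.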

To summarise, the algorithm implemented by the

replication policy on each node is reported in figure 3.

do
    sleep (interval time)
    Statistics(r,w) = NewlyCollected + K(Statistics)
    for each file do
        if replica is local then begin
            if (r/w < a/(b + (n−1)a) and n > min_rep_deg) then begin
                remove local replica;
                advise all sites;
            end;
        end
        else begin
            if (r/w > a/(b + na) and n < max_rep_deg) then begin
                create a local replica;
                advise all sites;
            end;
        end;
while true

Figure 3. Pseudo-code of the replication

policy.
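The decision step of figure 3 can also be expressed as a small runnable sketch. The Python code below is an illustration, not the implemented policy module: the names `FileInfo`, `decide` and `policy_pass` are hypothetical, the periodic sleep, the statistics update and the notification of the other sites are left out, and the ratios are compared by cross-multiplication (an assumption of this sketch, made to avoid dividing by zero when no write has been recorded).

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class FileInfo:
    r: float     # smoothed count of local read operations
    w: float     # smoothed count of local write operations
    n: int       # current replication degree of the file
    local: bool  # True if this node holds a replica


def decide(f: FileInfo, a: float, b: float,
           min_rep_deg: int, max_rep_deg: int) -> str:
    """Return "remove", "create" or "keep" for one file, following figure 3."""
    if f.local:
        # r/w < a / (b + (n-1)a)  and  n > min_rep_deg
        if f.r * (b + (f.n - 1) * a) < a * f.w and f.n > min_rep_deg:
            return "remove"
    else:
        # r/w > a / (b + n*a)  and  n < max_rep_deg
        if f.r * (b + f.n * a) > a * f.w and f.n < max_rep_deg:
            return "create"
    return "keep"


def policy_pass(files: Dict[str, FileInfo], a: float, b: float,
                min_rep_deg: int, max_rep_deg: int) -> Dict[str, str]:
    """One iteration of the periodic loop: a decision for every known file."""
    return {name: decide(f, a, b, min_rep_deg, max_rep_deg)
            for name, f in files.items()}
```

On a broadcast network one would call `policy_pass` with a = b, in which case the two tests reduce to comparing r/w with 1/n and 1/(n+1) for the current replication degree n.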

4. Evaluation

The presented replication system has been implemented on a network of Sun and HP workstations connected by an Ethernet network and sharing a file system via NFS™ [Sun90]. Because of the broadcast-based architecture of the network, the simplified formulas can be adopted by the policy to decide the presence of the replicas (see section 3.2).

To test the efficiency of the system, we performed repeatable tests under a variety of conditions, by varying both the patterns of access to the files by the clients and the internal parameters of the policy. The tests performed with static patterns of access to the files make it possible to evaluate the overhead of the policy. The tests with dynamic patterns of access to the files make it possible to evaluate the capacity of the system to adapt to a changing situation, depending on the inertia produced by the corrective coefficient.

4.1 Static Evaluation

When the patterns of access to files do not change substantially over time, the distribution of the replicas in the system is stable after an initial transient, and the policy does not move replicas. As a consequence, the decision activities periodically performed by the policy module provide no further benefit and only add overhead to the system.

This overhead has been measured by comparing the throughput of the system with the policy active and with the policy inhibited (including the inhibition of the statistics collection). Figure 4 reports the total number of operations that one host can perform on average (in a system of 4 hosts) when the policy is active, compared with the case in which the policy activities have been stopped. The X-axis reports different values of the interval of periodicity of the policy. As can be seen, the overhead is limited: when the interval is 30 seconds, only 2% fewer operations are performed than when the policy is not running.
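For clarity, the overhead figure quoted above is a relative difference between two operation counts. The small sketch below shows the arithmetic; the function name and the two counts are hypothetical and were chosen only to reproduce a 2% difference, they are not measured values.

```python
def relative_overhead(ops_without_policy, ops_with_policy):
    """Fraction of operations lost when the policy is active."""
    if ops_without_policy <= 0:
        raise ValueError("ops_without_policy must be positive")
    return (ops_without_policy - ops_with_policy) / ops_without_policy


# Hypothetical counts, not taken from figure 4.
overhead = relative_overhead(10_000, 9_800)
print(f"{overhead:.1%}")  # 2.0%
```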

The same tests have been performed by varying the number of hosts involved. The main result is that the average overhead imposed by the policy on one host does not depend on the global system size, which makes the policy scalable.

Figure 4. Number of operations performed on one host with and without policy in static situations.

4.2 Dynamic Evaluation

In dynamic situations, the patterns of access to the files in the system change over time. This dynamicity can show up as a change in the r/w ratio of the operations that clients request on files from the nodes of the system. Several tests have been performed by varying the r/w ratio at different frequencies.

As stated in the previous section, the policy tends to remove a replica from a node on which it is intensively accessed with a high number of write operations: this avoids a large number of executions of the consistency protocol issued from that node. Conversely, a file intensively accessed for reading from a node is likely to be replicated locally on that node: owning a local replica used for reading does not significantly increase the traffic due to the consistency protocols. Starting from these considerations, it is important to tune the internal parameters of the algorithm so that it is neither too inertial in reacting to a changed situation nor so prompt that it leads to unstable behaviour.

A first consideration concerns the strict interrelation between the interval of periodicity of the policy and the K coefficient. Since the policy updates the statistics once every interval, diminishing either the interval of periodicity or the K coefficient should produce the same effect on the system inertia.
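One rough way to see this interrelation is to estimate for how long an old sample keeps influencing the statistics. Under the update rule above, a sample that is j periods old is weighted by K^j, so its influence in seconds is the number of periods needed for K^j to become negligible, multiplied by the interval. The sketch below computes this; the function names and the 5% cut-off (eps) are arbitrary assumptions of the sketch, not parameters of the system.

```python
def periods_until_negligible(k, eps=0.05):
    """Smallest j such that k**j < eps, i.e. the old sample weighs under eps."""
    if not 0 <= k < 1:
        raise ValueError("k must satisfy 0 <= k < 1")
    periods, weight = 0, 1.0
    while weight >= eps:
        weight *= k
        periods += 1
    return periods


def memory_horizon_seconds(k, interval_seconds, eps=0.05):
    """Time after which a sample contributes less than eps of its value."""
    return periods_until_negligible(k, eps) * interval_seconds


print(memory_horizon_seconds(0.5, 60))  # 300
print(memory_horizon_seconds(0.5, 30))  # 150
print(memory_horizon_seconds(0.0, 60))  # 60
```

Halving the interval or lowering K both shorten this horizon, which is the sense in which the two parameters act on the same inertia.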

When the corrective coefficient K is high or the interval between two control activities is long, the system tends to present high inertia, i.e., it tends to delay any reaction to a changed situation. An example of this situation is shown in figures 5 and 6. Figure 5 reports the


temporal diagram of the r/w ratio in the accesses to a given file on 3 (out of 5) hosts of the system. Figure 6 reports the corresponding temporal diagram of the presence of replicas on these nodes when the policy is acting: the coefficient K is 0.5 and the interval of periodicity is 60 seconds.

On host A, a local replica starts to be intensively accessed for reading (r/w=10) at time 0. In this case the algorithm does not remove the replica. After three minutes the local pattern of access to the replica changes and it becomes intensively accessed for writing (r/w=0.1). In this case, the algorithm should locally decide to remove the local replica. However, as can be seen from figure 6, this decision is taken only 2 minutes after the situation has changed.

An even worse situation occurs on host B. In this case, a local replica starts, at time 0, to be intensively accessed for writing. However, the policy decides to remove it only after three minutes, when the replica has already begun to be accessed mainly for reading.

This is easily explained by:

• the quite high corrective factor and, therefore, the great influence of past operations on the decisions of the policy;

• the interval between two controls, long enough to block any prompt reaction to changes in the load.
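The combined effect of the two factors listed above can be illustrated with a toy simulation. It is only a sketch under stated assumptions: the per-period operation counts (100 and 10), the warm-up of three periods, the removal threshold of 0.5 and the function name periods_to_react are all hypothetical, and the sketch does not attempt to reproduce the measured times of figure 6.

```python
def periods_to_react(k, threshold, warmup_periods=3, max_periods=1000):
    """Count the periods needed to decide a removal after a pattern change.

    The file is first read-heavy (100 reads, 10 writes per period) and then
    becomes write-heavy (10 reads, 100 writes per period). Returns the number
    of write-heavy periods elapsed when the smoothed r/w ratio first falls
    below `threshold`, or None if that never happens within max_periods.
    """
    r_s = w_s = 0.0
    for _ in range(warmup_periods):
        r_s = 100 + k * r_s
        w_s = 10 + k * w_s
    for period in range(1, max_periods + 1):
        r_s = 10 + k * r_s
        w_s = 100 + k * w_s
        if r_s < threshold * w_s:  # same test as r/w < threshold
            return period
    return None


for k in (0.0, 0.5, 0.9):
    periods = periods_to_react(k, threshold=0.5)
    # The reaction delay in seconds also scales with the interval.
    print(k, periods, periods * 30, periods * 60)
```

In this toy setting the removal is decided after 1, 2 and 5 periods for K equal to 0, 0.5 and 0.9 respectively, so both a higher K and a longer interval lengthen the delay in seconds.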

Figure 5. Temporal diagram of the r/w ratio on hosts A, B and C.

[Timeline for hosts A, B and C; x-axis: time (minutes), from 0 to 10; states: local replica present, local replica absent]

Figure 6. Temporal diagram of presence of replicas with K=0.5 and interval of 30 seconds.

[Timeline for hosts A, B and C; x-axis: time (minutes), from 0 to 10; states: local replica present, local replica absent]

Figure 7. Temporal course of the presence of replicas with K=0 and interval of 30 seconds.

If either the coefficient K or the interval diminishes, the system becomes more reactive and can adapt the allocation of replicas to the current load faster. This situation is shown in figure 7, which refers again to the patterns of access reported in figure 5 but shows the temporal diagram of the presence of replicas when K is 0. In this case, the replica on host A, for example, is removed only one minute after the r/w ratio has changed.

A synthesis of all the tests performed is shown in figure 8. This figure points out that too high an inertia can lead to a worse throughput than when the replication policy is not activated. The throughput can be increased by diminishing either the factor K or the interval of periodicity. However, the figure shows that diminishing the interval of periodicity too much is not effective: increasing the frequency at


which the policy is activated increases its overhead on the system and, ultimately, reduces the throughput.

[Plot; y-axis: Number of Operations, from 0 to 12000; x-axis: Corrective Coefficient (K), from 0 to 1; series: intervals of 10 s, 30 s and 40 s, and without policy]

Figure 8. Throughput for different values of K and for different intervals of periodicity (10, 30 and 40 seconds).

[Plot; y-axis: Number of Operations; x-axis: Corrective Coefficient (K); series: intervals of 10 s, 30 s and 40 s, and without policy]

Figure 9. Throughput for different values of K and for different intervals of periodicity in case of highly dynamic patterns of access.

Ensuring promptness in the algorithm is an important requirement in dynamic systems. However, it is equally important to ensure the stability of the algorithm: it should not waste a lot of system resources without reaching a better situation.

Further tests have shown that when the patterns of access become more dynamic and less regular (changing every few seconds or less), too little inertia in the algorithm may cause unstable behaviour. The situation reported in figure 8 is then reversed, leading to figure 9.

If the inertia of the system is low, the overall throughput of the system worsens because of the high number of replica movements and because the interval of validity of a decision is short (the information on which a decision is based is likely to be obsolete).

If the inertia of the system is high, instead, the throughput of the system can be increased. This can be achieved by increasing either K or the interval of periodicity.
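The instability caused by low inertia can be illustrated with another toy simulation that counts replica movements when the pattern of access flips at every period. As before, this is a sketch under assumptions: the operation counts, the single threshold of 0.5 used for both creation and removal, and the function name count_replica_movements are hypothetical simplifications, and the sketch does not model the cost of a movement or the consistency traffic.

```python
def count_replica_movements(k, threshold, periods):
    """Count creations and removals of the local replica of one file.

    The pattern alternates at every period between read-heavy
    (100 reads, 10 writes) and write-heavy (10 reads, 100 writes).
    """
    r_s = w_s = 0.0
    has_replica = False
    movements = 0
    for period in range(periods):
        new_r, new_w = (100, 10) if period % 2 == 0 else (10, 100)
        r_s = new_r + k * r_s
        w_s = new_w + k * w_s
        if has_replica and r_s < threshold * w_s:
            has_replica = False  # remove the local replica
            movements += 1
        elif not has_replica and r_s > threshold * w_s:
            has_replica = True   # create a local replica
            movements += 1
    return movements


print(count_replica_movements(0.0, threshold=0.5, periods=10))  # 10
print(count_replica_movements(0.9, threshold=0.5, periods=10))  # 1
```

With K=0 the replica is moved at every period, whereas with K=0.9 the smoothed ratio stays above the threshold and the replica is created once and then kept.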

As a final remark, the dynamic tests confirmed the locality principle upon which the system is based: only by respecting it can one bound the scope of decisions.

With regard to figure 5, one can change the r/w ratio of the accesses to a given file on one host, say B, while leaving those of hosts A and C unchanged. Figure 8 reports the temporal diagram of the presence of the replicas of the specified file on hosts A, B and C. A comparison with figure 6 shows that the presence of replicas on hosts A and C does not change and that the change in B of the pattern of access to the local replica is locally confined. Global effects are limited and are caused only by the fact that the algorithm uses the total number of replicas in the system to evaluate the appropriate decision at local sites.

The orthogonality shown by this test is fundamental to the validity of a local algorithm. In fact, if the behaviour of one node can be kept largely independent of the other nodes, every site can make good choices on the basis of local data alone. The property of locality allows the use of more efficient protocols with less coordination.


[Timeline for hosts A, B and C; x-axis: time (minutes), from 0 to 10]

Figure 8. Temporal diagram of the presence of replicas with different values of load on host B.

5. Conclusions

The paper presents a system for file replication in distributed file systems, implemented on top of commercial products.

The replication policy integrated within the system is distributed and local: it can take near-optimal decisions even though it uses only local data, and it can minimise its intrusion on the system. In addition, the policy is adaptive and can vary the replication degree of files depending on the patterns of access to them.

Experimental results show that the implemented system can permit a significant performance improvement in the accesses to a distributed file system, even without any specialised hardware or operating system support.

A problem of the implemented system is that the replication policy requires tuning of its internal parameters to achieve efficiency: on the one hand, when the patterns of access to the file system are highly dynamic, it is convenient to increase the inertia of the policy to avoid thrashing; on the other hand, when the patterns of access change slowly, too much inertia can make the system slow to react.

Future work will deal with the integration in the system of a replication policy able to automatically adapt its internal parameters to the dynamicity of the patterns of access to the file system.

6. References

[Bal92] H. E. Bal et al., “Replication Techniques for Speeding Up Parallel Applications on Distributed Systems”, Concurrency: Practice and Experience, Vol. 5, No. 5, Aug. 1992.

[BirN84] A. D. Birrell, B. J. Nelson, “Implementing Remote Procedure Calls”, ACM Transactions on Computer Systems, Vol. 2, No. 2, Feb. 1984.

[Bir85] A. Birman, “Replication and Fault-Tolerance in the ISIS System”, Proceedings of the 10th ACM Symposium on Operating Systems Principles, Dec. 1985.

[Fra95] M. C. Franky, “DGDBM: Programming Support for Distributed Transactions over Replicated Files”, ACM Operating Systems Review, Vol. , No., 1995.

[GavL90] B. Gavish, O. R. Liu Sheng, “Dynamic File Migration in Distributed Computer Systems”, Communications of the ACM, Vol. 33, No. 2, Feb. 1990.

[Hac89] A. Hac, “A Distributed Algorithm for Performance Improvement through File Replication, File Migration and Process Migration”, IEEE Transactions on Software Engineering, Vol. 15, No. 11, Nov. 1989.

[HarO95] J. H. Hartman, J. K. Ousterhout, “The Zebra Striped Network File System”, ACM Transactions on Computer Systems, Vol. 13, No. 3, Aug. 1995.

[HooM95] P. Hoogerbrugge, R. Mirchandeney, “Experiences with Networked Parallel Computing”, Concurrency: Practice and Experience, Vol. 7, No. 1, Feb. 1995.

[HuaT93] Y. Huang, S. K. Tripathi, “Resource Allocation for Primary-Site Fault-Tolerant Systems”, IEEE Transactions on Software Engineering, Vol. 19, No. 2, Feb. 1993.

[LevS90] E. Levy, A. Silberschatz, “Distributed File Systems: Concepts and Examples”, ACM Computing Surveys, Vol. 22, No. 4, Dec. 1990.

[Lom93] M. E. S. Loomis, “Managing Replicated Objects”, The Journal of Object-Oriented Programming, Vol. 6, No. 5, Sept. 1993.

[Lad92] R. Ladin et al., “Providing Availability Using Lazy Replication”, ACM Transactions on Computer Systems, Vol. 10, No. 4, April 1992.

[Dis93] “Distributed Systems”, Editor D. Mullender, Addison Wesley, 1993.

[Sun90] Sun Microsystems, Network Programming Guide, 1990.

[Weg95] P. Wegner, “Tutorial Notes: Models and Paradigms of Interaction”, Technical Report No. CS-95-11, Brown University, Providence (RI), Sept. 1995.