High Precision Galaxy Cluster Mass Estimation

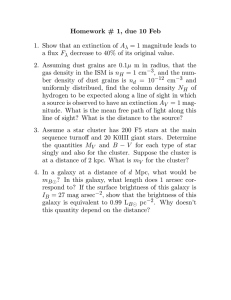

advertisement