- ASU Digital Repository

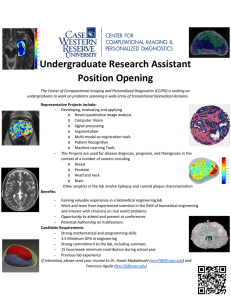
