Lecture 2 The Capacity of Wireless Channels

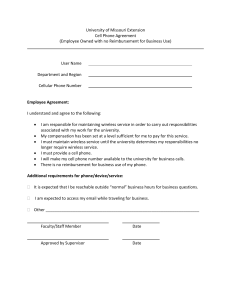


MIMO Communication Systems

Lecture 2

The Capacity of Wireless Channels

Prof. Chun-Hung Liu

Dept. of Electrical and Computer Engineering

National Chiao Tung University

Fall 2015

2015/10/11

Lecture2:CapacityofWirelessChannels

1

Outline

• Capacity of SISO Channels (Chapter 4 in Goldsmith’s Book)

• Capacity in AWGN

• Capacity of Flat-Fading Channels

• Channel and System Model

• Channel Distribution Information (CDI) Known

• Channel Side Information at Receiver

• Channel Side Information at Transmitter and Receiver

• Capacity of MIMO Channels (Chapter 10.1–10.3 in Goldsmith’s Book)


Introduction to Shannon Channel Capacity

• Channel capacity was pioneered by Claude Shannon in the late 1940s, using a mathematical theory of communication based on the notion of mutual information between the input and output of a channel.

• C. E. Shannon, “A Mathematical Theory of Communication,” Bell System Technical Journal, vol. 27, pp. 379–423, 623–656, July, October 1948.

• Proposed entropy as a measure of information.

• Showed that digital communication is possible.

• Derived the channel capacity formula.

• Showed that reliable information transmission is always possible if the transmission rate does not exceed the channel capacity.

Claude Shannon (1916–2001) in 1948 (32 years old)


Introduction to Shannon Channel Capacity

The fundamental problem of communication is that of reproducing at one

point, either exactly or approximately, a message selected at another point.

~ Claude Shannon

Channel Capacity: $C = B \log_2(1 + \mathrm{SNR})$


Introduction to Shannon Channel Capacity

• Consider a discrete-time additive white Gaussian noise (AWGN) channel with channel input/output relationship $y[i] = x[i] + n[i]$, where $x[i]$ is the channel input at time $i$, $y[i]$ is the corresponding channel output, and $n[i]$ is a white Gaussian noise random process.

• Assume a channel bandwidth $B$ and transmit power $P$.

• The channel SNR, the power in $x[i]$ divided by the power in $n[i]$, is constant and given by $\gamma = P/(N_0 B)$, where $N_0/2$ is the power spectral density of the noise.

• The capacity of this channel is given by Shannon’s well-known formula
$$C = B \log_2(1 + \gamma),$$
where the capacity units are bits/second (bps).

• Shannon’s coding theorem proves that a code exists that achieves data rates arbitrarily close to capacity with arbitrarily small probability of bit error.
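As a quick numerical illustration of the formula $C = B \log_2(1 + P/(N_0 B))$, here is a minimal Python sketch. The function name `awgn_capacity_bps` and the link parameters (1 mW, $N_0 = 10^{-9}$ W/Hz, 30 kHz) are hypothetical values chosen for illustration, not taken from the lecture.

```python
import math


def awgn_capacity_bps(power_w: float, n0_w_per_hz: float, bandwidth_hz: float) -> float:
    """Shannon capacity C = B * log2(1 + P / (N0 * B)) in bits per second."""
    snr = power_w / (n0_w_per_hz * bandwidth_hz)  # noise power in the band is N0 * B
    return bandwidth_hz * math.log2(1.0 + snr)


# Hypothetical link parameters (assumed for illustration only).
capacity = awgn_capacity_bps(power_w=1e-3, n0_w_per_hz=1e-9, bandwidth_hz=30e3)
print(f"capacity = {capacity / 1e3:.1f} kbps")
```

Because the SNR sits inside the logarithm, doubling the power adds at most $B$ bits/s at high SNR, whereas the bandwidth enters both as a prefactor and inside the SNR.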


Introduction to Shannon Channel Capacity

• Shannon proved that channel capacity equals the mutual information of the channel maximized over all possible input distributions:
$$C = \max_{p(x)} I(X;Y) = \max_{p(x)} \sum_{x,y} p(x,y) \log\left(\frac{p(x,y)}{p(x)\,p(y)}\right). \quad (4.3)$$

• For the AWGN channel, the maximizing input distribution is Gaussian, which results in the channel capacity $C = B \log_2(1 + \gamma)$.

• At the time that Shannon developed his theory of information, data rates over standard telephone lines were on the order of 100 bps. Thus, it was believed that Shannon capacity, which predicted speeds of roughly 30 Kbps over the same telephone lines, was not a very useful bound for real systems.

• However, breakthroughs in hardware, modulation, and coding techniques have brought commercial modems of today very close to the speeds predicted by Shannon in the 1950s.


I. Capacity of SISO Channels


Mutual Information

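• The converse theorem shows that any code with rate $R > C$ has a probability of error bounded away from zero.

• For a memoryless time-invariant channel with random input $x$ and random output $y$, the channel’s mutual information is defined as
$$I(X;Y) = \sum_{x \in \mathcal{X},\, y \in \mathcal{Y}} p(x,y) \log\left(\frac{p(x,y)}{p(x)\,p(y)}\right),$$
where the sum is taken over all possible input and output pairs $x \in \mathcal{X}$ and $y \in \mathcal{Y}$, for $\mathcal{X}$ and $\mathcal{Y}$ the input and output alphabets.

• Mutual information can also be written in terms of the entropy in the channel output $y$ and conditional output $y|x$ as $I(X;Y) = H(Y) - H(Y|X)$, where $H(Y) = -\sum_{y} p(y) \log p(y)$ and $H(Y|X) = -\sum_{x,y} p(x,y) \log p(y|x)$.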


The AWGN Channel

• The most important continuous alphabet channel is the Gaussian channel depicted in the following figure. This is a time-discrete channel with output $Y_i$ at time $i$, where $Y_i$ is the sum of the input $X_i$ and the noise $Z_i$.

• The noise $Z_i$ is drawn i.i.d. from a Gaussian distribution with variance $N$. Thus,
$$Y_i = X_i + Z_i, \qquad Z_i \sim \mathcal{N}(0, N).$$

• The noise $Z_i$ is assumed to be independent of the signal $X_i$.

• If the noise variance is zero or the input is unconstrained, the capacity of the channel is infinite.

Figure: A Gaussian Channel


The AWGN Channel

• Assume an average power constraint. For any codeword $(x_1, x_2, \ldots, x_n)$ transmitted over the channel, we require that
$$\frac{1}{n} \sum_{i=1}^{n} x_i^2 \le P.$$

• Assume that we want to send 1 bit over the channel in one use of the channel.

• Given the power constraint, the best that we can do is to send one of two levels, $+\sqrt{P}$ or $-\sqrt{P}$. The receiver looks at the corresponding $Y$ received and tries to decide which of the two levels was sent.

• Assuming that both levels are equally likely (this would be the case if we wish to send exactly 1 bit of information), the optimum decoding rule is to decide that $+\sqrt{P}$ was sent if $Y > 0$ and decide $-\sqrt{P}$ was sent if $Y < 0$.


Probability of Error in AWGN Channels

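• The probability of error with such a decoding scheme is
$$P_e = \tfrac{1}{2} \Pr\left[Y < 0 \mid X = +\sqrt{P}\right] + \tfrac{1}{2} \Pr\left[Y > 0 \mid X = -\sqrt{P}\right]$$
$$= \tfrac{1}{2} \Pr\left[Z < -\sqrt{P} \mid X = +\sqrt{P}\right] + \tfrac{1}{2} \Pr\left[Z > \sqrt{P} \mid X = -\sqrt{P}\right]$$
$$= \Pr\left[Z > \sqrt{P}\right] = 1 - \Phi\left(\sqrt{P/N}\right),$$
where $\Phi(x)$ is the cumulative normal function
$$\Phi(x) = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}}\, e^{-t^2/2}\, dt = 1 - Q(x).$$

• Using such a scheme, we have converted the Gaussian channel into a discrete binary symmetric channel with crossover probability $P_e$.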
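The crossover probability $P_e = Q(\sqrt{P/N})$ can be evaluated with the complementary error function, using the identity $Q(x) = \tfrac{1}{2}\,\mathrm{erfc}(x/\sqrt{2})$. The sketch below also evaluates the standard binary symmetric channel capacity $1 - H_b(P_e)$, which is not stated on the slide and is added here only for comparison; the function names and the choice $P/N = 1$ are illustrative assumptions.

```python
import math


def q_function(x: float) -> float:
    """Gaussian tail probability Q(x) = 1 - Phi(x), via erfc."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def binary_entropy(p: float) -> float:
    """Binary entropy H_b(p) in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def bsc_from_gaussian(power: float, noise_var: float):
    """Crossover probability and BSC capacity for two-level signalling at +/- sqrt(P)."""
    pe = q_function(math.sqrt(power / noise_var))
    return pe, 1.0 - binary_entropy(pe)


# Assumed example: P / N = 1 (0 dB).
pe, bsc_capacity = bsc_from_gaussian(power=1.0, noise_var=1.0)
gaussian_capacity = 0.5 * math.log2(1.0 + 1.0)  # bits per channel use
print(pe, bsc_capacity, gaussian_capacity)
```

The hard-decision binary scheme gives up part of the Gaussian channel capacity, which is one way to see why quantizing the output to one bit is suboptimal.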


Differential Entropy

• Let $X$ now be a continuous r.v. with cumulative distribution $F(x) = \Pr[X \le x]$, and let $f(x) = \frac{d}{dx} F(x)$ be the density function.

• Let $S = \{x : f(x) > 0\}$ be the support set. Then

• Definition of differential entropy $h(X)$:
$$h(X) = -\int_S f(x) \log f(x)\, dx.$$
Since we integrate over only the support set, there are no worries about $\log 0$.


Differential Entropy of Gaussian Distribution

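• If we have $X \sim \mathcal{N}(0, \sigma^2)$, with density $\phi(x) = \frac{1}{\sqrt{2\pi\sigma^2}}\, e^{-x^2/(2\sigma^2)}$:

• Let’s compute this in nats.
$$h(X) = -\int \phi(x) \ln \phi(x)\, dx = \frac{E[X^2]}{2\sigma^2} + \frac{1}{2} \ln(2\pi\sigma^2) = \frac{1}{2} + \frac{1}{2} \ln(2\pi\sigma^2) = \frac{1}{2} \ln(2\pi e \sigma^2) \text{ nats} = \frac{1}{2} \log_2(2\pi e \sigma^2) \text{ bits}.$$

• Note: only a function of the variance $\sigma^2$, not the mean. Why?

• So entropy of a Gaussian is monotonically related to the variance.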


Gaussian Distribution Maximizes Differential Entropy

• Definition: The relative entropy (or Kullback–Leibler distance) $D(g\|\phi)$ between two densities $g$ and $\phi$ is defined by
$$D(g\|\phi) = \int g(x) \log\left(\frac{g(x)}{\phi(x)}\right) dx \ge 0. \quad \text{(Why?)}$$

• Theorem: Let the random variable $X$ have zero mean and variance $\sigma^2$. Then $h(X) \le \frac{1}{2} \log_2(2\pi e \sigma^2)$, with equality iff $X \sim \mathcal{N}(0, \sigma^2)$.

Proof: Let $g(x)$ be any density satisfying $\int x^2 g(x)\, dx = \sigma^2$. Let $\phi(x)$ be the density of a Gaussian random variable with zero mean and variance $\sigma^2$. Note that $\log \phi(x)$ is a quadratic form and it is $-\frac{x^2}{2\sigma^2} - \frac{1}{2} \log(2\pi\sigma^2)$. Then
$$0 \le D(g\|\phi) = \int g(x) \log\left(\frac{g(x)}{\phi(x)}\right) dx = -h(g) - \int g(x) \log \phi(x)\, dx$$
$$= -h(g) - \int \phi(x) \log \phi(x)\, dx \quad \text{(Why?)}$$
$$= -h(g) + h(\phi).$$

• Therefore, the Gaussian distribution maximizes the entropy over all distributions with the same variance.
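A small numerical check of this result: the sketch below compares the closed-form differential entropies of a Gaussian, a uniform, and a Laplace density, all with the same variance. The uniform and Laplace formulas are standard results that do not appear in the slides, and the function names are illustrative.

```python
import math


def gaussian_entropy_bits(variance: float) -> float:
    """h = 0.5 * log2(2 * pi * e * sigma^2)."""
    return 0.5 * math.log2(2.0 * math.pi * math.e * variance)


def uniform_entropy_bits(variance: float) -> float:
    """Uniform on an interval of width w has variance w^2 / 12 and h = log2(w)."""
    width = math.sqrt(12.0 * variance)
    return math.log2(width)


def laplace_entropy_bits(variance: float) -> float:
    """Laplace with scale b has variance 2 * b^2 and h = log2(2 * b * e)."""
    scale = math.sqrt(variance / 2.0)
    return math.log2(2.0 * scale * math.e)


for var in (0.5, 1.0, 4.0):
    print(var, gaussian_entropy_bits(var), laplace_entropy_bits(var), uniform_entropy_bits(var))
```

For every variance the Gaussian entry is the largest, and the gap to the other two densities does not depend on the variance, since all three entropies grow as $\frac{1}{2}\log_2 \sigma^2$ plus a constant.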


Capacity of Gaussian Channels

• Definition: The information capacity of the Gaussian channel with power constraint $P$ is
$$C = \max_{f(x):\, E[X^2] \le P} I(X;Y),$$
where $I(X;Y)$ is called the mutual information between $X$ and $Y$.

• We can calculate the information capacity as follows. Expanding $I(X;Y)$, we have
$$I(X;Y) = h(Y) - h(Y|X) = h(Y) - h(X + Z|X) = h(Y) - h(Z|X) = h(Y) - h(Z),$$
since $Z$ is independent of $X$. Now $h(Z) = \frac{1}{2} \log 2\pi e N$. Also,
$$E[Y^2] = E[(X + Z)^2] = E[X^2] + 2E[X]E[Z] + E[Z^2] = P + N,$$
since $X$ and $Z$ are independent and $E[Z] = 0$.


Capacity in AWGN

• Given $E[Y^2] = P + N$, the entropy of $Y$ is bounded by $\frac{1}{2} \log 2\pi e(P + N)$ (the Gaussian distribution maximizes the entropy for a given variance).

• Applying this result to bound the mutual information, we obtain
$$I(X;Y) = h(Y) - h(Z) \le \frac{1}{2} \log 2\pi e(P + N) - \frac{1}{2} \log 2\pi e N = \frac{1}{2} \log\left(1 + \frac{P}{N}\right).$$

• Hence, the information capacity of the Gaussian channel is
$$C = \max_{E[X^2] \le P} I(X;Y) = \frac{1}{2} \log\left(1 + \frac{P}{N}\right),$$
and the maximum is attained when $X \sim \mathcal{N}(0, P)$.


Bandlimited Channels

• The output of a channel can be described as the convolution
$$Y(t) = [X(t) + Z(t)] * c(t),$$
where $X(t)$ is the signal waveform, $Z(t)$ is the waveform of the white Gaussian noise, and $c(t)$ is the impulse response of an ideal bandpass filter, which cuts out all frequencies greater than $B$.

• Nyquist–Shannon Sampling Theorem: Suppose that a function $f(t)$ is bandlimited to $B$, namely, the spectrum of the function is 0 for all frequencies greater than $B$. Then the function is completely determined by samples of the function spaced $\frac{1}{2B}$ seconds apart.

Proof: Let $F(\omega)$ be the Fourier transform of $f(t)$. Then
$$f(t) = \frac{1}{2\pi} \int_{-\infty}^{\infty} F(\omega)\, e^{i\omega t}\, d\omega = \frac{1}{2\pi} \int_{-2\pi B}^{2\pi B} F(\omega)\, e^{i\omega t}\, d\omega,$$

Bandlimited Channels

since $F(\omega)$ is zero outside the band $-2\pi B \le \omega \le 2\pi B$. If we consider samples spaced $\frac{1}{2B}$ seconds apart, the value of the signal at the sample points can be written
$$f\left(\frac{n}{2B}\right) = \frac{1}{2\pi} \int_{-2\pi B}^{2\pi B} F(\omega)\, e^{i\omega n/2B}\, d\omega.$$

• The right-hand side of this equation is also the definition of the coefficients of the Fourier series expansion of the periodic extension of the function $F(\omega)$, taking the interval $-2\pi B$ to $2\pi B$ as the fundamental period.

• The sample values $f\left(\frac{n}{2B}\right)$ determine the Fourier coefficients and, by extension, they determine the value of $F(\omega)$ in the interval $(-2\pi B, 2\pi B)$.

• Consider the function
$$\mathrm{sinc}(t) = \frac{\sin(2\pi B t)}{2\pi B t}.$$
(This function is 1 at $t = 0$ and is 0 for $t = n/2B$, $n \ne 0$.)


Bandlimited Channels

• The spectrum of this function is constant in the band $(-B, B)$ and is zero outside this band.

• Now define
$$g(t) = \sum_{n=-\infty}^{\infty} f\left(\frac{n}{2B}\right) \mathrm{sinc}\left(t - \frac{n}{2B}\right).$$

• From the properties of the sinc function, it follows that $g(t)$ is bandlimited to $B$ and is equal to $f(n/2B)$ at $t = n/2B$. Since there is only one function satisfying these constraints, we must have $g(t) = f(t)$. This provides an explicit representation of $f(t)$ in terms of its samples.

• What does this theorem tell us? A general function has an infinite number of degrees of freedom: the value of the function at every point can be chosen independently. The Nyquist–Shannon sampling theorem shows that a bandlimited function has only $2B$ degrees of freedom per second. The values of the function at the sample points can be chosen independently, and this specifies the entire function.


Bandlimited Channels

• If a function is bandlimited, it cannot be limited in time. But we can consider functions that have most of their energy in bandwidth $B$ and have most of their energy in a finite time interval, say $(0, T)$.

• Now let’s return to the problem of communication over a bandlimited channel. Assuming that the channel has bandwidth $B$, we can represent both the input and the output by samples taken $1/2B$ seconds apart.

• If the noise has power spectral density $N_0/2$ watts/hertz and bandwidth $B$ hertz, the noise has power $\frac{N_0}{2} \cdot 2B = N_0 B$ and each of the $2BT$ noise samples in time $T$ has variance $N_0 BT/2BT = N_0/2$.

• Looking at the input as a vector in the $2TB$-dimensional space, we see that the received signal is spherically normally distributed about this point with covariance $\frac{N_0}{2} I$.


Capacity of Bandlimited Channels

• Let the channel be used over the time interval $[0, T]$. In this case, the energy per sample is $PT/2BT = P/2B$, the noise variance per sample is $\frac{N_0}{2} \cdot 2B \cdot \frac{T}{2BT} = N_0/2$, and hence the capacity per sample is
$$C = \frac{1}{2} \log_2\left(1 + \frac{P/2B}{N_0/2}\right) = \frac{1}{2} \log_2\left(1 + \frac{P}{N_0 B}\right) \quad \text{(bits per sample)}.$$

• Since there are $2B$ samples each second, the capacity of the channel can be rewritten as
$$C = B \log_2\left(1 + \frac{P}{N_0 B}\right) \quad \text{(bits/s, bps)}.$$
(This equation is one of the most famous formulas of information theory. It gives the capacity of a bandlimited Gaussian channel with noise spectral density $N_0/2$ watts/Hz and power $P$ watts.)

• If we let $B \to \infty$ in the above capacity formula, we obtain
$$C = \frac{P}{N_0} \log_2 e$$
(for infinite bandwidth channels, the capacity grows linearly with the power).
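The approach to the infinite-bandwidth limit can be checked numerically. The following minimal sketch evaluates $C = B \log_2(1 + P/(N_0 B))$ for increasing $B$ and compares it with $(P/N_0)\log_2 e$; the values of `P` and `N0` are assumptions chosen for illustration.

```python
import math


def bandlimited_capacity_bps(power_w: float, n0: float, bandwidth_hz: float) -> float:
    """C = B * log2(1 + P / (N0 * B)); log1p keeps precision when P / (N0 * B) is tiny."""
    snr = power_w / (n0 * bandwidth_hz)
    return bandwidth_hz * math.log1p(snr) / math.log(2.0)


def infinite_bandwidth_limit_bps(power_w: float, n0: float) -> float:
    """Limit of the capacity as B grows without bound: (P / N0) * log2(e)."""
    return (power_w / n0) * math.log2(math.e)


P, N0 = 1e-2, 1e-9  # assumed power (W) and noise density (W/Hz)
limit = infinite_bandwidth_limit_bps(P, N0)
for B in (1e4, 1e6, 1e8, 1e10):
    c = bandlimited_capacity_bps(P, N0, B)
    print(f"B = {B:.0e} Hz: C = {c:.4e} bps ({100 * c / limit:.2f}% of the limit)")
```

The capacity increases monotonically with $B$ but saturates: once $P/(N_0 B) \ll 1$ the channel is power-limited and extra bandwidth adds almost nothing.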


Capacity of Flat-Fading Channels

• Assume a discrete-time channel with stationary and ergodic time-varying gain $\sqrt{g[i]}$, $0 \le g[i]$, and AWGN $n[i]$, as shown in Figure 4.1.

• The channel power gain $g[i]$ follows a given distribution $p(g)$; e.g., for Rayleigh fading $p(g)$ is exponential. The channel gain $g[i]$ can change at each time $i$, either as an i.i.d. process or with some correlation over time.


Capacity of Flat-Fading Channels

• In a block fading channel, $g[i]$ is constant over some block length $T$, after which time $g[i]$ changes to a new independent value based on the distribution $p(g)$.

• Let $\bar{P}$ denote the average transmit signal power, $N_0/2$ denote the noise power spectral density of $n[i]$, and $B$ denote the received signal bandwidth. The instantaneous received signal-to-noise ratio (SNR) is then $\gamma[i] = \bar{P} g[i]/(N_0 B)$, $0 \le \gamma[i] < \infty$, and its expected value over all time is $\bar{\gamma} = \bar{P} \bar{g}/(N_0 B)$.

• The channel gain $g[i]$, also called the channel side (state) information (CSI), changes during the transmission of the codeword.

• The capacity of this channel depends on what is known about $g[i]$ at the transmitter and receiver. We will consider three different scenarios regarding this knowledge: Channel Distribution Information (CDI), Receiver CSI, and Transmitter and Receiver CSI.


Capacity of Flat-Fading Channels

• First consider the case where the channel gain distribution $p(g)$ or, equivalently, the distribution of SNR $p(\gamma)$ is known to the transmitter and receiver.

• For i.i.d. fading the capacity is given by (4.3), but solving for the capacity-achieving input distribution, i.e., the distribution achieving the maximum in (4.3), can be quite complicated depending on the fading distribution.

• For these reasons, finding the capacity-achieving input distribution and corresponding capacity of fading channels under CDI remains an open problem for almost all channel distributions.

• Now consider the case where the CSI $g[i]$ is known at the receiver at time $i$. Equivalently, $\gamma[i]$ is known at the receiver at time $i$.

• Also assume that both the transmitter and receiver know the distribution of $g[i]$. In this case, there are two channel capacity definitions that are relevant to system design: Shannon capacity, also called ergodic capacity, and capacity with outage.


Capacity of Flat-Fading Channels

• As for the AWGN channel, Shannon capacity defines the maximum data rate that can be sent over the channel with asymptotically small error probability.

• Note that for Shannon capacity the rate transmitted over the channel is

constant: the transmitter cannot adapt its transmission strategy relative

to the CSI.

• Capacity with outage is defined as the maximum rate that can be

transmitted over a channel with some outage probability corresponding

to the probability that the transmission cannot be decoded with

negligible error probability.

• The basic premise of capacity with outage is that a high data rate can be

sent over the channel and decoded correctly except when the channel is

in deep fading.

• By allowing the system to lose some data in the event of deep fades, a higher data rate can be maintained than if all data must be received correctly regardless of the fading state.


Capacity of Flat-Fading Channels

• Shannon capacity of a fading channel with receiver CSI for an average power constraint $\bar{P}$ can be obtained as
$$C = \int_0^{\infty} B \log_2(1 + \gamma)\, p(\gamma)\, d\gamma. \quad (4.4)$$

• By Jensen’s inequality,
$$E[B \log_2(1 + \gamma)] = \int B \log_2(1 + \gamma)\, p(\gamma)\, d\gamma \le B \log_2(1 + E[\gamma]) = B \log_2(1 + \bar{\gamma}),$$
where $\bar{\gamma}$ is the average SNR on the channel.

• Here we see that the Shannon capacity of a fading channel with receiver CSI only is less than the Shannon capacity of an AWGN channel with the same average SNR.

• In other words, fading reduces Shannon capacity when only the receiver has CSI.


Capacity of Flat-Fading Channels

• Example 4.2: Consider a flat-fading channel with i.i.d. channel gain $\sqrt{g[i]}$ which can take on three possible values: $\sqrt{g_1} = .05$ with probability $p_1 = .1$, $\sqrt{g_2} = .5$ with probability $p_2 = .5$, and $\sqrt{g_3} = 1$ with probability $p_3 = .4$. The transmit power is 10 mW, the noise spectral density is $N_0 = 10^{-9}$ W/Hz, and the channel bandwidth is 30 KHz. Assume the receiver has knowledge of the instantaneous value of $g[i]$ but the transmitter does not. Find the Shannon capacity of this channel and compare with the capacity of an AWGN channel with the same average SNR.
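The comparison asked for in this example can be sketched in a few lines of Python. The gain values, probabilities, and $N_0$ used below are taken to be those of Example 4.2 in Goldsmith’s book ($\sqrt{g} \in \{.05, .5, 1\}$ with probabilities $\{.1, .5, .4\}$ and $N_0 = 10^{-9}$ W/Hz); treat them as assumptions, since they are not legible in the extracted slide text.

```python
import math

# Assumed parameters (believed to match Goldsmith's Example 4.2).
B = 30e3            # bandwidth in Hz
P = 10e-3           # transmit power in W
N0 = 1e-9           # noise density in W/Hz
amplitude_gains = [0.05, 0.5, 1.0]   # sqrt(g_i)
probs = [0.1, 0.5, 0.4]

# Received SNR in each fading state: gamma_i = P * g_i / (N0 * B).
snrs = [P * a * a / (N0 * B) for a in amplitude_gains]

# Ergodic capacity with receiver CSI only, equation (4.4) for a discrete distribution.
ergodic_capacity = sum(p * B * math.log2(1.0 + g) for g, p in zip(snrs, probs))

# AWGN channel with the same average SNR (the Jensen upper bound).
avg_snr = sum(p * g for g, p in zip(snrs, probs))
awgn_capacity = B * math.log2(1.0 + avg_snr)

print(f"SNRs: {[round(g, 4) for g in snrs]}")
print(f"fading (receiver CSI): {ergodic_capacity / 1e3:.1f} kbps")
print(f"AWGN, same average SNR: {awgn_capacity / 1e3:.1f} kbps")
```

Under these assumed values the fading capacity comes out roughly 10% below the AWGN capacity, consistent with Jensen’s inequality.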


Capacity of Flat-Fading Channels

• Capacity with outage applies to slowly-varying channels, where the instantaneous SNR $\gamma$ is constant over a large number of transmissions (a transmission burst) and then changes to a new value based on the fading distribution.

• If the channel has received SNR $\gamma$ during a burst, then data can be sent over the channel at rate $B \log_2(1 + \gamma)$ with negligible probability of error.

• Capacity with outage allows bits sent over a given transmission burst to be decoded at the end of the burst with some probability that these bits will be decoded incorrectly.

• Specifically, the transmitter fixes a minimum received SNR $\gamma_{\min}$ and encodes for a data rate $C = B \log_2(1 + \gamma_{\min})$.

• The data is correctly received if the instantaneous received SNR is greater than or equal to $\gamma_{\min}$.


Capacity of Flat-Fading Channels

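• If the received SNR is below $\gamma_{\min}$ then the receiver declares an outage. The probability of outage is thus $P_{\text{out}} = \Pr[\gamma < \gamma_{\min}]$.

• The average rate correctly received over many transmission bursts is $C_o = (1 - P_{\text{out}})\, B \log_2(1 + \gamma_{\min})$, since data is only correctly received on $1 - P_{\text{out}}$ transmissions.

• Capacity with outage is typically characterized by a plot of capacity versus outage, as shown in Figure 4.2.

• In Figure 4.2 we plot the normalized capacity $C/B = \log_2(1 + \gamma_{\min})$ as a function of outage probability $P_{\text{out}} = \Pr[\gamma < \gamma_{\min}]$ for a Rayleigh fading channel with $\bar{\gamma} = 20$ dB.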
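The curve described for Figure 4.2 can be reproduced numerically. In Rayleigh fading the received SNR is exponentially distributed with mean $\bar{\gamma}$, so $P_{\text{out}} = 1 - e^{-\gamma_{\min}/\bar{\gamma}}$ and $\gamma_{\min} = -\bar{\gamma} \ln(1 - P_{\text{out}})$. The sketch below uses this relation; the function name and the sample outage probabilities are illustrative assumptions.

```python
import math


def rayleigh_outage_capacity(avg_snr_db: float, p_out: float, bandwidth_hz: float = 1.0):
    """Return (gamma_min, C/B, average correctly received rate) for Rayleigh fading."""
    avg_snr = 10.0 ** (avg_snr_db / 10.0)
    # Invert P_out = 1 - exp(-gamma_min / avg_snr) for the SNR threshold.
    gamma_min = -avg_snr * math.log1p(-p_out)
    normalized_capacity = math.log2(1.0 + gamma_min)              # C / B
    average_rate = (1.0 - p_out) * bandwidth_hz * normalized_capacity
    return gamma_min, normalized_capacity, average_rate


for p_out in (1e-4, 1e-3, 1e-2, 1e-1):
    gamma_min, c_norm, _ = rayleigh_outage_capacity(20.0, p_out)
    print(f"P_out = {p_out:.0e}: gamma_min = {gamma_min:.4f}, C/B = {c_norm:.3f} bits/s/Hz")
```

Tolerating a larger outage probability allows a higher threshold $\gamma_{\min}$ and therefore a higher rate during non-outage bursts.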


Capacity of Flat-Fading Channels

•

When both the transmitter and receiver have CSI, the transmitter can

adapt its transmission strategy relative to this CSI, as shown in Figure

4.3.

•

In this case, there is no notion of capacity versus outage where the

transmitter sends bits that cannot be decoded, since the transmitter

knows the channel and thus will not send bits unless they can be

decoded correctly.


Capacity of Flat-Fading Channels

• Now consider the Shannon capacity when the channel power gain $g[i]$ is known to both the transmitter and receiver at time $i$.

• Let $s[i]$ be a stationary and ergodic stochastic process representing the channel state, which takes values on a finite set $\mathcal{S}$ of discrete memoryless channels.

• Let $C_s$ denote the capacity of a particular channel $s \in \mathcal{S}$, and $p(s)$ denote the probability, or fraction of time, that the channel is in state $s$. The capacity of this time-varying channel is then given by
$$C = \sum_{s \in \mathcal{S}} C_s\, p(s). \quad (4.6)$$

• The capacity of an AWGN channel with average received SNR $\gamma$ is $C_\gamma = B \log_2(1 + \gamma)$.


Capacity of Flat-Fading Channels

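• From (4.6) the capacity of the fading channel with transmitter and receiver side information is
$$C = \int_0^{\infty} C_\gamma\, p(\gamma)\, d\gamma = \int_0^{\infty} B \log_2(1 + \gamma)\, p(\gamma)\, d\gamma.$$

• Let us now allow the transmit power $P(\gamma)$ to vary with $\gamma$, subject to an average power constraint $\bar{P}$:
$$\int_0^{\infty} P(\gamma)\, p(\gamma)\, d\gamma \le \bar{P}. \quad (4.8)$$

• Define the fading channel capacity with average power constraint as
$$C = \max_{P(\gamma):\, \int P(\gamma) p(\gamma) d\gamma = \bar{P}} \int_0^{\infty} B \log_2\left(1 + \frac{P(\gamma)\gamma}{\bar{P}}\right) p(\gamma)\, d\gamma. \quad (4.9)$$
(Can this capacity be achieved?)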


Capacity of Flat-Fading Channels

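• Figure 4.4 shows the main idea of how to achieve the capacity in (4.9).

• To find the optimal power allocation $P(\gamma)$, we form the Lagrangian
$$J(P(\gamma)) = \int_0^{\infty} B \log_2\left(1 + \frac{\gamma P(\gamma)}{\bar{P}}\right) p(\gamma)\, d\gamma - \lambda \int_0^{\infty} P(\gamma)\, p(\gamma)\, d\gamma.$$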


Capacity of Flat-Fading Channels

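• Next we differentiate the Lagrangian and set the derivative equal to zero:
$$\frac{\partial J(P(\gamma))}{\partial P(\gamma)} = \left[\left(\frac{B/\ln 2}{1 + \gamma P(\gamma)/\bar{P}}\right) \frac{\gamma}{\bar{P}} - \lambda\right] p(\gamma) = 0.$$

• Solving for $P(\gamma)$ with the constraint that $P(\gamma) > 0$ yields the optimal power adaptation that maximizes (4.9) as
$$\frac{P(\gamma)}{\bar{P}} = \begin{cases} \dfrac{1}{\gamma_0} - \dfrac{1}{\gamma}, & \gamma \ge \gamma_0 \\ 0, & \gamma < \gamma_0 \end{cases} \quad (4.12)$$
for some “cutoff” value $\gamma_0$.

• If $\gamma[i]$ is below this cutoff then no data is transmitted over the $i$th time interval, so the channel is only used at time $i$ if $\gamma_0 \le \gamma[i] < \infty$.

• Substituting (4.12) into (4.9) then yields the capacity formula:
$$C = \int_{\gamma_0}^{\infty} B \log_2\left(\frac{\gamma}{\gamma_0}\right) p(\gamma)\, d\gamma. \quad (4.13)$$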


Capacity of Flat-Fading Channels

• The multiplexing nature of the capacity-achieving coding strategy indicates that (4.13) is achieved with a time-varying data rate, where the rate corresponding to instantaneous SNR $\gamma$ is $B \log_2(\gamma/\gamma_0)$.

• Note that the optimal power allocation policy (4.12) only depends on the fading distribution $p(\gamma)$ through the cutoff value $\gamma_0$. This cutoff value is found from the power constraint.

• Rearranging the power constraint and replacing the inequality with equality (since using the maximum available power will always be optimal) yields the power constraint
$$\int_0^{\infty} \frac{P(\gamma)}{\bar{P}}\, p(\gamma)\, d\gamma = 1.$$

• By using the optimal power allocation (4.12), we have
$$\int_{\gamma_0}^{\infty} \left(\frac{1}{\gamma_0} - \frac{1}{\gamma}\right) p(\gamma)\, d\gamma = 1. \quad (4.15)$$


Capacity of Flat-Fading Channels

• Note that this expression only depends on the distribution $p(\gamma)$. The value for $\gamma_0$ cannot be solved for in closed form for typical continuous pdfs $p(\gamma)$ and thus must be found numerically.

• Since $\gamma$ is time-varying, the maximizing power adaptation policy of (4.12) is a “water-filling” formula in time, as illustrated in Figure 4.5.

Figure 4.5: Optimal power allocation (water-filling), showing the curve $1/\gamma$, the water level $1/\gamma_0$, and the allocated power $P(\gamma)/\bar{P}$ versus $\gamma$.

• The water-filling terminology refers to the fact that the line $1/\gamma$ sketches out the bottom of a bowl, and power is poured into the bowl to a constant water level of $1/\gamma_0$.

Capacity of Flat-Fading Channels

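• Example 4.4: Assume the same channel as in the previous example, with a bandwidth of 30 KHz and three possible received SNRs: $\gamma_1 = .8333$ with $p(\gamma_1) = .1$, $\gamma_2 = 83.33$ with $p(\gamma_2) = .5$, and $\gamma_3 = 333.33$ with $p(\gamma_3) = .4$. Find the ergodic capacity of this channel assuming both transmitter and receiver have instantaneous CSI.

Solution: We know the optimal power allocation is water-filling, and we need to find the cutoff value $\gamma_0$ that satisfies the discrete version of (4.15) given by
$$\sum_{\gamma_i \ge \gamma_0} \left(\frac{1}{\gamma_0} - \frac{1}{\gamma_i}\right) p(\gamma_i) = 1. \quad (4.17)$$
We first assume that all channel states are used to obtain $\gamma_0$, i.e. assume $\gamma_0 \le \min_i \gamma_i$, and see if the resulting cutoff value is below that of the weakest channel. If not then we have an inconsistency, and must redo the calculation assuming at least one of the channel states is not used. Applying (4.17) to our channel model yields
$$\frac{1}{\gamma_0} = 1 + \sum_{i=1}^{3} \frac{p(\gamma_i)}{\gamma_i} = 1.1272 \;\Rightarrow\; \gamma_0 = .8872 > .8333 = \gamma_1,$$
which is inconsistent. Dropping the weakest state gives
$$\frac{.9}{\gamma_0} = 1 + \sum_{i=2}^{3} \frac{p(\gamma_i)}{\gamma_i} = 1.0072 \;\Rightarrow\; \gamma_0 = .8936,$$
which lies between $\gamma_1$ and $\gamma_2$, so the capacity is
$$C = \sum_{i=2}^{3} B \log_2\left(\frac{\gamma_i}{\gamma_0}\right) p(\gamma_i) \approx 200.7 \text{ Kbps}.$$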
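The trial-and-error search for the cutoff described in this solution can be sketched in Python. The SNR values and probabilities are assumed to be $\gamma = \{.8333, 83.33, 333.33\}$ with probabilities $\{.1, .5, .4\}$ (the values believed to come from Goldsmith’s Examples 4.2 and 4.4), and the helper name `waterfilling_cutoff` is hypothetical.

```python
import math


def waterfilling_cutoff(snrs, probs):
    """Solve sum over used states of (1/g0 - 1/g_i) * p_i = 1, dropping weak states as needed."""
    states = sorted(zip(snrs, probs))                 # weakest state first
    for first_used in range(len(states)):
        active = states[first_used:]
        prob_mass = sum(p for _, p in active)
        inv_snr_sum = sum(p / g for g, p in active)
        cutoff = prob_mass / (1.0 + inv_snr_sum)      # from (4.17) restricted to active states
        if cutoff <= active[0][0]:                    # consistent: every used state is above cutoff
            return cutoff, active
    raise ValueError("no consistent cutoff found")


B = 30e3
snrs = [0.8333, 83.33, 333.33]    # assumed received SNRs
probs = [0.1, 0.5, 0.4]           # assumed state probabilities

cutoff, active = waterfilling_cutoff(snrs, probs)
capacity = sum(p * B * math.log2(g / cutoff) for g, p in active)
print(f"cutoff = {cutoff:.4f}, states used = {len(active)}, capacity = {capacity / 1e3:.1f} kbps")
```

With these assumed values the first attempt (all three states used) gives a cutoff above the weakest SNR, so that state is dropped and the cutoff is recomputed from the remaining two.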


Capacity of Flat-Fading Channels

Comparing with the results of the previous example we see that this rate is

only slightly higher than for the case of receiver CSI only, and is still

significantly below that of an AWGN channel with the same average SNR.

That is because the average SNR for this channel is relatively high: for low

SNR channels capacity in flat-fading can exceed that of the AWGN channel

with the same SNR by taking advantage of the rare times when the channel

is in a very good state.

• Zero-Outage Capacity and Channel Inversion: now consider a suboptimal transmitter adaptation scheme where the transmitter uses the CSI to maintain a constant received power, i.e., it inverts the channel fading.

• The channel then appears to the encoder and decoder as a time-invariant AWGN channel. This power adaptation, called channel inversion, is given by $P(\gamma)/\bar{P} = \sigma/\gamma$, where $\sigma$ equals the constant received SNR that can be maintained with the transmit power constraint (4.8).


Capacity of Flat-Fading Channels

• The constant $\sigma$ thus satisfies $\int \frac{\sigma}{\gamma}\, p(\gamma)\, d\gamma = 1$, so $\sigma = 1/E[1/\gamma]$.

• Fading channel capacity with channel inversion is just the capacity of an AWGN channel with SNR $\sigma$:
$$C = B \log_2[1 + \sigma] = B \log_2\left[1 + \frac{1}{E[1/\gamma]}\right]. \quad (4.18)$$

• The capacity-achieving transmission strategy for this capacity uses a fixed-rate encoder and decoder designed for an AWGN channel with SNR $\sigma$. This has the advantage of maintaining a fixed data rate over the channel regardless of channel conditions.

• For this reason the channel capacity given in (4.18) is called zero-outage capacity.

• Zero-outage capacity can exhibit a large data rate reduction relative to Shannon capacity in extreme fading environments. For example, in Rayleigh fading $E[1/\gamma]$ is infinite, and thus the zero-outage capacity given by (4.18) is zero.


Capacity of Flat-Fading Channels

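• Example 4.5: Assume the same channel as in the previous example, with a bandwidth of 30 KHz and three possible received SNRs: $\gamma_1 = .8333$ with $p(\gamma_1) = .1$, $\gamma_2 = 83.33$ with $p(\gamma_2) = .5$, and $\gamma_3 = 333.33$ with $p(\gamma_3) = .4$. Assuming transmitter and receiver CSI, find the zero-outage capacity of this channel.

Solution: The zero-outage capacity is $C = B \log_2[1 + \sigma]$, where $\sigma = 1/E[1/\gamma]$. Since
$$E[1/\gamma] = \frac{.1}{.8333} + \frac{.5}{83.33} + \frac{.4}{333.33} = .1272,$$
we have $C = 30000 \log_2(1 + 1/.1272) = 94.43$ Kbps.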
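The zero-outage capacity (4.18) for a discrete fading distribution reduces to a short computation. In this minimal sketch the three SNR values and their probabilities are assumed to be $\{.8333, 83.33, 333.33\}$ and $\{.1, .5, .4\}$, and the function name is hypothetical.

```python
import math


def zero_outage_capacity_bps(snrs, probs, bandwidth_hz):
    """C = B * log2(1 + sigma) with sigma = 1 / E[1/gamma] (channel inversion)."""
    inv_snr_mean = sum(p / g for g, p in zip(snrs, probs))   # E[1/gamma]
    sigma = 1.0 / inv_snr_mean                               # constant received SNR
    return sigma, bandwidth_hz * math.log2(1.0 + sigma)


# Assumed channel states.
sigma, capacity = zero_outage_capacity_bps([0.8333, 83.33, 333.33], [0.1, 0.5, 0.4], 30e3)
print(f"sigma = {sigma:.3f}, zero-outage capacity = {capacity / 1e3:.2f} kbps")
```

The weakest state dominates $E[1/\gamma]$, which is why inverting a channel that occasionally fades deeply costs so much rate.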

• The outage capacity is defined as the maximum data rate that can be maintained in all non-outage channel states times the probability of non-outage.


Capacity of Flat-Fading Channels

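• Outage capacity is achieved with a truncated channel inversion policy for power adaptation that only compensates for fading above a certain cutoff fade depth $\gamma_0$:
$$\frac{P(\gamma)}{\bar{P}} = \begin{cases} \dfrac{\sigma}{\gamma}, & \gamma \ge \gamma_0 \\ 0, & \gamma < \gamma_0. \end{cases}$$

• Since the channel is only used when $\gamma \ge \gamma_0$, the power constraint (4.8) yields $\sigma = 1/E_{\gamma_0}[1/\gamma]$, where
$$E_{\gamma_0}[1/\gamma] \triangleq \int_{\gamma_0}^{\infty} \frac{1}{\gamma}\, p(\gamma)\, d\gamma.$$

• The outage capacity associated with a given outage probability $P_{\text{out}}$ and corresponding cutoff $\gamma_0$ is given by
$$C(P_{\text{out}}) = B \log_2\left(1 + \frac{1}{E_{\gamma_0}[1/\gamma]}\right) \Pr[\gamma \ge \gamma_0].$$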

Capacity of Flat-Fading Channels

• We can also obtain the maximum outage capacity by maximizing outage capacity over all possible $\gamma_0$:
$$C = \max_{\gamma_0} B \log_2\left(1 + \frac{1}{E_{\gamma_0}[1/\gamma]}\right) \Pr[\gamma \ge \gamma_0].$$

• This maximum outage capacity will still be less than Shannon capacity (4.13) since truncated channel inversion is a suboptimal transmission strategy.

• Example 4.6: Assume the same channel as in the previous example, with a bandwidth of 30 KHz and three possible received SNRs: $\gamma_1 = .8333$ with $p(\gamma_1) = .1$, $\gamma_2 = 83.33$ with $p(\gamma_2) = .5$, and $\gamma_3 = 333.33$ with $p(\gamma_3) = .4$. Find the outage capacity of this channel and associated outage probabilities for cutoff values $\gamma_0 = .84$ and $\gamma_0 = 83.4$. Which of these cutoff values yields a larger outage capacity?
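The two cutoffs can be compared with a short Python sketch of truncated channel inversion. The SNR states $\{.8333, 83.33, 333.33\}$, their probabilities $\{.1, .5, .4\}$, and the cutoffs $.84$ and $83.4$ are assumed values (believed to match Goldsmith’s Example 4.6), and the function name is hypothetical.

```python
import math


def outage_capacity_bps(snrs, probs, cutoff, bandwidth_hz):
    """Truncated channel inversion: returns (P_out, outage capacity) for a given cutoff."""
    used = [(g, p) for g, p in zip(snrs, probs) if g >= cutoff]
    p_on = sum(p for _, p in used)                    # probability the channel is used
    inv_snr_mean = sum(p / g for g, p in used)        # truncated mean E_{g0}[1/gamma]
    sigma = 1.0 / inv_snr_mean                        # received SNR held while transmitting
    return 1.0 - p_on, bandwidth_hz * math.log2(1.0 + sigma) * p_on


snrs = [0.8333, 83.33, 333.33]   # assumed received SNRs
probs = [0.1, 0.5, 0.4]          # assumed state probabilities
for cutoff in (0.84, 83.4):      # assumed cutoff values
    p_out, c = outage_capacity_bps(snrs, probs, cutoff, 30e3)
    print(f"cutoff = {cutoff}: P_out = {p_out:.1f}, outage capacity = {c / 1e3:.2f} kbps")
```

Under these assumptions the lower cutoff wins: it gives up only the weakest state, whereas the higher cutoff raises the rate per burst but idles the channel most of the time.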


II. Capacity of MIMO Channels


Circular Complex Gaussian Vectors

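• A complex random vector is of the form $\mathbf{x} = \mathbf{x}_R + j\mathbf{x}_I$ where $\mathbf{x}_R$ and $\mathbf{x}_I$ are real random vectors.

• Complex Gaussian random vectors are ones in which $[\mathbf{x}_R, \mathbf{x}_I]^t$ is a real Gaussian random vector.

• The distribution is completely specified by the mean and covariance matrix of the real vector $[\mathbf{x}_R, \mathbf{x}_I]^t$.

• Define
$$\boldsymbol{\mu} \triangleq E[\mathbf{x}], \qquad \mathbf{K} \triangleq E[(\mathbf{x} - \boldsymbol{\mu})(\mathbf{x} - \boldsymbol{\mu})^*], \qquad \mathbf{J} \triangleq E[(\mathbf{x} - \boldsymbol{\mu})(\mathbf{x} - \boldsymbol{\mu})^t],$$
where $\mathbf{A}^*$ is the transpose of the matrix $\mathbf{A}$ with each element replaced by its complex conjugate, and $\mathbf{A}^t$ is just the transpose of $\mathbf{A}$. Here $\mathbf{K}$ is the covariance matrix and $\mathbf{J}$ is the pseudo-covariance matrix.

• Note that in general the covariance matrix $\mathbf{K}$ of the complex random vector $\mathbf{x}$ by itself is not enough to specify the full second-order statistics of $\mathbf{x}$. Indeed, since $\mathbf{K}$ is Hermitian, i.e., $\mathbf{K} = \mathbf{K}^*$, the diagonal elements are real and the elements in the lower and upper triangles are complex conjugates of each other.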


Circular Complex Gaussian Vectors

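• In wireless communication, we are almost exclusively interested in complex random vectors that have the circular symmetry property: $e^{j\theta}\mathbf{x}$ has the same distribution as $\mathbf{x}$ for any $\theta$.

• For a circular symmetric complex random vector $\mathbf{x}$,
$$E[\mathbf{x}] = E[e^{j\theta}\mathbf{x}] = e^{j\theta} E[\mathbf{x}]$$
for any $\theta$; hence the mean $\boldsymbol{\mu} = 0$. Moreover
$$E[\mathbf{x}\mathbf{x}^t] = E[(e^{j\theta}\mathbf{x})(e^{j\theta}\mathbf{x})^t] = e^{j2\theta} E[\mathbf{x}\mathbf{x}^t]$$
for any $\theta$; hence the pseudo-covariance matrix $\mathbf{J}$ is also zero.

• Thus, the covariance matrix $\mathbf{K}$ fully specifies the first and second order statistics of a circular symmetric random vector.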


Circular Complex Gaussian Vectors

• And if the complex random vector is also Gaussian, $\mathbf{K}$ in fact specifies its entire statistics.

• A circular symmetric Gaussian random vector with covariance matrix $\mathbf{K}$ is denoted as $\mathcal{CN}(0, \mathbf{K})$.

• Some special cases:

• A complex Gaussian random variable $w = w_R + jw_I$ with i.i.d. zero-mean Gaussian real and imaginary components is circular symmetric. In fact, a circular symmetric Gaussian random variable must have i.i.d. zero-mean real and imaginary components.

• A collection of $n$ i.i.d. $\mathcal{CN}(0, 1)$ random variables forms a standard circular symmetric Gaussian random vector $\mathbf{w}$ and is denoted by $\mathcal{CN}(0, \mathbf{I})$. The density function of $\mathbf{w}$ can be explicitly written as
$$f(\mathbf{w}) = \frac{1}{\pi^n} \exp\left(-\|\mathbf{w}\|^2\right), \qquad \mathbf{w} \in \mathbb{C}^n.$$

• $\mathbf{U}\mathbf{w}$ has the same distribution as $\mathbf{w}$ for any complex orthogonal matrix $\mathbf{U}$ (such a matrix is called a unitary matrix and is characterized by the property $\mathbf{U}\mathbf{U}^* = \mathbf{I}$).


Narrowband MIMO Model

• Here we consider a narrowband MIMO channel. A narrowband point-to-point communication system of $M_t$ transmit and $M_r$ receive antennas is shown in the following figure.


Narrowband MIMO Model

• Or simply as $\mathbf{y} = \mathbf{H}\mathbf{x} + \mathbf{n}$.

• We assume a channel bandwidth of $B$ and complex Gaussian noise with zero mean and covariance matrix $\sigma_n^2 \mathbf{I}_{M_r}$, where typically $\sigma_n^2 = N_0 B$.

• For simplicity, given a transmit power constraint $P$ we will assume an equivalent model with a noise power of unity and transmit power $P/\sigma_n^2 = \rho$, where $\rho$ can be interpreted as the average SNR per receive antenna under unity channel gain.

• This power constraint implies that the input symbols satisfy
$$\sum_{i=1}^{M_t} E[x_i x_i^*] = \rho, \quad (10.1)$$
or, equivalently, $\mathrm{Tr}(\mathbf{R}_x) = \rho$, where $\mathrm{Tr}(\mathbf{R}_x)$ is the trace of the input covariance matrix $\mathbf{R}_x = E[\mathbf{x}\mathbf{x}^H]$.

Parallel Decomposition of the MIMO Channel

• When both the transmitter and receiver have multiple antennas, there is another mechanism for performance gain called (spatial) multiplexing gain.

• The multiplexing gain of a MIMO system results from the fact that a MIMO channel can be decomposed into a number $R$ of parallel independent channels.

• By multiplexing independent data onto these independent channels, we get an $R$-fold increase in data rate in comparison to a system with just one antenna at the transmitter and receiver.

• Consider a MIMO channel with $M_r \times M_t$ channel gain matrix $\mathbf{H}$ known to both the transmitter and the receiver.

• Let $R_H$ denote the rank of $\mathbf{H}$. From matrix theory, for any matrix $\mathbf{H}$ we can obtain its singular value decomposition (SVD) as
$$\mathbf{H} = \mathbf{U} \boldsymbol{\Sigma} \mathbf{V}^H, \quad (10.2)$$
where the $M_r \times M_r$ matrix $\mathbf{U}$ and the $M_t \times M_t$ matrix $\mathbf{V}$ are unitary and $\boldsymbol{\Sigma}$ is an $M_r \times M_t$ diagonal matrix of singular values $\{\sigma_i\}$ of $\mathbf{H}$.

Parallel Decomposition of the MIMO Channel

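• Since $R_H$ cannot exceed the number of columns or rows of $\mathbf{H}$, $R_H \le \min(M_t, M_r)$. If $\mathbf{H}$ is full rank, which is sometimes referred to as a rich scattering environment, then $R_H = \min(M_t, M_r)$.

• The parallel decomposition of the channel is obtained by defining a transformation on the channel input and output $\mathbf{x}$ and $\mathbf{y}$ through transmit precoding and receiver shaping, as shown in the following figure:

• The transmit precoding and receiver shaping transform the MIMO channel into $R_H$ parallel single-input single-output (SISO) channels with input $\tilde{\mathbf{x}}$ and output $\tilde{\mathbf{y}}$, since from the SVD, we have that
$$\tilde{\mathbf{y}} = \mathbf{U}^H(\mathbf{H}\mathbf{x} + \mathbf{n}) = \mathbf{U}^H(\mathbf{U}\boldsymbol{\Sigma}\mathbf{V}^H \mathbf{V}\tilde{\mathbf{x}} + \mathbf{n}) = \boldsymbol{\Sigma}\tilde{\mathbf{x}} + \tilde{\mathbf{n}},$$
where $\mathbf{x} = \mathbf{V}\tilde{\mathbf{x}}$, $\tilde{\mathbf{y}} = \mathbf{U}^H\mathbf{y}$, and $\tilde{\mathbf{n}} = \mathbf{U}^H\mathbf{n}$.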


• This parallel decomposition is shown in the following figure:

[Figure: parallel decomposition of the MIMO channel]
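The decomposition can be checked numerically. The following is a minimal sketch, assuming NumPy and a randomly drawn complex Gaussian channel; the function name `parallel_decompose`, the antenna counts, and the seed are illustrative choices and do not come from the lecture. It precodes with V, passes the signal through y = Hx + n, shapes with U^H, and confirms that the shaped output equals Σ times the precoder input plus the shaped noise.

```python
import numpy as np

def parallel_decompose(H, x_tilde, n):
    """Return (singular values, shaped output, shaped noise) for y = Hx + n."""
    U, s, Vh = np.linalg.svd(H)      # H = U @ Sigma @ Vh, where Vh = V^H
    x = Vh.conj().T @ x_tilde        # transmit precoding: x = V x_tilde
    y = H @ x + n                    # narrowband MIMO channel
    y_tilde = U.conj().T @ y         # receiver shaping: y_tilde = U^H y
    n_tilde = U.conj().T @ n         # shaped noise n_tilde = U^H n
    return s, y_tilde, n_tilde

rng = np.random.default_rng(0)       # fixed seed, illustrative only
Mr, Mt = 3, 4                        # hypothetical antenna counts
H = (rng.standard_normal((Mr, Mt)) + 1j * rng.standard_normal((Mr, Mt))) / np.sqrt(2)
x_tilde = rng.standard_normal(Mt) + 1j * rng.standard_normal(Mt)
n = (rng.standard_normal(Mr) + 1j * rng.standard_normal(Mr)) / np.sqrt(2)

s, y_tilde, n_tilde = parallel_decompose(H, x_tilde, n)

# Build the Mr x Mt matrix Sigma and compare y_tilde with Sigma x_tilde + n_tilde.
Sigma = np.zeros((Mr, Mt))
Sigma[:len(s), :len(s)] = np.diag(s)
residual = np.max(np.abs(y_tilde - (Sigma @ x_tilde + n_tilde)))
print(f"max |y_tilde - (Sigma x_tilde + n_tilde)| = {residual:.2e}")
```

Because Σ is diagonal, the ith shaped output depends only on the ith precoder input scaled by the ith singular value, which is the sense in which the channels are parallel.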

MIMO Channel Capacity

• The capacity of a MIMO channel is an extension of the mutual

information formula for a SISO channel given in Lecture 3 to a matrix

channel.

• Specifically, the capacity is given in terms of the mutual information

between the channel input vector x and output vector y as

$$C = \max_{p(\mathbf{x})} I(\mathbf{X};\mathbf{Y}) = \max_{p(\mathbf{x})} \left[ H(\mathbf{Y}) - H(\mathbf{Y}|\mathbf{X}) \right]. \tag{10.5}$$

• The definition of entropy yields that H(Y|X) = H(N), the entropy in the noise. Since this noise n has fixed entropy independent of the channel input, maximizing mutual information is equivalent to maximizing the entropy in y.

• The mutual information of y depends on its covariance matrix, which for the narrowband MIMO model is given by

$$\mathbf{R}_y = E[\mathbf{y}\mathbf{y}^H] = \mathbf{H}\mathbf{R}_x\mathbf{H}^H + \mathbf{I}_{M_r}, \tag{10.6}$$

where $\mathbf{R}_x$ is the covariance of the MIMO channel input.

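• The mutual information can be shown to be

$$I(\mathbf{X};\mathbf{Y}) = B \log_2 \det\left[\mathbf{I}_{M_r} + \mathbf{H}\mathbf{R}_x\mathbf{H}^H\right]. \tag{10.7}$$

• The MIMO capacity is achieved by maximizing the mutual information over all input covariance matrices $\mathbf{R}_x$ satisfying the power constraint:

$$C = \max_{\mathbf{R}_x :\, \mathrm{Tr}(\mathbf{R}_x) = \rho} B \log_2 \det\left[\mathbf{I}_{M_r} + \mathbf{H}\mathbf{R}_x\mathbf{H}^H\right], \tag{10.8}$$

where $\det[\mathbf{A}]$ denotes the determinant of the matrix A.

• Now let us consider the case of Channel Known at Transmitter.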

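• Substituting the matrix SVD of H into C and using properties of unitary matrices we get the MIMO capacity with CSIT and CSIR as

$$C = \max_{\rho_i :\, \sum_i \rho_i \le \rho} \sum_{i=1}^{R_H} B \log_2\left(1 + \sigma_i^2 \rho_i\right). \tag{10.9}$$

Since $\rho = P/\sigma_n^2$, the capacity (10.9) can also be expressed in terms of the power allocation $P_i$ to the ith parallel channel as

$$C = \max_{P_i :\, \sum_i P_i \le P} \sum_{i=1}^{R_H} B \log_2\left(1 + \frac{\sigma_i^2 P_i}{\sigma_n^2}\right) = \max_{P_i :\, \sum_i P_i \le P} \sum_{i=1}^{R_H} B \log_2\left(1 + \frac{P_i \gamma_i}{P}\right), \tag{10.10}$$

where $\rho_i = P_i/\sigma_n^2$ and $\gamma_i = \sigma_i^2 P/\sigma_n^2$ is the SNR associated with the ith channel at full power.

• Solving the optimization leads to a water-filling power allocation for the MIMO channel:

$$\frac{P_i}{P} = \begin{cases} \dfrac{1}{\gamma_0} - \dfrac{1}{\gamma_i}, & \gamma_i \ge \gamma_0, \\ 0, & \gamma_i < \gamma_0, \end{cases} \tag{10.11}$$

for some cutoff value $\gamma_0$.

• The resulting capacity is then

$$C = \sum_{i :\, \gamma_i \ge \gamma_0} B \log_2\left(\frac{\gamma_i}{\gamma_0}\right). \tag{10.12}$$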
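The cutoff in the water-filling rule is fixed by requiring the power fractions to sum to one. The sketch below is one common way to find it, assuming NumPy, strictly positive full-power SNRs, and B = 1 (so rates are in bits/s/Hz). The function name `water_filling` and the example SNR values are hypothetical. It tries k active channels from the largest k downward, using the fact that with k active channels the constraint gives 1/γ0 = (1 + Σ 1/γi)/k, and stops when the weakest active channel is at or above the cutoff.

```python
import numpy as np

def water_filling(gammas):
    """Return (power fractions P_i/P, cutoff gamma_0) for full-power SNRs gamma_i > 0."""
    g = np.asarray(gammas, dtype=float)
    order = np.argsort(g)[::-1]                  # strongest channel first
    g_sorted = g[order]
    for k in range(len(g_sorted), 0, -1):        # try k active channels
        inv_gamma0 = (1.0 + np.sum(1.0 / g_sorted[:k])) / k
        if 1.0 / g_sorted[k - 1] <= inv_gamma0:  # weakest active channel is above cutoff
            break
    frac_sorted = np.zeros_like(g_sorted)
    frac_sorted[:k] = inv_gamma0 - 1.0 / g_sorted[:k]
    frac = np.empty_like(frac_sorted)
    frac[order] = frac_sorted                    # restore the original channel order
    return frac, 1.0 / inv_gamma0

gammas = [10.0, 5.0, 0.1]                        # hypothetical full-power SNRs
frac, gamma0 = water_filling(gammas)
g = np.asarray(gammas)

# Capacity per unit bandwidth, computed two ways (B = 1).
c_alloc = np.sum(np.log2(1.0 + frac * g))              # sum of log2(1 + P_i gamma_i / P)
c_closed = np.sum(np.log2(g[g >= gamma0] / gamma0))    # sum over active channels of log2(gamma_i / gamma_0)
print(frac, gamma0, c_alloc, c_closed)
```

For these example values the weakest channel falls below the cutoff and receives no power, while the two stronger channels receive 55% and 45% of the total; the two capacity expressions agree.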

• Now consider the case of Channel Unknown at Transmitter (Uniform Power Allocation).

• Suppose now that the receiver knows the channel but the transmitter does not. Without channel information, the transmitter cannot optimize its power allocation or input covariance structure across antennas.

• If the distribution of H follows the ZMSW channel gain model, there is no bias in terms of the mean or covariance of H.

• Thus, it seems intuitive that the best strategy should be to allocate equal power to each transmit antenna, resulting in an input covariance matrix equal to the scaled identity matrix: $\mathbf{R}_x = (\rho/M_t)\,\mathbf{I}_{M_t}$.

• It can be shown that under these assumptions this input covariance matrix indeed maximizes the mutual information of the channel.

• For an $M_t$-transmit, $M_r$-receive antenna system, this yields mutual information given by

$$I(\mathbf{X};\mathbf{Y}) = B \log_2 \det\left[\mathbf{I}_{M_r} + \frac{\rho}{M_t}\mathbf{H}\mathbf{H}^H\right]. \tag{10.13}$$

• Using the SVD of H, we can express this as

$$I(\mathbf{X};\mathbf{Y}) = \sum_{i=1}^{R_H} B \log_2\left(1 + \frac{\gamma_i}{M_t}\right),$$

where $\gamma_i = \sigma_i^2 \rho = \sigma_i^2 P/\sigma_n^2$ and $R_H$ is the number of nonzero singular values of H.

• The mutual information of the MIMO channel (10.13) depends on the specific realization of the matrix H, in particular its singular values $\{\sigma_i\}$.
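The determinant form (10.13) and its singular-value form can be compared numerically for a single channel realization. This is a minimal sketch, assuming NumPy, B = 1, and a randomly drawn channel; the function names `mi_det` and `mi_svd` and the parameter values are illustrative.

```python
import numpy as np

def mi_det(H, rho):
    """log2 det[I + (rho/Mt) H H^H] in bits/s/Hz (B = 1)."""
    Mr, Mt = H.shape
    A = np.eye(Mr) + (rho / Mt) * (H @ H.conj().T)
    _, logabsdet = np.linalg.slogdet(A)      # A is Hermitian positive definite
    return logabsdet / np.log(2.0)

def mi_svd(H, rho):
    """Sum over singular values of log2(1 + gamma_i / Mt), with gamma_i = sigma_i^2 rho."""
    Mt = H.shape[1]
    sigma = np.linalg.svd(H, compute_uv=False)
    gamma = sigma**2 * rho
    return np.sum(np.log2(1.0 + gamma / Mt))

rng = np.random.default_rng(0)               # fixed seed, illustrative only
Mr, Mt, rho = 2, 4, 10.0                     # hypothetical values
H = (rng.standard_normal((Mr, Mt)) + 1j * rng.standard_normal((Mr, Mt))) / np.sqrt(2)

i_det = mi_det(H, rho)
i_svd = mi_svd(H, rho)
print(f"det form: {i_det:.6f}  SVD form: {i_svd:.6f}")
```

`slogdet` is used rather than `det` followed by a logarithm only as a numerically safer choice for larger matrices; either gives the same value here.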

• In capacity with outage the transmitter fixes a transmission rate C, and the outage probability associated with C is the probability that the transmitted data will not be received correctly or, equivalently, the probability that the channel H has mutual information less than C.

• This probability is given by

$$P_{out} = p\left(\mathbf{H} : B \log_2 \det\left[\mathbf{I}_{M_r} + \frac{\rho}{M_t}\mathbf{H}\mathbf{H}^H\right] < C\right). \tag{10.14}$$

• Note that for fixed $M_r$, under the ZMSW model the law of large numbers implies that

$$\lim_{M_t \to \infty} \frac{1}{M_t}\mathbf{H}\mathbf{H}^H = \mathbf{I}_{M_r}. \tag{10.15}$$

• Substituting this into (10.13) yields that the mutual information in the asymptotic limit of large $M_t$ becomes a constant equal to $C = M_r B \log_2(1 + \rho)$.
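The law-of-large-numbers limit can be observed by drawing channels with a growing number of transmit antennas. The sketch below assumes NumPy, i.i.d. unit-variance complex Gaussian entries for the ZMSW model, and B = 1; the helper name `zmsw_channel`, the values of Mr and ρ, and the seed are illustrative.

```python
import numpy as np

def zmsw_channel(Mr, Mt, rng):
    """Draw an Mr x Mt matrix of i.i.d. zero-mean, unit-variance complex Gaussian gains."""
    real = rng.standard_normal((Mr, Mt))
    imag = rng.standard_normal((Mr, Mt))
    return (real + 1j * imag) / np.sqrt(2)

rng = np.random.default_rng(1)           # fixed seed, illustrative only
Mr, rho = 2, 10.0                        # hypothetical values
limit = Mr * np.log2(1.0 + rho)          # Mr log2(1 + rho), with B = 1

results = {}
for Mt in (2, 20, 2000):
    H = zmsw_channel(Mr, Mt, rng)
    G = (H @ H.conj().T) / Mt            # (1/Mt) H H^H, which approaches I_Mr
    deviation = np.max(np.abs(G - np.eye(Mr)))
    mi = np.linalg.slogdet(np.eye(Mr) + rho * G)[1] / np.log(2.0)
    results[Mt] = (deviation, mi)
    print(f"Mt={Mt:5d}  max|G - I|={deviation:.3f}  I={mi:.3f}  limit={limit:.3f}")
```

For small Mt the mutual information varies noticeably from draw to draw; for large Mt it settles near the constant limit regardless of the realization.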

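• Two important observations follow from the results in (10.14) and (10.15):

• As SNR grows large, capacity grows linearly with M = min{Mt, Mr} for any Mt and Mr.

• At very low SNRs transmit antennas are not beneficial: capacity only scales with the number of receive antennas, independent of the number of transmit antennas.

• Fading Channels

• Channel Known at Transmitter: Water-Filling

• With CSIT and CSIR the transmitter optimizes its strategy for each channel realization. Under a short-term power constraint, where every realization is allotted the same power P, the ergodic capacity is

$$C = E_{\mathbf{H}}\left[\max_{\mathbf{R}_x :\, \mathrm{Tr}(\mathbf{R}_x) = \rho} B \log_2 \det\left[\mathbf{I}_{M_r} + \mathbf{H}\mathbf{R}_x\mathbf{H}^H\right]\right] = E_{\mathbf{H}}\left[\max_{P_i :\, \sum_i P_i \le P} \sum_i B \log_2\left(1 + \frac{P_i \gamma_i}{P}\right)\right]. \tag{10.16}$$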

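• A less restrictive constraint is a long-term power constraint, where we can use different powers for different channel realizations subject to the average power constraint over all channel realizations.

• The ergodic capacity in this case is

$$C = \max_{\rho_{\mathbf{H}} :\, E_{\mathbf{H}}[\rho_{\mathbf{H}}] = \rho} E_{\mathbf{H}}\left[\max_{\mathbf{R}_x :\, \mathrm{Tr}(\mathbf{R}_x) = \rho_{\mathbf{H}}} B \log_2 \det\left[\mathbf{I}_{M_r} + \mathbf{H}\mathbf{R}_x\mathbf{H}^H\right]\right]. \tag{10.17}$$

• Channel Unknown at Transmitter: Ergodic Capacity and Capacity with Outage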

• Consider now a time-varying channel with random matrix H

known at the receiver but not the transmitter. The transmitter

assumes a ZMSW distribution for H.

• The two relevant capacity definitions in this case are ergodic

capacity and capacity with outage.

• Ergodic capacity defines the maximum rate, averaged over all

channel realizations, that can be transmitted over the channel

for a transmission strategy based only on the distribution of H.


• This leads to the transmitter optimization problem, i.e., finding the optimum input covariance matrix to maximize ergodic capacity subject to the transmit power constraint.

• Mathematically, the problem is to characterize the optimum $\mathbf{R}_x$ to maximize

$$C = \max_{\mathbf{R}_x :\, \mathrm{Tr}(\mathbf{R}_x) = \rho} E_{\mathbf{H}}\left[B \log_2 \det\left[\mathbf{I}_{M_r} + \mathbf{H}\mathbf{R}_x\mathbf{H}^H\right]\right], \tag{10.18}$$

where the expectation is with respect to the distribution on the channel matrix H, which for the ZMSW model has i.i.d. zero-mean, circularly symmetric, unit-variance entries.

• As in the case of scalar channels, the optimum input covariance matrix that maximizes ergodic capacity for the ZMSW model is the scaled identity matrix $(\rho/M_t)\,\mathbf{I}_{M_t}$. Thus the ergodic capacity is given by:

$$C = E_{\mathbf{H}}\left[B \log_2 \det\left[\mathbf{I}_{M_r} + \frac{\rho}{M_t}\mathbf{H}\mathbf{H}^H\right]\right]. \tag{10.19}$$

• The ergodic capacity of a 4x4 MIMO system with i.i.d. complex

Gaussian channel gains is shown in Figure 10.4.

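The expectation in (10.19) has no simple closed form for general antenna counts, but it can be estimated by averaging over random channel draws. The following is a rough Monte Carlo sketch, assuming NumPy, i.i.d. unit-variance complex Gaussian gains, uniform power allocation, and B = 1. The function name `ergodic_capacity`, the trial count, and the seed are illustrative, and the estimate carries sampling error.

```python
import numpy as np

def ergodic_capacity(Mr, Mt, snr_db, trials=2000, seed=0):
    """Monte Carlo estimate of E_H[log2 det(I + (rho/Mt) H H^H)] in bits/s/Hz."""
    rng = np.random.default_rng(seed)
    rho = 10.0 ** (snr_db / 10.0)
    shape = (trials, Mr, Mt)
    H = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)
    # Batched Gram matrices H H^H, one Mr x Mr matrix per trial.
    gram = H @ H.conj().transpose(0, 2, 1)
    A = np.eye(Mr) + (rho / Mt) * gram
    _, logabsdet = np.linalg.slogdet(A)      # one log-determinant per trial
    return np.mean(logabsdet) / np.log(2.0)

c11 = ergodic_capacity(1, 1, 10.0)           # single-antenna reference
c44 = ergodic_capacity(4, 4, 10.0)           # 4 x 4 system at the same SNR
print(f"1x1: {c11:.2f} bits/s/Hz   4x4: {c44:.2f} bits/s/Hz")
```

Sweeping `snr_db` over a range of values and plotting the result gives a curve of ergodic capacity against average SNR for the chosen antenna configuration.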

• Capacity with outage is defined similarly to the definition for static channels described previously, although now capacity with outage applies to a slowly varying channel where the channel matrix H is constant over a relatively long transmission time, then changes to a new value.

• As in the static channel case, the channel realization and corresponding channel capacity are not known at the transmitter, yet the transmitter must still fix a transmission rate to send data over the channel.

• For any choice of this rate C, there will be an outage probability associated with C, which defines the probability that the transmitted data will not be received correctly.

• The outage capacity can sometimes be improved by not allocating power to one or more of the transmit antennas, especially when the outage probability is high. This is because outage capacity depends on the tail of the probability distribution.

• With fewer antennas, less averaging takes place and the spread of the tail increases.
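Outage probability for a fixed rate, and the rate supported at a target outage probability, can both be read off the empirical distribution of the mutual information. The sketch below assumes NumPy, i.i.d. unit-variance complex Gaussian gains, uniform power allocation, and B = 1; the function names `mi_samples`, `outage_probability`, and `outage_capacity`, and the parameter values, are illustrative.

```python
import numpy as np

def mi_samples(Mr, Mt, snr_db, trials=5000, seed=0):
    """Draw mutual information values log2 det(I + (rho/Mt) H H^H), one per channel."""
    rng = np.random.default_rng(seed)
    rho = 10.0 ** (snr_db / 10.0)
    shape = (trials, Mr, Mt)
    H = (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)
    A = np.eye(Mr) + (rho / Mt) * (H @ H.conj().transpose(0, 2, 1))
    return np.linalg.slogdet(A)[1] / np.log(2.0)

def outage_probability(samples, rate):
    """Fraction of channel draws whose mutual information is below the fixed rate."""
    return np.mean(samples < rate)

def outage_capacity(samples, p_out):
    """Rate whose empirical outage probability is approximately p_out."""
    return np.quantile(samples, p_out)

samples = mi_samples(4, 4, 10.0)             # 4 x 4 system at 10 dB average SNR
c_out = outage_capacity(samples, 0.10)       # rate supported with 10% outage
p_check = outage_probability(samples, c_out)
print(f"rate at 10% outage: {c_out:.2f} bits/s/Hz  (empirical outage {p_check:.3f})")
```

Since the outage capacity is a low quantile of the distribution, it is governed by the lower tail of the mutual information rather than its mean.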

• The capacity with outage of a 4 × 4 MIMO system with i.i.d. complex Gaussian channel gains is shown in Figure 10.5.
