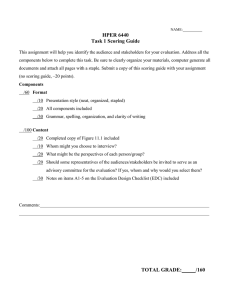

Automated Tools for Subject Matter Expert Evaluation of Automated
