found - MartinHilbert.net

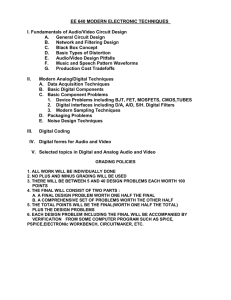
