
International Journal of Innovative

Computing, Information and Control

Volume 7, Number 7(B), July 2011

ICIC International ©2011

ISSN 1349-4198

pp. 4347–4362

WEB MIMICRY ATTACK DETECTION USING HTTP TOKEN CAUSAL CORRELATION

Ching-Hao Mao1 , Hahn-Ming Lee1,2 , En-Si Liu1 and Kuo-Ping Wu1

1

Department of Information Science and Information Engineering

National Taiwan University of Science and Technology

No. 43, Sec. 4, Keelung Rd., Taipei 106, Taiwan

{ d9415004; hmlee; m9615046; wgb }@mail.ntust.edu.tw

2

Research Center for Information Technology Innovation

Academia Sinica

128 Academia Road, Section 2, Nankang, Taipei 115, Taiwan

Received March 2010; revised November 2010

Abstract. Web mimicry attacks evade anomaly-based web intrusion detection systems (WIDSs) by inserting non-functional characters. In this study, we propose a web

mimicry anomaly detection method that uses HTTP token causal correlation. The proposed method extracts token sequences from web application HTTP requests and models

the token correlation based on conditional random fields (CRFs) in order to identify web

mimicry attacks. The CRF model is widely used for solving sequence labeling problems

and suitable for capturing the dependency among different tokens in a token sequence.

Since CRFs relax the strong independence assumptions that the other probabilistic sequence analysis methods (e.g., hidden Markov model) have, they can capture long-term

dependencies among the observed sequences of web tokens to improve the detection capability by observing significant attack patterns. The proposed method requires only HTTP

request information and can be easily plugged into existing intrusion detection systems.

Two datasets from “ECML/PKDD 2007’s Analyzing Web Traffic challenge” and real

world HTTP traffic data extracted from a private telecom company are used for an evaluation. The experiment results show that the proposed system performs well in the detection of both web mimicry attacks and general web application attacks even in heavily

intersecting cases.

Keywords: Web security, Mimicry attack, Conditional random field, Cross site script,

SQL injection

1. Introduction. Since many economic activities such as shopping and cyber banking

can be performed through web applications, web applications attract the attention of

hackers who break into computer systems with the aim of obtaining valuable information

[1,23]. The anomaly detection paradigm, which models normal behavior, is widely used

in protecting web application services and particularly useful for detecting novel and

unknown attacks. Attackers thus try to hide their malicious behavior by mimicking normal behavior to deceive an anomaly-based web intrusion detection system (WIDS). This type of attack behavior results in a high false negative rate for an anomaly-based WIDS, making it difficult to identify even known attacks. The term “mimicry attack”

was first introduced by Wagner et al. [21] to describe attacks that allow a sophisticated

attacker to craft malicious actions to evade a detection mechanism. Mimicry attacks also

exist at the network traffic application level, involving malicious web application behavior

used to evade WIDSs [12,15]. In this study, we use the term web mimicry attacks to

denote mimicry attacks involving the network traffic at a web application. Similar to

host or network mimicry attacks, web mimicry attacks usually use evasion techniques to


match a normal-appearing behavior model and make the injected malicious code look like normal web scripts from a statistical or probabilistic viewpoint.

Although the concept of web mimicry attacks is an extension of network/host mimicry attacks, web mimicry attacks differ from the mimicry attacks that target host-based [21] or payload-based [13] anomaly intrusion detection systems. These differences are the result

of the high variations in web environments. Web application traffic contains dynamic and

diverse information involving various languages, libraries, scripts and human concepts.

The high variation in web traffic makes it more difficult to build a normal behavior model.

Attackers can exploit the high variation in network environments to craft sophisticated

web mimicry attacks that appear to be normal web scripts. Consequently, a web anomaly

IDS requires a high variation tolerance mechanism to avoid being easily deceived by web

mimicry attacks.

Web mimicry attacks usually utilize several evasion techniques to transform common

attacks into more sophisticated attacks to evade detection. The three major types of

evasion techniques are as follows:

1. Substitution, which replaces an attack with another that is functionally equivalent,

for example, utilizing URL encoding to replace “ ′ or” with “%27%6F %72”.

2. Interchange, which replaces an attack with any valid permutation of the attack. For

example, in an SQL injection attack, the “ ′ or 4 = 4– –.” string is equal to “ ′ or 5

>– –.”

3. Insertion, which inserts normal characters or meaningless into an attack in order to

make the attack match the normal model of the anomaly detection system, as shown

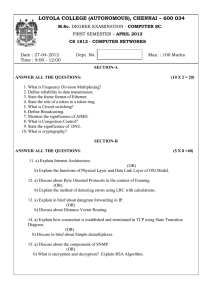

in Figure 1.

[Figure 1 diagram. Original pattern: A B C D. Insertion pattern: A B X Y Z C D.]

Figure 1. Demonstration of the insertion pattern produced in mimicry attacks (the filled circles denote the tokens inserted to mimic normal behavior and evade anomaly detection)

Among the above three evasion techniques, the insertion-type evasion technique poses the most intractable problem for both signature-based and anomaly-based detection mechanisms. Therefore, detecting insertion-type attacks could be helpful for identifying web mimicry attacks.

Many anomaly detection methods have been proposed to protect web applications,

such as N-gram [22], deterministic finite automaton (DFA) [12], Markov model [15,20]

and hidden Markov model (HMM) [8]. Raju et al. [7] give a comprehensive survey related

to the sequential pattern and clustering pattern for Web usage data. Ingham and Inoue

[11] proposed a framework for testing conventional web anomaly IDS algorithms and

showed the serious limitations of all approaches for detecting web mimicry attacks. They

proposed two important criteria for preventing web mimicry attacks: (1) a token-based

parsing mechanism and (2) robust token correlation modeling. The first criterion implies

that token-based algorithms should be used instead of character-based algorithms for

modeling the high-level structure in valid web application HTTP requests. The second

criterion shows that the relationship among the tokens is important for capturing the

semantics of valid HTTP requests. Conventional anomaly detectors do not usually apply


both of these criteria and, therefore, the anomaly scores are skewed by the insertion of

normal or irrelevant characters. Because these methods do not perfectly consider the

token correlation of the sequence structure in HTTP requests, a normal model built by

a conventional approach cannot adequately describe the normal behavior of valid HTTP

requests. Table 1 shows whether conventional methods can detect the three types of

evasion techniques. It should be noted that none of these methods addresses the insertion issue.

Table 1. Conventional methods for web anomaly detection system

| | Substitution | Interchange | Insertion | Limitation |
|---|---|---|---|---|
| Token-based N-gram | O | O | X | Weakness of similarity measure |
| DFA | O | O | X | Weakness of similarity measure |
| HMM | O | O | X | Strict independent assumption |
| Markov model | O | O | X | High false positive |

To detect web mimicry attacks, we must overcome the high variation of web traffic when modeling the token structures of web scripts in HTTP requests. Therefore, we propose a mechanism that applies conditional random fields (CRFs) to model the token correlation of the sequence structures in HTTP requests in order to detect insertion-type web mimicry attacks. HTTP requests are transformed into token sequences for profiling the proximity token correlation based on CRFs, which build probabilistic models [16]. In addition to using CRFs to model the token correlation of HTTP requests, the proposed method also recognizes subtle web mimicry attacks that involve the insertion of normal-like or meaningless characters. One advantage of CRFs is that they make it practical to represent the long-range dependencies of the tokens in a token sequence. Another advantage of CRFs is that labeling the current token depends not only on the adjacent tokens, but also on the whole web token sequence. Our approach can effectively identify web mimicry attacks on the basis of CRFs, and is also effective for different insertion ratios and for general web attacks. The experiment results indicate that modeling the correlation of HTTP requests based on CRFs is suitable for detecting web mimicry attacks.

We summarize the main contributions of the proposed approach as follows:

1. It exploits the advantage of CRFs to detect insertion web mimicry attacks. Furthermore, our approach is also effective in identifying general web attacks and different

insertion ratios.

2. Instead of building huge attack patterns, our approach can automatically build a

probabilistic model to identify web mimicry attacks.

3. The proposed method can be easily plugged into existing intrusion detection systems for identifying subtle web attacks.

The remainder of this paper is organized as follows: Section 2 describes the architecture

of the proposed method; Section 3 introduces the performance evaluation of the proposed

approach; finally, Section 4 presents the conclusion and discussion.

2. Problem Statement and Preliminaries. Token-based methods (e.g., DFA) are

widely applied in web application attack detection, but most token-based methods do not

adequately model token correlation in the presence of insertion. In this study, we propose capturing

many more token correlations to distinguish valid and malicious web application HTTP

requests. The proposed method extracts the token sequence from HTTP requests and

models the token correlation of token sequences based on CRFs in order to identify web

mimicry attacks, especially insertion cases. As shown in Figure 2, the system architecture


is composed of three modules: the Token Sequence Extractor, CRF-based Token Correlator and Web Mimicry Attack Detector. The Token Sequence Extractor is responsible

for transforming HTTP requests into token sequences. The CRF-based Token Correlator

uses CRFs to extract the proximity token correlation and labels token sequence for building the CRF model. The Web Mimicry Attack Detector is used to determine whether an

incoming token sequence is normal or is an attack.

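[Figure 2 diagram. Training phase: expert-labeled HTTP requests pass through the Token Sequence Extractor and the CRFs-based Token Correlator, which produces the CRFs Parameters Profile. Testing phase: new HTTP requests pass through the Token Sequence Extractor to the Web Mimicry Attacks Detector, which uses the profile to answer "Attack?".]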

Figure 2. Proposed system architecture. HTTP requests are considered as

token sequences, and the token correlation is modeled through conditional

random fields in order to identify web mimicry attacks.

Additionally, the system utilizes a data source and an intermediate profile, called labeled

HTTP requests and CRF parameter profile, for the construction of a CRF-based token

correlation model. The labeled HTTP requests represent the HTTP requests classified by

domain experts in advance. CRF Parameter Profile records the parameters of the CRF

model and is used to determine the label of the predicted token sequences with the Web

Mimicry Attack Detector.

2.1. Token sequence extractor. The Token Sequence Extractor is used to convert an HTTP request into a token sequence in order to model the token correlation. The conventional method [12] usually extracts tokens according to the HTTP RFC standard [5]. However, it is difficult to parse HTTP traffic in a straightforward way because most web browsers and web robots do not fully follow the RFC standard. To overcome this problem, we utilize a special symbolic HTTP parsing mechanism to deal with complicated HTTP requests. The high variation of HTTP traffic also makes it more difficult to model the normal behavior of HTTP requests. Eliminating this high variability in token sequences is an efficient way to model the potentially meaningful token correlation, as well as to gain insight into the semantic meaning of an HTTP request. The tokens consist of words (letters and numbers) and special symbols. Algorithm 1 accepts HTTP requests as inputs and generates the corresponding token sequences as outputs. An HTTP request


consists of a series of single characters, which are sequentially extracted from the HTTP

request. Special symbols are used in an HTTP request to delimit the token boundary,

and these special symbols are output as tokens. Continuous letters and numbers without

special symbols are output as a token. The detailed pseudo code is described in Algorithm

1. Figure 3 shows an example of token sequence extraction. High variability generalizing

and obfuscated character decoding shown in Algorithm 1 are next described in detail.

Figure 3. Example of token sequence extractor. It segments an HTTP

request into a token sequence in order to find the token correlation.
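The extraction rule described above (special symbols delimit and become tokens, runs of letters and digits become one token) can be sketched as follows. This is only an illustration, not the authors' Algorithm 1: the function name `extract_tokens` is hypothetical, and dropping whitespace instead of emitting it as a token is an assumption.

```python
def extract_tokens(request: str) -> list[str]:
    """Split a request string into word tokens and special-symbol tokens."""
    tokens = []
    word = []  # characters of the word currently being collected
    for ch in request:
        if ch.isalnum():
            word.append(ch)
            continue
        # Any non-alphanumeric character ends the current word.
        if word:
            tokens.append("".join(word))
            word = []
        # Assumption: whitespace only separates tokens and is not kept.
        if not ch.isspace():
            tokens.append(ch)
    if word:
        tokens.append("".join(word))
    return tokens


print(extract_tokens("id=1' or 1=1--"))
```

Each special symbol becomes its own token, so the trailing `--` of the SQL comment yields two `-` tokens, in line with the token rows shown in Figures 4 and 5.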

2.1.1. High variability generalizing. High variability generalizing is used to reduce the

high variability in HTTP requests in order to extract significant tokens from token sequences. Ingham et al. [12] mentioned that high variability universally exists in HTTP

requests, because HTTP traffic contains not only protocols and parameters but also various languages, libraries, scripts and human concepts. In an anomaly detection system,


this high variability makes it difficult to profile normal behavior from normal HTTP requests. Ingham et al. [12] proposed parsing heuristics to generalize the high variability in

HTTP requests. We eliminate or transform the non-meaningful or non-informative tokens

in token sequences to reduce the impact from variability through the use of some parsing

heuristics, as shown below:

• All the upper case letters in HTTP requests are rewritten as lower case letters [12],

e.g., “HTTp://wWw.GooGLe.COM” is transformed into “http://www.google.com”.

• IP addresses in HTTP requests are replaced with a placeholder string, e.g., “118.169.1.1” is transformed into “IP”.

• Dates in HTTP requests are parsed and replaced with a placeholder string, e.g., “2009/9/5” is transformed into “TIME”.

• Since hash values in literal strings are mostly meaningless, we remove them from HTTP requests, e.g., “hash value:0934394302430” is transformed into “hash”.

• Redundant Characters Remover: this removes the redundant characters that are inserted in attack strings without making the attacks invalid, e.g., “or+++1+++ = +++1” is transformed into “or 1 = 1”.
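A rough sketch of some of these heuristics is given below. The regular expressions, the function name `generalize`, and the decision to treat runs of `+` as redundant separators are assumptions made for illustration; the hash-value heuristic is omitted because the paper does not say how hash values are recognized.

```python
import re

# Hypothetical patterns; the paper does not specify how IPs and dates are matched.
IP_RE = re.compile(r"\b\d{1,3}(?:\.\d{1,3}){3}\b")
DATE_RE = re.compile(r"\b\d{4}/\d{1,2}/\d{1,2}\b")
PLUS_RUN_RE = re.compile(r"\++")
SPACE_RUN_RE = re.compile(r"\s+")


def generalize(request: str) -> str:
    """Apply case folding, IP/date placeholders and redundant-character removal."""
    text = request.lower()                # upper case -> lower case
    text = IP_RE.sub("IP", text)          # IP addresses -> "IP"
    text = DATE_RE.sub("TIME", text)      # dates -> "TIME"
    text = PLUS_RUN_RE.sub(" ", text)     # runs of "+" -> one space (assumption)
    text = SPACE_RUN_RE.sub(" ", text)    # collapse repeated whitespace
    return text.strip()


print(generalize("or+++1+++ = +++1"))
```

The placeholders are substituted after lower-casing so that they survive as upper-case markers, matching the examples in the list.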

2.1.2. Obfuscated characters decoding. Obfuscated characters decoding is used to decode characters that have been obfuscated by encoding techniques, such as URL encoding and UTF-8 or UTF-16 encoding. Even though encoding techniques are often used in a legitimate way, they can also be applied to craft web mimicry attacks. Hackers utilize these encoding techniques to disguise malicious HTTP requests as normal-like HTTP requests while retaining their malicious intention. The obfuscated characters may therefore hide the meaning or function of the tokens. URL encoding and Unicode encoding are two types of encoding techniques widely applied in web applications to encode/decode strings in a web page. Putting raw text in a query might result in an ambiguous URL. For example, when a browser sends an HTTP request with form data to a web server, the spaces are usually replaced with the “+” character, and unsafe ASCII characters are transformed into two-digit hexadecimal codes preceded by a “%” character. Unicode collects the characters of the major languages in use today in a unified code space and provides a unique number to represent every character across operating system platforms. Attackers can utilize Unicode encoding to replace malicious text with Unicode code points and hide their malicious intention. Therefore, it is necessary to decode the obfuscated characters to capture their semantic meaning.
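As a minimal sketch of URL decoding, the snippet below uses Python's standard `urllib.parse.unquote_plus`, which converts `+` to a space and `%XX` escapes to characters. The function name `decode_obfuscated` and the repeated-decoding loop (to undo double encoding) are assumptions, not part of the paper; non-standard forms such as `%uXXXX` are not handled here.

```python
from urllib.parse import unquote_plus


def decode_obfuscated(text: str, max_rounds: int = 3) -> str:
    """Percent-decode a string, repeating a few times to undo nested encoding."""
    for _ in range(max_rounds):
        decoded = unquote_plus(text)
        if decoded == text:  # nothing left to decode
            break
        text = decoded
    return text


print(decode_obfuscated("%27%20or%201%3D1"))
```

Decoding before tokenization means the same attack yields the same token sequence whether or not it was encoded.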

2.2. CRFs-based token correlator. The CRF-based Token Correlator utilizes CRFs to construct a CRF-based correlation model that represents the token correlation of the token sequences. This module accepts the labeled token sequences as input from the Token Sequence Extractor. Let X be a random variable over the token sequences to be labeled and Y be a random variable over the corresponding label sequences. There are two advantages to using CRFs to model the token correlation from web script sequences. First, a CRF is a discriminative model that directly models the conditional distribution p(Y | X) instead of the joint distribution p(Y, X), as a generative model does. The primary limitation of a generative model is that it requires modeling the marginal distribution p(X). It is very difficult to estimate this marginal distribution because there is usually a high dependency among the different tokens in a token sequence X. This means that when modeling p(X), it is necessary to enumerate all the possible token sequences, yet the amount of training data is often insufficient. Second, CRFs determine the label of the current token based on the past and future tokens instead of merely considering the


current token or previous tokens. There are two components in this module, called the

Feature Template Configurator and CRF Model Constructor.

2.2.1. Feature template configurator. The Feature Template Configurator sets the feature template for constructing a CRF-based token correlation model based on expert knowledge. A feature template describes the feature functions that are used to train a CRF model. The feature function defines the types of correlations we want to capture, such as the relation between neighboring tokens or the relation between two tokens at different distances. In this paper, we mainly capture the correlation between neighboring tokens in token sequences to construct a CRF model. Figure 4 displays the features used in the feature template configuration. Figure 5 shows the template used to define a regular format for training and testing data.

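[Figure 4 tables. Input data: column j = 0 holds the token x_i, column j = 1 holds the expanded feature, and y_i is the label.]

| i | x_i (j = 0) | Expanded feature (j = 1) | y_i |
|---|---|---|---|
| 0 | ' | ' | SQL injection |
| 1 | or | Key | SQL injection |
| 2 | 1 | Var | SQL injection |
| 3 | = | = | SQL injection |
| 4 | 1 | Var | SQL injection |
| 5 | - | - | SQL injection |
| 6 | - | - | SQL injection |

[Features f_ij for the current token x_3, addressed as [row offset, column].]

| i | x_i (j = 0) | Expanded feature (j = 1) | y_i |
|---|---|---|---|
| 1 | or [-2,0] | Key [-2,1] | SQL injection |
| 2 | 1 [-1,0] | Var [-1,1] | SQL injection |
| 3 | = [0,0] | = [0,1] | SQL injection |
| 4 | 1 [+1,0] | Var [+1,1] | SQL injection |
| 5 | - [+2,0] | - [+2,1] | SQL injection |

Figure 4. Features used in templates configuration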



[Figure 5 table. Input data: ' or 1 = 1 - -. Feature template: features f_ij for x_i, addressed as [row offset, column] relative to the current token x_3.]

| i | X_i (tokens), j = 0 | Expanded feature, j = 1 | Y_i (labels) |
|---|---|---|---|
| 0 | ' [-3,0] | ' [-3,1] | SQL injection |
| 1 | or [-2,0] | Key [-2,1] | SQL injection |
| 2 | 1 [-1,0] | Var [-1,1] | SQL injection |
| 3 | = [0,0] | = [0,1] | SQL injection |
| 4 | 1 [+1,0] | Var [+1,1] | SQL injection |
| 5 | - [+2,0] | - [+2,1] | SQL injection |
| 6 | - [+3,0] | - [+3,1] | SQL injection |

Figure 5. Example of feature template for training by CRFs. This template represents the proximity relations for training and testing data.
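The [row offset, column] addressing in Figure 5 can be illustrated with a small sketch that collects the features in a window around a given token. The names `expand_features` and `PAD`, and the use of a padding value for offsets outside the sequence, are assumptions for illustration; the bracket notation resembles the `%x[row,col]` macros of CRF++ feature templates, but this code does not call CRF++.

```python
# Rows of the worked example: (token, expanded feature).
# Every row carries the label "SQL injection" in the figure.
ROWS = [("'", "'"), ("or", "Key"), ("1", "Var"), ("=", "="),
        ("1", "Var"), ("-", "-"), ("-", "-")]

PAD = "_PAD_"  # hypothetical filler for offsets that fall outside the sequence


def expand_features(rows, i, window=3):
    """Return {"[offset,column]": value} for the tokens around position i."""
    features = {}
    for offset in range(-window, window + 1):
        pos = i + offset
        tag = "0" if offset == 0 else f"{offset:+d}"  # "-2", "0", "+1", ...
        for column in (0, 1):
            inside = 0 <= pos < len(rows)
            features[f"[{tag},{column}]"] = rows[pos][column] if inside else PAD
    return features


for key, value in expand_features(ROWS, 3).items():
    print(key, value)
```

With `i = 3` (the `=` token) and a window of 3, the result reproduces the fourteen cells of the Figure 5 table.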

2.2.2. CRF model constructor. This component constructs a CRF-based token correlation model and estimates the essential parameters of this CRF model. Given a token sequence of length n, a random variable over the token sequence is defined as $X = (x_1, x_2, \ldots, x_n)$, and a random variable over the corresponding label sequence is denoted as $Y = (y_1, y_2, \ldots, y_n)$. CRFs provide a probabilistic graphical model for calculating the probability of Y globally conditioned on X, as shown in Lafferty et al. [16]. We first define $G = (V, E)$ as a graph composed of vertices V and edges E such that $Y = (Y_v)_{v \in V}$, i.e., Y is indexed by the vertices of the graph G. Then (X, Y) is a CRF if the random variables $Y_v$, when conditioned on X, obey the Markov property with regard to the graph: $p(Y_v \mid X, Y_w, w \neq v) = p(Y_v \mid X, Y_w, w \sim v)$, where $w \sim v$ means that w and v are neighbors in G. In our proposed approach, we use a simple sequence model. That is, the joint distribution over the label sequence Y given X has the form:

$$p_\theta(y \mid x) \propto \exp\Big( \sum_{e \in E, k} \lambda_k f_k(e, y|_e, x) + \sum_{v \in V, k} \mu_k g_k(v, y|_v, x) \Big), \tag{1}$$

where x is the token sequence, y is the corresponding label sequence, and $y|_S$ is the set of components of y associated with the vertices in a subgraph S. The features $f_k$ and $g_k$ are given in advance and fixed. To construct the CRF model, we need to find the essential parameters $\theta = (\lambda_1, \lambda_2, \ldots; \mu_1, \mu_2, \ldots)$ from the training data $D = \{(x^i, y^i)\}_{i=1}^{N}$ according to the empirical distribution $\tilde{p}(x, y)$.
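To make Equation (1) concrete, the following sketch evaluates it for a tiny linear-chain model by brute-force enumeration of all label sequences. The weights, the two labels, and the indicator-style edge and vertex features are made up for illustration and are not the trained parameters of the paper; enumeration is only feasible for very short sequences.

```python
import itertools
import math

LABELS = ("normal", "attack")

# Hypothetical weights (lambda_k for edge features, mu_k for vertex features).
EDGE_WEIGHTS = {("attack", "attack"): 1.0, ("normal", "normal"): 1.0}
VERTEX_WEIGHTS = {("'", "attack"): 2.0, ("or", "attack"): 1.5, ("id", "normal"): 2.0}


def score(tokens, labels):
    """Sum of weighted edge and vertex features: the exponent in Equation (1)."""
    total = 0.0
    for i, (tok, lab) in enumerate(zip(tokens, labels)):
        total += VERTEX_WEIGHTS.get((tok, lab), 0.0)
        if i > 0:
            total += EDGE_WEIGHTS.get((labels[i - 1], lab), 0.0)
    return total


def conditional_probability(tokens, labels):
    """p(y | x): exp(score) normalized over every possible label sequence."""
    z = sum(math.exp(score(tokens, cand))
            for cand in itertools.product(LABELS, repeat=len(tokens)))
    return math.exp(score(tokens, tuple(labels))) / z


tokens = ["'", "or"]
for cand in itertools.product(LABELS, repeat=len(tokens)):
    print(cand, round(conditional_probability(tokens, cand), 3))
```

Because the normalization runs over whole label sequences, each token's label is scored jointly with its neighbors, which is the property the correlator relies on.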

2.3. Web mimicry attacks detector. The goal of the Web Mimicry Attacks Detector

is to label each token of a token sequence as normal or attack, with a confidence score of

prediction given to each label based on the CRF-based token correlation model. Then,

we can determine whether a token sequence is an attack or not according to the total

confidence score. This module accepts the predicted token sequences from the Token

Sequence Extractor as input. This module contains two components: the CRF-based

Token Sequence Labeler and Mimicry Attack Identifier.


2.3.1. Token sequence labeler. The CRF-based Token Sequence Labeler is used to label each token of a predicted token sequence as normal or attack, with a confidence score, based on the CRF-based token correlation model. This component uses the CRF-based token correlation model and the Viterbi algorithm [6] to determine the label sequence Y with the highest probability (the MAP estimate) for the predicted token sequence X. Meanwhile, the CRF-based token correlation model estimates the likelihood of each label for a predicted token; the label with the maximum likelihood becomes the label of that token, and its likelihood serves as the confidence score.
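A compact sketch of Viterbi decoding for a linear-chain model is shown below. The weights and the two-label set are hypothetical, and the sketch returns only the most probable label sequence; per-token confidence scores, as used by the paper, would require additional computation (for example forward-backward marginals) that is not shown.

```python
def viterbi(tokens, labels, vertex_w, edge_w):
    """Return the highest-scoring label sequence for a linear-chain model."""
    if not tokens:
        return []
    # best[i][y]: best score of any labeling of tokens[:i + 1] that ends in y
    best = [{y: vertex_w.get((tokens[0], y), 0.0) for y in labels}]
    back = []  # back[i][y]: best previous label when token i + 1 is labeled y
    for tok in tokens[1:]:
        scores, pointers = {}, {}
        for y in labels:
            prev = max(labels, key=lambda p: best[-1][p] + edge_w.get((p, y), 0.0))
            scores[y] = (best[-1][prev] + edge_w.get((prev, y), 0.0)
                         + vertex_w.get((tok, y), 0.0))
            pointers[y] = prev
        best.append(scores)
        back.append(pointers)
    # Follow the back-pointers from the best final label.
    path = [max(labels, key=lambda y: best[-1][y])]
    for pointers in reversed(back):
        path.append(pointers[path[-1]])
    return path[::-1]


# Hypothetical weights for illustration only.
VERTEX_W = {("'", "attack"): 2.0, ("or", "attack"): 1.5, ("id", "normal"): 2.0}
EDGE_W = {("attack", "attack"): 1.0, ("normal", "normal"): 1.0}

print(viterbi(["id", "'", "or"], ("normal", "attack"), VERTEX_W, EDGE_W))
```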

2.3.2. Mimicry attacks identifier. The Mimicry Attack Identifier accepts both token sequences and the corresponding confidence scores as input for determining whether the

incoming HTTP request is normal or an attack. Since the CRF-based Token Correlator gives a confidence score for each category (normal and attacks), we propose an attack

identifying mechanism that uses the confidence score for categorizing the incoming HTTP

token sequence. This mechanism determines the label with the maximum total confidence

score. The notations are described as follows, and the pseudo code is shown in Algorithm

2.

Notations. Given a label sequence S = (s1, s2, . . ., s|S|) that corresponds to the predicted token sequence, |S| is the length of the label sequence and ci represents the confidence score of the ith label in the label sequence. N corresponds to the |A| types of attack labels that appear in the label sequence, and (a1, a2, . . ., a|A|) represents these attack labels. Ck represents the sum of the confidence scores of the kth attack label in the label sequence.

In Algorithm 2, the input is a token sequence from the Token Sequence Labeler, and

each token of the token sequence has a corresponding label and confidence score. The

output would determine the label for the entire token sequence. We utilize the confidence

scores of the label sequence to calculate the total confidence scores of the labels that

appear in the label sequence, and then determine the relevant label as the one with the

maximum total confidence score. If all the labels for each token in a token sequence are

normal, the entire token sequence under the HTTP request could be regarded as normal.

Otherwise, we calculate all the total confidence scores of the attack labels that exist in

the token sequence. To get the total confidence score for an attack label, we sum the

confidence scores of each type of attack label in the token sequence. Finally, we set the


label for the entire token sequence as the attack label whose total confidence score is the

maximum of all the attack labels in the token sequence. Figure 6 gives an example for

Algorithm 2.

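[Figure 6 diagram. (a) Token sequence T1 T2 T3 T4 T5 with label sequence N N N N N; result: NORMAL. (b) Token sequence T1 T2 T3 T4 T5 T6 with label sequence N A1 A1 A2 A2 N and confidence scores f1, f2, f3, f4 for the four attack-labeled tokens; since f1 + f2 < f3 + f4, the result is A2.]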

Figure 6. Two cases of decision mechanism for determining the label for

entire token sequence from Algorithm 2. (a) If labels for all the tokens

are normal, label for entire token sequence is normal. (b) Label of entire

token sequence considered as attack label whose total confidence score is

maximum of total confidence scores of all attack labels in token sequence.
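The decision rule described for Algorithm 2 can be sketched as follows. This is an illustrative reading of the prose and Figure 6, not the authors' pseudo code; the function name `identify`, the label strings, and the numeric confidence values are hypothetical.

```python
from collections import defaultdict


def identify(labels, confidences, normal="N"):
    """Label a whole token sequence from its per-token labels and confidences."""
    totals = defaultdict(float)  # attack label -> summed confidence
    for label, conf in zip(labels, confidences):
        if label != normal:
            totals[label] += conf
    if not totals:               # every token was labeled normal
        return normal
    return max(totals, key=totals.get)


# Case (a): all tokens normal. Case (b): A1 totals 0.7, A2 totals 1.1.
print(identify(["N"] * 5, [0.9] * 5))
print(identify(["N", "A1", "A1", "A2", "A2", "N"], [0.9, 0.3, 0.4, 0.6, 0.5, 0.8]))
```

Note that the confidence of normal tokens is ignored as soon as any attack label appears, which matches case (b) of Figure 6.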

3. Experiment Design and Results. This section discusses four experiments that

were performed to evaluate the effectiveness of our proposed approach for identifying

web mimicry attacks. We found that the proposed approach is effective and outperforms the baseline methods on insertion web mimicry attacks with different insertion ratios and on general web attacks. We also compared the proposed method with baseline methods such as TFIDF-naïve Bayes (TFIDF-NB) [3,10] and the hidden Markov model (HMM)

[17] to analyze the strength of the proposed method from the perspective of its profiling

capability. The three goals for the experiments used for evaluating the performance

of our proposed approach are described as follows. First, we intended to evaluate the

performance on insertion based web mimicry attacks. Meanwhile, different insertion ratios

were analyzed to determine whether they would be helpful for evading detection under

our approach. Finally, we demonstrated that the proposed approach was not only helpful

for insertion based web mimicry attacks but also for general web attacks.

3.1. Datasets. Two datasets were used for evaluations in the experiments: PKDD07

and a dataset obtained from Chunghwa Telecom Laboratories 1 . First, the performance of

our proposed approach when detecting PKDD 2007’s “Analyzing Web Traffic challenge”

(PKDD07) dataset [4] was evaluated. The PKDD 2007 dataset extracted from HTTP

query logs is composed of 50000 pre-classified examples in a valid (normal query) category

and 7 attack categories. Each example in the dataset is completely independent of the

others and has a unique id, context (describes what environment the query runs in), class

(the category for the sample as classified by an expert), and the content of the query

itself. Additionally, the important element “attackInterval” indicates the location of the

attack sequences. There are eight types of labels in the PKDD07 dataset including one

valid and seven types of attacks: (1) normal query (Valid), (2) cross-site scripting (XSS),

(3) SQL injection (SQL), (4) LDAP injection (LDAP), (5) XPATH injection (XPATH),

(6) path traversal (PATH), (7) command execution (OSC) and (8) SSI attacks (SSI).

1 Chunghwa Telecom, http://www.cht.com.tw/


PKDD07 dataset was divided, with 70% (# 24504) used for a training set and 30%

(# 10502) for a testing set. The training set was used as learning data to construct our

proposed model. The testing set was used to generate three types of test datasets D1,

D2 and D3 for effectiveness evaluations. The data in D3 was extracted from PKDD07

to represent general web attacks. D1 contained samples that were identified as insertion

web mimicry attacks by domain knowledge from D3. D2 contained samples whose class

was SQL Injection from D1 and were crafted as insertion-type SQL Injection attacks by

utilizing different insertion ratios. Table 2 shows the distribution of each dataset in our

experiments.

Table 2. Distribution of datasets (D2 is the number of interjection attacks and D1 is the number of testing instances) (LDAP: LdapInjection, OSC: OSCommand, PATH: Path Traversal, SQL: SqlInjection, XPATH: XPathInjection)

| Type | Total Number | Training Number | (D1) | (D2) |
|---|---|---|---|---|
| Valid | 35006 | 24504 | 10502 | 0 |
| LDAP | 2279 | 1596 | 683 | 0 |
| OSC | 2254 | 1578 | 676 | 1036 |
| PATH | 2262 | 1584 | 678 | 1764 |
| SQL | 2015 | 1411 | 604 | 238 |
| SSI | 16 | 12 | 4 | 0 |
| XPATH | 2279 | 1596 | 683 | 0 |
| XSS | 1825 | 1278 | 547 | 0 |

The second dataset was obtained from the access logs of the web servers at Chunghwa Telecom Laboratories, a telecom company. We took the ordinary access logs as normal training and testing datasets. To generate the access logs of XSS attacks, we used tools and websites that produce XSS attack strings. For example, CAL9000 [18] is an OWASP project that provides a collection of web application security testing tools; it provided the flexibility and functionality that we needed to generate the XSS attacks. In addition, RSnake [19] provides an XSS cheat sheet, which contains numerous XSS attack strings. We wrote some web pages, launched the XSS attacks against them, and collected the access logs of the XSS attacks.

3.2. Performance measurements and tools. A confusion matrix [14] was used to measure the precision and recall in our experiments. A confusion matrix records the actual classes against the classes predicted by a classification model. In our experiments, the CRF toolkit CRF++ [2] was utilized to implement the proposed approach. For comparison with other methods, we utilized the open-source WEKA toolkit [9], written in Java, and the Matlab-based HMM Toolbox [17] to evaluate the performance.
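For reference, precision and recall for one class can be computed from the confusion-matrix counts as in the sketch below. The function name and the toy label lists are made up for illustration and are not results from the paper.

```python
def precision_recall(actual, predicted, positive):
    """Precision and recall of one class from paired actual/predicted labels."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)  # true positives
    fp = sum(a != positive and p == positive for a, p in pairs)  # false positives
    fn = sum(a == positive and p != positive for a, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Toy data, not taken from the experiments.
actual = ["SQL", "SQL", "Valid", "Valid", "SQL"]
predicted = ["SQL", "Valid", "SQL", "Valid", "SQL"]
print(precision_recall(actual, predicted, "SQL"))
```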

3.3. Experiment results. Four experiment results of finding web mimicry attacks and

a comparison with baseline methods are shown in this section. The first experiment

evaluated the accuracy of identifying insertion web mimicry attacks. The second

experiment showed that the proposed approach can identify web mimicry attacks with

different insertion ratios. The third experiment showed that the proposed approach was

also effective for general web attacks. The fourth experiment demonstrated the experiment

result for the Chunghwa Telecom dataset.


3.3.1. Effectiveness analysis. This experiment compared the proposed approach with
TFIDF-NB and HMM on the insertion web mimicry attack dataset D1. Figure 7 shows the
precision and recall rates of each method on this dataset. Our approach identifies
insertion mimicry attacks effectively and outperforms the other methods. The results
indicate that using a CRF to model the sequence structure of HTTP requests helps to
identify insertion mimicry attacks, especially for OSCommand, PathTraversal and
SQLInjection, which have more complex attack mechanisms.
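To make the sequence modeling step more concrete, the sketch below shows one way token sequences could be serialized into the column format read by CRF++: one token per line, whitespace-separated columns with the label last, and an empty line between sequences. The token names, the labels "N" and "A", and the feature template are hypothetical choices for illustration and are not the configuration used in the experiments.

```python
def to_crfpp(sequences):
    """Serialize labeled token sequences into CRF++ column format.

    `sequences` is a list of sequences; each sequence is a list of
    (token, label) pairs. Columns are tab-separated and sequences are
    separated by an empty line.
    """
    blocks = []
    for seq in sequences:
        rows = []
        for token, label in seq:
            # CRF++ splits columns on whitespace, so tokens must not contain any.
            if not token or any(ch.isspace() for ch in token):
                raise ValueError(f"invalid token: {token!r}")
            rows.append(f"{token}\t{label}")
        blocks.append("\n".join(rows))
    return "\n\n".join(blocks) + "\n"


# Hypothetical CRF++ feature template: unigram features on the previous,
# current and next token, plus a bigram feature over adjacent labels.
CRFPP_TEMPLATE = "U00:%x[-1,0]\nU01:%x[0,0]\nU02:%x[1,0]\nB\n"

# Hypothetical token names and labels ("N" = normal, "A" = attack).
training = [
    [("PATH", "N"), ("PARAM", "N"), ("VALUE", "N")],
    [("PATH", "N"), ("PARAM", "N"), ("QUOTE", "A"), ("SQL_KEYWORD", "A")],
]
print(to_crfpp(training), end="")
```

A file in this format, together with a template file, is what the CRF++ command line tools `crf_learn` and `crf_test` take as input.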

[Figure 7 plots, panels (a) Precision and (b) Recall: vertical axis from 0 to 1; series Ours, TFIDF-NB, HMM and ZERO; attack types OSCommand, PathTraversal and SQL injection.]

Figure 7. Effectiveness analysis of identifying insertion web mimicry attacks: (a) precision and (b) recall. Our proposed method performed better
than the other two baseline approaches (i.e., naïve Bayes and hidden Markov
model) in different types of web application attacks.

3.3.2. Tolerance analysis for insertion. The goal of this experiment was to show the
tolerance of our method to different insertion ratios using the data from D2. We randomly
inserted normal characters at different ratios into attack strings extracted from the SQL
Injection samples of web mimicry attacks in D1.

The insertion ratio is the ratio of the length of the inserted string to the length of the
original attack string. We evaluated the performance for three insertion ratios. WEKA and
the HMM toolbox were used to obtain the TFIDF-NB and HMM results. Figure 8 shows the
recall rate of the proposed approach and the other methods on D2, which contained
different insertion ratios. Two conclusions follow from the experimental results. First,
our approach maintains higher performance than the other methods in the precision and
recall measurements. Second, the performance decline of the proposed approach was smaller
than that of the other methods, which means that a high insertion ratio had a smaller
impact on the proposed approach than it did on the others.
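The insertion procedure can be sketched as follows. This is a minimal illustration, assuming that padding characters are drawn uniformly from lowercase letters and placed at uniformly random positions; the function names, the alphabet, the sample attack string and the ratio of 0.5 are all hypothetical and are not the settings used to build D2.

```python
import random
import string


def insert_padding(attack, ratio, alphabet=string.ascii_lowercase, rng=None):
    """Insert round(len(attack) * ratio) random characters into `attack`.

    The original characters keep their relative order, so the attack
    string remains a subsequence of the padded result.
    """
    if rng is None:
        rng = random.Random()
    n_insert = round(len(attack) * ratio)
    chars = list(attack)
    for _ in range(n_insert):
        # randint is inclusive, so position len(chars) appends at the end.
        pos = rng.randint(0, len(chars))
        chars.insert(pos, rng.choice(alphabet))
    return "".join(chars)


def insertion_ratio(original, padded):
    """Length of the inserted material divided by the original length."""
    return (len(padded) - len(original)) / len(original)


attack = "UNION SELECT password FROM users"
padded = insert_padding(attack, 0.5, rng=random.Random(7))
print(padded)
print(insertion_ratio(attack, padded))
```

Passing a seeded `random.Random` instance makes the padded samples reproducible, which is convenient when the same test set has to be scored by several detectors.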

[Figure 8 plot: vertical axis Recall (0.45 to 1); series Ours, TFIDF-NB and HMM; horizontal axis pad1, pad5 and pad10.]

Figure 8. Recall rate of identifying web mimicry attacks, where meaningless characters were inserted at different insertion ratios

3.3.3. Performance analysis in general web attacks. This experiment used D3 to show
that the proposed approach is effective at identifying not only insertion web mimicry
attacks but also general web attacks. We again used a confusion matrix to evaluate the
performance of the proposed approach, TFIDF-NB and HMM on D3. Figure 9 shows the
precision and recall rates of the proposed approach and the other methods on eight types
of data samples in testing data D3. The proposed approach was more effective than the
other methods at identifying general web attacks.

3.3.4. Performance analysis on real dataset. This experiment evaluated the proposed
approach on a real world dataset. Figure 10 shows that the proposed approach could
identify all the XSS attacks in the Chunghwa Telecom dataset. Our approach models the
structure of both normal data and malicious attacks, which allows it to distinguish XSS
attacks from benign web applications. Although the recall of HMM was as good as that of
our proposed mechanism, our approach still performed better than HMM overall because it
considers both forward and backward causal correlation. The naïve Bayes model had the
lowest precision and recall, demonstrating that considering only the occurrence of script
tokens cannot clearly differentiate attacks from benign applications in web script
sequences.

4. Discussion. We discuss the computation and deployment analysis for our proposed

approach in more detail.


[Figure 9 plot: vertical axis from 0.5 to 1; series Our Approach, TF-IDF+NB and HMM; categories Valid, OSC, Path, SQL and XSS.]

Figure 9. F-measure for identifying general web attacks. The average

performance of the proposed approach was better than that of the baseline

methods for detecting general web attacks.

4.1. Computation analysis. The computation in the proposed approach consists of two
parts: CRF token correlation training and token extraction. The discriminative version of
the CRF can be computed efficiently in $O(ml^3)$, where m is the number of productions of
the grammar and l is the length of the sequence. In this application, the token length of
an HTTP request/response is around 5 to 10 on average, and the number of productions of
the grammar, that is, the token alphabet size in our work, is around 40 to 50. With
m = 50 and l = 10, $ml^3 = 5 \times 10^4$, so the approach does not impose a heavy load
and remains practical in a real environment. The token extraction process, in turn, is a
single pass (linear computation, $O(n)$) that replaces the matched scripts or symbols.
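A single-pass token extraction of this kind can be sketched with one combined regular expression that is scanned over the request from left to right. The token table below is hypothetical and much smaller than the 40 to 50 token alphabet mentioned above; the token names and patterns are assumptions for illustration, not the extraction rules of the proposed system.

```python
import re

# Hypothetical token table: (token name, regular expression).
TOKEN_PATTERNS = [
    ("SCRIPT_TAG", r"</?script[^>]*>"),
    ("SQL_KEYWORD", r"\b(?:select|union|insert|drop)\b"),
    ("PATH_UP", r"\.\./"),
    ("QUOTE", r"['\"]"),
    ("COMMENT", r"--"),
]

# One alternation of named groups, so a single scan finds every token.
_SCANNER = re.compile(
    "|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_PATTERNS),
    re.IGNORECASE,
)


def extract_tokens(request):
    """Scan `request` once and return the names of the matched tokens."""
    return [match.lastgroup for match in _SCANNER.finditer(request)]


print(extract_tokens("/index.php?id=1' UNION SELECT name FROM users--"))
print(extract_tokens("/a/../../etc/passwd"))
```

Characters that match no pattern are simply skipped, so the output sequence contains only the token names; a sequence in this form could then be passed to the CRF for labeling.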

4.2. Deployment analysis. We discuss the deployment conditions of the proposed
approach from two viewpoints: the quality of the ground truth used for learning, and the
detection location used for data collection. The quality of the ground truth is critical
to the quality of learning. In this work, we used both the PKDD 2007 benchmark and one
real world dataset for analysis. The results show that, on both the public benchmark and
the real world dataset, the proposed method tolerates differences between environments
and achieves better results than the baseline approaches. Regarding the detection
location, the proposed approach inspects only HTTP request/response messages, so the data
to be inspected are easy to collect.

5. Conclusion. In this paper, we proposed a detection mechanism for web mimicry
attacks that combines the advantages of novel web script token extraction and an
insertion-tolerant CRF mechanism. In this method, incoming HTTP requests are segmented
into a sequence of tokens, and CRFs are used to model the token correlation, especially
to identify the insertion tricks of mimicry attacks. The proposed method requires only
HTTP request information and can be easily plugged into web intrusion detection systems
to protect web applications. To the best of our knowledge, this is the first anomaly
detection system designed for insertion-based web mimicry attacks.

We tested our approach on the PKDD 2007 dataset and a real world dataset and found
that it achieves both high precision and high recall in detecting web mimicry attacks
with different insertion ratios. This method also significantly improves on current web
attack detection mechanisms when a sequence of web scripts is used. The results of the
evaluation using both public data and real world data indicated stable performance.
Therefore, ideally, the system will not require any pre-configuration, although the level
of sensitivity to anomalous data has to be automatically adjusted according to the
network environment (e.g., diversity of web scripts). Our proposed approach is also
suitable for modeling web traffic and is capable of identifying both web mimicry attacks
and general web attacks.

[Figure 10 plots, XSS Attack Detection, panels (a) Precision (vertical axis 0.29 to 1) and (b) Recall (vertical axis 0.93 to 1); series Ours, HMM and TFIDF-NB.]

Figure 10. Precision and recall rates for identifying XSS attacks. The
proposed approach could identify all the XSS attacks in the Chunghwa
Telecom dataset because the approach can model the structure of normal
data and malicious attacks to distinguish XSS attacks.

REFERENCES

[1] C. C. Chang and L.-W. Sung, A computer maintenance expert system based on web services, ICIC

Express Letters, vol.3, no.4(B), pp.1209-1214, 2009.

[2] CRF++: Yet Another CRF Toolkit, 2006.


[3] P. Domingos and M. J. Pazzani, On the optimality of the simple bayesian classifier under zero-one

loss, Machine Learning, vol.29, pp.103-130, 1997.

[4] ECML/PKDD 2007’s Analyzing Web Traffic Challenge, 2007.

[5] R. Fielding, J. Gettys, J. C. Mogul, H. Frystyk, L. Masinter, P. Leach and T. Berners-Lee, Hypertext
Transfer Protocol, HTTP/1.1, 1998.

[6] G. D. Forney, The Viterbi algorithm, Proc. of the IEEE, vol.61, pp.268-278, 1973.

[7] G. T. Raju and L. M. Patnaik, Knowledge discovery from web usage data: Extraction and applications of sequential and clustering patterns – A survey, International Journal of Innovative

Computing, Information and Control, vol.4, no.2, pp.381-390, 2008.

[8] D. Gao, M. K. Reiter and D. X. Song, Behavioral distance measurement using hidden Markov models,

Proc. of the 9th International Symposium on Recent Advances in Intrusion Detection, pp.19-40, 2006.

[9] I. H. Witten and E. Frank, Data Mining: Practical Machine Learning Tools and Techniques, 2nd

Edition, Morgan Kaufmann, 2005.

[10] K. S. Jones, A statistical interpretation of term specificity and its application in retrieval, Document

Retrieval Systems, pp.132-142, 1988.

[11] K. L. Ingham and H. Inoue, Comparing anomaly detection techniques for http, Proc. of the 10th

International Symposium on Recent Advances in Intrusion Detection, pp.42-62, 2007.

[12] K. L. Ingham, A. Somayaji, J. Burge and S. Forrest, Learning DFA representations of HTTP for

protecting web applications, The International Journal of Computer and Telecommunications Networking, vol.51, pp.1239-1255, 2007.

[13] M. Kiani, A. Clark and G. Mohay, Evaluation of anomaly based character distribution models in

the detection of sql injection attacks, Reliability and Security, ARES, pp.47-55, 2008.

[14] R. Kohavi and F. Provost, Glossary of terms, Machine Learning, vol.30, 1998.

[15] C. Kruegel, G. Vigna and W. Robertson, A multi-model approach to the detection of web-based
attacks, The International Journal of Computer and Telecommunications Networking, vol.48, pp.717-738, 2005.

[16] J. Lafferty, A. McCallum and F. Pereira, Conditional random fields: Probabilistic models for segmenting and labeling sequence data, Proc. of the 18th International Conference on Machine Learning,

pp.282-289, 2001.

[17] L. R. Rabiner, A tutorial on hidden Markov models and selected applications in speech recognition,

Proc. of the IEEE, vol.77, pp.257-286, 1989.

[18] OWASP, Owasp Cal9000 Project.

[19] RSnake. Xss (Cross Site Scripting) Cheat Sheet Esp: For Filter Evasion.

[20] Y. Song, S. Stolfo and A. Keromytis, Spectrogram: A mixture-of-markov-chains model for anomaly

detection in web traffic, The 16th Annual Network and Distributed System, 2009.

[21] D. Wagner and P. Soto, Mimicry attacks on host-based intrusion detection systems, Proc. of the 9th

ACM Conference on Computer and Communications Security, pp.255-264, 2002.

[22] K. Wang, J. J. Parekh and S. J. Stolfo, Anagram: A content anomaly detector resistant to mimicry
attack, Proc. of the 9th International Symposium on Recent Advances in Intrusion Detection, pp.226-248, 2006.

[23] W.-J. Shyr, K.-C. Yao, D.-F. Chen and C.-Y. Lu, Web-based remote monitoring and control system
for mechatronics module, ICIC Express Letters, vol.3, no.3(A), pp.367-372, 2009.