KM Lee, EECS, SNU - Computer Vision Lab.



Introduction to Computer Vision

Object Categorization I

(Bag-of-Words Model)

Prof. Kyoung Mu Lee

Dept. of EECS, Seoul National University

Most of the lecture slides are from B. Leibe

Topics of This Lecture

• Indexing with Local Features
  - Inverted file index
  - Visual Words
  - Visual Vocabulary construction
  - Use for content based image/video retrieval

• Object Categorization
  - More exact task definition
  - Basic level categories
  - Category representations

• Bag-of-Words Representations
  - Representation
  - Learning
  - Use for image classification

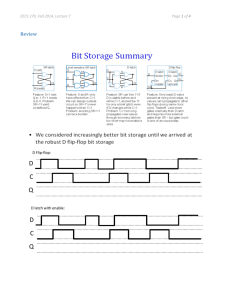

Indexing Local Features

• Each patch / region has a descriptor, which is a point in some high-dimensional feature space (e.g., SIFT)

Figure credit: A. Zisserman

• When we see close points in feature space, we have similar descriptors, which indicates similar local content.

• This is of interest not only for 3D reconstruction, but also for retrieving images of similar objects.

Figure credit: A. Zisserman

• With potentially thousands of features per image, and hundreds to millions of images to search, how can we efficiently find those that are relevant to a new image?

• Low-dimensional descriptors (e.g. through PCA):
  - Can use standard efficient data structures for nearest neighbor search

• High-dimensional descriptors:
  - Approximate nearest neighbor search methods are more practical

• Inverted file indexing schemes

Slide credit: Kristen Grauman

Indexing Local Features: Inverted File Index

• For text documents, an efficient way to find all pages on which a word occurs is to use an index…

• We want to find all images in which a feature occurs.

• To use this idea, we’ll need to map our features to “visual words”.

K. Grauman, B. Leibe

Text Retrieval vs. Image Search

• What makes the problems similar, and what makes them different?

Slide credit: Kristen Grauman

Visual Words: Main Idea

• Extract some local features from a number of images …

e.g., SIFT descriptor space: each point is 128-dimensional

Each point is a local descriptor, e.g. a SIFT vector.

Slide credit: David Nister

Indexing with Visual Words

Map high-dimensional descriptors to tokens/words by quantizing the feature space

• Quantize via clustering, let cluster centers be the prototype “words”

[Figure: descriptor space]

Slide credit: Kristen Grauman
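As a rough illustration of quantizing via clustering, the sketch below runs plain k-means on a handful of toy 2-D points standing in for descriptors. The function names, the toy data, the fixed iteration count and the random initialization are all assumptions made for this example; real descriptors such as SIFT would be 128-dimensional and far more numerous.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Component-wise mean of a non-empty list of tuples."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(descriptors, k, iterations=20, seed=0):
    """Plain k-means; the returned centers act as the prototype 'words'."""
    rng = random.Random(seed)
    centers = rng.sample(descriptors, k)  # assumption: random initial centers
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for d in descriptors:
            nearest = min(range(k), key=lambda j: squared_distance(d, centers[j]))
            clusters[nearest].append(d)
        # Keep the old center when a cluster ends up empty.
        centers = [mean(c) if c else centers[j] for j, c in enumerate(clusters)]
    return centers


# Toy "descriptors": two well-separated groups of 2-D points.
toy_descriptors = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2),
                   (5.0, 5.1), (5.2, 4.9), (4.9, 5.0)]
vocabulary = kmeans(toy_descriptors, k=2)
```

On this toy data the two centers settle on the means of the two groups, which is what one would hope a small visual vocabulary looks like.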

• Determine which word to assign to each new image region by finding the closest cluster center.

Slide credit: Kristen Grauman
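Word assignment by closest cluster center can be sketched in a few lines. The helper name `assign_word` and the toy vocabulary are hypothetical; a brute-force search over all centers is used here only because the vocabulary is tiny.

```python
def assign_word(descriptor, vocabulary):
    """Return the index of the cluster center closest to the descriptor."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(range(len(vocabulary)),
               key=lambda j: squared_distance(descriptor, vocabulary[j]))


# Toy vocabulary of three 2-D "words" and three new descriptors.
toy_vocabulary = [(0.0, 0.0), (5.0, 5.0), (0.0, 5.0)]
new_descriptors = [(0.3, -0.1), (4.6, 5.2), (0.4, 4.1)]
word_ids = [assign_word(d, toy_vocabulary) for d in new_descriptors]
# word_ids == [0, 1, 2]
```

The cost of this linear scan grows with the vocabulary size, which is one motivation for the hierarchical vocabularies discussed later in the lecture.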

Visual Words

• Example: each group of patches belongs to the same visual word

Slide credit: Kristen Grauman

Figure from Sivic & Zisserman, ICCV 2003

Visual Words: Texture Representation

• First explored for texture and material representations

• Texton = cluster center of filter responses over collection of images

• Describe textures and materials based on distribution of prototypical texture elements.

Leung & Malik 1999; Varma & Zisserman, 2002; Lazebnik, Schmid & Ponce, 2003

Slide credit: Kristen Grauman

• Texture is characterized by the repetition of basic elements or textons

• For stochastic textures, it is the identity of the textons, not their spatial arrangement, that matters

Julesz, 1981; Cula & Dana, 2001; Leung & Malik 2001; Mori, Belongie & Malik, 2001; Schmid 2001; Varma & Zisserman, 2002, 2003; Lazebnik, Schmid & Ponce, 2003

Slide credit: Svetlana Lazebnik

Visual Words

• More recently used for describing scenes and objects for the sake of indexing or classification.

Sivic & Zisserman 2003; Csurka, Bray, Dance, & Fan 2004; many others.

Slide credit: Kristen Grauman

Inverted File for Images of Visual Words

[Figure: inverted file table with columns “Word number” and “List of image numbers”]

When will this give us a significant gain in efficiency?

Slide credit: Kristen Grauman
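A minimal sketch of such an inverted file, assuming each database image has already been reduced to a list of visual word numbers. The image names, word numbers and function names are made up for illustration.

```python
from collections import Counter, defaultdict


def build_inverted_index(image_words):
    """Map each word number to the set of images in which it occurs."""
    index = defaultdict(set)
    for image_id, words in image_words.items():
        for word in words:
            index[word].add(image_id)
    return index


def candidate_images(index, query_words):
    """Count, per image, how many distinct query words it shares."""
    votes = Counter()
    for word in set(query_words):
        for image_id in index.get(word, ()):
            votes[image_id] += 1
    return votes.most_common()


# Hypothetical database: image id -> visual words found in that image.
database = {"img1": [7, 7, 12, 40], "img2": [3, 12, 91], "img3": [5, 8]}
index = build_inverted_index(database)
ranked = candidate_images(index, [12, 40, 99])
# ranked == [("img1", 2), ("img2", 1)]; img3 is never touched.
```

Only images that share at least one word with the query are visited, so the saving is presumably largest when each word occurs in a small fraction of the database.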

Visual Vocabulary Formation

Issues:

• Sampling strategy: where to extract features?

• Clustering / quantization algorithm

• Unsupervised vs. supervised

• What corpus provides features (universal vocabulary?)

• Vocabulary size, number of words

Slide credit: Kristen Grauman

Sampling Strategies

[Figure panels: sparse, at interest points; dense, uniformly; randomly; multiple interest operators]

• To find specific, textured objects, sparse sampling from interest points is often more reliable.

• Multiple complementary interest operators offer more image coverage.

• For object categorization, dense sampling offers better coverage.

[See Nowak, Jurie & Triggs, ECCV 2006]

Slide credit: Kristen Grauman

Clustering / Quantization Methods

• K-means (typical choice), agglomerative clustering, mean-shift,…

• Hierarchical clustering: allows faster insertion / word assignment while still allowing large vocabularies
  - Vocabulary tree [Nister & Stewenius, CVPR 2006]

Slide credit: Kristen Grauman
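To make the "faster word assignment" point concrete, here is a small sketch of a lookup in a hand-built tree of cluster centers. The `Node` class, the toy centers and the comparison counter are assumptions for illustration; in a real vocabulary tree the centers would come from hierarchical k-means rather than being written by hand.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


class Node:
    """Tree node: inner nodes have children, leaves carry a word id."""

    def __init__(self, center, children=None, word_id=None):
        self.center = center
        self.children = children or []
        self.word_id = word_id


def lookup(root, descriptor):
    """Descend to a leaf, picking the closest child center at each level."""
    node, comparisons = root, 0
    while node.children:
        comparisons += len(node.children)
        node = min(node.children,
                   key=lambda child: squared_distance(descriptor, child.center))
    return node.word_id, comparisons


# Toy tree: branching factor 2, depth 2, hence 4 leaf words.
leaves = [Node((0, 0), word_id=0), Node((0, 2), word_id=1),
          Node((10, 0), word_id=2), Node((10, 2), word_id=3)]
root = Node(None, children=[Node((0, 1), children=leaves[:2]),
                            Node((10, 1), children=leaves[2:])])
word, n_comparisons = lookup(root, (9.0, 1.8))
# word == 3 after 4 distance computations (2 per level).
```

With branching factor b and depth L the lookup needs about b·L distance computations, while a flat vocabulary of the same size (b^L words) would need b^L. The price is that an early wrong branch cannot be undone further down the tree.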

Example: Recognition with Vocabulary Tree

• Tree construction

• Training: filling the tree

• Recognition (with RANSAC verification)

Slide credit: David Nister

[Nister & Stewenius, CVPR’06]

Quiz Questions

• What is the computational advantage of the hierarchical representation for bag of words, vs. a flat vocabulary?

• What dangers does such a representation carry?

Vocabulary Tree: Performance

• Evaluated on large databases
  - Indexing with up to 1M images

• Online recognition for database of 50,000 CD covers
  - Retrieval in ~1s

• Experimental finding that large vocabularies can be beneficial for recognition

[Nister & Stewenius, CVPR’06]

Vocabulary Size

• Larger vocabularies can be advantageous…

• But what happens when the vocabulary gets too large?
  - Efficiency?
  - Robustness?

[Figure label: branch factor]

tf-idf Weighting

• Term frequency – inverse document frequency

• Describe frame by frequency of each word within it, downweight words that appear often in the database

• (Standard weighting for text retrieval)

$$t_i = \frac{n_{id}}{n_d} \log \frac{N}{n_i}$$

- $n_{id}$: number of occurrences of word $i$ in document $d$
- $n_d$: number of words in document $d$
- $N$: total number of documents in database
- $n_i$: number of occurrences of word $i$ in whole database

Slide credit: Kristen Grauman
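The weighting can be sketched as follows, assuming the usual Video Google form t_i = (n_id / n_d) · log(N / n_i) with the four quantities annotated on the slide. The function name and the toy documents are hypothetical; note that n_i is taken here as the number of occurrences in the whole database, as the slide states, whereas many text-retrieval variants count the number of documents containing the word instead.

```python
import math
from collections import Counter


def tfidf_vectors(documents, vocabulary_size):
    """One tf-idf vector per document; documents are lists of word ids."""
    n_documents = len(documents)                                  # N
    database_counts = Counter(w for doc in documents for w in doc)  # n_i
    vectors = []
    for doc in documents:
        counts = Counter(doc)                                     # n_id
        n_d = len(doc)                                            # words in d
        vec = [0.0] * vocabulary_size
        for word, n_id in counts.items():
            idf = math.log(n_documents / database_counts[word])
            vec[word] = (n_id / n_d) * idf
        vectors.append(vec)
    return vectors


# Three toy "frames" over a vocabulary of four visual words.
toy_documents = [[0, 0, 1], [1, 2], [1, 3, 3, 3]]
vectors = tfidf_vectors(toy_documents, vocabulary_size=4)
```

Word 1 occurs three times in a database of three documents, so its weight is log(3/3) = 0 everywhere: it carries no discriminative information in this toy setting.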

Application for Content Based Image Retrieval

• What if the query of interest is a portion of a frame?

Slide credit: Andrew Zisserman

[Sivic & Zisserman, ICCV’03]

Video Google System

[Figure: query region and retrieved frames]

1. Collect all words within query region
2. Inverted file index to find relevant frames
3. Compare word counts
4. Spatial verification

Sivic & Zisserman, ICCV 2003

• Demo online at: http://www.robots.ox.ac.uk/~vgg/research/vgoogle/index.html

Slide credit: Kristen Grauman

Example Results

[Figure: query and retrieved shots]

Slide credit: Andrew Zisserman

Collecting Words Within a Query Region

• Example: Friends

Query region: pull out only the SIFT descriptors whose positions are within the polygon

Slide credit: Kristen Grauman
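Selecting the features that fall inside a query polygon could look like the sketch below, which uses a standard ray-casting point-in-polygon test. The feature layout (a position paired with a word number), the polygon and the function names are assumptions for this example, not details of the Video Google implementation.

```python
def point_in_polygon(point, polygon):
    """Ray casting: count edge crossings of a horizontal ray from the point."""
    x, y = point
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the ray's height
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def words_in_region(features, polygon):
    """Keep the word ids of features whose position lies in the polygon."""
    return [word for position, word in features
            if point_in_polygon(position, polygon)]


# Hypothetical features: ((x, y) position, visual word number).
query_polygon = [(0, 0), (4, 0), (4, 3), (0, 3)]
features = [((1, 1), 17), ((5, 1), 42), ((3.5, 2.5), 17), ((2, 4), 8)]
query_words = words_in_region(features, query_polygon)
# query_words == [17, 17]
```

The resulting word list is what would then be passed to the inverted file lookup in step 2 of the pipeline.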

More Results

[Figures: further queries and their retrieved shots]

Slide credit: Kristen Grauman

Revisiting Object Categorization

• Find this particular object

• Recognize ANY car

• Recognize ANY cow

Object Categorization

• Task Description
  - “Given a small number of training images of a category, recognize a-priori unknown instances of that category and assign the correct category label.”

• Which categories are feasible visually?
  - Extensively studied in Cognitive Psychology, e.g. [Brown’58]

[Figure: naming hierarchy: “Fido” → German shepherd → dog → animal → living being]

Visual Object Categories

• Basic Level Categories in human categorization [Rosch 76, Lakoff 87]
  - The highest level at which category members have similar perceived shape
  - The highest level at which a single mental image reflects the entire category
  - The level at which human subjects are usually fastest at identifying category members
  - The first level named and understood by children
  - The highest level at which a person uses similar motor actions for interaction with category members

• Basic-level categories in humans seem to be defined predominantly visually.

• There is evidence that humans (usually) start with basic-level categorization before doing identification.

⇒ Basic-level categorization is easier and faster for humans than object identification!

⇒ Most promising starting point for visual classification

[Figure: category hierarchy. Abstract levels: animal, quadruped, …; basic level: dog, cat, cow, …; then German shepherd, Doberman, …; individual level: “Fido”, …]

How many object categories are there?

Source: Fei-Fei Li, Rob Fergus, Antonio Torralba.

Biederman 1987

Other Types of Categories

• Functional Categories
  - e.g. chairs = “something you can sit on”

• Ad-hoc categories
  - e.g. “something you can find in an office environment”

Challenges: Robustness

• Illumination
• Occlusions
• Object pose
• Intra-class appearance
• Clutter
• Viewpoint

Slide credit: Kristen Grauman


Challenges: Context and Human Experience

Context cues

Slide credit: Kristen Grauman


Challenges: Learning with Minimal Supervision

[Figure: examples ranging from less to more supervision]

Slide credit: Kristen Grauman

Learning from One Example

Slide from Pietro Perona, 2004 Object Recognition workshop


How to Arrive at a Category Representation?

• If a local image region is a visual word, how can we summarize an image (the document)?

• How can we build a representation that is suitable for representing an entire category?
  - Robust to intra-category variation
  - Robust to deformation, articulation
  - …
  - But still discriminative

Slide credit: Kristen Grauman


Analogy to Documents

Of all the sensory impressions proceeding to the brain, the visual experiences are the dominant ones. Our perception of the world around us is based essentially on the messages that reach the brain from our eyes. For a long time it was thought that the retinal image was transmitted point by point to visual centers in the brain; the cerebral cortex was a movie screen, so to speak, upon which the image in the eye was projected. Through the discoveries of Hubel and Wiesel we now know that behind the origin of the visual perception in the brain there is a considerably more complicated course of events. By following the visual impulses along their path to the various cell layers of the optical cortex, Hubel and Wiesel have been able to demonstrate that the message about the image falling on the retina undergoes a step-wise analysis in a system of nerve cells stored in columns. In this system each cell has its specific function and is responsible for a specific detail in the pattern of the retinal image.

Words: sensory, brain, visual, perception, retinal, cerebral cortex, eye, cell, optical nerve, image, Hubel, Wiesel

China is forecasting a trade surplus of $90bn (£51bn) to $100bn this year, a threefold increase on 2004's $32bn. The Commerce Ministry said the surplus would be created by a predicted 30% jump in exports to $750bn, compared with a 18% rise in imports to $660bn. The figures are likely to further annoy the US, which has long argued that China's exports are unfairly helped by a deliberately undervalued yuan. Beijing agrees the surplus is too high, but says the yuan is only one factor. Bank of China governor Zhou Xiaochuan said the country also needed to do more to boost domestic demand so more goods stayed within the country. China increased the value of the yuan against the dollar by 2.1% in July and permitted it to trade within a narrow band, but the US wants the yuan to be allowed to trade freely. However, Beijing has made it clear that it will take its time and tread carefully before allowing the yuan to rise further in value.

Words: China, trade, surplus, commerce, exports, imports, US, yuan, bank, domestic, foreign, increase, trade, value

Slide credit: Li Fei-Fei

[Figure: an object and its bag of ‘words’]

Source: ICCV 2005 short course, Li Fei-Fei

Bags of Visual Words

• Summarize entire image based on its distribution (histogram) of word occurrences.

• Analogous to bag of words representation commonly used for documents.

Slide credit: Kristen Grauman


Bag-of-features steps

1. Extract features
2. Learn “visual vocabulary”
3. Quantize features using visual vocabulary
4. Represent images by frequencies of “visual words”
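Steps 3 and 4 of the bag-of-features pipeline can be sketched as below, assuming features have already been extracted (step 1) and a vocabulary of cluster centers has been learned (step 2). The function names and the toy 2-D data are hypothetical.

```python
from collections import Counter


def nearest_word(descriptor, vocabulary):
    """Step 3: quantize a descriptor to the index of its closest center."""
    return min(range(len(vocabulary)),
               key=lambda j: sum((x - c) ** 2
                                 for x, c in zip(descriptor, vocabulary[j])))


def bow_histogram(descriptors, vocabulary):
    """Step 4: relative frequency of each visual word in one image."""
    if not descriptors:
        return [0.0] * len(vocabulary)
    counts = Counter(nearest_word(d, vocabulary) for d in descriptors)
    return [counts[j] / len(descriptors) for j in range(len(vocabulary))]


# Toy vocabulary (3 words) and the descriptors of one image.
toy_vocabulary = [(0.0, 0.0), (5.0, 5.0), (0.0, 5.0)]
image_descriptors = [(0.1, 0.2), (0.3, 0.1), (4.8, 5.1), (0.2, 4.9)]
histogram = bow_histogram(image_descriptors, toy_vocabulary)
# histogram == [0.5, 0.25, 0.25]
```

Whatever the number of descriptors in an image, the histogram has one entry per visual word, so every image ends up as a vector of the same length.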

Similarly, Bags-of-Textons for Texture Representation

[Figure: histogram over a universal texton dictionary]

Julesz, 1981; Cula & Dana, 2001; Leung & Malik 2001; Mori, Belongie & Malik, 2001; Schmid 2001; Varma & Zisserman, 2002, 2003; Lazebnik, Schmid & Ponce, 2003

Slide credit: Svetlana Lazebnik


Comparing Bags of Words

• We build up histograms of word activations, so any histogram comparison measure can be used here.

• E.g. we can rank frames by normalized scalar product between their (possibly weighted) occurrence counts
  - Nearest neighbor search for similar images.

$$\mathrm{sim}(\vec{d}_j, \vec{q}) = \frac{\vec{d}_j \cdot \vec{q}}{\|\vec{d}_j\| \, \|\vec{q}\|}, \qquad \text{e.g. } \vec{d}_j = [1\ 8\ 1\ 4]^\top, \quad \vec{q} = [5\ 1\ 1\ 0]^\top$$

Slide credit: Kristen Grauman
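The normalized scalar product for the two example count vectors from the slide, [1 8 1 4] and [5 1 1 0], can be computed as in this short sketch. The function name is an assumption; returning 0 for an all-zero vector is a convention chosen here, not something the slide specifies.

```python
import math


def normalized_scalar_product(a, b):
    """Cosine of the angle between two occurrence-count vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention for empty histograms (assumption)
    return dot / (norm_a * norm_b)


d_j = [1, 8, 1, 4]   # database frame
q = [5, 1, 1, 0]     # query
score = normalized_scalar_product(d_j, q)
# dot product 14, norms sqrt(82) and sqrt(27), so score is roughly 0.2975
```

Ranking all database frames by this score and returning the top entries is then a nearest neighbor search for similar images.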

Other Functions for comparing histograms

• L1 distance:

$$D(h_1, h_2) = \sum_{i=1}^{N} |h_1(i) - h_2(i)|$$

• χ² distance:

$$D(h_1, h_2) = \sum_{i=1}^{N} \frac{(h_1(i) - h_2(i))^2}{h_1(i) + h_2(i)}$$

• Quadratic distance (cross-bin distance):

$$D(h_1, h_2) = \sum_{i,j} A_{ij} \, (h_1(i) - h_2(j))^2$$

• Histogram intersection (similarity function):

$$I(h_1, h_2) = \sum_{i=1}^{N} \min(h_1(i), h_2(i))$$
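The four comparison functions translate almost directly into code. The sketch below reuses the count vectors [1 8 1 4] and [5 1 1 0] as toy histograms; skipping bins where both histograms are zero in the χ² distance, and the identity matrix used for A, are assumptions made to keep the example self-contained.

```python
def l1_distance(h1, h2):
    return sum(abs(a - b) for a, b in zip(h1, h2))


def chi2_distance(h1, h2):
    # Bins that are empty in both histograms are skipped to avoid 0/0.
    return sum((a - b) ** 2 / (a + b) for a, b in zip(h1, h2) if a + b > 0)


def quadratic_distance(h1, h2, A):
    """Cross-bin distance; A[i][j] weights the pair of bins (i, j)."""
    return sum(A[i][j] * (h1[i] - h2[j]) ** 2
               for i in range(len(h1)) for j in range(len(h2)))


def histogram_intersection(h1, h2):
    """Similarity (larger means more alike), not a distance."""
    return sum(min(a, b) for a, b in zip(h1, h2))


h1 = [1, 8, 1, 4]
h2 = [5, 1, 1, 0]
identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]

d_l1 = l1_distance(h1, h2)                       # 4 + 7 + 0 + 4 = 15
d_chi2 = chi2_distance(h1, h2)                   # 16/6 + 49/9 + 0 + 16/4
d_quad = quadratic_distance(h1, h2, identity)    # 16 + 49 + 0 + 16 = 81
s_int = histogram_intersection(h1, h2)           # 1 + 1 + 1 + 0 = 3
```

With the identity matrix the quadratic distance reduces to a plain sum of squared bin differences; a non-diagonal A would let similar visual words partially match each other.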

Learning/Recognition with BoW Histograms

• Bag of words representation makes it possible to describe the unordered point set with a single vector (of fixed dimension across image examples)

• Provides easy way to use distribution of feature types with various learning algorithms requiring vector input.

Slide credit: Kristen Grauman


BoW for Object Categorization

{face, flowers, building}

• Works pretty well for image-level classification

Csurka et al. (2004), Willamowski et al. (2005), Grauman & Darrell (2005), Sivic et al. (2003, 2005)

Slide credit: Svetlana Lazebnik

Image Classification

• Given the bag-of-features representations of images from different classes, how do we learn a model for distinguishing them?

Slide credit: Svetlana Lazebnik

Discriminative Methods

• Learn a decision rule (classifier) assigning bag-of-features representations of images to different classes

[Figure: decision boundary separating zebra from non-zebra]

Slide credit: Svetlana Lazebnik

Classification

• Assign input vector to one of two or more classes

• Any decision rule divides input space into decision regions separated by decision boundaries

Slide credit: Svetlana Lazebnik

Nearest Neighbor Classifier

• Assign label of nearest training data point to each test data point.

[Figure: Voronoi partitioning of feature space for 2-category 2-D and 3-D data]

Slide credit: David Lowe

Recap: Classifier Construction Choices…

• Nearest neighbor (10^6 examples): Shakhnarovich, Viola, Darrell 2003; Berg, Berg, Malik 2005, …

• Neural networks: LeCun, Bottou, Bengio, Haffner 1998; Rowley, Baluja, Kanade 1998, …

• Support Vector Machines: Guyon, Vapnik; Heisele, Serre, Poggio, 2001, …

• Boosting: Viola, Jones 2001; Torralba et al. 2004; Opelt et al. 2006, …

• Conditional Random Fields: McCallum, Freitag, Pereira 2000; Kumar, Hebert 2003, …

Slide credit: Kristen Grauman

K-Nearest Neighbors

• For a new point, find the k closest points from training data

• Labels of the k points “vote” to classify

• Works well provided there is lots of data and the distance function is good

Slide credit: David Lowe
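A k-nearest-neighbor vote over bag-of-words histograms might be sketched as follows. The L1 distance, the toy histograms and the labels are assumptions chosen for illustration; any of the histogram comparison measures from earlier in the lecture could be plugged in instead, and k = 1 gives the plain nearest neighbor classifier.

```python
from collections import Counter


def l1_distance(h1, h2):
    return sum(abs(a - b) for a, b in zip(h1, h2))


def knn_classify(query, training_set, k=3, distance=l1_distance):
    """training_set: list of (histogram, label) pairs; majority vote of k."""
    neighbors = sorted(training_set,
                       key=lambda item: distance(query, item[0]))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Toy training histograms over a 3-word vocabulary.
training_set = [
    ([0.8, 0.1, 0.1], "zebra"),
    ([0.7, 0.2, 0.1], "zebra"),
    ([0.6, 0.3, 0.1], "zebra"),
    ([0.1, 0.1, 0.8], "non-zebra"),
    ([0.2, 0.1, 0.7], "non-zebra"),
]
label = knn_classify([0.75, 0.15, 0.10], training_set, k=3)
# label == "zebra"
```

The sort makes every query linear in the size of the training set, which is why large collections usually pair this classifier with an index or an approximate search structure.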

Recap: Support Vector Machines (SVMs)

• Discriminative classifier based on optimal separating hyperplane (i.e. line for 2D case)

• Maximize the margin between the positive and negative training examples

Slide credit: Kristen Grauman


Generative Methods

• Model the probability of a bag of features given a class

Slide credit: Svetlana Lazebnik

The Naïve Bayes Model

• Assume that each feature is conditionally independent given the class

$$p(w_1, \ldots, w_N \mid c) = \prod_{i=1}^{N} p(w_i \mid c)$$

Slide credit: Li Fei-Fei

$$c^* = \arg\max_c \; p(c) \prod_{i=1}^{N} p(w_i \mid c)$$

- $c^*$: MAP decision
- $p(c)$: prior probability of the object classes
- $p(w_i \mid c)$: likelihood of the i-th visual word given the class, estimated by empirical frequencies of visual words in images from a given class

Slide credit: Li Fei-Fei
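A small sketch of the MAP decision rule with likelihoods estimated from empirical word frequencies. The add-one (Laplace) smoothing term `alpha`, the use of log probabilities, and the toy classes are assumptions added here so that unseen words do not produce zero probabilities; they are not stated on the slide.

```python
import math
from collections import Counter


def train_naive_bayes(images_by_class, vocabulary_size, alpha=1.0):
    """Return {class: (log prior, per-word log likelihoods)}."""
    total_images = sum(len(images) for images in images_by_class.values())
    model = {}
    for c, images in images_by_class.items():
        counts = Counter(word for image in images for word in image)
        total_words = sum(counts.values())
        log_prior = math.log(len(images) / total_images)
        # Empirical word frequencies with add-alpha smoothing (assumption).
        log_likelihood = [
            math.log((counts[w] + alpha) / (total_words + alpha * vocabulary_size))
            for w in range(vocabulary_size)
        ]
        model[c] = (log_prior, log_likelihood)
    return model


def classify(model, words):
    """MAP decision: argmax_c of log p(c) + sum_i log p(w_i | c)."""
    def log_posterior(c):
        log_prior, log_likelihood = model[c]
        return log_prior + sum(log_likelihood[w] for w in words)
    return max(model, key=log_posterior)


# Toy training data: each image is a list of visual word ids (vocabulary of 4).
training = {"face": [[0, 0, 1], [0, 1, 0]],
            "building": [[2, 2, 3], [3, 2, 2, 1]]}
model = train_naive_bayes(training, vocabulary_size=4)
prediction = classify(model, [0, 0, 1])
# prediction == "face"
```

Working in log space turns the product over words into a sum, which avoids numerical underflow when an image contains hundreds of features.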

Csurka et al. 2004

BoW for Object Categorization

[Figure: results on the Caltech6 dataset for two bag-of-features methods and a parts-and-shape model]

• Good performance for pure classification (object present/absent)
  - Better than more elaborate part-based models with spatial constraints…
  - What could be possible reasons why?

Slide credit: Svetlana Lazebnik

BoW Representation: Spatial Information

• A bag of words is an orderless representation: it throws out spatial relationships between features

• Middle ground:
  - Visual “phrases”: frequently co-occurring words
  - Semi-local features: describe configuration, neighborhood
  - Let position be part of each feature
  - Count bags of words only within sub-grids of an image
  - After matching, verify spatial consistency (e.g., look at neighbors: are they the same too?)

Slide credit: Kristen Grauman

Spatial Pyramid Matching

Hierarchy of localized BoW histograms

Image from Svetlana Lazebnik
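A hierarchy of localized histograms can be sketched by counting words inside the cells of increasingly fine grids and concatenating the results. This is a simplified illustration under several assumptions: the function name and feature layout are hypothetical, and the per-level weights and the histogram-intersection kernel used in the published spatial pyramid matching method are left out.

```python
def spatial_pyramid(features, width, height, vocabulary_size, levels=2):
    """Concatenate word counts over 1x1, 2x2, ... grids of the image."""
    pyramid = []
    for level in range(levels):
        cells = 2 ** level  # grid is cells x cells at this level
        histograms = [[0] * vocabulary_size for _ in range(cells * cells)]
        for (x, y), word in features:
            col = min(int(x / width * cells), cells - 1)
            row = min(int(y / height * cells), cells - 1)
            histograms[row * cells + col][word] += 1
        for cell_histogram in histograms:
            pyramid.extend(cell_histogram)
    return pyramid


# Toy image of 100 x 100 pixels, vocabulary of 2 words, 3 features.
features = [((10, 10), 0), ((90, 10), 1), ((90, 90), 1)]
descriptor = spatial_pyramid(features, width=100, height=100,
                             vocabulary_size=2, levels=2)
# Level 0 (whole image): [1, 2]
# Level 1 (2x2 grid):    [1, 0], [0, 1], [0, 0], [0, 1]
```

Level 0 is the ordinary orderless bag of words; the finer levels record roughly where in the image each word occurred, which is the "sub-grid" middle ground mentioned earlier.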

Summary: Indexing features

1. Detect or sample features: list of positions, scales, orientations
2. Describe features: associated list of d-dimensional descriptors
3. Index each one into pool of descriptors from previously seen images, or quantize to form bag of words vector for the image

Slide credit: Kristen Grauman

Summary: Bag-of-Words

• Pros:
  - Flexible to geometry / deformations / viewpoint
  - Compact summary of image content
  - Provides vector representation for sets
  - Empirically good recognition results in practice

• Cons:
  - Basic model ignores geometry: must verify afterwards, or encode via features.
  - Background and foreground mixed when bag covers whole image
  - Interest points or sampling: no guarantee to capture object-level parts.
  - Optimal vocabulary formation remains unclear.

Slide credit: Kristen Grauman

Summary

• Local invariant features
  - Distinctive matches possible in spite of significant view change, useful not only to provide matches for multi-view geometry, but also to find objects and scenes.
  - To find correspondences among detected features, measure distance between descriptors, and look for most similar patches.

• Bag of words representation
  - Quantize feature space to make discrete set of visual words
  - Summarize image by distribution of words
  - Index individual words
  - Inverted index: pre-compute index to enable faster search at query time

Slide credit: Kristen Grauman

References and Further Reading

• Bag-of-Words approaches are a relatively new development. I will look for a good reference paper and provide it on the webpage…

• Details about the Video Google system can be found in
  - J. Sivic, A. Zisserman, Video Google: A Text Retrieval Approach to Object Matching in Videos, ICCV’03, 2003.

• Try the Oxford Video Google demo
  - http://www.robots.ox.ac.uk/~vgg/research/vgoogle/index.html

• Try the available local feature detectors and descriptors
  - http://www.robots.ox.ac.uk/~vgg/research/affine/detectors.html#binaries
