How to increase efficacy and efficiency of feedback for


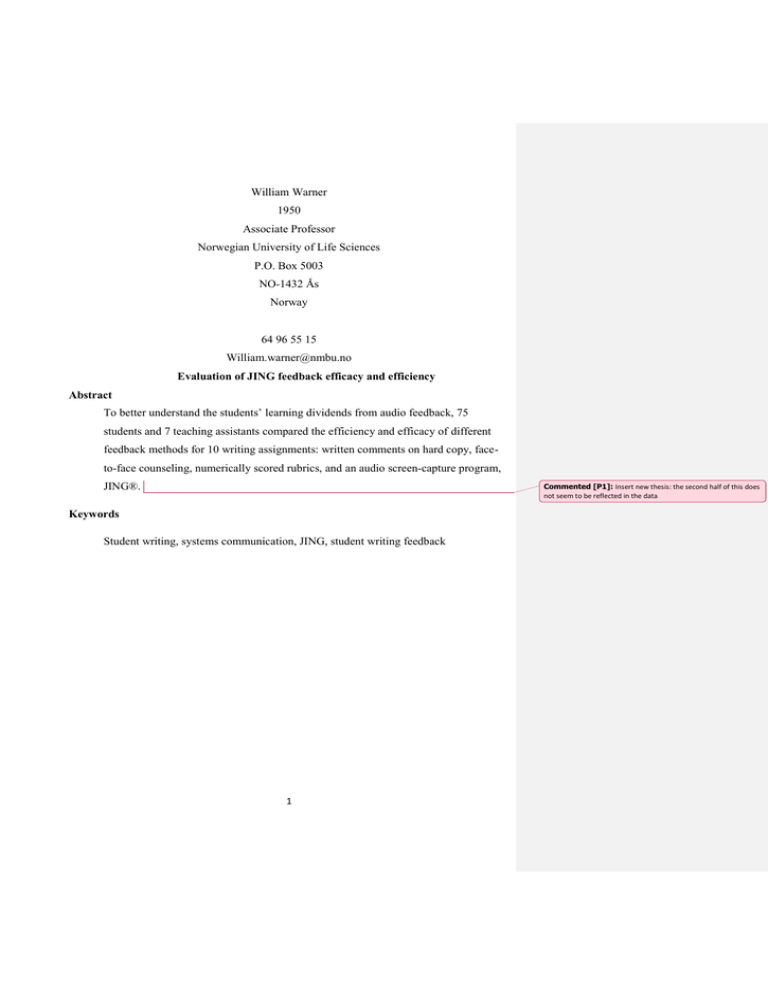

William Warner
1950
Associate Professor
Norwegian University of Life Sciences
P.O. Box 5003
NO-1432 Ås
Norway
64 96 55 15
William.warner@nmbu.no

Evaluation of JING feedback efficacy and efficiency

Abstract

To better understand the students’ learning dividends from audio feedback, 75 students and 7 teaching assistants compared the efficiency and efficacy of different feedback methods for 10 writing assignments: written comments on hard copy, face-to-face counseling, numerically scored rubrics, and an audio screen-capture program, JING®.

Keywords

Student writing, systems communication, JING, student writing feedback

Introduction

The value of video feedback on student writing has been well documented (Mathisen, 2012), in particular for the screen-capture program JING®, which is distributed via Learning Management Systems (LMS) such as Fronter. Lumadue and Fish (2010, cited in Mathisen) assert that video feedback is a paradigm shift with far-reaching consequences. On the one hand, JING appears to increase what Mathisen refers to as the student’s learning dividends; on the other hand, it explicitly increases the teacher’s feedback efficiency. However, to what extent JING contributes to feedback efficiency and efficacy, and under what circumstances, requires further investigation.

Often, the efficacy and efficiency of feedback methods exist in tension. Generally speaking, the most effective feedback appears the least efficient; likewise, the most efficient seems the least effective. For instance, though face-to-face consultation with a student is an effective method of feedback, it can be exceedingly time consuming. Likewise, a brief LMS comment is extremely efficient feedback, but its effect on student writing is minimal (Fig. 1). Furthermore, efficiency and efficacy appear to be valued differently by providers and receivers.
Typically, efficiency is valued primarily by the instructor, because the instructor provides feedback to many students within a limited time frame. Hence, efficiency is associated with the instructor’s process. Efficacy is associated with the student’s product, because the objective is to improve the student’s writing, not to save time. However, these value-associations are assumptions. Students, like instructors, are time conscious; instructors, like students, are frequently quality minded.

The purpose of this study was two-fold: to evaluate the efficiency and efficacy of JING feedback relative to other forms of feedback, and to better understand the students’ learning dividends. The study was based upon perspectives from both those providing and those receiving feedback on academic writing assignments at the Norwegian University of Life Sciences (NMBU). The paper ends by reflecting on what the study suggests about the utility of JING for giving feedback in different types of courses.

Background

Academic writing and English for academic purposes (EAP) are growing concerns at NMBU. The Internationalization Plan (2010-2014) aims to increase the share of foreign students from 10% to 16%. Currently, half of all topics are taught in English, including 14 master programs and a bachelor program taught entirely in English. However, only two academic writing courses are offered to NMBU’s 4,000 students, one for bachelor students and the other for master students. Both courses are pass/fail and are offered during the autumn and spring terms. As expected, enrollment is high (>70 students/term). Each course has weekly assignments, mostly rewriting (300-700 word papers). Thus, feedback must be swift. Comments are returned within three days so the students have time to revise their documents. Teaching Assistants (TAs) at The Writing Centre support the instructor by providing feedback, one-on-one consultation, and scoring rubrics on the three final papers (Fig. 2).
NMBU is also concerned with improving students’ learning experience, or in Mathisen’s words, learning dividends. Proponents of JING claim it represents a paradigm shift, but few studies have provided concrete evidence. Since JING holds promise to enhance the learning experience, teachers should know specifically what dividends they can look forward to.

Method

The study was conducted at NMBU over three academic terms. In the first term (spring 2012), a pilot project evaluated 5 feedback modes with 65 students: (1) one-on-one consultation, (2) Fronter comments, (3) written comments on hard copy, (4) scored rubrics, and (5) audio comments via Word software. Audio feedback was applied to three assignments; JING feedback was not applied to any assignments. At the end of the term, students ranked the efficacy of each feedback method on a course evaluation questionnaire. The efficacy question was a five-point semantic differential (Likert scale) that asked students to rate each feedback method from “very unhelpful” to “very helpful.” The three TAs and the instructor evaluated the efficiency of audio feedback only, to determine its suitability.

The following term, autumn 2012, Word’s audio feature was replaced with JING. The exercises were repeated with 80 students for 3 assignments. Likewise, the five methods were assessed at the end of the term. This time, however, both students and feedback providers (the instructor and five TAs, hereafter referred to as reviewers) assessed efficiency and efficacy. To assure a fair sample size for evaluating feedback, students were required to complete an evaluation questionnaire. Class discussion supplemented the questionnaire to add nuance to the data and to ensure that nothing important was missed that the survey did not address.

In the third term, spring 2013, JING replaced Fronter comments and was applied to 10 assignments for 75 students. To reduce potential bias, the course instructor did not participate in the evaluation.
Seven TAs evaluated the 4 feedback methods: face-to-face TA consultation (ad hoc), scored rubrics (3 assignments), written comments on the final papers (3 assignments), and JING (10 assignments). Questionnaires and discussions with both students and TAs assessed the efficacy and efficiency of the different feedback methods.

Results

Spring, 2012

Fewer than half of the students completed the course evaluation. Nevertheless, the consensus was that written comments on the final three papers and the TAs’ tutor consultations were the most helpful feedback modes (Table 1). Although the students found audio feedback the least helpful method (several claimed it was unhelpful), class discussion revealed that the students considered it an appealing idea, provided the technology was reliable. Several students found Word’s audio function unstable, especially those using Macintosh platforms.

Likewise, the four reviewers found Word’s audio function unstable and time consuming to set up, circa 15 minutes. Although the reviewers did not quantify each feedback operation, the consensus was that they spent 10-30 seconds per audio comment, with about 6 comments per assignment. Because of the technical difficulties, it was premature to judge the efficiency of audio feedback. It appeared, however, more suitable for suggesting than editing.

Autumn, 2012

Eighty students evaluated the five feedback methods. The results were quite different from the pilot project: JING feedback (based on three assignments) was the most effective; written comments and rubrics (on the three final papers) were the least effective (Table 2). Ad hoc face-to-face consultation ranked in the middle. Although Fronter comments (on four assignments) scored high, students clearly preferred JING (Table 3).

In addition, almost all the students felt that JING had made their editing process more efficient. Audio supplemented with color highlighting and Track Changes’ balloon comments reduced the students’ time to rewrite.
An explanation is in order: students claimed they spent less time listening to JING than trying to understand written comments on Fronter, which often appeared less specific and more cryptic (Fig. 3). Indeed, because JING permits instructors to point to the sentence as they refer to it, students spend less time switching between the comments and their paper.

Equally important, though unexpected, many students claimed the “tone” of oral feedback influenced their writing. As the old adage goes, “It’s not what you say but how you say it.” Several students said the reviewer’s voice helped them prioritize improvements; others claimed “tone” motivated them or increased their confidence, or both. Moreover, they were more likely to re-watch a five-minute video than re-read a comment. These findings indicate that JING does indeed offer wider learning dividends for students. Giving criticism on student work requires tact and sensitivity; the findings indicate that this is easier when the reviewer can express emotion with their voice.

The reviewers also evaluated the efficacy and efficiency of each method. All six reviewers claimed that when they wrote comments they were careful to demonstrate exemplary writing, which is time-consuming. Several reviewers also claimed that, compared with writing comments, they felt less fatigued using JING.

Spring, 2013

During the previous terms, reviewers provided feedback on a first-come, first-served basis. To validate the assumed feedback efficacy, each reviewer was assigned 10-12 students and monitored their progress on a given paper, which typically included an outline and several drafts before the reviewer scored the rubric that accompanied the last submission. Reviewers then rotated students for the next two papers.
Thus, each reviewer monitored around 30 students, and each student received feedback from 3 reviewers. As a result the sample was robust, with 7 TAs covering 10 assignments for all 75 students, or 750 screen-capture recordings. In addition, three rubrics were applied (one for each final paper). Fronter comments were omitted.

Generally speaking, all the students found the different feedback methods helpful to some extent, though JING ranked most effective (Table 4). Surprisingly, 82% claimed that JING was as effective as face-to-face mentoring. This claim might be misleading, because only about half the students agreed with the following statement: I found JING as helpful as visiting The Writing Centre.

Perhaps an explanation is in order. Our first question ranked JING relative to three other forms of feedback: tutorial consultation, written comments on the final papers, and scored rubrics. The second question compared it only with visiting the Centre for face-to-face consultation. Thus, this curious finding might be explained by the limitations of the survey: students could reasonably have rated both JING and face-to-face feedback as “very helpful” and still have preferred face-to-face feedback.

Regarding efficiency, three-fourths of the students felt JING saved them time and either motivated them or bolstered their confidence. More than half claimed that JING improved their writing (Table 5).

Discussion

Efficiency

Stannard (2007) asserts that a 2-minute video recording equates with about 400 words, or 1 page of text. Our JING recordings were limited to 5 minutes, which, over the 10 assignments of the entire course, was equal to about 25 pages of written feedback for each student. For an average class of 75 students, the reviewers would together need to write about 1875 pages, which is improbable for even a dozen reviewers.

The time reviewers spent on JING feedback averaged 15 minutes for an outline and 30 minutes for a paper.
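The page-equivalent estimate in the Efficiency discussion can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function name is hypothetical, and it assumes that every recording runs to the full 5-minute limit, so the result is an upper bound rather than a measured value.

```python
# Stannard (2007): a 2-minute recording equates with about 400 words, or 1 page.
MINUTES_PER_PAGE = 2

def page_equivalent(minutes_per_recording, recordings_per_student, students):
    """Return (pages per student, pages per class) of equivalent written feedback.

    Assumption (not measured in the study): each recording uses the full
    time limit, so both figures are upper bounds.
    """
    pages_per_recording = minutes_per_recording / MINUTES_PER_PAGE
    pages_per_student = pages_per_recording * recordings_per_student
    pages_per_class = pages_per_student * students
    return pages_per_student, pages_per_class

# Spring 2013 figures from the text: 5-minute limit, 10 assignments, 75 students.
per_student, per_class = page_equivalent(5, 10, 75)
total_recordings = 10 * 75

print(per_student)       # 25.0 pages per student
print(per_class)         # 1875.0 pages for the class
print(total_recordings)  # 750 screen-capture recordings
```

Changing the first argument to a shorter average recording length shows how quickly the written-page equivalent falls if reviewers do not use the full limit.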
Admittedly, the papers were limited to 300-700 words, and a typical 8 to 10-page term paper would probably take more time, but not necessarily. Time depends on what, and to what extent, the instructor is evaluating. Nevertheless, talking consumes less time than writing, especially if reviewers are acutely aware of their own writing, as in teaching academic writing.

Efficacy

Class discussions at the end of the last two terms revealed an expected and understandable finding: students felt a connection with those providing feedback. This was evident in Mathisen’s (2012, p. 109) study with JING, in which students had a feeling of being “seen” by the teacher. Combining verbal and visual feedback within a screen-cast context gives the sense that the feedback provider is with the student.

Our study, however, went one step further than Mathisen’s evaluation. Our reviewers also felt a connection with their students. By reviewing two or more rewrites, the TAs could see whether or not their suggestions were applied. As a result they could see as well as be seen, and judge the effect of their feedback. In other words, the reviewers could evaluate the relative efficacy of JING for each student. This is where tone played an important function in verbal feedback: a favorable tone for those applying the reviewer’s advice, a harsh tone for those ignoring it, and an encouraging tone for those apparently trying but not quite succeeding. Continuous use of JING over more than one assignment might be likened to a discussion: one where the student speaks with written changes and the reviewer responds with praise, evaluation and further suggestions.

Conclusion

In the final analysis, our findings indicate that JING not only offers a good alternative to written feedback, but can also provide additional advantages in both efficacy and efficiency of feedback. Indeed, the quantitative survey revealed that JING was the most effective long-distance feedback method under review.
Meanwhile, the qualitative responses from the students suggest that using JING pays large learning dividends by helping to build a rapport with students that offsets the difficulties associated with giving critical feedback.

However, JING cannot yet substitute perfectly for a face-to-face consultation. Although 82% of the third-term students claimed that JING was as helpful as face-to-face conversation with a TA, only about half said it was as helpful as visiting The Writing Centre. Mathisen (2012) found a similar response: a fourth of his students claimed that video feedback produced the same learning dividend as discussion with their teacher. Ultimately, at the Writing Centre we decided that it was best to continue with JING for weekly feedback and provide an option for one 30-minute face-to-face consultation per week with a TA.

Recommendations: a guide to using JING

Reviewer efficiency and efficacy came with experience. At the beginning of the third term, the reviewers conducted a peer-review workshop to critique their previous JING feedback. The reviewers suggested following a feedback protocol, as opposed to making comments while reading the assignment (see note 1):

• Be aware of your tone of voice: try to sound authoritative but also positive.
• Note passages to comment on with color highlights or Track Changes balloon callouts.
• Begin recording with a positive comment, even if it is minor, e.g., I’m pleased that you submitted the assignment on time.
• Tell the student what to expect at the beginning of the feedback and specify the key areas the student needs to focus on.
• If color-coded highlights are used, explain their meaning, e.g., You’ll notice certain passages are highlighted:
  o Green indicates well written passages that I’ll discuss;
  o Yellow passages are worrisome, and I suggest modifying;
  o Red passages need to be rewritten or omitted.
• Conclude with a motivational suggestion, e.g., I encourage you to watch the video on paraphrasing that is posted on Fronter (see note 2).
Obviously, these recommendations were intended for teaching assistants giving feedback on writing tasks. Nonetheless, tone of voice, structure, and explaining color coding will likely remain important for feedback on most student work. Also, we recommend that teachers considering JING hold a collective feedback workshop once the reviewers have had some experience. Indeed, the consensus amongst the reviewers was that the process itself had proved extremely helpful in terms of sharing good practices and building confidence.

1 The guide to JING, “Jingback”, that the workshop produced is available on The Learning Centre website.
2 The writing courses have several videos on topics such as paraphrasing, referencing, clarity, and concision.

References

Lumadue, R., and Fish, W. (2010). A technologically based approach to providing quality feedback to students: A paradigm shift for the 21st century. Academic Leadership, the online journal, 8 (1).

Mathisen, P. (2012). Video feedback in higher education – a contribution to improving the quality of written feedback. Nordic Journal of Digital Literacy, 7 (2).

Stannard, R. (2007). Using screen capture software in student feedback. HEA English Subject Centre Commissioned Case Studies. Available at http://www.english.heacademy.ac.uk/explore/publications/casestudies/technology/camtasia.php (accessed 28 October 2013).

Appendix of Tables

Table 1. Efficacy (helpfulness) of 5 feedback methods evaluated by 29 ELS students.

Table 2. Efficacy (helpfulness) of 5 feedback methods evaluated by writing students (N = 80).

Table 3. Students’ (N = 80) preference for JING as expressed in the following statement:

Table 4. Efficacy of 4 feedback methods evaluated by academic writing students (N = 75).

Table 5. Student response to the following statements based upon 10 assignments (N = 75).