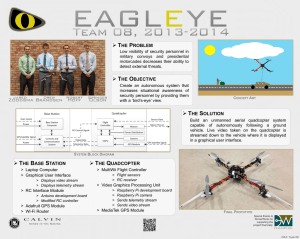

Third-person Immersion
