i Use Authorization - Idaho State University

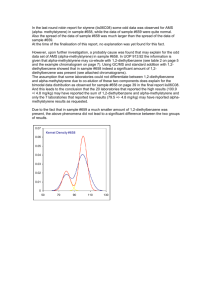
