Action Systems in Pipelined Processor Design

J. Plosila

University of Turku, Department of Applied Physics,

Laboratory of Electronics and Information Technology,

FIN-20014 Turku, Finland,

tel: +358-2-333 6657,

fax: +358-2-333 5070,

email: juplos@utu.fi

K. Sere

Åbo Akademi University, Department of Computer Science,

FIN-20520 Turku, Finland,

tel: +358-2-265 4537,

fax: +358-2-265 4732,

email: Kaisa.Sere@abo.fi

Turku Centre for Computer Science

TUCS Technical Report No 54

October 1996

ISBN 951-650-858-8

ISSN 1239-1891

Abstract

We show that the action systems framework combined with the refinement calculus is a powerful method for handling a central problem in hardware design, the design of pipelines. We present a methodology for developing asynchronous pipelined microprocessors relying on this framework. Each functional unit of the processor is derived stepwise, which leads to a structured and modular design. The handling of different hazard situations is realized when verifying refinement steps. Our design is carried out with circuit implementation using speed-independent techniques in mind.

Keywords: action systems, refinement, microprocessors, pipelines, asynchronous circuits

TUCS Research Group

Programming Methodology Research Group

1 Introduction

The design of pipelines is an important and complicated basic activity in hardware design [17, 14]. We show how the action systems framework combined with the refinement calculus is used to design an asynchronous pipelined microprocessor. Action systems have proved to be well suited to the design of parallel and distributed systems [2, 4, 3]. They are similar to UNITY programs [6], which have an associated temporal logic. The design of and reasoning about action systems is carried out within the refinement calculus, which is based on the use of predicate transformers. The refinement calculus for sequential programs has been studied by several researchers [1, 12, 13].

Our starting point is a conventional sequential program describing the behaviour of the processor. Via a number of refinement steps we arrive at a non-trivial pipelined processor in which several components can work concurrently. The main refinement technique used is atomicity refinement [4], where the granularity of an action is changed. We also utilize the idea of decomposing an action system into a number of systems working in parallel and communicating asynchronously via channels. Compared to our previous work [3], we give here a lower-level derivation of the pipeline. Furthermore, we present ways to handle pipeline hazards and stalling within the framework.

We show how a realistic pipelined processor emerges step by step. At each step we concentrate on the design of one functional unit of the processor. The steps follow a very clear pattern and are easily mechanizable. The basic strategy in the derivation is to realize five pipeline stages: (1) instruction fetch, (2) instruction decode, (3) execution, (4) memory access, and (5) write back. The parallel operation of these stages is made possible by inserting buffering assignments into the data path, which corresponds to adding storage devices, i.e., registers, between the stages. The decomposition process does not directly take a stand on whether the data path should be realized using the single-rail bundled data convention or the delay-insensitive dual-rail code [8]. Both approaches are possible in principle. However, the style in which the control and data paths are separated from each other here is characteristic of the speed-independent bundled data approach.

Asynchronous microprocessors have been modelled and designed by several research groups [11, 7, 10, 16]. What makes our approach different is the use of refinement calculus-based transformation rules. We have identified the relevant language constructs and refinement rules for pipeline design. We demonstrate that the same design method can be used for extracting the functional components, dividing the control and data paths, and taking care of the hazard situations. Only a small, limited set of transformation rules and refinement techniques is necessary. The main advantage we gain is that, in addition to having a uniform formalism to rely on during the design, each design step can be formally verified correct within the refinement calculus. This can be done at every design phase, not only in the high-level derivation, but also in transforming a program into an implementable gate-level form containing boolean variables only [15]. Furthermore, we believe that the derivation presented here is useful in understanding the ideas behind pipelined processors and the difficulties in managing different hazard situations.

We proceed as follows. In section 2 we present the action systems framework together with the needed refinement calculus concepts. The design methodology is sketched in section 3. The design of the pipelined processor is presented in sections 4-7. We end with some concluding remarks in section 8.

2 Action systems

Actions An action is a guarded command of the form $g \rightarrow S$, where $g$ is a boolean condition, the guard, and $S$, the body, is any statement in the guarded command language [9]. This language includes assignment, sequential composition, conditional choice and iteration, and is defined using weakest precondition predicate transformers. An action $A$ is said to be enabled when its guard is true. The guard of an action $A$ is denoted by $gA$ and the body by $sA$.

We also use the following constructs:

Choice: The action $A_1 \,[]\, A_2$ tries to choose an enabled action from $A_1$ and $A_2$, the choice being nondeterministic when both are enabled.

Sequencing: The sequential composition of two actions $A_1 ; A_2$ first behaves as $A_1$ if this is enabled, then as $A_2$ if this is enabled at the termination of $A_1$. Sequencing forces $A_1$ and $A_2$ to be exclusive. The scope of the sequential composition is indicated with parentheses, for example $A ; (B \,[]\, C)$.

Action systems An action system has the form

$$\mathcal{A} = |[\ \mathbf{var}\ x;\ I;\ \mathbf{do}\ A_1 \,[]\, \ldots \,[]\, A_m\ \mathbf{od}\ ]| : z.$$

The action system $\mathcal{A}$ is initialised by the action $I$. Then, repeatedly, an enabled action from $A_1, \ldots, A_m$ is nondeterministically selected and executed. The action system terminates when no action is enabled.

The local variables of A are the variables x and the global variables of A are the variables

z . The local and global variables are assumed to be distinct. The state variables of A consist

of the local variables and the global variables. The actions are allowed to refer to all the state

variables of an action system.

The actions are taken to be atomic. Therefore two actions that do not have any variables

in common can be executed in any order, or simultaneously. Hence, we can model parallel

programs with action systems taking the view of interleaving action activations.
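The execution model just described can be sketched in a few lines of Python. This is only an illustrative sketch, not part of the report: the function name `run_action_system`, the representation of an action as a `(guard, body)` pair over a dictionary state, and the use of a pseudo-random generator to model nondeterministic choice are all assumptions made for this example.

```python
import random

def run_action_system(state, actions, rng=None, max_steps=10_000):
    """Repeatedly execute one enabled action until no action is enabled.

    `actions` is a list of (guard, body) pairs; both receive the state dict.
    The body updates the state in place and is treated as atomic.
    """
    rng = rng or random.Random(0)
    for _ in range(max_steps):
        enabled = [body for guard, body in actions if guard(state)]
        if not enabled:
            return state  # termination: no action is enabled
        rng.choice(enabled)(state)  # nondeterministic choice, modelled by a PRNG
    raise RuntimeError("no termination within max_steps")

# Hypothetical example system: two actions move tokens from x and y into z.
def move_x(s):
    s["x"] -= 1
    s["z"] += 1

def move_y(s):
    s["y"] -= 1
    s["z"] += 1

actions = [
    (lambda s: s["x"] > 0, move_x),
    (lambda s: s["y"] > 0, move_y),
]

final = run_action_system({"x": 3, "y": 2, "z": 0}, actions)
print(final)
```

Whatever order the two actions are interleaved in, the system terminates in the same final state, because each action is atomic and the guards eventually become false.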

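Parallel composition Consider two action systems $\mathcal{A}$ and $\mathcal{B}$,

$$\mathcal{A} = |[\ \mathbf{var}\ x;\ I;\ \mathbf{do}\ A_1 \,[]\, \ldots \,[]\, A_m\ \mathbf{od}\ ]| : z$$

$$\mathcal{B} = |[\ \mathbf{var}\ y;\ J;\ \mathbf{do}\ B_1 \,[]\, \ldots \,[]\, B_n\ \mathbf{od}\ ]| : v$$

where $x \cap y = \emptyset$. We define the parallel composition $\mathcal{A} \parallel \mathcal{B}$ of $\mathcal{A}$ and $\mathcal{B}$ to be the action system

$$\mathcal{C} = |[\ \mathbf{var}\ x, y;\ I;\ J;\ \mathbf{do}\ A_1 \,[]\, \ldots \,[]\, A_m \,[]\, B_1 \,[]\, \ldots \,[]\, B_n\ \mathbf{od}\ ]| : z, v.$$

Thus, parallel composition combines the state spaces of the two constituent action systems, merging the global variables and keeping the local variables distinct.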
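As a rough illustration of this definition, the following Python sketch composes two systems by concatenating their action lists and merging their local states, rejecting overlapping local variables. The dictionary layout (`local`, `actions`), the helper names `parallel` and `run`, and the producer/consumer example with the shared variable `buf` are assumptions of this sketch, not notation from the report.

```python
def parallel(sys_a, sys_b):
    """Parallel composition: union of the actions, disjoint union of the locals."""
    overlap = set(sys_a["local"]) & set(sys_b["local"])
    if overlap:
        raise ValueError(f"local variables must be distinct: {sorted(overlap)}")
    return {
        "local": {**sys_a["local"], **sys_b["local"]},
        "actions": sys_a["actions"] + sys_b["actions"],
    }

def run(system, global_state, max_steps=1000):
    """Execute enabled actions until none is enabled (first enabled one is picked)."""
    state = {**global_state, **system["local"]}
    for _ in range(max_steps):
        enabled = [body for guard, body in system["actions"] if guard(state)]
        if not enabled:
            return state
        enabled[0](state)
    raise RuntimeError("no termination within max_steps")

# Hypothetical components that interact only through the global variable buf.
producer = {
    "local": {"sent": 0},
    "actions": [(lambda s: s["buf"] is None and s["sent"] < 3,
                 lambda s: s.update(buf=s["sent"], sent=s["sent"] + 1))],
}
consumer = {
    "local": {"got": []},
    "actions": [(lambda s: s["buf"] is not None,
                 lambda s: s.update(got=s["got"] + [s["buf"]], buf=None))],
}

result = run(parallel(producer, consumer), {"buf": None})
print(result["got"])
```

Neither component terminates on its own account here: the composed system stops only when no action of either component is enabled, which matches the remark that termination is a global property.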

The behaviour of a parallel composition of action systems is dependent on how the individual

action systems, the reactive components, interact with each other via the global variables that

are referenced in both components. We have for instance that a reactive component does not

terminate by itself: termination is a global property of the composed action system.

Hiding and revealing variables In the sequel we will explicitly denote, with a bullet notation, the action system where the global variables are declared: the action system $v \bullet \mathcal{A} : z$ accesses the global variables $v$ and $z$. The variables $v$ are declared within $\mathcal{A}$, whereas the variables $z$ are declared in some reactive component of $\mathcal{A}$. We can hide some of the variables $v$ by removing them from the list $v$. Hiding the variables makes them inaccessible from other actions outside $\mathcal{A}$ in a parallel composition. Hiding thus has an effect only on the global variables declared in $\mathcal{A}$. The opposite operation, revealing, is also useful.

In connection with the parallel composition below we will use the following convention. Let $a_1 \bullet \mathcal{A} : a_2$ and $b_1 \bullet \mathcal{B} : b_2$ be two action systems. Then their parallel composition is the action system $a_1, b_1 \bullet \mathcal{A} \parallel \mathcal{B} : a_2, b_2$. Sometimes there is no need to reveal all these identifiers, i.e., when they are exclusively accessed by the two component action systems $\mathcal{A}$ and $\mathcal{B}$. This effect is achieved with the following construct, which turns out to be extremely useful later: $v \bullet |[\ \mathcal{A} \parallel \mathcal{B}\ ]| : c$. Here the identifiers $v$ are a subset of $a_1, b_1$. In the sequel we will often omit the variable lists $v$ and $c$ when they are clear from the context.

Refinement Action systems are intended to be developed in a stepwise manner within the refinement calculus. In the processor derivation, atomicity refinement is used as the main tool. Here we briefly describe these techniques.

The refinement calculus is based on the following definition. Let $S$ and $S'$ be statements. The statement $S$ is refined by the statement $S'$, denoted $S \leq S'$, if

$$\forall Q.\ (wp(S, Q) \Rightarrow wp(S', Q)).$$

Refinement between actions is defined similarly, where the weakest precondition for an action $A$ is defined as $wp(A, Q) \mathrel{\widehat{=}} gA \Rightarrow wp(sA, Q)$. This usual refinement relation is reflexive and transitive. It is also monotonic with respect to most of the action constructors used here, e.g. choice and sequencing, see [5]. (Refinement between actions does not necessarily imply refinement between action systems.)

Properties of actions We define some properties of actions that will be useful in describing the atomicity refinement rule for action systems.

A predicate $I$ is invariant over an action $A$ if $I \Rightarrow wp(A, I)$ holds. The way in which actions can enable and disable each other is captured by the following definitions.

$$A \text{ cannot enable } B \;\mathrel{\widehat{=}}\; \neg gB \Rightarrow wp(A, \neg gB)$$

$$A \text{ cannot disable } B \;\mathrel{\widehat{=}}\; gB \Rightarrow wp(A, gB)$$

$$A \text{ excludes } B \;\mathrel{\widehat{=}}\; gA \Rightarrow \neg gB.$$

Another important set of properties has to do with commutativity of actions. We say that $A$ commutes with $B$ if $A ; B \leq B ; A$. A sufficient condition for two actions $A$ and $B$ to commute is that there are no read-write conflicts for the variables that they access: none of the variables written by $A$ is read or written by $B$, and vice versa.
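For actions with deterministic, always-terminating bodies over a small finite state space, these properties can be checked by brute force. The sketch below is an illustration under exactly those assumptions; the function names and the representation of an action as a `(guard, body)` pair, with `body` returning a new state, are hypothetical. The two example actions mirror a request/acknowledge pair on a variable `fd`.

```python
def wp(action, post):
    """wp(A, Q) = gA => wp(sA, Q). For a deterministic, terminating body,
    wp(sA, Q) holds in s exactly when Q holds in body(s)."""
    guard, body = action
    return lambda s: (not guard(s)) or post(body(s))

def cannot_enable(a, b, states):
    """not gB => wp(A, not gB), checked in every state."""
    not_gb = lambda s: not b[0](s)
    return all(wp(a, not_gb)(s) for s in states if not_gb(s))

def cannot_disable(a, b, states):
    """gB => wp(A, gB), checked in every state."""
    gb = b[0]
    return all(wp(a, gb)(s) for s in states if gb(s))

def excludes(a, b, states):
    """gA => not gB, checked in every state."""
    return all(not b[0](s) for s in states if a[0](s))

# Hypothetical two-valued channel variable fd.
states = [{"fd": v} for v in ("ack", "req")]
A0 = (lambda s: s["fd"] == "ack", lambda s: {**s, "fd": "req"})
A1 = (lambda s: s["fd"] == "req", lambda s: {**s, "fd": "ack"})

print(excludes(A1, A0, states))        # the guards are mutually exclusive
print(cannot_enable(A0, A1, states))   # A0 sets fd to req, which enables A1
print(cannot_disable(A0, A1, states))  # A0 is never enabled while A1 is
```

The same enumeration idea does not scale to realistic state spaces; it is meant only to make the three definitions concrete.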

Atomicity refinement The refinement calculus can be used to derive special purpose transformation rules to be used within program development. The following theorem expresses one such rule that will be applied repeatedly in our design. It is the so-called atomicity refinement rule, which is a powerful tool when developing parallel and distributed systems [4]. It gives the conditions under which the atomicity of an action can be changed by transforming one big atomic action ($b_0 \rightarrow S_0;\ \mathbf{do}\ A_1\ \mathbf{od}$) into a number of smaller actions ($b_0 \rightarrow S_0$ and $A_1$).

Theorem 1

$$\{Q\};\ \mathbf{do}\ (b_0 \rightarrow S_0;\ \mathbf{do}\ A_1\ \mathbf{od}) \,[]\, L \,[]\, R\ \mathbf{od} \;\leq\; \mathbf{do}\ (b_0 \rightarrow S_0) \,[]\, A_1 \,[]\, L \,[]\, R\ \mathbf{od}$$

provided that

(i) $Q \Rightarrow \neg gA_1$,

(ii) $\{L, R\}$ cannot enable or disable $A_1$,

(iii) $A_0 = b_0 \rightarrow S_0$ is excluded by $A_1$ and

(iv) the actions in $\{L, R\}$ that are not excluded by $\{A_0, A_1\}$ are such that

(a) for each $i = 0, 1$, $L$ commutes with $A_i$,

(b) $A_1$ commutes with $R$,

(c) $L$ commutes with $R$ and

(d) $\mathbf{do}\ R\ \mathbf{od}$ always terminates.

Observe that above $A_1$, $L$ and $R$ can each model a nondeterministic choice of actions. Furthermore, when the rule is applied to an action system $\mathcal{A}$ in parallel composition with another action system $\mathcal{B}$, the actions $L$ and $R$ above might also come from the system $\mathcal{B}$. Hence, we need to consider the entire system $|[\ \mathcal{A} \parallel \mathcal{B}\ ]|$.

The atomicity refinement rule is very general. It can, however, be used to derive more specialized rules, dedicated towards circuit design. Below we give one such rule:

Corollary 1

$$\{Q\};\ \mathbf{do}\ (b_0 \rightarrow S_0;\ \mathbf{do}\ A_1 ; A_2\ \mathbf{od}) \,[]\, L \,[]\, R\ \mathbf{od} \;\leq\; \mathbf{do}\ ((b_0 \rightarrow S_0) ; A_1 ; A_2) \,[]\, L \,[]\, R\ \mathbf{od}$$

provided that

(i) $Q \Rightarrow \neg gA_1$ and

(ii) the actions in $\{L, R\}$ that are not excluded by $\{A_0 = b_0 \rightarrow S_0, A_1, A_2\}$ are such that

(a) for each $i = 0..2$, $L$ commutes with $A_i$,

(b) for each $i = 1..2$, $A_i$ commutes with $R$,

(c) $L$ commutes with $R$ and

(d) $\mathbf{do}\ R\ \mathbf{od}$ always terminates.

3 Initial specification and the design approach

The approach we use in our design is as follows. We start from an initial specification that describes the behaviour of the microprocessor as one big action that is continuously executed. This system is stepwise refined into a number of smaller atomic actions, and the single system is decomposed into a number of reactive components modelling the different functional parts of the microprocessor. At each design step one functional component is identified and extracted from the rest of the design. The following refinements are carried out:

Buffering assignment: The state of the relevant data variables is copied to the new component: $x := f(y) \;\leq\; |[\ \mathbf{var}\ y';\ y' := y;\ x := f(y')\ ]|$.

Communication channel: A communication channel is introduced between the component and the rest of the design. Here a channel is a variable $c$ which in the simplest case has two values: the value req ("request") is assigned by the active system and the value ack ("acknowledgement") by the passive system. The initial state of such a channel is ack. The sequential composition operator ';' is used as a powerful tool for separating the requesting action from the action that waits for the acknowledgement.

Atomicity refinement: The action system is brought into a form where the atomicity refinement theorem can be applied, and thereafter the rule is applied. When the theorem is applied and its conditions are checked, the different hazard situations arise as actions that do not commute as required by the theorem.

Decomposition: The system is decomposed using the definition of parallel composition of action systems, making the necessary adjustments for revealing variables.
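The channel convention in the list above (initial value ack, req assigned by the active side, ack by the passive side, and a separate action that waits for the acknowledgement) can be illustrated with a small simulation. Everything in this sketch is an assumption made for illustration: the function `handshake`, the action names, and the boolean `waiting`, which stands in for the sequential composition of the requesting action and the waiting action.

```python
def handshake(n_requests):
    """Simulate an active and a passive component sharing one channel c."""
    s = {"c": "ack", "waiting": False, "sent": 0, "served": 0}
    log = []

    # Each entry is (name, guard, body); bodies are atomic state updates.
    actions = [
        # Active side: issue a request when idle and the channel is free.
        ("request",
         lambda: not s["waiting"] and s["c"] == "ack" and s["sent"] < n_requests,
         lambda: s.update(c="req", waiting=True, sent=s["sent"] + 1)),
        # Passive side: do the work and acknowledge.
        ("serve",
         lambda: s["c"] == "req",
         lambda: s.update(c="ack", served=s["served"] + 1)),
        # Active side: the separate action that waits for the acknowledgement.
        ("release",
         lambda: s["waiting"] and s["c"] == "ack",
         lambda: s.update(waiting=False)),
    ]

    while True:
        enabled = [(name, body) for name, guard, body in actions if guard()]
        if not enabled:
            return s, log
        name, body = enabled[0]
        body()
        log.append(name)

state, log = handshake(2)
print(log)
```

In this sketch at most one action is enabled at any time, so the trace is fully determined; in the processor derivation several channels are active at once, which is where the commutativity conditions of the theorem become relevant.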

In the following derivation the atomic entities, actions, are enclosed in angle brackets '<>' in order to make the programs easier to read. The initial description of the microprocessor is given as

P :: imem, dmem
  |[ var i, pc, imem[0..l], dmem[0..m];
     pc := pc0;
     do < true → i := imem[pc];
            if i.t = R → pc, reg[i.c] := pc + 1, aluf(reg[i.a], reg[i.b], i.f)
            [] i.t = ld → pc, reg[i.c] := pc + 1, dmem[reg[i.a] + i.offs]
            [] i.t = st → pc, dmem[reg[i.a] + i.offs] := pc + 1, reg[i.b]
            [] i.t = be →
                 if reg[i.a] ≠ reg[i.b] → pc := pc + 1
                 [] reg[i.a] = reg[i.b] → pc := pc + i.offs
                 fi
            fi >
     od
  ]|

where the variable i contains an instruction fetched from the instruction memory imem. The variable pc is the program counter of the processor, pointing to the instruction to be fetched. An instruction is a record of six fields: i = (t, a, b, c, f, offs). The field t identifies the type of the instruction: R denotes an arithmetic-logical or R operation specified in the field f, which is a parameter of the function aluf; ld is a load operation from the data memory dmem to the register bank reg; st is a store operation from the register bank to the data memory; and, finally, be denotes a "branch when equal" operation. The fields a and b contain the numbers of the read registers needed by the instruction, and c the number of the write register. The offset field offs contains the relative address used by the load, store and branch instructions.
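To make the initial specification concrete, the following Python sketch executes the same four instruction types sequentially. It is an illustration only: the `Instr` dataclass, the two-operation `aluf`, and the rule that the run stops when pc leaves the instruction memory are assumptions of this sketch (the action in P has the guard true and the report leaves aluf unspecified).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Instr:
    t: str            # instruction type: "R", "ld", "st" or "be"
    a: int = 0        # first read register
    b: int = 0        # second read register
    c: int = 0        # write register
    f: str = "add"    # ALU function selector (hypothetical encoding)
    offs: int = 0     # offset for ld, st and be

def aluf(x, y, f):
    """Hypothetical ALU with two operations; the report does not define aluf."""
    return {"add": x + y, "sub": x - y}[f]

def step(pc, reg, dmem, imem):
    """One execution of the single big action of P; returns the new pc."""
    i = imem[pc]
    if i.t == "R":
        reg[i.c] = aluf(reg[i.a], reg[i.b], i.f)
        return pc + 1
    if i.t == "ld":
        reg[i.c] = dmem[reg[i.a] + i.offs]
        return pc + 1
    if i.t == "st":
        dmem[reg[i.a] + i.offs] = reg[i.b]
        return pc + 1
    if i.t == "be":
        return pc + i.offs if reg[i.a] == reg[i.b] else pc + 1
    raise ValueError(f"unknown instruction type {i.t!r}")

def run(imem, reg, dmem, pc=0):
    # Assumption: stop when pc leaves the program instead of looping forever.
    while 0 <= pc < len(imem):
        pc = step(pc, reg, dmem, imem)
    return pc

imem = [
    Instr("ld", a=0, c=1, offs=0),      # reg[1] := dmem[0]
    Instr("ld", a=0, c=2, offs=1),      # reg[2] := dmem[1]
    Instr("be", a=1, b=2, offs=2),      # not taken: reg[1] differs from reg[2]
    Instr("R", a=1, b=2, c=3, f="add"), # reg[3] := reg[1] + reg[2]
    Instr("st", a=0, b=3, offs=2),      # dmem[2] := reg[3]
]
reg, dmem = [0, 0, 0, 0], [5, 7, 0]
final_pc = run(imem, reg, dmem)
print(final_pc, reg, dmem)
```

The pipelined systems derived in the following sections are refinements of this behaviour, so a model of this kind can serve as a reference when experimenting with the later stages.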

4 Instruction fetching and decoding

Extracting the fetch and program counter units We start the derivation by separating the fetch unit $F$ and its close partner, the program counter unit $Pc$, from the initial system $P$. In this case, auxiliary buffering assignments are not needed, because the variables i and pc can be regarded as buffers. Let us consider the fetch unit more closely.

We introduce an auxiliary variable fd with the values req and ack by refining the system $P$ into

P_1 :: imem, dmem
  |[ var fd, i, pc, imem[0..l], dmem[0..m];
     fd, pc := ack, pc0;
     do < fd = ack → i, fd := imem[pc], req; if "as before" fi; fd := ack > od
  ]|

This is a trivial transformation because at this point the variable fd, even though it will later model a communication channel, is only an internal variable.

We bring the system $P_1$ into the form $P_2$, where the atomicity refinement theorem can be applied:

P_2 :: imem, dmem
  |[ var fd, i, pc, imem[0..l], dmem[0..m];
     fd, pc := ack, pc0;
     do < fd = ack → i, fd := imem[pc], req; do fd = req → if "as before" fi; fd := ack od >
     od
  ]|

The above operation is only a syntactic trick: the systems $P_1$ and $P_2$ are actually equivalent. Referring to Theorem 1, $Q$, $A_0$, and $A_1$ correspond to $(fd = ack \wedge pc = pc0)$, < fd = ack → i, fd := imem[pc], req >, and < fd = req → if … fi >, respectively. The actions $L$ and $R$ do not exist in this case. Hence we only have to check the conditions (i) and (iii) of Theorem 1:

(i) $(fd = ack \wedge pc = pc0 \Rightarrow \neg(fd = req)) \equiv true$

(iii) $(fd = req \Rightarrow \neg(fd = ack)) \equiv true$

Hence, according to the theorem, we can refine the single action of $P_2$ into two atomic actions, yielding the system

P_3 :: imem, dmem
  |[ var fd, i, pc, imem[0..l], dmem[0..m];
     fd, pc := ack, pc0;
     do < fd = ack → i, fd := imem[pc], req > [] < fd = req → if "as before" fi; fd := ack > od
  ]|

The last step is quite straightforward: the above system $P_3$ is decomposed into two separate systems by the definition of parallel composition. In other words, $P_3 \leq |[\ F \parallel P^1\ ]|$, where

F :: fd, i, imem
  |[ var i, fd, imem[0..l];
     fd := ack;
     do < fd = ack → i, fd := imem[pc], req > od
  ]| : pc

P^1 :: dmem
  |[ var fd, i, pc, dmem[0..m];
     pc := pc0;
     do < fd = req → if "as before" fi; fd := ack > od
  ]|

The program counter unit $Pc$ is extracted, beginning from the above system $P^1$, in basically the same manner. We have that $P^1 \leq |[\ Pc \parallel P^2\ ]|$, where

Pc :: pc
  |[ var pc;
     pc := pc0;
     do < p = inc → pc, p := pc + 1, ack > [] < p = load → pc, p := iaddr, ack > od
  ]| : p, iaddr

P^2 :: p, iaddr, dmem
  |[ var p, reg[0..k], iaddr, dmem[0..m];
     p := ack;
     do < fd = req →
            if i.t = R → p, reg[i.c] := inc, aluf(reg[i.a], reg[i.b], i.f)
            [] i.t = ld → p, reg[i.c] := inc, dmem[reg[i.a] + i.offs]
            [] i.t = st → p, dmem[reg[i.a] + i.offs] := inc, reg[i.b]
            [] i.t = be →
                 if reg[i.a] ≠ reg[i.b] → p := inc
                 [] reg[i.a] = reg[i.b] → iaddr, p := pc + i.offs, load
                 fi
            fi >;
        < p = ack → fd := ack >
     od
  ]| : fd, i, pc

The case is now somewhat more complex, because the single action of $P^1$ is actually divided into three parts instead of two as in the previous refinement. However, the same atomicity refinement theorem can be applied. The required channel variable is now p, which has three values: the requests inc (increment the counter) and load (load the counter), and an acknowledgement ack. Observe that in $P^2$ the sending of a request and the receipt of the corresponding acknowledgement take place in two separate actions separated by the semicolon operator.

Extracting the decode unit Next we separate the decode unit $D$ from $P^2$. The job of this system is to separate the fields of the incoming instruction and to read the needed registers. By introducing the channel dx we refine, using the same approach as above, $P^2 \leq |[\ D \parallel P^3\ ]|$, where

D :: dx, p, t, c, f, offs, A, B, pc'
  |[ var i', dx, p, t, a, b, c, offs, f, A, B, pc'; p, dx := ack, ack;
     do < fd = req ∧ dx = ack → i', pc' := i, pc >;
        ( ( < i'.t ≠ be →
                if i'.t = R → t, a, b, c, f := i'.t, i'.a, i'.b, i'.c, i'.f; A, B := reg[a], reg[b]
                [] i'.t = ld → t, a, c, offs := i'.t, i'.a, i'.c, i'.offs; A := reg[a]
                [] i'.t = st → t, a, b, offs := i'.t, i'.a, i'.b, i'.offs; A, B := reg[a], reg[b]
                fi;
                p := inc >;
            < p = ack → dx, fd := req, ack > )
        [] ( < i'.t = be → t, a, b, offs := i'.t, i'.a, i'.b, i'.offs; A, B, dx := reg[a], reg[b], req >;
             < dx = ack → fd := ack > ) )
     od
  ]| : fd, i, reg, pc

P^3 :: iaddr, reg, dmem
  |[ var reg[0..k], iaddr, dmem[0..m];
     do < dx = req ∧ t ≠ be →
            if t = R → reg[c] := aluf(A, B, f)
            [] t = ld → reg[c] := dmem[A + offs]
            [] t = st → dmem[A + offs] := B
            fi;
            dx := ack >
     [] ( < dx = req ∧ t = be →
              if A ≠ B → p := inc
              [] A = B → iaddr, p := pc' + offs, load
              fi >;
          < p = ack → dx := ack > )
     od
  ]| : dx, p, t, c, f, offs, A, B, pc'

Note that we have now used a buffering assignment, where the values of the variables i and pc are copied into the local variables $i'$ and $pc'$, respectively. The effect is that the systems $F$ and $P^3$ can execute concurrently if $i'.t \neq be$. Furthermore, communication through the channel p is distributed to both of the above systems, because in the case of the branch instruction we must postpone the program counter update until the comparison between the registers reg[a] and reg[b] has been completed. In the case of an R, load, or store instruction we can update the counter earlier. This distribution of the channel p is valid since the p-communications in the systems are mutually exclusive.

5 Execution and hazard handling

Extracting the execution unit The execution unit $X$, given below, contains the ALU functions and the memory address computations. Observe that the jump address (iaddr) computation skips the buffering assignment and uses the old variables $t$, $A$, $B$ and $offs$ instead of the new ones $t'$, $A'$, $B'$ and $offs'$. This arrangement makes the execution of the branch instruction more efficient in practice. The trick is possible because a new instruction is not fetched before the branch instruction has been completed.

The system $X$ is obtained from the system $P^3$ by introducing the new channel xm and refining $|[\ D \parallel P^3\ ]| \leq |[\ D \parallel X \parallel P^4\ ]|$ with

X :: xm, t', iaddr, daddr, c', C1
  |[ var xm, iaddr, daddr, t', c', offs', f', A', B', C1;
     xm := ack;
     do < dx = req ∧ t ≠ be ∧ xm = ack →
            if t = R → t', c', f', A', B' := t, c, f, A, B; C1 := aluf(A', B', f')
            [] t = ld → t', c', offs', A' := t, c, offs, A; daddr := A' + offs'
            [] t = st → t', offs', A', B' := t, offs, A, B; daddr := A' + offs'
            fi;
            xm, dx := req, ack >
     [] ( < dx = req ∧ t = be →
              if A ≠ B → p := inc
              [] A = B → iaddr, p := pc' + offs, load
              fi >;
          < p = ack → dx := ack > )
     od
  ]| : dx, p, t, c, f, offs, A, B, pc'

P^4 :: reg, dmem
  |[ var reg[0..k], dmem[0..m];
     do < xm = req →
            if t' = R → reg[c'] := C1
            [] t' = ld → reg[c'] := dmem[daddr]
            [] t' = st → dmem[daddr] := B'
            fi;
            xm := ack >
     od
  ]| : xm, t', c', B', C1, daddr

Application of the atomicity refinement theorem reveals that the action in $P^4$ and a corresponding $L$-action in the system $D$, in fact a register read operation, do not exclude each other, yet they do not commute either. This violates the condition (iv)(a) of Theorem 1, which requires that such an $L$-action commutes with the inner action $A_1$, which in this case is the action of $P^4$. Hence, the above refinement is valid only under certain constraints, i.e., if the processor satisfies the invariant

$$I_{haz} \mathrel{\widehat{=}} \neg\big(((i'.t \neq ld) \wedge haz_1) \vee ((i'.t = ld) \wedge haz_2)\big)$$

where

$$haz_1 \mathrel{\widehat{=}} (xm = req) \wedge (t' = R \vee t' = ld) \wedge (i'.a = c' \vee i'.b = c')$$

$$haz_2 \mathrel{\widehat{=}} (xm = req) \wedge (t' = R \vee t' = ld) \wedge (i'.a = c')$$

This is justified as follows. The violation occurs when (1) the type of the instruction in the system $P^4$, the variable $t'$, is either R or ld, which indicates a register write operation, and (2) the write register in $P^4$, the variable $c'$, is the same as a read register in $D$, the variable $i'.a$ or $i'.b$. In other words, an incoming instruction in $D$ wants to read the register into which the executing instruction in $P^4$ is going to write. This read-write conflict is called a pipeline hazard. The predicate $haz_1$ applies to the case where the incoming instruction is of the R, store, or branch type, because such an instruction needs two read registers, reg[a] and reg[b]. The predicate $haz_2$, in turn, applies to an incoming load instruction, which reads the register reg[a] only.
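The hazard predicates translate directly into boolean functions. The sketch below is a plain transcription under assumed names: `t1` and `c1` stand for the primed variables t' and c' of the executing instruction, and `it`, `ia`, `ib` for the fields i'.t, i'.a, i'.b of the incoming instruction; the string encodings of the instruction types and channel values are also assumptions of this example.

```python
def haz1(xm, t1, c1, ia, ib):
    """Incoming instruction reads two registers (R, st, be types)."""
    return xm == "req" and t1 in ("R", "ld") and (ia == c1 or ib == c1)

def haz2(xm, t1, c1, ia):
    """Incoming load instruction reads register a only."""
    return xm == "req" and t1 in ("R", "ld") and ia == c1

def i_haz(it, xm, t1, c1, ia, ib):
    """The invariant I_haz: true exactly when no read-write conflict is present."""
    return not ((it != "ld" and haz1(xm, t1, c1, ia, ib))
                or (it == "ld" and haz2(xm, t1, c1, ia)))

# An R instruction writing register 3 is in flight (xm = req).
print(i_haz("R", "req", "R", 3, ia=3, ib=1))   # incoming R reads register 3
print(i_haz("ld", "req", "R", 3, ia=1, ib=3))  # incoming load ignores field b
print(i_haz("R", "req", "st", 3, ia=3, ib=3))  # a store writes no register
```

A decode stage that proceeds only while `i_haz` evaluates to true corresponds to the stalling behaviour that the derivation introduces next.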

Adding the hazard detection unit It is not very practical to assume that the system describing the processor always satisfies the above invariant $I_{haz}$. Instead, the decode unit $D$ must deal with a conflict state by postponing the execution of the incoming instruction (stalling the pipeline) until the conflict has been resolved, i.e., until the executing R or load instruction has completed its register write operation. For this we add the hazard (or conflict) detection unit $Haz$ into the above composition by introducing the channel hz and refining $|[\ D \parallel X \parallel P^4\ ]| \leq |[\ Haz \parallel D^1 \parallel X \parallel P^4\ ]|$, where the initial composition satisfies the invariant $I_{haz}$, and

Haz ::
  |[ do < hz = req ∧ t ≠ ld ∧ ¬haz_1' → hz := ack >
     [] < hz = req ∧ t = ld ∧ ¬haz_2' → hz := ack >
     od
  ]| : t, a, b, t', c', hz

D^1 :: dx, p, t, c, f, offs, A, B, pc'
  |[ var i', dx, p, hz, t, a, b, c, offs, f, A, B, pc'; p, dx, hz := ack, ack, ack;
     do < fd = req ∧ dx = ack → i', pc' := i, pc >;
        ( ( < i'.t ≠ be →
                if i'.t = R → t, a, b, c, f := i'.t, i'.a, i'.b, i'.c, i'.f
                [] i'.t = ld → t, a, c, offs := i'.t, i'.a, i'.c, i'.offs
                [] i'.t = st → t, a, b, offs := i'.t, i'.a, i'.b, i'.offs
                fi;
                hz := req >;
            < hz = ack → if t ≠ ld → A, B := reg[a], reg[b] [] t = ld → A := reg[a] fi; p := inc >;
            < p = ack → dx, fd := req, ack > )
        [] ( < i'.t = be → t, a, b, offs := i'.t, i'.a, i'.b, i'.offs; hz := req >;
             < hz = ack → A, B, dx := reg[a], reg[b], req >;
             < dx = ack → fd := ack > ) )
     od
  ]| : fd, i, reg, pc

where $haz_1' \mathrel{\widehat{=}} haz_1[a, b / i'.a, i'.b]$ and $haz_2' \mathrel{\widehat{=}} haz_2[a / i'.a]$. The resulting composition satisfies $dx = req \Rightarrow I_{haz}[t, haz_1', haz_2' / i'.t, haz_1, haz_2]$, which indicates that there is no conflict present whenever the execution unit $X$ is active. Furthermore, the hazard detection with the possible waiting period takes place after the incoming instruction has been decoded in $D^1$, but before the register bank is read and the program counter incremented. This ensures that the right register values are copied to the variables $A$ and $B$.

6 Memory and register access

Extracting the memory access and write back units The system $P^4$ is split into two parts: the memory access unit $M$ and the write back unit $W$, which communicate via the channel mw. Hence, we refine $|[\ P^4 \parallel Haz\ ]| \leq |[\ M \parallel W \parallel Haz^1\ ]|$, where

M :: mw, t'', C2, dmem
  |[ var mw, C2, dmem[0..m];
     mw := ack;
     do < xm = req ∧ t' ≠ st ∧ mw = ack →
            if t' = R → t'', c'', C1' := t', c', C1
            [] t' = ld → t'', c'', daddr' := t', c', daddr; C2 := dmem[daddr']
            fi;
            mw, xm := req, ack >
     [] < xm = req ∧ t' = st ∧ mw = ack →
            t'', B'', daddr' := t', B', daddr; dmem[daddr'], xm := B'', ack >
     od
  ]| : xm, t', B', c', daddr

W :: reg
  |[ var reg[0..k], c''', C;
     do < mw = req →
            if t'' = R → c''', C := c'', C1'
            [] t'' = ld → c''', C := c'', C2
            fi;
            reg[c'''], mw := C, ack >
     od
  ]| : mw, t'', c'', C1', C2

Because this atomicity refinement splits the initial register write operation into two atomic actions connected by the channel mw, we have to weaken the hazard conditions into $haz_1'' \mathrel{\widehat{=}} haz_1' \vee (mw = req \wedge (a = c'' \vee b = c''))$ and $haz_2'' \mathrel{\widehat{=}} haz_2' \vee (mw = req \wedge a = c'')$. This yields the system $Haz^1$ mentioned in the above refinement relation.

Extracting the register bank The register access operations are separated from the systems $D$ and $W$. As a result, we get the register system $Rgs$. The register read operations are activated through the channel regr and the write operations through regw. We refine $|[\ D^1 \parallel W \parallel Haz^1\ ]| \leq |[\ Rgs \parallel D^2 \parallel W^1 \parallel Haz^2\ ]|$, where

Rgs :: reg
  |[ var reg[0..k], A, B;
     do < regw = req → reg[c'''], regw := C, ack >
     [] < regr = req →
            if t ≠ ld → A, B := reg[a], reg[b]
            [] t = ld → A := reg[a]
            fi;
            regr := ack >
     od
  ]| : regw, regr, c''', a, b, C

D^2 :: dx, p, t, c, f, offs, A, B, pc'
  |[ var i', dx, p, hz, t, a, b, c, offs, f, A, B, pc'; p, dx, hz := ack, ack, ack;
     do < fd = req ∧ dx = ack → i', pc' := i, pc >;
        ( ( < i'.t ≠ be →
                if i'.t = R → t, a, b, c, f := i'.t, i'.a, i'.b, i'.c, i'.f
                [] i'.t = ld → t, a, c, offs := i'.t, i'.a, i'.c, i'.offs
                [] i'.t = st → t, a, b, offs := i'.t, i'.a, i'.b, i'.offs
                fi;
                hz := req >;
            < hz = ack → p, regr := inc, req >;
            < p = ack ∧ regr = ack → dx, fd := req, ack > )
        [] ( < i'.t = be → t, a, b, offs := i'.t, i'.a, i'.b, i'.offs; hz := req >;
             < hz = ack → regr := req >;
             < regr = ack → dx := req >;
             < dx = ack → fd := ack > ) )
     od
  ]| : fd, i, reg, pc

W^1 :: regw, c''', C
  |[ var regw, C, c'''; regw := ack;
     do < mw = req ∧ regw = ack →
            if t'' = R → c''', C := c'', C1'
            [] t'' = ld → c''', C := c'', C2
            fi;
            regw, mw := req, ack >
     od
  ]| : mw, t'', c'', C1', C2

The atomicity refinement using the channel regw forces us to weaken the hazard conditions into $haz_1''' \mathrel{\widehat{=}} haz_1'' \vee (regw = req \wedge (a = c''' \vee b = c'''))$ and $haz_2''' \mathrel{\widehat{=}} haz_2'' \vee (regw = req \wedge a = c''')$, which gives the mentioned system $Haz^2$.

Extracting the memory blocks As with the register operations, the memory manipulations are also placed into separate systems. The instruction memory system $Im$ is separated from the fetch unit $F$, and the data memory system $Dm$ from the memory access unit $M$. The system $Im$ needs only a read channel imr, while $Dm$ requires both a read channel dmr and a write channel dmw. We refine $|[\ F \parallel M \parallel Haz^2\ ]| \leq |[\ Im \parallel Dm \parallel F^1 \parallel M^1 \parallel Haz^3\ ]|$, where

Im :: imem, i
  |[ var imem[0..l], i;
     do < imr = req → i, imr := imem[pc], ack > od
  ]| : imr, pc

Dm :: C2, dmem
  |[ var dmem[0..m], C2;
     do < dmw = req → dmem[daddr'], dmw := B'', ack >
     [] < dmr = req → C2, dmr := dmem[daddr'], ack >
     od
  ]| : dmw, dmr, daddr', B''

F^1 :: fd, imr
  |[ var fd, imr;
     fd, imr := ack, ack;
     do < fd = ack → imr := req >;
        < imr = ack → fd := req >
     od
  ]|

M^1 :: mw, dmw, t'', B'', c'', daddr', em
  |[ var mw, dmw, t'', B'', c'', daddr', em; mw, dmw, dmr, em := ack, ack, ack, false;
     do ( < xm = req ∧ t' = R ∧ ¬em ∧ dmw = ack →
              t'', c'', C1' := t', c', C1; em, mw, xm := true, req, ack >;
          < mw = ack → em := false > )
     [] ( < xm = req ∧ t' = ld ∧ ¬em ∧ dmw = ack →
              t'', c'', daddr' := t', c', daddr; em, dmr, xm := true, req, ack >;
          < dmr = ack → mw := req >;
          < mw = ack → em := false > )
     [] < xm = req ∧ t' = st ∧ ¬em ∧ dmw = ack →
              B'', daddr' := B', daddr; dmw, xm := req, ack >
     od
  ]| : xm, t', B', c', daddr

In this case, the atomicity of the involved actions is changed in such a way that the hazard conditions $haz_1'''$ and $haz_2'''$ cannot be preserved, i.e., weakened enough, without introducing an auxiliary variable. The reason for this is that in the system $M^1$ the assignments xm := ack and mw := req are executed sequentially, in two separate actions, if $t' = ld$. The problem is solved by using the boolean variable em in $M^1$ and weakening the hazard conditions into $haz_1'''' \mathrel{\widehat{=}} haz_1'''[em / (mw = req)]$ and $haz_2'''' \mathrel{\widehat{=}} haz_2'''[em / (mw = req)]$. This update yields the hazard detection system $Haz^3$.

7 Extracting the other data path components

By combining the above re nement steps we have that

P j F 1 k D2 k X k M1 k W 1 k P c k I m k Rgs k Dm k Haz3 ]j

Dm

C2

C1’

t’’

c’’

M

1

dmw

mw

W

1

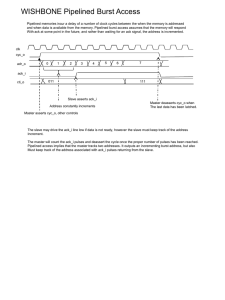

The block diagram of this composition is shown in Fig. 1

dmr

c’’’

C

regw

B’

B

B’’

daddr

A

C1

c’

b

D

Pc

p

pc

imr

F1

Im

i

fd

a

b

t

a

Rgs

c

f

t

offs

dx

pc’

hz

Haz

2

regr

3

X

t’

xm

c’’’

c’’

c’

xm

em

regw

daddr’

iaddr

Figure 1: Intermediate block diagram of the processor

We complete the decomposition process by separating the four pipeline registers $Pr_1, \ldots, Pr_4$ from the stages. In addition, the decode unit and the execution unit are split into control and data parts. The control systems are $D.c$ and $X.c$, and the data systems $D.d$ and $X.d$, respectively. These transformations follow exactly the same principles as the above derivation. To summarize, we perform the refinements

$$D^2 \leq |[\ D.c \parallel D.d \parallel Pr_1\ ]|$$

$$|[\ X \parallel Haz^3\ ]| \leq |[\ X.c \parallel X.d \parallel Pr_2 \parallel Haz^4\ ]|$$

$$M^1 \leq |[\ M^2 \parallel Pr_3\ ]|$$

$$W^1 \leq |[\ W^2 \parallel Pr_4\ ]|$$

As an example, for the execution unit we have that

X.c :: xm, xd, r2w, ex
  |[ var xm, xd, r2w, ex; xm, xd, r2w, ex := ack, ack, ack, false;
     do ( < dx = req ∧ ¬ex ∧ t ≠ be → r2w := req >;
          < r2w = ack → ex, xd, dx := true, req, ack >;
          < xd = ack → xm := req >;
          < xm = ack → ex := false > )
     [] ( < dx = req ∧ t = be → xd := req >;
          ( < xd = inc → p := inc >
          [] < xd = load → p := load > );
          < p = ack → dx := ack > )
     od
  ]| : dx, t

X :d :: iaddr daddr C 1

j var iaddr daddr C 1

do < xd = req !

if t = R ! C 1 xd := aluf(A B f ) ack

] t = ld ! daddr xd := A + o s ack

] t = st ! daddr xd := A + o s ack

] t = be !

if A 6= B ! xd := inc

] A = B ! iaddr xd := pc + o s load

0

0

0

0

0

0

0

0

0

0

0

>

od

]j: xd t t f o s A B A B

P r2 :: t A B c f o s

j var t A B f o s c do < r2w = req !

if t = R ! t A

] t = ld ! t A

] t = st ! t A

r2w := ack >

0

0

0

0

0

0

0

0

0

0

0

0

pc

0

0

0

0

0

0

0

0

0

0

0

0

B c f := t A B c f

c o s := t A c o s

B o s := t A B c f

0

0

0

0

0

0

0

od

]j: t A B c f o s r 2w

where two new communication channels, xd and r2w, are introduced. Note that the auxiliary boolean variable ex is used to make updating of the hazard conditions possible in the above atomicity refinement. This procedure corresponds to the earlier discussion concerning the auxiliary variable em of the memory access system M1. The new hazard detection unit Haz4 is obtained by substituting haz1''''' ≙ haz1''''[ex/(xm = req)] and haz2''''' ≙ haz2''''[ex/(xm = req)].

The block diagram of the final composition is shown in Fig. 2.
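The handshake order imposed by X.c can be traced with a small simulation. The Python sketch below is a simplified illustration of the three systems X.c, X.d and Pr2, not the authors' model. It makes several assumptions: the step counter emulates the sequential composition inside X.c, the decode outputs stay fixed while one instruction is processed, the branch target is computed from the keys pc and offs, aluf is a two-operation ALU, and the memory stage and the program counter unit are reduced to single acknowledging actions. All Python names are hypothetical.

```python
def aluf(a, b, f):
    # Hypothetical ALU; the paper leaves aluf abstract.
    return {"add": a + b, "sub": a - b}[f]


def make_state(t, A, B, c=0, f="add", offs=0, pc=0):
    return {
        "t": t, "A": A, "B": B, "c": c, "f": f, "offs": offs, "pc": pc,
        "dx": "req", "xm": "ack", "xd": "ack", "r2w": "ack", "p": "ack",
        "ex": False, "step": 0,   # step: position inside X.c (assumption)
        "reg": {},                # contents of pipeline register Pr2
        "C1": None, "daddr": None, "iaddr": None,
    }


def pr2_write(s):
    # Latch the fields needed by the instruction type, then acknowledge.
    fields = {"R": ("t", "A", "B", "c", "f"),
              "ld": ("t", "A", "c", "offs"),
              "st": ("t", "A", "B", "offs")}[s["t"]]
    s["reg"].update({k: s[k] for k in fields})
    s["r2w"] = "ack"


def xd_compute(s):
    r = s["reg"]
    if s["t"] == "be":            # branch: Pr2 is bypassed
        if s["A"] != s["B"]:
            s["xd"] = "inc"
        else:
            s.update(iaddr=s["pc"] + s["offs"], xd="load")
    elif r.get("t") == "R":
        s.update(C1=aluf(r["A"], r["B"], r["f"]), xd="ack")
    elif r.get("t") in ("ld", "st"):
        s.update(daddr=r["A"] + r["offs"], xd="ack")


ACTIONS = [
    ("Xc1", lambda s: s["step"] == 0 and s["dx"] == "req"
            and not s["ex"] and s["t"] != "be",
            lambda s: s.update(r2w="req", step=1)),
    ("Xc2", lambda s: s["step"] == 1 and s["r2w"] == "ack",
            lambda s: s.update(ex=True, xd="req", dx="ack", step=2)),
    ("Xc3", lambda s: s["step"] == 2 and s["xd"] == "ack",
            lambda s: s.update(xm="req", step=3)),
    ("Xc4", lambda s: s["step"] == 3 and s["xm"] == "ack",
            lambda s: s.update(ex=False, step=0)),
    ("Xc5", lambda s: s["step"] == 0 and s["dx"] == "req"
            and s["t"] == "be",
            lambda s: s.update(xd="req", step=4)),
    ("Xc6", lambda s: s["step"] == 4 and s["xd"] in ("inc", "load"),
            lambda s: s.update(p=s["xd"], step=5)),
    ("Xc7", lambda s: s["step"] == 5 and s["p"] == "ack",
            lambda s: s.update(dx="ack", step=0)),
    ("Pr2", lambda s: s["r2w"] == "req", pr2_write),
    ("Xd", lambda s: s["xd"] == "req", xd_compute),
    # Assumed environment: memory stage and program counter acknowledge.
    ("M", lambda s: s["xm"] == "req", lambda s: s.update(xm="ack")),
    ("Pc", lambda s: s["p"] in ("inc", "load"),
           lambda s: s.update(p="ack")),
]


def run(s, max_steps=100):
    """Execute the first enabled action until none is enabled."""
    trace = []
    for _ in range(max_steps):
        for name, guard, body in ACTIONS:
            if guard(s):
                body(s)
                trace.append(name)
                break
        else:
            break
    return trace


alu_state = make_state("R", 2, 3)
alu_trace = run(alu_state)
branch_state = make_state("be", 3, 3, offs=8, pc=100)
branch_trace = run(branch_state)
print(alu_trace, alu_state["C1"])
print(branch_trace, branch_state["iaddr"])
```

In the first run the flag ex is true from the moment dx is acknowledged until xm has been acknowledged, which is the interval the weakened hazard conditions refer to. In the second run the register Pr2 is never written, since a branch is passed to X.d directly.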

8 Concluding remarks

We have presented a formal framework for the design of asynchronous pipelined microprocessors. The main tools used were the atomicity refinement rule for action systems and the spatial decomposition of an action system into a number of reactive components communicating via shared variables.

Figure 2: Final block diagram of the processor

We did not give the low-level descriptions of the pipeline stages in this paper; the emphasis was on identifying and separating the functional units by introducing an abstract communication mechanism which can be easily transformed into a concrete, realizable form [15].

Acknowledgements Our work was inspired by discussions with the participants (especially

with Mark Josephs) at the ACiD-WG working group meeting in Groningen in September 1996.

The research is supported by the Academy of Finland.

References

[1] R. J. R. Back. On the Correctness of Refinement Steps in Program Development. PhD thesis, Department of Computer Science, University of Helsinki, Helsinki, Finland, 1978. Report A-1978-4.

[2] R. J. R. Back and R. Kurki-Suonio. Decentralization of process nets with centralized control. In Proc. of the 2nd ACM SIGACT-SIGOPS Symp. on Principles of Distributed Computing, pages 131-142, 1983.

[3] R. J. R. Back, A. J. Martin, and K. Sere. Specifying the Caltech asynchronous microprocessor. Science of Computer Programming, North-Holland. Accepted for publication.

[4] R. J. R. Back and K. Sere. Stepwise refinement of action systems. Structured Programming, 12:17-30, 1991.

[5] R. J. R. Back and J. von Wright. Refinement calculus, part I: Sequential nondeterministic programs. In J. W. de Bakker, W.-P. de Roever, and G. Rozenberg, editors, Stepwise Refinement of Distributed Systems: Models, Formalisms, Correctness. Proceedings. 1989, volume 430 of Lecture Notes in Computer Science, pages 42-66. Springer-Verlag, 1990.

[6] K. Chandy and J. Misra. Parallel Program Design: A Foundation. Addison-Wesley, 1988.

[7] I. David, R. Ginosar, and M. Yoeli. Self-timed architecture of a reduced instruction set computer. In S. Furber and M. Edwards, editors, Asynchronous Design Methodologies, pages 29-43. North-Holland, 1993.

[8] A. Davis and S. M. Nowick. Asynchronous circuit design: motivation, background and methods. In G. Birtwistle and A. Davis, editors, Asynchronous Digital Circuit Design, pages 1-49. Springer, 1995.

[9] E. W. Dijkstra. A Discipline of Programming. Prentice-Hall International, 1976.

[10] S. Furber. Computing without clocks: micropipelining the ARM processor. In G. Birtwistle and A. Davis, editors, Asynchronous Digital Circuit Design, pages 211-262. Springer, 1995.

[11] A. J. Martin, S. Burns, T. Lee, D. Borkovic, and P. Hazewindus. The first asynchronous microprocessor: the test results. Computer Architecture News, pages 95-110, MIT Press, 1989.

[12] C. C. Morgan. The specification statement. ACM Transactions on Programming Languages and Systems, 10(3):403-419, July 1988.

[13] J. M. Morris. A theoretical basis for stepwise refinement and the programming calculus. Science of Computer Programming, 9:287-306, 1987.

[14] D. A. Patterson and J. L. Hennessy. Computer Organization and Design. The Hardware/Software Interface. Morgan Kaufmann Publishers, 1994.

[15] J. Plosila, R. Rukšėnas, K. Sere, and T. Kuusela. Synthesis of DI Circuits within the Action Systems Framework. Manuscript, 1996.

[16] R. Sproull, I. Sutherland, and C. Molnar. Counterflow pipeline architecture. Technical report SMLI TR-94-25, Sun Microsystems Laboratories Inc, April 1994.

[17] I. E. Sutherland. Micropipelines. Communications of the ACM, 32(6):720-738, June 1989.

Turku Centre for Computer Science

Lemminkaisenkatu 14

FIN-20520 Turku

Finland

http://www.tucs.abo.

University of Turku

Department of Mathematical Sciences

Abo Akademi University

Department of Computer Science

Institute for Advanced Management Systems Research

Turku School of Economics and Business Administration

Institute of Information Systems Science