and sym Log(Q∗ Z) - an der Universität Duisburg

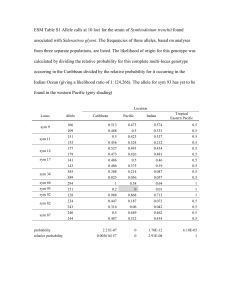

advertisement

arXiv:1302.3235v2 [math.CA] 18 Feb 2013

The unitary polar factor Q = Up minimizes

k Log(Q∗Z)k2 and k sym* Log(Q∗Z)k2 in the spectral

norm in any dimension and the Frobenius matrix norm

in three dimensions

Patrizio Neff∗ and Yuji Nakatsukasa† and Andreas Fischle‡

February 19, 2013

Abstract

The unitary polar factor Q = Up in the polar decomposition of Z = Up H is the

minimizer for both k Log(Q∗ Z)k2 and its Hermitian part k sym*(Log(Q∗ Z))k2 over both

R and C for any given invertible matrix Z ∈ Cn×n and any matrix logarithm Log, not

necessarily the principal logarithm log. We prove this for the spectral matrix norm in

any dimension and for the Frobenius matrix norm in two and three dimensions. The

result shows that the unitary polar factor is the nearest orthogonal matrix to Z not

only in the normwise sense, but also in a geodesic distance. The derivation is based on

Bhatia’s generalization of Bernstein’s trace inequality for the matrix exponential and

a new sum of squared logarithms inequality. Our result generalizes the fact for scalars

that for any complex logarithm and for all z ∈ C \ {0}

min | LogC (e−iϑ z)|2 = | log |z||2 ,

ϑ∈(−π,π]

min | Re LogC (e−iϑ z)|2 = | log |z||2 .

ϑ∈(−π,π]

Keywords: unitary polar factor, matrix logarithm, matrix exponential, Hermitian part,

minimization, unitarily invariant norm, polar decomposition, sum of squared logarithms inequality, optimality, matrix Lie-group, geodesic distance

AMS 2010 subject classification: 15A16, 15A18, 15A24, 15A44, 15A45, 26Dxx

∗

Corresponding author: Patrizio Neff, Lehrstuhl für Nichtlineare Analysis und Modellierung, Fakultät

für Mathematik, Universität Duisburg-Essen, Campus Essen, Thea-Leymann Str. 9, 45127 Essen, Germany,

email: patrizio.neff@uni-due.de, Tel.: +49-201-183-4243

†

Yuji Nakatsukasa, School of Mathematics, The University of Manchester, Manchester, M13 9PL, UK,

email: yuji.nakatsukasa@manchester.ac.uk

‡

Andreas Fischle, Fakultät für Mathematik, Universität Duisburg-Essen, Campus Essen, Thea-Leymann

Str. 9, 45127 Essen, Germany, email: andreas.fischle@uni-due.de

1

1

1.1

Introduction

The polar decomposition

Every matrix Z ∈ Cm×n admits a polar decomposition

Z = Up H ,

where the unitary polar factor Up has orthonormal columns and H is Hermitian positive

semidefinite [2], [17, Ch. 8]. The decomposition is unique if Z has full column rank. In the

following we assume that Z is an invertible matrix, in which case H is positive definite. The

polar decomposition is the matrix analog of the polar form of a complex number

z = ei arg(z) · r,

r = |z| ≥ 0,

−π < arg(z) ≤ π .

The polar decomposition has a wide variety of applications: the solution to the Euclidean

orthogonal Procrustes problem minQ∈U(n) kZ − BQk2F is given by the unitary polar factor of

B ∗ Z [14, Ch. 12], and the polar decomposition can be used as the crucial tool for computing

the eigenvalue decomposition of symmetric matrices and the singular value decomposition

(SVD) [30]. Practical methods for computing the polar decomposition are the scaled Newton iteration [11] and the QR-based dynamically weighted Halley iteration [28], and their

backward stability is established in [29].

The unitary polar factor Up has the important property [6, Thm. IX.7.2], [13], [17, p. 197],

[20, p.454] that it is the nearest unitary matrix to Z ∈ Cn×n , that is,

√

min kZ − Qk2 = min kQ∗ Z − Ik2 = kUp∗ Z − Ik2 = k Z ∗ Z − Ik2 ,

(1.1)

Q∈U(n)

Q∈U(n)

where k · k denotes any unitarily invariant norm. For the Frobenius matrix norm this

optimality implies for real Z ∈ Rn×n and the orthogonal polar factor [24]

∀ Q ∈ O(n) : tr QT Z = hQ, Zi ≤ hUp , Zi = tr UpT Z .

(1.2)

In the complex case we similarly have [20, Thm. 7.4.9, p.432]

∀ Q ∈ U(n) :

Re tr (Q∗ Z) ≤ Re tr Up∗ Z .

(1.3)

For invertible Z ∈ GL+ (n, R) and the Frobenius matrix norm k·kF it can be shown that [9, 24]

min µ k sym*(QT Z − I)k2F + µc k skew∗(QT Z − I)k2F = µ kUpT Z − Ik2F ,

Q∈O(n)

(1.4)

for µc ≥ µ > 0. Here, sym*(X) = 21 (X ∗ +X) is the Hermitian part and skew∗(X) = 21 (X −X ∗ )

is the skew-Hermitian part of X. The family (1.4) appears as strain energy expression in

geometrically exact Cosserat extended continuum models [23, 32, 33, 34, 38].

2

Surprisingly, the optimality (1.4) of the orthogonal polar factor ceases to be true for

0 ≤ µc < µ. Indeed, for µc = 0 one can show that [36] there exist Z ∈ R3×3 such that

min k sym*(QT Z − I)k2F < kUpT Z − Ik2F .

Q∈SO(3)

(1.5)

By compactness of SO(n) and continuity of Q 7→ k sym* QT Z − Ik2F it is clear that the

minimum in (1.5) exists. Here, the polar factor Up of Z ∈ GL+ (3, R) is always a critical point,

but is not necessarily (even locally) minimal. In contrast to kXk2F the term k sym* Xk2F is not

invariant w.r.t. left-action of SO(3) on X, which does explain the appearance of nonclassical

solutions in (1.5) since now

k sym* QT Z − Ik2F = k sym* QT Zk2F − 2 tr QT Z + 3

(1.6)

and optimality does not reduce to optimality of the trace term (1.2). The reason there is no

nonclassical solution in (1.4) is that for µc ≥ µ > 0 we have

min µ k sym*(QT Z − I)k2F + µc k skew∗(QT Z − I)k2F

(1.7)

Q∈O(n)

≥ min µ k sym*(QT Z − I)k2F + µ k skew∗(QT Z − I)k2F = min µ kQT Z − Ik2F .

Q∈O(n)

1.2

Q∈O(n)

The matrix logarithm minimization problem and results

Formally, we obtain our minimization problem by replacing the matrix QT Z − I by the

matrix Log(QT Z) in (1.4). Then, introducing the weights µ, µc ≥ 0 we embed the problem

in a more general family of minimization problems at a given Z ∈ GL+ (n, R)

min µ k sym* Log(QT Z)k2F + µc k skew∗ Log(QT Z)k2F ,

Q∈SO(n)

µ > 0, µc ≥ 0 .

(1.8)

For the solution of (1.8) we consider separately the minimization of

min k Log(Q∗ Z)k2 ,

Q∈U(n)

min k sym* Log(Q∗ Z)k2 ,

Q∈U(n)

(1.9)

on the group of unitary matrices Q ∈ U(n) and with respect to any matrix logarithm Log.

We show that the unitary polar factor Up is a minimizer of both terms in (1.9) for both

the Frobenius norm (dimension n = 2, 3) and the spectral matrix norm for arbitrary n ∈ N,

and the minimum is attained when the principal logarithm is taken.

Finally, we show that the minimizer of the real problem (1.8) for all µ > 0, µc ≥ 0 is also

given by the polar factor Up . Note that sym*(QT Z − I) is the leading order approximation

to the Hermitian part of the logarithm sym* Log QT Z in the neighborhood of the identity I,

and recall the non-optimality of the polar factor in (1.5) for µc = 0. The optimality of the

polar factor in (1.9) is therefore rather unexpected. Our result implies also that different

members of the family of Riemannian metrics gX on the tangent space (1.22) lead to the

same Riemannian distance to the compact subgroup SO(3), see [35].

3

Since we prove that the unitary polar factor Up is the unique minimizer for (1.8) and

(1.9)1 in the Frobenius matrix norm for n ≤ 3, it follows that these new optimality properties

of Up provide another characterization of the polar decomposition.

In our optimality proof we do not use differential calculus on the nonlinear manifold

SO(n) for the real case because the derivative of the matrix logarithm is analytically not

tractable. However, if we assume a priori that the minimizer Q♯ ∈ SO(n) can be found

in the set {Q ∈ SO(n) | kQT Z − IkF ≤ q < 1 }, we can use the power series expansion of

the principal logarithm and differential calculus to show that the polar factor is indeed the

unique minimizer (unpublished).

Instead, motivated by insight gained in the simple complex case, we first consider the

Hermitian minimization problem, which has the advantage of allowing us to work with the

positive definite Hermitian matrix exp sym* Log Q∗ Z. A subtlety that we encounter several

times is the possible non-uniqueness of the matrix logarithm Log. Our optimality result

relies crucially on unitary invariance and a Bernstein-type trace inequality [3]

tr (exp X exp X ∗ ) ≤ tr (exp (X + X ∗ )) ,

(1.10)

for the matrix exponential. Together, these imply some algebraic conditions on the eigenvalues in case of the Frobenius matrix norm, which we exploit using a new sum of squared

logarithms inequality [8]. The case of the spectral norm is considerably easier.

This paper is organized as follows. In the remainder of this section we describe an

application that motivated this work. In Section 2 we present two-dimensional analogues to

our minimization problems in both, complex and real matrix representations, to illustrate

the general approach and notation. In Section 3 we collect properties of the matrix logarithm

and its Hermitian part. Section 4 contains the main results where we discuss the unitary

minimization (1.9). From the complex case we then infer the real case in Section 5 and

finally discuss uniqueness

pin Section 6.

Notation. σi (X) = λi (X ∗ X) denotes the

i-th largest singular value of X. kXk2 =

qP

n

2

σ1 (X) is the spectral matrix norm, kXkF =

i,j=1 |Xij | is the Frobenius matrix norm

with associated inner product hX, Y i = tr (X ∗ Y ). The symbol I denotes the identity matrix.

An identity involving k·k without subscripts holds for any unitarily invariant norm. To avoid

confusion between the unitary polar factor and the singular value decomposition (SVD)

of Z = UΣV ∗ , Up with the subscript p always denotes the unitary polar factor, while U

denotes the matrix of right singular vectors. Hence for example Z = Up H = UΣV ∗ . U(n),

O(n), GL(n, C), GL+ (n, R), SL(n) and SO(n) denote the group of complex unitary matrices,

orthogonal real matrices, invertible complex matrices, invertible real matrices with positive

determinant, the special linear group and the special orthogonal group, respectively. The

set so(n) is the Lie-algebra of all n × n skew-symmetric matrices and sl(n) denotes the

Lie-algebra of all n × n traceless matrices. The set of all n × n Hermitian matrices is H(n)

and positive definite Hermitian matrices are denoted by P(n). We let sym* X = 21 (X ∗ + X)

denote the Hermitian part of X and skew∗ X = 21 (X − X ∗ ) the skew-Hermitian part of X

such that X = sym* X + skew∗ X. In general, Log Z with capital letter denotes any solution

to exp X = Z, while log Z denotes the principal logarithm.

4

1.3

Application and practical motivation for the matrix logarithm

In this subsection we describe how our minimization problem including the matrix logarithm arises from new concepts in nonlinear elasticity theory and may find applications in

generalized Procrustes problems. Readers interested only in the result may continue reading

Section 2.

1.3.1

Strain measures in linear and nonlinear elasticity

Define the Euclidean distance dist2euclid (X, Y ) := kX − Y k2F , which is the length of the

2

line segment joining X and Y in Rn . We consider an elastic body which in a reference

configuration occupies the bounded domain Ω ⊂ R3 . Deformations of the body are prescribed

by mappings

ϕ : Ω 7→ R3 ,

(1.11)

where ϕ(x) denotes the deformed position of the material point x ∈ Ω. Central to elasticity

theory is the notion of strain. Strain is a measure of deformation such that no strain means

that the body Ω has been moved rigidly in space. In linearized elasticity, one considers

ϕ(x) = x + u(x), where u : Ω ⊂ R3 7→ R3 is the displacement. The classical linearized strain

measure is ε := sym* ∇u. It appears through a matrix nearness problem

dist2euclid (∇u, so(3)) := min k∇u − W k2F = k sym* ∇uk2F .

W ∈so(3)

(1.12)

Indeed, sym* ∇u qualifies as a linearized strain measure: if dist2euclid (∇u, so(3)) = 0 then

c .x + bb is a linearized rigid movement. This is the case since

u(x) = W

dist2euclid (∇u(x), so(3)) = 0 ⇒ ∇u(x) = W (x) ∈ so(3)

(1.13)

and 0 = Curl ∇u(x) = Curl W (x) implies that W (x) is constant, see [37].

In nonlinear elasticity theory one assumes that ∇ϕ ∈ GL+ (3, R) (no self-interpenetration

of matter) and considers the matrix nearness problem

dist2euclid (∇ϕ, SO(3)) := min k∇ϕ − Qk2F = min kQT ∇ϕ − Ik2F .

Q∈SO(3)

Q∈SO(3)

(1.14)

From (1.1) it immediately follows that

p

(1.15)

dist2euclid (∇ϕ, SO(3)) = k ∇ϕT ∇ϕ − Ik2F .

p

p

stretch

tensor

and

The term ∇ϕT ∇ϕ is called the right

∇ϕT ∇ϕ − I is called the Biot

p

strain tensor. Indeed, the quantity ∇ϕT ∇ϕ − I qualifies as a nonlinear strain measure: if

b + bb is a rigid movement. This is the case since

dist2euclid (∇ϕ, SO(3)) = 0 then ϕ(x) = Q.x

dist2euclid (∇ϕ, SO(3)) = 0 ⇒ ∇ϕ(x) = Q(x) ∈ SO(3)

5

(1.16)

and 0 = Curl ∇ϕ(x) = Curl Q(x) implies that Q(x) is constant, see [37]. Many other

expressions can serve as strain measures. One classical example is the Hill-family [18, 19, 39]

of strain measures

( p

m

1

T

∇ϕ ∇ϕ − I , m 6= 0

(1.17)

am (∇ϕ) := m p

m = 0.

log ∇ϕT ∇ϕ ,

The case m = 0 is known as Hencky’s strain measure [16]. Note that am (I + ∇u) =

sym* ∇u + . . . all coincide in the first-order approximation for all m ∈ R.

In case of isotropic elasticity the formulation of a boundary value problem of place may

be based on postulating an elastic energy by integrating an SO(3)-bi-invariant (isotropic and

frame-indifferent) function W : R3×3 7→ R of the strain measure am over Ω

Z

E(ϕ) :=

W (am (∇ϕ)) dx , ϕ(x)ΓD = ϕ0 (x)

(1.18)

Ω

and prescribing the boundary deformation ϕ0 on the Dirichlet part ΓD ⊂ ∂Ω. The goal

is to minimize E(ϕ) in a class of admissible functions. For example, choosing m = 1 and

W (am ) = µ kamk2F + λ2 (tr (am ))2 leads to the isotropic Biot strain energy [36]

Z

2

p

λ p T

tr

µ k ∇ϕT ∇ϕ − Ik2F +

∇ϕ ∇ϕ − I

dx

(1.19)

2

Ω

with Lamé constants µ, λ. The corresponding Euler-Lagrange equations constitute a nonlinear, second order system of partial differential equations. For reasonable physical response

of an elastic material Hill [18, 19, 39] has p

argued that W should be a convex function of the

logarithmic strain measure a0 (∇ϕ) = log ∇ϕT ∇ϕ. This is the content of Hill’s inequality.

Direct calculation shows that a0 is the only strain measure among the family (1.17) that has

the tension-compression symmetry, i.e., for all unitarily invariant norms

ka0 (∇ϕ(x)−1 )k = ka0 (∇ϕ(x))k .

(1.20)

In his Ph.D thesis [31] the first author was the first to observe that energies convex in the

logarithmic strain measure a0 (∇ϕ) are not rank-one convex. However, rank-one convexity

is true in a large neighborhood of the identity [10].

Assume for simplicity that we deal with an elastic material that can only sustain volume

preserving deformations. Locally, we must have det ∇ϕ(x) = 1. Thus, for the deformation

gradient ∇ϕ(x) ∈ SL(3). On SL(3) the straight line X + t(Y − X) joining X, Y ∈ SL(3)

leaves the group. Thus, the Euclidean distance dist2euclid (∇ϕ, SO(3)) does not respect the

group structure of SL(3).

Since the Euclidean distance (1.15) is an arbitrary choice, novel approaches in nonlinear

elasticity theory aim at putting more geometry (i.e. respecting the group structure of the

deformation mappings) into the description of the strain a material endures. In this context,

it is natural to consider the strain measures induced by the geodesic distances stemming from

choices for the Riemannian structure respecting also the algebraic group structure, which we

introduce next.

6

1.3.2

Geodesic distances

In a connected Riemannian manifold M with Riemannian metric g, the length of a continuously differentiable curve γ : [a, b] 7→ M is defined by

Z bq

gγ(t) (γ̇(s), γ̇(s)) ds .

L(γ) : =

(1.21)

a

At every X ∈ M the metric gX : TX M×TX M 7→ R is a positive definite, symmetric bilinear

form on the tangent space TX M. The distance distgeod,M (X, Y ) between two points X and Y

of M is defined as the infimum of the length taken over all continuous, piecewise continuously

differentiable curves γ : [a, b] 7→ M such that γ(a) = X and γ(b) = Y . With this definition

of distance, geodesics in a Riemannian manifold are the locally distance-minimizing paths,

in the above sense. Regarding M = SL(3) as a Riemannian manifold equipped with the

metric associated to one of the positive definite quadratic forms of the family

∀ µ, µc > 0 : gX (ξ, ξ) : = µ ksym(X −1 ξ)k2F + µc kskew(X −1 ξ)k2F , ξ ∈ TX SL(3) ,

(1.22)

we have γ −1 (t)γ(t) ∈ TI SL(3) = sl(3) (by direct calculation, sl(3) denotes the trace free

R3×3 -matrices) and

gγ(t) (γ̇(t), γ̇(t)) = µ ksym(γ −1 (t)γ̇(t))k2F + µc kskew(γ −1 (t)γ̇(t))k2F .

(1.23)

It is clear that

∀ µ, µc > 0 :

µ ksym Y k2F + µc kskew Y k2F

(1.24)

is a norm on sl(3). For such a choice of metric we then obtain associated Riemannian distance

metric

distgeod,SL(3) (X, Y ) = inf{L(γ), γ(a) = X , γ(b) = Y } .

(1.25)

This construction ensures the validity of the triangle inequality [22, p.14]. The geodesics on

SL(3) for the family of metrics (1.22) have been computed in [25] in the context of dissipation

distances in elasto-plasticity.

With this preparation, it is now natural to consider the strain measure induced by the

geodesic distance. For a given deformation gradient ∇ϕ ∈ SL(3) we thus compute the

distance to the nearest orthogonal matrix in the geodesic distance (1.25) on the Riemanian

manifold and matrix Lie-group SL(3), i.e.,

dist2geod,SL(3) (∇ϕ, SO(3)) := min dist2geod,SL(3) (∇ϕ, Q) .

Q∈SO(3)

(1.26)

It is clear that this defines a strain measure, since dist2geod,SL(3) (∇ϕ(x), SO(3)) = 0 implies

b + bb. Fortunately, the minimization on the right hand

∇ϕ(x) ∈ SO(3), whence ϕ(x) = Qx

7

side in (1.26) can be carried out although the explicit distances dist2geod,SL(3) (∇ϕ, Q) for

Q ∈ SO(3) remain unknown to us. In [35] it is shown that

min dist2geod,SL(3) (∇ϕ, Q) = min k Log(QT ∇ϕ)k2F .

Q∈SO(3)

Q∈SO(3)

(1.27)

Here and throughout, by Log Z we denote any matrix logarithm, one of the many solutions

X to exp X = Z. By contrast, log Z denotes the principal logarithm, see Section 3.3. The

last equality constitutes the basic motivation for this work, where we solve the minimization

problem on the right hand side of (1.27) and determine thus the precise form of the geodesic

strain measure. As a result of this paper it turns out that

p

(1.28)

dist2geod,SL(3) (∇ϕ, SO(3)) = k log ∇ϕT ∇ϕk2F ,

which is nothing else but a quadratic expression in Hencky’s strain measure (1.17) and therefore satisfying Hill’s inequality.

Geodesic distance measures have appeared recently in many other applications: for example, one considers a geodesic distance on the Riemannian manifold of the cone of positive

definite matrices P(n) (which is a Lie-group but not w.r.t. the usual matrix multiplication)

[7, 27] given by

−1/2

dist2geod,P(n) (P1 , P2 ) := k log(P1

−1/2

P2 P1

)k2F .

(1.29)

Another distance, the so-called log-Euclidean metric on P(n)

dist2log,euclid,P(n) (P1 , P2 ) :=k log P2 − log P1 k2F

in general 6= k log(P1−1P2 )k2F = dist2log,euclid,P(n) (P1−1 P2 , I) (1.30)

is proposed in [1]. Both formulas find application in diffusion tensor imaging or in fitting of

positive definite elasticity tensors. The geodesic distance on the compact matrix Lie-group

SO(n) is also well known, and it has important applications in the interpolation and filtering

of experimental data given on SO(3), see e.g. [26]

2

dist2geod,SO(n) (Q1 , Q2 ) := k log(Q−1

1 Q2 )kF ,

−1 6∈ spec(Q−1

1 Q2 ) .

(1.31)

In cases (1.29), (1.30), (1.31) it is, contrary to (1.27), the principal matrix logarithm that

appears naturally. A common and desirable feature of all distance measures involving the

logarithm presented above, setting them apart from the Euclidean distance, is invariance

under inversion: d(X, I) = d(X −1 , I) and d(X, 0) = +∞. We note in passing that

d2log,GL+ (n,R) (X, Y ) := k Log(X −1 Y )k2F

(1.32)

does not satisfy the triangle inequality and thus it cannot be a Riemannian distance metric

on GL+ (n, R). Further, X −1 Y is in general not in the domain of definition of the principal

matrix logarithm. If applicable, the expression (1.32) measures in fact the length of curves γ :

8

[0, 1] 7→ M, γ(0) = X, γ(1) = Y defining one-parameter groups γ(s) = X exp(s Log(X −1 Y ))

on the matrix Lie-group M. Note that it is only if the manifold M is a compact matrix Liegroup (like e.g. SO(n)) equipped with a bi-invariant Riemannian metric that the geodesics

are precisely one-parameter subgroups [40, Prop.9]. This point is sometimes overlooked in

the literature.

1.3.3

A geodesic orthogonal Procrustes problem on SL(3).

The Euclidean orthogonal Procrustes problem for Z, B ∈ SL(3)

min dist2euclid (Z, BQ) = min kZ − BQk2F

Q∈U (3)

Q∈U (3)

(1.33)

has as solution the unitary polar factor of B ∗ Z [14, Ch. 12]. However, any linear transformation of Z and B will yield another optimal unitary matrix. This deficiency can be

circumvented by considering the straightforward extension to the geodesic case

min dist2geod,SL(3) (Z, BQ) .

Q∈U (3)

(1.34)

In contrast to the Euclidean distance, the geodesic distance is by construction SL(3)-leftinvariant:

∀ B ∈ SL(3) :

dist2geod,SL(3) (X, Y )) = dist2geod,SL(3) (BX, BY )

(1.35)

and therefore we have

min dist2geod,SL(3) (Z, BQ) = min dist2geod,SL(3) (B −1 Z, Q)

Q∈U (3)

Q∈U (3)

(1.36)

with “another” geodesic optimal solution: the unitary polar factor of B −1 Z, according to

the results of this paper.

2

Prelude on optimal rotations in the complex plane

Let us turn to the optimal rotation problem (1.9)1 :

min k Log(Q∗ Z)k2 .

Q∈U(n)

(2.1)

In order to get hands on this problem we first consider the scalar case. We may always

identify the punctured complex plane C \ {0} =: C× = GL(1, C) with the two-dimensional

conformal group CSO(2) ⊂ GL+ (2, R) through the mapping

a b

, a2 + b2 6= 0} .

(2.2)

z = a + i b 7→ Z ∈ CSO(2) := {

−b a

9

Let us define a norm k · kCSO on CSO(2). We set kXk2CSO := 12 kXk2F = 12 tr X T X . Some

useful connections between C× and CSO(2) are given in the appendix.

Next we introduce the logarithm. For every invertible z ∈ C \ {0} =: C× there always

exists a solution to eη = z and we call η ∈ C the natural complex logarithm LogC (z)

of z. However, this logarithm may not be unique, depending on the unwinding number

[17, p.269]. The definition of the natural logarithm has some well known deficiencies: the

formula LogC (w z ) = z LogC (w) does not hold, since, e.g. i π = LogC (1) = LogC ((−i)2 ) 6=

2 LogC (−i) = 2( −i2 π ) = −i π. Therefore the principal complex logarithm [4, p.79]

log : C× 7→ { z ∈ C | − π < Im z ≤ π }

(2.3)

is defined as the unique solution η ∈ C of the equation

eη = z

⇔

η = log(z) := log |z| + i arg(z) ,

(2.4)

such that the argument arg(z) ∈ (−π, π].1 The principal complex logarithm is continuous

(indeed holomorphic) only on the smaller set C \ (−∞, 0]. Let us define the set D := {z ∈

C | |z − 1| < 1 }. In order to avoid unnecessary complications at this point, we introduce a

further open set, the “near identity subset” D ♯ ⊂ D, containing 1 and with the property that

z1 , z2 ∈ D ♯ implies z1 z2 ∈ D and z1−1 ∈ D. On D ♯ ⊂ C× all the usual rules for the logarithm

apply. On R+ \ {0} all the logarithmic distance measures encountered in the introduction

coincide with the logarithmic metric [41, p.109] (the ”hyperbolic distance” [27, p.735])

dist2log,R+ (x, y) := | log(x−1 y)|2 = | log y − log x|2 ,

dist2log,R+ (x, +1) := | log |x||2 .

(2.5)

This metric can still be extended to a metric on D ♯ through

dist2log,D♯ (z1 , z2 ) : = | log(z1−1 z2 )|2 , z1 , z2 ∈ D ♯ ,

dist2log,D♯ (r1 eiϑ1 , r2 eiϑ2 ) = | log(r1−1 r2 )|2 + |ϑ1 − ϑ2 |2 ,

r1 eiϑ1 , r2 eiϑ2 ∈ D ♯ .

(2.6)

Further, for z ∈ D ♯ we formally recover a version of (2.5)2 : mineiϑ ∈D♯ dist2log,D♯ (eiϑ , z) =

| log |z||2 . We remark, however, that dist2log,D♯ does not define a metric on C× due to the

periodicity of the complex exponential. Let us also define a log-Euclidean distance metric

on C× , continuous only on C \ (−∞, 0], in analogy with (1.30)

dist2log,euclid,C× (z1 , z2 ) : = | log z2 − log z1 |2 = | log

|z2 | 2

| + | arg(z2 ) − arg(z1 )|2 .

|z1 |

(2.7)

The identity

dist2log,D♯ (z1 , z2 ) = dist2log,euclid,C× (z1 , z2 )

1

(2.8)

For example log(−1) = i π since eiπ = −1. Otherwise, the complex logarithm always exists but may

not be unique, e.g. e−iπ = ei1π = −1. Hence LogC (−1) = {i π, −i π, . . .}. For scalars, our definition of the

principal complex logarithm can be applied to negative real arguments. However, in the matrix setting the

principal matrix logarithm is defined only on invertible matrices which do not have negative real eigenvalues.

10

on D ♯ is obvious but fails on C× . With this preparation, we now approach our minimization

problem in terms of CSO(2) versus C× . For given Z ∈ CSO(2) we find that

2

min k Log(QT Z)k2CSO ⇔ min | LogC (e−iϑ z)|2 ⇔ min distlog,C× (eiϑ , z) . (2.9)

Q∈SO(2)

ϑ∈(−π,π]

ϑ∈(−π,π]

It is important to avoid the additive representation dist2log,euclid,C× (z1 , z2 ), because in the

general matrix setting Q and Z will in general not commute and the equivalence (2.9) is

then lost. If, however, Q ∈ SO(n) and Z ∈ P(n) do commute, the optimality result is a

trivial consequence of the Campbell-Baker-Hausdorff formula [17, p.270,Thm. 11.3], since

T

2

2

2

in that

√ case minQ∈SO(n) k Log Q ZkF = minQ∈SO(n) k log Z − log QkF = k sym* log ZkF =

k log Z T Zk2F .

The restrictions implied by working on D ♯ are unduly hard, so we have also extended the

distance function distlog,D♯ defined on D ♯ to a function distlog,C× defined on C× by sacrificing

the metric properties and by using any complex logarithm LogC .2 In order to give this

minimization problem a precise sense, we define

min | LogC (e−iϑ z)|2 := min {|w|2 | ew = e−iϑ z } = min {|w|2 | ew = e−iϑ ei arg(z) |z| }

ϑ∈(−π,π]

ϑ∈(−π,π]

ϑ∈(−π,π]

= min {|w|2 | ew = e−iϑ̃ |z| } = min | LogC (e−iϑ |z|)|2 .

ϑ∈(−π,π]

ϑ̃∈(−π,π]

(2.10)

The solution of this minimization problem is again3 | log |z||2 . However, our goal is to

introduce an argument that can be generalized to the non-commutative matrix setting.

From |z| ≥ | Re(z)| it follows that

min | LogC (e−iϑ z)|2 ≥ min | Re(LogC (e−iϑ z))|2 = | log |z||2 ,

ϑ∈(−π,π]

ϑ∈(−π,π]

(2.11)

where we used the result (2.15) below for the last equality. The minimum for ϑ ∈ (−π, π]

is achieved if and only if ϑ = arg(z) since arg(z) ∈ (−π, π] and we are looking only for

ϑ ∈ (−π, π]. Thus

min | LogC (e−iϑ z)|2 = | log |z||2 .

ϑ∈(−π,π]

(2.12)

The unique optimal rotation Q(ϑ) ∈ SO(2) is given by the polar factor Up through ϑ =

2

T

2

arg(z)

√ and the minimum is | log |z|| , which corresponds to minQ∈SO(2) k Log(Q Z)kCSO =

k log Z T Zk2CSO .

Next, consider the symmetric minimization problem (1.9)2 for given Z ∈ CSO(2) and its

equivalent representation in C× :

min k sym* Log(QT Z)k2CSO

Q∈SO(2)

⇔

2

min | Re(LogC (e−iϑ z))|2 .

ϑ∈(−π,π]

(2.13)

Note that writing minϑ∈(−π,π] | log(e−iϑ z)|2 poses problems, since evaluating the principal complex logarithm log ei(arg(z)−ϑ) |z| would restrict ϑ such that arg(z) − ϑ ∈ (−π, π].

3

minϑ∈(−π,π] | LogC (e−iϑ |z|)|2 = minϑ∈(−π,π] | log |z| + i(−ϑ)|2 = minϑ∈(−π,π] | log |z||2 + |ϑ|2 = | log |z||2 .

11

Note that the expression distlog,Re,C× (z1 , z2 ) := | Re LogC (z1−1 z2 )| does not define a metric,

even when restricted to D ♯ . As before, we define

min | Re(LogC (e−iϑ z))|2 := min {| Re w|2 | ew = e−iϑ z }

ϑ∈(−π,π]

ϑ∈(−π,π]

(2.14)

and obtain

min | Re(LogC (e−iϑ z))|2 = min | Re(LogC (e−iϑ |z|ei arg(z) ))|2

ϑ∈(−π,π]

ϑ∈(−π,π]

= min | Re(LogC (|z|ei (arg(z)−ϑ) ))|2

ϑ∈(−π,π]

(2.15)

= min {| Re(log |z| + i(arg(z) − ϑ + 2π k))|2 , k ∈ N}

ϑ∈(−π,π]

= | log |z||2 .

Thus the minimum is again realized by the polar factor Up , but note that the optimal

rotation is completely undetermined, since ϑ is not constrained in the problem. Despite the

logarithm LogC being multivalued, this formulation of the minimization problem circumvents

the problem of the branch points of the natural complex logarithm. This observation suggests

that considering the generalization of (2.15), i.e. minQ∈U(n) k sym* Log Q∗ Zk2 in the first place

is helpful also for the general matrix problem. This is indeed the case.

With this preparation we now turn to the general, non-commutative matrix setting.

3

3.1

Preparation for the general complex matrix setting

Multivalued formulation

For every nonsingular Z ∈ GL(n, C) there exists a solution X ∈ Cn×n to exp X = Z which

we call a logarithm X = Log(Z) of Z. As for scalars, the matrix logarithm is multivalued depending on the unwinding number [17, p. 270] since in general, a nonsingular real or complex

matrix may have an infinite number of real or complex logarithms. The goal, nevertheless,

is to find the unitary Q ∈ U(n) that minimizes k Log(Q∗ Z)k2 and k sym* Log(Q∗ Z)k2 over

all possible logarithms.

Since k Log(Q∗ Z)k, k sym* Log(Q∗ Z)k2 ≥ 0, it is clear that both infima exist. Moreover,

U(n) is compact and connected. One problematic aspect is that U(n) is a non-convex set

and the function X 7→ k Log Xk2 is non-convex. Since, in addition, the multivalued matrix

logarithm may fail to be continuous, at this point we cannot even claim the existence of

minimizers.

There is also some subtlety due to the non-uniqueness of the matrix logarithm depending on the unwinding number. What should minQ∈U(n) k sym* Log Q∗ Zk2 mean? For any

unitarily invariant norm we define, similar to the complex scalar case (2.10)1 and (2.14)

min k Log(Q∗ Z)k2 := min { kXk2 ∈ R | exp X = Q∗ Z} ,

Q∈U(n)

Q∈U(n)

min k sym* Log(Q Z)k := min { k sym* Xk2 ∈ R | exp X = Q∗ Z} .

∗

Q∈U(n)

2

Q∈U(n)

12

(3.1)

We first observe that without loss of generality we may assume that Z ∈ GL(n, C) is real,

diagonal and positive definite, similar to (2.15)2 . To see this, consider the unique polar

decomposition Z = Up H and the eigenvalue decomposition H = V DV ∗ for real diagonal

positive D = diag(d1 , . . . , dn ). Then, in analogy to (2.10)2 ,

min k sym* Log(Q∗ Z)k2 = min {k sym* Xk2 | exp X = Q∗ Z}

Q∈U(n)

Q∈U(n)

= min {k sym* Xk2 | exp X = Q∗ Up H}

Q∈U(n)

= min {k sym* Xk2 | exp X = Q∗ Up V DV ∗ }

Q∈U(n)

= min {k sym* Xk2 | V ∗ (exp X)V = V ∗ Q∗ Up V D}

Q∈U(n)

= min {k sym* Xk2 | exp(V ∗ XV ) = V ∗ Q∗ Up V D}

Q∈U(n)

e∗ D}

= min {k sym* Xk2 | exp(V ∗ XV ) = Q

e

Q∈U(n)

(3.2)

e∗ D}

= min {kV ∗ (sym* X)V k2 | exp(V ∗ XV ) = Q

e

Q∈U(n)

e∗ D}

= min {k sym*(V ∗ XV )k2 | exp(V ∗ XV ) = Q

e

Q∈U(n)

e 2 | exp(X)

e =Q

e∗ D}

= min {k sym*(X)k

e

Q∈U(n)

= min {k sym* Xk2 | exp X = Q∗ D}

Q∈U(n)

= min k sym* Log Q∗ Dk2 ,

Q∈U(n)

where we used the unitary invariance for any unitarily invariant matrix norm and the fact

that X 7→ sym* X and X 7→ exp X are isotropic functions, i.e. f (V ∗ XV ) = V ∗ f (X)V for

all unitary V . If the minimum is achieved for Q = I in minQ∈U(n) k sym* Log(Q∗ D)k2 then

this corresponds to Q = Up in minQ∈U(n) k sym* Log Q∗ Zk2 . Therefore, in the following we

assume that D = diag(d1 , . . . dn ) with d1 ≥ d2 ≥ . . . dn > 0.

13

3.2

Some properties of the matrix exponential exp and matrix

logarithm Log

Let Q ∈ U(n). Then the following equalities hold for all X ∈ Cn×n .

exp(Q∗ XQ) = Q∗ exp(X) Q , definition of exp, [4, p.715],

(3.3)

∗

∗

Q Log(X) Q is a logarithm of Q XQ ,

(3.4)

∗

det(Q XQ) = det(X) ,

(3.5)

−1

exp(−X) = exp(X) ,

series definition of exp, [4, p.713] ,

exp Log X = X ,

for any matrix logarithm ,

(3.6)

det(exp X) = etr(X) ,

∀ Y ∈ Cn×n , det(Y ) 6= 0 : det(Y ) = etr(Log Y )

[4, p.712] ,

(3.7)

for any matrix logarithm [17] .

A major difficulty in the multivalued matrix logarithm case arises from

∀ X ∈ Cn×n :

3.3

Log exp X 6= X

in general, without further assumptions .

(3.8)

Properties of the principal matrix-logarithm log

The principal matrix logarithm Let X ∈ Cn×n , and assume that X has no real eigenvalues in (−∞, 0]. The principal matrix logarithm of X is the unique logarithm of X (the

unique solution Y ∈ Cn×n of exp Y = X) whose eigenvalues are elements of the strip

{z ∈ C : −π < Im(z) < π}. If X ∈ Rn×n and X has no eigenvalues on the closed

negative real axis R− = (−∞, 0], then the principal matrix logarithm is real. Recall that

log X is the principal logarithm and Log X denotes one of the many solutions to exp Y = X.

The following statements apply strictly only to the principal matrix logarithm [4, p.721]:

log exp X = X if and only if | Im λ| < π for all λ ∈ spec(X) ,

log(X α ) = α log X ,

α ∈ [−1, 1] ,

∗

∗

log(Q XQ) = Q log(X) Q , ∀ Q ∈ U(n) .

(3.9)

Let us define the set of Hermitian matrices H(n) := {X ∈ Cn×n | X ∗ = X } and the set

P(n) of positive definite Hermitian matrices consisting of all Hermitian matrices with only

positive eigenvalues. The mapping

exp : H(n) 7→ P(n)

(3.10)

is bijective [4, p.719]. In particular, Log exp sym* X is uniquely defined for any X ∈ Cn×n up

to additions by multiples of 2πi to each eigenvalue and any matrix logarithm and therefore

we have

∀ H ∈ H(n) : sym* Log H = log H ,

∀ X ∈ Cn×n : log exp sym* X = sym* X ,

∀ X ∈ Cn×n : sym* Log exp sym* X = sym* X .

14

(3.11)

Since exp sym* X is positive definite, it follows from (3.9)3 also that

∀ X ∈ Cn×n :

4

Q∗ (log exp sym* X)Q = log(Q∗ (exp sym* X)Q) .

(3.12)

Minimizing minQ∈U(n) k Log(Q∗Z))k2

Our starting point is, in analogy with the complex case, the problem of minimizing

min k sym*(Log(Q∗ Z))k2 ,

Q∈U(n)

where sym*(X) = (X ∗ + X)/2 is the Hermitian part of X. As we will see, a solution of this

problem will already imply the full statement, similar to the complex case, see (2.11). For

every complex number z, we have

|ez | = eRe z = |eRe z | ≤ |eRe z | .

(4.1)

While the last inequality in (4.1) is superfluous it is in fact the “inequality” |ez | ≤ |eRe z |

that can be generalized to the matrix case. The key result is an inequality of Bhatia [6,

Thm. IX.3.1],

∀ X ∈ Cn×n : k exp Xk2 ≤ k exp sym* Xk2

(4.2)

for any unitarily invariant norm, cf. [17, Thm. 10.11]. The result (4.2) is a generalization

of Bernstein’s trace inequality for the matrix exponential: in terms of the Frobenius matrix

norm it holds

k exp Xk2F = tr (exp X exp X ∗ ) ≤ tr (exp (X + X ∗ )) = k exp sym* Xk2F ,

with equality if and only if X is normal [4, p.756], [21, p.515]. For the case of the spectral

norm the inequality (4.2) is already given by Dahlquist [12, (1.3.8)]. We note that the

Golden-Thompson inequalities [4, p.761],[21, Cor.6.5.22(3)]:

∀ X, Y ∈ H(n) :

tr (exp(X + Y )) ≤ tr (exp(X) exp(Y ))

seem (misleadingly) to suggest the reverse inequality.

Consider for the moment any unitarily invariant norm, any Q ∈ U(n), the positive real

diagonal matrix D as before and any matrix logarithm Log. Then it holds

k exp(sym* Log Q∗ D)k2 ≥ k exp(Log Q∗ D)k2 = kQ∗ Dk2 = kDk2 ,

(4.3)

due to inequality (4.2) and

k exp(− sym* Log Q∗ D)k2 = k exp(sym*(− Log Q∗ D))k2

≥ k exp((− Log Q∗ D))k2 = k(exp(Log Q∗ D))−1 k2

= k(Q∗ D)−1 k2 = kD −1(Q∗ )−1 k2 = kD −1 k2 ,

15

(4.4)

where we used (4.2) again. Note that we did not use − Log X = Log(X −1 ) (which may be

wrong, depending on the unwinding number).

Moreover, we note that for any Q ∈ U(n) we have

∗

∗

0 < det(exp(sym* Log Q∗ D)) = etr(sym* Log Q D) = eRe tr(Log Q D)

∗

∗

= |eRe tr(Log Q D) | = |etr(Log Q D) |

= |det(Q∗ D)| = |det(Q∗ )det(D)|

= |det(Q∗ )| |det(D)| = |det(D)| = det(D) ,

(4.5)

where we used the fact that

etr(X) = det(exp X) ,

etr(Log Q

∗ D)

X = Log Q∗ D ⇒

= det(exp Log Q∗ D) = det(Q∗ D) ,

(4.6)

is valid for any solution X ∈ Cn×n of exp X = Q∗ D and that tr (sym* Log Q∗ D) is real.

For any Q ∈ U(n) the Hermitian positive definite matrices exp(sym* Log Q∗ D) and

exp(− sym* Log Q∗ D) can be simultanuously unitarily diagonalized with positive eigenvalues, i.e., for some Q1 ∈ U(n)

Q∗1 exp(sym* Log Q∗ D)Q1 = exp(Q∗1 (sym* Log Q∗ D)Q1 ) = diag(x1 , . . . , xn ) ,

Q∗1 exp(− sym* Log Q∗ D)Q1 = exp(−Q∗1 (sym* Log Q∗ D)Q1 )

1

1

= (exp(Q∗1 (sym* Log Q∗ D)Q1 )−1 = diag( , . . . , ) ,

x1

xn

(4.7)

since X 7→ exp X is an isotropic function. We arrange the positive real eigenvalues in

decreasing order x1 ≥ x2 ≥ . . . ≥ xn > 0. For any unitarily invariant norm it follows

therefore from (4.3), (4.4) and (4.5) together with (4.7) that

k diag(x1 , . . . , xn )k2 = kQ∗1 exp(sym* Log Q∗ D)Q1 k2 = k exp(sym* Log Q∗ D)k2 ≥kDk2

(4.8)

1

1

k diag( , . . . , )k2 = kQ∗1 exp(− sym* Log Q∗ D)Q1 k2 = k exp(− sym* Log Q∗ D)k2 ≥ kD −1 k2

x1

xn

det diag(x1 , . . . , xn ) = det(Q∗1 exp(sym* Log Q∗ D)Q1 ) = det(exp(sym* Log Q∗ D)) = det(D) .

4.1

Frobenius matrix norm for n = 2, 3

Now consider the Frobenius matrix norm for dimension n = 3. The three conditions in (4.8)

can be expressed as

x21 + x22 + x23 ≥ d21 + d22 + d23

1

1

1

1

1

1

+ 2+ 2 ≥ 2+ 2+ 2

2

x1 x2 x3

d1 d2 d3

x1 x2 x3 = d1 d2 d3 .

16

(4.9)

By a new result: the “sum of squared logarithms inequality” [8], conditions (4.9) imply

(log x1 )2 + (log x2 )2 + (log x3 )2 ≥ (log d1 )2 + (log d2 )2 + (log d3 )2 ,

(4.10)

with equality if and only if (x1 , x2 , x3 ) = (d1 , d2, d3 ). This is true, despite the map t 7→ (log t)2

being non-convex. Similarly, for the two-dimensional case with a much simpler proof [8]

x21 + x22 ≥ d21 + d22

1

1

1

1

+

≥ 2+ 2

⇒ (log x1 )2 + (log x2 )2 ≥ (log d1 )2 + (log d2 )2 .

(4.11)

x21 x22

d1 d2

x x

=d d

1

2

1

2

Since on the one hand (3.11) and (3.12) imply

(log x1 )2 + (log x2 )2 + (log x3 )2 = k log diag(x1 , x2 , x3 )k2F

= k log(Q∗1 exp(sym* Log Q∗ D)Q1 )k2F

(4.12)

∗

∗

2

= kQ1 log exp(sym* Log Q D)Q1 kF

= k log exp(sym* Log Q∗ D)k2F = k sym* Log Q∗ Dk2F

and clearly

(log d1 )2 + (log d2 )2 + (log d3 )2 = k log Dk2F ,

(4.13)

we may combine (4.12) and (4.13) with the sum of squared logarithms inequality (4.10) to

obtain

k sym* Log Q∗ Dk2F ≥ k log Dk2F

(4.14)

for any Q ∈ U(3). Since on the other hand we have the trivial upper bound (choose Q = I)

min k sym* Log(Q∗ D)k2F ≤ k log Dk2F ,

(4.15)

min k sym* Log(Q∗ D)k2F = k log Dk2F .

(4.16)

Q∈U(3)

this shows that

Q∈U(3)

The minimum is realized for Q = I, which corresponds to the polar factor Up in the original

formulation. Noting that

k Log(Q∗ D)k2F = k sym* Log(Q∗ D)k2F + k skew∗ Log(Q∗ D)k2F ≥ k sym* Log(Q∗ D)k2F

(4.17)

by the orthogonality of the Hermitian and skew-Hermitian parts in the trace scalar product,

we also obtain

min k Log(Q∗ D)k2F ≥ min k sym* Log(Q∗ D)k2F = k log Dk2F .

Q∈U(3)

Q∈U(3)

(4.18)

Hence, combining again we obtain for all µ > 0 and all µc ≥ 0

min µ k sym* Log(Q∗ D)k2F + µc k skew∗ Log(Q∗ D)k2F = µ k log Dk2F .

Q∈U(3)

(4.19)

Observe that although we allowed Log to be any matrix logarithm, the one that gives

the smallest k Log(Q∗ D)kF , k sym* Log(Q∗ D)kF is the principal logarithm.

17

4.2

Spectral matrix norm for arbitrary n ∈ N

For the spectral norm, the conditions (4.8) can be expressed as

1

1

≥ 2,

x21 ≥ d21 ,

2

xn

dn

x1 x2 x3 . . . xn = d1 d2 d3 . . . dn .

(4.20)

This yields the following ordering

0 < xn ≤ . . . ≤ dn ≤ . . . ≤ d1 ≤ . . . ≤ x1 .

(4.21)

max{| log xn | , | log dn | , | log d1 | , | log x1 |} = max{| log xn | , | log x1 |} ,

(4.22)

max{| log dn | , | log d1 |} ≤ max{| log xn | , | log x1 |} .

(4.23)

It is easy to see that this implies (even without the determinant condition (4.20)3 )

which shows

Therefore, cf. (4.12),

k sym* Log Q∗ Dk22 =k log diag(x1 , . . . , xn )k22

=k diag(log x1 , . . . , log xn )k22

= max {| log x1 | , | log x2 | , . . . , | log xn |}2

i=1,2,3, ...,n

=

max {(log x1 )2 , (log x2 )2 , . . . , (log xn )2 }

i=1,2,3, ...,n

= max{(log x1 )2 , (log xn )2 }

≥ max{(log d1 )2 , (log dn )2 }

= max {(log d1 )2 , (log d2 )2 , . . . , (log dn )2 }

i=1,2,3,...n

=

(4.24)

max {| log d1 | , | log d2 | , . . . , | log dn |}2

i=1,2,3, ...,n

= k diag(log d1 , . . . , log dn )k22

= k log diag(d1 , . . . , dn )k22 = k log Dk22 ,

from which we obtain, as in the case of the Frobenius norm, due to unitary invariance,

min k sym* Log(Q∗ D)k22 = k log Dk22 .

Q∈U(n)

(4.25)

For complex numbers we have the bound |z| ≥ | Re z|. A matrix analogue is that the spectral

norm of some matrix X ∈ Cn×n bounds the spectral norm of the Hermitian part sym* X,

see [4, p.355] and [21, p.151], i.e. kXk22 ≥ k sym* Xk22 . In fact, this inequality holds for all

unitarily invariant norms [20, p.454]:

∀ X ∈ Cn×n :

kXk2 ≥ k sym* Xk2 .

(4.26)

Therefore we conclude that for the spectral norm, in any dimension we have

min k Log(Q∗ D)k22 ≥ min k sym* Log(Q∗ D)k22 = k log Dk22 ,

Q∈U(n)

Q∈U(n)

with equality holding for Q = Up .

18

(4.27)

5

The real Frobenius case on SO(3)

In this section we consider Z ∈ GL+ (3, R), which implies that Z = Up H admits the polar

decomposition with Up ∈ SO(3) and an eigenvalue decomposition H = V DV T for V ∈ SO(3).

We observe that

min k sym* Log(QT D)k2F ≥ min k sym* Log(Q∗ D)k2F .

Q∈SO(n)

(5.1)

Q∈U(n)

Therefore, for all µ > 0, µc ≥ 0 we have, using inequality (5.1)

min µ k sym* Log(QT Z)k2F + µc k skew∗ Log(QT Z)k2F

Q∈SO(3)

≥ min µ k sym* Log(QT Z)k2F

(5.2)

Q∈SO(3)

= µk sym* log(UpT Z)k2F = µk sym* log(UpT Z)k2F + µc k skew∗ log(UpT Z)k2F ,

{z

}

|

=0

and it follows that the solution to the minimization problem (1.8) for Z ∈ GL+ (n, R) and

n = 2, 3 is also obtained by the orthogonal polar factor (a similar argument holds for n = 2).

Denoting by devn X = X − n1 tr (X)I the orthogonal projection of X ∈ Rn×n onto trace

free matrices in the trace scalar product, we obtain a further result of interest in its own

right (in which we really need Q ∈ SO(3)), namely

min k dev3 Log(QT D)k2F = k dev3 log Dk2F ,

Q∈SO(3)

min k dev3 sym* Log(QT D)k2F = k dev3 log Dk2F .

Q∈SO(3)

(5.3)

As was in the previous section, it suffices to show (5.3)2 . This is true since by using (4.6)

for Q ∈ SO(3) we have

2

1

T

T

2

T

2

min k dev3 sym* Log(Q D)kF = min

k sym* Log(Q D)kF − tr Log Q D

Q∈SO(3)

Q∈SO(3)

3

1

T

2

T

2

= min

k sym* Log(Q D)kF − (log det(Q D))

Q∈SO(3)

3

1

(5.4)

= min k sym* Log(QT D)k2F − (log det(D))2

Q∈SO(3)

3

1

= min k sym* Log(QT D)k2F − tr (log D)2

Q∈SO(3)

3

1

≥ min k sym* Log(Q∗ D)k2F − tr (log D)2

Q∈U(n)

3

1

= k sym* log Dk2F − tr (log D)2

3

1

= k sym* log Dk2F − tr (sym* log D)2

3

= k dev3 sym* log Dk2F = k dev3 log Dk2F .

19

6

Uniqueness

We have seen that the polar factor Up minimizes both k Log(Q∗ Z)k2 and k sym*(Log(Q∗ Z)k2 ,

but what about its uniqueness? Is there any other unitary matrix that also attains the

minimum? We address these questions below.

6.1

Uniqueness of Up as the minimizer of k Log(Q∗Z)k2

Note that the unitary polar factor Up itself is not unique when Z does not have full column

rank [17, Thm. 8.1]. However in our setting we do not consider this case because Log(UZ)

is defined only if UZ is nonsingular.

We show below that Up is the unique minimizer of k Log(Q∗ Z)k2 for the Frobenius

norm, while for the spectral norm there can be many Q ∈ U(n) for which k log(Q∗ Z)k2 =

k log(Up∗ Z)k2 .

Frobenius norm for n ≤ 3. We focus on n = 3 as the case n = 2 is analogous and simpler.

By the fact that Q = Up satisfies equality in (4.18), any minimizer Q of k Log(Q∗ D)kF must

satisfy

k Log(Q∗ D)kF = k sym* Log(Q∗ D)kF = k log DkF .

(6.1)

log(Q∗ D) = Q∗1 diag(log x1 , log x2 , log x3 )Q1 .

(6.2)

Note that by (4.17) the first equality of (6.1) holds only if Log(Q∗ D) is Hermitian.

We now examine the condition that satisfies the latter equality of (6.1). Since Log(Q∗ D)

is Hermitian the matrix exp(Log(Q∗ D)) is positive definite, so we can write exp(Log(Q∗ D)) =

Q∗1 diag(x1 , x2 , x3 )Q1 for some unitary Q1 and x1 , x2 , x3 > 0. Therefore

Hence for k sym* Log(Q∗ D)kF = k log DkF to hold we need

(log x1 )2 + (log x2 )2 + (log x3 )2 = (log d1 )2 + (log d2 )2 + (log d3 )2 ,

which is precisely the case where equality holds in the sum of squared logarithms inequality

(4.10). As discussed above, equality holds in (4.10) if and only if (x1 , x2 , x3 ) = (d1 , d2 , d3 ).

Hence by (6.2) we have log(Q∗ D) = Q∗1 diag(log x1 , log x2 , log x3 )Q1 = Q∗1 log(D)Q1 , so taking the exponential of both sides yields

Q∗ D = Q∗1 DQ1 .

(6.3)

Hence Q1 Q∗ DQ∗1 = D. Since Q1 Q∗ and Q∗1 are both unitary matrices this is a singular

value decomposition of D. Suppose d1 > d2 > d3 . Then since the singular vectors of

distinct singular values are unique up to multiplication by eiϑ , it follows that Q1 Q∗ = Q1 =

diag(eiϑ1 , eiϑ2 , eiϑ3 ) for ϑi ∈ R, so Q = I. If some of the di are equal, for example if

d1 = d2 > d3 , then we have Q1 = diag(Q1,1 , eiϑ3 ) where Q1,1 is a 2 × 2 arbitrary unitary

matrix, but we still have Q = I. If d1 = d2 = d3 , then Q1 can be any unitary matrix but

again Q = I. Overall, for (6.3) to hold we always need Q = I, which corresponds to the

unitary polar factor Up in the original formulation. Thus Q = Up is the unique minimizer of

k Log(Q∗ D)kF with minimum k log(Up∗ D)kF .

20

Spectral norm. For the spectral norm there can be many unitary

matrices Q that attain

k Log(Q∗ Z)k22 = k log(Up∗ Z)k22 . For example, consider Z = 0e 01 . The unitary polar factor

is Up = I. Defining U1 = 10 e0iϑ we have k log(U1 Z)k2 = k 10 ϑ0 k2 = 1 for any ϑ ∈ [−1, 1].

Now we discuss the general form of the minimizer Q. Let Z = UΣV ∗ be the SVD with

Σ = diag(σ1 , σ2 , . . . , σn ). Recall that k log(Up∗ Z)k2 = max(| log σ1 (Z)|, | log σn (Z)|).

Suppose that k log(Up∗ Z)k2 = | log σ1 (Z)| ≥ | log σn (Z)|. Then for any Q = U diag(1, Q22 )V ∗

we have

log Q∗ Z = log V diag(1, Q22 )ΣV ∗ ,

so we have k log Q∗ Zk2 = | log σ1 (Z)| = k log Up∗ Zk2 for any Q22 ∈ U(n − 1) such that

k log Q22 diag(σ2 , σ3 , . . . , σn )k2 ≤ k log Up∗ Zk2 . Note that such Q22 always includes In−1 , but

may not include the entire set of (n − 1) × (n − 1) unitary matrices as evident from the above

simple example.

Similarly, if k log Up∗ Zk2 = | log σn (Z)| ≥ | log σ1 (Z)|, then we have k log Q∗ Zk2 = k log Up∗ Zk2

for Q = U diag(Q22 , 1)V ∗ where Q22 can be any (n − 1) × (n − 1) unitary matrix satisfying

k log Q22 diag(σ1 , σ2 , . . . , σn−1 )k2 ≤ k log Up∗ Zk2 .

6.2

Non-uniqueness of Up as the minimizer of k sym*(Log(Q∗Z))k2

The fact that Up is not the unique minimizer of k sym*(Log(Q∗ Z))k2 can be seen by the

simple example Z = I. Then Log Q∗ is a skew-Hermitian matrix, so sym*(Log(Q∗ Z)) = 0

for any unitary Q.

In general, every Q of the following form gives the same value of ksym(Log(Q∗ Z))k2 . Let

Z = UΣV ∗ be the SVD with Σ = diag(σ1 In1 , σ2 In2 , . . . , σk Ink ) where n1 + n2 + · · · + nk = n

(k = n if Z has distinct singular values). Then it can be seen that any unitary Q of the form

Q∗ = Udiag(Qn1 , Qn2 , . . . , Qnk )V ∗ ,

(6.4)

where Qni is any ni × ni unitary matrix, yields k sym*(Log(Q∗ Z))k2 = k sym*(log(Up∗ Z))k2 .

Note that this holds for any unitarily invariant norm.

The above argument naturally leads to the question of whether Up is unique up to Qni

in (6.4). In particular, when the singular values of Z are distinct, is Up determined up to

scalar rotations Qni = eiϑni ?

For the spectral norm an argument similar to that above shows there can be many Q for

which k sym*(Log(Q∗ Z))k2 = k sym*(log(Up∗ Z))k2 .

For the Frobenius norm, the answer is yes. To verify this, observe in (4.14) that

k sym* Log(Q∗ D)kF = k log DkF implies (x1 , x2 , x3 ) = (d1 , d2 , d3) and hence Log(Q∗ D) =

Q∗1 diag(log d1 , log d2 , log d3 )Q1 + S, where S is a skew-Hermitian matrix. Hence

exp(Q∗1 diag(log d1 , log d2 , log d3 )Q1 + S) = Q∗ D,

and by (4.2) we have

kQ∗ DkF = k exp(Q∗1 diag(log d1 , log d2 , log d3 )Q1 + S)kF

≤ k exp(Q∗1 diag(log d1 , log d2 , log d3 )Q1 )kF = kQ∗ DkF .

21

(6.5)

Since equality in (4.2) holds for the Frobenius norm if and only if X is normal (which

can be seen from the proof of [6, Thm. IX.3.1]), for the last inequality to be an equality,

Q∗1 diag(log d1 , log d2 , log d3 )Q1 +S must be a normal matrix. Since Q∗1 diag(log d1 , log d2 , log d3 )Q1

is Hermitian and S is skew-Hermitian, this means Q∗1 diag(log d1 , log d2 , log d3 )Q1 + S =

Q∗1 diag(is1 + log d1 , is2 + log d2 , is3 + log d3 )Q1 for si ∈ R. Together with (6.5) we conclude

that

Q∗ D = Q∗1 diag(d1 eis1 , d2eis2 , d3 eis3 )Q1 .

By an argument similar to that following (6.3) we obtain Q = diag(e−is1 , e−is2 , e−is3 ).

7

Conclusion and outlook

The result in the Frobenius matrix norm cases for n = 2, 3 hinges crucially on the use of the

new sum of squared logarithms inequality (4.10). This inequality seems to be true in any

dimensions with appropriate additional conditions [8]. However, we do not have a proof yet.

Nevertheless, numerical experiments suggest that the optimality of the polar factor Up

in both

min k Log(Q∗ Z)k2 ,

Q∈U(n)

min k sym* Log(Q∗ Z)k2

Q∈U(n)

(7.1)

is true for any unitarily invariant norm, over R and C and in any dimension. This would

imply that for all µ, µc ≥ 0 and for any unitarily invariant norm

√

min µ k sym* Log(Q∗ Z)k2 + µc k skew∗ Log(Q∗ Z)k2 = µ k log(Up∗ Z)k2 = µ k log Z ∗ Zk2 .

Q∈U(n)

We also conjecture that Q = Up is the unique unitary matrix that minimizes k Log(Q∗ Z)k2

for every unitarily invariant norm.

In a forthcoming contribution [35] we will use our new characterization of the orthogonal

factor in the polar decomposition to calculate the geodesic distance of the isochoric part of

the deformation gradient F 1 ∈ SL(3) to SO(3) in the canonical left-invariant Riemannian

det(F ) 3

metric on SL(3), namely based on (5.3)

dist2geod,SL(3) (

F

det(F )

1 , SO(3)) = k dev 3 log

3

√

F T F k2F = min k dev3 sym* Log QT F k2F .

Q∈SO(3)

Thereby, we provide √

a rigorous geometric justification for the preferred use of the Henckystrain measure k log F T F k2F in nonlinear elasticity and plasticity theory, see [16, 42] and

the references therein.

Acknowledgement

The authors are grateful to N. J. Higham (Manchester) for establishing their contact and for

valuable remarks. P. Neff acknowledges a helpful computation of J. Lankeit (Essen) which

led to the crucial formulation of estimate (4.4).

22

References

[1] V. Arsigny, P. Fillard, X. Pennec, and N. Ayache. Geometric means in a novel vector space structure

on symmetric positive definite matrices. SIAM J. Matrix Anal. Appl., 29(1):328–347, 2006.

[2] L. Autonne. Sur les groupes linéaires, réels et orthogonaux. Bull. Soc. Math. France, 30:121–134, 1902.

[3] D.S. Bernstein. Inequalities for the trace of matrix exponentials. SIAM J. Matrix Anal. Appl., 9(2):156–

158, 1988.

[4] D.S. Bernstein. Matrix Mathematics. Princeton University Press, New Jersey, 2009.

[5] D.S. Bernstein and Wasin So. Some explicit formulas for the matrix exponential. IEEE Transactions

on Automatic Control, 38(8):1228–1231, 1993.

[6] R. Bhatia. Matrix Analysis, volume 169 of Graduate Texts in Mathematics. Springer, New-York, 1997.

[7] R. Bhatia and J. Holbrook. Riemannian geometry and matrix geometric means. Lin. Alg. Appl.,

413:594–618, 2006.

[8] M. Bı̂rsan, P. Neff, and J. Lankeit. Sum of squared logarithms - An inequality relating positive definite

matrices and their matrix logarithm. Peprint of Univ. Duisburg-Essen nr. SM-DUE-762, and arXiv:

1301.6604v1:submitted to Journal of Inequalities and Applications, 2013.

[9] C. Bouby, D. Fortuné, W. Pietraszkiewicz, and C. Vallée. Direct determination of the rotation in the

polar decomposition of the deformation gradient by maximizing a Rayleigh quotient. Z. Angew. Math.

Mech., 85:155–162, 2005.

[10] O.T. Bruhns, H. Xiao, and A. Mayers. Constitutive inequalities for an isotropic elastic strain energy

function based on Hencky’s logarithmic strain tensor. Proc. Roy. Soc. London A, 457:2207–2226, 2001.

[11] R. Byers and H. Xu. A new scaling for Newton’s iteration for the polar decomposition and its backward

stability. SIAM J. Matrix Anal. Appl., 30:822–843, 2008.

[12] G. Dahlquist.

Stability and Error Bounds in the Numerical Integration of Ordinary Differential Equations. PhD thesis, Royal Inst. of Technology, 1959. Available at http://su.divaportal.org/smash/record.jsf?pid=diva2:517380.

[13] K. Fan and A.J. Hoffmann. Some metric inequalities in the space of matrices. Proc. Amer. Math. Soc.,

6:111–116, 1955.

[14] G.H. Golub and C.V. Van Loan. Matrix Computations. The Johns Hopkins University Press, 1996.

[15] S. Helgason. Differential Geometry, Lie Groups, and Symmetric Spaces., volume 34 of Graduate Studies

in mathematics. American Mathematical Society, Heidelberg, 2001.

[16] H. Hencky. Über die Form des Elastizitätsgesetzes bei ideal elastischen Stoffen. Z. Techn. Physik,

9:215–220, 1928.

[17] N.J. Higham. Functions of Matrices: Theory and Computation. SIAM, Philadelphia, PA, USA, 2008.

[18] R. Hill. On constitutive inequalities for simple materials-I. J. Mech. Phys. Solids, 16(4):229–242, 1968.

[19] R. Hill. Constitutive inequalities for isotropic elastic solids under finite strain. Proc. Roy. Soc. London,

Ser. A, 314(1519):457–472, 1970.

[20] R.A. Horn and C.R. Johnson. Matrix Analysis. Cambridge University Press, New York, 1985.

[21] R.A. Horn and C.R. Johnson. Topics in Matrix Analysis. Cambridge University Press, New York, 1991.

[22] J. Jost. Riemannian Geometry and Geometric Analysis. Spinger-Verlag, 2002.

23

[23] A. Klawonn, P. Neff, O. Rheinbach, and S. Vanis. FETI-DP domain decomposition methods for elasticity

with structural changes: P -elasticity. ESAIM: Math. Mod. Num. Anal., 45:563–602, 2011.

[24] L.C. Martins and P. Podio-Guidugli. An elementary proof of the polar decomposition theorem. The

American Mathematical Monthly, 87:288–290, 1980.

[25] A. Mielke. Finite elastoplasticity, Lie groups and geodesics on SL(d). In P. Newton, editor, Geometry, mechanics and dynamics. Volume in honour of the 60th birthday of J.E. Marsden., pages 61–90.

Springer-Verlag, Berlin, 2002.

[26] M. Moakher. Means and averaging in the group of rotations. SIAM J. Matrix Anal. Appl., 24:1–16,

2002.

[27] M. Moakher. A differential geometric approach to the geometric mean of symmetric positive-definite

matrices. SIAM J. Matrix Anal. Appl., 26:735–747, 2005.

[28] Y. Nakatsukasa, Z. Bai, and F. Gygi. Optimizing Halley’s iteration for computing the matrix polar

decomposition. SIAM J. Matrix Anal. Appl., 31(5):2700–2720, 2010.

[29] Y. Nakatsukasa and N. J. Higham. Backward stability of iterations for computing the polar decomposition. SIAM J. Matrix Anal. Appl., 33(2):460–479, 2012.

[30] Y. Nakatsukasa and N. J. Higham. Stable and efficient spectral divide and conquer algorithms for the

symmetric eigenvalue decomposition and the SVD. MIMS EPrint 2012.52, The University of Manchester, May 2012.

[31] P. Neff. Mathematische Analyse multiplikativer Viskoplastizität. Ph.D. Thesis, Technische Universität

Darmstadt. Shaker Verlag, ISBN:3-8265-7560-1, Aachen, 2000.

[32] P. Neff. A geometrically exact Cosserat-shell model including size effects, avoiding degeneracy in the

thin shell limit. Part I: Formal dimensional reduction for elastic plates and existence of minimizers for

positive Cosserat couple modulus. Cont. Mech. Thermodynamics, 16(6 (DOI 10.1007/s00161-004-01824)):577–628, 2004.

[33] P. Neff. Existence of minimizers for a finite-strain micromorphic elastic solid. Preprint 2318,

http://www3.mathematik.tu-darmstadt.de/fb/mathe/bibliothek/preprints.html, Proc. Roy. Soc. Edinb.

A, 136:997–1012, 2006.

[34] P. Neff. A finite-strain elastic-plastic Cosserat theory for polycrystals with grain rotations. Int. J. Eng.

Sci., DOI 10.1016/j.ijengsci.2006.04.002, 44:574–594, 2006.

[35] P. Neff, √

B. Eidel, and F. Osterbrink. Appropriate strain measures: The Hencky shear strain energy

kdev log F T F k2 measures the geodesic distance of the isochoric part of the deformation gradient

F ∈ SL(3) to SO(3) in the canonical left invariant Riemannian metric on SL(3). in preparation, 2013.

[36] P. Neff, A. Fischle, and I. Münch. Symmetric Cauchy-stresses do not imply symmetric Biot-strains

in weak formulations of isotropic hyperelasticity with rotational degrees of freedom. Acta Mechanica,

197:19–30, 2008.

[37] P. Neff and I. Münch. Curl bounds Grad on SO(3). Preprint 2455, http://www3.mathematik.tudarmstadt.de/fb/mathe/bibliothek/preprints.html, ESAIM: Control, Optimisation and Calculus of Variations, DOI 10.1051/cocv:2007050, 14(1):148–159, 2008.

[38] P. Neff and I. Münch. Simple shear in nonlinear Cosserat elasticity: bifurcation and induced microstructure. Cont. Mech. Thermod., DOI 10.1007/s00161-009-0105-5, 21(3):195–221, 2009.

[39] R.W. Ogden. Compressible isotropic elastic solids under finite strain-constitutive inequalities. Quart.

J. Mech. Appl. Math., 23(4):457–468, 1970.

[40] B. O’Neill. Semi-Riemannian Geometry. Academic Press, New-York, 1983.

24

[41] A. Tarantola. Elements for Physics: Quantities, Qualities, and Intrinsic Theories. Springer, Heidelberg,

2006.

[42] H. Xiao. Hencky strain and Hencky model: extending history and ongoing tradition. Multidiscipline

Mod. Mat. Struct., 1(1):1–52, 2005.

8

8.1

Appendix

Connections between C× and CSO(2)

The following connections between C× and CSO(2) = R+ · SO(2) are clear:

1

kZk2CSO = kZk2F ,

2 a −b

⇔ ZT =

,

b a

2

a + b2

0

T

T

ZZ =Z Z =

,

0

a2 + b 2

1

1

= kZk2CSO = tr Z T Z = kZk2F ,

2

2

⇔ Z ·W = W ·Z,

|z|2 = a2 + b2

=

z = a − ib = a + i(−b)

z z = |z|2

z·w = w·z

z+z

=a

2

z−z

=b

Im(z) =

2

Re(z) =

⇔

| Re(z)|2 = |a|2

=

| Im(z)|2 = |b|2

=

|z|2 = Re(z)2 + Im(z)2

=

eiϑ = cos ϑ + i sin ϑ

⇔

Q(ϑ) =

e−iϑ z = (eiϑ )−1 z

⇔

QT Z ,

z = ei arg(z) |z|

|z|

|ez | = |ea+ib | = |eRe(z) |

8.2

⇔

1

a 0

(Z + Z T ) =

,

0 a

2

1

0 b

skew∗(Z) = (Z − Z T ) =

,

−b 0

2

1

k sym* Zk2CSO = k sym* Zk2F ,

2

1

2

k skew∗ ZkCSO = k skew∗ Zk2F ,

2

det(Z) ,

(8.1)

sym*(Z) =

cos ϑ

− sin ϑ

sin ϑ

∈ SO(2) ,

cos ϑ

(8.2)

ϑ ∈ (−π, π] ,

⇔

Z = Up H , polar form versus polar decomposition

√

p

⇔

H = Z T Z = det(Z)I2 ,

1

a b

Up = ZH −1 = √

∈ SO(2) ,

a2 + b2 −b a

⇔

(8.3)

k exp(Z)kF = k exp(aI2 + skew∗(Z))kF

= k exp(aI2 ) exp(skew∗(Z))kF = k exp(aI2 )kF = k exp(sym*(Z))kF .

Optimality properties of the polar form

The polar decomposition is the matrix analog of the polar form of a complex number

z = ei arg(z) |z| .

25

(8.4)

The argument arg(z) determines the unitary part ei arg(z) while the positive definite Hermitian matrix is |z|.

The argument arg(z) in the polar form is optimal in the sense that

min

ϑ∈(−π,π]

µ |e−iϑ z − 1|2 =

min

ϑ∈(−π,π]

µ |z − eiϑ |2 = µ ||z| − 1|2 ,

However, considering only the real (Hermitian) part

(

µ ||z| − 1|2

min µ | Re(e−iϑ z − 1)|2 =

ϑ∈(−π,π]

0

|z| ≤ 1 ,

|z| > 1 ,

ϑ = arg(z) .

ϑ = arg(z)

ϑ : cos(arg(z) − ϑ) =

(8.5)

1

|z|

,

(8.6)

shows that optimality of ϑ = arg(z) ceases to be true for |z| > 1 and the optimal ϑ is not unique. This is

the nonclassical solution alluded to in (1.6). In fact we have optimality of the polar factor for the Euclidean

weighted family only for µc ≥ µ:

min

ϑ∈(−π,π]

µ | Re(e−iϑ z − 1)|2 + µc | Im(e−iϑ z − 1)|2 = µ ||z| − 1|2 ,

ϑ = arg(z) ,

(8.7)

while for µ ≥ µc ≥ 0 there always exists a z ∈ C such that

min

ϑ∈(−π,π]

µ | Re(e−iϑ z − 1)|2 + µc | Im(e−iϑ z − 1)|2 < µ ||z| − 1|2 .

(8.8)

In pronounced contrast, for the logarithmic weighted family the polar factor is optimal for all choices of

weighting factors µ, µc ≥ 0:

(

µ | log |z||2

µc > 0 : ϑ = arg(z)

min µ | Re LogC (e−iϑ z)|2 + µc | Im LogC (e−iϑ z)|2 =

(8.9)

ϑ∈(−π,π]

µ | log |z||2

µc = 0 : ϑ arbitrary .

Thus we may say that the more fundamental characterization of the polar factor as minimizer is given by

the property with respect to the logarithmic weighted family.

8.3

8.3.1

The three-parameter case SL(2) by hand

Closed form exponential on SL(2) and closed form principal logarithm

The exponential on sl(2) can be given in closed form, see [41, p.78] and [5]. Here, the two-dimensional

Caley-Hamilton theorem is useful:

X 2 − tr (X)X + det(X)I2 = 0. Thus, for X with tr (X) = 0, it holds

X 2 = −det(X)I2 and tr X 2 = −2 det(X). Moreover, every higher exponent X k can be expressed in I and

X which shows that exp(X) = α(X)I2 + β(X)X. Tarantola [41] defines the ”near zero subset” of sl(2)

!

r

1

2

tr (X ) < π }

(8.10)

sl(2)0 := {X ∈ sl(2) | Im

2

and the ”near identity subset” of SL(2)

SL(2)I := SL(2) \ {both eigenvalues are real and negative } .

(8.11)

On this set the principal matrix logarithm is real. Complex eigenvalues appear always in conjugated pairs,

therefore the eigenvalues are either real or complex in the twodimensional case. Then it holds [15, p.149]

√

p

sinh( −det(X))

√

cosh(

X det(X) < 0

−det(X))

I

+

2

√ −det(X)

p

exp(X) = cos( det(X)) I + sin(√ det(X)) X

(8.12)

det(X) > 0

2

det(X)

I2 + X

det(X) = 0 .

26

Therefore

r

p

sinh(s)

1

(8.13)

X , s :=

tr (X 2 ) = −det(X) .

exp : sl(2)0 7→ SL(2)I , exp(X) = cosh(s)I2 +

s

2

p

Since the argument s = −det(X) is complex valued for det(X) > 0 we note that cosh(iy) = cos(y).

The one-parameter SO(2) case is included in the former formula for the exponential. The previous

formula (8.13) can be specialized to so(2, R). Then

√

p

sinh(i|α|) 0 α

sinh( −α2 ) 0 α

0 α

2

√

= cosh(i|α|)I2 +

exp

= cosh( −α )I2 +

−α 0

−α 0

−α 0

i|α|

−α2

i sin(|α|) 0 α

cos α sin α

= cos(|α|)I2 +

=

.

(8.14)

−α 0

− sin α cos α

i|α|

We need to observe that the exponential function is not surjective onto SL(2) since not every matrix

Z ∈ SL(2) can be written as Z = exp(X) for X ∈ sl(2). This is the case because ∀ X ∈ sl(2) : tr (exp(X)) ≥

−2, see (8.12)2 . Thus, any matrix Z ∈ SL(2) with tr (Z) < −2 is not the exponential of any real matrix

X ∈ sl(2). The logarithm on SL(2) can also be given in closed form [41, (1.175)].4 On the set SL(2)I the

principal matrix logarithm is real and we have

log : SL(2)I 7→ sl(2)0 ,

8.3.2

log[S] :=

s

(S − cosh(s)I2 ) ,

sinh s

cosh(s) =

tr (S)

.

2

(8.15)

Minimizing k log QT Dk2F for QT D ∈ SL(2)I

Let us define the open set

RD := {Q ∈ SO(2) | QT D ∈ SL(2)I } .

(8.16)

T

On RD the evaluation of log R D is in the domain of the principal logarithm. We are now able to show the

optimality result with respect to rotations in RD . We use the given formula (8.15) for the real logarithm on

4

In fact, the logarithm on diagonal matrices D in SL(2) with positive eigenvalues is simple. Their

can always be solved for s. Observe

trace is always λ + λ1 ≥ 2 if λ > 0. We infer that cosh(s) = tr(D)

2

cosh(s)2 − sinh(s)2 = 1.

27

SL(2)I to compute

T

inf k log R Dk2F = inf

R∈RD

R∈RD

s2

T

kR D − cosh(s)I2 k2F

sinh(s)2

T

s

T

kR Dk2F − 2 cosh(s)hR D, I2 i + 2 cosh(s)2

2

R∈RD sinh(s)

T tr R D

)

(using cosh(s) =

2

T

s2

2

2

2

= inf

Dk

−

4

cosh(s)

+

2

cosh(s)

kR

F

2

R∈RD sinh(s)

s2

T

2

2

= inf

Dk

−

2

cosh(s)

kR

F

2

R∈RD sinh(s)

2

s2

1 T

T

2

kR

hR

= inf

Dk

−

D,

Ii

F

2

2

R∈RD sinh(s)

2

2

1 T

s

T

2

inf

kR DkF − hR D, Ii

≥

inf

2

2

tr(RT D ) sinh(s)

R∈RD

2

= inf

R∈RD ,cosh(s)=

=

R∈RD

(8.17)

2

optimality of the orthogonal factor

s2

1

2

2

inf

kDk

−

hD,

Ii

F

2

2

tr(RT D ) sinh(s)

,cosh(s)=

2

= k dev2 Dk2F

s2

2

tr(RT D ) sinh(s)

,cosh(s)=

inf

R∈RD

2

and on the other hand

s2

1

1

tr (D)

1

2

λ

+

kD

−

tr

(D)I

k

,

with

s

∈

R

s.

that

cosh(s)

=

=

2

F

sinh(s)2

2

2

2

λ

2

s2

1

s

1

=

k dev2 Dk2F =

(λ1 − λ2 )2 since k dev2 Dk2F = (λ1 − λ2 )2

sinh(s)2

sinh(s)2 2

2

2

2

1

1

1

s

1

s

(λ − )2 =

(λ − )2

=

sinh(s)2 2

λ

1 + sinh(s)2 − 1 2

λ

1

1

1

s2

1

s2

(λ − )2 = 1

(λ − )2

(8.18)

=

1 2

2

cosh(s) − 1 2

λ

2

λ

(λ

+

)

−

1

4

λ

k log Dk2F =

λ+ 1

λ+ 1

(arcosh( 2 λ ))2 1

(arcosh( 2 λ ))2 1

1

1

(λ − )2 =

(λ − )2

= 1

1

1 2

1 2

2

λ

2

λ

(λ

+

)

−

1

(λ

−

)

4

λ

4

λ

= 2 (arcosh(

λ+

2

1

λ

))2 = 2(log λ)2 .

√

The last equality

can be seen by using the identity arcosh(x) = log(x + x2 − 1), x ≥ 1 and setting

x = 21 λ + λ1 , where we note that x ≥ 1 for λ > 0. Comparing the last result (8.18) with the simple

formula for the principal logarithm on SL(2) for diagonal matrices D ∈ SL(2) with positive eigenvalues

shows

λ 0 2

log λ

0

k log

k =k

k2 = (log λ)2 + (log 1 − log λ)2 = 2(log λ)2 .

(8.19)

0 λ1 F

0

log λ1 F

28

To finalize the SL(2) case we need to show that

R∈RD

s2

ŝ2

inf

=

,

2

sinh(ŝ)2

tr(RT D ) sinh(s)

,cosh(s)=

tr (D)

1

with ŝ ∈ R s. that cosh(ŝ) =

=

2

2

2

1

λ+

λ

. (8.20)

In order to do this, we write

R∈RD

s2

=

inf

2

tr(RT D) sinh(s)

,cosh(s)=

R∈R

2

D

s2

arcosh(ξ)2

inf

=

inf

2

ξ

ξ2 − 1

tr(RT D ) cosh(s) − 1

,cosh(s)=

for ξ =

T tr R D

2

.

2

One can check that the function

g : [1, ∞) → R+ ,

is strictly monotone decreasing. Thus g(ξ) =

for ξ =

T

tr R D

2

inf

ξ

is realized by ξ̂ =

tr(D)

2 .

g(ξ) :=

arcosh(ξ)2

ξ 2 −1

arcosh(ξ)2

ξ2 − 1

(8.21)

is the smaller, the larger ξ gets. The largest value

Therefore

ˆ2

arcosh(ξ)2

ŝ2

arcosh(ξ)

=

,

≥

ξ2 − 1

sinh(ŝ)2

ξˆ2 − 1

29

with ŝ ∈ R s. that cosh(ŝ) =

tr (D)

.

2