Computer Organization and Architecture

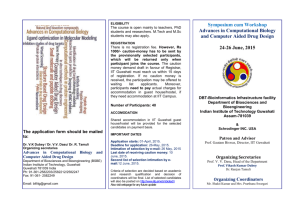

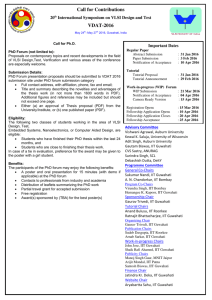
