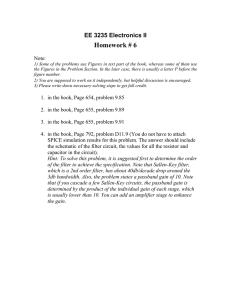


Filters

Low-pass and high-pass filters

By far the most frequent purpose for using a filter is extracting either the low-frequency or the high-frequency portion of an audio signal, attenuating the rest. This is accomplished using a low-pass or high-pass filter.

Figure 1: Terminology for describing the frequency response of low-pass and high-pass filters. The horizontal axis is frequency and the vertical axis is gain. A low-pass filter is shown; a high-pass filter has the same features switched from right to left.

Ideally, a low-pass or high-pass filter would have a frequency response of 1 up to (or down to) a specified cutoff frequency and zero past it; but such filters cannot be designed in practice. Instead, we try to find realistic approximations to this ideal response. The more design effort and computation time we put into it, the closer we can get.
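The closer approximation that extra computation buys can be illustrated with a short sketch. It designs two low-pass FIR filters with the Hamming-windowed sinc method, a standard design technique that this text does not itself describe, and compares their gain just above the cutoff. The helper names, the cutoff of 0.2 cycles per sample and the filter lengths of 21 and 101 taps are assumptions chosen for illustration.

```python
import cmath
import math


def windowed_sinc_lowpass(num_taps, cutoff):
    """Hamming-windowed sinc low-pass FIR (hypothetical helper).

    cutoff is in cycles per sample (0 < cutoff < 0.5); num_taps should be odd.
    """
    mid = (num_taps - 1) / 2
    taps = []
    for k in range(num_taps):
        t = k - mid
        # Ideal (infinitely long) low-pass impulse response, truncated to num_taps samples
        ideal = 2 * cutoff if t == 0 else math.sin(2 * math.pi * cutoff * t) / (math.pi * t)
        # Hamming window tapers the truncation to reduce ripple
        window = 0.54 - 0.46 * math.cos(2 * math.pi * k / (num_taps - 1))
        taps.append(ideal * window)
    return taps


def gain_db(taps, freq):
    """Gain of the FIR filter at freq (cycles per sample), in dB."""
    response = sum(h * cmath.exp(-2j * math.pi * freq * k) for k, h in enumerate(taps))
    return 20 * math.log10(abs(response))


for num_taps in (21, 101):
    taps = windowed_sinc_lowpass(num_taps, cutoff=0.2)
    print(f"{num_taps:3d} taps: gain at 0.05 = {gain_db(taps, 0.05):6.2f} dB, "
          f"gain at 0.25 = {gain_db(taps, 0.25):6.1f} dB")
```

The 101-tap filter needs about five times as many multiplications per output sample as the 21-tap one, but its gain at 0.25 cycles per sample is far lower, because its transition from passband to stopband is much narrower.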

Figure 1 shows the frequency response of a low-pass filter. The frequency spectrum is divided into three bands, labeled on the horizontal axis. The passband is the region (frequency band) where the filter should pass its input through to its output with unit gain. For a low-pass filter (as shown), the passband reaches from a frequency of zero up to a certain frequency limit. For a high-pass filter, the passband would appear on the right-hand side of the graph and would extend from the frequency limit up to the highest frequency possible. Any realisable filter's passband will be only approximately flat; the deviation from flatness is called the ripple, and is often specified by giving the ratio between the highest and lowest gain in the passband, expressed in decibels. The ideal low-pass or high-pass filter would have a ripple of 0 dB.


The stopband of a low-pass or high-pass filter is the region of the spectrum (the frequency range) over which the filter is intended not to transmit its input. The stopband attenuation is the difference, in decibels, between the lowest gain in the passband and the highest gain in the stopband. Ideally this would be infinite; the higher the better.
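Both figures of merit can be computed directly from gains read off a frequency response. The sketch below applies the two definitions above to a handful of gain values; the function names and the sample gains are hypothetical, chosen only to make the arithmetic easy to follow.

```python
import math


def to_db(gain):
    """Convert a linear gain to decibels."""
    return 20 * math.log10(gain)


def passband_ripple_db(passband_gains):
    """Ratio of highest to lowest passband gain, in dB (0 dB for a perfectly flat passband)."""
    return to_db(max(passband_gains)) - to_db(min(passband_gains))


def stopband_attenuation_db(passband_gains, stopband_gains):
    """Lowest passband gain minus highest stopband gain, in dB."""
    return to_db(min(passband_gains)) - to_db(max(stopband_gains))


# Hypothetical gains read off a measured frequency response
passband = [1.0, 0.95, 0.9, 0.97]
stopband = [0.009, 0.004, 0.001]
print(f"ripple = {passband_ripple_db(passband):.2f} dB")
print(f"stopband attenuation = {stopband_attenuation_db(passband, stopband):.1f} dB")
```

Here the lowest passband gain (0.9) is 100 times the highest stopband gain (0.009), so the stopband attenuation is 40 dB.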

Finally, a realisable filter, whose frequency response is always a continuous function of frequency, always needs a region, or frequency band, over which the gain drops from the passband gain to the stopband gain; this is called the transition band. The thinner this band can be made, the more nearly ideal the filter.

Band-pass and stop-band filters

A band-pass filter admits frequencies within a given band, rejecting frequencies below it and above it. Much the same terminology as before may be used to describe a band-pass filter, as shown in Figure 2. A stop-band filter does the reverse, rejecting frequencies within the band and letting through frequencies outside it.

Figure 2: Terminology for describing the frequency response of band-pass and stop-band filters. The horizontal axis is frequency and the vertical axis is gain. A band-pass filter is shown; a stop-band filter would have a contiguous stopband surrounded by two passbands.

In practice, a simpler language is often used for describing bandpass filters, as shown in Figure 4. Here there are only two controls: a center frequency and a bandwidth. The passband is considered to be the region where the filter's output has at least half the power of its peak, that is, a gain of at least 0.707 times the peak gain. The bandwidth is the width, in frequency units, of the passband. The center frequency is the point of maximum gain, which is approximately the midpoint of the passband.
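A minimal sketch of these two definitions, assuming a second-order (resonant) band-pass response, which the text does not specify: sweep the gain over frequency, take the center frequency as the point of maximum gain, and take the bandwidth as the width of the region where the gain is at least 0.707 of the peak. The function names, the 1000 Hz peak frequency and the quality factor Q = 5 are assumptions for illustration.

```python
import math


def bandpass_gain(f, f0, q):
    """Gain of an assumed second-order band-pass filter with peak frequency f0 and quality factor q."""
    s = 1j * f / f0
    return abs((s / q) / (s * s + s / q + 1))


def measure_bandpass(f0, q, f_max, step):
    """Return (low edge, high edge, center frequency) found by sweeping the gain."""
    freqs = [i * step for i in range(1, int(f_max / step) + 1)]
    gains = [bandpass_gain(f, f0, q) for f in freqs]
    peak = max(gains)
    center = freqs[gains.index(peak)]
    # Passband: gain at least 1/sqrt(2) (about 0.707) of the peak, i.e. at least half the power
    passband = [f for f, g in zip(freqs, gains) if g >= peak / math.sqrt(2)]
    return passband[0], passband[-1], center


low, high, center = measure_bandpass(f0=1000.0, q=5.0, f_max=3000.0, step=0.1)
print(f"center = {center:.1f} Hz, bandwidth = {high - low:.1f} Hz, "
      f"midpoint of passband = {(low + high) / 2:.1f} Hz")
```

For this assumed filter the bandwidth comes out close to f0/Q = 200 Hz, and the midpoint of the passband lies slightly above the 1000 Hz peak, which is why the center frequency is only approximately the midpoint.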


Figure 4: A simplified view of a band-pass filter, showing bandwidth and center frequency.


Moving Average Filter

Figure 1 shows a sine wave with a spike on top of it. The spike is unwanted and it would be advantageous to remove it.

Figure 1: The input signal f(n), a sine wave with a spike, plotted against sample index 1 to 19

One approach is to average the value on either side of the spike with the value of the spike. This would not eliminate the spike, but would reduce it. It can be achieved using the following algorithm:

$$g(n) = \frac{f(n-1) + f(n) + f(n+1)}{3} \qquad [1]$$


Experiment

Using Excel, enter the values of n and f(n) below in columns 1 and 2, and use formula [1] above to calculate column 3. Display columns 2 and 3 on the same graph and save it. Comment on the plots.


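| n | f(n) | g(n) = [f(n-1) + f(n) + f(n+1)]/3 |
|---|---|---|
| -1 | 0.000 | |
| 0 | 0.000 | |
| 1 | 0.383 | |
| 2 | 0.707 | |
| 3 | 0.924 | |
| 4 | 2.000 | |
| 5 | 0.924 | |
| 6 | 0.707 | |
| 7 | 0.383 | |
| 8 | 0.000 | |
| 9 | -0.383 | |
| 10 | -0.707 | |
| 11 | -0.924 | |
| 12 | -1.000 | |
| 13 | -0.924 | |
| 14 | -0.707 | |
| 15 | -0.383 | |
| 16 | 0.000 | |
| 17 | 0.000 | |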
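For readers without Excel, the same calculation can be sketched in Python. The list below copies f(n) from the table and, as in the text, samples outside the table are taken as zero; the function name moving_average_3 is an assumption for illustration.

```python
def moving_average_3(f):
    """Centred three-point moving average, formula [1]; samples outside f are taken as zero."""
    padded = [0.0] + list(f) + [0.0]
    return [(padded[i - 1] + padded[i] + padded[i + 1]) / 3 for i in range(1, len(padded) - 1)]


n_values = list(range(-1, 18))
f_values = [0.000, 0.000, 0.383, 0.707, 0.924, 2.000, 0.924, 0.707, 0.383, 0.000,
            -0.383, -0.707, -0.924, -1.000, -0.924, -0.707, -0.383, 0.000, 0.000]
g_values = moving_average_3(f_values)

# Print the three columns of the table: n, f(n) and the averaged value g(n)
for n, f, g in zip(n_values, f_values, g_values):
    print(f"{n:3d} {f:7.3f} {g:7.3f}")
```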

Figure 2: Moving Average Filter, showing the input f(n) (Series1) and the averaged output (Series2) against sample index 1 to 19


The averager has been applied across the entire signal from n = -1 to 17. This has the effect of moving the centre point along the waveform. Therefore, this type of filter is known as a moving average filter.

Notice that the filter starts at time n = -1. Although this seems strange, it means that the output starts at time n = 0. It also means that all values before the start of the signal, and the values after n = 15, have to be set to zero. The averaged values closely track the input except around the spike at n = 4, where the average is much smaller than the input value, while the averages on either side (n = 3 and 5) are raised above the input because part of the spike is spread into them.

Low Pass Filters

A low pass filter is a filter that passes low frequencies and attenuates high frequencies.

The amplitude response of a low pass filter is flat from DC to a point where it begins to roll off. The cutoff frequency (the 3 dB frequency) is defined as the point where the amplitude has decreased by 3 dB, to 70.7% of its passband amplitude. The region from at or near DC to the point where the amplitude is down 3 dB is defined as the passband of the filter.

The amplitude of the filter at ten times the 3 dB frequency is attenuated by a total of 20 dB for a one pole filter, and by a total of 40 dB for a two pole filter. At higher frequencies, the amplitude continues to roll off along a straight line on a plot of gain in dB against log frequency, with a slope of -20 dB per decade (ten times the frequency) for a single pole filter and -40 dB per decade for a two pole filter.
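A minimal sketch that checks these figures numerically. It assumes the single pole filter is the first-order response H = 1/(1 + jf/f3dB) and models the two pole filter as a second-order Butterworth response, one common two-pole design that also has its 3 dB point at f3dB; the text does not say which two-pole design it uses, and the function names are hypothetical.

```python
import math


def one_pole_lowpass(f_ratio):
    """Single pole low-pass response H = 1 / (1 + j f/f3dB); f_ratio = f / f3dB."""
    s = 1j * f_ratio
    return 1 / (1 + s)


def two_pole_lowpass(f_ratio):
    """Assumed two pole model: second-order Butterworth low-pass with its 3 dB point at f_ratio = 1."""
    s = 1j * f_ratio
    return 1 / (s * s + math.sqrt(2) * s + 1)


def gain_db(response, f_ratio):
    """Gain of a response function at the given frequency ratio, in dB."""
    return 20 * math.log10(abs(response(f_ratio)))


for ratio in (0.5, 1, 10, 100):
    print(f"f = {ratio:>5} x f3dB: one pole {gain_db(one_pole_lowpass, ratio):7.2f} dB, "
          f"two pole {gain_db(two_pole_lowpass, ratio):7.2f} dB")
```

With these models both filters are about 3 dB down at the 3 dB frequency and about 20 dB and 40 dB down respectively at ten times that frequency; at half the 3 dB frequency the two pole gain stays closer to 0 dB than the one pole gain.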

A small detail that might slip by the beginning designer is that, in the region below the 3 dB frequency, the roll-off starts later for a two pole filter than for a single pole filter. The two pole filter therefore has a "flatter" response up to a higher frequency than the single pole filter, as shown in Figure 1.

Figure 1

A two pole filter is a filter that rolls off frequencies in the stop band at 40 dB per decade.

Figure 2 is an example of a 10 kHz double pole low pass filter:


Figure 2

The phase shift of a low pass filter also doubles for a two pole filter, as shown in Figure 3. The change in phase of a single pole filter is 45 degrees at the 3 dB frequency, and ultimately approaches 90 degrees at infinity. The change in phase of a two pole filter, however, is 90 degrees at the 3 dB frequency, and ultimately approaches 180 degrees at infinity.
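A minimal sketch of these phase figures, assuming a first-order response for the single pole filter and a second-order Butterworth response for the two pole filter (the text does not name a specific two-pole design). The phase is computed in closed form from the frequency ratio r = f / f3dB; the function names are hypothetical.

```python
import math


def one_pole_lowpass_phase(r):
    """Phase in degrees of H = 1 / (1 + jr), where r = f / f3dB."""
    return -math.degrees(math.atan(r))


def two_pole_lowpass_phase(r):
    """Phase in degrees of an assumed Butterworth H = 1 / (1 - r^2 + j*sqrt(2)*r)."""
    return -math.degrees(math.atan2(math.sqrt(2) * r, 1 - r * r))


for r in (0.1, 1.0, 10.0, 1000.0):
    print(f"f = {r:>7} x f3dB: one pole {one_pole_lowpass_phase(r):7.1f} deg, "
          f"two pole {two_pole_lowpass_phase(r):7.1f} deg")
```

math.atan2 is used for the two pole case so that the phase continues smoothly past -90 degrees towards -180 degrees instead of wrapping, as a plain arctangent of the ratio would.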

Figure 3

High Pass Filters

A high pass filter is a filter that passes high frequencies and attenuates low frequencies.

Figure 5


The phase shift of a high pass filter also doubles for a two pole filter, as shown in Figure 6. The change in phase of a single pole filter is 45 degrees at the 3 dB frequency, and approaches 90 degrees as the frequency approaches zero. The change in phase of a two pole filter, however, is 90 degrees at the 3 dB frequency, and approaches 180 degrees as the frequency approaches zero.
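The same check can be sketched for the high pass case, again assuming a first-order response for the single pole filter and a second-order Butterworth response for the two pole filter; the function names are hypothetical and r = f / f3dB.

```python
import math


def one_pole_highpass_phase(r):
    """Phase in degrees of H = jr / (1 + jr), where r = f / f3dB."""
    return 90 - math.degrees(math.atan(r))


def two_pole_highpass_phase(r):
    """Phase in degrees of an assumed Butterworth H = -r^2 / (1 - r^2 + j*sqrt(2)*r)."""
    return 180 - math.degrees(math.atan2(math.sqrt(2) * r, 1 - r * r))


for r in (0.001, 1.0, 1000.0):
    print(f"f = {r:>7} x f3dB: one pole {one_pole_highpass_phase(r):6.1f} deg, "
          f"two pole {two_pole_highpass_phase(r):6.1f} deg")
```

Here the phase shift is largest at low frequencies and falls towards 0 degrees as the frequency increases, the mirror image of the low pass case.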

Figure 6


Linear Time Invariant Systems

Definitions

A linear system may be defined as one which obeys the Principle of Superposition, which may be stated as follows:

If an input consisting of the sum of a number of signals is applied to a linear system, then the output is the sum, or superposition, of the system’s responses to each signal considered separately.

A time-invariant system is one whose properties do not vary with time. The only effect of a time-shift on an input signal to the system is a corresponding time-shift in its output.

A causal system is one whose output signal depends only on present and/or previous values of the input. All real-time systems must be causal; but if data are stored and processed at a later date, the processing need not be causal.
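These three definitions can be tested numerically. The sketch below applies the three-point moving average from the previous section, written as a hypothetical function moving_average_3 that treats samples outside the list as zero, to a few short test signals (also assumptions). Checks on particular signals illustrate the properties; they do not prove them.

```python
import math


def moving_average_3(x):
    """Centred three-point moving average; samples outside the list are taken as zero."""
    padded = [0.0] + list(x) + [0.0]
    return [(padded[i - 1] + padded[i] + padded[i + 1]) / 3 for i in range(1, len(padded) - 1)]


def all_close(a, b):
    """True if two equal-length sequences agree to within rounding error."""
    return len(a) == len(b) and all(math.isclose(p, q, abs_tol=1e-12) for p, q in zip(a, b))


def delay(x):
    """Delay by one sample, keeping the length (assumes the last sample is zero)."""
    return [0.0] + list(x[:-1])


a = [0.0, 0.0, 1.0, 2.0, 3.0, 0.0, 0.0]
b = [0.0, 0.0, 0.0, -1.0, 4.0, 1.0, 0.0]

# Superposition: the response to 2a + 3b equals 2 x (response to a) + 3 x (response to b)
combined = [2 * p + 3 * q for p, q in zip(a, b)]
expected = [2 * p + 3 * q for p, q in zip(moving_average_3(a), moving_average_3(b))]
is_linear = all_close(moving_average_3(combined), expected)

# Time invariance: delaying the input by one sample delays the output by one sample
is_time_invariant = all_close(moving_average_3(delay(a)), delay(moving_average_3(a)))

# Causality: an impulse at index 3 already produces output at index 2,
# because the centred average looks one sample ahead
impulse = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
is_causal = moving_average_3(impulse)[2] == 0.0

print(f"linear: {is_linear}, time-invariant: {is_time_invariant}, causal: {is_causal}")
```

The centred moving average is linear and time-invariant but not causal, so it can only be run on stored data or after delaying its output by one sample.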

Steps, Impulses and Ramps

The unit step function u[n] is defined as:

$$u[n] = \begin{cases} 0, & n < 0 \\ 1, & n \ge 0 \end{cases}$$

This signal plays a valuable role in the analysis and testing of digital signals and processors.

Another basic signal, even more important than the unit step, is the unit impulse function δ[n], defined as:

$$\delta[n] = \begin{cases} 0, & n \ne 0 \\ 1, & n = 0 \end{cases}$$


Figure 1 (a) the unit step function, and (b) the unit impulse function

One further signal is the digital ramp, which rises or falls linearly with the variable n. The unit ramp function r[n] is defined as:

$$r[n] = n\,u[n]$$

Figure 2: The unit ramp function

Since u[n] is zero for n < 0, so is the ramp function.
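The three test signals translate directly into code. This minimal sketch evaluates each definition over a few integer values of n; the function names are assumptions for illustration.

```python
def unit_step(n):
    """u[n]: 0 for n < 0, 1 for n >= 0."""
    return 1 if n >= 0 else 0


def unit_impulse(n):
    """delta[n]: 1 at n = 0, 0 elsewhere."""
    return 1 if n == 0 else 0


def unit_ramp(n):
    """r[n] = n * u[n], so it is zero for n < 0 and equal to n otherwise."""
    return n * unit_step(n)


n_values = range(-3, 5)
print("n    :", list(n_values))
print("u[n] :", [unit_step(n) for n in n_values])
print("d[n] :", [unit_impulse(n) for n in n_values])
print("r[n] :", [unit_ramp(n) for n in n_values])
```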


The Unit Impulse Response

The unit impulse was described above as:

$$\delta[n] = \begin{cases} 0, & n \ne 0 \\ 1, & n = 0 \end{cases}$$

This is also sometimes known as the Kronecker delta function.

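This can be tabulated as follows:

| n | -2 | -1 | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|---|
| δ[n] | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |
| δ[n-2] | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

Table 1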

Figure 3: Shifted impulse sequence, δ[n - 2]

A shifted impulse such as δ[n - 2] is non-zero when its argument is zero, i.e. n - 2 = 0, or equivalently n = 2.

The third row of Table 1 gives the values of the shifted impulse δ[n - 2], and Figure 3 shows a plot of the sequence.

Now consider the following signal:

$$x[n] = 2\delta[n] + 4\delta[n-1] + 6\delta[n-2] + 4\delta[n-3] + 2\delta[n-4]$$

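Table 2 shows the individual sequences and their sum.

| n | -2 | -1 | 0 | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|---|
| 2δ[n] | 0 | 0 | 2 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4δ[n-1] | 0 | 0 | 0 | 4 | 0 | 0 | 0 | 0 | 0 |
| 6δ[n-2] | 0 | 0 | 0 | 0 | 6 | 0 | 0 | 0 | 0 |
| 4δ[n-3] | 0 | 0 | 0 | 0 | 0 | 4 | 0 | 0 | 0 |
| 2δ[n-4] | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | 0 |
| x[n] | 0 | 0 | 2 | 4 | 6 | 4 | 2 | 0 | 0 |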


Figure 4

Table 2

Hence any sequence can be represented by the equation:

$$x[n] = \sum_{k} x[k]\,\delta[n-k] = \cdots + x[-1]\,\delta[n+1] + x[0]\,\delta[n] + x[1]\,\delta[n-1] + x[2]\,\delta[n-2] + \cdots$$
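The decomposition can be sketched in Python by rebuilding a sequence from its scaled, shifted impulses. The example uses the signal x[n] = 2δ[n] + 4δ[n-1] + 6δ[n-2] + 4δ[n-3] + 2δ[n-4] from Table 2; storing the samples in a dictionary keyed by k, and the function names, are assumptions for illustration.

```python
def delta(n):
    """Unit impulse: 1 at n = 0, 0 elsewhere."""
    return 1 if n == 0 else 0


def from_impulses(samples, n_values):
    """Evaluate x[n] = sum over k of x[k] * delta[n - k] for each n in n_values.

    samples maps each index k to the value x[k]; unlisted samples are zero.
    """
    return [sum(xk * delta(n - k) for k, xk in samples.items()) for n in n_values]


# The signal of Table 2: weights x[k] for k = 0..4
samples = {0: 2, 1: 4, 2: 6, 3: 4, 4: 2}
print(from_impulses(samples, range(-2, 7)))
```

The printed list matches the x[n] row of Table 2 for n = -2 to 6.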

When the input to an FIR filter is a unit impulse sequence, x[n] = δ[n], the output is known as the unit impulse response, which is normally denoted h[n].

This is shown in Figure 4.


Convolution

Convolution is a weighted moving average with one signal flipped back to front.

The general expression for an FIR filter’s output is:

$$y[n] = \sum_{k=0}^{M} h[k]\,x[n-k]$$
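A minimal Python sketch of this sum, assuming finite-length lists for x and h with all other samples zero; the function name fir_filter is hypothetical. The example values x[n] = 2, 4, 6, 4, 2 and h[n] = 3, -1, 2, 1 are those of the tabulated example that follows.

```python
def fir_filter(x, h):
    """Direct evaluation of y[n] = sum_{k=0}^{M} h[k] x[n - k].

    x holds x[0], x[1], ...; samples outside the list are taken as zero.
    h holds the coefficients h[0] ... h[M].
    """
    M = len(h) - 1
    y = []
    for n in range(len(x) + M):          # the output is non-zero for at most len(x) + M samples
        acc = 0
        for k in range(M + 1):
            if 0 <= n - k < len(x):      # skip terms where x[n - k] is zero
                acc += h[k] * x[n - k]
        y.append(acc)
    return y


h = [3, -1, 2, 1]
print(fir_filter([2, 4, 6, 4, 2], h))   # filter the example input
print(fir_filter([1], h))               # a unit impulse input returns h itself
```

The first call gives 6, 10, 18, 16, 18, 12, 8, 2, the y[n] row of the tabulated example, and the second confirms that the impulse response of the filter is its coefficient list.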

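A tabulated version of convolution, for the input x[n] = 2, 4, 6, 4, 2 and the impulse response h[n] = 3, -1, 2, 1:

| n | n < 0 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | n > 7 |
|---|---|---|---|---|---|---|---|---|---|---|
| x[n] | 0 | 2 | 4 | 6 | 4 | 2 | 0 | 0 | 0 | 0 |
| h[n] | 0 | 3 | -1 | 2 | 1 | 0 | 0 | 0 | 0 | 0 |
| h[0]x[n] | 0 | 6 | 12 | 18 | 12 | 6 | 0 | 0 | 0 | 0 |
| h[1]x[n-1] | 0 | 0 | -2 | -4 | -6 | -4 | -2 | 0 | 0 | 0 |
| h[2]x[n-2] | 0 | 0 | 0 | 4 | 8 | 12 | 8 | 4 | 0 | 0 |
| h[3]x[n-3] | 0 | 0 | 0 | 0 | 2 | 4 | 6 | 4 | 2 | 0 |
| y[n] | 0 | 6 | 10 | 18 | 16 | 18 | 12 | 8 | 2 | 0 |

Each row h[k]x[n-k] is a copy of the input scaled by h[k] and delayed by k samples. For example, h[0]x[n] scales x[n] by h[0] = 3, giving 2 * 3, 4 * 3, 6 * 3, 4 * 3, 2 * 3 = 6, 12, 18, 12, 6 at n = 0 to 4; h[1]x[n-1] scales x[n] by h[1] = -1 and delays it by one sample, giving -2, -4, -6, -4, -2 at n = 1 to 5. The output y[n] is the sum of each column.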


The diagrams below show how convolution works.

Figure 5: A single impulse input yields the system’s impulse response.

Figure 6: A scaled impulse input yields a scaled response, due to the scaling property of the system's linearity.

Figure 7: This demonstrates the use of the time-invariance property of the system to show that a delayed input results in an output of the same shape, only delayed by the same amount as the input.


Figure 8: This demonstrates the additivity portion of the linearity property of the system, which completes the picture. Since any discrete-time signal is just a sum of scaled and shifted discrete-time impulses, we can find the output from knowing the input and the impulse response.
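The scale, shift and add argument of Figures 5 to 8 can be sketched in Python: each input sample x[k] contributes one copy of the impulse response, scaled by x[k] and delayed by k samples. The function name is hypothetical, and the example reuses x[n] = 2, 4, 6, 4, 2 and h[n] = 3, -1, 2, 1 from the tabulated example.

```python
def convolve_by_superposition(x, h):
    """Build y[n] by adding one copy of h, scaled by x[k] and delayed by k, for every input sample."""
    y = [0] * (len(x) + len(h) - 1)
    for k, xk in enumerate(x):          # x[k] * delta[n - k] is a scaled, shifted impulse
        for m, hm in enumerate(h):      # its response is x[k] * h[n - k], placed at n = k + m
            y[k + m] += xk * hm
    return y


print(convolve_by_superposition([2, 4, 6, 4, 2], [3, -1, 2, 1]))
```

This works input sample by input sample, whereas the tabulated example works coefficient by coefficient; by linearity and time invariance both give the same y[n].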

Now, if we convolve x(n) with h(n), as shown in Figure 9, we get the output y(n).

Figure 9: This is the end result that we are looking to find.

The following diagrams are a breakdown of how the y(n) output is achieved.


Figure 10: The impulse response, h, is reversed and begins its traverse at time 0.

Figure 11: Continuing the traverse. At time 1, the two elements of the input signal are multiplied by two elements of the impulse response.

Figure 12


Figure 13

What happens in the above demonstration is that the impulse response is reversed in time and "walks across" the input signal. This is the same result as scaling, shifting and summing impulse responses.

This approach of time-reversing, and sliding across is a common approach to presenting convolution, since it demonstrates how convolution builds up an output through time.
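The time-reverse-and-slide picture can also be sketched directly: reverse h, pad the input with zeros so the reversed h can hang off either end, and at each time n multiply the overlapping samples and add them up. The function name is hypothetical, and the example again uses x[n] = 2, 4, 6, 4, 2 and h[n] = 3, -1, 2, 1.

```python
def flip_and_slide(x, h):
    """Convolution computed by reversing h and sliding it across x one sample at a time."""
    h_reversed = h[::-1]
    pad = [0] * (len(h) - 1)
    x_padded = pad + list(x) + pad       # zeros let the reversed h hang off either end
    y = []
    for n in range(len(x) + len(h) - 1):
        window = x_padded[n:n + len(h)]  # the input samples under the reversed h at time n
        y.append(sum(a * b for a, b in zip(window, h_reversed)))
    return y


print(flip_and_slide([2, 4, 6, 4, 2], [3, -1, 2, 1]))
```

This gives the same output, 6, 10, 18, 16, 18, 12, 8, 2, as the tabulated example, since reversing and sliding evaluates exactly the sum of h[k]x[n-k] at each n.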
