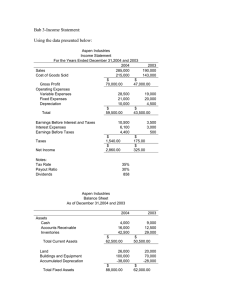

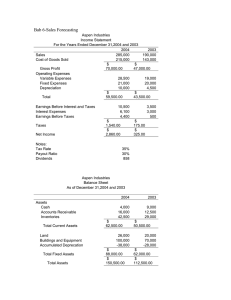

The Detection of Earnings Manipulation: The Three Phase Cutting Plane Algorithm using Mathematical Programming

Burcu Dikmen (a,∗), Güray Küçükkocaoğlu (b)

ABSTRACT

The primary goal of this study is to propose an algorithm that uses mathematical programming to detect earnings management practices¹. To evaluate the proposed algorithm, traditional statistical models are used as a benchmark, together with their time-series counterparts. Because emerging techniques in mathematical programming yield better results, applying suitable models is expected to produce highly accurate forecasts. The motivation behind this paper is to develop an algorithm that successfully detects companies that resort to financial manipulation. The methodology is based on a cutting plane formulation using mathematical programming. A sample of 126 Turkish manufacturing firms, described by ten financial ratios and indexes, is used to detect factors associated with false financial statements. The results indicate that the proposed three phase cutting plane algorithm outperforms the traditional statistical techniques that are widely used for detecting false financial statements. Furthermore, the results indicate that the investigation of financial information can help identify false financial statements, and they highlight the importance of financial ratios and indexes such as the Days' Sales in Receivables Index (DSRI), Gross Margin Index (GMI), Working Capital Accruals to Total Assets (TATA) and Days to Inventory Index (DINV).

Key words: mathematical programming, mathematical model, cutting plane algorithm, earnings management, false financial statements

1. Introduction

Detecting manipulated financial statements with normal audit procedures is an extremely difficult task (Porter and Cameron, 1987; Coderre, 1999).
First of all, there is a shortage of knowledge concerning the extent and characteristics of management fraud. Secondly, auditors lack the experience necessary to detect manipulated financial statements. Finally, managers deliberately try to deceive the auditors by using new techniques (Fanning and Cogger, 1998).

On the other hand, the nature of accrual accounting gives managers a great deal of discretion in determining the actual earnings a firm reports in any given period. Management has considerable control over the timing of actual expense items (e.g., advertising expenses or outlays for research and development). Managers can also, to some extent, alter the timing of recognition of revenues and expenses by, for example, advancing recognition of sales revenue through credit sales, or delaying recognition of losses by waiting to establish loss reserves (Teoh et al., 1998). For managers who are aware of the limitations of an audit, standard auditing procedures become insufficient. These limitations suggest the need for additional analytical procedures for the effective detection of earnings management practices (Spathis, 2002).

a,∗ The Scientific and Technological Research Council of Turkey - Space Technologies Research Institute, Ankara, Turkey, Tel: +90 312 210 13 10/1559, Fax: +90 312 210 13 15, e-mail: burcu.dikmen@uzay.tubitak.gov.tr
b Baskent University, Faculty of Economics and Administrative Sciences, Ankara, Turkey, Tel: +90 312 234 10 10/1728, Fax: +90 312 234 10 43, e-mail: gurayk@baskent.edu.tr
1 The terms “earnings management”, “earnings manipulation”, “fraud” and “falsified financial statements” are all used interchangeably to describe the act of intentional misrepresentation of a firm’s financial statement by managers.

The earnings management literature that developed after the 1980s has paid increasing attention to the interpretation of accrual accounting practices.
In particular, almost all empirical research conducted after Healy (1985) and DeAngelo (1986) has focused on specific accruals, while Jones (1991) and Dechow, Sloan and Sweeney (1995) have focused on all accruals as being more prone to use for earnings management purposes. Later on, Beneish (1997, 1999) adopted a weighted exogenous sample maximum likelihood (WESML) probit model for detecting manipulation. The model’s variables are designed to capture either the financial statement distortions that can result from manipulation or the preconditions that might prompt companies to engage in such activity. Beneish (1999) provides evidence that accrual models have poor detective performance even among firms whose behavior is extreme enough to warrant the attention of regulators. He states that “Research that uses accrual models to investigate whether earnings are managed is a growing industry but its foundations are in need of redefinition.”

With the emergence of artificial neural network applications that detect the underlying functional relationships within a set of data and perform such tasks as pattern recognition, classification, evaluation, modeling, prediction and control, several algorithms have been developed to assess the risk of fraud and irregularities in financial statements (Lawrance and Androlia, 1992).

The motivation behind this paper is to develop an algorithm that successfully detects companies that resort to financial manipulation and that estimates the amount of earnings management from publicly available information. The algorithm overcomes some of the major drawbacks of the existing statistical techniques and permits a direct test for the presence of earnings management in a sample of 126 publicly traded Turkish manufacturing firms.

The remaining part of this paper is organized in five sections.
In the next section, we provide a comparison of the theoretical and empirical research conducted on estimating earnings management practices and describe the existing techniques. Section three discusses the existing mathematical programming techniques for the detection of earnings management practices, with their main assumptions and drawbacks. The following section derives the generalized form of the three phase cutting plane algorithm, accompanied by a discussion of its advantages over the most popular existing models as well as its weaknesses. The results of the application of the algorithm can be found in the last part of section four. Section five concludes.

2. Theoretical background

This section, which summarizes the techniques available for the detection of earnings manipulation, is composed of three subsections. The first subsection discusses the competing discretionary accruals models in the earnings management literature developed by DeAngelo (1986), Healy (1985) and Jones (1991); the second subsection discusses the statistical techniques applied by Hansen et al. (1996), Beneish (1997, 1999) and Spathis (2002); and the third subsection reviews the literature on artificial neural network applications to earnings management practices. A detailed review of our proposed three phase cutting plane algorithm for the detection of earnings manipulation is provided in sections three and four.

2.1. Discretionary-accruals models

The literature on earnings management has mainly discussed manipulations that are interpretations of standards, for instance decisions on the level of accruals. Most of the research has found that discretionary accrual models can be an effective tool for detecting earnings manipulation, as they can expose unusual differences between the reported and the expected amounts.
Prior studies on earnings management conducted by DeAngelo (1986), Healy (1985) and Jones (1991) have concentrated on how accounts are manipulated through accruals. Accruals management refers to changing estimates such as useful lives, the probability of recovering debtors and other year-end accruals to try to alter reported earnings in the direction of a desired target (Ayres, 1994). The DeAngelo (1986) model uses last period’s total accruals scaled by lagged total assets as the measure of nondiscretionary accruals. Healy (1985) uses the mean of total accruals scaled by lagged total assets from the estimation period as the measure of nondiscretionary accruals. Jones (1991) attempts to control for the effects of changes in a firm’s economic circumstances on nondiscretionary accruals.

2.2. Statistical Techniques

The absence of specific professional guidance and a lack of experience with management fraud have led practitioners and researchers to develop models or decision aids for predicting management fraud (Hansen et al., 1996). Using the same data set as Bell et al. (1993), Hansen et al. (1996) used a powerful generalized qualitative-response model, EGB2, to model and predict management fraud based on a set of data developed by an international public accounting firm. The EGB2 specification includes the probit and logit models and incorporates the asymmetric costs of type I and type II errors. The results demonstrate good predictive capability, with an overall accuracy of 85.3 percent.

In the same area, Beneish (1997, 1999) used a weighted exogenous sample maximum likelihood (WESML) probit model for detecting manipulation. The model’s variables are designed to capture either the financial statement distortions that can result from manipulation or the preconditions that might prompt companies to engage in such activity.
Beneish (1997) provides evidence that accrual models have poor detective performance even among firms whose behavior is extreme enough to warrant the attention of regulators.

2.3. Neural Network Models

Artificial neural network technology is a knowledge induction technique that builds a classification model by finding any existing patterns in the input data. A neural network consists of a set of interconnected processing nodes arranged in input, hidden and output layers. Data flows through this network in one direction, from the input layer to the output layer. Data first enters the network through nodes in the input layer. The input nodes pass data to the nodes in the hidden layer(s), which enable the network to model complex functions. The nodes in the output layer both receive and process all inputs (Coakley and Brown, 2000).

A number of studies conducted by Green and Choi (1997), Fanning and Cogger (1998) and Lin et al. (2003) have shown the power of neural network applications in the detection of fraudulent financial statements. Green and Choi (1997) presented the development of a neural network fraud classification model employing endogenous financial data and found that their model performs well at this classification. Fanning and Cogger (1998) used an artificial neural network to develop a model for detecting management fraud. Using publicly available predictors of fraudulent financial statements, they found a model of eight variables with a high probability of detection. Lin et al. (2003) created a fuzzy neural network to investigate the utility of information technologies, such as an integrated system of neural network and fuzzy logic, for fraud detection.

3. Literature review on the existing mathematical programming techniques

3.1. Mathematical programming in discriminant analysis

As mentioned above, statistical techniques are widely used in the area of earnings manipulation.
However, emerging techniques such as mathematical programming have yielded better results in macroeconomic forecasting (Reagle and Salvatore, 2000), bankruptcy prediction (Freed and Glover, 1981), sectoral and political policy examinations (Bauer and Kasnakoglu, 1990), economic trend forecasting (Tingyan, 1990) and insurance policy and profit calculations (Waters, 1990), so the application of a suitable model is expected to produce highly accurate forecasts in the area of earnings management practices as well.

Mathematical modeling techniques were introduced to the study of earnings management practices by Spathis et al. (2002), where the financial data of 76 firms, half of which are known to have resorted to manipulation, are used to develop a forecasting tool with the UTADIS methodology. Although this study appears to perform well, its use of a single year of data and the distribution of the sample (50% manipulated, 50% non-manipulated) make the model questionable. To be usable in real-life problems, models should not be year specific. Although this dependence on annual economic conditions can be reduced by using ratios instead of raw information, multiple-year data is expected to be the most reliable input when forming a model. Another point that needs to be discussed is the distribution of manipulated and non-manipulated firms: for the sake of the reliability of discrimination studies, it is not advised to use the same number of observations from the different groups (Luoma and Laitinen, 1991).

In this regard, our study comprises a larger sample with a different sample distribution, and the proposed algorithm is run on eleven years of data. In order to decrease time dependency and form a more general and reliable model, the financial reports of manufacturing companies listed on the Istanbul Stock Exchange (ISE) between the years 1992 and 2002 are used.
The sample consists of 126 firms (21 manipulated and 105 non-manipulated), and the model is formed on the base year 1997.

3.2. Mathematical Modelling in Discriminant Analysis - Definition

The use of mathematical modeling in discriminant studies began with Freed and Glover (1981). Following this study, mathematical models have been applied successfully in different areas of discrimination. These models consider several criteria under different constraints and try to predict group membership with high accuracy. For a general classification problem, the steps of discrimination can be defined as:

Step I. Identifying the groups of the sample observations (e.g., manipulated vs. non-manipulated),
Step II. Determining the discrimination criteria (variables, e.g., ratios) and gathering the related data for the observations,
Step III. Determining the discriminant scores (a combination of the predefined variable data) and the cut-off point (which is thought to be effective in determining the class),
Step IV. Applying the model to new observations and classifying them.

In Step III, a discriminant score is obtained by calculating the weighted sum of the variables, using the data collected in Step II. This score determines the observation’s class. The cut-off point is the benchmark against which the observations’ discriminant scores are compared to determine their class. An observation with a score above the cut-off point is assigned to one class, and an observation with a score below the cut-off point is assigned to the other. The following section describes this procedure as a mathematical programming technique. The observation data and the weights that express each variable’s effect on the discriminant score are denoted by “xij” and “wi” respectively (i indexing the variable and j indexing the observation, as in the formulations that follow).
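The scoring and cut-off comparison of Steps III and IV can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure used in the study: the function names, the group labels and the numeric weights are hypothetical, and assigning a score exactly equal to the cut-off to the first group is an assumption, since the text only covers scores above and below it.

```python
from typing import Sequence


def discriminant_score(weights: Sequence[float], values: Sequence[float]) -> float:
    """Weighted sum of one observation's variable values (Step III)."""
    if len(weights) != len(values):
        raise ValueError("weights and values must have the same length")
    return sum(w * x for w, x in zip(weights, values))


def classify(weights: Sequence[float], values: Sequence[float], cutoff: float) -> str:
    """Assign an observation to a group by comparing its score to the cut-off (Step IV).

    Assumption: a score equal to the cut-off goes to "group 1".
    """
    score = discriminant_score(weights, values)
    return "group 1" if score >= cutoff else "group 2"


# Hypothetical weights for two variables and a hypothetical cut-off of 0.0.
example_weights = [0.5, -1.0]
print(classify(example_weights, [2.0, 0.5], cutoff=0.0))  # score = 0.5
print(classify(example_weights, [0.0, 1.0], cutoff=0.0))  # score = -1.0
```

In the mathematical programming models discussed in this section, the weights and the cut-off are not chosen by hand as in this sketch; they are the decision variables of the optimization problem.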
The cut-off point, which serves as a benchmark for the observations’ discriminant scores, is denoted by “c”. An observation whose discriminant score is higher than the cut-off point is a member of one group, and an observation with a lower score is a member of the other. The main aim of this kind of model is to find the weights on the variables that maximize the distance between the observations’ discriminant scores and the cut-off point. The symbolic representation of this model is shown below (Freed and Glover, 1981a):

$$w_1 x_{1j} + w_2 x_{2j} \ge c \quad \text{(assigned to one group)}$$
$$w_1 x_{1j} + w_2 x_{2j} \le c \quad \text{(assigned to the other group)}$$

When the variable d, which represents the distance between an observation’s discriminant score and the cut-off point, is inserted into the model, the constraints become:

$$w_1 x_{1j} + w_2 x_{2j} - d \ge c \quad \text{(assigned to one group)}$$
$$w_1 x_{1j} + w_2 x_{2j} + d \le c \quad \text{(assigned to the other group)}$$

Inserting d into the model allows for the possibility of wrong classification. Without d, the model either classifies every observation correctly (which is not realistic) or has no solution. With the objective of maximizing the distance d, the model is defined as:

$$\max\ d$$
$$w_1 x_{1j} + w_2 x_{2j} - d \ge c \quad \text{(first group)}$$
$$w_1 x_{1j} + w_2 x_{2j} + d \le c \quad \text{(second group)}$$
$$w_1,\ w_2,\ d \ \text{unrestricted in sign}$$

In such a model, d is a measure of performance: as d increases, the performance of the model increases. Leaving the sign of d unrestricted minimizes the overlap between the groups and, in turn, the wrong classifications. Maximizing d means maximizing the minimum distance between the observations’ discriminant scores and the cut-off value. In some models d may vary by observation. This can be written as:

$$\max \sum_{j=1}^{m} d_j$$
$$w_1 x_{1j} + w_2 x_{2j} - d_j \ge c \quad \text{(first group)}$$
$$w_1 x_{1j} + w_2 x_{2j} + d_j \le c \quad \text{(second group)} \qquad (1)$$
$$w_1,\ w_2,\ d_j \ \text{unrestricted in sign}$$

3.3. Discriminant Analysis by Classical Modelling Methods

There are several models for solving classification problems.
In this section, the models that are widely used in the literature are described with their advantages and disadvantages. MMD-1 (Freed and Glover, 1981a) aims to maximize the minimum deviation (the distance d between an observation’s classification score and the cut-off point). The model can be represented as:

MMD-1 (Freed and Glover, 1981a):

$$Z = \max\ d$$
$$X_i w + d \le c$$
$$X_i w - d \ge c$$

c: cut-off point, the same for all observations, no restriction in sign
d: deviation, the same for all observations, no restriction in sign
w: coefficient matrix, no restriction in sign

A characteristic of MMD-1 is that an unbounded solution means a perfect classification. However, this model may yield a trivial solution as well. To avoid the trivial solution, a matrix transformation may be applied, but the optimal solution can then be lost together with the trivial solution. Therefore, matrix transformation is not applicable to this kind of model.

Another model, MMD-2 (Freed and Glover, 1981b), considers e, the deviation of wrongly classified observations (also called the external deviation), rather than d. The model tries to minimize the wrong-classification deviation, and “c” is again a constant for all observations.

MMD-2 (Freed and Glover, 1981b):

$$z = \min\ e$$
$$X_i w - e \le c$$
$$X_i w + e \ge c$$

c: cut-off point, the same for all observations, no restriction in sign
e: external deviation of wrongly classified observations, positive
w: coefficient matrix, no restriction in sign

The solutions of MMD-2 are always bounded, but the trivial solution cannot be avoided. Matrix transformation is not applicable to this kind of model either. To avoid the trivial solution, the normalization constraint w’ + c = s, with “s” a positive constant, can be used (Freed and Glover, 1986a). This constraint can also eliminate the optimal solution.
Therefore another normalization constraint, $\sum_{i=1}^{p} w_i + c \le s$ and $\sum_{i=1}^{p} w_i + c = s$, may be an alternative for preventing the elimination of the optimal solution (Freed and Glover, 1986b). A further normalization constraint, proposed by Erenguc and Koehler (1990), is formulated as w’ = s. However, this constraint may limit the feasible region.

Another model, suggested by Hand (1981), also minimizes the external deviation:

$$\min\ (e_1' + e_2')$$
$$X_i w - e_1 + c \le -b_i$$
$$X_i w + e_2 + c \ge b_i$$

c: cut-off point, the same for all observations, no restriction in sign
e1, e2: external deviations, positive
w: coefficient matrix, no restriction in sign
b1 and b2: positive matrices, generally set equal

This model yields trivial solutions less often and can therefore be considered more stable. However, strict classification may form a gap between the groups. Avoiding the use of the “b” values may remove the gap. Against the trivial solution, the constraint w’1 + c = s can be used (Koehler, 1989).

Some models (Freed and Glover, 1981a,b; 1986a,b) consider internal deviations (deviations of correctly classified observations, i) and external deviations separately. Four types of objective function can therefore be summarized as:

Objective 1: minimizing maximum external deviations (MSID-1)
Objective 2: maximizing minimum internal deviations (MSID-2)
Objective 3: maximizing weighted average of internal deviations of group 1 and group 2 (MSID-3)
Objective 4: minimizing weighted average of external deviations of group 1 and group 2 (MSID-4,5)

The MSID-6 model (Bajgier and Hill, 1982) is based on the idea of maximizing the internal deviation while minimizing the external deviation.
The model is:

$$\min\ H_1 (e_1 + e_2) - H_2 (i_1 + i_2)$$
$$X_1 w - i_1 + e_1 - c = 0$$
$$X_2 w - i_2 + e_2 - c = 0$$
$$i_1,\ i_2,\ e_1,\ e_2 \ge 0$$

H1, H2: weights of the external and internal deviations respectively, positive
c: cut-off point, the same for all observations, positive
e1, e2: external deviations, positive
i1, i2: internal deviations, positive
w: coefficient matrix, no restriction in sign

It is rare to obtain a trivial solution for this model. The only case in which no solution exists is when the condition H1 < H2 is satisfied (Koehler, 1989). H1 and H2 should therefore be chosen carefully to avoid the no-solution case.

There are also hybrid models that merge two or more of the objectives mentioned above. Since these combined models are too detailed for the scope of this paper, they are excluded. Further reading on these studies can be found in Glover et al. (1988) and Glover (1988).

Another model, suggested by Retzlaff-Roberts (1996), considers the ratio of internal deviation to external deviation. This model combines the advantages of several of the models described above. The ratio obtained is unitless, which yields a more reliable solution. This is also a hybrid model, whose objective function is defined in a different way:

$$\max\ \frac{k_0 i_0 + \sum_{i \in G} k_i i_i}{h_0 e_0 + \sum_{i \in G} h_i e_i}$$

k, h: weight coefficients

To linearize the model, the denominator is set equal to unity and the objective function is formulated to maximize the numerator. The model can be expressed as:

$$\max\ h_0 \alpha_0 + \sum_{i \in G} h_i \alpha_i$$
$$\text{s.t.}\quad k_0 \beta_0 + \sum_{i \in G} k_i \beta_i = 1$$
$$X_i w - \alpha_0 - \alpha_i + \beta_0 + \beta_i = c, \quad i \in G_1$$
$$X_i w + \alpha_0 + \alpha_i - \beta_0 - \beta_i = c, \quad i \in G_2$$
$$\alpha_0,\ \alpha_i,\ \beta_0,\ \beta_i \ge 0$$

w: coefficient matrix, no restriction in sign

Another group of programming models used in classification is mixed integer programming models. The first model discussed here is suggested by Bajgier and Hill (1982). The model minimizes the external deviation plus the number of wrongly classified observations and maximizes the internal deviation.
The model can be written as follows:

$$\min\ P_1 (I_1 + I_2) + P_2 (e_1 + e_2) - P_3 (i_1 + i_2)$$
$$X_i w + i_1 - e_1 - c = 0$$
$$X_i w - i_2 + e_2 - c = 0$$
$$e_1 \le M I_1, \qquad e_2 \le M I_2$$
$$i_1,\ i_2,\ e_1,\ e_2 \ge 0$$

I1, I2: binary variables
M: big number
P1, P2, P3: positive weights

MIP-2 considers just one objective, which is minimizing the number of wrongly classified observations. Deviation is not considered in this model.

MIP-2 (Freed and Glover, 1986b; Glover, 1988; Stam and Joachimstahler, 1988):

$$\min\ I_1 + I_2$$
$$X_1 w \le c + M I_1$$
$$X_2 w \ge c - M I_2$$

Since there is no sign restriction on the cut-off value c in this model, it is possible to obtain a trivial solution such as c = 0, w = 0, I1 = 0, I2 = 0. If c is positive, then w = 0, I1 = 0, I2 = (c/M)1, with an objective function value of cN2/M, is also a solution to the problem. If M is big enough, cN2/M approaches zero, which again yields a trivial solution.

Another mixed integer programming technique determines upper and lower bounds around the cut-off value and examines the observations that fall in this area. The model can be shown as:

MIP-3 (Gehrlein, 1986):

$$\min\ I_1 + I_2$$
$$X_1 w \le (u_1 - c) + M I_1$$
$$X_1 w \ge (l_1 - c) - M I_1$$
$$X_2 w \le (u_2 - c) + M I_2$$
$$X_2 w \ge (l_2 - c) - M I_2$$
$$u_1 - l_1 \ge \varepsilon, \qquad u_2 - l_2 \ge \varepsilon$$
$$l_1 - u_2 + M I_{12} \ge \varepsilon$$
$$l_2 - u_1 + M I_{21} \ge \varepsilon$$
$$I_{12} + I_{21} = 1$$

w, u1, u2, c, l1, l2: no restriction in sign
I12, I21: binary variables
M: big number
ε: small number

MIP-4 (Erenguc and Koehler, 1988) is very similar to MIP-2 and differs only in the normalization constraint w ≠ 0. This constraint keeps all the possible solutions in the solution set. In addition, if that constraint does not work, the constraint Xiw ≤ c + MI1 can be replaced by X1w ≤ c1 + ε (Erenguc and Koehler, 1988).

3.4. Mathematical Modelling versus Statistical Techniques

Statistical techniques depend on basic assumptions. They can be listed as:

1. groups are separate and properly defined
2. samples are selected from the population randomly
3. variables are normally distributed
4. variance-covariance matrices are equal.
There are many studies in the literature comparing the performance of mathematical modelling and statistical techniques. Based on these studies, the following conclusions can be drawn:

1. Mathematical modelling is superior to statistical techniques when the observations are not normally distributed. Financial data is generally not normal and is in fact right-skewed (Deakin, 1976). Therefore mathematical modelling is a better tool for financial forecasting (Bajgier and Hill, 1982; Freed and Glover, 1986a; Joachimstaler and Stam, 1988; Lam and Moy, 1997).
2. Statistical techniques perform better under normal distribution and equal variance-covariance conditions, which are also the basic assumptions of statistical models (Bajgier and Hill, 1982).
3. Mathematical modelling techniques have higher forecasting performance when the groups overlap heavily. One of the main assumptions of statistical techniques is that the groups should have different mean vectors (Hosseini and Armacost, 1994).
4. Mathematical modelling techniques are more suitable when individual weights need to be assigned to observations (Erenguc and Koehler, 1990; Lam and Moy, 1997).
5. Statistical techniques are more sensitive to outliers than mathematical techniques (Glorfeld and Olson, 1982).
6. When the numbers of observations in the groups are not balanced, mathematical modelling gives better results than statistical techniques (Markowski, 1994).

All these remarks suggest that mathematical modelling will outperform statistical techniques when financial data is used for prediction.

The multi-criteria decision making (MCDM) procedure begins with the decision maker forming a set of alternatives, A = (a1, a2, a3, … ak) (companies). In the second stage, these alternatives are analysed with respect to several criteria, g = (g1, g2, g3, … gn) (financial ratios, operational ratios, etc.), in order to obtain the optimum solution.
The optimum solution is a solution that is satisfactory and not dominated by any other solution. The general logic of the model is a pairwise comparison of alternatives:

If gji > gki, then aj > ak (alternative aj is preferred to ak)
If gji = gki, then aj ~ ak (alternative aj is indifferent to ak)

Different MCDM models choose the best alternative, classify the alternatives into groups, or rank the alternatives according to their performance. Some MCDM techniques used in finance are (Zopounidis and Doumpos, 2002):

1. Analytic Hierarchy Process - AHP (Srinivasan and Ruparel, 1990)
2. Outranking relations: ELECTRE (Bergeron et al., 1996; Andenmatten, 1995)
3. Preferential Classification: UTA, UTADIS, MHDIS (Zopounidis, 1995; Zopounidis and Doumpos, 1999; Doumpos and Zopounidis, 1999; Doumpos et al., 2002)
4. Rough Set Theory (Slowinski and Zopounidis, 1995).

Mathematical programming techniques are new in the field of manipulation forecasting. Doumpos and Zopounidis (2002), who had previously worked on mathematical programming, applied the UTADIS method to forecast financial manipulation with high accuracy. UTADIS, which is mainly a Multi Criteria Decision Making (MCDM) method, aims to classify the observations into different groups. The general form of MCDM is given as:

$$\max/\min\ [\,g_1(x),\ g_2(x),\ g_3(x),\ \ldots,\ g_n(x)\,] \quad \text{s.t.}\ x \in B$$

where x stands for the vector of decision variables, g for the objective functions and B for the set of feasible solutions. In practice it is not easy, and often not possible, to optimize all the objective functions at once. The aim is therefore to obtain a compromise solution that cannot be dominated by other solutions.

In more detail, in UTADIS (UTilities Additives DIScriminantes; Jacquet-Lagreze, 1995; Doumpos and Zopounidis, 1997, 2002) the decision maker determines the alternatives without prioritizing them. Instead, the groups formed by these alternatives are ordered (C1 > C2 > C3 > … Cq).
The model can only solve this classification problem if the conditions given below are satisfied:

$$U(g) = \sum_{i=1}^{m} u_i(g_i) \in [0,1]$$
$$\text{s.t.}\quad U(a_j) \ge t_1 \quad \forall a_j \in C_1$$
$$t_1 > U(a_j) \ge t_2 \quad \forall a_j \in C_2$$
$$\vdots$$
$$U(a_j) < t_{q-1} \quad \forall a_j \in C_q$$

t: upper and lower bounds

4. Aim of the Study

The primary goal of this study is to introduce a new mathematical programming algorithm for detecting earnings management practices, which is expected to perform better than the other techniques used in this area. In addition, the performance of the developed algorithm is compared with that of a statistical technique, and the relative efficiency of mathematical modelling and the statistical technique is discussed. To this end, the financial data of the 126 ISE firms is applied to a statistical model and a mathematical model in order to compare their performance.

4.1. Data

Financial statement data are obtained from the database maintained by the Istanbul Stock Exchange. The sample consists of 126 Turkish manufacturing companies listed during the period from 1992 through 2002 (see footnote 2). Manipulators were identified from the weekly bulletins of the Istanbul Stock Exchange (ISE) and the Capital Markets Board of Turkey (CMBT) issued from 1992 through 2002, in cases where companies had to restate their earnings to comply with the CMBT’s accounting standards at the request of the Board’s internal investigation. The CMBT investigated these 126 companies’ financial statements for the years 1992-2002 and detected earnings management practices in 168 observations and no signs of earnings management practices in 1,040 observations.
To identify the CMBT investigations in which manipulation of financial information was found and those in which it was not, the ISE’s daily bulletins for the period 01.01.1992-31.07.2004 and the CMBT’s weekly bulletins for the period 01.01.1996-31.07.2004 were searched using a set of key words (financial statements, balance sheet, income statement, profit, loss, income, cost, audit report, capitalization, and restatement).

Based on the information gathered from these bulletins, the following were considered to be companies that manipulated financial information: companies that were determined and publicly announced to have manipulated financial information as a result of the CMBT’s investigations; companies that received a qualified audit opinion on their publicly available financial statements that changed the values in those statements; and companies that changed the values in their financial statements after the balance sheet date. In addition, companies that changed the information in the financial statements prepared for registration with the CMBT during the CMBT’s investigations were also considered to be companies that manipulated financial information.

2 In accordance with the International Accounting Standard No. 29 “Financial Reporting in Hyperinflationary Economies” ("IAS 29"), ISE listed companies adjusted their financial statements for inflation effects in 2003. For this reason, we have only included the years 1992 through 2002, to allow a sound comparison.

The model is formed on the basis of the year 1997 and applied to the data of all other years in order to measure its performance. There are 126 firms with eleven years data, each of which is considered to be an element, therefore resulting in a total of 1260 observations.
However, because of deficiencies in the data, 27 observations are left out of the observation set, and the study is carried out with 1233 observations. In the base year 1997, there are 126 firms in total, 21 of which manipulated their financial statements and had to restate them in accordance with the CMBT’s directives. Our research relies on the assumption that the Capital Markets Board of Turkey has (on average) correctly identified firms that intentionally overstated reported earnings.

4.2. Methodology

The methodology carried out in this study is given in Figure 1. As seen in the figure, the statistical technique first separates manipulators from nonmanipulators and then clusters the manipulators into four groups: very significant danger group, serious risk of earnings group, grey zone group, and no evidence group. Mathematical modelling follows a step-by-step procedure, determining the members of each group one at a time. The first step is a mixed integer programming (MIP) approach, which aims to determine group membership with high performance. In the subsequent stages, a linear programming (LP) approach tries to minimize the distance between the observations, to form clusters, and to minimize the misclassification distance.

Figure 1 – Methodology. The flowchart starts with data gathering (10 financial data items of 126 firms over 10 years) and then shows two tracks. Mathematical programming track: PART I, formulation of the model with the data of year 1997; PART II, STEP I, classification of nonmanipulator candidates and the very significant danger group by the MIP approach; PART II, STEP II, determination of the serious risk of earnings group by the LP approach; PART II, STEP III, determination of the grey zone and no evidence groups by the LP approach. Statistical track: formulation of the model with the data of year 1997; classification of manipulators and nonmanipulators by PROBIT; clustering of manipulators as very significant danger, serious risk of earnings, grey zone and no evidence.

4.3.
Variable Measurement

To examine the motivations of firms that manage earnings, we require measures of the various constructs described in this section. Although the earnings management literature offers no formal theoretical guidance on the selection of variables, a review of CMBT practices and of empirical research, especially Beneish’s studies (1997, 1999), led us to identify 10 financial ratios and indexes. The functions and calculation methods of the 10 independent variables determined for our empirical study are explained below.³

³ The data set and the explanations related to the 10 independent variables determined for our study are taken from the studies of Beneish (1997, 1999), Küçüksözen (2005) and Küçüksözen and Küçükkocaoğlu (2005).

Inventory Index (DINV)

$$\mathrm{DINV} = \frac{\text{Inventories}_t / \text{Sales}_t}{\text{Inventories}_{t-1} / \text{Sales}_{t-1}}$$

The DINV is the ratio of inventories to sales in year t relative to the corresponding ratio in year t-1. According to Beneish (1997), firms generally use different inventory methods (LIFO, FIFO, average costing) in order to manipulate profits.

Financial Expenses Index (FEI)

$$\mathrm{FEI} = \frac{\text{Financial Expenses}_t / \text{Sales}_t}{\text{Financial Expenses}_{t-1} / \text{Sales}_{t-1}}$$

The FEI is the ratio of financial expenses to sales in year t relative to the corresponding ratio in year t-1.
Most of the financial manipulation cases detected by the CMBT in Turkey involve the manipulation of financial expenses: instead of recording financing expenses as current-period expenses on the income statement, firms allocate them to accounts receivable, inventories, next year’s expenses, associates, property, plant and equipment, intangible assets, and/or continuing investments. In this way, a company’s managers can reach the results they want: they increase profit by capitalizing a significant portion of financing expenses, or they decrease profit by recording financing expenses as current-period expenses. Because tax law is flexible on whether financing expenses are expensed in the current period or capitalized, such manipulation of financial information is made easier. Within this framework, it is assumed that there is a correlation between this index and the manipulation of financial information.

Days’ Sales in Receivables Index (DSRI)

$$\mathrm{DSRI} = \frac{\text{Receivables}_t / \text{Sales}_t}{\text{Receivables}_{t-1} / \text{Sales}_{t-1}}$$

The DSRI shows the change in receivables relative to sales between year t-1 and year t. As long as there is no extreme change in the company’s credit sales policy, this index is expected to have a linear structure. A significant increase in this index may stem not only from recording consignment sales as trade receivables and sales in order to increase the company’s income and profit, but also from creating trade receivables out of the current accounts of group companies. These two practices are considered indicators of the manipulation of financial information.
According to Beneish (1997), a large increase in days’ sales in receivables could be the result of a change in credit policy to spur sales in the face of increased competition, but disproportionate increases in receivables relative to sales could also suggest revenue inflation.

Gross Margin Index (GMI)

$$\mathrm{GMI} = \frac{(\text{Sales}_{t-1} - \text{Cost of Goods Sold}_{t-1}) / \text{Sales}_{t-1}}{(\text{Sales}_t - \text{Cost of Goods Sold}_t) / \text{Sales}_t}$$

The GMI is the ratio of the gross margin in year t-1 to the gross margin in year t. When the GMI is greater than 1, gross margins have deteriorated. Lev and Thiagarajan (1993) suggested that deterioration of gross margin is a negative signal about a company’s prospects. So, if companies with poorer prospects are more likely to engage in earnings manipulation, we expect a positive relationship between the GMI and the probability of earnings manipulation.

Asset Quality Index (AQI)

$$\mathrm{AQI} = \frac{1 - (\text{Current Assets}_t + \mathrm{PPE}_t) / \text{Total Assets}_t}{1 - (\text{Current Assets}_{t-1} + \mathrm{PPE}_{t-1}) / \text{Total Assets}_{t-1}}$$

The Asset Quality Index shows the change, compared with the previous year, in the share of total assets made up of noncurrent assets other than property, plant and equipment. If the AQI is greater than 1, the company has potentially increased its involvement in cost deferral. An increase in asset realization risk indicates an increased propensity to capitalize, and thus defer, costs (Beneish, 1997). Therefore, we expect a positive correlation between the asset quality index and financial information manipulation.

Sales Growth Index (SGI)

$$\mathrm{SGI} = \frac{\text{Sales}_t}{\text{Sales}_{t-1}}$$

Sales growth does not necessarily prove the manipulation of financial information. According to professionals, however, growing companies that take sales growth into account are more inclined to manipulate financial information than other companies, because their debt/equity structure and their need for resources put pressure on managers to increase sales.
If the price of these companies’ common stock falls as their sales growth slows down, managers come under even more pressure to manipulate financial information.

Depreciation Index (DEPI)

$$\mathrm{DEPI} = \frac{\text{Depreciation}_{t-1} / (\text{Depreciation}_{t-1} + \text{PP\&E}_{t-1})}{\text{Depreciation}_t / (\text{Depreciation}_t + \text{PP\&E}_t)}$$

The DEPI is the ratio of the rate of depreciation in year t-1 to the corresponding rate in year t. A DEPI greater than 1 indicates that the rate at which assets are being depreciated has slowed, raising the possibility that the company has revised upward the estimates of assets’ useful lives or adopted a new method that is income increasing (Beneish, 1997). In this study, depreciation expenses were not calculated directly from balance sheet and income statement data. Instead, the depreciation expense of a period is determined as the difference between the accumulated depreciation of the current period and the accumulated depreciation of the previous period. This amount may differ from the current period’s actual depreciation expense. In this context, a change in depreciable assets during the current period alters the amount of accumulated depreciation without affecting depreciation expense very much. Also, as mentioned below, this approach is considered appropriate for calculating depreciation expense because these companies belong to the manufacturing sector and their depreciable assets do not change greatly. As mentioned above, a ratio greater than 1 indicates that the company has decreased its depreciation expenses in order to declare a high profit, either by lengthening the expected useful life of property, plant and equipment or by changing the depreciation method in a way that reduces expenses.
On the other hand, this index is not expected to change very much, given that the companies in our study are manufacturing companies in the real sector. In the manufacturing industry, the depreciable assets of these companies are not expected to increase or decrease very much through purchases and sales. Taking this into account, a significant year-on-year increase in this index is accepted as an indicator of financial information manipulation. For this reason, our model assumes a positive correlation between the depreciation index and financial information manipulation.

Sales, General and Administrative Expenses Index (SGAI)

$$\mathrm{SGAI} = \frac{(\text{Mkt. Sales Expenses}_t + \text{Gen. Adm. Expenses}_t) / \text{Gross Sales}_t}{(\text{Mkt. Sales Expenses}_{t-1} + \text{Gen. Adm. Expenses}_{t-1}) / \text{Gross Sales}_{t-1}}$$

The SGAI is the ratio of sales, general and administrative expenses to sales in year t relative to the corresponding measure in year t-1. The relationship between marketing, sales, distribution and general administrative expenses and sales is expected to remain stable over long periods. These expenses change with the main activities of the company; in other words, they are variable expenses that follow changes in sales. In this context, a significant change in this variable, in other words a significant decrease in the proportional relationship between sales and these expenses that is not accompanied by a significant increase in efficiency, suggests that sales have been manipulated or expenses understated. It is therefore assumed that there is a positive correlation between the SGAI and financial information manipulation.
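The year-over-year indexes defined in this section share one pattern: a ratio measured in year t is divided by the same ratio in year t-1 (the GMI and the DEPI put year t-1 in the numerator instead). A minimal Python sketch of that pattern follows. The function names, the dictionary field names and the sample figures are hypothetical illustrations, not the data or code used in the study.

```python
def ratio_index(num_t, den_t, num_prev, den_prev):
    """Return (num_t / den_t) / (num_prev / den_prev)."""
    return (num_t / den_t) / (num_prev / den_prev)


def dsri(cur, prev):
    # Days' Sales in Receivables Index: receivables to sales, year t over t-1.
    return ratio_index(cur["receivables"], cur["sales"],
                       prev["receivables"], prev["sales"])


def dinv(cur, prev):
    # Inventory Index: inventories to sales, year t over year t-1.
    return ratio_index(cur["inventories"], cur["sales"],
                       prev["inventories"], prev["sales"])


def gmi(cur, prev):
    # Gross Margin Index: gross margin of year t-1 over gross margin of year t.
    margin_prev = (prev["sales"] - prev["cogs"]) / prev["sales"]
    margin_cur = (cur["sales"] - cur["cogs"]) / cur["sales"]
    return margin_prev / margin_cur


def sgi(cur, prev):
    # Sales Growth Index: sales of year t over sales of year t-1.
    return cur["sales"] / prev["sales"]


# Made-up statement figures for one firm in two consecutive years.
prev_year = {"sales": 1000.0, "cogs": 600.0,
             "receivables": 100.0, "inventories": 200.0}
cur_year = {"sales": 1200.0, "cogs": 780.0,
            "receivables": 180.0, "inventories": 240.0}

for name, func in [("DSRI", dsri), ("DINV", dinv), ("GMI", gmi), ("SGI", sgi)]:
    print(f"{name}: {func(cur_year, prev_year):.3f}")
```

With these made-up figures, receivables grow faster than sales, so the DSRI comes out at about 1.5 while the SGI is 1.2, and the gross margin falls from 40% to 35%, so the GMI is above 1.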
Leverage Index (LVGI)

$$\mathrm{LVGI} = \frac{(\mathrm{LTD}_t + \text{Current Liabilities}_t) / \text{Total Assets}_t}{(\mathrm{LTD}_{t-1} + \text{Current Liabilities}_{t-1}) / \text{Total Assets}_{t-1}}$$

The LVGI is the ratio of total debt to total assets in year t relative to the corresponding ratio in year t-1. An LVGI greater than 1 indicates an increase in leverage. This variable was included to capture incentives in debt covenants for earnings manipulation.

Total Accruals to Total Assets Index (TATA)

$$\mathrm{TATA} = \frac{\Delta\text{Current Assets} - \Delta\text{Cash and Marketable Securities} - (\Delta\text{Short Term Debt} - \Delta\text{Current Portion of the Long Term Debt} - \Delta\text{Deferred Taxes and some other Legal Liabilities}) - \text{Depreciation Expenses}_t}{\text{Total Assets}_t}$$

The TATA can be calculated as above. With the total accruals to total assets index, we try to capture the change in debt-receivable and revenue-expense items that arises under the accrual basis and from the discretion of the company’s management. This variable is included in the model in order to detect manipulation of financial information that works through an increase in revenue or a decrease in expense, or vice versa, within the framework of the accrual basis. In this context, if this variable, in other words non-cash working capital, increases or decreases dramatically, it is assumed that manipulation of financial information has taken place.

4.4. Mathematical Model Applications

As mentioned above, the algorithm used to determine the manipulators is a three-step procedure in which three mathematical models are applied once the variables (financial ratios) to be used in the models have been determined. The first model is a MIP approach; it differs from the subsequent stages in that its objective function contains integer variables. The second and third stages are LP approaches. While the MIP approach tries to maximize both the number of correct classifications and the internal deviation minus the external deviation, the LP approach tries to maximize the internal deviation minus the external deviation.
The model can be formulated as:

STEP I – Mixed Integer Programming (detection of very significant danger group)

$$\max \sum_{i=1}^{m} \left[ t\left(\frac{\mathrm{bin}_i^{+}}{m+n} + d(i)\right) - q\left(\frac{\mathrm{bin}_i^{-}}{m+n} + e(i)\right) \right]$$

$$\sum_{k=1}^{n}\sum_{j=1}^{p} nm(k,j)\,cof(j) + d(k) - e(k) \le c - \varepsilon \tag{1}$$

$$\sum_{i=1}^{m}\sum_{j=1}^{p} m(i,j)\,cof(j) - d(i) - e(i) \ge c + \varepsilon \tag{2}$$

$$d(k)\,M \ge \mathrm{bin}_k^{+}, \qquad e(k) \le M\,\mathrm{bin}_k^{-} \tag{3}$$

$$d(i)\,M \ge \mathrm{bin}_i^{+}, \qquad e(i) \le M\,\mathrm{bin}_i^{-} \tag{4}$$

$$\mathrm{bin}_i^{+} + \mathrm{bin}_i^{-} \le 1, \qquad \mathrm{bin}_k^{+} + \mathrm{bin}_k^{-} \le 1 \tag{5}$$

m: number of manipulated cases
n: number of nonmanipulated cases
i: manipulated case
k: nonmanipulated case
c: cut-off point
j: variable number
p: number of coefficients
t, q: internal and external deviation weights
cof(j): coefficient of the jth variable
d(i): internal deviation of manipulated case
e(i): external deviation of manipulated case
d(k): internal deviation of nonmanipulated case
e(k): external deviation of nonmanipulated case

STEP II – Linear Programming (detection of serious risk group)

$$\min \sum_{k=1}^{n} d(k) + e(k)$$

$$\sum_{k=1}^{n}\sum_{j=1}^{p} nm(k,j)\,cof(j) - d_2(k) + e_2(k) = c_2$$

$$d_2(k)\,M \ge \mathrm{bin}_k^{+}, \qquad e_2(k) \le M\,\mathrm{bin}_k^{-}$$

$$\mathrm{bin}_k^{+} + \mathrm{bin}_k^{-} \le 1$$

STEP III – Linear Programming (detection of grey zone and no evidence group)

$$\min \sum_{k=1}^{s} d(k) + e(k)$$

$$\sum_{k=1}^{s}\sum_{j=1}^{p} nm(k,j)\,cof(j) - d_2(k) + e_2(k) = c_2$$

$$d_2(k)\,M \ge \mathrm{bin}_k^{+}, \qquad e_2(k) \le M\,\mathrm{bin}_k^{-}$$

$$\mathrm{bin}_k^{+} + \mathrm{bin}_k^{-} \le 1$$

s: number of members of the grey zone and no evidence groups

As can be seen from the formulation above, the first step, which classifies the very significant danger group, tries to maximize both the number of correctly classified observations and the amount of internal deviation d minus external deviation e. Maximizing the difference between the internal and external deviations maximizes the internal deviation and minimizes the external deviation. By using the weights (t and q), the model can prioritize correct classification and decrease the number of wrongly classified observations. These weights act as type I and type II indicators.
Increasing t (the weight of correctly classified observations and internal deviation) decreases type I error, whereas increasing q decreases type II error. This, in turn, gives the decision maker the chance to formulate the model according to the priorities of the problem. Since the aim of this study is to determine the firms that are manipulators, type I error is more important for this model. Therefore, t is set to 3 and q to 1 for this calculation. In addition, using ε (a very small number) avoids placing observations exactly on the cut-off point, which is a typical problem very often encountered in classification. cof(j) is a free variable, which means that there is no sign restriction on the coefficients of the variables, since some variables may affect the objective value positively while others affect it negatively. For some models, restricting the coefficients by adding the constraint $\sum_{j=1}^{p} cof(j) = 1$ can be useful in order to avoid unbounded coefficients, which yield unbounded objective values. In this study the model was also formulated with this constraint; however, the results show that restricting the coefficients decreases the efficiency of the model.

Step II minimizes both types of deviation so that the observations form clusters of optimally adjacent observations. The constraint $\mathrm{bin}_k^{+} + \mathrm{bin}_k^{-} \le 1$ is also added to the problem in order to rule out cases in which both the internal and the external deviation are greater than zero. As the result of step II, the second cut-off point (c1) separates the serious risk of earnings zone from the others. Like the second step, step III yields the third cut-off point (c2), which determines the grey zone as well as the no evidence zone. The graphical representations of the step I, step II and step III procedures are given in Figure 2 and Figure 3 respectively.
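To make the roles of t, q and ε concrete, the sketch below evaluates a Step I style objective for a fixed set of discriminant scores and a fixed cut-off; it does not solve the MIP. It rests on several assumptions that go beyond the text: d is taken to be the distance by which a case lies on the correct side of its bound (c + ε for manipulators, c − ε for nonmanipulators), e the distance by which it lies on the wrong side, and the objective is summed over all cases. The function names and sample scores are hypothetical.

```python
def deviations(score, is_manipulator, c, eps):
    """Return (bin_plus, bin_minus, d, e) for one case.

    Assumed reading: d is the internal deviation (distance on the correct
    side of the bound), e the external deviation (distance on the wrong side).
    """
    if is_manipulator:
        gap = score - (c + eps)   # manipulators should score at least c + eps
    else:
        gap = (c - eps) - score   # nonmanipulators should score at most c - eps
    if gap > 0:
        return 1, 0, gap, 0.0     # correctly classified
    if gap < 0:
        return 0, 1, 0.0, -gap    # misclassified
    return 0, 0, 0.0, 0.0         # exactly on the bound: neither indicator set


def step1_objective(scores, labels, c, eps, t=3.0, q=1.0):
    """Weighted count of correct cases plus internal deviation, minus the
    weighted count of misclassified cases plus external deviation."""
    total = len(scores)           # plays the role of m + n
    value = 0.0
    for score, is_manip in zip(scores, labels):
        bin_plus, bin_minus, d, e = deviations(score, is_manip, c, eps)
        value += t * (bin_plus / total + d) - q * (bin_minus / total + e)
    return value


# Hypothetical scores: two manipulators followed by two nonmanipulators.
scores = [20.0, 14.0, 10.0, 17.0]
labels = [True, True, False, False]

print(step1_objective(scores, labels, c=15.0, eps=0.5, t=3.0, q=1.0))
print(step1_objective(scores, labels, c=15.0, eps=0.5, t=1.0, q=1.0))
```

Raising t relative to q rewards correctly classified cases and their internal deviations more heavily than it penalizes misclassified ones, which is the lever described in the text. In the actual model the scores and the cut-off are decision variables chosen by the solver.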
As shown in Figure 2, only the observations determined to be manipulators are obtained in this classification. Similarly, the observations that fall in the serious risk of earnings zone, the grey zone or the no evidence zone are passed to the model so that they are placed in the right groups (Figure 3).

Figure 2. Classification of Very Significant Danger Cases (Step I)

Figure 3. Determination of Very Serious Risk, Grey and No Evidence Zone (Step II & III)

4.5. Application of Model

As indicated before, the first part of the study forms a model by using 1997 data. In the second step, the formulation deduced is applied to the years 1992 through 2002 in order to evaluate the performance of the model. The first and the second steps are both linear models. The weights of the variables in Step I are listed in Table 1.

Table 1 – Coefficients (weights) of independent variables (financial ratios/indexes) in Step I

| Independent Variable (Financial Ratios) | STEP I Coefficients |
| --- | --- |
| Days’ Sales in Receivables Index (DSRI) | 16,4952 |
| Gross Margin Index (GMI) | -13,1584 |
| Total Accruals to Total Assets (TATA) | 8,3547 |
| Days to Inventory Index (DINV) | 8,1473 |
| Leverage Index (LVGI) | -2,5999 |
| Depreciation Index (DEPI) | 1,8112 |
| Sales, General and Administrative Expenses Index (SGAI) | 1,6927 |
| Sales Growth Index (SGI) | -1,559 |
| Asset Quality Index (AQI) | 1,2698 |
| Financial Expenses Index (FEI) | 0,6528 |

As seen in Table 1, the Gross Margin Index, the Sales Growth Index and the Leverage Index are inversely proportional to the score of the observation. This means that the smaller the firm’s gross margin index, the greater the probability of manipulation. The same holds for the Sales Growth and Leverage Indexes. Another finding deduced from the model is that the first variable, the Days’ Sales in Receivables Index, has the greatest effect on whether a firm is classified as a manipulator or a nonmanipulator.
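Read as a linear discriminant, the Step I weights in Table 1 give each firm-year a score, the weighted sum of its ten indexes, which is then compared with the cut-off value the paper reports for Step I (15,7112). The sketch below illustrates that reading. The dictionary layout, the function names and the sample index values are hypothetical, decimal commas are written as decimal points, and the strict "greater than" comparison is an assumption.

```python
# Step I coefficients from Table 1 (decimal commas written as points).
STEP1_WEIGHTS = {
    "DSRI": 16.4952, "GMI": -13.1584, "TATA": 8.3547, "DINV": 8.1473,
    "LVGI": -2.5999, "DEPI": 1.8112, "SGAI": 1.6927, "SGI": -1.559,
    "AQI": 1.2698, "FEI": 0.6528,
}
CUT_OFF = 15.7112  # Step I cut-off value reported in the paper


def discriminant_score(indexes):
    """Weighted sum of the ten indexes of one firm-year."""
    return sum(weight * indexes[name] for name, weight in STEP1_WEIGHTS.items())


def in_very_significant_danger_group(indexes):
    """Assumed rule: a score above the cut-off flags the firm-year."""
    return discriminant_score(indexes) > CUT_OFF


# Hypothetical firm-year with no change from the previous year:
# every index equals 1 and total accruals are zero.
steady = {name: 1.0 for name in STEP1_WEIGHTS}
steady["TATA"] = 0.0

# Same firm-year, but receivables grew 50% faster than sales.
inflated = dict(steady, DSRI=1.5)

for label, firm in [("steady", steady), ("inflated", inflated)]:
    print(label, round(discriminant_score(firm), 4),
          in_very_significant_danger_group(firm))
```

In this illustration the steady firm-year stays below the cut-off, while the jump in the DSRI alone is enough to push the second one above it, which reflects the dominant weight of that index in Table 1.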
The second most heavily weighted variable is the Gross Margin Index, with a coefficient of -13,1584 (recall that this variable is inversely proportional to the discrimination score of the firm). The least influential variable, with a coefficient of 0,6528, is the Financial Expenses Index. As mentioned above, most of the financial manipulation cases detected by the CMBT in Turkey involve the manipulation of financial expenses; for this reason, we added this variable to the model. However, the model shows that it is not important for the discrimination, and its weight is therefore minimal.

The model’s cut-off value is found to be 15,7112. Firms with a higher score are classified as manipulators (very significant danger group). Firms below this point still have some probability of being manipulators. In order to group these firms, another model has to be applied: the aim of the second step is to classify the remaining observations. Once the second and third step solutions are obtained, the serious risk of earnings, grey zone and no evidence groups are determined. The results obtained by applying all three steps are given in Table 2. Since the aim of the study is to determine whether a firm is a manipulator or not, type II error is given less weight, and classification performance is calculated mainly according to whether the model identifies a manipulator firm as a manipulator.

Table 2 – Classification of the firms as the result of the analysis

| Year | Number of actual manipulators | Number of firms determined as manipulators at the end of the analysis | Performance (%) |
| --- | --- | --- | --- |
| 1993 | 13 | 12 | 92,3 |
| 1994 | 18 | 12 | 66,7 |
| 1995 | 15 | 11 | 73,3 |
| 1996 | 19 | 18 | 94,7 |
| 1997 | 21 | 21 | 100,0 |
| 1998 | 20 | 16 | 80,0 |
| 1999 | 15 | 12 | 80,0 |
| 2000 | 16 | 12 | 75,0 |
| 2001 | 23 | 17 | 73,9 |
| 2002 | 11 | 8 | 72,7 |
| Total | 171 | 139 | 81,3 |

Another point to be discussed at this stage is the performance of the model with respect to type I and type II errors.
Table 3 shows the performance of the model considering both types of error. The results are given in the fourth column: (a) is the detection rate of actual manipulators and (b) is the detection rate of actual nonmanipulators. Both can be adjusted by setting different t and q values in the Step I formulation presented in Section 4.4. Increasing t increases (a), and increasing q increases (b). Several runs carried out during this study show, however, that (a) increases with t only until t reaches 3; beyond this value, (a) does not change even if t is increased further.

Table 3 – Type I and Type II errors of the model

| | Calculated M | Calculated NM | % |
| --- | --- | --- | --- |
| Actual Manipulators | 139 | 32 (type I error) | 0,8129 (a) |
| Actual Nonmanipulators | 375 (type II error) | 687 | 0,6469 (b) |
| Total % | | | 0,66991 |

At this point, the comparative efficiency of the models remains to be discussed. Another study, carried out by Küçüksözen and Küçükkocaoğlu (2005), used nearly the same data in order to detect falsified financial statements by using statistical techniques. In that study, the financial data of 126 Turkish manufacturing companies listed during the period from 1992 through 2002 were processed by using a revised version of Beneish’s (1999) statistical technique. We have compared the performance of our model with Küçüksözen and Küçükkocaoğlu’s (2005) application of the Beneish Model (1999) and listed the results in Table 4.

Table 4 – Comparative efficiency of the proposed algorithm and Küçüksözen and Küçükkocaoğlu’s (2005) application of the Beneish Model (1999) (percentage of fair prediction)

| Model | Manipulator | Non-Manipulator |
| --- | --- | --- |
| Küçüksözen and Küçükkocaoğlu’s (2005) application of the Beneish Model (1999) | 38% | 61% |
| Cutting Plane Algorithm (CPA) | 81% | 65% |

As seen in Table 4, the cutting plane algorithm is much more efficient at detecting manipulator firms than the Beneish model: 38% for the Beneish model and 81% for the cutting plane algorithm.
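The rates in Table 3 follow directly from its four cell counts, and the manipulator and nonmanipulator rates are the 81% and 65% quoted for the cutting plane algorithm in Table 4. As a check, the short Python sketch below recomputes them; the variable names are illustrative, and the counts are those of Table 3.

```python
# Cell counts from Table 3.
manipulators_detected = 139      # actual manipulators, calculated M
manipulators_missed = 32         # actual manipulators, calculated NM
nonmanipulators_flagged = 375    # actual nonmanipulators, calculated M
nonmanipulators_cleared = 687    # actual nonmanipulators, calculated NM

actual_manipulators = manipulators_detected + manipulators_missed
actual_nonmanipulators = nonmanipulators_flagged + nonmanipulators_cleared
all_observations = actual_manipulators + actual_nonmanipulators

# (a): share of actual manipulators that the model detects.
rate_a = manipulators_detected / actual_manipulators
# (b): share of actual nonmanipulators that the model clears.
rate_b = nonmanipulators_cleared / actual_nonmanipulators
# Overall share of correctly classified observations.
overall = (manipulators_detected + nonmanipulators_cleared) / all_observations

print(f"(a) = {rate_a:.4f}, (b) = {rate_b:.4f}, total = {overall:.5f}")
```

The counts add up to the 1233 observations used in the study, of which 171 are actual manipulators.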
Although the performance gap between the models is large for manipulator firms, it is small for nonmanipulator firms: 61% for the Beneish model and 65% for the cutting plane algorithm. As a result, the Cutting Plane Algorithm can be said to perform better than the Beneish Model.

Conclusion

Turkey, one of the most important and fastest growing economies in the world in recent years, occupies a central position as the financial and business hub of the Middle East region. Besides being a preferred destination for the direct investment of multinational companies, it is also becoming one of the leading financial markets, especially for Arab capital investment funds that have left European countries and the USA. In order to maintain and enhance the growing reputation of its financial market, it is important that Turkey continues to adopt measures that maintain the confidence of international investors. This would include, among other things, maintaining a high standard of financial reporting.

The main contribution of this paper to the literature is the development and application of a conceptually new method intended to estimate the approximate amount of earnings management from publicly available information. The methodology is based on a cutting plane formulation using mathematical programming; the model addresses the major drawbacks of the existing models and advances the estimation methodologies of the earnings management literature to a new level.

The model deduced from the data of 1997 is used to detect falsified financial statements during the years 1992-2002. As the initial part of the analysis, 1997 data are used to formulate the model. The results obtained by applying the model are then compared with the actual data in order to calculate the performance of the model introduced. During the analysis, 10 ratios and indexes are used for detecting falsified financial statements.
One of the 10, the “financial expenses index”, is not widely used in the earnings manipulation literature but is included in the model because it reflects the most common type of manipulation in Turkey. The model also yields some conclusions about the effect of the variables on a firm’s earnings management potential; for example, as the gross margin index, sales growth index and leverage index increase, the probability of manipulation decreases.

The results of the model show high performance when compared with other statistical methods used in the earnings management literature. The model’s performance in forecasting manipulator firms is 81%, which is higher than its performance for nonmanipulator firms, 65%. The model introduced also has the flexibility to adjust the preference for detecting manipulators over nonmanipulators or vice versa. Compared with Küçüksözen and Küçükkocaoğlu’s (2005) study, which uses the probit technique on the same data, the three stage algorithm introduced in this paper has a detection ability that is 43 percentage points higher for manipulator cases and 4 percentage points higher for nonmanipulator cases.

However, given the flexibility of international accounting standards and the motivations of managers for misrepresentation, such standards will keep providing managers with opportunities to disclose false financial statements. The ability to identify earnings management practices from the accounting ratios and/or indexes of a particular firm will remain the privilege of the auditors and regulators of tomorrow (Babalyan, 2004).

References

Andenmatten, A., (1995), Evaluation du risque de défaillance des émetteurs d’obligations: une approche par l’aide multicritère à la décision, Presses Polytechniques et Universitaires Romandes: Lausanne.

Ayres, F.L., (1994), “Perceptions of earnings quality: What managers need to know”, Management Accounting, Vol:75, No:9, pp: 27-29.

Bell TB, Szykowny S, Willingham J. (1993).
Assessing the likelihood of fraudulent financial reporting: a cascaded logic approach. Working Paper, KPMG Peat Marwick: Montvale, NJ.

Bajgier, S.M., Hill, A.V. (1982), An experimental comparison of statistical and linear programming approaches to the discriminant problem, Decision Sciences 13, pp: 604-618.

Bauer, S., Kasnakoglu, H., (1990), Non-linear Programming Models for Sector and Policy Analysis, Economic Modelling, Vol:7, Is:3, pp: 275-290.

Beneish, M. D., (1997), “Detecting GAAP Violation: Implications for Assessing Earnings Management Among Firms with Extreme Financial Performance”, Journal of Accounting and Public Policy, Vol:16, No:3, pp: 271-309.

Beneish, M. D., (1999), “The Detection of Earnings Manipulation”, Financial Analysts Journal, Vol:55, No:5, pp: 24-36.

Bergeron, M., Martel, J.M., Twarabimenye, P., (1996), The evaluation of corporate loan applications based on the MCDA, Journal of Euro-Asian Management 2 (29), pp: 16-46.

Coakley, J.R., Brown, C.E., (2000), Artificial Neural Networks in Accounting and Finance: Modelling Issues, International Journal of Intelligent Systems in Accounting, Finance and Management 9, pp: 119-144.

Coderre, G.D. (1999), Fraud Detection. Using Data Analysis Techniques to Detect Fraud, Global Audit Publications, Vancouver.

Cox, R., Weirich, T., (2002), “The Stock Market Reaction To Fraudulent Financial Reporting”, Managerial Auditing Journal, Vol:17, No:7, pp: 374-382.

Deakin, E. B., (1976), Distributions of financial ratios: Some empirical evidence, The Accounting Review, Vol:51, No:1, pp: 90-96.

DeAngelo, L.E. (1986), “Accounting Numbers as Market Valuation Substitutes: A Study of Management Buyouts of Public Stockholders”, The Accounting Review, Vol:61, No:3, pp: 400-420, July 1986.

Dechow, P.M., Sloan, R.G., Sweeney, A.P., (1995), “Detecting Earnings Management”, The Accounting Review, Vol:70, No:2, pp: 193-225, April 1995.
Dechow, P., Sloan, R., Sweeney, A., (1996), “Causes and Consequences of Earnings Manipulation: An Analysis of Firms Subject to Enforcement Actions by the SEC,” Contemporary Accounting Research, Vol:13, No:1, pp: 1-36.

Doumpos, M., Zopounidis, C., (1999), A multicriteria discrimination method for the prediction of financial distress: the case of Greece, Multinational Finance Journal 3(2), pp: 71-101.

Doumpos, M., Kosmidou, K., Baourakis, G., Zopounidis, C., (2002), Credit risk assessment using a multicriteria hierarchical discrimination approach: a comparative analysis, European Journal of Operational Research 138(2), pp: 392-412.

Erenguc, S.S., Koehler, G.J., (1990), Survey of mathematical programming models and experimental results for linear discriminant analysis, Managerial and Decision Economics 11, pp: 215-225.

Fanning K, Cogger K.O., Srivastava R. (1995), “Detection of management fraud: A neural network approach”, Proceedings of the 11th Conference on Artificial Intelligence for Applications, ISBN:0-8186-7070-3, pp: 220.

Fanning K, Cogger KO, (1998), “Neural Network Detection of Management Fraud Using Published Financial Data”, Intelligent Systems in Accounting, Finance and Management 7, pp: 21–41.

Freed, N., Glover, F. (1981a), A Linear Programming Approach to the Discriminant Problem, Decision Sciences 12, pp: 68-73.

Freed, N., Glover, F. (1981b), Simple but powerful goal programming models for discriminant problems, European Journal of Operations Research 7, pp: 44-60.

Freed, N., Glover, F. (1986a), Evaluating alternative linear programming models to solve the two group discriminant problem, Decision Sciences 17, pp: 151-162.

Freed, N., Glover, F. (1986b), Resolving certain difficulties and improving the classification power of LP discriminant analysis formulations, Decision Sciences 17, pp: 589-595.
Gehrlein, W.V., (1986), General mathematical programming formulations for the statistical classification problem, Operations Research Letters 5(6), pp: 299-304.

Glorfeld, L.W., Olson, D.L. (1982), Using the l1 metric for robust analysis of the two group discriminant problem, Proceedings of the American Institute of the Decision Sciences 2, San Francisco, CA, pp: 297-298.

Glover, F. (1988), Improved linear and integer programming models for discriminant analysis, Center for Applied Artificial Intelligence, University of Colorado, Boulder, CO.

Glover, F., Keene, S., Duea, B. (1998), A new class of models for the discriminant problem, Decision Sciences 19, pp: 269-280.

Green BP, Choi JH. (1997), Assessing the risk of management fraud through neural network technology. Auditing: A Journal of Practice and Theory 16: No. 1, pp: 14–28.

Hand, D.J., (1981), Discrimination and classification, New York, John Wiley.

Hansen, J.V., McDonald, J.B., Messier, W.F. and Bell, T.B., (1996), A generalized qualitative-response model and the analysis of management fraud, Management Science, Vol. 42, No. 7, pp: 1022-1032.

Healy, P.M., (1985), “The Effect of Bonus Schemes on Accounting Decisions,” Journal of Accounting and Economics, Vol:7, pp: 85-107.

Healy, P.M., Wahlen, J.M., (1999), “A Review of the Earnings Management Literature and Its Implications for Standard Setting”, Accounting Horizons, Vol.13, No.4, pp: 365-383, December 1999.

Hosseini, J.H., Armacost, R.L., (1994), The two-group discriminant problem with equal group mean vectors: An experimental evaluation of six linear/nonlinear programming formulations, European Journal of Operations Research 77, pp: 241-252.

Jacquet-Lagreze, E., (1995), An application of the UTA discriminant model for the evaluation of R&D projects, Kluwer Academic Publishers: Dordrecht, pp: 203-211.
Joachimsthaler, E.A., Stam, A., (1988), Four approaches to the classification problem in discriminant analysis: An experimental study, Decision Sciences 19, pp: 322-333.
Koehler, G.J., (1989), Characterization of unacceptable solutions in LP discriminant analysis, Decision Sciences 20, pp: 239-257.
Küçüksözen, C., (2005), “Finansal Bilgi Manipülasyonu: Nedenleri, Yöntemleri, Amaçları, Teknikleri, Sonuçları Ve İMKB Şirketleri Üzerine Ampirik Bir Çalışma”, SPK Yayınları, No. 183, Temmuz 2005, Ankara.
Küçüksözen, C., Küçükkocaoğlu, K., (2005), “Finansal Bilgi Manipülasyonu: İMKB Şirketleri Üzerine Ampirik Bir Çalışma”, 1st International Accounting Conference On The Way To Convergence, Kasım 2004, İstanbul, Muhasebe Bilim Dünyası (Mödav) Conference Booklet.
Lam, K.F., Moy, J.W., (1997), An experimental comparison of some recently developed linear programming approaches to the discriminant problem, Computers & Operations Research 24, pp: 593-599.
Lin, J.W., Hwang, M., Becker, J.D., (2003), “A Fuzzy Neural Network for Assessing the Risk of Fraudulent Financial Reporting”, Managerial Auditing Journal, Spring.
Lev, B., Thiagarajan, R., (1993), “Fundamental information analysis”, Journal of Accounting Research, Vol. 31, pp: 190-215.
Jones, J., (1991), “Earnings management during import relief investigations”, Journal of Accounting Research, Vol: 29, No: 2, pp: 193-228.
Lawrence, J., Androlia, P., (1992), Three-step method evaluates neural networks for your application, EDN, pp: 93-100.
Luoma, M., Laitinen, E.K., (1991), Survival analysis as a tool for company failure prediction, Omega International Journal of Management Science 19(6), pp: 673-678.
Markowski, C.A., (1994), An adaptive statistical method for the discriminant problem, European Journal of Operational Research 73, pp: 480-486.
Porter, B., Cameron, A., (1987), “Company fraud – what price the auditor?”, Accountant’s Journal, pp: 44-7.
Reagle, D., Salvatore, D., (2000), Forecasting Financial Crisis in Emerging Market Economies, Open Economies Review, Vol: 11, No: 3, pp: 247-259.
Retzlaff-Roberts, D.L., (1996), Relating discriminant analysis and data envelopment analysis to one another, Computers & Operations Research 23(4), pp: 311-322.
Slowinski, R., Zopounidis, C., (1995), Application of the rough set approach to evaluation of bankruptcy risk, International Journal of Intelligent Systems in Accounting, Finance and Management 4, pp: 27-41.
Spathis, C., (2002), “Detecting False Financial Statements Using Published Data: Some Evidence From Greece”, Managerial Auditing Journal, Vol: 17, No: 4, pp: 179-191.
Spathis, C., Doumpos, M., Zopounidis, C., (2002), “Detecting falsified financial statements: a comparative study using multicriteria analysis and multivariate statistical techniques”, The European Accounting Review, Vol: 11, No: 3, pp: 509-535.
Srinivasan, V., Ruparel, B., (1990), CGX: an expert support system for credit granting, European Journal of Operational Research 45, pp: 293-308.
Stam, A., Joachimsthaler, E.A., (1990), A comparison of a robust mixed-integer approach to existing methods for establishing classification rules for the discriminant problem, European Journal of Operational Research 46, pp: 113-122.
Teoh, S.H., Welch, I., Wong, T.J., (1998a), Earnings management and the underperformance of seasoned equity offerings, Journal of Financial Economics 50 (October), pp: 63-99.
Tingyan, X., (1990), A Combined Growth Model for Trend Forecasts, Technological Forecasting and Social Change, Vol: 38, No: 2, pp: 175-186.
Waters, H., (1990), The Recursive Calculation of the Moments of the Profit on a Sickness Insurance Policy, Insurance Mathematics and Economics, Vol: 4, No: 2-3, Special Issue, pp: 101-113.