
advertisement

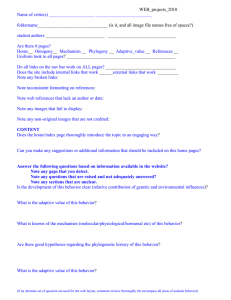

IEEE TRANSACTIONS ON SIGNAL PROCESSING, VOL. 50, NO. 7, JULY 2002 1583

Adaptive Null-Forming Scheme in Digital Hearing Aids

Fa-Long Luo, Senior Member, IEEE, Jun Yang, Senior Member, IEEE, Chaslav Pavlovic, and Arye Nehorai, Fellow, IEEE

Abstract: We propose an effective adaptive null-forming scheme for two nearby microphones in endfire orientation that are used in digital hearing aids and in many other hearing devices. The scheme is based mainly on an adaptive combination of two fixed polar patterns, arranged so that the null of the combined polar pattern of the system output always points toward the direction of the noise. The adaptive combination of these two fixed polar patterns is accomplished by simply updating an adaptive gain that follows the output of the first polar pattern unit. The value of this gain is updated by minimizing the power of the system output, and related adaptive algorithms to update this gain are also given in this paper. We have implemented the proposed system on a programmable DSP chip and performed various tests. Theoretical analyses and testing results demonstrate the effectiveness of the proposed system and the accuracy of its implementation.

Index Terms: Adaptive signal processing, array signal processing, beamforming, hearing aids, microphones, noise reduction, speech enhancement.

Manuscript received September 18, 2000; revised March 13, 2002. The work of A. Nehorai was supported by the Air Force Office of Scientific Research under Grants F49620-99-1-0067 and F49620-00-1-0083, the National Science Foundation under Grant CCR-0105334, and the Office of Naval Research under Grant N00014-01-1-0681. The associate editor coordinating the review of this paper and approving it for publication was Dr. Brian Sadler.
F.-L. Luo was with the R&D Department, GN ReSound Corporation, Redwood City, CA 94063 USA. He is now with Quicksilver Technology, Inc., San Jose, CA 95119 USA.
J. Yang was with the R&D Department, GN ReSound Corporation, Redwood City, CA 94063 USA. He is now with ForteMedia, Inc., Cupertino, CA 95014 USA.
C. Pavlovic was with the R&D Department, GN ReSound Corporation, Redwood City, CA 94063 USA. He is now with Sound ID, Palo Alto, CA 94303 USA.
A. Nehorai is with the Electrical and Computer Engineering Department, University of Illinois at Chicago, Chicago, IL 60607 USA.
Publisher Item Identifier S 1053-587X(02)05634-9. 1053-587X/02$17.00 © 2002 IEEE

I. INTRODUCTION

Hearing devices with directionality make use of the direction difference between the target signal and the noise. There are two types of directional devices: one with fixed directionality (that is, with a fixed polar pattern) [1]–[4] and the other with adaptive directionality that can track varying or moving noise sources [5]–[8]. Many techniques have been used to achieve directionality in both fixed mode and adaptive mode [3], [7], [9], [10]. However, most of these techniques can be neither immediately employed nor implemented in instruments such as hearing aids because of limits on hardware size, the number and distance of microphones, computational speed, mismatch of microphones, power supply, and other practical factors relating to these hearing instruments [7], [11], [12]. For example, in common hearing aids such as behind-the-ear aids, there can be only two microphones, and the distance between these two microphones is only about 10 mm [13]. The most common technique in use in hearing aids is a directional microphone or a dual-omnimicrophone system with some fixed polar patterns, as shown in Fig. 1.

Fig. 1. Common direction processing system with two omnidirectional microphones in endfire orientation.

The directional system in Fig. 1 can provide different polar patterns by selecting different values of the delay. By way of example, Fig. 2 shows three polar patterns with the value of the delay being 0, an intermediate value of approximately $0.34\,d/c$, and $d/c$, respectively, where $d$ is the distance between the two microphones, and $c$ is the sound speed. The direction directly in front of the hearing-aid wearer is represented as 0°, whereas 180° represents the direction directly behind the wearer. The thick line stands for the gain as a function of the direction of sound arrival, where the gain from any given direction is represented by the distance from the center of the circle. These three polar patterns are called the bidirectional pattern (with nulls at 90° and 270°), the hypercardioid pattern (with nulls at 110° and 250°), and the cardioid pattern (with a null at 180°), respectively. The cardioid system attenuates sound the most from directly behind the wearer, whereas the bidirectional system attenuates sound the most from directly to the left and to the right of the wearer.

Fig. 2. Three typical polar patterns obtained by the system in Fig. 1 using different delay values. From left to right, the patterns are bidirectional, hypercardioid, and cardioid.

In different listening environments, users select one of these three polar patterns using control buttons to achieve the best noise reduction performance for the specific listening environment. However, for time-varying and moving-noise environments, this fixed directional system delivers degraded performance, and therefore a system with adaptive directionality is highly desirable.

For a system with two nearby microphones in endfire orientation, the direct way to achieve adaptive directionality is to adaptively change the delay of the system in Fig. 1 so that its value is equal to the transmission delay of the noise between the two microphones. From a performance and complexity point of view, the key problem in this method is how to estimate the delay value of the noise effectively when noise and the target speech are both present.
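The link between the delay in Fig. 1 and the position of the null can be checked numerically. The following is a minimal sketch, not the authors' code: it assumes a plane-wave model, a sound speed of 343 m/s (not stated in the text), and hypothetical function names `fixed_pattern_gain` and `null_angle_deg`. The fraction 0.342 is simply the negative cosine of the 110° hypercardioid null quoted above.

```python
import numpy as np

C_SOUND = 343.0  # assumed speed of sound in air (m/s); not stated in the text
D_MIC = 0.010    # microphone spacing of about 10 mm, as quoted in the text (m)


def fixed_pattern_gain(theta_deg, delay_s, freq_hz=1000.0, d=D_MIC, c=C_SOUND):
    """Magnitude of 'front minus delayed rear' for a plane wave from theta_deg."""
    theta = np.deg2rad(theta_deg)
    # Relative to the front microphone, the rear signal lags by (d/c)*cos(theta);
    # the delay unit of Fig. 1 adds delay_s on top of that.
    total_lag = delay_s + (d / c) * np.cos(theta)
    return np.abs(1.0 - np.exp(-2j * np.pi * freq_hz * total_lag))


def null_angle_deg(delay_fraction):
    """Null angle in [90, 180] degrees for a delay of delay_fraction * d/c."""
    # The output vanishes when the total lag is zero: cos(theta) = -delay_fraction.
    return float(np.degrees(np.arccos(np.clip(-delay_fraction, -1.0, 1.0))))


if __name__ == "__main__":
    patterns = [("bidirectional", 0.0), ("hypercardioid", 0.342), ("cardioid", 1.0)]
    for name, fraction in patterns:
        angle = null_angle_deg(fraction)
        print(f"{name:14s} delay = {fraction:.3f} d/c -> null near {angle:.1f} deg")
```

Because the pattern is symmetric about the microphone axis, each null at an angle θ between 90° and 180° has a mirror image at 360° − θ, which is why the bidirectional and hypercardioid patterns are listed with two nulls.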
Another problem related to this method is how to implement this delay unit in real time, since this delay is usually a fractional-sample delay. For example, if the sampling rate is 16 000 Hz, one sample interval is 62.5 µs, but the largest transmission delay of the noise between the two microphones is only about 29.1 µs for a microphone spacing of $d = 10$ mm. In the scheme of Fig. 1 with fixed mode, this fractional-sample delay is usually implemented during the A/D stage by processing that is similar to an up-sampling-shift method [13]. In the adaptive mode, the up-sampling-shift method is no longer suitable, and the adaptive implementation of a fractional-sample delay is instead accomplished by adaptively updating the coefficients of a specific filter (ideally, an all-pass filter with linear phase) [14]. These problems prevent this idea from being of practical value in a hardware implementation.

In view of these problems, this paper proposes a more practical and effective adaptive directionality system for hearing devices with two nearby microphones in endfire orientation. This adaptive directionality system is based mainly on an adaptive combination of two fixed polar patterns that are arranged to make the null of the combined polar pattern of the system output always point toward the direction of the noise. The null of one of these two fixed polar patterns is at 0° (straight ahead of the subject), and the other's null is at 180°. Both polar patterns are cardioid. The first fixed polar pattern can be implemented by delaying the front microphone signal by $d/c$, where $c$ is the sound speed, and subtracting it from the rear microphone signal. Likewise, the second polar pattern is implemented by delaying the rear microphone signal by $d/c$ and subtracting it from the front microphone signal. The adaptive combination of these two fixed polar patterns is accomplished by adding an adaptive gain following the output of the first polar pattern.
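How a single gain steers the null of the two-cardioid combination just described can be explored with a short numerical sketch. Everything about the notation here is an assumption made for illustration: the gain is called `gain`, the output is taken as the rear-null cardioid plus `gain` times the front-null cardioid (so useful gains lie between −1 and 0), the sound speed is 343 m/s, and the function names are hypothetical. Under these assumptions, the exact null-forming gain depends weakly on frequency, while the small-angle approximation does not.

```python
import numpy as np

C_SOUND = 343.0  # assumed speed of sound (m/s)
D_MIC = 0.010    # assumed microphone spacing of 10 mm (m)


def combined_response(theta_deg, gain, freq_hz, d=D_MIC, c=C_SOUND):
    """Magnitude of x + gain*y for a unit plane wave from theta_deg."""
    theta = np.deg2rad(theta_deg)
    phase = 2.0 * np.pi * freq_hz * d / c
    # x: front minus rear delayed by d/c (cardioid with null at 180 degrees).
    x = 1.0 - np.exp(-1j * phase * (1.0 + np.cos(theta)))
    # y: rear minus front delayed by d/c (cardioid with null at 0 degrees).
    y = np.exp(-1j * phase * np.cos(theta)) - np.exp(-1j * phase)
    return np.abs(x + gain * y)


def gain_for_null_exact(null_deg, freq_hz, d=D_MIC, c=C_SOUND):
    """Gain that places the null exactly at null_deg for one frequency."""
    cos_t = np.cos(np.deg2rad(null_deg))
    k = np.pi * freq_hz * d / c
    return -np.sin(k * (1.0 + cos_t)) / np.sin(k * (1.0 - cos_t))


def gain_for_null_approx(null_deg):
    """Frequency-independent approximation using sin(a) ~ a."""
    cos_t = np.cos(np.deg2rad(null_deg))
    return -(1.0 + cos_t) / (1.0 - cos_t)


if __name__ == "__main__":
    freqs = (500.0, 2000.0, 8000.0)
    print("null(deg)  approx   " + "  ".join(f"{f:>7.0f}Hz" for f in freqs))
    for null_deg in (90, 110, 130, 150, 180):
        exact = "  ".join(f"{gain_for_null_exact(null_deg, f):9.4f}" for f in freqs)
        print(f"{null_deg:9d}  {gain_for_null_approx(null_deg):7.4f}  {exact}")
```

With this convention, the approximate gains for nulls at 110° and 130° come out close to −0.4903 and −0.2174, the magnitudes that appear later in the measured examples.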
Different gain values will provide the combined polar pattern with nulls at different angles. The value of this gain is updated by minimizing the power of the system output. Related adaptive algorithms to update this gain are given in this paper. The hardware implementation of the proposed system has been completed and tested. The test results demonstrate the accuracy and effectiveness of the proposed scheme.

It should be noted that, for systems with two nearby microphones, there are also some very effective existing algorithms, such as the scheme proposed by Vanden Berghe and Wouters [11] and the scheme proposed by Elko and Pong [15]. In comparison with the two-stage scheme in [11], our proposed system and algorithm are very simple because ours requires only an adaptive gain (a one-tap adaptive filter). As a matter of fact, the structure of Fig. 3 in this paper is the same as that of Fig. 2 in [15], and this paper presents some similar discussions concerning the algorithms, implementations, and tests, as in [15], although we did our work independently.

Fig. 3. Schematic of the proposed adaptive null-forming system.

In comparison with [15], the major contributions of this paper can be summarized as follows.
1) The unique mapping relationship between the time delay and the adaptive gain for forming a null is established in our paper.
2) We prove that this unique relationship between the time delay and the adaptive gain is independent of frequency for the related configurations.
3) The entire shape of the polar pattern resulting from changing the adaptive gain is proved to be exactly the same as the entire shape of the polar pattern resulting from changing the time delay.
4) The optimum gain value and related adaptive algorithms are derived for the case where the target signal and noise are both present, and the corresponding system is proved to provide the maximal signal-to-noise ratio (SNR).

The rest of this paper is organized as follows. Section II presents the proposed scheme and the related theoretical analyses. Section III deals with the hardware implementation of the proposed scheme. Section IV gives various test results demonstrating the effectiveness and accuracy of the proposed scheme. Section V gives some conclusions.

II. PROPOSED ADAPTIVE NULL-FORMING SYSTEM

The proposed scheme is shown in Fig. 3, where the received signals at the front microphone and the rear microphone are denoted here by $a(n)$ and $b(n)$, respectively, and
$d/c$: delay unit in two channels;
$z(n)$: output of the system;
$W$: adaptive gain;
$g(n) = W\,y(n)$: output of the adaptive gain processing unit.

From the scheme of Fig. 1, it can be easily seen that the polar pattern of $x(n)$ is cardioid with the null at 180°; likewise, the polar pattern of $y(n)$ is cardioid, but the null is at 0°. The polar pattern of the whole system output $z(n) = x(n) + W\,y(n)$ is a combination of those of $x(n)$ and $y(n)$ and is determined by the gain $W$. The relationship of the null of the system output with the gain $W$ is as follows:

$$W = -\frac{\sin\left[\pi f \frac{d}{c}\left(1+\cos\theta_{\mathrm{null}}\right)\right]}{\sin\left[\pi f \frac{d}{c}\left(1-\cos\theta_{\mathrm{null}}\right)\right]} \tag{1}$$

where $f$ is the frequency of the signal, $\theta_{\mathrm{null}}$ is the angle of the null along the line between the two microphones, $d$ is the distance between the two microphones, and $c$ is the sound speed.

TABLE I
RELATIONSHIP BETWEEN THE NULL AND THE GAIN WITH DIFFERENT FREQUENCIES

To prove this relationship, note that a null of the polar pattern in a given direction means that the output power $|z|^2$ is zero for a source in that direction. Using the front microphone as a reference channel, we have $x$, $y$, and $z$ as

$$\begin{aligned} x &= 1 - e^{-j2\pi f \frac{d}{c}(1+\cos\theta)} \\ y &= e^{-j2\pi f \frac{d}{c}\cos\theta} - e^{-j2\pi f \frac{d}{c}} \\ z &= x + W y \end{aligned} \tag{2}$$

where $\theta$ is the angle of the source along the line between the two microphones. It should be noted that all right-side terms of (2) should be multiplied by a variable, which is the signal received in the front microphone.
However, this factor can be taken as 1 for the sake of simplicity because the front microphone is used as the reference channel. From (2), we can obtain the output power of the proposed system, for a source at angle $\theta$ and frequency $f$, as

$$|z|^2 = \left|1 - e^{-j2\pi f\frac{d}{c}(1+\cos\theta)} + W\left(e^{-j2\pi f\frac{d}{c}\cos\theta} - e^{-j2\pi f\frac{d}{c}}\right)\right|^2 \tag{3}$$

where $W$ is the adaptive gain, $d$ is the distance between the two microphones, and $c$ is the sound speed. Furthermore, using the related trigonometric identities yields

$$|z|^2 = 4\left\{\sin\left[\pi f\tfrac{d}{c}(1+\cos\theta)\right] + W\sin\left[\pi f\tfrac{d}{c}(1-\cos\theta)\right]\right\}^2.$$

Setting this power to zero at the null angle $\theta = \theta_{\mathrm{null}}$ and rearranging the above equation yields (1) and concludes the proof of (1).

Because $\cos\theta = \cos(360^\circ - \theta)$, we will consider only the interval $[0^\circ, 180^\circ]$ in the following, realizing that all related conclusions apply also to the interval $[180^\circ, 360^\circ]$. It can be seen from (1) that, for all frequencies, if $W = 0$, then $\theta_{\mathrm{null}} = 180^\circ$; if $W = -1$, then $\theta_{\mathrm{null}} = 90^\circ$. This result can also be obtained directly from Fig. 3: $W = 0$ means $z(n) = x(n)$, so the polar pattern of $z(n)$ is the same as that of $x(n)$; and $W = -1$ means $z(n) = x(n) - y(n)$, and because $x(n)$ and $y(n)$ are identical when the signal comes from 90°, $z(n)$ is zero in that direction. Except for these two special cases, (1) shows that the relationship between the null and the gain depends on the frequency of the signal. However, with the approximation $\sin\alpha \approx \alpha$ for the frequency range of interest, as used for obtaining the polar pattern of the scheme in Fig. 1, (1) can be approximated as

$$W \approx -\frac{1+\cos\theta_{\mathrm{null}}}{1-\cos\theta_{\mathrm{null}}} \tag{4}$$

This result means that the relationship between the null and the gain will be independent of the frequency. In effect, the difference between (1) and (4) is very small, especially when $\theta_{\mathrm{null}}$ is in the range from 90° to 180°. The lower the frequency, the smaller this difference will be. To illustrate this, Table I shows a set of results for a given microphone spacing $d$ at frequencies 500 Hz, 1000 Hz, 2000 Hz, 4000 Hz, 6000 Hz, and 8000 Hz, respectively. For further illustration, Fig. 4 compares the results of (1) and (4) at a frequency of 6 kHz.

Fig. 4. Comparison of the result (solid line) of (4) with the result (dashed line) of (1) at 6000 Hz frequency.

From (4), we also have

$$\frac{dW}{d\theta_{\mathrm{null}}} = \frac{2\sin\theta_{\mathrm{null}}}{\left(1-\cos\theta_{\mathrm{null}}\right)^2} > 0 \quad \text{for } 0^\circ < \theta_{\mathrm{null}} < 180^\circ \tag{5}$$

This shows that the relationship between the null and the gain is a monotonic function. In other words, given a gain $W$, we can get the unique null for all frequency ranges of interest.

So far, we have discussed the relationship of the polar-pattern null of the system output $z(n)$ with the gain $W$. Now, we will investigate the shape of the whole polar pattern. Before this, we first present the relationship of the output power of the fixed system in Fig. 1 against the angle $\theta$, using the approximation $\sin\alpha \approx \alpha$, as follows [1]:

$$P_{\mathrm{fix}}(\theta) \approx (2\pi f)^2\left(T + \frac{d}{c}\cos\theta\right)^2 \tag{6}$$

where $T$ is the value of the delay in Fig. 1. Similarly, the output power of the proposed system can be approximated from (3) as

$$|z|^2 \approx \left(2\pi f\frac{d}{c}\right)^2\left[(1+\cos\theta) + W(1-\cos\theta)\right]^2 \tag{7}$$

A comparison of (6) with (7) shows that these two systems will provide exactly the same polar pattern (up to the scale factor $(1-W)^2$) with the mapping relationship

$$T = \frac{d}{c}\cdot\frac{1+W}{1-W} \tag{8}$$

For example, if we choose $W = -1$, $-0.4903$, and 0, respectively, then the corresponding delay $T$ equals 0, $0.342\,d/c$, and $d/c$, respectively, according to (8), and these two systems will provide the bidirectional, hypercardioid, and cardioid polar patterns, respectively, as shown in Fig. 2. In effect, the corresponding null can also be obtained from (4) or Table I. These results suggest that the polar-pattern null of the system output for all frequencies can be moved by simply updating the gain value $W$, while keeping the shape of the polar pattern the same as that of the scheme in Fig. 1 with the corresponding delay value determined by (8).

Next, we show how to adaptively update the gain value so that the corresponding null can always be toward the noise instead of toward the target signal in the case where the target signal and noise are both present. In this case, with $a(n)$ and $b(n)$ denoting the front and rear microphone signals, we have

$$a(n) = s(n) + v(n) \tag{9}$$
$$b(n) = s(n - d/c) + v(n - \tau) \tag{10}$$
$$x(n) = a(n) - b(n - d/c) \tag{11}$$
$$y(n) = b(n) - a(n - d/c) \tag{12}$$
$$g(n) = W\,y(n) \tag{13}$$
$$z(n) = x(n) + g(n) \tag{14}$$

where $s(n)$ and $v(n)$ are the desired signal part and the noise part in the front microphone, respectively, and $\tau$ is the delay of the noise transmission from the front microphone to the rear microphone. In the above, we also assume that the desired signal comes from straight ahead, that is, its angle is 0°. By substituting (9) and (10) into (12), we get

$$y(n) = v(n - \tau) - v(n - d/c) \tag{15}$$

This property shows that $y(n)$ contains only the noise part. That is, minimizing the power of $z(n)$ is equivalent to minimizing the power of the noise part contained in the output because the target signal and the noise are not correlated. The optimal gain $W_{\mathrm{opt}}$ that minimizes the power of $z(n)$:

$$\min_{W} E\left[z^2(n)\right] = \min_{W}\left\{R_{xx} + 2W R_{xy} + W^2 R_{yy}\right\} \tag{16}$$

can be obtained by

$$W_{\mathrm{opt}} = -\frac{R_{xy}}{R_{yy}} \tag{17}$$

where $R_{xx}$, $R_{xy}$, and $R_{yy}$ are the power of $x(n)$, the cross-correlation of $x(n)$ and $y(n)$, and the power of $y(n)$, respectively [16]. Moreover, the objective function (16) is a quadratic function with the unique minimization point $W_{\mathrm{opt}}$. On the basis of all the above properties, we can conclude that the null of the system, which is determined by minimizing the system output power (16) or determined by (17), will always be toward the direction of the noise when the target signal and the noise are both present.

Now, the problem becomes how to adaptively update the optimal gain $W_{\mathrm{opt}}$, given the samples of $x(n)$ and $y(n)$ [as obtained by (11) and (12), respectively] rather than the cross-correlation $R_{xy}$ and the power $R_{yy}$. We discuss this problem in Section III together with other issues of the hardware implementation of the system.

III. IMPLEMENTATION OF THE PROPOSED ADAPTIVE NULL-FORMING SYSTEM

Having the samples of $x(n)$ and $y(n)$, we can obtain the optimized gain using any available adaptive algorithm, such as the LMS, NLMS, LS, or RLS algorithm, because (16) is a typical quadratic optimization problem with only one coefficient. The fact that only one coefficient needs to be calculated makes the related adaptive algorithms very simple and makes the real-time hardware implementation of the proposed system possible.
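A one-coefficient adaptation of this kind can be sketched in a few lines. The example below is an illustration under stated assumptions rather than the authors' implementation: the output is taken as `x + gain * y`, the noise is a sum of three tones arriving from 110° (chosen so that fractional delays can be evaluated exactly), the target is a single tone from 0°, and the step size, smoothing constant, sound speed, and all function names are hypothetical choices. It compares a normalized-LMS style update with the closed-form ratio of cross-correlation to power.

```python
import numpy as np

FS = 16000.0          # sampling rate quoted in the text (Hz)
TAU0 = 0.010 / 343.0  # d/c for d = 10 mm and an assumed sound speed of 343 m/s


def tone_sum(t, freqs_hz, phases):
    """Sum of unit-amplitude sinusoids evaluated at (possibly fractional) times t."""
    return sum(np.cos(2.0 * np.pi * f * t + p) for f, p in zip(freqs_hz, phases))


def cardioid_pair(t, noise_angle_deg):
    """Return x (null at 180 deg) and y (null at 0 deg) for target plus noise."""
    def target(u):
        return tone_sum(u, [1100.0], [0.0])              # target from 0 degrees

    def noise(u):
        return tone_sum(u, [500.0, 700.0, 900.0], [0.3, 1.1, 2.0])

    lag = TAU0 * np.cos(np.deg2rad(noise_angle_deg))     # noise lag at the rear mic

    def front(u):
        return target(u) + noise(u)

    def rear(u):
        return target(u - TAU0) + noise(u - lag)

    x = front(t) - rear(t - TAU0)
    y = rear(t) - front(t - TAU0)                        # target cancels here
    return x, y


def closed_form_gain(x, y):
    """Gain minimizing mean((x + gain*y)**2): minus cross-correlation over power."""
    return -np.mean(x * y) / np.mean(y * y)


def nlms_gain(x, y, step=0.001, smooth=0.99, eps=1e-12):
    """One-tap normalized-LMS style update, with the gain limited to [-1, 0]."""
    gain = 0.0
    power = np.mean(y[:56] ** 2)                         # initial power estimate
    track = np.empty(len(x))
    for n in range(len(x)):
        z = x[n] + gain * y[n]                           # system output
        power = smooth * power + (1.0 - smooth) * y[n] ** 2
        gain -= step * z * y[n] / (power + eps)          # gradient step on z**2
        gain = min(0.0, max(-1.0, gain))                 # keep null in rear half
        track[n] = gain
    return track


t = np.arange(16000) / FS
x, y = cardioid_pair(t, noise_angle_deg=110.0)
w_closed = closed_form_gain(x, y)
w_track = nlms_gain(x, y)
print("closed-form gain:", w_closed)
print("mean adaptive gain over the last 4000 samples:", np.mean(w_track[-4000:]))
```

In this sketch the target tone never appears in `y`, so both estimates settle near the gain that places the null at the noise direction, even though the target dominates `x`. The small step size trades convergence speed for a smaller ripple in the adapted gain.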
The LMS version for getting the adaptive gain can be written as

$$W(n+1) = W(n) - \mu\, z(n)\, y(n) \tag{18}$$

where $\mu$ is a step parameter that is a positive constant less than $2/P$, and $P$ is the power of the input $y(n)$. For better performance and faster convergence speed, $\mu$ can also be time varying (as used by the normalized LMS algorithm [16]). Based on this, an algorithm with a varying step can be obtained:

$$W(n+1) = W(n) - \frac{\lambda}{\hat{P}(n)}\, z(n)\, y(n) \tag{19}$$

where $\lambda$ is a positive constant less than 2, and $\hat{P}(n)$ is the estimated power of $y(n)$.

Equations (18) and (19) are suitable for the sample-by-sample adaptive mode. However, in our hardware implementation of this proposed scheme, we use the frame-by-frame adaptive mode because other processing in hearing aids is also based on the frame-by-frame mode. With this mode, the following steps are used to calculate the adaptive gain [16]. First, we estimate the cross-correlation between $x(n)$ and $y(n)$ and the power of $y(n)$ at the $k$th frame by

$$\hat{R}_{xy}(k) = \frac{1}{M}\sum_{n=(k-1)M+1}^{kM} x(n)\,y(n) \tag{20}$$

$$\hat{R}_{yy}(k) = \frac{1}{M}\sum_{n=(k-1)M+1}^{kM} y^2(n) \tag{21}$$

respectively, where $M$ is the number of all samples in a frame and equals 56 in our implementation. Second, $R_{xy}$ and $R_{yy}$ of (17) are replaced with the estimated $\hat{R}_{xy}(k)$ and $\hat{R}_{yy}(k)$, and then the estimated adaptive gain is obtained by (17).

We have implemented this proposed scheme in one programmable (assembly code) DSP chip together with other necessary processing such as A/D and D/A. Other practical factors considered in our hardware implementation include the following.

1) In this proposed scheme, the two microphones are assumed to have the same frequency response. However, this is not the case in real-world devices, where the two microphones are always mismatched. In order to overcome this problem, we first measure the frequency responses of the two microphones, and we then design and add a matching filter at the rear channel on the basis of the measured mismatch. From our measurements, a first-order IIR filter can compensate well for the mismatch and can make $x(n)$ and $y(n)$ have the desired cardioid polar pattern with null at 180° and 0°, respectively. Fig. 5 presents an example related to matching filter design, where the upper curve is the measured mismatch between the front and rear microphones, the bottom curve is the frequency response of the designed matching filter, and the middle curve is the frequency response difference of the two channels after matching.

Fig. 5. Upper curve is the measured mismatch, the bottom curve is the frequency response of the designed matching filter, and the middle curve is the frequency response difference of the two channels after matching.

2) In order to get a better estimate and make the frame-by-frame processing smoother, we estimate the cross-correlation between $x(n)$ and $y(n)$ and the power of $y(n)$ by

$$\hat{R}_{xy}(k) = \alpha\,\hat{R}_{xy}(k-1) + \beta\,\frac{1}{M}\sum_{n=(k-1)M+1}^{kM} x(n)\,y(n) \tag{22}$$

$$\hat{R}_{yy}(k) = \alpha\,\hat{R}_{yy}(k-1) + \beta\,\frac{1}{M}\sum_{n=(k-1)M+1}^{kM} y^2(n) \tag{23}$$

where $\alpha$ and $\beta$ are two adjustable parameters such that $0 \le \alpha \le 1$, $0 \le \beta \le 1$, and $\alpha + \beta = 1$. If $\alpha = 0$ and $\beta = 1$, (22) and (23) become (20) and (21), respectively.

3) The null of the total system output $z(n)$ would be at an angle less than 90° (that is, in the front hemisphere) when the absolute value of the gain $W$ is larger than 1. This has the potential of canceling the target signal in the front hemisphere. To avoid this problem, we limit the dynamic range of the gain to the range −1 to 1. This limit is also convenient for the hardware implementation because, in the hardware implementation, all digital values are in the range from −1 to 1.

4) In the hardware implementation of this proposed system, the total program memory usage is 129 words, the processing time is 0.32 ms, the propagation delay is 0.04 ms, and the current is 38 µA, which shows that the power cost of this scheme is very small.

IV. TESTING RESULTS

After the DSP code implementing this algorithm was completed and loaded onto GN ReSound behind-the-ear (BTE) devices, we made extensive tests to verify the algorithm and its implementation. This kind of device uses GN ReSound's own DSP chip and related platform (operating system and assembly language). In these tests, a Knowles EM 4346 microphone and a Knowles EM 3356 microphone were put in the endfire configuration at the upper front and upper rear part of a BTE device, respectively. The distance between these two microphones was about 1.2 cm. This BTE device was connected to a 2-cc coupler. The sampling rate in this processing was 16 000 Hz. The distance between the device and the speaker was about 1.0 m. The input of the speaker was a frequency-sweep signal with frequencies from 200 Hz to 8000 Hz at 70 dB SPL. The room noise was about 50 dB SPL. A Brüel & Kjær Type 2012 Time-Frequency Analyzer was used to measure and analyze the related input and output signals. In order to get the polar patterns, we changed the angle between the device and the speaker in 10° steps by turning the device, which is easier and more accurate than moving the speaker. With this setup, 36 sets of data with all frequencies from 200 Hz to 8000 Hz, corresponding to 36 positions (angles) of the device, can be obtained. These 36 sets of data were used to obtain the polar patterns in combination with our polar-pattern generation software. The main testing can be summarized as follows.

1) The polar patterns of $x(n)$ and $y(n)$ were measured to verify that they were the desired fixed polar patterns, that is, that the polar pattern of $x(n)$ was cardioid with the null at 180° and that the polar pattern of $y(n)$ was cardioid with the null at 0°. Fig. 6 shows the measured polar patterns of $x(n)$ and $y(n)$. It should be noted that the polar pattern of $y(n)$ was measured by reversing the position of the two microphones, and hence, its measured polar pattern should be the same as the measured one of $x(n)$.

Fig. 6. (Left) Measured polar pattern of x(n) and (right) measured polar pattern of y(n).

Fig. 7. (Left) Measured polar patterns of the system output z(n) with the gain value being −0.2174 and (right) −0.4903, respectively.
2) With a given specific value of the adaptive gain, the polar pattern of the system output $z(n)$ was measured to see if the null of the polar pattern and the given value of the gain met the unique relationship given in (4) and Table I. Two examples are shown in Fig. 7 with values of the gain being −0.2174 and −0.4903, respectively.

3) A source (the target signal) was set straight ahead (0°), and another source (the noise) was set at a specific angle (toward which the null of the proposed adaptive system should point) to see if the gain value obtained by the adaptive algorithm [(17), (22), and (23)] met the relationship (4) and Table I. As a matter of fact, when we measured each polar pattern, we moved the second source from 0° to 360° successively, as mentioned above. With this measurement method, the measured polar pattern obtained by the proposed adaptive null-forming system is shown in Fig. 8. This pattern results because the null of the system should always be toward the angle of this moving source. Note that we limit the gain value to the range from −1 to zero.

Fig. 8. Measured polar pattern of the system output corresponding to testing 3.

4) A speaker with pure-tone sound (the target signal) was set at 0°, and a wideband noise with adjustable frequency bandwidth and sound pressure level was set at different angles. The angles of the noise source were changed, and then the outputs of the front microphone (omni-directionality), $x(n)$ (fixed cardioid directionality), and $z(n)$ (adaptive directionality) were measured to further see the performance improvement of the proposed adaptive system over the fixed system of Fig. 1.

Moreover, in order to see how this scheme functions under real-world conditions, we have also carried out the hearing in noise test (HINT). The HINT was utilized to assess speech understanding of sentences at threshold level under varying noise conditions. The HINT was an adaptive procedure whereby the masker (uninterrupted speech-shaped noise) remained at 65 dBA and the speech signal (sentences) level varied. The sentences were delivered at 0° azimuth, and the noise was delivered at
1) 90° and 270°;
2) 180°;
3) 90°, 180°, and 270° simultaneously.
Subjects were tested in three different conditions:
1) omni-directional system;
2) fixed directionality system in Fig. 1;
3) proposed adaptive directionality system.
All these tests showed that there was more mean benefit over the omni-directional system for the proposed adaptive directionality system than for the fixed directional system in Fig. 1.

V. CONCLUSIONS

In this paper, we propose an effective adaptive null-forming scheme for two nearby microphones in endfire orientation and deal with its implementation and tests. The null can be adaptively moved by simply changing a gain value instead of changing a fractional-sample delay value. We presented and proved the unique mapping relationship between the null and the gain value, and we discussed related adaptive algorithms for updating the gain. The null corresponding to the gain obtained by the given adaptive algorithm can be guaranteed to be always toward the noise source in the case where the target signal and the noise are both present. Because only a gain calculation is involved in the adaptive algorithm, the computational complexity is low, which makes the proposed scheme realizable in hardware. Theoretical analyses and various tests demonstrated the effectiveness of the proposed system. As a result, this proposed scheme can serve as a new and practical tool for noise reduction and signal enhancement in many hearing devices.

ACKNOWLEDGMENT

The authors are grateful to the anonymous reviewers and the associate editor for their very useful suggestions and valuable comments. They also thank N.
Michael, S. Petrovic, C. Struck, and other co-workers at the R&D Department, GN ReSound Corporation, for their contributions to the implementation and testing of this proposed system. A U.S. patent application based on this proposed system is pending.

REFERENCES

[1] M. Valente, “Use of microphone technology to improve user performance in noise,” Trends Amplificat., vol. 4, no. 3, pp. 112–135, 1999.
[2] W. Soede, A. J. Berkhout, and F. A. Bilsen, “Development of a directional hearing instrument based on array technology,” J. Acoust. Soc. Amer., vol. 94, no. 2, pp. 785–798, 1993.
[3] J. M. Kates and M. R. Weiss, “A comparison of hearing-aid array-processing techniques,” J. Acoust. Soc. Amer., vol. 99, no. 5, pp. 3138–3148, 1996.
[4] J. G. Desloge, W. M. Rabinowitz, and P. M. Zurek, “Microphone-array hearing aids with binaural output, Part I: Fixed-processing systems,” IEEE Trans. Speech Audio Processing, vol. 5, pp. 529–542, Nov. 1997.
[5] D. P. Welker, J. E. Greenberg, J. G. Desloge, and P. M. Zurek, “Microphone-array hearing aids with binaural output, Part II: A two-microphone adaptive system,” IEEE Trans. Speech Audio Processing, vol. 5, pp. 543–551, Nov. 1997.
[6] J. E. Greenberg, “Modified LMS algorithm for speech processing with an adaptive noise canceller,” IEEE Trans. Speech Audio Processing, vol. 6, pp. 338–351, July 1998.
[7] J. E. Greenberg and P. M. Zurek, “Evaluation of an adaptive beamforming method for hearing aids,” J. Acoust. Soc. Amer., vol. 91, no. 3, pp. 1662–1676, 1992.
[8] L. J. Griffiths and C. W. Jim, “An alternative approach to linearly constrained adaptive beamforming,” IEEE Trans. Antennas Propagat., vol. AP-30, pp. 27–34, Jan. 1982.
[9] M. W. Hoffman, T. D. Trine, K. M. Buckley, and D. J. Van Tasell, “Robust adaptive microphone array processing for hearing aids: Realistic speech enhancement,” J. Acoust. Soc. Amer., vol. 96, no. 2, pp. 759–770, 1994.
[10] H. Saruwatari, S. Kajita, K. Takeda, and F. Itakura, “Speech enhancement using nonlinear microphone array with complementary beamforming,” in Proc. Int. Conf. Acoust., Speech, Signal Process., 1999, pp. 69–72.
[11] J. Vanden Berghe and J. Wouters, “An adaptive noise canceller for hearing aids using two nearby microphones,” J. Acoust. Soc. Amer., vol. 103, no. 6, pp. 3621–3626, 1998.
[12] F.-L. Luo, J. Yang, C. Pavlovic, and A. Nehorai, “An FFT Based Algorithm for Adaptive Directionality of Dual Microphones,” Dept. Elect. Eng. Comput. Sci., Univ. Illinois at Chicago, Chicago, IL, UIC-EECS-00-8, 2000.
[13] B. W. Edwards, Z. Hou, C. J. Struck, and P. Dharan, “Signal processing algorithms for a new, software based, digital hearing device,” Hearing J., vol. 51, no. 9, pp. 44–52, 1998.
[14] T. I. Laakso, V. Välimäki, M. Karjalainen, and U. K. Laine, “Splitting tools for fractional delay filter design,” IEEE Signal Processing Mag., vol. 13, pp. 30–60, Jan. 1996.
[15] G. W. Elko and A.-T. Nguyen Pong, “A simple adaptive first-order differential microphone,” in Proc. IEEE Workshop Appl. Signal Process. Audio Acoust., 1995.
[16] S. Haykin, Adaptive Filter Theory. Englewood Cliffs, NJ: Prentice-Hall, 1996.

Fa-Long Luo (SM’95) received the B.S., M.S. and Ph.D. degrees, all with honors, in electronics engineering from Xidian University, Xi’an, China, in 1983, 1989, and 1992, respectively. From 1983 to 1986, he was an engineer with Changfeng Electronic Systems Corporation. He was with Tsinghua University, Beijing, China, as a Research Fellow from December 1991 to December 1993. From May 1994 to October 1998, he was with University of Erlangen-Nuremberg, Nuremberg, Germany, as a Principal Research Scientist, where he was first supported by the Alexander von Humboldt Foundation of Germany and then by the German Research Foundation. From October 1998 to February 1999, he was a Senior Project Leader of Cybernetics InfoTech, Inc.
From March 1999 to May 2001, he was with GN ReSound Corporation, Redwood City, CA, as a Senior Research Scientist and Project Manager. In June 2001, he joined Quicksilver Technology as a Senior Member of Technical Staff. He has seven U.S. patents pending. He has authored two books: Applied Neural Networks for Signal Processing (Cambridge, UK: Cambridge Univ. Press, 1997, 1998, and 1999) and Neural Networks and Signal Processing (Beijing, China: National Electronic Industrial Publishing House of China, 1993). He has also written more than 80 articles in journals and conferences. As a principal investigator, he has proposed and conducted more than 20 research and industry projects in signal and data processing with various applications and their implementation. His inventions on spectral contrast enhancement and the adaptive microphone array system have been successfully used and implemented in hearing aid products, and significant performance improvements have been achieved. In 1994, Dr. Luo received the National Young Investigator Award of China, which is granted biennially to up to 100 individuals who have made internationally or nationally recognized extraordinary contributions in science and technology and who are nominated from among young scientists, engineers, educators, and technical executives across China. He received the National Outstanding Science and Technology Book Award of China in 1995. He has been a technical committee member (Neural Networks Technical Committee) of the IEEE Neural Networks Council since 1998. From June 1997 to December 2000, he was a technical committee member (Neural Networks for Signal Processing) of the IEEE Signal Processing Society. As a Guest Editor, he edited a special issue of Signal Processing on neural networks (vol. 64, no. 3, 1998). He is the Executive Guest Editor of a special issue of Speech Communication on speech processing for hearing aids. He was an Associate Editor of an IEEE Control Systems Society Conference.
As a reviewer, session chair, and technical committee member, he has served on many international journals and conferences. He is an editorial board member of the International Journal of Information Fusion and IEEE Communication Surveys and Tutorials.

Jun Yang (SM’99) received the B.S. degree from Huazhong (Central China) University of Science and Technology in 1985, the M.S. degree from Northwestern Polytechnic University in 1988, and the Ph.D. degree from Xidian University, Xi’an, China, in 1991, all in electronic engineering. From November 1991 to October 1993, she was with Tsinghua University, Beijing, China, where she was promoted to Associate Professor in 1993. She was with Oldenburg University of Germany and Viennatone Corporation of Austria as a Research Fellow and Principal Engineer, respectively, from October 1993 to November 1998. In December 1998, she joined GN ReSound Corporation, Redwood City, CA, as a Senior Research Engineer and then became the Chief Scientist of Spatializer Audio Laboratories, Inc. Since March 2001, she has been a Senior Audio Engineer with VM Labs, Inc. and a Principal DSP Engineer with ForteMedia, Inc., respectively. She has five U.S. patents (pending, co-inventor) and has authored 40 journal articles and conference papers on signal processing and its various applications, such as audio, communications, and hearing aids. Hearing devices incorporating some of her inventions sell well in the world market. As a principal investigator, she has also conducted several research projects on communications, speech coding and processing, and an audio interface for blind computer users. Most of her projects, because of their importance and excellence, were funded by government agencies or foundations of Germany and China.
Her works and contributions have received wide attention and were reported by Science and Technology Daily (the national science and technology newspaper of China) and published by the State Science and Technology Committee of China. Dr. Yang has also received several university-level awards for her excellent work in science and technology. She has been a reviewer for Speech Communication and the IEEE TRANSACTIONS ON SPEECH AND AUDIO PROCESSING. She is also a Member of the Audio Engineering Society and was a Senior Member of the Chinese Institute of Electronics.

Chaslav Pavlovic received the B.S. and M.S. degrees in electrical engineering and the Ph.D. degree in audiology. He is currently the Executive Vice President for Research and Development at Sound ID. Previously, for eight years, he was with GN ReSound (formerly ReSound Corporation), a hearing healthcare company, where he was the Senior Vice President for Research and Development. During his tenure, ReSound (subsequently GN ReSound) established itself as a technology leader in hearing products and grew to become the second largest hearing instrument company in the world. He was a Full Professor of speech technology at Aix en Provence, France, and an Associate Professor of Audiology at the University of Iowa, Iowa City. He has held other academic appointments. He has more than 100 publications on hearing aids, speech intelligibility, and speech quality. Dr. Pavlovic was the Chair of the 1997 ASA work group that developed ANSI S3.5: the Speech Intelligibility Index Standard.
He has also been the Coordinator of the European Audiological Tests and Station (EURAUD) project; Chair of the American National Standards Institute S3-79 Writing Group (Calculation of the Articulation Index); USA representative to the International Standards Organization ISO/TC 43/SC1; Coordinator of the Overall Quality Assessment Subgroup European Consortium for Speech Assessment Methods (SAM, Project Esprit); Coordinator of participating French laboratories on projects TIDE and OSCAR (pattern extraction hearing aids); Member of the American National Standards Institute S12-8 Writing Group (rating noise with respect to speech interference); Member of the Editorial Board of Acoustics; Staff Editor of the Journal D’Acoustique; Board of Directors of the Journal D’Acoustique; and Member of the Technical Committee on Speech Communication of the Acoustical Society of America.

Arye Nehorai (S’80–M’83–SM’90–F’94) received the B.Sc. and M.Sc. degrees in electrical engineering from the Technion—Israel Institute of Technology, Haifa, in 1976 and 1979, respectively, and the Ph.D. degree in electrical engineering from Stanford University, Stanford, CA, in 1983. After graduation, he worked as a Research Engineer for Systems Control Technology, Inc., Palo Alto, CA. From 1985 to 1995, he was with the Department of Electrical Engineering, Yale University, New Haven, CT, where he became an Associate Professor in 1989. In 1995, he joined the Department of Electrical Engineering and Computer Science, The University of Illinois at Chicago (UIC), as a Full Professor. From 2000 to 2001, he was Chair of the Department’s Electrical and Computer Engineering (ECE) Division, which is now a new department. He holds a joint professorship with the ECE and Bioengineering Departments at UIC. His research interests are in signal processing, communications, and biomedicine. He is on the Editorial Board of Signal Processing and was an Associate Editor for Circuits, Systems, and Signal Processing.
Dr. Nehorai is Editor-in-Chief of the IEEE TRANSACTIONS ON SIGNAL PROCESSING. He is also a Member of the Publications Board of the IEEE Signal Processing Society. He has previously been an Associate Editor of the IEEE TRANSACTIONS ON ACOUSTICS, SPEECH, AND SIGNAL PROCESSING, the IEEE SIGNAL PROCESSING LETTERS, the IEEE TRANSACTIONS ON ANTENNAS AND PROPAGATION, and the IEEE JOURNAL OF OCEANIC ENGINEERING. He served as Chairman of the Connecticut IEEE Signal Processing Chapter from 1986 to 1995 and is currently the Chair and a Founding Member of the IEEE Signal Processing Society’s Technical Committee on Sensor Array and Multichannel (SAM) Processing. He was the co-General Chair of the First IEEE SAM Signal Processing Workshop, held in 2000, and will again serve in this position in 2002. He was corecipient, with P. Stoica, of the 1989 IEEE Signal Processing Society’s Senior Award for Best Paper. He received the Faculty Research Award from the UIC College of Engineering in 1999. In 2001, he was named University Scholar of the University of Illinois. He has been a Fellow of the Royal Statistical Society since 1996.