A Code of Ethics for Robotics Engineers

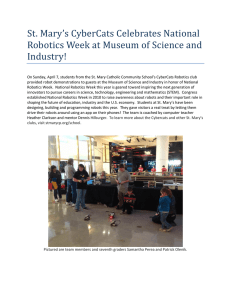
