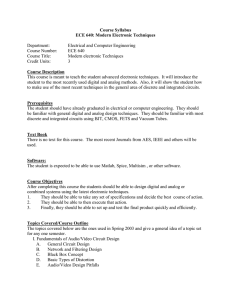

Computer-aided design of analog and mixed
