Designing and Teaching Courses to Satisfy the ABET Engineering

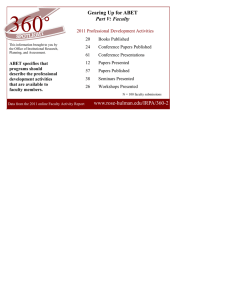
