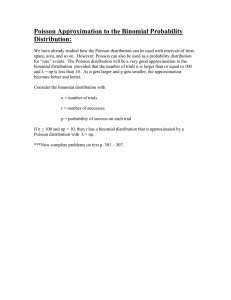

Probability Homework: Poisson, Binomial, Distributions

BASIC PROBABILITY: HOMEWORK 2

Exercise 1: where does the Poisson distribution come from? (corrected) Fix $\lambda > 0$. For $n \ge \lambda$, let $X_n$ be a random variable with the binomial distribution with parameters $n$ and $\lambda/n$. Prove that for any $k \in \mathbb{N}$ we have
$$P[X_n = k] \xrightarrow[n\to\infty]{} P[Y_\lambda = k],$$
where $Y_\lambda$ has a Poisson distribution with parameter $\lambda$.

Exercise 2 (corrected) Prove Theorem 2.2.

Exercise 3 (corrected) Let $X$ and $Y$ be independent Poisson variables with parameters $\lambda$ and $\mu$. Show that (1) $X + Y$ is Poisson with parameter $\lambda + \mu$; (2) the conditional distribution of $X$, given that $X + Y = n$, is binomial, and find its parameters.

Exercise 4 (homework) Suppose we have $n$ independent random variables $X_1, \dots, X_n$ with common distribution function $F$. Find the distribution function of $\max_{i \in [1,n]} X_i$.

Exercise 5 (homework) Show that if $X$ takes only non-negative integer values, then
$$E[X] = \sum_{n=0}^{\infty} P[X > n].$$
An urn contains $b$ blue and $r$ red balls. Balls are removed at random until the first blue ball is drawn. Show that the expected number of balls drawn is $(b + r + 1)/(b + 1)$.

Hint: $\sum_{n=0}^{r} \binom{n+b}{b} = \binom{r+b+1}{b+1}$.

Exercise 6 (homework) Let $X$ have the distribution function
$$F(x) = \begin{cases} 0 & \text{if } x < 0,\\ \tfrac{1}{2}x & \text{if } 0 \le x \le 2,\\ 1 & \text{if } x > 2,\end{cases}$$
and let $Y = X^2$. Find (1) $P(\tfrac{1}{2} \le X \le \tfrac{3}{2})$, (2) $P(1 \le X < 2)$, (3) $P(Y \le X)$, (4) $P(X \le 2Y)$, (5) $P(X + Y \le \tfrac{3}{4})$, (6) the distribution function of $Z = \sqrt{X}$.

Exercise 7: Chebyshev's inequality (homework) Let $X$ be a random variable taking only non-negative values. Show that for any $a > 0$ we have
$$P[X \ge a] \le \frac{1}{a} E[X].$$
Deduce that
$$P\big[|X - E[X]| \ge a\big] \le \frac{1}{a^2} \operatorname{var}(X),$$
and explain why $\sqrt{\operatorname{var}(X)}$ is often referred to as the standard deviation.

Exercise 1 (solution) We have
$$P[X_n = k] = \binom{n}{k}\left(\frac{\lambda}{n}\right)^{k}\left(1-\frac{\lambda}{n}\right)^{n-k} = \frac{n(n-1)\cdots(n-k+1)}{n^{k}}\,\frac{\lambda^{k}}{k!}\left(1-\frac{\lambda}{n}\right)^{n-k} \xrightarrow[n\to\infty]{} \frac{\lambda^{k}}{k!}e^{-\lambda} = P[Y_\lambda = k],$$
since for any fixed $k \ge 0$ we have $\frac{n(n-1)\cdots(n-k+1)}{n^{k}} = \prod_{j=0}^{k-1}\left(1-\frac{j}{n}\right) \to 1$ and $\left(1-\frac{\lambda}{n}\right)^{n-k} \to e^{-\lambda}$.
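The following is a quick numerical sanity check of the limit in Exercise 1, not a proof. The Python sketch below compares the Binomial($n$, $\lambda/n$) and Poisson($\lambda$) probability mass functions as $n$ grows. The helper names `binomial_pmf`, `poisson_pmf` and `max_gap`, the value $\lambda = 3$, and the cutoff $k \le 20$ are illustrative assumptions that do not come from the exercise.

```python
import math


def binomial_pmf(n: int, p: float, k: int) -> float:
    """P[X = k] for X ~ Binomial(n, p); zero outside 0..n."""
    if k < 0 or k > n:
        return 0.0
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(lam: float, k: int) -> float:
    """P[Y = k] for Y ~ Poisson(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def max_gap(lam: float, n: int, k_max: int = 20) -> float:
    """Largest |P[X_n = k] - P[Y_lam = k]| over k = 0..k_max,
    where X_n ~ Binomial(n, lam / n) (hypothetical helper; needs n >= lam)."""
    return max(
        abs(binomial_pmf(n, lam / n, k) - poisson_pmf(lam, k))
        for k in range(k_max + 1)
    )


if __name__ == "__main__":
    lam = 3.0  # illustrative choice of lambda
    for n in (10, 100, 1000, 10000):
        print(f"n = {n:>5}: max gap = {max_gap(lam, n):.2e}")
```

The printed gap should shrink steadily as $n$ grows, which is what the pointwise convergence $P[X_n = k] \to P[Y_\lambda = k]$ predicts for each fixed $k$.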
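Exercise 2 (solution) The condition $f_{X,Y}(x,y) = f_X(x)f_Y(y)$ for all $x, y$ can be rewritten as $P(X = x \text{ and } Y = y) = P(X = x)P(Y = y)$ for all $x, y$, which is the very definition of $X$ and $Y$ being independent.

Now for the second part of the theorem. Suppose that $f_{X,Y}(x,y) = g(x)h(y)$ for all $x, y$. Then
$$f_X(x) = \sum_{y} f_{X,Y}(x,y) = g(x)\sum_{y} h(y), \qquad f_Y(y) = \sum_{x} f_{X,Y}(x,y) = h(y)\sum_{x} g(x).$$
Now
$$1 = \sum_{x} f_X(x) = \sum_{x} g(x)\sum_{y} h(y),$$
so that
$$f_X(x)f_Y(y) = g(x)h(y)\sum_{x'} g(x')\sum_{y'} h(y') = g(x)h(y) = f_{X,Y}(x,y),$$
and by the first part $X$ and $Y$ are independent.

Exercise 3 (solution) Let us notice that if $X$ and $Y$ are independent random variables taking non-negative integer values, then
$$P[X + Y = n] = \sum_{k=0}^{n} P[X = n-k]\,P[Y = k],$$
so in our specific case of Poisson random variables, using the binomial theorem,
$$P[X + Y = n] = \sum_{k=0}^{n} \frac{e^{-\lambda}\lambda^{n-k}}{(n-k)!}\,\frac{e^{-\mu}\mu^{k}}{k!} = \frac{e^{-\lambda-\mu}}{n!}\sum_{k=0}^{n}\binom{n}{k}\lambda^{n-k}\mu^{k} = \frac{e^{-\lambda-\mu}(\mu+\lambda)^{n}}{n!},$$
that is, $X + Y$ is Poisson with parameter $\lambda + \mu$.

For the second part of the question, since $\{X = k, X + Y = n\} = \{X = k, Y = n-k\}$, independence gives, for $0 \le k \le n$,
$$P[X = k \mid X + Y = n] = \frac{P[X = k,\, X + Y = n]}{P[X + Y = n]} = \frac{P[X = k]\,P[Y = n-k]}{P[X + Y = n]} = \binom{n}{k}\frac{\lambda^{k}\mu^{n-k}}{(\mu+\lambda)^{n}}.$$
Hence the conditional distribution is binomial with parameters $n$ and $\lambda/(\lambda + \mu)$.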
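Both identities from Exercise 3 can also be checked numerically for small $n$. The Python sketch below uses only the standard library. It evaluates the convolution sum directly and compares it with the Poisson($\lambda+\mu$) mass function. It then compares the conditional probabilities with the Binomial($n$, $\lambda/(\lambda+\mu)$) mass function. The function names and the values $\lambda = 2$, $\mu = 5$ are hypothetical choices for illustration. Agreement to floating-point precision supports the algebraic argument but does not replace it.

```python
import math


def poisson_pmf(lam: float, k: int) -> float:
    """P[Y = k] for Y ~ Poisson(lam)."""
    return math.exp(-lam) * lam**k / math.factorial(k)


def binomial_pmf(n: int, p: float, k: int) -> float:
    """P[X = k] for X ~ Binomial(n, p), 0 <= k <= n."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def sum_pmf(lam: float, mu: float, n: int) -> float:
    """P[X + Y = n] via the convolution sum, X ~ Poisson(lam), Y ~ Poisson(mu) independent."""
    return sum(poisson_pmf(lam, n - k) * poisson_pmf(mu, k) for k in range(n + 1))


def conditional_pmf(lam: float, mu: float, n: int, k: int) -> float:
    """P[X = k | X + Y = n] = P[X = k] P[Y = n - k] / P[X + Y = n]."""
    return poisson_pmf(lam, k) * poisson_pmf(mu, n - k) / sum_pmf(lam, mu, n)


if __name__ == "__main__":
    lam, mu = 2.0, 5.0  # illustrative parameters
    worst = 0.0
    for n in range(15):
        # Part (1): convolution vs Poisson(lam + mu)
        worst = max(worst, abs(sum_pmf(lam, mu, n) - poisson_pmf(lam + mu, n)))
        # Part (2): conditional law vs Binomial(n, lam / (lam + mu))
        for k in range(n + 1):
            gap = abs(conditional_pmf(lam, mu, n, k) - binomial_pmf(n, lam / (lam + mu), k))
            worst = max(worst, gap)
    print(f"largest discrepancy for n = 0..14: {worst:.1e}")
```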