Preferred reporting items for systematic review and meta

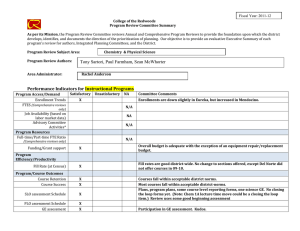
