A LINGUISTICALLY-INFORMATIVE APPROACH TO DIALECT

advertisement

A LINGUISTICALLY-INFORMATIVE APPROACH TO DIALECT RECOGNITION USING

DIALECT-DISCRIMINATING CONTEXT-DEPENDENT PHONETIC MODELS*

Nancy F. Chen, Wade Shen, Joseph P. Campbell

MIT Lincoln Laboratory, Lexington, MA, USA

nancyc@mit.edu, swade@ll.mit.edu, jpc@ll.mit.edu

ABSTRACT

We propose supervised and unsupervised learning algorithms to

extract dialect discriminating phonetic rules and use these rules

to adapt biphones to identify dialects. Despite many challenges

(e.g., sub-dialect issues and no word transcriptions), we discovered dialect discriminating biphones compatible with the linguistic

literature, while outperforming a baseline monophone system by

7.5% (relative). Our proposed dialect discriminating biphone system achieves similar performance to a baseline all-biphone system

despite using 25% fewer biphone models. In addition, our system

complements PRLM (Phone Recognition followed by Language

Modeling), verified by obtaining relative gains of 15-29% when

fused with PRLM. Our work is an encouraging first step towards a

linguistically-informative dialect recognition system, with potential

applications in forensic phonetics, accent training, and language

learning.

Index Terms— dialect recognition, phonetic rules

1. INTRODUCTION

Dialect is an important, yet complicated, aspect of speaker variability. A dialect is the language characteristics of a particular population, where the categorization is primarily regional. Linguistic

studies in dialects are limited to small-scale populations due to timeconsuming manual analyses [1]. While these studies provide insight

into how humans identify dialects, large-scale population testing is

required to establish statistical significance.

On the other hand, automatic dialect recognizers are typically

trained on many speakers and efficiently process large amounts

of data. However, current automatic dialect recognizers do not

explicitly model the underlying rules that define dialects, making

it difficult to interpret system models and recognition results in a

linguistically-meaningful manner.

In forensic phonetics, it is important that recognition results of

a dialect recognizer are justifiable on linguistic grounds [2]. The

goal of this work is thus to bridge the gap between the linguistic and

engineering approaches by constructing an informative system that

*This work is sponsored by the Command, Control and Interoperability

Division (CID), which is housed within the Department of Homeland Security’s Science and Technology Directorate under Air Force Contract FA872105-C-0002. Opinions, interpretations, conclusions and recommendations are

those of the authors and are not necessarily endorsed by the United States

Government. Nancy Chen is also supported by the NIH Ruth L. Kirschstein

National Research Award.

978-1-4244-4296-6/10/$25.00 ©2010 IEEE

5014

discovers dialect-discriminating pronunciation patterns and explicitly uses them to recognize dialects. These dialect-specific patterns

are phonetic rules interpretable by humans. Many of these rules

are phonetic-context dependent; e.g, /w/ in Indian English is often

dropped when in the middle of a word, so “towel” sounds like “tall”.

There are many challenges in designing an informative dialect

recognizer that incorporates phonetic rules. (1) Standard phone recognizers inadequately capture acoustic differences across dialects.

For example, in Indian English there is retroflex /d/, but a typical

phone recognizer may not capture the retroflexness. The retroflex

/d/ might be recognized as a typical /d/, /r/, or some phone less interpretable. (2) State-of-the-art phone recognizers are still far from

perfect when there are no word transcriptions to force-align to. As

a result, transcriptions generated by phone recognizers are noisy estimates of the true acoustic units. Though these limitations make

it challenging to interpret dialect-specific phonetic rules, our work

demonstrates a first step towards an informative dialect recognizer.

Some researchers have investigated phonetic variations between

native and non-native speech (e.g. [3, 4]), focusing on phoneticcontext independent rules, and on improving speech recognition. [5]

modeled trajectories of acoustic observations to classify accents, but

these models are difficult to interpret at the phonetic level as the

observations are multi-dimensional. [6] analyzes accents with articulatory models, which complements phoneitc analyses in our work.

Many techniques in dialect identification are ported from language identification [7]. These methods usually fall into two categories: (1) Acoustic modeling which often uses Gaussian mixture

models (GMM) or Hidden Markov Models (HMM) [8, 9, 10, 11];

(2) Language modeling (LM), which captures statistics of phonotactic distributions; e.g., phone recognition followed by language modeling (PRLM) [12]. Since linguistics literature suggests that dialect

differences often occur in certain phonetic contexts [13], we extend

adapted phonetic models (APM) [9] to consider phonetic contexts

and select phonetic contexts that are dialect-discriminating.

The contributions of this paper are multifold: (1) we propose

algorithms to discover dialect-specific context-dependent rules with

and without using transcriptions; (2) despite many challenges in our

dialect recognition task (e.g., sub-dialects in Indian English, lack of

word transcriptions to obtain forced-aligned phones), we discovered

dialect-specific rules compatible with the linguistic literature while

achieving a 7.5% relative gain over a baseline monophone1 system;

1 “Phone” usually refers to “monophone”, where a phone’s surrounding

phones do not affect the identity of the phone of interest; e.g., monophone [t]

is always referred to as [t], regardless of its surrounding phones. A biphone

is a monophone in the context of another monophone; e.g., biphone [t+a] is

ICASSP 2010

(3) our proposed system complements PRLM, verified by obtaining relative gains of 14.6-29.3% in fusion experiments. Our work

is an important step towards bridging linguistic and engineering approaches to analyzing dialects, and is potentially useful in applications such as forensic phonetics and accent training tools.

2. METHODS

We exploit the fact that for a given phone recognizer, biphones have

different recognition precision across dialects due to acoustic differences. Modeling the acoustics of these dialect discriminating biphones allows us to distinguish between dialects.

2.1. Supervised Learning

2.1.1. Selecting Dialect Discriminating Biphone Models

We pass a phonetically-labeled speech sequence from dialect d ∈

{d1 , d2 } through a root phone recognizer λ that models monophones

[9].

Let α be a recognizer-hypothesized phone and g(α) be the

ground-truth phone corresponding to α. Biphone αβ is defined as

the phone α that is followed by phone β. We consider biphone

αβ to be dialect discriminating between dialect d1 and d2 if λ’s

recognition precision of biphone αβ is different for the two dialects:

|P (g(α) = α|αβ , λ, d1 ) − P (g(α) = α|αβ , λ, d2 )| ≥ θ0

(1)

for some preset threshold θ0 and if there is sufficient occurrences of

biphone αβ .

2.2. Unsupervised Learning

We now discuss the case if the ground-truth phones from the pronunciation dictionary are not given as in Section 2.1.

2.2.1. Adapt All Biphone Models

We use the 1-best hypothesis of root phone recognizer λ as groundtruth and then perform adaptation of Eq (3) for all biphones αβ ∈

{biphone set} labeled by λ.

2.2.2. Select Dialect Discriminating Biphone Models

For each dialect d ∈ {d1 , d2 }, we denote yd,αβ to be all the acoustic

observations of biphones labeled as αβ by the root recognizer λ in

the training corpus. Let Td,αβ be the total duration of yd,αβ .

Consider the log likelihood ratios

!

P (yd1 ,αβ |λbi

d1 ,αβ )

1

d1

log

(4)

zαβ =

Td1 ,αβ

P (yd1 ,αβ |λbi

d2 ,αβ )

!

P (yd2 ,αβ |λbi

d1 ,αβ )

1

d2

log

.

(5)

zαβ =

Td2 ,αβ

P (yd2 ,αβ |λbi

d2 ,αβ )

The greater the value of zαd1β , the better the acoustics of biphone

bi

αβ is modeled by λbi

d1 ,αβ relative to λd2 ,αβ . Similarly, the smaller

d2

the value of zαβ , the better the acoustics of biphone αβ is modeled

bi

d1

d2

by λbi

d2 ,αβ relative to λd1 ,αβ . If zαβ is in general greater than zαβ ,

then biphone αβ is dialect discriminating between dialects d1 and

d2 . Therefore, we define biphone αβ to be dialect discriminating if

zαd1β − zαd2β ≥ θ

2.1.2. Adaptation of Dialect Discriminating Biphone Models

(a) Monophone Adaptation. Since the root recognizer λ was

trained on a different corpus from our data, for each monophone α,

we first adapt its acoustic model λ(α) to a dialect specific one [9],

where for d ∈ {d1 , d2 },

adapt

λ(α) −→ λd (α)

(2)

(b) Biphone Adaptation. Using standard monophone to biphone

adaptation methods in standard speech recognition systems, we

adapt the dialect-specific monophone model λd (α) to a dialect specific biphone model λbi

d (αβ ) for dialect discriminating biphones αβ

defined by Eq (1), i.e.,

adapt

λd (α) −→ λbi

d (αβ )

(3)

The adaptation process in Eq (3) is similar to that in Eq (2) except the adaptation data are now observations corresponding to biphone αβ .

2.1.3. Dialect Recognition

Dialect recognition is accomplished via a likelihood ratio test by

using the adapted biphone models to compute per-frame log likelihoods. Non-dialect discriminating biphones that occur in the test utterances are modeled as dialect-specific monophones obtained from

Eq (2).

defined as the monophone [t] followed by monophone [a].

5015

(6)

where θ is the decision threshold.

2.2.3. Dialect Recognition

Dialect recognition is accomplished as in Section 2.1.3.

2.2.4. Remarks

This method can still be used when we have ground-truth phones:

the root recognizer’s 1-best hypotheses in Section 2.2.1 are replaced

with ground-truth g from the dictionary pronunication. The learned

biphones are expected to be more interpretable, since there are no

longer phone recognition errors.

2.3. Comparison with PRLM

In Eq.(1), the biphone recognition precision difference across dialects is generated by an acoustic difference that is dialect-specific.

We use this dialect-specific information directly in the supervised

method (Section 2.1), and implicitly in the unsupervised method

(Section 2.2). In contrast, PRLM models differences in biphone

occurrence frequency, and is consequently blind to dialect-specific

acoustic differences. Therefore, our approach and PRLM complement each other.

3. EXPERIMENTS

Experimental Setup. We evaluated our system on the NIST LRE07

English dialect task [18]. Our system was trained on 104 hours

of Callfriend, LRE05 test set, OGI’s foreign accented English, and

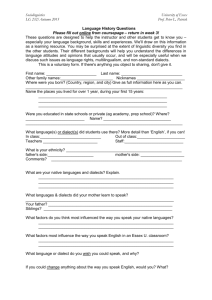

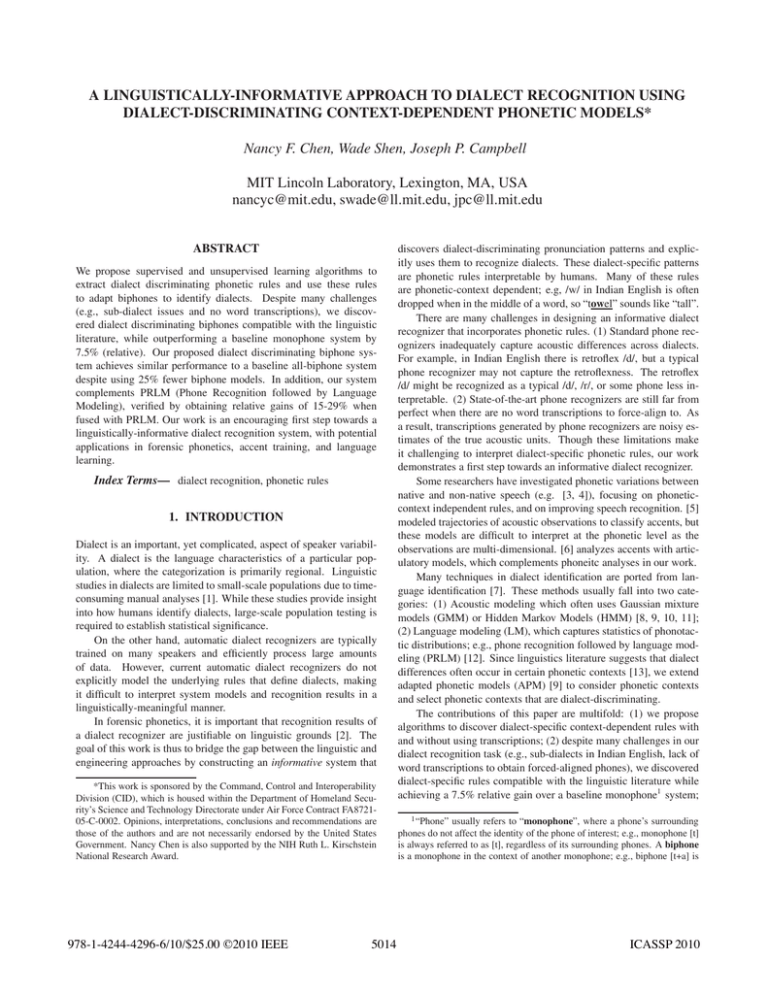

3.1. Pilot Study: Analyzing Dialect-Specific /r/ Biphones

We discuss a pilot study where we analyze hundreds of labeled /r/

using the supervised method in Section 2.1.

40

Miss probability (in %)

LDC’s Mixer and Fisher corpora [14, 15, 16, 17]. The LRE07 test

set includes 80 trials of American English and 160 trials of Indian

English. Since the test set is small, it was difficult to conclusively

interpret detection error trade-off (DET) curves. Therefore, we used

two test sets: (1) Test-LRE07 (the official NIST LRE07 30-second

test trials). Results are obtained by pooling [18] and a backend classifier [19]. (2) Test-Linc: 1,498 trials of 30-second segments from

Mixer and LRE05; 233 trials are Indian English, and 1,265 trials are

American English. Neither of these test sets were included in training. Pooling was not used because it is inappropriate to consider

the classes to be independent in closed-set two-way classification.

Pooling was used in Test-LRE07 for comparison with NIST LRE07.

The baseline APM and PRLM system configurations are the

same as [9]; the root HMM modeled 47 English monophones (3

states/phone, 128 Gaussians/state) using 23 hours of transcribed

words from Switchboard-II phase 4 (Cellular).

monophones

r−biphones

all biphones

proposed biphones

PRLM

PRLM + proposed

PRLM + all biphones

20

10

5

2

1

1

2

5

10

20

False Alarm probability (in %)

40

Fig. 1. DET curve results of Test-Linc.

3.1.1. Rule Extraction Conditioned on Phonetic Context

A small data set was phonetically labeled manually to analyze the

dialect-specific context-dependent phonetic rules of /r/2 . In the Indian English training set, 200 recognizer-hypothesized /r/ were labeled. 50% of them were incorrectly hypothesized, but showed no

bias toward any phone. In the American English training set, 500 hypothesized /r/ were labeled; 80% were correctly hypothesized, and

the remaining showed no bias toward any phone.

We observe that in certain phonetic contexts, the phone recognition precision of /r/ is dialect-specific, supporting the hypothesis

that some biphones are dialect-specific. For example, if the root recognizer hypothesizes a segment to be /r/ followed by /dx/, then the

recognition accuracy of /r/ is 0% for Indian English, but 90% for

American English.

Dialect discriminating /r/ biphones were selected by setting θ0 in

Eq (1) to be one standard deviation above the mean of the empirical

distribution of |P (g(α) = α|αβ , λ, d1 ) − P (g(α) = α|αβ , λ, d2 )|

for all α, β and if the biphone occurs more than the average occurrences of all biphones.

Table 1. System comparison in EER %.

System

Test-LRE07

Test-Linc

monophone APM

13.8

10.5

r-biphones

12.5

10.4

unfiltered biphones

14.7

9.4

filtered biphones (proposed)

15.6

9.7

PRLM

12.8

9.7

PRLM+unfiltered biphones

10.6

6.6

PRLM+proposed

10.9

6.9

3.1.2. Results and Discussion

Dialect-specific /r/ biphones extracted in Section 3.1.1 were adapted

according to Section 2.1.2. /r/ in other contexts and all other phones

were modeled as monophones. The equal error rate (EER) of the rbiphone system improves monophone APM by 9.1% on Test-LRE07

and 1.5% on Test-Linc, (see Table 1). The DET plot of Test-Linc in

Fig. 1 show that the r-biphone system improves detection error of

monophone APM when false alarms are penalized heavily. DET

plots of Test-LRE07 are not shown due to space constraints.

Below are possible reasons why /r/ biphones learned in Section

3.1.1 resulted in inconsistent improvement. (1) The labeled data only

corresponds to 1 min of speech, so the rules may not be general. (2)

The labeled Indian English /r/ were mainly from speakers whose first

language is Hindi, but the first language of speakers in the test set

may be other Indian languages, which may possess different dialectspecific rule. While it is more difficult to resolve (2), as it depends

on documentation of the given corpora, (1) can be resolved by using

more data. Thus, we take an unsupervised approach to study more

data in the next section.

3.2. Unsupervised Learning of Dialect-Specific Biphones

We now use the unsupervised method in Section 2.2 to learn phonetic

rules. We focus our discussion on Test-Linc because it is a larger

data set resulting in more robust results. We will however comment

on Test-LRE07 when possible.

3.2.1. System Setup and Analysis

2 /r/ was chosen because it showed high acoustic likelihood differences

between the American and Indian English adapted monophone recognizers.

5016

We use θ = 0.1 in Eq (6) on Test-Linc to obtain a selected biphone

set. This biphone set is used to evaluate the performance of the dialect discriminating biphone system on Test-LRE07. Similarly, we

use θ = 0.1 in (6) on Test-LRE07 to obtain a selected biphone set for

Test-Linc; the resulting dialect discriminating biphone system uses

Equal error rate (EER) % of Test−Linc

11.2

11

10.8

amount of pruned biphones vs. EER

monophone APM EER performance

proposed biphone system (θ = 0.1)

challenges (e.g., sub-dialect issues and no transcriptions), we discovered dialect-specific biphones compatible with the linguistic

literature, while outperforming a baseline monophone system by

7.5% (relative). These results suggest that if word transcriptions

are provided, we can potentially retrieve more dialect-specific rules,

demonstrating our work is an encouraging first step towards a

linguistically-informative dialect recognizer. In addition, our proposed system obtains relative gains of 14.6-29.3% when fused with

PRLM, indicating it complements phonotactic approaches. Applications of this work include forensic phonetics and language learning.

10.6

10.4

10.2

10

9.8

9.6

9.4

10

15

20

25

30

35

Amount of pruned biphones (%) determined by sweeping θ on Test−LRE07

40

Fig. 2. EER of Test-Linc as a function of pruned biphones determined by Test-LRE07.

ACKNOWLEDGMENTS

The authors thank Jim Glass, Reva Schwartz, and Ken Stevens for

their input.

25% fewer biphone models (2,381 to 1,789) than the all-biphone

system, while achieving comparable performance (EER difference

is 2.9%).

It turns out that 90% of the dialect discriminating /r/ biphones

extracted by the supervised method are retained by the unsupervised

method, rather than 75% which would be expected if the process

were completely random. Henceforth, the results we present use this

value of θ unless stated otherwise.

For the purpose of analysis, we display in Fig. 2 the EER of TestLinc as a function of pruned biphones determined by Test-LRE07.

The lowest EER was obtained when 18% biphones were pruned, so

our choice of θ is not optimal with respect to EER. Another observation is that with 29% pruned biphone models in the dialect discriminating biphone system, EER becomes that of monophone APM.

On the Test-Linc data set, the proposed dialect discriminating

biphone system outperforms the monophone APM system by 7.5%

(see Table 1). However, on Test-LRE07, the monophone APM outperforms the dialect discriminating biphone system. We suspect this

is due to the small test set, because monophone APM even outperforms the all-biphone case.

Discussion of Learned Rules. Though it is challenging to interpret the learned rules because of phone recognition errors, we

find some rules consistent with linguistic knowledge. For example, [dx+r], [dx+axr], and [dx+er] were selected as dialect discriminating biphones. In American English, the intervocalic /t/ is often

implemented as a flap consonant [dx] (e.g. “butter”), while this is

uncommon in Indian English. Since Indian English has British influence, other biphones such as [ae+s] and [ae+th] might be from

words such as “class” and “bath”, which are produced differently in

American and British accents. [aw+l] was also a selected biphone.

In some Indian languages there are no [w], and thus [w] is often

deleted in word-medial positions (e.g. “towel”). Biphones such as

[r+dx] learned in Section 3.1.1, though less interpretable, were also

selected by the unsupervised method.

5. REFERENCES

3.2.2. Fusion with PRLM

To empirically verify if the proposed system complements PRLM as

described in Section 2.3, we fuse our filtered-biphone system with a

PRLM system [9], resulting in relative gains of 14.6% and 29.3% on

Test-LRE07 and Test-Linc with a backend [19]. In addition, the EER

performance of the filtered-biphone and unfiltered-biphone systems

are still comparable after fusing with PRLM (see Table 1 & Fig. 1).

4. CONCLUSION

We present systematic approaches to discover dialect-specific biphones, and use these biphones to identify dialects. Despite many

5017

[1] Labov, W. et al,“The Atlas of North American English: Phonetics, Phonology, and Sound Change,” Mouton de Gruyter, Berlin,

2006.

[2] Rose, P., “Forensic Speaker Identification, Taylor and Francis,

2002.

[3] Fung, P., Liu, Y., “Effects and Modeling of Phonetic and Acoustic Confusions in Accented Speech,” JASA, 118(5):3279-3293,

2005.

[4] Livescu, K., Glass, J., “Lexical Modeling of Non-Native speech

for automatic speech recognition”, ICASSP, 2000.

[5] Angkititrakul, P., Hansen, J., “Advances in phone-based modeling for automatic accent classification,” IEEE TASLP, vol. 14,

pp. 634-646, 2006.

[6] Sangwan, A. and Hansen, J., “On the use of phonological features for automatic accent analysis,” Interspeech 2009.

[7] Zissman, M., “Comparison of Four Approaches to Automatic

Language Identification of Telephone Speech,” IEEE TSAP.,

4(1):31-44, 1996.

[8] Choueiter, G., Zweig, G., Nguyen, P. “An Empirical Study of

Automatic Accent Classification,” ICASSP, 2008.

[9] Shen, W., Chen, N., Reynolds, D., “Dialect Recognition using

Adapted Phonetic Models,” Interspeech, 2008.

[10] Torres-Carrasquillo, P., Gleason, T., Reynolds, D., “Dialect

Identification using Gaussian Mixture Models,” Odyssey 2004.

[11] Huang, R., Hansen, J. “Unsupervised Discriminative Training with Application to Dialect Classification” IEEE TASLP

15(8):2444-2453, 2007.

[12] Zissman, M., Gleason, T., Rekart, D., Losiewicz, B., “Automatic Dialect Identification of Extemporaneous Conversational

Latin American Spanish Speech,” ICASSP 1995.

[13] Wells, J., “Accents of English: Beyond the British Isles,” Cambridge University Press, 1982.

[14] Callfriend

American

English

corpus:

http://www.ldc.upenn.edu/Catalog/docs/LDC96S46

[15] CSLU

foreign-accented

English

corpus:

http://www.cslu.ogi.edu/corpora/fae/

[16] Cieri, C. et al., “The Fisher Corpus: a Resource for the Next

Generations of Speech-to-Text,” LREC, 2004.

[17] Cieri, C. et al., “The Mixer Corpus of Multilingual, Multichannel Speaker Recognition Data,” LREC, 2004.

[18] NIST

Language

Recognition

Evaluation

2007:

http://www.nist.gov/speech/tests/lang/2007

[19] Reynolds, D. et al., “Automatic Language Recognition via

Spectral and Token Based Approaches,” in J. Benesty et al.

(eds.), Springer Handbook of Speech Processing, Springer,

2007.