Summary of some Rules of Probability with Examples


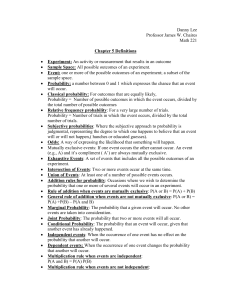

CEE 201L. Uncertainty, Design, and Optimization
Department of Civil and Environmental Engineering
Duke University
Henri P. Gavin
Spring, 2016

Introduction

Engineering analysis involves operations on input data (e.g., elastic modulus, wind speed, static loads, temperature, mean rainfall rate) to compute output data (e.g., truss bar forces, evaporation rates, stream flows, reservoir levels) in order to assess the safety, serviceability, efficiency, and suitability of the system being analyzed. There can be considerable uncertainty regarding the input data, but this uncertainty often can be characterized by a range of values (minimum and maximum expected values), an average expected value with some level of variability, or a distribution of values. For example, Figure 1 shows probability distributions of daily precipitation and daily maximum and minimum temperatures for Durham NC (27705) from June 1990 to June 2013. Clearly, any calculated prediction of the level of Lake Michie involves temperature and rainfall rate. Long-term predictions of lake levels should be reported in terms of expected values and uncertainty or variability, reflecting uncertainties in the input data. These calculations involve the propagation of uncertainty.

In this course you will propagate uncertainty to determine the probability of failure of the systems you design. This kind of failure analysis requires:

1. the identification of all possible modes of failure;
2. the evaluation of failure probabilities for each failure mode; and
3. combining these failure probabilities to determine an overall failure probability.

This, in turn, requires methods based on the theory of sets (e.g., the union and intersection of sets and their complements) and the theory of probability (e.g., the probability that an event belongs to a particular set among all possible sets). In the following sections probability concepts are illustrated using Venn diagrams.
In these diagrams the black rectangle represents the space of all possible events, with an associated probability of 1. The smaller rectangles represent subsets and their intersections. It is helpful to associate the areas of these rectangles with the probabilities of occurrence of the associated events: think of the black rectangle as having an area of 1 and the smaller rectangles as having areas less than 1.

[Figure 1. Probability density functions (P.D.F.) and cumulative distribution functions (C.D.F.) of daily precipitation (mm), minimum temperature, and maximum temperature (deg C) in Durham NC from June 1990 to June 2013.]

CC BY-NC-ND H.P. Gavin

Rules of Probability

Complementary Events

If the probability of event $A$ occurring is $P[A]$, then the probability of event $A$ not occurring, $P[A']$, is given by

$$P[A'] = 1 - P[A]. \qquad (1)$$

Example: This and the following examples pertain to traffic and accidents on a certain stretch of highway from 8am to 9am on work-days. If a year has 251 work-days, of which 226 have no accident (on this stretch of highway between 8am and 9am), the probability of a work-day with no accident is 226/251 = 0.90. The probability of a work-day with an accident (one or more) is 1 − 0.90 = 0.10.

Mutually Exclusive (Non-Intersecting) Events (ME)

Mutually exclusive events cannot occur together; one precludes the other. If $A$ and $B$ are mutually exclusive events, then

$$P[A \text{ and } B] = 0. \qquad (2)$$

All complementary events are mutually exclusive, but not vice versa.

Example: If $X$ represents the random flow of traffic in cars/minute, the following events are mutually exclusive: $0 < X \le 5$, $5 < X \le 10$, and $20 < X \le 50$.
The pair of events $X = 0$ and $20 < X \le 50$ are mutually exclusive but not complementary.

Collectively Exhaustive Events (CE)

If $A$ and $B$ are collectively exhaustive events, then the set $[A \text{ or } B]$ represents all possible events, and

$$P[A \text{ or } B] = 1. \qquad (3)$$

A pair of complementary events is both ME and CE. The probabilities of a set of ME and CE events must sum to 1.00.

Example: Let $Y$ represent the random number of accidents from 8am to 9am on a work-day. If $P[Y = 0] = 0.90$ (no accident) and $P[Y = 1] = 0.04$, then $P[Y > 1] = 1 - 0.90 - 0.04 = 0.06$.

Example: The following events are mutually exclusive and collectively exhaustive: $X = 0$, $0 < X \le 5$, $5 < X \le 10$, $10 < X \le 20$, $20 < X \le 50$, and $50 < X$. If each "<" above were replaced by "≤", the events would be CE but not ME.

Conditionally Dependent Events

If the occurrence (or non-occurrence) of an event $B$ affects the probability of event $A$, then $A$ and $B$ are conditionally dependent, and

$$P[A \mid B] = \frac{P[A \text{ and } B]}{P[B]}. \qquad (4)$$

In this equation the set $B$ can be thought of as a more restrictive set of all possibilities. The set $B$ is therefore the new set of all possibilities, and the probability of $A$ given $B$ is represented by the area of the intersection of $A$ and $B$ (the green rectangle) divided by the area of the set $B$ (the red rectangle).

Example: The term $P[Y > 1 \mid 10 < X \le 20]$ denotes the probability of more than one accident from 8am to 9am given that the traffic flow is more than 10 and at most 20 cars per minute.

If $A$ and $B$ are mutually exclusive, the occurrence of one implies the non-occurrence of the other, and therefore there is a dependence between $A$ and $B$: $P[A \mid B] = P[B \mid A] = 0$. Also, if $A$ and $B$ are mutually exclusive, $A$ is a subset of $B'$, so $P[B' \mid A] = 1$. So all mutually exclusive events (with nonzero probabilities) are conditionally dependent, but not vice versa.
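As a minimal sketch (Python chosen for illustration; the helper names `complement` and `conditional` are hypothetical, not from these notes), equations (1) and (4) and the sum-to-one property of ME and CE events can be checked with the numbers quoted above. The value 0.35 used for $P[20 < X \le 50]$ is taken from the traffic table in the Theorem of Total Probability section.

```python
from fractions import Fraction


def complement(p_a):
    """Eq. (1): probability that event A does not occur."""
    return 1 - p_a


def conditional(p_a_and_b, p_b):
    """Eq. (4): P[A|B] = P[A and B] / P[B], defined only when P[B] > 0."""
    if p_b <= 0:
        raise ValueError("P[B] must be positive")
    return p_a_and_b / p_b


# Complementary events: 226 of 251 work-days had no accident.
p_no_accident = Fraction(226, 251)
p_accident = complement(p_no_accident)
print(f"P[no accident] = {float(p_no_accident):.2f}")  # P[no accident] = 0.90
print(f"P[accident]    = {float(p_accident):.2f}")     # P[accident]    = 0.10

# ME and CE events sum to 1, so the last probability is the remainder.
p_y0, p_y1 = 0.90, 0.04
p_y_gt1 = 1 - (p_y0 + p_y1)
print(f"P[Y > 1] = {p_y_gt1:.2f}")  # P[Y > 1] = 0.06

# X = 0 and 20 < X <= 50 are ME, so P[A and B] = 0 and hence P[A|B] = 0.
print(conditional(0.0, 0.35))  # 0.0
```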
Example: The events $[Y > 1]$ and $[10 < X \le 20]$ are dependent but not mutually exclusive.

Statistically Independent Events

If the occurrence (or non-occurrence) of event $B$ has no bearing on the probability of $A$ occurring, then $A$ and $B$ are statistically independent:

$$P[A \mid B] = P[A] \quad \text{and} \quad P[B \mid A] = P[B]. \qquad (5)$$

Example: If $Z$ represents the random number of migrating geese above the same stretch of highway from 9am to 10am on a work-day, then $P[Y > 1 \mid Z > 20] = P[Y > 1]$.

Intersecting sets of events can be dependent or independent.

Example: The events $[Y > 1]$ and $[Z > 20]$ can occur on the same day (they are not mutually exclusive; they intersect) but are independent.

A pair of events (each with nonzero probability) cannot be both mutually exclusive and independent.

Example: The events $[Z \le 10]$ and $[Z > 20]$ are mutually exclusive, but the occurrence of one implies the non-occurrence of the other, and therefore a dependence.

Intersection of Events

• If $A$ and $B$ are conditionally dependent events, then (from equation (4)) the intersection is

$$P[A \text{ and } B] = P[A \cap B] = P[AB] = P[A \mid B] \cdot P[B]. \qquad (6)$$

Example: If $P[Y > 1 \mid 10 < X \le 20] = 0.05$ and $P[10 < X \le 20] = 0.25$, then $P[Y > 1 \cap 10 < X \le 20] = (0.05)(0.25) = 0.0125$.

• If $A$ and $B$ are independent events, $P[A \mid B] = P[A]$ and

$$P[A \text{ and } B] = P[A \cap B] = P[AB] = P[A] \cdot P[B]. \qquad (7)$$

Example: $P[Y > 1 \mid Z \le 20] = P[Y > 1]$. If $P[Z > 20] = 0.04$, then $P[Z \le 20] = 0.96$ and $P[Y > 1 \cap Z \le 20] = (0.06)(0.96) = 0.0576$.

• If $A$, $B$, and $C$ are dependent events,

$$P[A \cap B \cap C] = P[ABC] = P[A \mid BC] \cdot P[BC] = P[A \mid BC] \cdot P[B \mid C] \cdot P[C]. \qquad (8)$$

• If $A$, $B$, and $C$ are independent events, $P[A \mid BC] = P[A]$, $P[B \mid C] = P[B]$, and

$$P[A \cap B \cap C] = P[ABC] = P[A] \cdot P[B] \cdot P[C]. \qquad (9)$$

Union of Events

• For any two events $A$ and $B$,

$$P[A \text{ or } B] = P[A \cup B] = P[A] + P[B] - P[A \text{ and } B]. \qquad (10)$$

Example: $P[Y > 1 \cup 10 < X \le 20] = 0.06 + 0.25 - 0.0125 = 0.2975$.

• If $A$ and $B$ are mutually exclusive, $P[A \text{ and } B] = 0$ and

$$P[A \text{ or } B] = P[A \cup B] = P[A] + P[B]. \qquad (11)$$

Example: $P[Y = 0 \cup Y > 1] = 0.90 + 0.06 = 0.96$, the same as $1 - P[Y = 1]$.

• For any $n$ events $E_1, E_2, \ldots, E_n$,

$$P[E_1 \cup E_2 \cup \cdots \cup E_n] = 1 - P[E_1' \cap E_2' \cap \cdots \cap E_n']. \qquad (12)$$

• If $E_1, E_2, \ldots, E_n$ are $n$ mutually exclusive events,

$$P[E_1 \cup E_2 \cup \cdots \cup E_n] = P[E_1] + P[E_2] + \cdots + P[E_n]. \qquad (13)$$

Theorem of Total Probability

If $E_1, E_2, \ldots, E_n$ are $n$ mutually exclusive (ME) and collectively exhaustive (CE) events, and if $A$ is an event that shares the same space as the events $E_i$ ($P[A \mid E_i] > 0$ for at least some events $E_i$), then via the intersection of dependent events and the union of mutually exclusive events,

$$P[A] = P[A \cap E_1] + P[A \cap E_2] + \cdots + P[A \cap E_n]$$

$$P[A] = P[A \mid E_1] \cdot P[E_1] + P[A \mid E_2] \cdot P[E_2] + \cdots + P[A \mid E_n] \cdot P[E_n]. \qquad (14)$$

Example: The table below shows the probabilities of a number of events.

| traffic flow, $E_i$ | $P[E_i]$ | $P[Y > 1 \mid E_i]$ |
|---|---|---|
| $X = 0$ | 0.00 | 0.00 |
| $0 < X \le 10$ | 0.20 | 0.00 |
| $10 < X \le 20$ | 0.25 | 0.05 |
| $20 < X \le 50$ | 0.35 | 0.07 |
| $50 < X$ | 0.20 | 0.115 |

So,

$$P[Y > 1] = (0)(0) + (0.00)(0.20) + (0.05)(0.25) + (0.07)(0.35) + (0.115)(0.20) = 0.06.$$

Bayes' Theorem

For two dependent events $A$ and $B$, $P[A \mid B]$ is the fraction of the area of $B$ that is in $A$, and $P[A \mid B]\,P[B]$ is the area of $B$ in $A$. Likewise, $P[B \mid A]$ is the fraction of the area of $A$ that is in $B$, and $P[B \mid A]\,P[A]$ is the area of $A$ in $B$. Clearly, the area of $A$ in $B$ equals the area of $B$ in $A$:

$$P[A \text{ and } B] = P[A \mid B] \cdot P[B] = P[B \mid A] \cdot P[A].$$

So,

$$P[A \mid B] = \frac{P[B \mid A] \cdot P[A]}{P[B]}. \qquad (15)$$

If event $A$ depends on $n$ ME and CE events $E_1, \ldots, E_n$,

$$P[E_i \mid A] = \frac{P[A \mid E_i] \cdot P[E_i]}{P[A \mid E_1] \cdot P[E_1] + P[A \mid E_2] \cdot P[E_2] + \cdots + P[A \mid E_n] \cdot P[E_n]}. \qquad (16)$$

Example: Using the probabilities from the table above, given an observation of more than one accident, find the probability that the traffic was very heavy ($50 < X$):

$$P[50 < X \mid Y > 1] = \frac{P[Y > 1 \mid 50 < X]\, P[50 < X]}{P[Y > 1]} = \frac{(0.115)(0.20)}{0.06} = 0.383.$$

Note that if $A$ is a subset of $B$, then $P[B \mid A] = 1$ and $P[A \mid B] = P[A]/P[B]$. Note also that $P[A \mid B] \approx P[B \mid A]$ if and only if $P[A] \approx P[B]$.

Bernoulli sequence of independent events with probability p

A Bernoulli sequence is a sequence of trials in each of which an event may or may not occur. In a Bernoulli sequence the occurrence of an event in one trial is independent of its occurrence in any other trial. Given a sequence of trials, we may wish to know the probability of multiple occurrences in a given number of trials. This depends on the probability of the event in any single trial and on the number of ways the multiple events can occur in the given number of trials.

Example: Given that the probability of an accident on any given work-day is 0.10, and assuming that the probability of an accident today in no way depends on the occurrence of an accident on any other day, what is the probability of two days with an accident (and three days with zero accidents) in one work-week?

Given: $P[\text{one or more accidents on any work-day}] = p = 0.10$.

From complementary events: $P[\text{no accident on any work-day}] = 1 - p = 1 - 0.10 = 0.90$.

From the intersection of independent events: $P[\text{an accident on any two work-days}] = (p)(p) = (0.10)^2 = 0.010$.

From the intersection of independent events: $P[\text{no accident on any three work-days}] = (1 - p)(1 - p)(1 - p) = (1 - 0.10)^3 = 0.729$.

From the intersection of independent events: $P[\text{an accident on any two work-days} \cap \text{no accident on any three work-days}] = p^2 (1 - p)^{5-2} = (0.010)(0.729)$.

From the union of mutually exclusive events (each choice of two days is a distinct outcome), the number of ways to pick two days out of five is
$$4 + 3 + 2 + 1 = \frac{5!}{2!\,3!} = \frac{120}{(2)(6)} = 10,$$

so

$$P[\text{two days out of five with an accident}] = (10)(0.010)(0.729) = 0.0729.$$

This is called the binomial distribution:

$$P[n \text{ events out of } m \text{ attempts}] = \frac{m!}{n!\,(m - n)!}\, p^n (1 - p)^{m - n}. \qquad (17)$$

As a special case, $P[0 \text{ events out of } m \text{ attempts}] = (1 - p)^m$. The expected number of events out of $m$ attempts is $pm$.

The more data we have in determining the probability of an event, the more accurate our calculations will be. If $n$ events are observed in $m$ attempts, $p \approx n/m$, and this estimate of $p$ becomes more precise as $m$ (and $n$) get bigger.

Poisson process with mean occurrence rate ν (mean return period T = 1/ν)

For a Bernoulli sequence with a small event probability $p$ and a large number of trials, the binomial distribution approaches the Poisson distribution, in which the event probability $p$ is replaced by a mean occurrence rate, $\nu$, or a return period, $T = 1/\nu$:

$$P[n \text{ events during time } t] = \frac{(t/T)^n}{n!} \exp(-t/T). \qquad (18)$$

Special cases:

$$P[\text{time between two events} > t] = P[0 \text{ events during time } t] = e^{-t/T}$$

$$P[\text{time between two events} \le t] = 1 - P[0 \text{ events during time } t] = 1 - e^{-t/T}$$

Example: Given that the probability of an accident on any work-day is 0.10, the return period for work-days with accidents is $1/0.10 = 10$ work-days. On average, drivers would expect a day with one or more accidents every other work-week. Assuming such accidents form a Poisson process,

$$P[\text{two work-days with accidents in five days}] = \frac{(5/10)^2}{2!} \exp(-5/10) = 0.0758,$$

only slightly more than the 0.0729 obtained assuming a Bernoulli sequence. Also,

$$P[\text{time between work-days with accidents} > T \text{ work-days}] = P[0 \text{ accidents in } T \text{ work-days}] = e^{-T/T} = e^{-1} = 0.368$$

$$P[\text{one or more accidents in } T \text{ work-days}] = 1 - e^{-T/T} = 1 - e^{-1} = 0.632$$

The Normal distribution

The figures below plot $P[n \text{ days with accidents in } m \text{ work-days}]$ vs.
$n$, the number of days with accidents, for $m = 5$, 20, and 50 work-days. As $m$ and $t$ become large, the binomial distribution and the Poisson distribution approach the Normal distribution with a mean value of $pm$ or $t/T$ and a standard deviation of $\sqrt{pm(1-p)}$ (approximately $\sqrt{pm}$ when $p$ is small) or $\sqrt{t/T}$.

[Figure: Binomial, Poisson, and Normal probabilities vs. the number of days with multi-car accidents in one work-week ($m = 5$), four work-weeks ($m = 20$), and ten work-weeks ($m = 50$).]

The Exponential distribution

Consider the random time $T_1$ between two random events in a Poisson process:

$$P[T_1 > t] = P[\text{no events in time } t] = \exp(-t/T)$$

The complementary event is

$$P[T_1 \le t] = 1 - P[\text{no events in time } t] = 1 - \exp(-t/T)$$

This is the cumulative distribution function of the exponential distribution, which describes the inter-event time, or the time between successive events.

Examples

1. What is the probability of flipping at least one head in three coin tosses?

(a) $P[\text{a H on any toss}] = 0.5$, so $P[\text{a H in 3 tosses}] = 0.5 + 0.5 + 0.5 = 1.5 > 1$??? Clearly wrong: probabilities must be between 0 and 1! A H on the first toss is not mutually exclusive of a H on any other toss.

(b) Instead, use the union of multiple mutually exclusive events. There are seven mutually exclusive ways to get a H in three tosses: $(H_1 T_2 T_3)$; $(T_1 H_2 T_3)$; $(T_1 T_2 H_3)$; $(H_1 H_2 T_3)$; $(H_1 T_2 H_3)$; $(T_1 H_2 H_3)$; $(H_1 H_2 H_3)$. The probability of each is the same, $(0.5)^3$, so $P[\text{a H in 3 tosses}] = 7 \cdot (0.5)^3 = 0.875$.

(c) In fact, there is only one way not to get a head in three tosses: $(T_1 T_2 T_3)$. Making use of complementary probabilities and the intersection of independent events (tossing three T),

$$P[\text{a H in 3 tosses}] = 1 - P[0 \text{ H in 3 tosses}] = 1 - (0.5)^3 = 0.875.$$
(d) Invoking the binomial distribution, there are three mutually exclusive groups of outcomes with a H in three tosses:

$$P[\text{a H in 3 tosses}] = P[1 \text{ H in 3 tosses}] + P[2 \text{ H in 3 tosses}] + P[3 \text{ H in 3 tosses}] = 0.375 + 0.375 + 0.125 = 0.875.$$

Make a Venn diagram of three intersecting circles to help illustrate these four solutions.

2. Consider a typical year having 36 days with rain. The probability of rain on any given day is 0.10. The return period for days with rain is 10 days. Use the binomial and Poisson distributions to find $P[\text{one rainy day in 31 days}]$ and $P[\text{one rainy day in 60 days}]$.

| | $P[\text{one rainy day in 31 days}]$ | $P[\text{one rainy day in 60 days}]$ |
|---|---|---|
| Binomial | $\frac{31!}{1!\,30!}(0.1)^1(1 - 0.1)^{30} = 0.131$ | $\frac{60!}{1!\,59!}(0.1)^1(1 - 0.1)^{59} = 0.012$ |
| Poisson | $\frac{(31/10)^1}{1!}\exp(-31/10) = 0.140$ | $\frac{(60/10)^1}{1!}\exp(-60/10) = 0.015$ |

3. What is the probability of exactly one event in one day for a Poisson process with a return period of $T$ days?

$$P[\text{one occurrence in one day}] = \frac{(1/T)^1}{1!}\exp(-1/T) = (1/T)\exp(-1/T)$$

For $T \gg 1$ day, $P[\text{one occurrence in one day}] \approx 1/T$.

4. What is the probability of exactly one event in $T$ days for a Poisson process with a return period of $T$ days?

$$P[\text{one occurrence in } T \text{ days}] = \frac{(T/T)^1}{1!}\exp(-T/T) = \exp(-1) = 0.368$$

5. What is the probability of one or more events in $T$ days for a Poisson process with a return period of $T$ days?

$$\begin{aligned} P[\text{one or more occurrences in } T \text{ days}] &= 1 - P[\text{no occurrences in } T \text{ days}] \\ &= 1 - \frac{(T/T)^0}{0!}\exp(-T/T) \\ &= 1 - \exp(-1) \\ &= 0.632 \end{aligned}$$

6. What is the return period $T$ of a Poisson process with a 2% probability of exceedance in fifty years?

$$\begin{aligned} 0.02 &= P[n > 0] \\ &= 1 - P[n = 0] \\ &= 1 - \frac{(50/T)^0}{0!}\exp(-50/T) \\ \ln(1 - 0.02) &= -50/T \\ T &= 2474.9 \text{ years} \end{aligned}$$

7. In the past 50 years there have been two large earthquakes ($M > 6$) in the LA area. What is the probability of a large earthquake in the next 15 years, assuming the occurrence of earthquakes is a Poisson process?
The mean return period is $T = 50/2 = 25$ years.

$$P[\text{one or more EQ in 15 years}] = 1 - P[\text{no EQ in 15 years}] = 1 - \frac{(15/25)^0}{0!}\exp(-15/25) = 0.451$$

(In actuality, the occurrence of an earthquake increases the probability of further earthquakes for a year or two.)

8. Consider the following events, $E$: electrical power failure, and $F$: flood, with probabilities $P[E] = 0.2$, $P[F] = 0.1$, and $P[E \mid F] = 0.1$. Note that $P[E] > P[E \mid F]$. So,

$$P[E \cap F] = P[E \mid F] \cdot P[F] = (0.1)(0.1) = 0.01.$$

If $E$ and $F$ were assumed to be independent, then the probability of $E$ and $F$ would be calculated as

$$P[E \cap F] = P[E] \cdot P[F] = (0.2)(0.1) = 0.02,$$

twice the value that accounts for the dependence. Neglecting the conditional dependence of random events can lead to large errors.

9. Assume that during rush-hour one out of ten-thousand drivers is impaired by alcohol. Also assume that breathalyzers have a 2% false-positive rate and a 0% false-negative rate. If a police officer stops a car at random and finds that the driver tests positive for alcohol, what is the likelihood that the officer has correctly identified an impaired driver?

Assign symbols to the events: $D$ = drunk, $D'$ = sober, $B$ = breathalyzer positive, $B'$ = breathalyzer negative.

Given: $P[D] = 0.0001$, $P[B \mid D'] = 0.02$, $P[B' \mid D] = 0$. Find: $P[D \mid B]$.

$D$ and $D'$ are complementary: $P[D'] = 1 - P[D] = 0.9999$.

$(B' \mid D)$ and $(B \mid D)$ are complementary: $P[B \mid D] = 1 - P[B' \mid D] = 1$.

Bayes' Theorem with the Theorem of Total Probability:

$$P[D \mid B] = \frac{P[B \mid D] \cdot P[D]}{P[B]} = \frac{P[B \mid D] \cdot P[D]}{P[B \mid D] \cdot P[D] + P[B \mid D'] \cdot P[D']}$$

$$= \frac{(1)(0.0001)}{(1)(0.0001) + (0.02)(0.9999)} = \frac{(1)(0.0001)}{0.020098} = 0.00498 \approx 0.5 \text{ percent}$$

This surprisingly low probability is an example of the base rate fallacy, or false positive paradox, which arises when the event being tested for ("the driver is drunk" in this example) occurs with very low probability.
Seen another way, the test changes the probability of finding a drunk driver from one in ten-thousand to one in two-hundred.

10. Ang+Tang, v.1, example 2.27, p. 57. Consider emission standards for automobiles and industry in terms of three events:

• $I$: industry meets standards;
• $A$: automobiles meet standards; and
• $R$: there has been an acceptable reduction in pollution.

Given: $P[I] = 0.75$; $P[A] = 0.60$; $A$ and $I$ are independent events; $P[R \mid AI] = 1$; $P[R \mid AI'] = 0.80$; $P[R \mid A'I] = 0.80$; and $P[R \mid A'I'] = 0$. Find: $P[R]$.

The events $AI$, $AI'$, $A'I$, $A'I'$ are CE and ME. So, from the Theorem of Total Probability,

$$P[R] = P[R \mid AI] \cdot P[AI] + P[R \mid AI'] \cdot P[AI'] + P[R \mid A'I] \cdot P[A'I] + P[R \mid A'I'] \cdot P[A'I']$$

Since $A$ and $I$ are independent,

• $P[AI] = (0.60)(0.75) = 0.45$;
• $P[AI'] = (0.60)(0.25) = 0.15$;
• $P[A'I] = (0.40)(0.75) = 0.30$;
• $P[A'I'] = (0.40)(0.25) = 0.10$.

So, $P[R] = (1.0)(0.45) + (0.80)(0.15) + (0.80)(0.30) + (0)(0.10) = 0.81$.

If it turns out that pollution is not reduced ($R'$), what is the probability that it is entirely due to a failure to control automobile exhaust ($A'I$)?

$$P[A'I \mid R'] = \frac{P[R' \mid A'I] \cdot P[A'I]}{P[R']} = \frac{(1 - 0.8)(0.3)}{(1 - 0.81)} = 0.32$$

If it turns out that pollution is not reduced ($R'$), what is the probability that automobile exhaust was not controlled ($A'$)? The events $I$ and $I'$ are complementary and the events $A'I$ and $A'I'$ are mutually exclusive.

$$P[A' \mid R'] = P[A'I \mid R'] + P[A'I' \mid R'] = \frac{P[R' \mid A'I] \cdot P[A'I]}{P[R']} + \frac{P[R' \mid A'I'] \cdot P[A'I']}{P[R']} = \frac{(1 - 0.8)(0.3)}{(1 - 0.81)} + \frac{(1 - 0)(0.10)}{(1 - 0.81)} = 0.84$$

If it turns out that pollution is not reduced ($R'$), what is the probability that industry emissions were not controlled ($I'$)? The events $A$ and $A'$ are complementary and the events $AI'$ and $A'I'$ are mutually exclusive.
$$P[I' \mid R'] = P[AI' \mid R'] + P[A'I' \mid R'] = \frac{P[R' \mid AI'] \cdot P[AI']}{P[R']} + \frac{P[R' \mid A'I'] \cdot P[A'I']}{P[R']} = \frac{(1 - 0.8)(0.15)}{(1 - 0.81)} + \frac{(1 - 0)(0.10)}{(1 - 0.81)} = 0.68$$

So, if pollution is not reduced, it is more likely that the cause involves automobiles not meeting standards than industry not meeting standards.

11. Ang+Tang, v.1, example 2.25, p. 55. Consider damaging storms causing flooding and storm damage in a county and within a city. Suppose that the probability of a storm in the county is 20% in any given year. During such a storm: the probability of a flood in the county is 25%; the probability of a flood in the city during a flood in the county is 5%; and if there is no flood in the county, the probability of storm damage in the city is 10%. Assume floods happen only during storms, and that if a flood happens in the city, property will be damaged with absolute certainty. If there is a flood in the county without a flood in the city, the probability of storm damage in the city is 15%. There can be no flood in the city without a flood in the county. What is the annual probability of storm damage in the city?

In terms of events:

• $S$: storm in the county
• $F$: flood in the county (happens only if there is a storm in the county)
• $W$: flood in the city (happens only if there is a flood in the county)
• $D$: storm damage in the city

the probabilities above (and their complements) can be summarized in the table below.

| event | probabilities |
|---|---|
| storm | $P[S] = 0.20$; $P[S'] = 0.80$ |
| flood in county | $P[F \mid S] = 0.25$; $P[F' \mid S] = 0.75$; $P[F \mid S'] = 0$; $P[F' \mid S'] = 1$ |
| flood in city | $P[W \mid F] = 0.05$; $P[W' \mid F] = 0.95$; $P[W \mid F'] = 0$; $P[W' \mid F'] = 1$ |
| damage in city | $P[D \mid W] = 1$; $P[D \mid SFW'] = 0.15$; $P[D \mid SF'] = 0.10$; $P[D \mid S'] = 0$ |

The events $(SF)$, $(SF')$, and $(S')$ are CE and ME.
So, from the Theorem of Total Probability,

$$P[D] = P[D \mid SF] \cdot P[SF] + P[D \mid SF'] \cdot P[SF'] + P[D \mid S'] \cdot P[S']$$

The conditional probabilities needed to solve this problem can be found from further application of the Theorem of Total Probability and the intersection of dependent events.

• If there is a storm, a flood in the county, and a flood in the city, damage in the city will certainly occur ($P[D \mid SFW] = 1$), and it might occur even if there is no flood in the city ($P[D \mid SFW'] = 0.15$):

$$P[D \mid SF] = P[D \mid SFW] \cdot P[W \mid SF] + P[D \mid SFW'] \cdot P[W' \mid SF] = (1.0)(0.05) + (0.15)(0.95) = 0.1925$$

• $P[SF] = P[F \mid S] \cdot P[S] = (0.25)(0.20) = 0.05$ (intersection of dependent events)
• $P[D \mid SF'] = 0.10$ (given)
• $P[SF'] = P[F' \mid S] \cdot P[S] = (0.75)(0.20) = 0.15$ (intersection of dependent events)
• $P[D \mid S'] = 0$ (given)

So, $P[D] = (0.1925)(0.05) + (0.10)(0.15) + (0)(0.8) = 0.025$.

Note that while damage is about twice as likely when there is a flood in the county as when there is no flood in the county ($P[D \mid SF] \approx 0.19$ vs. $P[D \mid SF'] = 0.10$), the probability of damage and a flood in the county is less than the probability of damage and no flood in the county:

$$P[D \cap SF] = P[D \mid SF] \cdot P[SF] = (0.1925)(0.05) \approx 0.010$$

$$P[D \cap SF'] = P[D \mid SF'] \cdot P[SF'] = (0.10)(0.15) = 0.015$$

[Figure: Venn diagram of the ME and CE events $SF$ ($P[SF] = 0.05$), $SF'$ ($P[SF'] = 0.15$), and $S'$ ($P[S'] = 0.8$), with the events $W$ and $D$.]
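The traffic-flow calculations in the Rules of Probability section (equations (6), (10), (14), and (16)) can be reproduced with a short Python sketch. The dictionary layout and the helper names `total_probability` and `bayes` are illustrative assumptions; the probabilities are those of the traffic table.

```python
# Traffic-flow events E_i (ME and CE), each mapped to (P[E_i], P[Y > 1 | E_i]),
# as listed in the traffic table.
table = {
    "X = 0": (0.00, 0.00),
    "0 < X <= 10": (0.20, 0.00),
    "10 < X <= 20": (0.25, 0.05),
    "20 < X <= 50": (0.35, 0.07),
    "50 < X": (0.20, 0.115),
}

# The E_i are collectively exhaustive, so their probabilities sum to 1.
assert abs(sum(p for p, _ in table.values()) - 1.0) < 1e-12


def total_probability(table):
    """Eq. (14): P[A] = sum over i of P[A|E_i] * P[E_i]."""
    return sum(p_e * p_a_given_e for p_e, p_a_given_e in table.values())


def bayes(table, key):
    """Eq. (16): P[E_k|A] = P[A|E_k] P[E_k] / sum_i P[A|E_i] P[E_i]."""
    p_e, p_a_given_e = table[key]
    return p_a_given_e * p_e / total_probability(table)


p_y_gt1 = total_probability(table)
print(f"P[Y > 1] = {p_y_gt1:.4f}")  # P[Y > 1] = 0.0600

# Eq. (6): intersection of dependent events.
p_e, p_a_given_e = table["10 < X <= 20"]
p_both = p_a_given_e * p_e
print(f"P[Y > 1 and 10 < X <= 20] = {p_both:.4f}")  # ... = 0.0125

# Eq. (10): union of two intersecting events.
p_union = p_y_gt1 + p_e - p_both
print(f"P[Y > 1 or 10 < X <= 20] = {p_union:.4f}")  # ... = 0.2975

print(f"P[50 < X | Y > 1] = {bayes(table, '50 < X'):.3f}")  # ... = 0.383
```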
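A sketch of the binomial distribution (17), the Poisson distribution (18), and the Normal approximation for the work-day accident example ($p = 0.10$, $T = 10$ work-days). The helper names are hypothetical, `math.comb` requires Python 3.8 or later, and the Normal standard deviation is taken as $\sqrt{pm(1-p)}$, the binomial value.

```python
import math


def binomial_pmf(n, m, p):
    """Eq. (17): probability of n events in m independent trials."""
    return math.comb(m, n) * p**n * (1 - p) ** (m - n)


def poisson_pmf(n, t, T):
    """Eq. (18): probability of n events in time t for return period T."""
    return (t / T) ** n * math.exp(-t / T) / math.factorial(n)


def normal_pdf(x, mean, std):
    """Normal density, used as a continuous approximation at integer x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


p = 0.10   # P[one or more accidents on a work-day]
T = 1 / p  # return period in work-days (10)

# Two days with accidents in one five-day work-week.
print(f"binomial: {binomial_pmf(2, 5, p):.4f}")  # binomial: 0.0729
print(f"Poisson:  {poisson_pmf(2, 5, T):.4f}")   # Poisson:  0.0758

# Compare the three models at the integer part of the mean
# for one, four, and ten work-weeks.
for m in (5, 20, 50):
    mean = p * m
    std = math.sqrt(p * m * (1 - p))
    n = int(mean)
    print(m, n,
          round(binomial_pmf(n, m, p), 4),
          round(poisson_pmf(n, m, T), 4),
          round(normal_pdf(n, mean, std), 4))
```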
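Example 1 can be checked by brute-force enumeration. This sketch lists all $2^3$ equally likely outcomes with `itertools.product` and compares approaches (b), (c), and (d).

```python
from itertools import product
from math import comb

# All 2**3 equally likely outcomes of three fair coin tosses.
outcomes = list(product("HT", repeat=3))
p_each = 0.5 ** 3

# (b) Union of the mutually exclusive outcomes containing at least one H.
with_head = [o for o in outcomes if "H" in o]
p_union = len(with_head) * p_each

# (c) Complement of the single outcome (T, T, T).
p_complement = 1 - 0.5 ** 3

# (d) Sum of binomial probabilities for exactly 1, 2, and 3 heads.
p_binomial = sum(comb(3, k) * 0.5**k * 0.5 ** (3 - k) for k in (1, 2, 3))

print(len(with_head), p_union, p_complement, p_binomial)  # 7 0.875 0.875 0.875
```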
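Examples 2 through 7 reduce to evaluating equations (17) and (18). The sketch below (hypothetical helper names; Python 3.8 or later for `math.comb`) reproduces the tabulated rain probabilities and the return-period results; the choice $T = 25$ for Examples 4 and 5 is arbitrary, since any return period gives the same answer.

```python
import math


def binomial_pmf(n, m, p):
    """Eq. (17): probability of n events in m independent trials."""
    return math.comb(m, n) * p**n * (1 - p) ** (m - n)


def poisson_pmf(n, t, T):
    """Eq. (18): probability of n events in time t for return period T."""
    return (t / T) ** n * math.exp(-t / T) / math.factorial(n)


# Example 2: one rainy day in 31 and in 60 days (p = 0.10, T = 10 days).
for days in (31, 60):
    print(days, round(binomial_pmf(1, days, 0.10), 3),
          round(poisson_pmf(1, days, 10), 3))
# 31 0.131 0.14
# 60 0.012 0.015

# Examples 4 and 5: exactly one event, and one or more events, in T days.
T = 25.0  # arbitrary; the results do not depend on T
print(round(poisson_pmf(1, T, T), 3), round(1 - poisson_pmf(0, T, T), 3))  # 0.368 0.632

# Example 6: solve 0.02 = 1 - exp(-50/T) for the return period T.
T_design = -50 / math.log(1 - 0.02)
print(round(T_design, 1))  # 2474.9

# Example 7: two large earthquakes in 50 years gives T = 25 years.
p_eq_15 = 1 - poisson_pmf(0, 15, 50 / 2)
print(round(p_eq_15, 3))  # 0.451
```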
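Example 9 is an instance of equation (16) with the two ME and CE events $D$ and $D'$. A minimal sketch; `posterior` is a hypothetical helper name, not from the notes.

```python
def posterior(prior, p_pos_given_true, p_pos_given_false):
    """Bayes' theorem (15), with P[B] from the theorem of total probability
    (14), for a binary condition and a positive test result."""
    p_pos = p_pos_given_true * prior + p_pos_given_false * (1 - prior)
    return p_pos_given_true * prior / p_pos


p_D = 0.0001             # P[D]: one driver in ten-thousand is impaired
p_B_given_D = 1 - 0.0    # P[B|D] = 1 - P[B'|D], with 0% false negatives
p_B_given_sober = 0.02   # P[B|D'], the 2% false-positive rate

p_D_given_B = posterior(p_D, p_B_given_D, p_B_given_sober)
print(f"P[D|B] = {p_D_given_B:.5f}")        # P[D|B] = 0.00498
print(f"about 1 in {1 / p_D_given_B:.0f}")  # about 1 in 201
```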
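Example 10 can be sketched by enumerating the four ME and CE outcomes $AI$, $AI'$, $A'I$, $A'I'$, with joint probabilities formed from the independence of $A$ and $I$. The tuple keys (whether automobiles and industry meet standards) are an illustrative encoding, not from Ang and Tang.

```python
p_A, p_I = 0.60, 0.75

# P[R | outcome], keyed by (A met?, I met?) for the four ME and CE outcomes.
p_R_given = {(True, True): 1.0, (True, False): 0.80,
             (False, True): 0.80, (False, False): 0.0}


def p_joint(a, i):
    """Joint probability of one of AI, AI', A'I, A'I' (independence, eq. 7)."""
    return (p_A if a else 1 - p_A) * (p_I if i else 1 - p_I)


# Theorem of Total Probability, eq. (14).
p_R = sum(p_R_given[k] * p_joint(*k) for k in p_R_given)
p_notR = 1 - p_R


def p_given_notR(outcomes):
    """P[union of ME outcomes | R'] via Bayes' theorem (15), summed."""
    return sum((1 - p_R_given[k]) * p_joint(*k) for k in outcomes) / p_notR


print(round(p_R, 2))                                            # 0.81
print(round(p_given_notR([(False, True)]), 2))                  # 0.32, A'I
print(round(p_given_notR([(False, True), (False, False)]), 2))  # 0.84, A'
print(round(p_given_notR([(True, False), (False, False)]), 2))  # 0.68, I'
```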
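Example 11 chains the intersection of dependent events with two applications of the Theorem of Total Probability. A minimal sketch; the variable names are illustrative.

```python
p_S = 0.20               # storm in the county
p_F_given_S = 0.25       # flood in the county, given a storm
p_W_given_F = 0.05       # flood in the city, given a flood in the county
p_D_given_W = 1.0        # damage is certain if the city floods
p_D_given_SFnotW = 0.15  # damage with a county flood but no city flood
p_D_given_SnotF = 0.10   # damage in a storm without a county flood
p_D_given_notS = 0.0     # no damage without a storm

# Total probability over W and W', given S and F (P[W|SF] = P[W|F]).
p_D_given_SF = p_D_given_W * p_W_given_F + p_D_given_SFnotW * (1 - p_W_given_F)

# Intersections of dependent events, eq. (6).
p_SF = p_F_given_S * p_S
p_SnotF = (1 - p_F_given_S) * p_S
p_notS = 1 - p_S

# Total probability over the ME and CE events SF, SF', S'.
p_D = p_D_given_SF * p_SF + p_D_given_SnotF * p_SnotF + p_D_given_notS * p_notS

print(round(p_D_given_SF, 4))  # 0.1925
print(round(p_D, 3))           # 0.025
```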