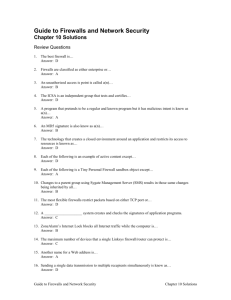

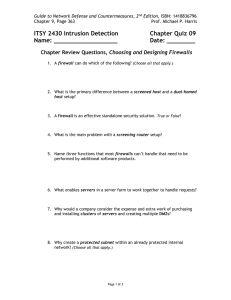

a firewall model for testing user
