Lecture 3: Statistical vs. Systematic Errors, Rejection of Data

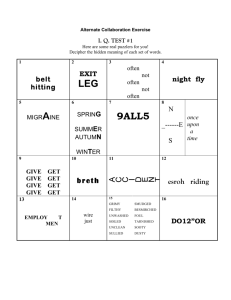


PHYSICS 2150

EXPERIMENTAL MODERN PHYSICS

Lecture 3

Statistical vs. Systematic Errors, Rejection of Data; Weighted Averages

SO FAR WE HAVE DISCUSSED “STATISTICAL UNCERTAINTY”. NOW WHAT ABOUT THE UNCERTAINTY INVOLVED WITH THE MEASUREMENT ITSELF? (SYSTEMATIC UNCERTAINTY)

ANALYZING ERROR ON A QUANTITY

• You are in a car on a bumpy road on a rainy day, and are trying to measure the length of the moving windshield wiper with a shaky ruler.

• You measure it 37 times.

• The histogram of your results is at right. It doesn’t look very Gaussian. But, with only 37 measurements plotted in lots of bins, distributions often look ratty.

[Figure: histogram of the 37 measurements, with the 1σ error on the mean marked]

ANALYZING ERROR ON A QUANTITY

• The 1σ uncertainty on the mean is 1.1 cm.

• If we assume the underlying distribution is nevertheless Gaussian and centered on the true value, we can turn this into a confidence level: the wiper length is between 55.1 and 57.3 cm with 68% confidence.

[Figure: the same histogram, with the 1σ error on the mean marked]

ANALYZING ERROR ON A QUANTITY

• Stop the car, go outside and measure the wiper properly: it is 61.0 cm long!

• We said the wiper length was between 55.1 and 57.3 cm with 68% confidence.

• We are off by over 4 sigma. This is shockingly unlikely!

• Clearly there is a systematic shift. The distribution does not center on the true value.

• More data points won’t get us any closer to the true value. We need to make better measurements, or find the source of the error and apply a correction to the data.

STATISTICAL (RANDOM) VS. SYSTEMATIC UNCERTAINTIES

| Statistical | Systematic |
|---|---|
| No preferred direction | Bias on the measurement: only one direction |
| Changes with each data point: taking more data reduces the uncertainty on the mean | Stays the same for each measurement: more data won’t help you |
| Gaussian model is usually good (except counting experiments) | Gaussian model is usually terrible, but we use it anyway |

STATISTICAL (RANDOM) VS. SYSTEMATIC UNCERTAINTIES

• Keep statistical and systematic errors separate. Report results as something like:

g = [965 ± 30(stat) ± 12(sys)] cm/s²

• Add in quadrature (note that this assumes a Gaussian distribution) to compare with known values:

g = [965 ± 32(total)] cm/s²
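The step from the two separate uncertainties to the single total can be checked in a few lines. This is a minimal sketch; the helper name `combine_in_quadrature` is made up for illustration, and only the numbers 30 and 12 come from the slide.

```python
import math


def combine_in_quadrature(*uncertainties):
    """Combine independent (assumed Gaussian) uncertainties in quadrature."""
    return math.sqrt(sum(u * u for u in uncertainties))


# Values from the slide, in cm/s^2
stat_unc = 30.0
sys_unc = 12.0

total_unc = combine_in_quadrature(stat_unc, sys_unc)
print(f"g = [965 ± {total_unc:.0f}(total)] cm/s^2")
```

Because the larger term dominates a quadrature sum, the total is only slightly bigger than the statistical uncertainty alone.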

EXAMPLE: E/M EXPERIMENT

• Electrons accelerated with 40, 60, or 80 V

• Magnetic field perpendicular to velocity

• Lorentz force $F = e v B$ forces electrons into circular paths

• Centripetal force: $F = \dfrac{m v^2}{r}$

• Electron energy: $\dfrac{1}{2} m v^2 = eV$

• Magnetic field of Helmholtz coils is $B = \dfrac{8 \mu_0 N I}{a \sqrt{125}}$

• This gives $\dfrac{e}{m} = 3.906 \, \dfrac{V a^2}{\mu_0^2 N^2 I^2 r^2}$

$$\frac{e}{m} = 3.906 \, \frac{V a^2}{\mu_0^2 N^2 I^2 r^2}$$

| Variable | Definition | How Determined |
|---|---|---|
| $V$ | Accelerating potential | measured |
| $a$ | Helmholtz coil radius | measured |
| $\mu_0$ | Permeability of free space | constant |
| $N$ | Number of turns in each coil | given |
| $r$ | Electron beam radius | given |
| $I = I_T - I_0$ | Net current | calculated |
| $I_T$ | Total current | measured |
| $I_0$ | Cancellation current | measured |

Want e/m with systematic and statistical uncertainties
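The factor 3.906 is just 2 · 125 / 64, which follows from combining the Lorentz force, the centripetal force, the energy relation and the Helmholtz field. The sketch below checks that the closed-form expression agrees with computing B first and then using e/m = 2V / (B² r²). The function names are illustrative, and `N_TURNS = 72` is a placeholder assumption, since the slides do not state the number of turns.

```python
import math

MU0 = 4e-7 * math.pi  # permeability of free space, T m / A


def helmholtz_field(n_turns, current, coil_radius):
    """Field at the center of a Helmholtz pair: B = 8 mu0 N I / (a sqrt(125))."""
    return 8 * MU0 * n_turns * current / (coil_radius * math.sqrt(125))


def e_over_m(voltage, coil_radius, n_turns, current, beam_radius):
    """Closed-form expression from the slide (SI units, result in C/kg)."""
    return (3.906 * voltage * coil_radius**2
            / (MU0**2 * n_turns**2 * current**2 * beam_radius**2))


N_TURNS = 72  # ASSUMPTION: placeholder value, not given in the slides
V, a, I, r = 40.0, 0.332, 1.75, 0.0572  # volts, meters, amperes, meters

# Route 1: compute B, then e/m = 2V / (B^2 r^2)
B = helmholtz_field(N_TURNS, I, a)
via_field = 2 * V / (B**2 * r**2)

# Route 2: the closed-form expression
closed_form = e_over_m(V, a, N_TURNS, I, r)

print(f"ratio of the two routes: {via_field / closed_form:.5f}")
```

The two routes differ only by the rounding of 250/64 = 3.90625 to 3.906.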

DATA FOR e/m

$$\frac{e}{m} = 3.906 \, \frac{V a^2}{\mu_0^2 N^2 I^2 r^2}$$

Three measurements of $I_0$ = {0.17, 0.20, 0.23} A → $I_0 = 0.20 \pm 0.03$ A

Compute e/m for 14 different combinations of V and pin radii

| Entry | I (A) | r (m) | V (V) | e/m (10¹¹ C/kg) |
|---|---|---|---|---|
| 1 | 1.75 | 0.0572 | 40 | 2.08 |
| 2 | 1.99 | 0.0509 | 40 | 2.08 |
| 3 | 2.28 | 0.0447 | 40 | 2.00 |
| 4 | 2.67 | 0.0384 | 40 | 1.98 |
| 5 | 3.20 | 0.0321 | 40 | 1.97 |
| 6 | 2.20 | 0.0572 | 60 | 1.97 |
| 7 | 2.49 | 0.0509 | 60 | 1.94 |
| 8 | 2.85 | 0.0447 | 60 | 1.92 |
| 9 | 3.34 | 0.0384 | 60 | 1.90 |
| 10 | 4.03 | 0.0321 | 60 | 1.86 |
| 11 | 2.58 | 0.0572 | 80 | 1.91 |
| 12 | 2.90 | 0.0509 | 80 | 1.91 |
| 13 | 3.30 | 0.0447 | 80 | 1.88 |
| 14 | 3.39 | 0.0384 | 80 | 1.85 |

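E/M: STATISTICAL UNCERTAINTY

• Mean: $\bar{x} = \dfrac{1}{N} \sum_{i=1}^{N} x_i = 1.946 \cdot 10^{11}$ C/kg

• Standard deviation: $\sigma_x = \left( \dfrac{1}{N-1} \sum_{i=1}^{N} (x_i - \bar{x})^2 \right)^{1/2} = 0.072 \cdot 10^{11}$ C/kg

• Uncertainty on mean: $\sigma_{\bar{x}} = \dfrac{\sigma_x}{\sqrt{N}} = 0.019 \cdot 10^{11}$ C/kg

• Result with statistical uncertainty only: $\dfrac{e}{m} = (1.946 \pm 0.019) \cdot 10^{11}$ C/kg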
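The three numbers on this slide can be reproduced from the 14 tabulated e/m values. This is a small sketch using only the Python standard library; the variable names are illustrative.

```python
import math
import statistics

# e/m values from the data table, in units of 10^11 C/kg
e_over_m_values = [2.08, 2.08, 2.00, 1.98, 1.97, 1.97, 1.94,
                   1.92, 1.90, 1.86, 1.91, 1.91, 1.88, 1.85]

n = len(e_over_m_values)
mean = statistics.mean(e_over_m_values)
sigma_x = statistics.stdev(e_over_m_values)  # sample std. dev. (N - 1 in the denominator)
sigma_mean = sigma_x / math.sqrt(n)          # uncertainty on the mean

print(f"mean       = {mean:.3f} e11 C/kg")
print(f"sigma_x    = {sigma_x:.3f} e11 C/kg")
print(f"sigma_mean = {sigma_mean:.3f} e11 C/kg")
```

Note that `statistics.stdev` uses the N − 1 convention, matching the formula on the slide, whereas `statistics.pstdev` would divide by N.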

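E/M: SYSTEMATIC UNCERTAINTY

$$\frac{e}{m} = 3.906 \, \frac{V a^2}{\mu_0^2 N^2 I^2 r^2}$$

Uncertainty in a power: for $q(x) = x^n$,

$$\frac{\delta q}{|q|} = |n| \, \frac{\delta x}{|x|}$$

Error propagation gives:

$$\frac{\delta(e/m)_{\rm sys}}{e/m} = \sqrt{ \left( \frac{\delta V}{V} \right)^2 + \left( 2 \frac{\delta a}{a} \right)^2 + \left( 2 \frac{\delta I}{I} \right)^2 + \left( 2 \frac{\delta r}{r} \right)^2 }$$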

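E/M: SYSTEMATIC UNCERTAINTY

$$\frac{\delta(e/m)_{\rm sys}}{e/m} = \sqrt{ \left( \frac{\delta V}{V} \right)^2 + \left( 2 \frac{\delta a}{a} \right)^2 + \left( 2 \frac{\delta I}{I} \right)^2 + \left( 2 \frac{\delta r}{r} \right)^2 }$$

• Acceleration voltage: $\dfrac{\delta V}{V} = \dfrac{0.1\,\text{V} + 0.001 \cdot 60\,\text{V}}{60\,\text{V}} = 0.003$

• Helmholtz coil radius: $\dfrac{\delta a}{a} = \dfrac{0.3\,\text{cm}}{33.2\,\text{cm}} = 0.009$

• Electron beam radius: $\dfrac{\delta r}{r} = \dfrac{0.02\,\text{cm}}{4.47\,\text{cm}} = 0.004$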

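E/M: SYSTEMATIC UNCERTAINTY

• Net current: $I = I_T - I_0$, so $\delta I = \sqrt{ (\delta I_T)^2 + (\delta I_0)^2 }$

• $\delta I_0$, from statistical uncertainty: $I_0 = 0.20 \pm 0.03$ A

• $\delta I_0$, from meter: (0.003 · 0.2 A + 0.01 A) = 0.011 A

• Combined: $\delta I_0 = \sqrt{ (0.03\,\text{A})^2 + (0.01\,\text{A})^2 } = 0.032$ A

• $\delta I_T$, from meter: (0.003 · 2.85 A + 0.01 A) = 0.019 A

• Relative uncertainty: $\dfrac{\delta I}{I} = \dfrac{ \sqrt{ (0.032\,\text{A})^2 + (0.019\,\text{A})^2 } }{ 2.85\,\text{A} } = 0.013$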

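E/M: SYSTEMATIC UNCERTAINTY

$$\frac{\delta(e/m)_{\rm sys}}{e/m} = \sqrt{ \left( \frac{\delta V}{V} \right)^2 + \left( 2 \frac{\delta a}{a} \right)^2 + \left( 2 \frac{\delta I}{I} \right)^2 + \left( 2 \frac{\delta r}{r} \right)^2 }$$

$$\frac{\delta(e/m)_{\rm sys}}{e/m} = \sqrt{ 0.003^2 + (2 \cdot 0.009)^2 + (2 \cdot 0.013)^2 + (2 \cdot 0.004)^2 } = 0.033 = 3.3\%$$

$$\delta(e/m)_{\rm sys} = 0.033 \cdot 1.946 \cdot 10^{11}\ \text{C/kg} = 0.064 \cdot 10^{11}\ \text{C/kg}$$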
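The propagation above is the power rule applied to each factor of e/m ∝ V¹ a² I⁻² r⁻². The following sketch redoes the arithmetic with the relative uncertainties quoted on the slides; the function name `relative_uncertainty` and the `(relative uncertainty, exponent)` pair layout are illustrative choices, not part of the lecture.

```python
import math


def relative_uncertainty(terms):
    """Relative uncertainty of a product of powers.

    terms: iterable of (relative uncertainty of x, exponent n of x).
    Each factor contributes |n| * dx/|x|; contributions add in quadrature.
    """
    return math.sqrt(sum((abs(n) * rel) ** 2 for rel, n in terms))


# (relative uncertainty, exponent) for V, a, I, r, taken from the slides
terms = [
    (0.003, 1),   # V enters linearly
    (0.009, 2),   # a^2
    (0.013, -2),  # I^-2
    (0.004, -2),  # r^-2
]

rel_sys = relative_uncertainty(terms)
abs_sys = rel_sys * 1.946  # in units of 10^11 C/kg

print(f"relative systematic uncertainty: {rel_sys:.3f}")
print(f"absolute systematic uncertainty: {abs_sys:.3f} e11 C/kg")
```

The current term dominates, so reducing the uncertainty on I would be the most effective way to shrink the systematic error.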

E/M: UNCERTAINTY

• Statistical: $\delta(e/m)_{\rm stat} = 0.019 \cdot 10^{11}$ C/kg

• Systematic: $\delta(e/m)_{\rm sys} = 0.064 \cdot 10^{11}$ C/kg

• (Almost) Final Result: $\dfrac{e}{m} = (1.946 \pm 0.019(\text{stat.}) \pm 0.064(\text{sys.})) \cdot 10^{11}$ C/kg

• Final Result (significant digits!): $\dfrac{e}{m} = (1.95 \pm 0.02(\text{stat.}) \pm 0.06(\text{sys.})) \cdot 10^{11}$ C/kg

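E/M: SUMMARY OF RESULTS

• Measured Value: $\dfrac{e}{m} = (1.95 \pm 0.02(\text{stat.}) \pm 0.06(\text{sys.})) \cdot 10^{11}$ C/kg

• Accepted Value: $\dfrac{e}{m} = (1.758820088 \pm 0.000000039) \cdot 10^{11}$ C/kg

• Discrepancy from Accepted Value: $\Delta \dfrac{e}{m} = (1.95 - 1.76) \cdot 10^{11}$ C/kg $= 0.19 \cdot 10^{11}$ C/kg

• Significance of Discrepancy, evaluated with the unrounded values (the rounded ones would give 3.0):

$$\frac{\Delta(e/m)}{\delta(e/m)_{\rm total}} = \frac{1.946 - 1.759}{\sqrt{0.019^2 + 0.064^2}} = 2.8$$

“The result is off by 2.8σ”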

HOW GOOD IS OUR RESULT?

What is the probability for a value to deviate more than 2.8σ from the mean for a Gaussian distribution?

• Probability that x is inside µ ± 2.8σ:

$$\int_{\mu - 2.8\sigma}^{\mu + 2.8\sigma} \frac{1}{\sigma \sqrt{2\pi}} \, e^{-\frac{(x - \mu)^2}{2 \sigma^2}} \, dx = 0.99489$$

• Probability that x is outside µ ± 2.8σ:

$$1 - 0.99489 = 0.00511 = 0.51\%$$

• Very unlikely result

[Figure: Gaussian curve with the interval from µ − 2.8σ to µ + 2.8σ marked]

This result requires further analysis of possible error sources
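Both the significance and the Gaussian tail probability can be evaluated with the error function, since the integral of a Gaussian over µ ± tσ equals erf(t/√2). The sketch below uses the unrounded values from the earlier slides; the variable names are illustrative.

```python
import math

# Values in units of 10^11 C/kg (unrounded results from the earlier slides)
measured = 1.946
accepted = 1.759
stat_unc = 0.019
sys_unc = 0.064

# Total uncertainty: statistical and systematic added in quadrature
total_unc = math.hypot(stat_unc, sys_unc)
n_sigma = (measured - accepted) / total_unc

# Probability for a Gaussian variable to fall inside / outside mu ± 2.8 sigma
t = 2.8
p_inside = math.erf(t / math.sqrt(2))
p_outside = 1.0 - p_inside

print(f"discrepancy: {n_sigma:.1f} sigma")
print(f"P(inside  ±{t} sigma) = {p_inside:.5f}")
print(f"P(outside ±{t} sigma) = {p_outside:.5f}")
```

`math.erfc(t / math.sqrt(2))` gives the outside probability directly and is numerically safer for large t.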

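PREVIOUS LECTURE: GAUSS DISTRIBUTION

$$p(x \mid \mu, \sigma) = \frac{1}{\sigma \sqrt{2\pi}} \, e^{-\frac{1}{2} \left( \frac{x - \mu}{\sigma} \right)^2}$$

[Figure: Gaussian curves for µ=2, σ=0.25; µ=3, σ=0.5; and µ=4, σ=1]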

WE CAN NOW ANSWER WHY ERRORS ADD IN QUADRATURE

Measure independent quantities A and B and calculate the sum

[Figure: the two Gaussian densities p(A|µA, σA) and p(B|µB, σB)]

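WHAT IS $p(A + B \mid \mu_{A+B}, \sigma_{A+B})$?

• Probability to measure A AND B simultaneously:

$$p(A \mid \mu_A, \sigma_A) \cdot p(B \mid \mu_B, \sigma_B) \propto e^{-\frac{1}{2} \left( \frac{A - \mu_A}{\sigma_A} \right)^2} \cdot e^{-\frac{1}{2} \left( \frac{B - \mu_B}{\sigma_B} \right)^2} = e^{-\frac{1}{2} \left[ \left( \frac{A - \mu_A}{\sigma_A} \right)^2 + \left( \frac{B - \mu_B}{\sigma_B} \right)^2 \right]}$$

• Rewrite the exponent with the identity

$$\frac{x^2}{o} + \frac{y^2}{p} = \frac{(x + y)^2}{o + p} + \frac{(p x - o y)^2}{o p (o + p)}$$

using $x = A - \mu_A$, $y = B - \mu_B$, $o = \sigma_A^2$, $p = \sigma_B^2$, and call the second term $Z^2$:

$$p(A \mid \mu_A, \sigma_A) \cdot p(B \mid \mu_B, \sigma_B) \propto e^{-\frac{1}{2} \frac{(A + B - \mu_A - \mu_B)^2}{\sigma_A^2 + \sigma_B^2}} \cdot e^{-\frac{1}{2} Z^2}$$

• We now have in fact the probability density for A+B and Z:

$$p\left( A + B, Z \mid \mu_A + \mu_B, (\sigma_A^2 + \sigma_B^2)^{1/2} \right) \propto e^{-\frac{1}{2} \frac{(A + B - \mu_A - \mu_B)^2}{\sigma_A^2 + \sigma_B^2}} \cdot e^{-\frac{1}{2} Z^2}$$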

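HOW ABOUT Z?

• We only care about A+B, so we integrate over all values of Z:

$$p(A + B) = \int_{-\infty}^{\infty} p(A + B, z) \, dz \propto e^{-\frac{1}{2} \frac{(A + B - \mu_A - \mu_B)^2}{\sigma_A^2 + \sigma_B^2}} \int_{-\infty}^{\infty} e^{-\frac{1}{2} z^2} \, dz = \sqrt{2\pi} \, e^{-\frac{1}{2} \frac{(A + B - \mu_A - \mu_B)^2}{\sigma_A^2 + \sigma_B^2}}$$

• The probability density for A+B is also a Gaussian, with the standard deviation

$$\sigma_{A+B} = \sqrt{\sigma_A^2 + \sigma_B^2}$$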

WE CAN NOW ANSWER WHY ERRORS ADD IN QUADRATURE

$$\sigma_{A+B} = \sqrt{\sigma_A^2 + \sigma_B^2}$$

[Figure: the densities p(A|µA, σA) and p(B|µB, σB) together with the wider density of the sum, p(A+B|µA+µB, (σA²+σB²)^(1/2))]
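The result can also be checked numerically by drawing many Gaussian values of A and B and looking at the spread of their sum. This is a Monte Carlo sketch, not part of the derivation; the means, widths, sample size and seed below are arbitrary illustrative choices.

```python
import math
import random
import statistics

rng = random.Random(0)  # fixed seed so the run is reproducible

# ASSUMPTION: illustrative parameters, not taken from the slides
mu_a, sigma_a = 2.0, 0.5
mu_b, sigma_b = 4.0, 1.0
n_samples = 100_000

# Draw independent A and B and form the sum each time
sums = [rng.gauss(mu_a, sigma_a) + rng.gauss(mu_b, sigma_b)
        for _ in range(n_samples)]

observed_mean = statistics.mean(sums)
observed_sigma = statistics.stdev(sums)
expected_sigma = math.hypot(sigma_a, sigma_b)  # sqrt(sigma_a^2 + sigma_b^2)

print(f"mean of A+B:  observed {observed_mean:.3f}, expected {mu_a + mu_b:.3f}")
print(f"sigma of A+B: observed {observed_sigma:.3f}, expected {expected_sigma:.3f}")
```

The observed width should sit close to the quadrature sum and clearly below the linear sum σA + σB, which is what one would get if the two errors were fully correlated.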

WE CAN ALSO JUSTIFY THE MEAN BEING THE BEST ESTIMATE

• We obtain a finite data set x1, x2, ..., xN and want to find the true value X

[Figure: histogram of measured values with an unknown limiting distribution p(x) overlaid; horizontal axis: Electrostatic Grain Potential, −6 V to −1 V]

If we knew the limiting distribution p(x), we would also know X, but we don’t!

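DO WE REALLY NEED THE LIMITING DISTRIBUTION?

• Let’s assume that the deviation of an individual measurement $x_i$ from X follows a Gaussian distribution:

$$p(x_i) = \frac{1}{\sigma \sqrt{2\pi}} \, e^{-\frac{1}{2} \left( \frac{x_i - X}{\sigma} \right)^2}$$

• The probability to obtain the data set x1, ..., xN is then

$$p(x_1, x_2, \ldots, x_N) = p(x_1) \cdot p(x_2) \cdot \ldots \cdot p(x_N) \propto \frac{1}{\sigma^N} \, e^{-\frac{1}{2} \left( \frac{x_1 - X}{\sigma} \right)^2} \cdot \ldots \cdot e^{-\frac{1}{2} \left( \frac{x_N - X}{\sigma} \right)^2} = \frac{1}{\sigma^N} \, e^{-\frac{1}{2} \sum_{i=1}^{N} \frac{(x_i - X)^2}{\sigma^2}}$$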

MAXIMUM LIKELIHOOD PRINCIPLE

$$p(x_1, x_2, \ldots, x_N) = p(x_1) \cdot p(x_2) \cdot \ldots \cdot p(x_N) \propto \frac{1}{\sigma^N} \, e^{-\frac{1}{2} \sum_{i=1}^{N} \frac{(x_i - X)^2}{\sigma^2}}$$

• Which is the most likely value of X for our data set x1, x2, ..., xN?

• The X for which p(x1, x2, ..., xN) is maximum

• p(x1, x2, ..., xN) is maximum if the exponent is minimum

• Need to find the minimum of “chi square”:

$$\chi^2 = \sum_{i=1}^{N} \frac{(x_i - X)^2}{\sigma^2}$$

• Set the derivative to zero:

$$\frac{d\chi^2}{dX} = -\frac{2}{\sigma^2} \sum_{i=1}^{N} (x_i - X) = 0 \quad \text{or} \quad X = \frac{1}{N} \sum_{i=1}^{N} x_i = \bar{x}$$

• The mean is the best estimate for X
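The claim that chi square is minimized at the mean can be checked by brute force: scan candidate values of X and see where χ² is smallest. This sketch reuses the 14 e/m values from the example; the grid range and step are arbitrary choices, and a grid scan stands in for the analytic derivative purely for illustration.

```python
import statistics


def chi_square(data, x_true, sigma):
    """Sum of squared deviations from x_true, in units of sigma^2."""
    return sum(((x - x_true) / sigma) ** 2 for x in data)


# e/m values from the data table, in units of 10^11 C/kg
data = [2.08, 2.08, 2.00, 1.98, 1.97, 1.97, 1.94,
        1.92, 1.90, 1.86, 1.91, 1.91, 1.88, 1.85]
sigma = 0.072  # standard deviation from the statistical-uncertainty slide

# ASSUMPTION: scan X from 1.80 to 2.10 in steps of 0.0001
candidates = [1.80 + 0.0001 * k for k in range(3001)]
best_x = min(candidates, key=lambda x: chi_square(data, x, sigma))

mean = statistics.mean(data)
print(f"chi-square minimum at X = {best_x:.4f}")
print(f"sample mean             = {mean:.4f}")
```

The location of the minimum does not depend on σ as long as every point has the same σ; with different uncertainties per point the same argument leads to a weighted average instead.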

REJECTING DATA

• DON’T!!!!!!!

• Best way is to take more data!

REJECTING DATA

• We often find suspicious data points

• Different way the data was collected?

• Error during data recording?

• Is it ever legitimate to discard them?

[Figure: scatter plot of data points with one suspicious outlier]

REJECTING DATA

• Be very careful - you are treading in the footsteps of a long line of practitioners of pathological science!

• There should be an external reason for rejecting data!

• But even this may not be enough:

• The data may just be in conflict with our expectation

• By rejecting data we may bias the data set and produce bogus results

[Figure: the same scatter plot with the suspicious outlier]

REJECTING DATA

• There are no general recipes for rejecting data!

• All procedures for removing suspicious data are controversial!

• Will describe one which is popular in textbooks (but not in real life): Chauvenet’s criterion

[Figure: the same scatter plot with the suspicious outlier]
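For orientation, here is a hedged sketch of Chauvenet’s criterion in its common textbook form: a point is flagged if the expected number of measurements at least that far from the mean, N times the two-sided Gaussian tail probability, is below 0.5. The function name and the sample data are made up for illustration, and, in line with the warnings above, the sketch only flags candidates and does not remove anything.

```python
import math
import statistics


def chauvenet_suspects(data):
    """Return the points that Chauvenet's criterion would flag.

    A point at t standard deviations from the mean is flagged when
    N * P(|deviation| >= t sigma) < 0.5 for a Gaussian distribution.
    """
    n = len(data)
    mean = statistics.mean(data)
    sigma = statistics.stdev(data)
    suspects = []
    for x in data:
        t = abs(x - mean) / sigma
        p_outside = math.erfc(t / math.sqrt(2))  # two-sided Gaussian tail
        if n * p_outside < 0.5:
            suspects.append(x)
    return suspects


# ASSUMPTION: hypothetical measurements, not data from the lecture
sample = [3.8, 3.5, 3.9, 3.9, 3.4, 1.8]
print("flagged:", chauvenet_suspects(sample))
```

Note that the mean and standard deviation are computed with the suspect point included, which is one of the reasons the procedure is controversial: a single outlier inflates σ and can hide itself.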

A CAUTIONARY TALE: HOW TO LOOK FOR A PARTICLE

1. Look in high-energy collisions for events with multiple output particles that could be decay products (displaced from the primary interaction, if the particle is long-lived as with the K0).

2. Reconstruct a relativistic invariant mass from the momenta of the decay products.

Those of you doing the K meson experiment have already seen this

A CAUTIONARY TALE: HOW TO LOOK FOR A PARTICLE

3. Make a histogram of the masses from candidate events

4. Look for a peak, indicating a state of well-defined mass
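Step 2 of the recipe above can be sketched as follows: sum the energies and momenta of the decay products and form m² = (ΣE)² − |Σp|² in natural units (c = 1). The function name, the data layout and the approximate pion and kaon masses are assumptions added for illustration; they are not given in the slides.

```python
import math


def invariant_mass(particles):
    """Invariant mass of a set of decay products, in natural units (c = 1).

    particles: list of (mass, (px, py, pz)) tuples, all in GeV.
    """
    total_energy = sum(math.sqrt(m * m + px * px + py * py + pz * pz)
                       for m, (px, py, pz) in particles)
    total_momentum = [sum(p[i] for _, p in particles) for i in range(3)]
    mass_squared = total_energy ** 2 - sum(c * c for c in total_momentum)
    return math.sqrt(max(mass_squared, 0.0))  # guard against rounding below zero


# ASSUMPTION: approximate masses in GeV, used only for this toy example
M_PION = 0.13957
M_K0 = 0.4976

# Toy event: a K0 at rest decaying into two back-to-back charged pions
p = math.sqrt((M_K0 / 2) ** 2 - M_PION ** 2)
pions = [(M_PION, (0.0, 0.0, p)), (M_PION, (0.0, 0.0, -p))]

print(f"reconstructed mass: {invariant_mass(pions):.4f} GeV")
```

In a real analysis each candidate event yields one such mass, and the histogram of those values is what steps 3 and 4 examine for a peak.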

A CAUTIONARY TALE: ONE PEAK OR TWO?

• CERN experiment in late 1960s observed A2 mesons

• Particle appeared to be a doublet

• Statistical significance of split is very high

• There is really only one particle!!

[Figure: number of events vs. missing mass (GeV), from about 1.22 to 1.35 GeV. Caption: Fits to the two-peak structure of data from the CERN missing-mass and boson spectrometer group for the A2, 1965-68. The black curve is the fit for two coherent ...]

[Background article excerpt, truncated at both ends:]

... MeV using their background and resonance assumptions, one obtains an acceptable confidence level for the dipole. One also obtains an acceptable dipole fit over the whole mass spectrum if one assumes a second-order background. Furthermore, one has to note that the extremely crucial background behavior at both ends of the spectrum is based on 2-6 events per 10-MeV bin. The same procedures increase the confidence level for a dipole in the ρ⁰π⁺ events by a considerable amount. Aside from statistics and background considerations, one must bear in mind the very general fact that it is much easier not to see a splitting than to see it, because of a variety of resolution-killing effects that are normally hard to track down, both in counter and bubble-chamber experiments.

Exciting new results on the neutral A2 were reported, at the Kiev International High Energy Conference in September, by T. Massam of the group at CERN headed by A. Zichichi. In the first reported observation of the splitting in A2⁰, the CERN counter group measured the recoil neutron in the charge-exchange reaction

π⁻ + p → n + A2⁰

at a beam momentum of 3.2 GeV/c. They saw a marked dip at the center of the A2⁰. Confidence levels for a single peak, incoherent double peak and dipole were 1%, 23% and 67% respectively.

Dependence of splitting

To arrive at some conclusions concerning the A2 splitting we will look for variables the effect may depend on. The dependence or independence might give a clue to the nature of the A2. We will discuss the possible dependence of the A2 splitting on four quantities: bombarding energy, final state, production reaction and momentum transfer.

The effect of symmetric splitting has ...

A CAUTIONARY TALE: HOW DID THIS HAPPEN?

• In an early run, a dip showed up. It was a statistical fluctuation, but people noticed it and suspected it might be real.

• Subsequent runs were looked at as they came in. If no dip showed up, the run was investigated for problems. There’s usually a minor problem somewhere in a complicated experiment, so most of these runs were cut from the sample.

A CAUTIONARY TALE: HOW DID THIS HAPPEN?

• When a dip appeared, they didn’t look as carefully for a problem.

• So an insignificant fluctuation was boosted into a completely wrong “discovery.”

• Lesson: Don’t let the result influence which data sets you use/want.