Factors in the selection of a risk assessment method

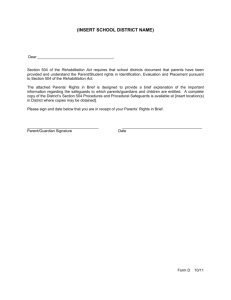

Sharman Lichtenstein, Senior Lecturer, Department of Information Systems, Monash University, Melbourne, Australia

A risk assessment method is used to carry out a risk assessment for an organization’s information security. Currently, there are many risk assessment methods from which to choose, each exhibiting a variety of problems. For example, methods may take a long time to perform, may rely on subjective estimates for the security input data, may rely heavily on quantification of financial loss due to vulnerability, or may be costly to purchase and use. This paper discusses requirements for an ideal risk assessment method, and develops and evaluates factors to be considered in the selection of a method. Empirical research was carried out at two large Australian organizations in order to determine and validate the factors. These factors should be of use to organizations in the evaluation, selection or development of a risk assessment method. Interesting conclusions are drawn about decision making in organizational information security.

The author wishes to acknowledge Mr David Dick for his research assistance.

Information Management & Computer Security 4/4 [1996] 20–25 © MCB University Press [ISSN 0968-5227]

Introduction

Risk assessment is a fundamental decision-making process in the development of information security, resulting in the selection of appropriate safeguards for an information system. It is a two-stage process. In the first stage, a risk analysis process defines the scope of the risk assessment, identifies information resources (assets), and determines and prioritizes risks to the assets. In the second stage, a risk management process makes decisions to control unacceptable risks; a decision may be to transfer a risk (for example via insurance), to ignore a risk, or to reduce a risk via selection of appropriate safeguards.
Risk assessments also fulfil other roles, including the engagement of management in information security decision making, and enabling definition and refinement of security policy. Formal and comprehensive risk assessment methods are essential for the direction and control of data collection and data interpretation, for the provision of defined deliverables, and generally to underpin the risk assessment process with a phased framework[1]. Each method is based on an underlying risk model, which typically varies from one method to another. A risk model consists of a set of key concepts and terms[2], and references assets, threats, risks, impacts, safeguards, vulnerabilities and the relationships between them.

Risk assessment methods are usually classified as quantitative or qualitative. Qualitative methods produce descriptive estimates for risks – for example, “very high” risk – whereas quantitative methods produce numeric exposure estimates for risks, often measured in dollar terms – for example, by annual loss exposure. Many methods employ some degree of automation.

Risk assessment methods have evolved over three generations[3]. First generation methods are based on checklists of safeguards which are checked for presence, and then recommended if absent. Second generation methods determine information security requirements as a fundamental process within the method. Third generation methods identify logical information security requirements and physical information security requirements.

Formal risk assessment methods differ in a variety of ways, for example, in their underlying risk models. Each method has its own peculiar set of problems, for example, a dependence on subjective estimates of information security input data. Problems with current risk assessment methods are described by many authors[1,4-9]. Organizations must frequently select or develop a risk assessment method, necessitating a comparison of many diverse and imperfect methods.
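The contrast drawn above between quantitative and qualitative output can be made concrete with a small sketch. It assumes the common formulation in which annual loss exposure is the estimated frequency of an event per year multiplied by the estimated loss per occurrence; the `Risk` class, the sample risks and the dollar thresholds are all hypothetical. Qualitative methods generally assign descriptive labels directly, so the mapping from dollars to labels here serves only to show the two kinds of result side by side.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One threat/asset pairing with analyst-supplied estimates (hypothetical fields)."""
    name: str
    annual_frequency: float  # estimated occurrences per year
    impact_dollars: float    # estimated loss per occurrence


def annual_loss_exposure(risk: Risk) -> float:
    """Quantitative estimate: expected loss per year, in dollars."""
    return risk.annual_frequency * risk.impact_dollars


def qualitative_label(ale: float) -> str:
    """Descriptive estimate; the thresholds are illustrative assumptions only."""
    if ale >= 100_000:
        return "very high"
    if ale >= 10_000:
        return "high"
    if ale >= 1_000:
        return "medium"
    return "low"


# Hypothetical estimates, invented purely for illustration.
risks = [
    Risk("virus outbreak", annual_frequency=4, impact_dollars=1_500),
    Risk("server room fire", annual_frequency=0.01, impact_dollars=2_000_000),
]

# Prioritize risks by exposure, largest first.
ranked = sorted(risks, key=annual_loss_exposure, reverse=True)
for risk in ranked:
    ale = annual_loss_exposure(risk)
    print(f"{risk.name}: ${ale:,.0f} per year ({qualitative_label(ale)})")
```

Both input figures are estimates, so the resulting ranking is only as reliable as the analyst's judgement of frequency and impact.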
The selection or development of a method should be based both on an organization’s specific requirements and on a set of ideal requirements for a risk assessment method[10]. Organizations consider and evaluate a set of factors for each method being considered, based on such requirements, in order to choose a method.

This paper aims to specify a set of factors to be considered in the selection of a risk assessment method. The paper begins by describing a set of ideal requirements for a risk assessment method. Factors to be considered in the selection of a risk assessment method are then proposed and discussed. The paper presents and discusses empirical results obtained from testing the factors in two large Australian organizations. A conclusion evaluates the research results, and gives directions for future research.

Requirements for a risk assessment method

An ideal risk assessment method which would suit all organizations does not exist, as each organization possesses its own unique characteristics. For example, some organizations would be more interested in methods which concentrate on deliberate attacks, whereas other organizations require methods which focus on natural disaster threats, and associated disaster recovery procedures. However, the requirements for an ideal risk assessment method have been studied by many[1,3,5,8-12], and are discussed below.

A method should be complete in its coverage of all information security components in a system (for example, by including databases of all possible threats and safeguards to consider). Many methods do not include all components; for example, some methods perform a risk analysis, but do not perform risk management. The property of completeness includes information technology completeness, information security completeness and risk approach completeness[13].
Information technology completeness is a measurement of the range of safeguards which a method has to recommend; the range should include coverage of personnel, documentation, software, data, networks, hardware and physical environment, and should extend to cope with new and emerging technologies, for example digital signatures for EDI technology. Most methods omit modelling of the non-technical security environment; ideally, methods should consider non-technical as well as technical security. Information security completeness refers to coverage of all types of risks which have an impact (disclosure, modification, unavailability). Risk approach completeness refers to inclusion of risk identification, risk analysis, risk management and risk monitoring procedures.

A method should be capable of operating at any desired level of granularity. Most methods do not address exposed assets at low enough levels of granularity. For example, if the security of individual fields of data needs to be assessed, the method should provide this capability. The method should support strategic, high-level reviews as well as detailed reviews.

A method should be adaptable. The method should be capable of translating the requirements of an organization’s current security policy into appropriate safeguards. The method should also be flexible enough to be applied to both existing and proposed information systems, and to all types of system configurations (for example, centralized and distributed systems). A method should be able to be customized for a given situation. A method should also provide a variety of qualitative and quantitative techniques; many methods only provide a limited set of techniques.

A method should produce reliable and accurate results, irrespective of the certainty, subjectivity or completeness of the security data available. Most methods are based on incomplete, unreliable, subjective estimates.
There are usually many variables which can influence overall organizational risk. The security analyst often attempts to condense all these variables into estimates of frequency of occurrence and impact, using subjective opinion. Further, most organizations do not release details of past breaches, in order to protect customer confidence, and historical data used for estimation purposes is thus often incomplete and inaccurate. Finally, estimates may also be massaged by security analysts to produce desired results.

Many methods also rely too heavily on quantification of financial loss. Some risks cannot be financially quantified (for example, the loss of confidentiality of customer data). Excessive time may be required for data collection for the quantification of financial loss. Also, attackers exploit the weakest link in a chain, and risk management based on financial loss does not result in equal strength safeguards; attackers will attack the weakest safeguards.

A method should be easy to use. Ideally, a method would not require security experts for its application. A security analyst should not need special software, or complicated training in the use of the method. Many methods in fact require specially trained or skilled staff to execute them. Information security expertise could, however, be automated in the form of a knowledge base, and then accessed by a method. Any automated features of the method should also be easy to use.

A method should be inexpensive to purchase and use. Many methods are considered too costly to justify the benefits obtained by using them. This is usually because formal risk analyses are based on complex techniques which require time-consuming training in the use of the method, and in data collection, estimation and analysis techniques.

A method should be fast to use. The time taken to conduct a risk assessment requires resources which cost money. Furthermore, the risk assessment results may be required quickly.
A method should employ a standard risk model. The underlying risk model is usually inadequate; for example, the dynamic nature of risk is not incorporated in current risk models. If the findings of a risk assessment turn out to be invalid, the situation is often blamed on a poor statistical risk model, rather than on poor estimates or inadequate analyst skills. A well-known, conventional risk model is FIPS 79[14]. A more recent, minimal, generic model is that of Mayerfeld[15]. However, a standardized risk model has not yet been achieved.

A method should be able to facilitate analysis of different safeguard possibilities; for example, by allowing exploration of different configurations of safeguards. The method should thus be iterative in this respect.

A method should integrate with existing systems development methods and CASE tools. Current systems development tools do not document security-related information in a form suitable for use by most risk assessment methods.

A method should be able to give clear, precise and well-justified recommendations to management. Managers must be able to understand all of the arguments which led to the selection of particular safeguards. Flexible reporting facilities also assist in presenting results in a useful form.

Finally, a method should have an acceptable and appropriate level of automation.

Factors in the selection of a risk assessment method

The idealized requirements above, together with work carried out by several authors[1,5,7,9,12,13,16-18], suggest that the following factors should be considered in the selection of a risk assessment method:
• Cost. The cost of employing, as well as the cost of purchasing, a method must be considered.
Time spent in gathering the security data, and time spent in making complex estimates (for example, financial estimates of security data which are difficult to quantify financially), contribute to the cost of using a method. The provision of qualitative as well as quantitative techniques provides the flexibility to lower these costs.
• External influences. The selected method may require the approval of external parties, such as the government or other authorities.
• Agreement. Management and the security analysts should concur as to the selected method.
• Organizational structure. Modern, adaptive organizations may require fast, inexpensive methods[5], whereas more traditional organizations may prefer a well-documented, comprehensive method with more time-consuming, yet more rigorous techniques.
• Adaptability. A method must be able to adapt to an organization’s needs. The method must be able to implement existing security policies. Portability and modifiability of the method are required[9]. The method should also be able to be applied to a variety of types of systems. The method should allow the trying out of different combinations of safeguards and should enable gathered and analysed security data to be stored and reused in future risk assessments.
• Complexity. The complexity of a method should be limited such that the method is within the grasp of both managers and security analysts. Simple and precise recommendations should be given to managers at the end of the risk assessment.
• Completeness. A risk assessment method must consider all aspects of a system’s information security (information technology completeness, information security completeness and risk approach completeness[13]). Eloff et al.[13] evaluate completeness using Boolean metrics to determine the presence of the required method components. A method should also be able to operate at the desired degree of granularity[9].
• Level of risk.
The level of risk of the organization and its systems can influence the choice of method. A high risk organization typically needs a more rigorous method than a low risk organization. Qualitative methods enable major problem areas to be identified quickly and cheaply, making them well suited to low/medium risk systems[18].
• Organizational size. The size of an organization needs to be considered, as the larger an organization, the greater the resources it has to spend on purchase or use of a method. In addition, a large organization may require a method to be more adaptable, for use with a variety of information systems.
• Organizational security philosophy. Different organizations may place an emphasis on particular aspects of information security, reflecting a particular philosophy. For example, military organizations rank confidentiality above integrity.
• Consistency. This factor includes the reliability of the results, regardless of the method of estimation of the security input data required by the method. The method should produce the same results, irrespective of the analysts who apply it! The techniques for gathering security data play a significant role in the consistency of the method.
• Usability. The method should be understandable, easy to use, simple and capable of handling errors[9]. It should be usable by the available security analysts (who may be untrained in the particular method) and must be understandable not only by the analysts, but also by managers.
• Feasibility. The method should be feasible in terms of its availability, practicality and scope[9]. Note that the compatibility of the risk assessment method with existing systems development methods and CASE tools affects the practicality of the method. The availability and practicality of a method are related to its cost, in terms of time and effort spent, and its purchase cost; the overall cost must obviously be acceptable and justifiable for a method to be considered feasible.
• Validity. The method should produce valid results, with recommendations for safeguards which, in practical terms, are implementable. Validity is composed of relevance, scope and practicality[9].
• Credibility. The results of the method must be acceptable to security analysts and to management. They must believe the method to be intuitively credible and reliable[9]. The credibility of a method is enhanced if the security analysts are confident that the method is complete, if the results of the method can be proven to be correct, and if the method does not rely on simplistic, subjective estimates and calculations of security data. The credibility of the underlying risk model contributes strongly to the overall credibility of the method.
• Automation. Automation support within a method should be considered, and should be at a level acceptable to management and security analysts. Automated methods are faster; however, the associated loss of human intuition and creativity may lead to less economical and less efficient safeguards being selected. Nonetheless, Katzke[10] argues that a method should be fully automated, and Anderson[1] favours a significant level of automation.

Table I Importance of factors in the selection of a risk assessment method at organizations X and Y
Factor: Cost; External influences; Agreement; Organizational structure; Adaptability; Complexity; Completeness; Level of risk; Organizational size; Organizational security philosophy; Consistency; Usability; Feasibility; Validity; Credibility; Automation; Action

Case study results and discussion

The two organizations (organization X and organization Y) which participated in the case studies are large Australian companies with substantial information technology divisions.
Two interviews took place with the information technology security manager at each organization, in which the interviewees were asked to consider the factors in the above list, and any additional factors which they considered important when selecting their risk assessment methods. Each organization rated the factors on a Likert scale of one to ten, in order to determine the relative importance of each factor in the selection process. The results are presented in Table I. Note that an extra factor, “action”, was introduced by organization X, although not considered of great importance by organization Y.

[Table I rating columns, Organization X (rating) and Organization Y (rating), row alignment not recoverable: 10 5 3 1 5 7 5 4 6 10 8 8 8 8 2 1 6 5 4 5 10 5 9 10 6 10 5 3 10 2 7 10 7 3]

A discussion of the comments made by each organization follows:
• Cost. Organization X regarded this factor as absolutely critical. The cost of applying the method (i.e. the cost of employing the security analysts) was regarded as more important than the cost of purchasing the method. Consequently, the organization was reluctant to purchase methods which required the hiring of special security experts to employ the methods. Organization Y regarded a method which took a short time to apply as important, as this lowered the cost of use.
• External influences. Organization X considered this factor only rarely to be important. Clients of the organization occasionally needed reassurance regarding the risk assessment method chosen. Organization Y could not see any need to consider external influences.
• Agreement. Organization X did not require agreement, as management, believing the decision to be of little strategic importance, was rarely consulted. Organization Y did not require agreement between management and security experts in selecting a method.
• Organizational structure.
Organization X was in a transition phase from a traditional structure and culture to an adaptive one, and hence preferred faster, inexpensive risk assessment methods, although it did not rate this factor very highly. Organization Y regards itself as an adaptive organization, and currently employs an inexpensive, usable method, which would be expected in an adaptive organization.
• Adaptability. Organization X preferred a method which was flexible enough to adapt to a variety of organizational requirements. Organization Y was not sure whether its method was highly adaptable, but nevertheless believed that this was a critical factor.
• Complexity. Organizations X and Y did not desire complex recommendations or arguments.
• Completeness. Organization X believed that a method needed thorough lists of security components and complete coverage of steps in the risk assessment process. Organization Y strongly believed in the availability of thorough lists of security components in a method, and in the presence of all risk assessment steps.
• Level of risk. Neither organization X nor organization Y considered this to be an important factor.
• Organizational size. Organization X had the financial resources to be able to employ a number of different methods on different projects. Organization Y had selected a method that was inexpensive and usable, but did not think organizational size would affect any selection.
• Organizational security philosophy. Organization X required classification of data by security levels within its policy, and therefore had this constraint on any selection of a method. Organization Y believed that any method selected should comply with its security policy (which in turn reflected its security philosophy).
• Consistency.
Organizations X and Y relied on the expertise of their own security analysts to obtain valid results, rather than on the consistency of the method if used by a number of experts.
• Usability. Organizations X and Y believed this to be a critical factor. They did not wish to employ security experts specially skilled in a particular method, and did not wish to incur the overheads of training their own analysts in the use of a method.
• Feasibility. Organization X typically applied risk assessment to new systems, rather than existing systems, and thus spent little time gathering data. Therefore, the feasibility of data collection and analysis was not considered a significant factor. Organization Y believed that any method needed to be able to gather and input data quickly.
• Validity. Organizations X and Y both regarded the validity of the results of the method as critical.
• Credibility. Organization X believed this factor to be critical, as money spent on the recommended safeguards needed to be justifiable. Organization Y believed this factor to be important because managers needed to understand and feel comfortable with the results of the risk assessment. This tied in with the need for low complexity; if managers do not understand why a particular safeguard has been recommended, they will not implement it.
• Automation. Organization X employed mostly manual risk assessment methods, but was in favour of automating input of security data, as well as the production of reports. Organization Y’s risk assessment method used minimal automation.
• Action. Organization X proposed this factor, and regarded it as crucial. Action is the likelihood that management will implement the recommendations of the risk assessment method. The effort involved in the risk assessment has been wasted if the recommendations are not implemented!
Organization Y relied on the persuasive powers of its security analysts to get recommendations implemented, rather than on the recommendations themselves!

Organization X made the following additional comment: formal measurement of the factors did not take place. Instead, the several factors considered critical were evaluated informally, for each method considered. The primary objective in selecting a method was to select the cheapest method that gets results.

Organization Y made the following additional comment: as in organization X, the factors considered were informally measured, and only cost was quantitatively measured. It should be noted that it had evaluated a number of available risk assessment methods before deciding to instruct its own security experts to develop an in-house method. Nonetheless, this method was developed with the factors which it considered the most important (as shown by Table I) in mind.

Conclusion

Seventeen factors in the selection of a risk assessment method for an organization were identified from the literature, as well as from the empirical research. The two case studies highlighted the significance of seven factors: usability; credibility; complexity; completeness; adaptability; validity; and cost. These factors may be interrelated. For example, the usability of a method helps to reduce its cost. Both organizations agreed that the other factors in Table I were not as important as the seven factors listed above. It appears that the extra investment in evaluating the other factors was expected to result in the same recommendation as would be made after a consideration of the major seven factors alone. Therefore, the extra investment was not made. Several of the proposed factors appeared of limited usefulness, according to the empirical results: external influences, level of risk, and organizational structure.
Another observation was that the factors considered were all measured qualitatively, rather than quantitatively, with the exception of cost.

The research results suggest that the two major groups of stakeholders using the risk assessment method, i.e. the security analysts and the managers, have different agendas. The agenda of the security analyst is represented by all of the factors except cost. The analysts would like the method to be simple (minimal complexity) and easy to use (maximum usability), without the need to acquire additional skills through special training. They would like the method to be very comprehensive in its coverage of security components such as threats and safeguards, and in the level of granularity handled by the method. They would like the method to be adaptable in a variety of situations. Finally, they would like the method to be credible, in order to ensure the actual and the perceived validity of the results which they produce via the method.

The driving factors for the managers, on the other hand, are the cost, credibility and validity of the method. They would like the method to be inexpensive and not require training of security analysts (maximum usability, minimal complexity). They desire the method to be highly credible (requires completeness), for its results to be accepted as valid. Managers sometimes depend on developing an understanding with their analysts regarding the expected outcome of a risk assessment. If necessary, a method must be able to be adjusted (requires adaptability) to produce the required results!
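Neither organization measured the factors formally. As a minimal sketch of what a quantitative comparison might look like, the importance ratings for a few factors could serve as weights in a weighted average over each candidate method. Everything in the example is a hypothetical assumption: the weights are not taken from Table I, the candidate methods and their ratings are invented, and a weighted average is only one of many possible scoring rules.

```python
# Hypothetical importance weights on a one-to-ten scale (not the Table I values).
weights = {"cost": 10, "usability": 9, "credibility": 8}

# Hypothetical ratings (one to ten, higher is better) of how well each
# candidate method satisfies each factor.
candidates = {
    "method A": {"cost": 4, "usability": 8, "credibility": 9},
    "method B": {"cost": 9, "usability": 7, "credibility": 5},
}


def weighted_score(ratings: dict, weights: dict) -> float:
    """Weighted average of the factor ratings, using importance as the weight."""
    total_weight = sum(weights.values())
    return sum(weights[factor] * ratings[factor] for factor in weights) / total_weight


scores = {name: weighted_score(ratings, weights) for name, ratings in candidates.items()}
best = max(scores, key=scores.get)

# List candidates from highest to lowest score.
for name, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {score:.2f}")
print(f"preferred: {best}")
```

A score of this kind inherits the subjectivity of the ratings that feed it, so it supports rather than replaces the judgement of analysts and managers.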
Two themes highlighted by the above discussion are those of accountability and power: “Who will be blamed if the risk assessment results are ‘incorrect’?”, and “How can each party get what they want from the risk assessment method?” A research question emanating from these conclusions, for future risk assessment method design, is: “How can we design a risk assessment method which argues its recommendations in a manner which is credible and palatable to management?” Further research needs to be carried out to corroborate, and add to, the findings in this study, and also to develop a rigorous method which utilizes the proposed factors in the selection or development of a risk assessment method.

References

1 Anderson, A.M., “Comparing risk analysis methodologies”, Proceedings of the IFIP TC11 Seventh International Conference on Information Security, North Holland, New York, NY, Amsterdam, 1991, pp. 301-11.
2 Bodeau, D.J., “A conceptual model for computer security risk analysis”, Proceedings of Eighth Annual Computer Security Applications Conference, IEEE Computer Society Press, Los Alamitos, CA, 1992, pp. 56-63.
3 Baskerville, R., “Information systems security design methods: implications for information systems development”, ACM Computing Surveys, Vol. 25 No. 4, 1993, pp. 373-414.
4 Anderson, A.M., “The risk data repository: a novel approach to security risk modelling”, Proceedings of the IFIP TC11 Seventh International Conference on Information Security, North Holland, New York, NY, Amsterdam, 1993, pp. 185-94.
5 Baskerville, R., “Risk analysis as a source of professional knowledge”, Computers & Security, Vol. 10 No. 8, 1991.
6 Birch, D.G.W., “An information driven approach to network security”, Second International Conference on Private Switching Systems and Networks, Institution of Electrical Engineers, London, 1992.
7 Caelli, W., Longley, D. and Shain, M., Information Security Handbook, Macmillan, Basingstoke, 1991.
8 Clark, R., “Risk management – a new approach”, Proceedings of the Fourth IFIP TC11 International Conference on Computer Security, North Holland, New York, NY, Amsterdam, 1989.
9 Garrabrants, W.M., Ellis, A.W. III, Hoffman, L.J. and Kamel, M., “CERTS: a comparative evaluation method for risk management methodologies and tools”, Sixth Annual Computer Security Conference, IEEE Computer Society Press, Los Alamitos, CA, 1990.
10 Katzke, S.W., “A government perspective on risk management of automated information systems”, Proceedings of the 1988 Computer Security Risk Management Model Builders Workshop, 1987, pp. 3-20.
11 Birch, D.G.W. and McEvoy, N.A., “Risk analysis for information systems”, Journal of Information Technology, Vol. 7 No. 1, 1992.
12 Moses, R., “A European standard for risk analysis”, Proceedings of COMPSEC International, Elsevier, Oxford, 1993.
13 Eloff, J.H.P., Labuschagne, L. and Badenhorst, K.P., “A comparative framework for risk analysis methods”, Computers & Security, Vol. 12 No. 6, 1993.
14 FIPS 79, Guideline for Automatic Data Processing Risk Analysis, FIPS PUB 65, National Bureau of Standards, US Department of Commerce, 1979.
15 Mayerfeld, H.T., “Framework for risk management: a synthesis of the working group reports”, First Computer Security Risk Management Model Builders Workshop, NIST, Gaithersburg, MD, 1989.
16 Saltmarsh, T.J. and Browne, P.S., “Data processing – risk assessment”, Advances in Computer Security Management, Vol. 2, 1983.
17 Wong, K. and Watt, W., Managing Information Security: A Non-technical Management Guide, Elsevier Advanced Technology, Amsterdam, 1990.
18 Bennett, S.P. and Kailay, M.P., “An application of qualitative risk analysis to computer security for the commercial sector”, Proceedings of the Eighth Annual Computer Security Applications Conference, IEEE Computer Society Press, Los Alamitos, CA, 1992, pp. 64-73.